
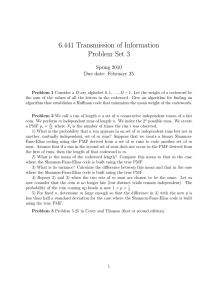


LECTURE 5

• Readings: Sections 2.1-2.3, start 2.4

Lecture outline

• Random variables

• Probability mass function (PMF)

• Expectation

• Variance

Random variables

• An assignment of a value (number) to every possible outcome

• Mathematically: A function from the sample space $\Omega$ to the real numbers

– discrete or continuous values

• Can have several random variables defined on the same sample space

• Notation:

– random variable $X$

– numerical value $x$
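A minimal sketch of the "random variable as a function" idea, assuming a hypothetical sample space of three coin tosses. The names `omega`, `num_heads`, and `first_is_head` are illustrative and not from the lecture. It shows two random variables defined on the same sample space, each of which is just a function from outcomes to numbers.

```python
from itertools import product

# Hypothetical sample space: all sequences of three coin tosses, e.g. "HTH".
omega = ["".join(tosses) for tosses in product("HT", repeat=3)]

def num_heads(outcome):
    """Random variable: number of heads in the outcome."""
    return outcome.count("H")

def first_is_head(outcome):
    """Random variable: 1 if the first toss is a head, else 0."""
    return 1 if outcome[0] == "H" else 0

# Both random variables live on the same sample space. Evaluating one of
# them at an outcome gives a numerical value (the lowercase x).
for outcome in omega:
    print(outcome, num_heads(outcome), first_is_head(outcome))
```

The function itself plays the role of the capital-letter $X$; the number it returns for a particular outcome plays the role of the lowercase $x$.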

Probability mass function (PMF)

• ("probability law", "probability distribution" of $X$)

• Notation:

$$p_X(x) = P(X = x) = P(\{\omega \in \Omega \text{ s.t. } X(\omega) = x\})$$

• $p_X(x) \ge 0$, $\sum_x p_X(x) = 1$

• Example: $X$ = number of coin tosses until first head

– assume independent tosses, $P(H) = p > 0$

$$p_X(k) = P(X = k) = P(TT \cdots TH) = (1 - p)^{k-1} p, \qquad k = 1, 2, \ldots$$

– geometric PMF

How to compute a PMF $p_X(x)$

– collect all possible outcomes for which $X$ is equal to $x$

– add their probabilities

– repeat for all $x$

• Example: Two independent rolls of a fair tetrahedral die

$F$: outcome of first throw

$S$: outcome of second throw

$X = \min(F, S)$

[Figure: 4 × 4 grid of outcomes; horizontal axis $F$ = First roll, vertical axis $S$ = Second roll, each ranging over 1 to 4]

$p_X(2) = 5/16$
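The three-step recipe for computing a PMF can be sketched in code for the tetrahedral-die example. This is a minimal illustration, assuming all 16 outcomes are equally likely (fair die, independent rolls). The helper name `pmf_of` is hypothetical.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Sample space: the 16 equally likely (F, S) pairs for two rolls of a
# fair four-sided die.
outcomes = list(product(range(1, 5), repeat=2))
prob = Fraction(1, len(outcomes))  # each outcome has probability 1/16

def pmf_of(rv):
    """Group outcomes by the value rv takes on them and add their probabilities."""
    pmf = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for outcome in outcomes:
        pmf[rv(outcome)] += prob
    return dict(pmf)

# X = min(F, S); the built-in min works directly on the (F, S) tuple.
p_X = pmf_of(min)

for x in sorted(p_X):
    print(x, p_X[x])
# prints:
# 1 7/16
# 2 5/16
# 3 3/16
# 4 1/16
```

For $x = 2$ the contributing outcomes are (2,2), (2,3), (2,4), (3,2), and (4,2), which gives $p_X(2) = 5/16$. Exact fractions are used so that the normalization $\sum_x p_X(x) = 1$ can be checked without rounding error.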

Binomial PMF

• $X$: number of heads in $n$ independent coin tosses

• $P(H) = p$

• Let $n = 4$

$$p_X(2) = P(HHTT) + P(HTHT) + P(HTTH) + P(THHT) + P(THTH) + P(TTHH) = 6p^2(1-p)^2 = \binom{4}{2} p^2 (1-p)^2$$

In general:

$$p_X(k) = \binom{n}{k} p^k (1-p)^{n-k}, \qquad k = 0, 1, \ldots, n$$

Expectation

• Definition:

$$E[X] = \sum_x x\, p_X(x)$$

• Interpretations:

– Center of gravity of PMF

– Average in large number of repetitions of the experiment (to be substantiated later in this course)

• Example: Uniform on $0, 1, \ldots, n$

[Figure: PMF $p_X(x)$ with height $1/(n+1)$ at each of $x = 0, 1, \ldots, n-1, n$]

$$E[X] = 0 \times \frac{1}{n+1} + 1 \times \frac{1}{n+1} + \cdots + n \times \frac{1}{n+1} = \frac{n}{2}$$

Properties of expectations

• Let $X$ be a r.v. and let $Y = g(X)$

– Hard: $E[Y] = \sum_y y\, p_Y(y)$

– Easy: $E[Y] = \sum_x g(x)\, p_X(x)$

• Caution: In general, $E[g(X)] \neq g(E[X])$

Properties: If $\alpha$, $\beta$ are constants, then:

• $E[\alpha] = \alpha$

• $E[\alpha X] = \alpha E[X]$

• $E[\alpha X + \beta] = \alpha E[X] + \beta$

Variance

Recall: $E[g(X)] = \sum_x g(x)\, p_X(x)$

• Second moment: $E[X^2] = \sum_x x^2 p_X(x)$

• Variance

$$\mathrm{var}(X) = E\big[(X - E[X])^2\big] = \sum_x (x - E[X])^2 p_X(x) = E[X^2] - (E[X])^2$$

Properties:

• $\mathrm{var}(X) \ge 0$

• $\mathrm{var}(\alpha X + \beta) = \alpha^2 \mathrm{var}(X)$
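The binomial PMF, the expectation rule $E[g(X)] = \sum_x g(x)\, p_X(x)$, and the variance properties can be checked numerically. The sketch below is one way to do so, not a definitive implementation. The helper names (`binomial_pmf`, `expectation`, `variance`) and the parameter values ($p = 1/3$, $n = 10$, $\alpha = 3$, $\beta = 7$) are assumptions chosen for illustration. It needs Python 3.8 or later for `math.comb`.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(n, p):
    """Return {k: C(n, k) p^k (1 - p)^(n - k)} for k = 0, ..., n."""
    return {k: comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)}

def expectation(pmf, g=lambda x: x):
    """E[g(X)] as the sum over x of g(x) p_X(x) (the 'easy' formula)."""
    return sum(g(x) * px for x, px in pmf.items())

def variance(pmf):
    """var(X) = E[(X - E[X])^2]."""
    mean = expectation(pmf)
    return expectation(pmf, lambda x: (x - mean) ** 2)

# Binomial with n = 4 and an illustrative p = 1/3.
p = Fraction(1, 3)
p_X = binomial_pmf(4, p)
assert p_X[2] == 6 * p**2 * (1 - p) ** 2
assert sum(p_X.values()) == 1

# Uniform on 0, 1, ..., n has mean n / 2 (illustrative n = 10).
n = 10
uniform = {x: Fraction(1, n + 1) for x in range(n + 1)}
assert expectation(uniform) == Fraction(n, 2)

# Two routes to the variance agree: E[(X - E[X])^2] = E[X^2] - (E[X])^2.
second_moment = expectation(uniform, lambda x: x**2)
assert variance(uniform) == second_moment - expectation(uniform) ** 2

# Linear transformation Y = alpha * X + beta.
alpha, beta = 3, 7
shifted = {alpha * x + beta: px for x, px in uniform.items()}
assert expectation(shifted) == alpha * expectation(uniform) + beta
assert variance(shifted) == alpha**2 * variance(uniform)

# Caution: E[g(X)] generally differs from g(E[X]); here g(x) = x^2.
assert second_moment != expectation(uniform) ** 2
```

The additive constant $\beta$ moves the mean but leaves the variance unchanged, which is why only $\alpha^2$ appears in $\mathrm{var}(\alpha X + \beta)$.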

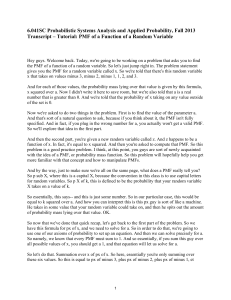

MIT OpenCourseWare

http://ocw.mit.edu

6.041SC Probabilistic Systems Analysis and Applied Probability

Fall 2013

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.