TA: Tonja Bowen Bishop


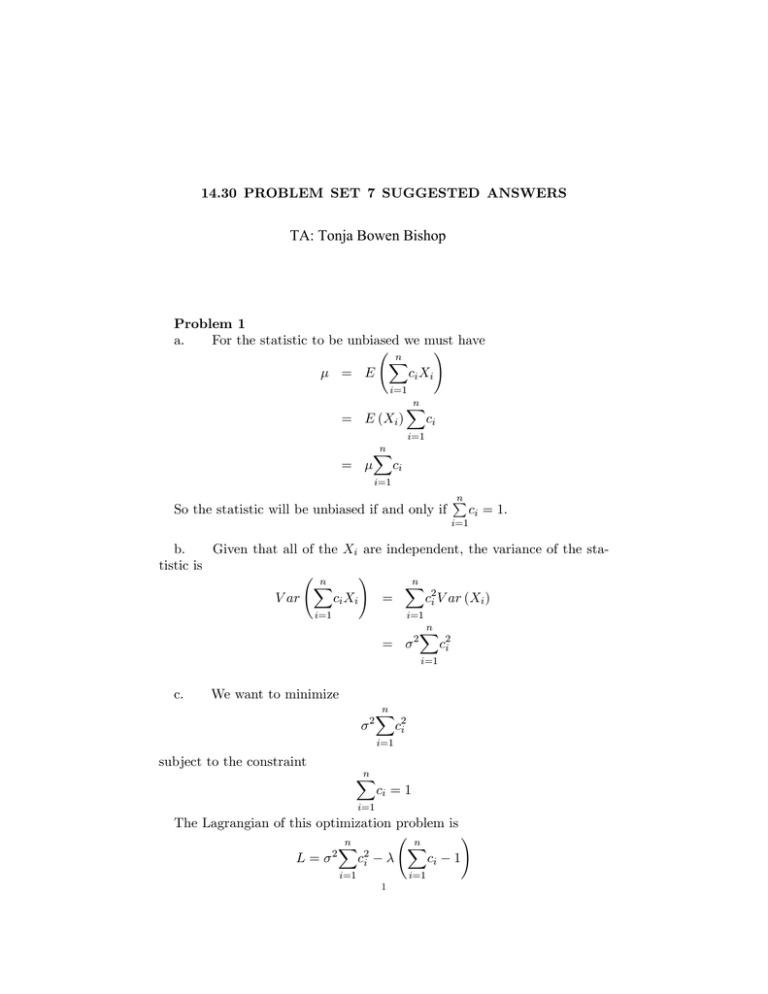

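14.30 PROBLEM SET 7 SUGGESTED ANSWERS

Problem 1

a. For the statistic to be unbiased we must have

$$\mu = E\left(\sum_{i=1}^{n} c_i X_i\right) = E(X_i)\sum_{i=1}^{n} c_i = \mu \sum_{i=1}^{n} c_i .$$

So the statistic will be unbiased if and only if $\sum_{i=1}^{n} c_i = 1$.

b. Given that all of the $X_i$ are independent, the variance of the statistic is

$$Var\left(\sum_{i=1}^{n} c_i X_i\right) = \sum_{i=1}^{n} c_i^2\, Var(X_i) = \sigma^2 \sum_{i=1}^{n} c_i^2 .$$

c. We want to minimize

$$\sigma^2 \sum_{i=1}^{n} c_i^2$$

subject to the constraint

$$\sum_{i=1}^{n} c_i = 1 .$$

The Lagrangian of this optimization problem is

$$\mathcal{L} = \sigma^2 \sum_{i=1}^{n} c_i^2 - \lambda\left(\sum_{i=1}^{n} c_i - 1\right),$$

and we have the first-order conditions

$$\frac{\partial \mathcal{L}}{\partial c_i} = 2\sigma^2 c_i - \lambda = 0 \quad\Longrightarrow\quad c_i = \frac{\lambda}{2\sigma^2} .$$

This implies that all of the $c_i$ are the same, that is, $c_1 = c_2 = \dots = c_n = c$. We can then solve for this common value $c$ by substituting back into the constraint, $\sum_{i=1}^{n} c = 1$, which implies that $c = \frac{1}{n}$.

Problem 2

a.

$$E(\bar{X}) = E\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) = \frac{1}{n}\sum_{i=1}^{n} E(X_i) = \frac{1}{n}\sum_{i=1}^{n} \mu = \mu .$$

So $\bar{X}$ is an unbiased estimator of $\mu$.

b.

$$\begin{aligned}
MSE(\bar{X}) &= \left(E(\bar{X}) - \mu\right)^2 + Var(\bar{X}) \\
&= 0 + Var\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) \\
&= \frac{1}{n^2}\, Var\left(\sum_{i=1}^{n} X_i\right) \\
&= \frac{1}{n^2}\left(\sum_{i} Var(X_i) + \sum_{i}\sum_{j \neq i} Cov(X_i, X_j)\right) \\
&= \frac{1}{n^2}\left(\sum_{i} \sigma^2 + \sum_{i}\sum_{j \neq i} \rho\sigma^2\right) \\
&= \frac{\sigma^2}{n} + \frac{(n-1)\,\rho\sigma^2}{n} .
\end{aligned}$$

c. To determine whether the sample mean is a consistent estimator, we need to see whether the MSE goes to zero as $n$ approaches infinity.

$$\begin{aligned}
\lim_{n\to\infty} MSE(\bar{X}) &= \lim_{n\to\infty}\left(\frac{\sigma^2}{n} + \frac{(n-1)\,\rho\sigma^2}{n}\right) \\
&= \lim_{n\to\infty}\frac{\sigma^2}{n} + \rho\sigma^2 \lim_{n\to\infty}\frac{n-1}{n} \\
&= 0 + \rho\sigma^2 \\
&= \rho\sigma^2 .
\end{aligned}$$

Thus (assuming a positive variance) the sample mean is not a consistent estimator of the population mean unless $\rho = 0$.

Problem 3

a. We know that the first moment of $Z$ is

$$E(Z) = \int_0^\infty z f(z)\,dz = \int_0^\infty z\,\lambda e^{-\lambda z}\,dz = \frac{1}{\lambda} .$$

Then, we calculate our method of moments estimator by setting $E(Z) = \bar{Z}$ and solving for $\lambda$:

$$\frac{1}{\lambda} = \frac{1}{n}\sum_{i=1}^{n} Z_i \quad\Longrightarrow\quad \hat{\lambda}_{MM} = \frac{n}{\sum_{i=1}^{n} z_i} = \frac{1}{\bar{Z}} .$$

b. The exponential pdf is $f(z) = \lambda e^{-\lambda z}$.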
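Thus, the likelihood function for $\lambda$ is

$$L(\lambda; z) = \prod_{i=1}^{n} \lambda e^{-\lambda z_i} .$$

Then, the log likelihood function is

$$\ln L(\lambda; z) = \ln \prod_{i=1}^{n} \lambda e^{-\lambda z_i} = \sum_{i=1}^{n} (-\lambda z_i) + n \ln \lambda .$$

Differentiating with respect to $\lambda$ and setting the derivative to 0,

$$\frac{\partial \ln L(\lambda; z)}{\partial \lambda} = \sum_{i=1}^{n} (-z_i) + \frac{n}{\lambda} = 0 \quad\Longrightarrow\quad \sum_{i=1}^{n} z_i = \frac{n}{\lambda} .$$

c. So we find that the MLE is the same as the MM estimator:

$$\hat{\lambda}_{MLE} = \frac{n}{\sum_{i=1}^{n} z_i} = \frac{1}{\bar{Z}} .$$

Using the invariance property, the MLE for $\sqrt{\lambda}$ is simply

$$\widehat{\sqrt{\lambda}}_{MLE} = \sqrt{\hat{\lambda}_{MLE}} = \frac{1}{\sqrt{\bar{Z}}} = \sqrt{\frac{n}{\sum_{i=1}^{n} Z_i}} .$$

d. We know that $\hat{\lambda}_{MLE}$ will be consistent because MLEs are consistent under standard regularity conditions, which hold here. But is it unbiased? A direct calculation of $E(\hat{\lambda}_{MLE}) = E\left(\frac{1}{\bar{Z}}\right)$ is intractable (note that $E\left(\frac{1}{\bar{Z}}\right) \neq \frac{1}{E(\bar{Z})}$, in general). But we can use Jensen's inequality to help us out, which tells us that for any random variable $X$, if $g(x)$ is a convex function, then $E(g(X)) \geq g(E(X))$, with a strict inequality if $g(x)$ is strictly convex and $X$ is not degenerate. Thus, using $\bar{Z}$ as our random variable and the strictly convex function $g(\bar{Z}) = \frac{1}{\bar{Z}}$, we have

$$E\left(\frac{1}{\bar{Z}}\right) > \frac{1}{E(\bar{Z})} = \frac{1}{E(Z)} = \lambda ,$$

and we know that our estimator is biased. Note that if we had instead used the version of the exponential pdf that replaces $\lambda$ with $\frac{1}{\theta}$, and estimated the parameter $\theta$ by either method, we would have found $\hat{\theta} = \bar{Z}$, which is both unbiased (since $E(\bar{Z}) = \frac{1}{\lambda} = \theta$) and consistent (by the law of large numbers).

Problem 4

a.

$$Bias(\hat{\mu}_{1,n}) = E(\hat{\mu}_{1,n}) - \mu = E[X_n] - \mu = \mu - \mu = 0 ,$$

so $\hat{\mu}_{1,n}$ is unbiased for every $n$.

$$Bias(\hat{\mu}_{2,n}) = E(\hat{\mu}_{2,n}) - \mu = E\left[\frac{1}{n+1}\sum_{i=1}^{n} X_i\right] - \mu = \frac{1}{n+1}\sum_{i=1}^{n} E[X_i] - \mu = \frac{n}{n+1}\mu - \mu = -\frac{\mu}{n+1} \neq 0 ,$$

so $\hat{\mu}_{2,n}$ is biased for $\mu$ for every $n$.

b.

$$\begin{aligned}
\lim_{n\to\infty} P\left(|\hat{\mu}_{1,n} - \mu| < \varepsilon\right) &= \lim_{n\to\infty} P\left(|X_n - \mu| < \varepsilon\right) \\
&= P(\mu - \varepsilon < X_n < \mu + \varepsilon) \\
&= P(X_n \leq \mu + \varepsilon) - P(X_n \leq \mu - \varepsilon) \quad \text{(because the R.V. are continuous)} \\
&= F(\mu + \varepsilon) - F(\mu - \varepsilon) \neq 1 \text{ for some } \varepsilon > 0 .
\end{aligned}$$

Consistency requires this limit to equal 1 for every $\varepsilon > 0$, so $\hat{\mu}_{1,n}$ is not consistent.

As for $\hat{\mu}_{2,n}$:

$$Var(\hat{\mu}_{2,n}) = Var\left[\frac{1}{n+1}\sum_{i=1}^{n} X_i\right] = \left(\frac{1}{n+1}\right)^2 \sum_{i=1}^{n} Var[X_i] = \frac{n\sigma^2}{(n+1)^2} ,$$

so

$$\lim_{n\to\infty} MSE(\hat{\mu}_{2,n}) = \lim_{n\to\infty} Var(\hat{\mu}_{2,n}) + \lim_{n\to\infty} \left(Bias(\hat{\mu}_{2,n})\right)^2 = 0 ,$$

proving that $\hat{\mu}_{2,n}$ is consistent.

c.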
An unbiased estimator is not necessarily consistent; a consistent estimator is not necessarily unbiased.

Problem 5

a. MM: Since we know that $\theta_l = 0$, we only need to use the first moment equation:

$$E(X) = \frac{0 + \theta_h}{2} .$$

Then, the MM estimator is obtained by solving

$$\frac{\hat{\theta}_h}{2} = \frac{1}{n}\sum_{i=1}^{n} X_i = \bar{X} \quad\Longrightarrow\quad \hat{\theta}_h = \frac{2}{n}\sum_{i=1}^{n} X_i = 2\bar{X} .$$

MLE: The uniform pdf is

$$f(x) = \frac{1}{\theta_h - \theta_l} = \frac{1}{\theta_h} ,$$

and the likelihood function and log likelihood function are given (respectively) by

$$L(0, \theta_h; x_1, x_2, \dots, x_n) = \left(\frac{1}{\theta_h}\right)^n , \qquad \ln L(0, \theta_h; x) = -n \ln \theta_h .$$

In order to maximize the log likelihood function above, we need to minimize $\theta_h$ subject to the constraint $x_i \leq \theta_h$ for all $x_i$. Then, it must be that

$$\hat{\theta}_h = \max(x_i) .$$

b. The first two moments are

$$E(X) = \frac{\theta_l + \theta_h}{2} , \qquad E(X^2) = \frac{\theta_l^2 + \theta_l\theta_h + \theta_h^2}{3} .$$

Then, the MM estimators are obtained by solving

$$\frac{\hat{\theta}_l + \hat{\theta}_h}{2} = \frac{1}{n}\sum_{i=1}^{n} X_i = \bar{X} , \qquad \frac{\hat{\theta}_l^2 + \hat{\theta}_l\hat{\theta}_h + \hat{\theta}_h^2}{3} = \frac{1}{n}\sum_{i=1}^{n} X_i^2 = \overline{X^2} .$$

They are given by

$$\hat{\theta}_l = \bar{X} - \sqrt{3\left(\overline{X^2} - \bar{X}^2\right)} , \qquad \hat{\theta}_h = \bar{X} + \sqrt{3\left(\overline{X^2} - \bar{X}^2\right)} .$$

c. We begin with the MM estimator for part a:

$$E(\hat{\theta}_h) = E(2\bar{X}) = 2 \cdot \frac{\theta_h}{2} = \theta_h .$$

So this estimator is unbiased. Then we consider the variance:

$$Var(\hat{\theta}_h) = Var(2\bar{X}) = \frac{4}{n^2}\sum_{i=1}^{n} Var(X_i) = \frac{4}{n} \cdot \frac{\theta_h^2}{12} = \frac{\theta_h^2}{3n} .$$

Because the bias is zero and the variance approaches zero as $n$ gets large, the MSE also approaches zero, and the estimator is consistent.

For the MLE, we use the fact (shown in Problem 6) that, for this estimator, $f(x) = \frac{n x^{n-1}}{\theta_h^n}$. Then we can see that the MLE is biased:

$$E(\hat{\theta}_h) = \int_0^{\theta_h} \frac{n x^n}{\theta_h^n}\,dx = \frac{n}{n+1}\theta_h .$$

However, as $n$ gets large, $\frac{n}{n+1} \to 1$, so the bias approaches zero, and we know that in general, MLEs are consistent.

For the MM estimators in part b, finding the expected value is not particularly tractable nor particularly interesting, so I will retract this portion of the question.

Problem 6

Note that for a $U[0, \theta]$ distribution, $f(x) = \frac{1}{\theta}$, $F(x) = \frac{x}{\theta}$, $E(X) = \frac{\theta}{2}$, and $Var(X) = \frac{\theta^2}{12}$.

a. It will be important that the sample draws are independent (and identical).
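Then,

$$\Pr\left(X_{(n)} \leq x\right) = \Pr\left(\max(X_i) \leq x\right) = \prod_{i=1}^{n} \Pr(X_i \leq x) = F(x)^n = \left(\frac{x}{\theta}\right)^n .$$

b. In part a, we found the cdf of $X_{(n)}$, so the pdf is just the derivative of this:

$$f_{(n)}(x) = \frac{d}{dx} F_{(n)}(x) = \frac{d}{dx}\left(\frac{x}{\theta}\right)^n = \frac{n x^{n-1}}{\theta^n} .$$

c. We want to choose constants $k_1$, $k_2$, and $k_3$ such that our estimators are unbiased. For the first estimator,

$$E(\hat{k}_1 X_2) = \hat{k}_1 E(X_2) = \hat{k}_1 \frac{\theta}{2} = \theta \quad\Longrightarrow\quad \hat{k}_1 = 2 .$$

Then, for the second estimator,

$$E(\hat{k}_2 \bar{X}) = \hat{k}_2 E(\bar{X}) = \hat{k}_2 \frac{\theta}{2} = \theta \quad\Longrightarrow\quad \hat{k}_2 = 2 .$$

And for the third estimator,

$$E(\hat{k}_3 X_{(n)}) = \hat{k}_3 E(X_{(n)}) = \hat{k}_3 \int_0^\theta x\,\frac{n x^{n-1}}{\theta^n}\,dx = \hat{k}_3 \frac{n}{n+1}\theta = \theta \quad\Longrightarrow\quad \hat{k}_3 = \frac{n+1}{n} .$$

d. Now we calculate the variances of our estimators, using the constants that we found in part c.

$$Var(2X_2) = 4\,Var(X_2) = \frac{\theta^2}{3}$$

$$Var(2\bar{X}) = 4\,Var(\bar{X}) = \frac{\theta^2}{3n}$$

$$Var\left(\frac{n+1}{n} X_{(n)}\right) = \left(\frac{n+1}{n}\right)^2 Var\left(X_{(n)}\right)$$

To find $Var(X_{(n)})$, we will need $E(X_{(n)}^2)$:

$$E\left(X_{(n)}^2\right) = \int_0^\theta \frac{n x^{n+1}}{\theta^n}\,dx = \frac{n}{n+2}\theta^2 ,$$

so

$$Var\left(X_{(n)}\right) = \frac{n}{n+2}\theta^2 - \left(\frac{n}{n+1}\right)^2 \theta^2 = \frac{n\,\theta^2}{(n+2)(n+1)^2}$$

and

$$Var\left(\frac{n+1}{n} X_{(n)}\right) = \left(\frac{n+1}{n}\right)^2 \frac{n\,\theta^2}{(n+2)(n+1)^2} = \frac{\theta^2}{n(n+2)} .$$

Thus, whenever $n > 1$,

$$Var\left(\hat{k}_3 X_{(n)}\right) < Var\left(\hat{k}_2 \bar{X}\right) < Var\left(\hat{k}_1 X_2\right) .$$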
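
The variance ranking for the three unbiased estimators of the upper bound of a U[0, θ] sample can be checked numerically. The following is a minimal Monte Carlo sketch and is not part of the original solutions; the function names, the dictionary keys, and the choices θ = 1, n = 10, and 20,000 replications are illustrative assumptions. It uses only the Python standard library.

```python
import random
import statistics


def simulate_estimators(theta=1.0, n=10, reps=20000, seed=0):
    """Draw `reps` samples of size n from U[0, theta] and record the three
    unbiased estimators of theta. Requires n >= 2, since the first estimator
    uses the second observation. All names here are hypothetical."""
    rng = random.Random(seed)
    draws = {"2*X_2": [], "2*Xbar": [], "(n+1)/n*X_(n)": []}
    for _ in range(reps):
        sample = [rng.uniform(0.0, theta) for _ in range(n)]
        draws["2*X_2"].append(2.0 * sample[1])            # second draw only
        draws["2*Xbar"].append(2.0 * sum(sample) / n)     # twice the sample mean
        draws["(n+1)/n*X_(n)"].append((n + 1) / n * max(sample))  # scaled maximum
    return draws


def theoretical_variances(theta, n):
    """Closed-form variances of the same three estimators for U[0, theta]."""
    return {
        "2*X_2": theta ** 2 / 3,
        "2*Xbar": theta ** 2 / (3 * n),
        "(n+1)/n*X_(n)": theta ** 2 / (n * (n + 2)),
    }


# Compare simulated means and variances with the closed-form values.
theta, n = 1.0, 10
simulated = simulate_estimators(theta=theta, n=n)
theory = theoretical_variances(theta, n)
for name, values in simulated.items():
    print(
        f"{name:>14}: mean={statistics.mean(values):.4f}  "
        f"var={statistics.variance(values):.5f}  theory var={theory[name]:.5f}"
    )
```

With a fixed seed the simulated means should sit close to θ for all three estimators, and the simulated variances should fall in the same order as the closed-form values. Increasing `reps` tightens the agreement at the cost of run time.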