10.40 Appendix Connection to Thermodynamics and Derivation of Boltzmann Distribution Bernhardt L. Trout


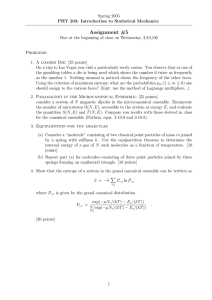

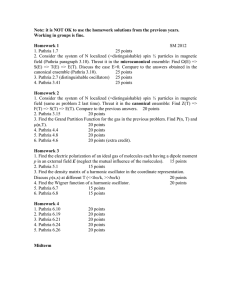

10.40: Fall 2003 Appendix

Outline

• Canonical ensemble
• Maximum term method
• Most probable distribution
• Ensembles continued: Canonical, Microcanonical, Grand Canonical, etc.
• Connection to thermodynamics
• Relation of thermodynamic quantities to Q

0.1 Canonical ensemble

In the canonical ensemble, each system has constant N, V, and T.

[Figure 1: Canonical ensemble. An ensemble of systems (N, V, E_A), (N, V, E_B), (N, V, E_C), ..., (N, V, E_j) in a bath at temperature T.]

After equilibration, remove all of the systems from the bath and put them all together (Figure 2). Apply postulate 2 to the ensemble of systems, also called a supersystem.

Let $n_j$ be the number of systems with energy $E_j$. Then

$$N = \sum_j n_j \quad \text{and} \quad E_{tot} = \sum_j n_j E_j .$$

If we knew the energy $E_j$ of every system, the state of the entire ensemble would be well defined. For example, consider an ensemble of 4 systems, labeled A, B, C, and D, where A has energy $E_2$, B has $E_3$, C has $E_2$, and D has $E_1$. Then

$$E_{tot} = E_1 + 2E_2 + E_3 ,$$

and the distribution of the systems is

$$\vec{n} = (n_1, n_2, n_3, \ldots) = (1, 2, 1).$$

But there are many different supersystems consistent with this distribution. In fact, the number of supersystems consistent with this distribution is

$$\Omega_{tot}(\vec{n}) = \frac{N!}{\prod_j n_j!} = \frac{4!}{1!\,2!\,1!} = 12 .$$

What is the probability of observing a given quantum state, e.g. $E_j$? In other words, what fraction of the systems in the ensemble is in the state $E_j$?

The answer is $n_j / N$.

However, many distributions may fulfill the conditions of the ensemble, $(N, V, E_{tot})$. For example, assume that there are two:

$$n_1 = 1,\; n_2 = 2,\; n_3 = 1;\quad \Omega_{tot} = 12$$

and

$$n_1 = 2,\; n_2 = 0,\; n_3 = 2;\quad \Omega_{tot} = 6 .$$

Then the probability of observing, for example, $E_3$ is $\frac{1}{4}$ in the first distribution and $\frac{1}{2}$ in the second.
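The supersystem counts quoted above can be checked by brute force. The following is a minimal sketch, not part of the original notes. The function names `omega_formula` and `omega_enumerated` are hypothetical, and the integers 1, 2, 3 simply stand in for the states with energies $E_1$, $E_2$, $E_3$.

```python
from itertools import product
from math import factorial


def omega_formula(dist):
    """Number of supersystems N! / prod_j n_j! for a distribution (n_1, n_2, ...)."""
    count = factorial(sum(dist))
    for n_j in dist:
        count //= factorial(n_j)  # each partial quotient is an exact integer
    return count


def omega_enumerated(dist):
    """Count assignments of states to labeled systems that reproduce dist."""
    n_systems = sum(dist)
    states = range(1, len(dist) + 1)  # state j stands for energy E_j
    target = tuple(dist)
    matches = 0
    # Try every way of giving each labeled system (A, B, C, ...) one state.
    for assignment in product(states, repeat=n_systems):
        occupation = tuple(assignment.count(j) for j in states)
        if occupation == target:
            matches += 1
    return matches


for dist in [(1, 2, 1), (2, 0, 2)]:
    print(dist, omega_formula(dist), omega_enumerated(dist))
```

Both functions report 12 for the distribution (1, 2, 1) and 6 for (2, 0, 2), the values used in the text. The enumeration is only practical for very small ensembles, since the number of assignments grows exponentially with the number of systems.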
The probability in the case where both distributions make up the ensemble is:

$$p_3 = \frac{1}{4}\left(\frac{1 \times 12 + 2 \times 6}{12 + 6}\right) = \frac{1}{3} .$$

In general,

$$p_j = \frac{1}{N}\,\frac{\sum_{\vec{n}} \Omega_{tot}(\vec{n})\, n_j(\vec{n})}{\sum_{\vec{n}} \Omega_{tot}(\vec{n})} ,$$

where the sum is over all distributions satisfying the conditions of $(N, V, E_{tot})$. Then, for example, we could compute ensemble averages of mechanical quantities:

$$E = \langle E \rangle = \sum_j p_j E_j$$

and

$$P = \langle P \rangle = \sum_j p_j P_j ,$$

where $P$ is the pressure.

[Figure 2: Canonical ensemble forming its own bath. Systems (N, V, E_A), (N, V, E_B), (N, V, E_C), ..., (N, V, E_j) placed together.]

0.2 Maximum term method

Recall:

$$p_j = \frac{1}{N}\,\frac{\sum_{\vec{n}} \Omega_{tot}(\vec{n})\, n_j(\vec{n})}{\sum_{\vec{n}} \Omega_{tot}(\vec{n})} ,$$

where

$$\Omega_{tot}(\vec{n}) = \frac{N!}{\prod_j n_j!} .$$

As $N \to \infty$, $n_j \to \infty$ for each $j$. Thus, the most probable distribution becomes dominant. We call this distribution $\vec{n}^*$, and we write $n_j = n_j^*$ for the occupation numbers in the $\vec{n}^*$ distribution. Then

$$p_j = \frac{1}{N}\,\frac{\Omega_{tot}(\vec{n}^*)\, n_j^*}{\Omega_{tot}(\vec{n}^*)} = \frac{n_j^*}{N} .$$

0.3 Most probable distribution

Which distribution gives the largest $\Omega_{tot}$? Solve via the method of undetermined multipliers. Take the natural log of $\Omega_{tot}$:

$$\ln \Omega_{tot}(\vec{n}) = \ln\left(\frac{N!}{\prod_i n_i!}\right) = \left(\sum_i n_i\right) \ln\left(\sum_i n_i\right) - \sum_i n_i \ln n_i ,$$

where we have switched the index from $j$ to $i$ and used Stirling's approximation, which becomes exact as $n_i \to \infty$:

$$\ln y! \approx y \ln y - y .$$

We wish to find the set of $n_j$'s that maximizes $\Omega_{tot}(\vec{n})$ and hence $\ln \Omega_{tot}(\vec{n})$:

$$\frac{\partial}{\partial n_j}\left[\ln \Omega_{tot}(\vec{n}) - \alpha \sum_i n_i - \beta \sum_i n_i E_i\right] = 0, \quad j = 1, 2, 3, \ldots$$

where $\alpha$ and $\beta$ are the undetermined multipliers. Carrying out the differentiation yields

$$\ln\left(\sum_i n_i\right) - \ln n_j^* - \alpha - \beta E_j = 0, \quad j = 1, 2, 3, \ldots$$

or

$$n_j^* = N e^{-\alpha} e^{-\beta E_j}, \quad j = 1, 2, 3, \ldots$$

Recalling that

$$N = \sum_j n_j^*$$

yields

$$\sum_j e^{-\alpha} e^{-\beta E_j} = 1$$

or

$$e^{\alpha} = \sum_j e^{-\beta E_j} .$$

Also,

$$\langle E \rangle = \frac{\sum_j n_j^* E_j}{N} = \frac{\sum_j N e^{-\alpha} e^{-\beta E_j} E_j}{N} = \frac{\sum_j E_j e^{-\beta E_j}}{\sum_j e^{-\beta E_j}}$$

and

$$p_j = \frac{n_j^*}{N} = e^{-\alpha} e^{-\beta E_j} = \frac{e^{-\beta E_j}}{\sum_i e^{-\beta E_i}} ,$$

where

$$Q = \sum_j e^{-\beta E_j}$$

is, as we discussed in the last lecture, the partition function, the normalization factor.
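The claim that $n_j^* = N e^{-\beta E_j}/Q$ maximizes $\Omega_{tot}$ subject to fixed $N$ and $E_{tot}$ can be illustrated numerically. The sketch below is an illustration under stated assumptions, not part of the original notes. The three equally spaced levels (0, 1, 2), the value $\beta = 0.7$, and $N = 10^6$ are arbitrary choices, and all function names are hypothetical. It uses the log-gamma function so that $\ln \Omega_{tot}$ is defined for non-integer occupations, and it perturbs the occupations by $(\epsilon, -2\epsilon, \epsilon)$, a shift that preserves both $N$ and $E_{tot}$ only because the levels are equally spaced.

```python
import math


def boltzmann_probabilities(energies, beta):
    """Return (p_j, Q) with p_j = exp(-beta * E_j) / Q."""
    weights = [math.exp(-beta * e) for e in energies]
    q = sum(weights)
    return [w / q for w in weights], q


def ln_omega(occupations):
    """ln(N! / prod_j n_j!), using lgamma(n + 1) = ln(n!) for real n."""
    total = sum(occupations)
    return math.lgamma(total + 1.0) - sum(math.lgamma(n + 1.0) for n in occupations)


# Illustrative assumptions: equally spaced levels, arbitrary beta, large N.
energies = [0.0, 1.0, 2.0]
beta = 0.7
n_total = 1_000_000

p_star, q = boltzmann_probabilities(energies, beta)
n_star = [n_total * p for p in p_star]


def shifted(eps):
    """Move systems between levels while keeping N and E_tot fixed."""
    return [n_star[0] + eps, n_star[1] - 2.0 * eps, n_star[2] + eps]


for eps in (-1000.0, 0.0, 1000.0):
    print(eps, ln_omega(shifted(eps)))
```

The unshifted Boltzmann occupations give the largest value of $\ln \Omega_{tot}$ among the three cases printed. This is a spot check along one constrained direction, not a proof of the maximum.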
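0.4 Canonical ensemble continued and connection to thermodynamics

Recall from last time, via the maximum-term method in the canonical ensemble:

$$\langle E \rangle = \frac{\sum_j n_j^* E_j}{N} = \frac{\sum_j N e^{-\alpha} e^{-\beta E_j} E_j}{N} = \frac{\sum_j E_j e^{-\beta E_j}}{\sum_j e^{-\beta E_j}}$$

and

$$p_j = \frac{n_j^*}{N} = e^{-\alpha} e^{-\beta E_j} = \frac{e^{-\beta E_j}}{\sum_i e^{-\beta E_i}} ,$$

where

$$Q = \sum_j e^{-\beta E_j}$$

is, as we discussed in the last lecture, the partition function, the normalization factor. In addition, as we have shown:

$$E = \langle E \rangle = \sum_j p_j E_j$$

and

$$P = \langle P \rangle = \sum_j p_j P_j ,$$

where $P$ is the pressure.

If we differentiate the equation for $\langle E \rangle$, substitute $E_j = -\frac{1}{\beta}(\ln p_j + \ln Q)$ from the expression for $p_j$, and note that at constant $N$ the energy levels depend only on $V$, we obtain

$$d\langle E \rangle = \sum_j E_j\, dp_j + \sum_j p_j\, dE_j = -\frac{1}{\beta} \sum_j (\ln p_j + \ln Q)\, dp_j + \sum_j p_j \left(\frac{\partial E_j}{\partial V}\right)_N dV . \tag{1}$$

Recall that the pressure is

$$P = -\left(\frac{\partial E}{\partial V}\right)_N$$

or, for state $j$,

$$P_j = -\left(\frac{\partial E_j}{\partial V}\right)_N .$$

This yields for equation 1

$$d\langle E \rangle = -\frac{1}{\beta} \sum_j \ln p_j\, dp_j - \frac{1}{\beta} \sum_j \ln Q\, dp_j - \sum_j p_j P_j\, dV .$$

[Note that

$$d\left(\sum_j p_j \ln p_j\right) = \sum_j \ln p_j\, dp_j + \sum_j p_j\, d(\ln p_j) = \sum_j \ln p_j\, dp_j + \sum_j p_j \frac{dp_j}{p_j} . \tag{2}$$

Since

$$\sum_j p_j = 1 ,$$

$$\sum_j dp_j = 0 .]$$

Thus, the right term in equation 2 is equal to 0. The $\ln Q$ term in the expression for $d\langle E \rangle$ vanishes for the same reason, because $\ln Q$ does not depend on $j$. This yields

$$d\langle E \rangle = -\frac{1}{\beta}\, d\left(\sum_j p_j \ln p_j\right) - \langle P \rangle\, dV .$$

Recall the combined first and second laws (in intensive form, noting that since N is a constant, intensive and extensive forms are equivalent):

$$dU = T\, dS - P\, dV .$$

Since $U \leftrightarrow \langle E \rangle$ and $P \leftrightarrow \langle P \rangle$,

$$T\, dS \leftrightarrow -\frac{1}{\beta}\, d\left(\sum_j p_j \ln p_j\right) .$$

Let

$$X = -\sum_j p_j \ln p_j .$$

Then,

$$dS = \frac{1}{\beta T}\, dX . \tag{3}$$

We know that the left side of the equation is an exact differential, so the right side must be too, and thus $\frac{1}{\beta T}$ must be a function of $X$. This means that

$$dS = \phi(X)\, dX = df(X) .$$

Integrating,

$$S = f(X) + \text{const}, \tag{4}$$

where we can set the arbitrary constant, const, equal to 0 for convenience.

Now we can make use of the additive property of $S$ and divide a system into two parts, A and B. This yields:

$$S = S^A + S^B = f(X^A) + f(X^B) . \tag{5}$$

Note that

$$X^{A+B} = -\sum_{i,j} p_{i,j} \ln p_{i,j} ,$$

where $i$ is the index for the possible states of A and $j$ is the index for the possible states of B.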
Then, taking A and B to be independent so that $p_{i,j} = p_i^A p_j^B$,

$$X^{A+B} = -\sum_{i,j} p_i^A p_j^B \left(\ln p_i^A + \ln p_j^B\right) = -\sum_i p_i^A \ln p_i^A - \sum_j p_j^B \ln p_j^B = X^A + X^B ,$$

where the second step uses $\sum_i p_i^A = \sum_j p_j^B = 1$. Thus, from equation 5,

$$S = f(X^A) + f(X^B) = f(X^A + X^B) .$$

For this to be so,

$$f(X) = kX ,$$

where $k$ is a constant. Thus,

$$S = -k \sum_j p_j \ln p_j . \tag{6}$$

From equations 3 and 4,

$$\frac{1}{\beta T} = k ,$$

and thus,

$$\beta = \frac{1}{kT} .$$

We designate $k$ as Boltzmann's constant, a universal constant.

0.5 Microcanonical, Grand Canonical, and other ensembles

Recall the formulation for $S$ from equation 6, and note that in the microcanonical ensemble,

$$p_j = \frac{1}{\Omega} ,$$

where $\Omega$ is the total number of states with the same energy. Then

$$S = -k \sum_j p_j \ln p_j = -k \sum_j \frac{1}{\Omega} \ln \frac{1}{\Omega} = k \ln \Omega(N, V, E) .$$

This is Boltzmann's famous formula for the entropy.

In the Grand Canonical ensemble, the number of particles in each system is allowed to fluctuate, but $\mu$ is kept constant. This is called the $(V, T, \mu)$ ensemble. There are also other ensembles, such as $(N, P, T)$, etc. Note that from an analysis of fluctuations (Lecture 27), we shall see that in the macroscopic limit of a large number of systems, all of these ensembles are equivalent.

0.6 Relation of thermodynamic quantities to Q

Recall that

$$S = -k \sum_j p_j \ln p_j , \qquad p_j = \frac{e^{-\beta E_j}}{Q} , \qquad Q = \sum_j e^{-\beta E_j} .$$

Plugging the formula for $p_j$ into that for $S$ yields

$$S = -k \sum_j \frac{e^{-\beta E_j}}{Q} \ln\left(\frac{e^{-\beta E_j}}{Q}\right) = -k \sum_j \frac{e^{-\beta E_j}}{Q} \left(-\frac{E_j}{kT} - \ln Q\right) = \frac{\langle E \rangle}{T} + k \ln Q .$$

Recalling the definition $A = U - TS$ from macroscopic thermodynamics and the fact that $U \leftrightarrow \langle E \rangle$ yields

$$A = -kT \ln Q .$$

Similarly,

$$S = -\left(\frac{\partial A}{\partial T}\right)_{V, N_i} = kT \left(\frac{\partial \ln Q}{\partial T}\right)_{V, N_i} + k \ln Q ,$$

$$P = -\left(\frac{\partial A}{\partial V}\right)_{T, N_i} = kT \left(\frac{\partial \ln Q}{\partial V}\right)_{T, N_i} ,$$

$$U = A + TS = kT^2 \left(\frac{\partial \ln Q}{\partial T}\right)_{V, N_i} .$$

Thus, all thermodynamic properties can be written in terms of the partition function, $Q(N, V, T)$! In order to compute $Q$, all we need are the possible energy levels of the system. We can obtain these by solving the equations of quantum mechanics.
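The relations between $Q$ and the thermodynamic quantities can be spot-checked numerically for a small set of energy levels. The following is a minimal sketch under stated assumptions, not part of the original notes. It works in reduced units with $k = 1$, the three energy levels and the temperature are arbitrary illustrative values, and the function names are hypothetical. The derivative of $\ln Q$ with respect to $T$ is approximated by a central finite difference.

```python
import math


def canonical_properties(energies, temperature, k=1.0):
    """Compute Q, U = <E>, S (two ways) and A from a list of energy levels."""
    beta = 1.0 / (k * temperature)
    weights = [math.exp(-beta * e) for e in energies]
    q = sum(weights)
    probs = [w / q for w in weights]
    u = sum(p * e for p, e in zip(probs, energies))      # U <-> <E>
    s_gibbs = -k * sum(p * math.log(p) for p in probs)   # S = -k sum p ln p
    s_from_q = u / temperature + k * math.log(q)         # S = <E>/T + k ln Q
    a = -k * temperature * math.log(q)                   # A = -kT ln Q
    return {"Q": q, "U": u, "S_gibbs": s_gibbs, "S_from_Q": s_from_q, "A": a}


def u_from_derivative(energies, temperature, k=1.0, h=1e-5):
    """U = k T^2 (d ln Q / dT), with the derivative taken by central difference."""
    def ln_q(t):
        return math.log(sum(math.exp(-e / (k * t)) for e in energies))

    dlnq_dt = (ln_q(temperature + h) - ln_q(temperature - h)) / (2.0 * h)
    return k * temperature ** 2 * dlnq_dt


# Illustrative assumptions: three arbitrary levels, reduced units (k = 1).
levels = [0.0, 1.0, 2.5]
temp = 1.5
props = canonical_properties(levels, temp)
print(props)
print("U from d ln Q / dT:", u_from_derivative(levels, temp))

# Microcanonical limit: equal energies give p_j = 1/Omega and S = k ln Omega.
degenerate = canonical_properties([0.0] * 5, temp)
print(degenerate["S_gibbs"], math.log(5))
```

The two entropy expressions agree to rounding error, $A$ equals $U - TS$, and the finite-difference estimate of $U$ matches $\langle E \rangle$ to within the accuracy of the difference step. The last line shows that five degenerate states reproduce $S = k \ln \Omega$ with $\Omega = 5$.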