A feature-based semivowel recognition system

advertisement

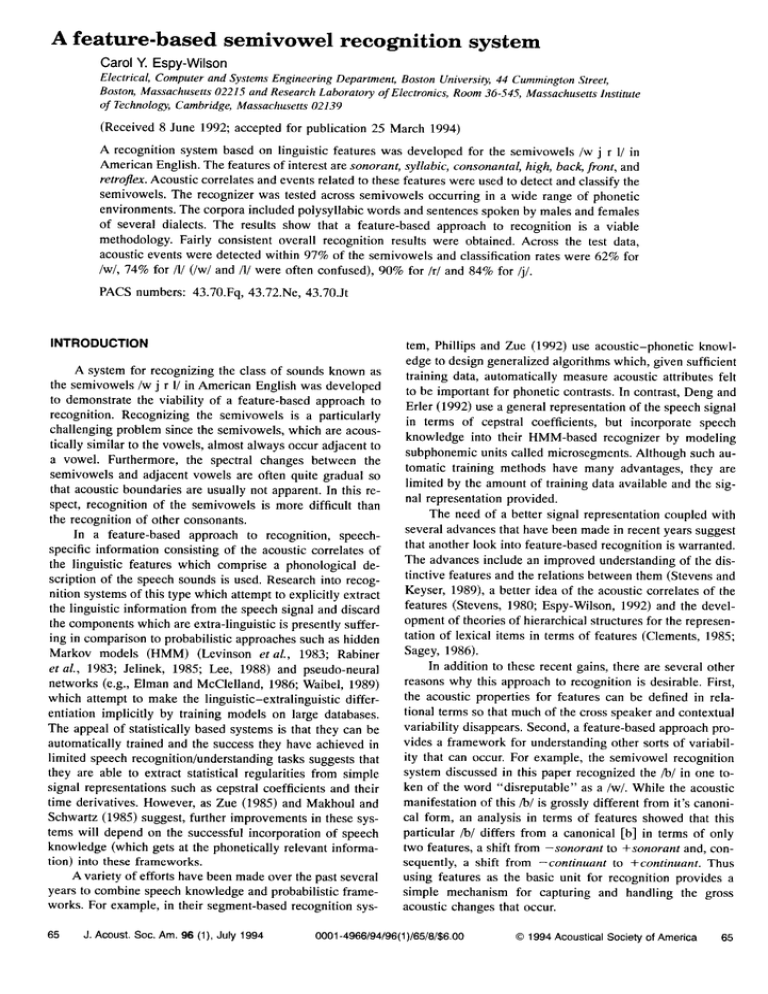

A feature-basedsemivowelrecognition system CarolY. Espy-Wilson Electrical, Computer andSystems Engineering Department, Boston University, 44Cummington Street, Boston, Massachusetts 02215andResearch Laboratory ofElectronics, Room36-545,Massachusetts' Institute of Technology, Cambridge,Massachusetts 02139 (Received 8 June1992;accepted forpublication 25 March1994) A recognition system based onlinguistic features wasdeveloped for thesemivowels/w j r 1/in American English. Thefeatures of interest aresonorant, syllabic, consonantal, high,back, front,and retroflex. Acoustic correlates andevents related tothese features wereused todetect andclassify the semivowels. Therecognizer wastested across semivowels occurring in a widerangeof phonetic environments. Thecorpora included polysyllabic words andsentences spoken bymalesandfemales of several dialects. The results showthata feature-based approach to recognition is a viable methodology. Fairly consistent overallrecognition resultswereobtained. Acrossthe testdata, acousticeventswere detectedwithin 97% of the semivowelsand classificationrateswere 62% for /w/, 74%for/1/(/w/and/1/were oftenconfused), 90%for/r/and84%for/j/. PACSnumbers:43.70.Fq,43.72.Ne,43.70.Jt INTRODUCTION tern,PhillipsandZue (1992) useacoustic-phonetic knowledgeto designgeneralized algorithms which,givensufficient A systemfor recognizingthe classof soundsknown as trainingdata, automaticallymeasureacousticattributesfelt thesemivowels/w j r 1/inAmerican English wasdeveloped to be important for phonetic contrasts. In contrast, Dengand to demonstrate the viabilityof a feature-based approach to Erler(1992)usea general representation of thespeech signal recognition. Recognizing the semivowels is a particularly in termsof cepstralcoefficients, but incorporate speech challenging problemsincethe semivowels, whichare acousknowledge into theirHMM-basedrecognizer by modeling ticallysimilartothevowels, almost alwaysoccuradjacent to subphonemic unitscalledmicrosegments. Althoughsuchaua vowel. Furthermore, the spectralchangesbetweenthe tomatictrainingmethodshavemanyadvantages, they are semivowels andadjacent vowelsareoftenquitegradualso limited by the amount of training data available and thesigthatacoustic boundaries areusuallynotapparent. In thisrenal representation provided. spect,recognitionof the semivowelsis more difficult than The needof a bettersignalrepresentation coupledwith the recognitionof otherconsonants. several advances that have been made in recent years suggest In a feature-based approachto recognition,speechthat another look into feature-based recognition is warranted. specificinformationconsisting of the acousticcorrelates of includean improved understanding of thedisthe linguisticfeatureswhichcomprise a phonological de- The advances tinctive features and the relations between them (Stevens and scription of thespeech sounds is used.Research intorecogKeyser, 1989), a better idea of the acoustic correlates of the nitionsystems of thistypewhichattemptto explicitlyextract features (Stevens, 1980; Espy-Wilson, 1992) and the develthelinguistic information fromthespeech signalanddiscard of hierarchical structures for therepresenthecomponents whichareextra-linguistic is presently suffer- opmentof theories ingin comparison to probabilistic approaches suchashidden tation of lexical itemsin termsof features(Clements,1985; Markov models (HMM) (Levinsonetal., 1983; Rabiner Sagey,1986). In additionto theserecentgains,thereare severalother et al., 1983;Jelinek,1985;Lee, 1988)andpseudo-neural networks (e.g.,ElmanandMcClelland,1986;Waibel,1989) reasonswhy thisapproachto recognitionis desirable.First, whichattemptto makethe linguistic-extralinguistic differ- the acousticpropertiesfor featurescan be definedin relaandcontextual entiationimplicitlyby trainingmodelson largedatabases. tionaltermssothatmuchof thecrossspeaker Second, a feature-based approach proThe appealof statistically basedsystems is thattheycanbe variabilitydisappears. videsa frameworkfor understanding othersortsof variabilautomatically trainedandthesuccess theyhaveachieved in recognition limitedspeech recognition/understanding taskssuggests that ity thatcanoccur.For example,the semivowel in thispaperrecognized the/b/in onetotheyareableto extractstatistical regularides fromsimple systemdiscussed as a/w/. While the acoustic signalrepresentations suchas cepstralcoefficients andtheir ken of the word "disreputable" time derivatives.However,as Zue (1985) and Makhoul and manifestation of this/b/is grosslydifferentfrom it's canoniSchwartz (1985)suggest, furtherimprovements in thesesys- cal form, an analysisin termsof featuresshowedthat this temswill dependon thesuccessful incorporation of speech particular/b/differsfroma canonical [b] in termsof only knowledge (whichgetsat thephonetically relevant informa- two features,a shiftfrom -sonorant to +sonorantand,contion) into these frameworks. sequently, a shift from -continuant to +continuant. Thus A varietyof effortshavebeenmadeoverthepastseveral yearsto combinespeechknowledgeandprobabilistic frameworks.Forexample,in theirsegment-based recognition sys- usingfeaturesas the basicunit for recognition providesa simplemechanism for capturingand handlingthe gross 65 J.Acoust. Soc.Am.96 (1),July1994 acousticchangesthat occur. 0001-4966/94/96(1)/65/8/$6.00 ¸ 1994Acoustical Society ofAmerica 65 TABLE L Mappingof featuresinto acousticproperties. Feature Sohorant Acousticcorrelate Comparablelow- and highfrequencyenergy Parameter Energyratio (0-300) Property High• (3700-7000) Nonsyllabic Dip in midfrequencyenergy Energy640-2800Hz Energy2000-3000Hz Low• Lowa Consonantal High Back Front Retroflex Abrupt amplitudechange Low F• frequency Low F 2 frequency High F 2 frequency Low F 3 frequencyand closeF 2 and F 3 Firstdifferenceof adjacentspectra F• -F 0 High Low Low High Low Low "Relative to a maximum F2F 2F 3F3 - F• Fl F0 F2 value within the utterance. Finally, in a feature-based approachto recognition,an acoustic-event-oriented as opposedto a segment-oriented schemecanbe usedfor recognition. In traditionalapproaches to recognition,either the speechsignal is segmentedinto phoneme-sized piecesto whichlabelsare assigned, or labels are assignedon a frame-by-framebasis.Soundslike the semivowels posea problemfor suchapproaches sincethere are often no obvious acoustic boundaries between them and adjacentsounds.In contrast,the systemdiscussed in this paperidentifies specificacoustic eventsaroundwhichacoustic properties for featuresare extracted. This event-oriented approach led to the detectionof selectacousticlandmarks whichsignaledthe presence of 97% of the semivowels. Anotheradvantage offeredby anevent-oriented approach is that thereis no underlyingassumption that soundsare nonoverlapping.Thus it allowsfor the possibilityof recognizing soundsthatare completelyor partiallycoarticulated. I. METHOD A. Stimuli To developthe recognition system,a database of 233 polysyllabic wordscontainingsemivowels in a varietyof phoneticenvironments was selectedfrom the 20 000-word Merriam-WebsterPocketdictionary.The semivowelsoccur adjacentto voicedand unvoicedconsonants, as well as in glottalizationand othertypesof utterance-final variability. The speakerswere recordedin a quietroomwith a pressuregradient close-talking noise-cancelling microphone (partof a SennheiserHMD 224X microphone/headphone combination).They were instructed to saythe utterances at a natural pace. For development of the recognitionalgorithms,each wordwas spokenonceby two femalesandtwo males.The females are from the northeast and the males are from the midwest.For testing,eachword was spokenonceby two additionalspeakers, onefemaleandonemalefromthesame geographical areascitedabove.The speakers were students andemployees at theMassachusetts Instituteof Technology. All are nativespeakers of Englishand reportedno hearing loss. In addition to the latter database,we also tested the rec- ognitionsystemon 14 repetitions of sentence-1 (6 females and8 males)and15 repetitions of sentence-2 (7 femalesand 8 males).The speakers cover7 U.S. geographical areasand an "other" categoryusedto classifytalkerswho moved aroundoften duringtheir childhood.Like the wordsin the otherdatabases, thesesentences were recordedusinga closetalkingmicrophone. C. Segmentation and labeling The polysyllabic wordswereexcisedfromtheircarrier phraseafter they were digitizedwith a 6.4-kHz low-pass filter and a 16-1d-Izsamplingrate, and pre-emphasized to unstressed, high and low, and front and back.A moredecompensate for the relativelyweak spectralenergyat high tailed discussion of this databaseis given in Espy-Wilson frequencies (a particularissuefor sonorants). To facilitate (1992). analysis andrecognition, thewordsweresegmented andlaTo testthe recognition system,the samedatabase anda beledby theauthorwith thehelpof playbackanddisplays of small subsetof the TIMIT database(Lamel et al., 1986) was severalattributesincludingLPC and widebandspectra,the used.In particular,the sentences "She had your dark suit in speechsignal and variousbandlimitedenergywaveforms. greasywashwaterall year"(sentence-l) and"Don'taskme The Merriam-WebsterPocketdictionaryprovideda baseline to carryanoilyraglike that"(sentence-2) werechosen since phonemic transcription of thewords.However,modifications they containseveralsemivowelsin a numberof contexts. of some of the labels were made basedon the speakers' However,note that many of the contextsrepresented in the pronunciations (for moredetails,seeEspy-Wilson, 1992). polysyllabic wordsarenot includedin the sentences. word-initial, word-final, and intervocalic positions.The semivowels occuradjacentto vowelswhichare stressed and D. Feature analysis B. Speakers and recordings The polysyllabicwordswere embeddedin the carrier phrase"_ pa." The final "pa" was addedin orderto avoid 66 d. Acoust.Soc.Am.,Vol.96, No. 1, July1994 The featureschosento separatethe semivowelsas a classfrom othersoundsaresonorant,nonsyllabic,andnasal. The featuresselectedto distinguishamongthe semivowels CarolY. Espy-Wilson: Semivowelrecognition system 66 TABLEII. Acoustic events whichmaysignalthepresence of semivowels. producedwith a more "front" articulationthan adjacent sounds. Retroflexed and some labial sounds should contain Acoustic events Semivowel Energy dip F2 dip w X y x r x x I x x F 2 peak F 3 dip X X x F 3 peak X x x x anF3 dip. Finally,anF 3 peakshouldoccurin thesemivowels/l/and/j/. In addition,some/w/'swhichare in a retrof- lexedenvironment mayalsocontain anF 3 peaksinceF 3 is generallyhigherin a/w/than it is in an adjacent retroflexed sound.The methodology usedto detecttheseacousticevents differs,depending uponwhetherthesemivowels areprevo- calic, postvocalic,or intersonorant.For more details, see Espy-Wilson(1987). areconsonantal, high,back,front, andretroflex.A moredeOnce all acousticeventshavebeenmarked,the classifitaileddiscussion of thesefeaturesaregivenin Espy-Wilson cationprocessintegratesthem,extractsthe neededacoustic (1992). properties,and throughexplicit semivowelrules decides An acoustic study(Espy-Wilson, 1992)wascarriedout whetherthe detectedsoundis a semivoweland, if so, which in orderto supplement datain the literature(e.g.,Lehiste, 1962) to determineacousticcorrelatesfor the features.The mappingbetweenfeaturesand acousticpropertiesand the parametersusedin this processare shownin Table I. (The acousticpropertiesin Table I have been refinedsince the development of thesemivowel recognition system.For a discussion,seeEspy-Wilson,1992.)To minimizetheirsensitivity to speaker,speakingrate and speakinglevel, all of the properties in TableI are basedon relativemeasures as opposedto absolute onessuchas the frequencies and amplitudesof spectral prominences. The relativeproperties areof two types.First, thereare propertieswhich examinean attributein onespeechframerelativeto anotherspeechframe. For example,the propertyusedto capturethe nonsyllabic featurelooksfor a dropin eitherof twomidfrequency energies with respectto surrounding energymaxima.Second, thereareproperties which,withina givenspeech frame,examineone part of the spectrumin relationto another.For example,thepropertyusedto capturethefeatures front and semivowelit is. At this time,by combiningall the relevant acousticcues,the recognizercancorrectlyclassifythe semivowelswhile the remainingdetectedsoundsshouldbe left unclassified. Specificruleswere appliedto integratethe extracted acousticpropertiesfor identificationof the semivowelsin prevocalic, intersonorant, andpostvocalic contexts? Given the acousticsimilaritybetweenmany/w/'s and/l/'s, a/w-l/ rulewagificluded forsounds thatwerejudged tobeequally likely-a/v;q or/1/.Therulesaredependent uponcontext so thatthey•aPture well knownacoustic differences dueto allophonic9ariation.For example,theprevocalic/1/rule states thatthe/1/.•an have either a gradual orabrupt offset, allow- ing for [he possibilityof an abruptrate of spectralchange between.the /1/ and following vowel. Severalresearchers (Joos,1948; Fant, 1960; Daiston,1975) have observeda sharp spectraldiscontinuitybetween/1/ and following stressed vowelsandtheyattributethisto therapidreleaseof the tonguetip fromthealveolarridgein theproduction of a backmeasures thedifference between F2 andFl. prevocalic/1/. On the other hand, the postvocalic/1/ rule To quantifythe properties, we useda frameworkmotirequiresthatthe rateof spectralchangebetweenthe/1/and vatedby fuzzysettheory(De Mort, 1983),whichassigns a precedingvowel be gradualsincealveolarcontactis often valuewithin the range[0,1]. A valueof 1 meansthatthe notrealizedor is realizedonlygraduallyin theproduction of propertyis definitelypresent,while a valueof 0 meansthatit a postvocalic/1/. is definitelyabsent. Valuesbetweentheseextremes represent The propertiesin the rulesare combinedusingfuzzy a fuzzyareaindicating thelevelof certainty thattheproperty logic.In thefuzzylogicframework,additionis analogous to is present/absent. a logical"or" and the resultof this operationis the maximumvalueof theproperties beingconsidered. Multiplication E. Recognitionstrategy of two or moreproperties is analogous to a logical"and." In The recognitionstrategyfor the semivowelsis divided thiscase,the resultis the minimumvalueof the properties into two steps:detectionand classification. The detection beingoperatedon. Sincethe value of any propertyis beprocess markscertaineventssignaled by changes in thepa- tween0 and 1, the resultof any rule is alsobetween0 and 1. We chose0.5 to be the dividingpointfor classification. That rameterslistedin TableI. The process beginsby findingall ruleis appliedreceives sonorantregionswithin an utterance.Next, certainacoustic is, if thesoundto whicha semivowel a scoregreaterthan or equal to 0.5, it will be classifiedas eventsaremarkedwithintheSOhorant regionsonthebasisof that semivowel. substantialenergychangeand/or substantialformant movement. Resultsof an acousticstudy(Espy-Wilson,1992) II. RESULTS showedthattheeventslistedin TableII usuallyoccurwithin thedesignated semivowel(s). Dip detection 2 is performed Thesemivowel recognizer is evaluated by comparing its withinthe timefunctionsrepresenting themidfrequency enoutputwith thehandtranscription. Giventhattheplacement ergiesto locateall nonsyllabic sounds.In addition,dip deof segmentboundaries is subjective, someflexibilityis used tection andpeakdetection 3areperformed onthetracks ofF2 andF•. An F2 dipshould be foundin sounds whichare producedwith a more "back" articulationthan adjacent sounds.An F 2 peak shouldbe found in soundswhich are 67 J. Acoust.Sec.Am.,Vol.96, No. 1, July1994 in tabulatingthe detectionand classificationresults.A semi- vowelis considered detectedif an energydip and/oroneor moreformantextremais placedsomewhere betweenthe beginning(minus10 ms) andend (plus10 ms) of its handCarolY. Espy-Wilson: Semivowel recognition system 67 TABLEIII. Overallpercent recognition results forthesemivowels. "nc"arethose sounds whichweredetected but "not classified"as a semivowelby any of the rules. Detection Classification Original w I r j 369 540 558 222 No. tokens detected 99 97 97 96 Energydip F 2 dip m2 peak F 3 dip F 3 peak 47 97 0 41 21 $1 83 0 10 54 36 46 I 95 1 35 0 92 2 78 No. tokens w 1 r j 369 540 558 222 undetected 1 3 3 4 w 1 w-I r j 52 9 31 4 0 8 56 30 0 0 3 0 0 90 0 0 0 0 0 94 nc 2 3 5 5 New speakers w I r j No. tokens 181 274 279 105 No. tokens w 1 r j 181 274 279 105 detected 98 99 96 98 undetected 2 I 4 2 Energydip F 2 dip F2 peak F 3 dip 49 93 0 37 59 85 0 7 44 49 1 90 41 0 95 0 w I w-I r 48 13 29 4 4 58 34 0 2 0 0 91 0 0 0 0 F 3 peak 30 69 2 87 j 0 0 0 85 nc 7 3 4 13 TIMIT w I r j No. tokens 28 40 49 23 No. tokens detected 96 93 100 96 undetected Energydip F 2 dip F 2 peak F 3 dip F 3 peak 61 93 0 47 61 89 83 0 36 50 57 0 91 0 70 w I w-1 r j nc 0 61 65 0 94 4 transcribed regionby the appropriate detectionalgorithms. The arbitrarilychosen10-msmarginwas not alwayslarge enoughto includeall of the detectedacousticeventsoccurringduringthesemivowels. Thus,for about1% of thesemi- w I r j 28 40 i 49 23 4 7 0 4 46 22 22 7 0 10 53 25 0 0 0 0 0 90 0 0 0 0 0 79 5 10 17 the right sideof the table.As before,the top row liststhe semivowels. The followingrowsshowthe numberof semivowel tokenstranscribed,the numberwhich were undetected (thecomplement of the corresponding numberin the detection results)and the percentage of thosesemivoweltokens detection results. transcribedwhich were classifiedby the semivowelrules. A. Semivowel recognition results For example,the resultsfor originalshowthat 90% of the 558 tokensof/r/which were transcribedwere correctlyclasThe overall recognitionresultsfor the databasesare compared in TableIII [-moredetailedrecognition resultsac- sified. The term "nc" (in the bottom row) for "not classicordingto variouscontextsare given in (Espy-Wilson, fied" meansthatoneor moresemivowelruleswasappliedto sound,but theclassification score(s) wasless 1987)]. The databaseusedto developthe recognitionsystem thedetected than0.5.A semivowel whichisconsidered undetected may is referredto as "original." "New speakers"refer to the words containedin original which were spokenby new showup in the classification resultsas being recognized. speakers. Finally,TIMIT refersto the sentences takenfrom Thus the numbers in a column within the classification retheTIMIT corpus.On the left sideof the tablearethe detec- sultsmay not alwaysadd up to 100%. tionresultswhicharegivenseparately for eachdatabase. The Whencomparingthe recognition resultsof the threedatop row lists the semivowels.The followingrows showthe tabases,the differencesbetweenTIMIT and the other coractual numberof semivowelsthat were transcribed(No. toporashouldbe keptin mind.Recallthatthe speakers of the kens),thepercentage of semivowels thatcontainoneor more originaland new speakers databases werefrom two dialect acousticeventsduringtheir hand-transcribed region(deregionswhereasthe speakersof the TIMIT databasewere tected),andthe percentage of semivowels thatcontaineach from severalgeographicalareas.In addition,the sparseness type of acousticeventmarkedby the detectionalgorithms. of the semivowelsin TIMIT affectsthe recognitionresults. For example,thedetection tablefor originalstatesthat97% Several of the contextsrepresentedin original and new of the transcribed/w/'scontainedan F 2 dip within their segspeakerswhere the semivowelsreceivehigh recognition mentedregion. scores don't occur in TIMIT. The classificationresultsfor eachdatabaseare given on vowels, further correctionswere made when tabulatingthe 68 d. Acoust.Soc.Am.,Vol.96, No. 1, July1994 CarolY. Espy-Wilson: Semivowelrecognition system 68 TABLE IV. Percentrecognition of olhersounds assemivowels. The results "disreputable" fororiginal,newspeakers, andTIMIT arelumped together. I Idizl s Irli PøJPljcHdJ^JbJ ! I J Nasals Others Vowels 740 764 3919 26 78 w 3 I I 10 4 fi 3 1 3 6 No. tokens undetected w-[ I r 2 I j 5 • 9 51 14 42 nc 1. Detection ' •, •'I•( I kHz 0.0 0.1 0.2 0.3 0.4 0.5 results In spite of the differencesbetweenthe databases, the detectionresultsare fairly consistent. The resultsfrom all threedatabases showtheimportance of usingformantinformationin additionto energymeasures. Acrosscontexts,F 2 minima are most importantin locating/w/'s and /1/'s, F 3 minimaare mostimportantin locating/r/'s,andF: maxima are mostimportantin locating/j/'s. When in an intervocalic context,however,the detectionresultsusing only energy minima comparefavorablywith thoseusing the cited formant minimum/maximum. Due to formant transitions between semivowels and ad- jacentconsonants and the placementof segmentboundaries TIME recognized os[w-l[ FIG. 1. Widehandspectrogram of theword "disreputable"whichcontainsa lenited/b/Ihat was recognizedas/w-l[. weakenedconstriction,cf. Catford, 1977) so that they are recognizedas semivowels. The latter soundsare grouped into a classcalled"others"andtheirrecognitionresultsare shownin Table IV. An exampleof thistype of confusionis shownin Fig. I wherethe intervocalic /b/ in "disreputable" was classifiedas/w-l/. As canbe seenfrom the spectrogram consonant. In adin thehandtranscription, therearea few eventslistedin the in Fig. 1, thefo/is realizedas a Sohorant dition, the formant frequencies are acceptable for a/w/and detectionresultswhich, at first glance,appearstrange.For changebetweenthe/b/and example,several/r/'sthatareadjacentto a coronalconsonant an/l/. Finally,therateof spectral the surrounding vowels is gradual. such as the first/r/in the word "foreswear" contain both an F 3 dip andanF 3 peak.The F 3 peakis dueto therisein F 3 between the/r/and 2. Classification the/s/. results The classificationresultsare alsofairly consistentacross the databases. The resultsfor/r/and/j/are muchbetterthan C. Vowels called semivowels The classification resultsfor thevowelsarealsogivenin TableIV. No detectionresultsaregivenfor the vowelssince differentportionsof the samevowel may be detectedand labeleda semivowel.For example,acrossseveralspeakers, the beginningof the/at/in "flamboyant"was classifiedas either/w/,/1/, or/w-l/and theoffglidewasclassified asa/j/. occurs,the vowel showsup in the used in the recognitionsystemprovidesa good separation When this phenomenon resultsas beingmisclassified twice.Thusthe vowel statistics between these sounds;however, in several contexts,the sysmay not add up to 100%. tem is able to correctlyclassifythesesoundsat a rate better for the databases Most of the misclassifications of vowelsare of the type than chance.For example,lurepingthe resultsof the datadescribed above. They occur because of contextualinfluence basestogether,76% of word-initial/w/'sand67% of wordas in the case of the beginning of the/at/in "flamboyant" initial/l]'s are classifiedcorrectly.If we assignhalf of the which resemblesa/w/due to the influenceof the preceding /w-l/ score to /w/ and /l/, the correct classificationrates fo/. They alsooccurwhendiphthongs like the/3I/in "flamchangeto 85% for/w/and 73% for/l/. boyant"are followedby anothervowel. In this case,the offglideof thediphthong is oftenrecognized asa semivowel. those for/w/and/I/. A considerable number of/w/'s and/l/'s are classified as/w-l/in all three databases.No one measure B. Consonants called semivowels Table IV showsthat many nasalsare called semivowels. One mainreasonfor thisconfusionis the lackof a parameter whichcaptures thefeaturenasal.The maincueusedfor the nasal-semivowel distinctionis the abruptness of the spectral changebetweenthe sonorantconsonant and adjacentvowel(s). This propertyaccountsfor the generallyhighermis- Other errors occurred because semivowels which are in the underlyingtranscription werenot handlabeled,butweredetectedand classifiedby the recognitionsystem.Suchan ex- ampleis the/l/in theword"stalwart."Finally,someerrors occur becauseof coarticulationas in the word "harlequin" shownin Fig. 2. The lowestpointof F 3 whichsignalsthe/r/ is at the beginningof what is labeled/o/,suggesting thatthe classificationof nasalsas/1/as opposedto one of the other articulationof thesetwo soundsoverlap. However, given the semivowels. discrepancy in timebetweenwherethe/r/is recognized and whereit appearsin thetranscription, therecognitionof the/r/ In addition to the nasals,severalvoiced obstruentsare lenited(theprocesswherebya consonant is producedwith a 69 J. Acoust.Soc.Am., Vol. 96, No. 1, July 1994 is classified as an error. CarolY. Espy-Wilson: Semivowelrecognition system 69 TABLE V. Percentrecognition of semivowels by HMM system."Other" "harlequin" IhlalrllI^1 kølklwlzInl I are thosesemivowelswhich were detected,bu, classifiedas some sound otherthana semivowel.(Note:The overallphoneaccuracyof the HMMbasedsystemof 60% increases substantially to 71%withcontext-dependent models andphonebigramprobabilities asphonotactic constraints. Specifically,therecognition ratesincrease to 80% for/w/, 81% for/1/, 82% for/r/ and58% for/j/.) kHz No. tokens 0.0 0.1 0.2 0.$ 0.4 0.5 06 TIME (seconds) FIG. 2. Wideband spectrogram of theword"harlequin."Thevowel/a/and /r/are co-articulated sothatthebeginningof whatis transcribed asthe/a/is recognizedas an/r/. III. SUMMARY AND DISCUSSION The frameworkdevelopedfor the feature-basedapproachused in the recognitionsystemfor semivowelsis basedon threekey assumptions. First, it assumesthat the abstract features have acoustic correlates which can be reli- ablyextracted from thephysicalsignal.The acoustic properties are derivedfrom relativemeasuresas opposedto absolute measurements so that they are lesssensitiveto speaker differences,speakingrate, and context.Second,the framework is baseduponthe generalideathat the acousticmanifestationof a changein thevalueof a featureis markedby a specificeventin the appropriate acousticparameter(s). An acousticevent can be a minimum, maximumor an abrupt spectralchangein a parameter.Finally, the frameworkis based on the notion that these events serve as landmarks for when the acoustic correlates for features should be extracted to classifythe soundsin the signal. The results obtained show that the feature-based frame- work is a viable methodologyfor speaker-independent continuousspeechrecognition. Fairly consistent recognitionresults were obtainedfor the three corporawhich include polysyllabicwords and sentences which were spokenby malesand femalesof severaldialects.Thus one major conclusion that can be drawn from these data is that much of the across-speaker variabilitydisappears if relativemeasures are usedto extractthe acousticpropertiesfor features. As a comparison, the baselinesemivowelrecognition results obtained from a three-state HMM with Gaussian mix- ture observationdensities(Lamel and Gauvain, 1993) are shown in Table V. These data were obtained using 61 database which contains 8 sentences from eachof 24 speakers.A similartableof results is shownin Table VI for the feature-based semivowelrecognition systemwherewe havepooledthe dataacrossthe da- tabasesnew speakersand TIMIT (the resultsfor original were not includedsince this databasewas used to develop 1 r j 144 291 270 50 detected 87 82 79 86 w 61 4 0 0 I 5 58 0 0 r 1 1 56 0 j 0 0 0 56 20 19 23 30 other to the scoresfor/w/and/1/. (Thisreassignment makescomparisoneasierandis reasonable sincethe soundsassigned to thiscategory werefelt to be equallylikely a/w/or/1/.) In comparing therecognition resultsof TablesV andVI, two differencesshouldbe kept in mind. First, the resultsof theHMM systemarebasedon TIMIT sentences whereasthe semivowelrecognitionresultsare basedon a smallerand differentset of TIMIT sentences, and polysyllabicwords. Second,the HMM systemwas designedfor a closedclass problem,that is to distinguishbetween39 possiblephone labels.The feature-based system,on the otherhand,wasdesigned to recognize semivowelsin unrestrictedspeech, which is an open classproblem. The HMM systemdetects60% of the semivowels, and 71% of thosedetectedare correctlyclassified.In addition, 3% (156 tokens out of a total of 4999) of nonsemivowel soundsare recognizedas semivowels.The feature-based system detects92% of the semivowels(this numberdoes not include those semivowelslisted in Table VI as "nc") and correctlyclassifies85% of thosedetected.However,it classifies22% of the nonsemivowelsoundsas semivowels.(Recall from the discussions in Sees.II B and II C thatmanyof these"misclassifications" are in fact reasonable.)Since the HMM and feature-based systemsare operatingat different correctrecognition/false recognitiontrade-offpoints,it is difficultto compareaccuracy.However,thesedata suggest that soundscanbe adequatelydetectedon the basisof acoustic eventsand that a signal representation in terms of the TABLE VI. Percentrecognition of semivowels by thefeature-based system. Dataacrossthe databases newspeakers andTIMIT arepooledandthe/w-l/ scoresare incorporated in /w/ and /1/ scores."nc" are thosesemivowel which were detectedbut "not classified"as a semivowelby any of the rules. context-independent phonemodelswhichare mappedto 39 phonesfor scoring(Lee andHon, 1989).The dataarebased on the core test set of the TIMIT w No. tokens w 1 r j 209 314 328 128 detected 98 98 97 98 w 62 21 2 0 I 28 74 0 0 r 4 0 91 0 j 0 0 0 84 nc 6 3 4 14 therecognition system).Half of the/w-l/score wasassigned 70 d. Acoust.Soc. Am., Vol. 96, No. 1, July 1994 Carol Y. Espy-Wilson:Semivowelrecognitionsystem 70 acoustic correlates of features is extracting therelevant information fromthespeech signal. Withthesedifferences in mind,a comparison of therecognitionresults showthatthefeature-based recognition sys- on spectralshapeas opposedto formantfrequencies. The trackingof formantfrequencies is oftenproblematic during consonantssince, due to the constrictedvocal tract, the for- mantsmay mergeor be obscuredby nearbyantiresonances. tem doessubstantially betterin termsof detectionand clas- sification of thesemivowels. Thesedatasuggest thatsounds can be adequatelydetectedon the basisof acousticevents andthata signalrepresentation in termsof the acousticcorrelatesof featuresis extracting therelevantinformation from the speechsignal. While the feature-based recognition resultsare encouraging,an analysisof the errorshas broughtforth several issues, whichneedto beaddressed to improveandextendthe feature-based approach andto appropriately evaluate its performance.First,phenomenasuchas coarticulationand leni- tion makethe presenthand-transcription procedure inadequatefor evaluatingphonemerecognition performance. As in the caseof "harlequin"discussed in Sec.III C, speech soundsoftenoverlap,at leastto someextent,so thatsomeof the strongestacousticevidencefor a featurethat is distinc- IV. CONCLUSIONS In conclusion, thefeature-based approach to recognition showsmuch promise.However,a great deal of work still needsto be doneto understand andreliablyextractall of the acoustic correlates of thelinguisticfeatures; to specifyall of the featurechangesthat can occurand in what domains;to definean appropriate lexicalrepresentation for thewordsin the lexicon; and to developstrategiesthat can match a feature-based representation of the lexical itemswith the extractedpropertiesfor features. ACKNOWLEDGMENTS This work wassupported in partby a XeroxFellowship and NSF GrantNo. BNS-8920470.It is basedin parton a Ph.D.thesisby the authorsubmitted to the Department of ElectricalEngineeringand ComputerScienceat MIT The authorgratefullyacknowledges the encouragement and ad- tivefor a particular soundmayoccuroutsideof theregion transcribed for thatsound.Presenthand-transcription techniquesdon'tallowfor suchoverlapsothatmatching in such casesis problematic. In thecaseof lenition,theoftenlarge vice of Professor Kenneth N. Stevens. I also want to acacousticchangethat accompanies weakenedconsonants is knowledge thehelpfulcomments of CorineBickley,Caroline notreflected in thehandtranscription. Thustheevaluation of Huang,and Melanie Matthies,which greatlyimprovedthe misclassifications resultingfrom lenitionis not straightforqualityandclarityof thispaper.Specialthanksto Lori Lamel ward. andJean-LucGauvainfor providingconfusionmatricesobSecond,an analysisof the insertions andothermisclastainedfrom theirHMM-basedrecognition system. sifications showthat, in manycases,errorsoccurbecause decisions abouttheunderlying phoneroes arebeingmadetoo •Theformants wcrctracked automatically (Espy-Wilson, 1987)during the early in the recognition process. The portionsof the wavedetectedSOhorant regionsof the waveforms.The algorithmwas basedon forms recognizedas semivowelsdo look like semivowels. peak-pickingof the seconddifferenceof the log-magnitudelinear- predictionspectra. Thusto improverecognition resultswouldrequirethatconina particular signal isperformed byfinding minima relative textualinfluencesand featurechangesdue to coarticulation 2Dipdetection to adjacentmaxima.A dip in a signalcanoccurat ( 1) thebeginningof the and lenitionbe takeninto accountbeforelabelingis done. signalin whichcascthesignalrisessubstantially fromsomelow point,(2) fromsome One possibilityis to not integratethe extractedacoustic theendof thesignalin whichcasethesignalfallssubstantially propertiesto make a decisionabout what the soundsare within a word before lexical access.Instead,lexical access canbe performeddirectlyfrom theextractedacoustic properties.In thisway, the underlyingsoundswithin a word are not knownuntil thewordhasbeenrecognized. Finally,thereappears to be a hierarchy of features which may governnot only the appropriate acousticpropertyfor features, butalsowhatfeaturesareapplicable duringdifferentportionsof thewaveform.In particular, theacoustic correlatesof someor all of thefeatures maydifferdepending uponwhetherthe soundis syllabicso that the vocal tract is relativelyopen,or nonsyllabicso that the vocal tract is more constricted. For example,the acousticcorrelateusedin this studyfor the featurehigh is one often associated with vowels.However,thisproperty whichshouldseparate theliquids /1 r/ from the glides/w j/ groupedall of the semivowels together.Alongthis sameline, the featuresbackandfront were usedto helpdistinguish amongthe semivowels. However, given that the semivowelsare usuallynonsyllabic,the featureslabial andcoronalshouldprobablybe usedin addition to or instead of the more vowel-like features. The latter featuresmay alsobe moredesirablebecausethey are based 71 d.Acoust. Soc.Am.,Vol.96, No.1, July1994 highpoint,and (3) insidethe signalin whichcasethe signalconsists of a fall followedby a rise. For furtherdetailsseeEspy-Wilson (1987). -•Peak detection in a particular waveform is performed by inverting the waveformandapplyingthe dip detectionalgorithm. 4Before peakdetection anddipdetection areperformed, missing frames in theformanttracksarefilledin automatically by an interpolation algorithm and theresultingformanttracksare smoothed. 5Theprevocalic semivowels rulesare:/w/-(veryback)+(back)(high +maybehigh)(gradual onset)(maybecloseF2F3+not closeF2F3); /I/ =(back+mid)(gradual offset+abrupt offset)(maybe high+nonhigh+low) (mayberetroflex+not retroflex) (maybecloseF2F.•+notcloseF2F3);/wI/=(back)(maybehigh)(gradualoffset)(maybecloseF2F3+notclose F2F3);/r/=(retroflex) (closeF2F3+maybecloseF2F3)+(mayberetroflex) (closeF2F3) (gradualoffset)(back+mid)(maybehigh+nonhigh+low) and /j/=(front)(high+maybe high) (gradualoffset+abrupt offset).The intersonorannt semivowels rulesare/w/= (veryback)+(back)(high + maybe high)(gradual onset)(gradual offset)(maybe closeF2F3+notcloseF2F3); /l/=(back+mid)(maybe high+ nonhigh +low)(gradual onset+abrupt onset)(gradualoffset+abrupt offset)(maybe retroflex+not retroflex) (maybecloseF2F3+not closeF2F3);/w-l/=(back)(maybehigh)(gradual onset)(gradual offset)(maybecloseF2F3+ notcloseF2F3);/r/--(retroflex) (closeF2F3+maybccloseF2F3)+(mayberetroflex) (closeF2F3)(gradual onset) (gradual offset)(back+mid)(maybc high+nonhigh+low); /j/ -(front)(high+maybchigh) (gradualonset)(gradualoffset).The postvocalicsemivowelrulesare:/I/=(very back+back)(gradualonset)(notretroflex)(notcloseF2F3)(maybehigh+nonhigh+low)and /ff-(retroflex) (closeF2F3)+(mayberetroflex)(close F2F3)(maybchigh+nonhigh +1ow)(back+mid)(gradualonset). CarolY. Espy-Wilson: Semivowel recognition system 71 Catford,J. C. (1977).Fundamental Problems in Phonetics (IndianaU.P., Bloomington,IN). Clements, G. N. (1985)."The geometry of phonological features,"Phonol. Year. 2, 225-252. Daiston,R. M. (1975). "Acousticcharacteristics of english/w,r,l/spoken correctly byyoung children andadults," J.Acoust. Soc.Am.57,462-469. De Mori, Renato(1983).Computer Modelsof SpeechUsingFuzzyAlgorithms(Plenum,New York). Deng,L., andErler,K. (1992)."Structural designof hiddenMarkovmodel speech recognizer usingmultivalued phonetic features: Comparison with segmental speechunits,"J. Acoust.Soc.Am. 92, 3058-3067. Elman,J. L., andMcClelland,J. L. (1986)."Exploitinglawfulvariabilityin thespeech wave,"in Invariance andVariability in Speech Processes, ed- itedby J. S. PerkellandD. H. Klatt(Erlbaum, Hillsdale, NJ),pp.360380. Espy-Wilson, C. Y. (1987)."An acoustic-phonetic approach to speech recognition:Applicationto the semivowels," TechnicalRep. No. 531, ResearchLaboratoryof Electronics, MIT. Espy-Wilson, C. Y. (1992)."Acoustic measures for linguistic features distinguishing thesemivowels inAmerican English," J.Acoust. Soc.Am.92, 736-757. Fant,G. (1960).AcousticTheoryof SpeechProduction (Mouton,The Netherlands). Jakobson, R., Fant, G., and Halle, M. (1952). "Preliminaries to speech analysis,"MIT Acoustics Lab.Tech.Rep.No. 13. Jelinek,E (1985)."The Development of an experimental discretedictation recognizer," Proc.IEEE 73, 1616-1625. Joos,M. (1948)."Acousticphonetics," Lang.Suppl.24, 1-136. Lamel,L., Kassel,R., and Seneft,S. (1986). "Speechdatabase development:Designandanalysis of theacoustic-phonetic corpus," Proc.Speech Recog.Workshop,CA. Lamel,L., and Gauvain,J. (1993). "Cross-lingual experiments with phone recognition," Proc.IEEE Int. Conf.Acoust.SpeechSignalProcess. 72 J. Acoust.Soc.Am.,Vol.96, No. 1, July1994 Lee, K. (1988).Automatic Speech Recognition: TheDevelopment of the SPHINXSystem (KluwerAcademic, Norwell,MA). Lee,K., andHon,H. (1989)."Speaker-independent phonerecognition using hiddenMarkov models,"IEEE Trans.Acoust.SpeechSignalProcess 37, 1641-1648. Lehiste,I. (1962). "Acousticalcharacteristics of selectedenglishconsonants,"ReportNo. 9, Universityof Michigan,Communication Sciences Laboratory, Ann Arbor,Michigan. Levinson, S. E., Rabiner,L. R., andSondhi,M. M. (1983)."An introduction to the application of the theoryof probabilistic functions of a Markov process toautomatic speech recognition," BellSyst.Technol. J.62, 10351074. Makhoul,J., andSchwartz,R. (1985). "Ignorancemodeling,"in fitvariance andVariability inSpeech Processes, editedby J. S. PerkellandD. H. Klatt (Erlbaum,Hillsdale,NJ), pp. 344-345. Phillips,M., andZue,V. (1992)."Automatic discovery of acoustic measurementsfor phoneticclassification," Proc.SecondIntern.Conf. Spoken Lang. Proc. 1, 795-798. Rabiner,L., Levinson,S., and Sondhi,M. (1983). "On the applicationof vectorquantization andhiddenMarkovmodelsto speaker independent isolatedwordrecognition," Bell Syst.Technol.J. 62, 1075-1105. Sagey,E. C. (1986)."The representation of features andrelations in nonlinearphonology," doctoraldissertation, Massachusetts Instituteof Technology,Department of Linguistics. Stevens, K. N. (1980)."Acousticcorrelates of somephonetic categories," J. Acoust. Soc. Am. 68, 836-842. Stevens,K. N., and Keyset,J. K. (1989). "Primaryfeaturesandtheirenhancement in consonants," Language65, 81-106. Waibel, A. (1989). "Phonemerecognitionusing time-delayneural networks,"IEEE Trans.Acoust.Speech,SignalProcess. 37, 328-339. Zue, V. W. (1985). "The use of speechknowledgein automaticspeech recognition," Proc.IEEE 73, 1602-1615. CarolY. Espy-Wilson: Semivowel recognition system 72