Protocols Recent and Current Work. Richard Hughes-Jones The University of Manchester


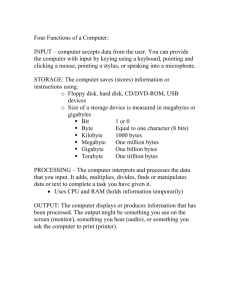

www.hep.man.ac.uk/~rich/ then “Talks”
ESLEA Technical Collaboration Meeting, 20-21 Jun 2006, R. Hughes-Jones Manchester

Outline
- SC|05
  - TCP and UDP memory-2-memory & disk-2-disk flows
  - 10 Gbit Ethernet
- VLBI
  - Jodrell Mark5 problem (see Matt’s talk)
  - Data delay on a TCP link: how suitable is TCP?
    - 4th Year MPhys Project, Stephen Kershaw & James Keenan
  - Throughput on the 630 Mbit JB-JIVE UKLight link
  - 10 Gbit in FABRIC
- ATLAS
  - Network tests on Manchester T2 farm
  - The Manc-Lanc UKLight link
  - ATLAS remote farms
- RAID tests
  - HEP server, 8 lane PCIe RAID card

Collaboration at SC|05
- Caltech Booth
- The BWC at the SLAC Booth
- SCINet
- StorCloud
- ESLEA Boston Ltd. & Peta-Cache Sun

Bandwidth Challenge wins Hat Trick
- The maximum aggregate bandwidth was >151 Gbit/s
  - 130 DVD movies in a minute
  - Serve 10,000 MPEG2 HDTV movies in real time
- 22 10 Gigabit Ethernet waves: Caltech & SLAC/FERMI booths
- In 2 hours transferred 95.37 TByte
- In 24 hours moved ~475 TBytes
- Showed real-time particle event analysis
- SLAC Fermi UK Booth:
  - 1 10 Gbit Ethernet to UK, NLR & UKLight:
    - transatlantic HEP disk to disk
    - VLBI streaming
  - 2 10 Gbit links to SLAC:
    - rootd low-latency file access application for clusters
    - Fibre Channel StorCloud
  - 4 10 Gbit links to Fermi:
    - dCache data transfers
[Figure: traffic in to and out of the booth; labels: SC2004 101 Gbit/s, FNAL-UltraLight, SLAC-ESnet-USN, UKLight, FermiLab-HOPI, SLAC-ESnet]

ESLEA and UKLight
- 6 * 1 Gbit transatlantic Ethernet layer 2 paths, UKLight + NLR
- Disk-to-disk transfers with bbcp, Seattle to UK
  - Set TCP buffer and application to give ~850 Mbit/s
  - One stream of data 840-620 Mbit/s
- Stream UDP VLBI data, UK to Seattle
- Reverse TCP
- 620 Mbit/s
[Figures: rate (Mbit/s, 0 to 1000) vs. date-time from 16:00 for sc0501, sc0502, sc0503 and sc0504 at SC|05; aggregate UKLight SC|05 rate (Mbit/s, 0 to 4500) vs. date-time]

SLAC 10 Gigabit Ethernet
- 2 lightpaths:
  - Routed over ESnet
  - Layer 2 over Ultra Science Net
- 6 Sun V20Z systems per λ
- dcache remote disk data access
  - 100 processes per node
  - Node sends or receives
  - One data stream 20-30 Mbit/s
- Used Neterion NICs & Chelsio TOE
- Data also sent to StorCloud using Fibre Channel links
- Traffic on the 10 GE link for 2 nodes: 3-4 Gbit per node, 8.5-9 Gbit on trunk

VLBI Work
TCP Delay and VLBI Transfers
Manchester 4th Year MPhys Project by Stephen Kershaw & James Keenan

VLBI Network Topology
[Figure: network topology diagram]

VLBI Application Protocol
- VLBI data is Constant Bit Rate
- tcpdelay: instrumented TCP program emulates sending CBR data
- Records relative 1-way delay
- Remember the Bandwidth*Delay Product: BDP = RTT*BW
- Segment time on wire = bits in segment/BW
[Diagram: Sender, TCP & Network, Receiver; Timestamp1 to Timestamp5 interleaved with Data1 to Data4; packet loss; sender/receiver time lines showing RTT and ACK]

Check the Send Time
- 10,000 messages
- Message size: 1448 bytes
- Wait time: 0
- TCP buffer 64k
- Route: Man-ukl-JIVE-prod-Man
- RTT ~26 ms
- Slope 0.44 ms/message
- From TCP buffer size & RTT expect ~42 messages/RTT, ~0.6 ms/message
[Plot: send time (s) vs. message number, 10000 packets; scale mark 1 sec]

Send Time Detail
- TCP send buffer limited
- After slow start the buffer is full
- Packets sent out in bursts each RTT
- Program blocked on sendto()
[Plot: send time (s) vs. message number; annotations: 26 messages, about 25 us, one rtt, Message 76, Message 102; scale mark 100 ms]

1-Way Delay
- 10,000 messages
- Message size: 1448 bytes
- Wait time: 0
- TCP buffer 64k
- Route: Man-ukl-JIVE-prod-Man
- RTT ~26 ms
[Plot: 1-way delay vs. message number, 10000 packets; scale marks 100 ms]

1-Way Delay Detail
- Why not just 1 RTT?
- After slow start the TCP buffer is full
- Messages at the front of the TCP send buffer have to wait for the next burst of ACKs, 1 RTT later
- Messages further back in the TCP send buffer wait for 2 RTT
[Plot: 1-way delay vs. message number; scale mark 10 ms; annotations in order: = 1 x RTT, 26 ms, = 1.5 x RTT, 10 ms, ≠ 0.5 x RTT]
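
The buffer-limited estimate quoted on the "Check the Send Time" slide (~42 messages/RTT, ~0.6 ms/message) can be sketched as below. This is a rough illustration, not the author's calculation: it assumes "64k" means 65536 bytes, that one full send buffer is in flight per RTT, and it ignores protocol overheads. The function name `buffer_limited_send` is made up for this example.

```python
def buffer_limited_send(buffer_bytes: int, message_bytes: int, rtt_s: float):
    """Estimate sending behaviour when the TCP send buffer is the limit.

    Assumes at most one full send buffer can be in flight per round trip.
    Returns (messages per RTT, seconds per message, throughput in bit/s).
    """
    messages_per_rtt = buffer_bytes / message_bytes
    seconds_per_message = rtt_s / messages_per_rtt
    throughput_bps = buffer_bytes * 8 / rtt_s
    return messages_per_rtt, seconds_per_message, throughput_bps


# Slide values: 1448 byte messages, RTT ~26 ms.
# Assumption: the "64k" TCP buffer is 65536 bytes.
msgs, sec_per_msg, bps = buffer_limited_send(65536, 1448, 0.026)
print(f"{msgs:.1f} messages/RTT, {sec_per_msg * 1e3:.2f} ms/message, "
      f"{bps / 1e6:.1f} Mbit/s")
```

Under these assumptions the sketch gives about 45 messages per RTT and about 0.57 ms per message, in the same range as the slide's ~42 messages/RTT and ~0.6 ms/message; the difference presumably reflects overheads that are not modelled here.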

1-Way Delay with packet drop
- Route: LAN gig8-gig1
- Ping 188 μs
- 10,000 messages
- Message size: 1448 bytes
- Wait times: 0 μs
- Drop 1 in 1000
- Manc-JIVE tests show times increasing with a “saw-tooth” around 10 s
[Plot: 1-way delay vs. message number; scale marks 10 ms and 5 ms; annotations: 28 ms, 800 us]

10 Gbit in FABRIC

FABRIC 4Gbit Demo
- 4 Gbit lightpath between GÉANT PoPs
- Collaboration with Dante
- Continuous (days) data flows: VLBI_UDP and multi-Gigabit TCP tests

10 Gigabit Ethernet: UDP Data transfer on PCI-X
- Sun V20z 1.8 GHz to 2.6 GHz dual Opterons
- Connect via 6509
- XFrame II NIC
- PCI-X mmrbc 2048 bytes, 66 MHz
- One 8000 byte packet
  - 2.8 us for CSRs
  - 24.2 us data transfer, effective rate 2.6 Gbit/s
- 2000 byte packet, wait 0 us: ~200 ms pauses
- 8000 byte packet, wait 0 us: ~15 ms between data blocks
[Logic analyser trace; labels: Data Transfer, CSR Access 2.8 us]

ATLAS

ESLEA: ATLAS on UKLight
- 1 Gbit lightpath Lancaster-Manchester
- Disk 2 disk transfers
- Storage Element with SRM using distributed disk pools: dCache & xrootd

udpmon: Lanc-Manc Throughput
- Lanc → Manc
  - Plateau ~640 Mbit/s wire rate
  - No packet loss
- Manc → Lanc
  - ~800 Mbit/s but packet loss
- Send times
  - Pause 695 μs every 1.7 ms
  - So expect ~600 Mbit/s
- Receive times (Manc end)
  - No corresponding gaps
[Plots, pyg13-gig1_19Jun06: received wire rate (Mbit/s) vs. spacing between frames (us) for packet sizes 50 to 1472 bytes; W11 1-way delay (us) vs. send time (0.1 us); W11 1-way delay (us) vs. receive time (0.1 us)]

udpmon: Manc-Lanc Throughput
- Manc → Lanc
  - Plateau ~890 Mbit/s wire rate
- Packet loss
  - Large frames: 10% when at line rate
  - Small frames: 60% when at line rate
- 1-way delay
[Plots, gig1-pyg13_20Jun06: received wire rate (Mbit/s) vs. spacing between frames (us) for packet sizes 50 to 1472 bytes; % packet loss vs. spacing between frames (us); W11 1-way delay (us) vs. packet number]
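
The "pause 695 μs every 1.7 ms, so expect ~600 Mbit/s" estimate on the Lanc-Manc slide can be reproduced with a simple duty-cycle calculation. The sketch below rests on two assumptions that the slide does not state: the sender runs at the full 1 Gbit/s line rate outside the pauses, and the 1.7 ms period includes the pause. The function name `duty_cycle_rate` is hypothetical.

```python
def duty_cycle_rate(line_rate_mbps: float, pause_us: float, period_us: float) -> float:
    """Average rate of a sender that is idle for pause_us out of every period_us.

    Assumes the sender transmits at line rate for the rest of the period.
    """
    if not 0 <= pause_us <= period_us:
        raise ValueError("pause must lie between 0 and the period")
    active_fraction = (period_us - pause_us) / period_us
    return line_rate_mbps * active_fraction


# Slide values: 695 us pause every 1.7 ms.
# Assumption: 1000 Mbit/s line rate (the 1 Gbit lightpath).
print(f"{duty_cycle_rate(1000.0, 695.0, 1700.0):.0f} Mbit/s")
```

With these inputs the average comes out at roughly 590 Mbit/s, consistent with the ~600 Mbit/s expectation and not far from the ~640 Mbit/s plateau reported for Lanc → Manc.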

ATLAS Remote Computing: Application Protocol
- Event request
  - EFD requests an event from SFI
  - SFI replies with the event, ~2 Mbytes
- Processing of event
- Return of computation
  - EF asks SFO for buffer space
  - SFO sends OK
  - EF transfers results of the computation
- tcpmon: instrumented TCP request-response program emulates the Event Filter EFD to SFI communication.
[Diagram: Event Filter Daemon (EFD) exchanging messages with SFI and SFO over time: Request event, Send event data, Process event, Request buffer, Send OK, Send processed event; Request-Response time (histogram)]

tcpmon: TCP Activity Manc-CERN Req-Resp
- Web100 hooks for TCP status
- Round trip time 20 ms
- 64 byte request (green), 1 Mbyte response (blue)
- TCP in slow start
  - 1st event takes 19 rtt or ~380 ms
- TCP congestion window gets reset on each request
  - TCP stack RFC 2581 & RFC 2861 reduction of cwnd after inactivity
  - Even after 10 s, each response takes 13 rtt or ~260 ms
- Transfer achievable throughput 120 Mbit/s
- Event rate very low
- Application not happy!
[Plots vs. time (0 to 2000 ms): DataBytesOut and DataBytesIn (delta); DataBytesOut, DataBytesIn and CurCwnd; TCPAchive (Mbit/s) and Cwnd]

tcpmon: TCP Activity Manc-cern Req-Resp no cwnd reduction
- Round trip time 20 ms
- 64 byte request (green), 1 Mbyte response (blue)
- TCP starts in slow start
  - 1st event takes 19 rtt or ~380 ms
- TCP congestion window grows nicely
- Response takes 2 rtt after ~1.5 s
- Rate ~10/s (with 50 ms wait)
- Transfer achievable throughput grows to 800 Mbit/s
- Data transferred WHEN the application requires the data
[Plots: DataBytesOut and DataBytesIn (delta) vs. time (0 to 3000 ms); PktsOut, PktsIn (delta) and CurCwnd vs. time ms, annotated "3 Round Trips" and "2 Round Trips"; TCPAchive (Mbit/s) and Cwnd vs. time (0 to 8000 ms)]

Recent RAID Tests
Manchester HEP Server

“Server Quality” Motherboards
- Boston/Supermicro H8DCi
- Two dual core Opterons, 1.8 GHz
- 550 MHz DDR memory
- HyperTransport
- Chipset: nVidia nForce Pro 2200/2050, AMD 8132 PCI-X bridge
- PCI
  - 2 16 lane PCIe buses
  - 1 4 lane PCIe
  - 133 MHz PCI-X
- 2 Gigabit Ethernet
- SATA

Disk_test:
- areca PCI-Express 8 port
- Maxtor 300 GB SATA disks
- RAID0, 5 disks
  - Read 2.5 Gbit/s
  - Write 1.8 Gbit/s
- RAID5, 5 data disks
  - Read 1.7 Gbit/s
  - Write 1.48 Gbit/s
- RAID6, 5 data disks
  - Read 2.1 Gbit/s
  - Write 1.0 Gbit/s
[Plots: throughput (Mbit/s, 0 to 7000) vs. file size (Mbytes, 0 to 4000), 8k reads and 8k writes, for "afs6 R0 5disk areca 8PCIe 10 Jun06", "afs6 R5 5disk areca 8PCIe 10 Jun06" and "afs6 R6 7disk areca 8PCIe 10 Jun06"]

Any Questions?

More Information: Some URLs 1
- UKLight web site: http://www.uklight.ac.uk
- MB-NG project web site: http://www.mb-ng.net/
- DataTAG project web site: http://www.datatag.org/
- UDPmon / TCPmon kit + writeup: http://www.hep.man.ac.uk/~rich/net
- Motherboard and NIC tests: http://www.hep.man.ac.uk/~rich/net/nic/GigEth_tests_Boston.ppt & http://datatag.web.cern.ch/datatag/pfldnet2003/
  “Performance of 1 and 10 Gigabit Ethernet Cards with Server Quality Motherboards”, FGCS Special issue 2004, http://www.hep.man.ac.uk/~rich/
- TCP tuning information may be found at: http://www.ncne.nlanr.net/documentation/faq/performance.html & http://www.psc.edu/networking/perf_tune.html
- TCP stack comparisons: “Evaluation of Advanced TCP Stacks on Fast Long-Distance Production Networks”, Journal of Grid Computing 2004
- PFLDnet: http://www.ens-lyon.fr/LIP/RESO/pfldnet2005/
- Dante PERT: http://www.geant2.net/server/show/nav.00d00h002

More Information: Some URLs 2
- Lectures, tutorials etc. on TCP/IP:
  - www.nv.cc.va.us/home/joney/tcp_ip.htm
  - www.cs.pdx.edu/~jrb/tcpip.lectures.html
  - www.raleigh.ibm.com/cgi-bin/bookmgr/BOOKS/EZ306200/CCONTENTS
  - www.cisco.com/univercd/cc/td/doc/product/iaabu/centri4/user/scf4ap1.htm
  - www.cis.ohio-state.edu/htbin/rfc/rfc1180.html
  - www.jbmelectronics.com/tcp.htm
- Encyclopaedia: http://www.freesoft.org/CIE/index.htm
- TCP/IP resources: www.private.org.il/tcpip_rl.html
- Understanding IP addresses: http://www.3com.com/solutions/en_US/ncs/501302.html
- Configuring TCP (RFC 1122): ftp://nic.merit.edu/internet/documents/rfc/rfc1122.txt
- Assigned protocols, ports etc (RFC 1010): http://www.es.net/pub/rfcs/rfc1010.txt & /etc/protocols

Backup Slides

SuperComputing

SC2004: Disk-Disk bbftp
- bbftp file transfer program uses TCP/IP
- UKLight: path London-Chicago-London; PCs: Supermicro + 3Ware RAID0
- MTU 1500 bytes; socket size 22 Mbytes; rtt 177 ms; SACK off
- Move a 2 Gbyte file
- Web100 plots:
  - Standard TCP: average 825 Mbit/s (bbcp: 670 Mbit/s)
  - Scalable TCP: average 875 Mbit/s (bbcp: 701 Mbit/s, ~4.5 s of overhead)
- Disk-TCP-Disk at 1 Gbit/s is here!
[Plots: TCPAchive (Mbit/s, 0 to 2500), instantaneous BW, average BW and CurCwnd vs. time (0 to 20000 ms), one plot per TCP stack]

SC|05 HEP: Moving data with bbcp
- What is the end-host doing with your network protocol?
- Look at the PCI-X
- 3Ware 9000 controller RAID0
- 1 Gbit Ethernet link
- 2.4 GHz dual Xeon
- ~660 Mbit/s
- PCI-X bus with RAID controller: read from disk for 44 ms every 100 ms
- PCI-X bus with Ethernet NIC: write to network for 72 ms
- Power needed in the end hosts
- Careful application design

10 Gigabit Ethernet: UDP Throughput
- 1500 byte MTU gives ~2 Gbit/s
- Used 16144 byte MTU, max user length 16080
- DataTAG Supermicro PCs
  - Dual 2.2 GHz Xeon CPU, FSB 400 MHz
  - PCI-X mmrbc 512 bytes
  - Wire rate throughput of 2.9 Gbit/s
- CERN OpenLab HP Itanium PCs
  - Dual 1.0 GHz 64 bit Itanium CPU, FSB 400 MHz
  - PCI-X mmrbc 4096 bytes
  - Wire rate of 5.7 Gbit/s
- SLAC Dell PCs
  - Dual 3.0 GHz Xeon CPU, FSB 533 MHz
  - PCI-X mmrbc 4096 bytes
  - Giving a wire rate of 5.4 Gbit/s
[Plot, "an-al 10GE Xsum 512kbuf MTU16114 27Oct03": received wire rate (Mbit/s, 0 to 6000) vs. spacing between frames (us) for packet sizes 1472 to 16080 bytes]

10 Gigabit Ethernet: Tuning PCI-X
- 16080 byte packets every 200 µs
- Intel PRO/10GbE LR Adapter
- PCI-X bus occupancy vs mmrbc
  - Measured times
  - Times based on PCI-X times from the logic analyser
  - Expected throughput ~7 Gbit/s
  - Measured 5.7 Gbit/s
[Logic analyser traces for mmrbc 512, 1024, 2048 and 4096 bytes (5.7 Gbit/s); PCI-X sequence: CSR Access, Data Transfer, Interrupt & CSR Update]
[Plots: PCI-X transfer time (us) and rate (Gbit/s) vs. Max Memory Read Byte Count for "DataTAG Xeon 2.2 GHz" and "Kernel 2.6.1#17 HP Itanium Intel10GE Feb04"; series: measured rate, rate from expected time, max throughput PCI-X]

10 Gigabit Ethernet: TCP Data transfer on PCI-X
- Sun V20z 1.8 GHz to 2.6 GHz dual Opterons
- Connect via 6509
- XFrame II NIC
- PCI-X mmrbc 4096 bytes, 66 MHz
- Two 9000 byte packets b2b
- Ave rate 2.87 Gbit/s
- Burst of packets length 646.8 us
- Gap between bursts 343 us
- 2 interrupts / burst
[Logic analyser trace; labels: Data Transfer, CSR Access]

TCP on the 630 Mbit Link
Jodrell – UKLight – JIVE

TCP Throughput on 630 Mbit UKLight
- Manchester gig7 – JBO mk5 606
- 4 Mbyte TCP buffer
- test 0
  - Dup ACKs seen
  - Other reductions
- test 1
- test 2
[Plots for tests 0, 1 and 2: TCPAchive (Mbit/s, 0 to 1000), instantaneous BW and CurCwnd vs. time (0 to 120 s)]

Comparison of Send Time & 1-way delay
[Plot: send time (s) vs. message number; annotations: 26 messages, Message 76, Message 102; scale mark 100 ms]

1-Way Delay 1448 byte msg
- Route: Man-ukl-ams-prod-man
- Rtt 27 ms
- 10,000 messages
- Message size: 1448 bytes
- Wait times: 0 μs
- BDP = 3.4 MByte
- TCP buffer 10 MByte
- Web100 plot
  - Starts after 5.6 s due to clock sync
  - ~400 pkts/10 ms
  - Rate similar to iperf
[Plots: 1-way delay (us) vs. packet number, scale mark 50 ms; PktsOut, PktsIn (delta) and CurCwnd vs. time (0 to 9000 ms)]
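
As a quick check of the bandwidth-delay product figure quoted for the Man-ukl-ams-prod-man route (RTT 27 ms, 3.4 MByte), the relation BDP = RTT*BW from the earlier VLBI slide can be evaluated directly. The sketch assumes a 1 Gbit/s path and 1 MByte = 10^6 bytes; neither is stated on the slide, and `bdp_bytes` is a name chosen only for this example.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product in bytes: BDP = RTT * BW, with BW given in bit/s."""
    return bandwidth_bps * rtt_s / 8


# Assumption: 1 Gbit/s path; the RTTs are the values quoted on the slides.
for label, rtt_s in [("Man-ukl-ams-prod-man, rtt 27 ms", 0.027),
                     ("London-Chicago-London, rtt 177 ms", 0.177)]:
    print(f"{label}: BDP = {bdp_bytes(1e9, rtt_s) / 1e6:.2f} MByte")
```

Under these assumptions the 27 ms route gives about 3.4 MByte, matching the slide, and the 177 ms SC2004 path gives about 22 MByte, consistent with the 22 Mbyte socket size used there. A TCP buffer smaller than the BDP limits throughput, which is why the 10 MByte buffer is comfortable on the 27 ms route.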

Related Work: RAID, ATLAS Grid
- RAID0 and RAID5 tests
  - 4th Year MPhys project last semester
  - Throughput and CPU load
- Different RAID parameters
  - Number of disks
  - Stripe size
  - User read / write size
- Different file systems
  - ext2, ext3, XFS
- Sequential file write, read
- Sequential file write, read with continuous background read or write
- Status
  - Need to check some results & document
  - Independent RAID controller tests planned.

HEP: Service Challenge 4
- Objective: demo 1 Gbit/s aggregate bandwidth between RAL and 4 Tier 2 sites
- RAL has SuperJANET4 and UKLight links
- RAL capped firewall traffic at 800 Mbit/s
- SuperJANET sites: Glasgow, Manchester, Oxford, QMWL
- UKLight site: Lancaster
- Many concurrent transfers from RAL to each of the Tier 2 sites
- ~700 Mbit UKLight
- Peak 680 Mbit SJ4
- Applications able to sustain high rates.
- SuperJANET5, UKLight & new access links very timely
[Diagram: RAL site network (Tier 1 and RAL Tier 2 CPUs + disks, ADS caches, Oracle RACs, 5510 and 5530 switch stacks, Router A, firewall, UKLight router) with links 1 Gb/s to SJ4, N x 1 Gb/s, 10 Gb/s, 4 x 1 Gb/s to CERN and 1 Gb/s to Lancaster]

Network switch limits behaviour
- End2end UDP packets from udpmon
- Only 700 Mbit/s throughput
- Lots of packet loss
- Packet loss distribution shows throughput limited
[Plots, w05gva-gig6_29May04_UDP: received wire rate (Mbit/s) vs. spacing between frames (us) for packet sizes 50 to 1472 bytes; % packet loss vs. spacing between frames (us); 1-way delay (us) vs. packet number, wait 12 us]