National Grid Service UK
Dr Andrew Richards, National Grid Service Coordinator


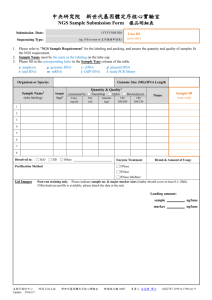

e-Science Centre

NGS - History
• The UK e-Science Grid needed some dedicated resources
  – It previously relied on resources donated by the e-Science Centres
• This worked well for
  – Exploring technology
  – Establishing what needed to be done
  – Generating collaborations
  – Early adopters
• It was not good for
  – Production quality resources
    » service levels
    » capability and capacity
  – Non computer scientists
• Hence 2 compute clusters and 2 data clusters were funded for three years

Pre-NGS
• The Grid Engineering Task Force (ETF) was formed in October 2001 to guide and carry out the construction, testing and demonstration of the UK e-Science Grid. The Grid is now entering its production phase.
• Its members are drawn from each of the ten UK e-Science Centres and CCLRC, plus the Centres of Excellence.
• ETF members are deploying the UK e-Science Grid using Globus middleware, with support from the Grid Support Centre (GSC).
• At Easter 2003 the ETF reached a major milestone by deploying the UK e-Science Grid at the Level 2 phase, linking the Regional e-Science Centres and other participating institutions.

Background - Tender
• The UK e-Science Grid needed some dedicated resources
  – Initially 2 compute clusters, but data was seen as critical
  – CCLRC involvement to drive it forward
• CCLRC commitment
  – To provide an additional data cluster
  – Leading to 2 compute clusters and 2 data clusters
• European tender
  – Tender requirements included hardware, software and system management tools
  – Restricted tender
  – Questionnaire date: 15th April
    » 18 responses
  – Invitation to tender: 11th June
    » 8 invited to tender, leading to 5 responses
  – Responses received: 30th July
  – Cluster Vision won
    » Met all mandatory requirements
    » Its response to the desirable requirements made it the best value for money
  – Order placed: 1st week of September
  – Delivery: 1st week of November

NGS Overview
• UK National Grid Service (NGS)
  – JISC funded £1.06M of hardware (2003-2006)
    » Oxford and White Rose Grid (Leeds): compute clusters
    » Manchester: data cluster
  – e-Science Centre funded £420k data cluster: 18TB SAN, 40 CPUs, Oracle, Myrinet
  – JISC funded £900k of staff effort, including 2.5 staff years (SY) at the CCLRC e-Science Centre
  – The e-Science Centre leads and coordinates the project for the JISC funded clusters
• Production Grid resources
  – Stable Grid platform: GT2, with experimental GT3/GT4 interfaces to the data clusters
  – Interoperability with other grids such as EGEE
  – Allocation and resource control: unified access conditions on JISC funded kit
  – Applications: as users require and licences allow

NGS Members

NGS - Resources
• NGS today
  – 2 x compute clusters (Oxford and White Rose Grid, Leeds)
    » 128 CPUs (64 dual Xeon processor nodes)
    » 4TB NFS shared file space
    » Fast programming interconnect: Myrinet
  – Data clusters (Manchester and CCLRC)
    » 40 CPUs (20 dual Xeon processor nodes)
    » Oracle 9i RAC on 16 CPUs
    » 18TB fibre SAN
    » Fast programming interconnect: Myrinet
  – In total, 6 FTEs of effort across all 4 sites
    » Just enough to get started
  – Plus HPCx and CSAR: the national HPC services

NGS - Applications
• The software environment common to all clusters is:
  – RedHat ES 3.0
  – PGI compilers
  – Intel compilers, MKL
  – PBSPro
  – TotalView debugger
  – Globus 2, installed from a VDT release
• In addition, the data clusters at CCLRC-RAL and Manchester support Oracle 9i RAC and Oracle Application Server

Availability
• Ab Initio Molecular Orbital Theory
• eMaterials
• Fasta
• The DL_POLY Molecular Simulation Package

Objectives for UK NGS
• NGS should become a key component of UK Grid computing
  – Ease of access and use
  – Integration with other Grids (FP6 project EGEE)
• High availability and usage
• Attract a diverse user base

Early Adopters

NGS - Status
• 82 users registered ("Early Bird" users; this excludes sysadmins etc.)
• Users from Leeds, Oxford, UCL, Cardiff, Southampton, Imperial, Liverpool, Sheffield, Cambridge, Edinburgh, BBSRC and CCLRC
• Users on projects such as:
  – Orbital Dynamics of Satellite Galaxies (Oxford)
  – Bioinformatics using BLAST (John Owen, BBSRC)
  – GEODISE project (Southampton)
  – Singlet meson project within the UKQCD collaboration (QCDGrid, Liverpool), plus QCDGrid entries from Edinburgh
  – Census data analysis for geographical information systems (Sheffield)
  – NGS/EGEE interoperability testing (Dave Colling, Imperial)
  – MIAKT project (image registration for medical image analysis, Imperial)

EGEE / NGS

Monitoring

NGS - Plan
NGS going forward:
• Full production by September 2004 (AHM)
  – Ensure user registration and peer review procedures are in place
  – Virtual Organisation management tools in place
  – Interoperate with other grids such as EGEE
  – Allocation and resource control in place across NGS
  – Define service levels so that other sites can join NGS
  – Grow the application base and user base
  – Access via Resource Brokers, portals and the command line
• Forward look
  – NGS, and hence Grid technologies, embedded in scientists' daily work
  – Renewal of the core NGS service
  – Web services, etc.
  – Additional sites
  – NGS is not intended to replace traditional HPC services such as HPCx

Contacts / Support
• GOC Director: Neil Geddes; n.i.geddes@rl.ac.uk
• HPC Services Group: Pete Oliver; p.m.oliver@rl.ac.uk
• NGS Grid Coordinator: Andrew Richards; a.j.richards@rl.ac.uk
• NGS website: http://www.ngs.ac.uk (and http://www.ngs.rl.ac.uk)
• Helpdesk (via the Grid Support Centre): support@grid-support.ac.uk