e-Science: Stuart Anderson, National e-Science Centre


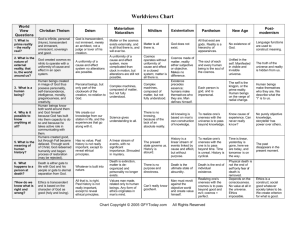

Cool White Dwarfs

Issues 1
• Astronomers are looking for:
  – Many objects in globular clusters.
  – Very faint objects.
  – Observations of many locations.
• But the observations are noisy:
  – Artifacts created by the sensor technology, scanning and digitizing.
  – Junk in orbit, e.g. satellite tracks.
• Computer Science can help:
  – Pattern recognition, computational learning, data mining.
  – But astronomers are pickier.

Cool Dwarfs are faint and close
• The sky is full of faint objects.
• Cool White Dwarfs are close.
• So they move appreciably relative to the background stars.
• The illustrated observations cover a period of 30 years.
• We need to match up very faint objects observed by different equipment at different times.

Issues 2
• Astronomers have a model of CWD luminosity that predicts how distant the objects are, and hence how they move over time.
• We can use computational learning (aka data mining) to recognize CWDs, provided we have a model that allows tractable learning.
• We can use the model to create training cases for various learning techniques.
• Astronomers also want to observe the same objects at different wavelengths.
• Models of objects can be used as a basis for data mining to link observations.

Problem Scale
• Cosmos (old technology): megabytes per plate.
• Super Cosmos (current technology): gigabytes per plate.
• Cosmos and Super Cosmos use images from a 1 m telescope.
• Vista (new technology): imaging in the visible and infrared with digital detectors on a 4 m telescope, terabytes per night.
• Sky surveys study the large-scale structure of space, so they involve many images, e.g. to estimate the density of CWDs in the galaxy.

E-Science and Old Science
• Computational models have been used for many years.
• e-Science systems will include vast collections of observed data.
• Scientific models are the essential organizing principle for data in such systems.
• Currently we are hand-crafting models that organise subsets of the data (e.g. CWDs).
• Can we create experimental environments that allow scientists to create new models of phenomena and test them against data?

Data, Information and Knowledge
• Much Grid work identifies a three-layer architecture for data.
• Data is the raw data acquired from sensors (e.g. telescopes, microscopes, particle detectors).
• Information is created when we “clean up” data to eliminate artifacts of the collection process.
• Knowledge is information embedded within an interpretive framework.
• Science provides strong interpretive frameworks.

Pattern: More science “in silico”
• Improved sensors, more sensors, huge increase in data volume.
• Need to “clean”, “mine” and structure data.
• Support complex models and large-scale data collections inside the computer(s).
• Support flexible model development, and use models to organise and access data.
• E.g. in databases: spatial organisation, temporal organisation, and support for queries that exploit that structure. Useful for Geoscience?

Credits
• Cosmos, Super Cosmos and Vista are projects studying the large-scale structure of the cosmos, based at the Royal Observatory Edinburgh.
• Chris Williams, Bob Mann and Andy Lawrence are working on using computational learning to analyse Super Cosmos data at ROE.
• Andy Lawrence is director of the AstroGrid project, a major UK contribution to the international “Virtual Observatory” that will federate the world’s major astronomical data assets.

Whither Data Management?
• Scientific data is not particularly well behaved.
• In particular, it does not fit the relational model well.
• We need new data models that are better suited to the needs of science (and everyone else too!).
• Such models should support the work of scientists effectively.
• Current data models are of limited use here.
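To make the data/information/knowledge layering concrete, here is a minimal sketch applied to the cool white dwarf matching problem from the earlier slides. Everything in it is a hypothetical simplification: `Detection`, `clean`, `predict` and `link` are illustrative names, positions are in arbitrary units, artifacts (e.g. satellite tracks) are assumed to arrive already flagged, and each candidate is assumed to move in a straight line at a known constant proper motion. Real survey pipelines are far more involved.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One raw detection (the data layer). All fields are hypothetical."""
    x: float        # sky position, arbitrary units
    y: float
    epoch: float    # observation date in years
    artifact: bool  # True if already flagged, e.g. as a satellite track


def clean(detections):
    """Information layer: drop detections flagged as collection artifacts."""
    return [d for d in detections if not d.artifact]


def predict(start, vx, vy, epoch):
    """Assumed motion model: straight-line proper motion (vx, vy) per year."""
    dt = epoch - start.epoch
    return start.x + vx * dt, start.y + vy * dt


def link(start, candidates, vx, vy, tolerance):
    """Knowledge layer: use the motion model to link later detections to start.

    For each later epoch, keep the detection closest to the predicted
    position, provided it lies within `tolerance` of that prediction.
    """
    by_epoch = {}
    for d in candidates:
        if d.epoch > start.epoch:
            by_epoch.setdefault(d.epoch, []).append(d)
    track = [start]
    for epoch in sorted(by_epoch):
        px, py = predict(start, vx, vy, epoch)
        best = min(by_epoch[epoch], key=lambda d: math.hypot(d.x - px, d.y - py))
        if math.hypot(best.x - px, best.y - py) <= tolerance:
            track.append(best)
    return track


# Hypothetical observations spanning 30 years.
raw = [
    Detection(0.0, 0.0, 1970.0, False),    # candidate CWD at the first epoch
    Detection(3.0, 1.5, 1985.0, True),     # flagged artifact on the predicted spot
    Detection(3.1, 1.4, 1985.0, False),    # the real object, slightly off prediction
    Detection(6.1, 2.9, 2000.0, False),
    Detection(50.0, 50.0, 2000.0, False),  # unrelated background object
]
track = link(raw[0], clean(raw), vx=0.2, vy=0.1, tolerance=0.5)
print([(d.x, d.y, d.epoch) for d in track])
# → [(0.0, 0.0, 1970.0), (3.1, 1.4, 1985.0), (6.1, 2.9, 2000.0)]
```

Without the cleaning step, `link` would pick the flagged artifact in 1985, because it sits exactly on the predicted position: the model-driven matching is only as good as the information layer beneath it.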
Curated Databases
• Useful scientific databases are often curated: they are created and maintained with a great deal of “manual” labour.

What really happens vs. database people’s idea of what happens
[Figure: “What really happens” (databases DB1, DB2) contrasted with “Database people’s idea of what happens” (a single query: select xyz from pqr where abc)]

Inter-dependence is Complex
[Figure: GERD, EpoDB, TRRD, BEAD, TransFac, GenBank, GAIA, Swissprot: a few of the 500 or so public curated molecular biology databases]

Issues in Curated Databases
• Data integration (always a problem): we need to deal with schema evolution.
• Data provenance: how do you track data back to its source? (This information is typically lost.)
• Data annotation: how should annotations spread through this network?
• Archiving: how do you keep all the archives when you are “publishing” a new database every day?

Archiving
• Some recent results on efficient archiving (Buneman, Khanna, Tajima, Tan).
• OMIM (On-line Mendelian Inheritance in Man) is a widely used genetic database. A new version is released daily.
• Bottom line: we can archive a year of versions of OMIM with <15% more space than the most recent version alone.

A Sequence of Versions

“Pushing” time down
[Driscoll, Sarnak, Sleator, Tarjan: “Making Data Structures Persistent.”]

The final result (for the randomly selected data)
• Predicted expansion for a year’s archive: < 15%

Summary: technical issues
• Why and where (data provenance):
  – better characterization of where (new ideas needed)
  – negation/aggregation
• Keys:
  – inference rules for relative keys
  – foreign key constraints
  – interaction between keys and DTDs/types
• Types for the deterministic model (and other models).
• Annotation.
• Temporal QLs and archives.

Pattern: Better support for work
• Data is increasingly complex and interdependent.
• “Curating” the data is a continuous process, and involves international effort to increase the scientific value of the data.
• Understanding the way we work with data is the key to providing adequate support for that work.
• Deeper support for projects working across the globe.
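The Archiving slides report that a year of daily OMIM versions can be kept in under 15% more space than the latest version alone. The sketch below illustrates only the basic intuition, for flat keyed records: each distinct record value is stored once, tagged with the range of versions in which it appears, and an unchanged record just has its range extended. It is not the algorithm from the cited work, which deals with richer, hierarchical data; `VersionArchive` and its methods are hypothetical names, and the record keys and values are made up.

```python
from typing import Dict, List, Tuple

# One archived entry: (value, first_version, last_version), both ends inclusive.
Entry = Tuple[str, int, int]


class VersionArchive:
    """Hypothetical merged archive of keyed snapshots (a simplified sketch)."""

    def __init__(self) -> None:
        self.entries: Dict[str, List[Entry]] = {}
        self.latest = -1  # number of the most recently added version

    def add_version(self, snapshot: Dict[str, str]) -> int:
        """Merge a full snapshot in as version latest + 1 and return its number."""
        v = self.latest + 1
        for key, value in snapshot.items():
            history = self.entries.setdefault(key, [])
            if history and history[-1][0] == value and history[-1][2] == v - 1:
                # Unchanged since the previous version: extend its range only.
                history[-1] = (value, history[-1][1], v)
            else:
                # New key, changed value, or a record reappearing after deletion.
                history.append((value, v, v))
        # Keys absent from the snapshot simply stop having their range extended.
        self.latest = v
        return v

    def get_version(self, v: int) -> Dict[str, str]:
        """Reconstruct snapshot v from the merged archive."""
        return {
            key: value
            for key, history in self.entries.items()
            for value, first, last in history
            if first <= v <= last
        }

    def stored_entries(self) -> int:
        """Number of stored (value, range) entries across all keys."""
        return sum(len(history) for history in self.entries.values())


archive = VersionArchive()
archive.add_version({"k1": "gene A", "k2": "gene B"})
archive.add_version({"k1": "gene A", "k2": "gene B (revised)"})
archive.add_version({"k1": "gene A", "k2": "gene B (revised)", "k3": "gene C"})
print(archive.get_version(0))     # {'k1': 'gene A', 'k2': 'gene B'}
print(archive.stored_entries())   # 4, versus 7 records across the three snapshots
```

Storage here grows with the number of changes rather than the number of versions, which is why a slowly changing database archives cheaply. The actual expansion depends on the data and on the real algorithm.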
Credits
• These issues are being addressed by Peter Buneman at Edinburgh.
• Peter has recently joined Informatics and NeSC.
• He has worked for a number of years on Digital Libraries and Biological Data Management.