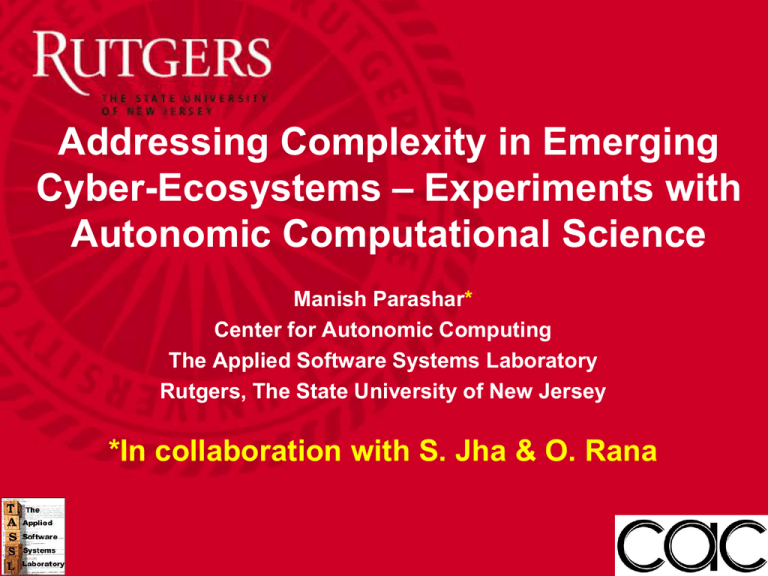

Addressing Complexity in Emerging Cyber-Ecosystems – Experiments with Autonomic Computational Science


Addressing Complexity in Emerging Cyber-Ecosystems – Experiments with Autonomic Computational Science
Manish Parashar*
Center for Autonomic Computing
The Applied Software Systems Laboratory
Rutgers, The State University of New Jersey
*In collaboration with S. Jha & O. Rana
eSI Visitor Seminar – 01/13/10

Outline of My Presentation
• Computational Ecosystems
  – Unprecedented opportunities, challenges
• Autonomic computing
  – A pragmatic approach for addressing complexity!
• Experiments with autonomics for science and engineering
• Concluding Remarks

The Cyberinfrastructure Vision
• “Cyberinfrastructure integrates hardware for computing, data and networks, digitally-enabled sensors, observatories and experimental facilities, and an interoperable suite of software and middleware services and tools…” - NSF’s Cyberinfrastructure Vision for 21st Century Discovery
• A global phenomenon; several LARGE deployments
  – UK National Grid Service (NGS)/European Grid Infrastructure (EGI), TeraGrid, Open Science Grid (OSG), EGEE, Cybera, DEISA, etc.
• New capabilities for computational science and engineering
  – seamless access
    • resources, services, data, information, expertise, …
  – seamless aggregation
  – seamless (opportunistic) interactions/couplings

Cyberinfrastructure => CyberEcosystems
21st century Science and Engineering: New Paradigms & Practices
• Fundamentally data-driven/data intensive
• Fundamentally collaborative

Unprecedented opportunities for Science/Engineering
• Knowledge-based, information/data-driven, context/content-aware, computationally intensive, pervasive applications
  – Crisis management, monitor and predict natural phenomena, monitor and manage engineered systems, optimize business processes
• Addressing applications in an end-to-end manner!
  – Opportunistically combine computations, experiments, observations, data, to manage, control, predict, adapt, optimize, …
• New paradigms and practices in science and engineering?
  – How can it benefit current applications?
  – How can it enable new thinking in science?

The Instrumented Oil Field (with UT-CSM, UT-IG, OSU, UMD, ANL)
[Figure: closed loop between "Model Driven" and "Data Driven" steps]
• Detect and track changes in data during production.
• Invert data for reservoir properties.
• Detect and track reservoir changes.
• Assimilate data & reservoir properties into the evolving reservoir model.
• Use simulation and optimization to guide future production.

Many Application Areas ….
• Hazard prevention, mitigation and response
  – Earthquakes, hurricanes, tornadoes, wild fires, floods, landslides, tsunamis, terrorist attacks
• Critical infrastructure systems
  – Condition monitoring and prediction of future capability
• Transportation of humans and goods
  – Safe, speedy, and cost effective transportation networks and vehicles (air, ground, space)
• Energy and environment
  – Safe and efficient power grids, safe and efficient operation of regional collections of buildings
• Health
  – Reliable and cost effective health care systems with improved outcomes
• Enterprise-wide decision making
  – Coordination of dynamic distributed decisions for supply chains under uncertainty
• Next generation communication systems
  – Reliable wireless networks for homes and businesses
• …………
• Report of the Workshop on Dynamic Data Driven Applications Systems, F. Darema et al., March 2006, www.dddas.org
Source: M. Rotea, NSF

The Challenge: Managing Complexity, Uncertainty (I)
• Increasing application, data/information, system complexity
  – Scale, heterogeneity, dynamism, unreliability, …
• New application formulations, practices
  – Data intensive and data driven, coupled, multiple physics/scales/resolution, adaptive, compositional, workflows, etc.
• Complexity/uncertainty must be simultaneously addressed at multiple levels
  – Algorithms/Application formulations
    • Asynchronous/chaotic, failure tolerant, …
  – Abstractions/Programming systems
    • Adaptive, application/system aware, proactive, …
  – Infrastructure/Systems
    • Decoupled, self-managing, resilient, …

The Challenge: Managing Complexity, Uncertainty (II)
• The ability of scientists to realize the potential of computational ecosystems is being severely hampered by the increased complexity and dynamism of the applications and computing environments.
• To be productive, scientists often have to comprehend and manage complex computing configurations, software tools and libraries as well as application parameters and behaviors.
• Autonomics and self-* can help? (with the “plumbing” for starters…)

Outline of My Presentation
• Computational Ecosystems
  – Unprecedented opportunities, challenges
• Autonomic computing
  – A pragmatic approach for addressing complexity!
• Experiments with autonomics for science and engineering
• Concluding Remarks

The Autonomic Computing Metaphor
• Current paradigms, mechanisms, management tools are inadequate to handle the scale, complexity, dynamism and heterogeneity of emerging systems and applications
• Nature has evolved to cope with scale, complexity, heterogeneity, dynamism and unpredictability, lack of guarantees
  – self configuring, self adapting, self optimizing, self healing, self protecting, highly decentralized, heterogeneous architectures that work !!!
• The goal of autonomic computing is to enable self-managing systems/applications that address these challenges using high level guidance
  – Unlike AI, duplication of human thought is not the ultimate goal!
“Autonomic Computing: An Overview,” M. Parashar and S. Hariri, Hot Topics, Lecture Notes in Computer Science, Springer Verlag, Vol. 3566, pp. 247-259, 2005.
Motivations for Autonomic Computing
Source: http://www.almaden.ibm.com/almaden/talks/Morris_AC_10-02.pdf
2/27/07: Dow fell 546. Since worst plunge took place after 2:30 pm, trading limits were not activated
8/12/07: 20K people + 60 planes held at LAX after computer failure prevented customs from screening arrivals
Source: http:idc 2006
8/3/07: (EPA) datacenter energy use by 2011 will cost $7.4 B, 15 power plants, 15 Gwatts/hour peak
8/1/06: UK NHS hit with massive computer outage. 72 primary care + 8 acute hospital trusts affected.
Key Challenge: Current levels of scale, complexity and dynamism make it infeasible for humans to effectively manage and control systems and applications

Autonomic Computing – A Pragmatic Approach
• Separation + Integration + Automation !
• Separation of knowledge, policies and mechanisms for adaptation
• The integration of self-configuration, -healing, -protection, -optimization, …
• Self-* behaviors build on automation concepts and mechanisms
  – Increased productivity, reduced operational costs, timely and effective response
• System/Applications self-management is more than the sum of the self-management of its individual components
M. Parashar and S. Hariri, Autonomic Computing: Concepts, Infrastructure, and Applications, CRC Press, Taylor & Francis Group, ISBN 0-8493-9367-1, 2007.

Autonomic Computing Theory
• Integrates and advances several fields
  – Distributed computing
    • Algorithms and architectures
  – Artificial intelligence
    • Models to characterize, predict and mine data and behaviors
  – Security and reliability
    • Designs and models of robust systems
  – Systems and software architecture
    • Designs and models of components at different IT layers
  – Control theory
    • Feedback-based control and estimation
  – Systems and signal processing theory
    • System and data models and optimization methods
• Requires experimental validation
(From S. Dobson et al., ACM Tr. on Autonomous & Adaptive Systems, Vol. 1, No. 2, Dec. 2006.)

Autonomics for Science and Engineering ?
• Autonomic computing aims at developing systems and applications that can manage and optimize themselves using only high-level guidance or intervention from users
  – dynamically adapt to changes in accordance with business policies and objectives and take care of routine elements of management
• Separation of management and optimization policies from enabling mechanisms
  – allows a repertoire of mechanisms to be automatically orchestrated at runtime to respond to heterogeneity, dynamics, etc.
    • E.g., develop strategies that are capable of identifying and characterizing patterns at design and at runtime and, using relevant (dynamically defined) policies, managing and optimizing the patterns.
• Application, Middleware, Infrastructure
• Manage application/information/system complexity
  – not just hide it!
• Enabling new thinking, formulations
  – how do I think about/formalize my problem differently?

A Conceptual Framework for ACS (GMAC 07, with S. Jha and O. Rana)
• Hierarchical
• Within and across level …

Crosslayer Autonomics

Existing Autonomic Practices in Computational Science (GMAC 09, SOAR 09, with S. Jha and O. Rana)
Autonomic tuning of the application
Autonomic tuning by the application

Spatial, Temporal and Computational Heterogeneity and Dynamics in SAMR
[Figure: spatial heterogeneity (temperature) and temporal heterogeneity (OH profile) in a simulation of combustion based on SAMR (H2-Air mixture; ignition via 3 hot-spots). Courtesy: Sandia National Lab]

Autonomics in SAMR
• Tuning by the application
  – Application level: when and where to refine
  – Runtime/Middleware level: When, where, how to partition and load balance
  – Runtime level: When, where, how to partition and load balance
  – Resource level: Allocate/de-allocate resources
• Tuning of the application, runtime
  – When/where to refine
  – Latency aware ghost synchronization
  – Heterogeneity/Load-aware partitioning and load-balancing
  – Checkpoint frequency
  – Asynchronous formulations
  – …

Outline of My Presentation
• Computational Ecosystems
  – Unprecedented opportunities, challenges
• Autonomic computing
  – A pragmatic approach for addressing complexity!
• Experiments with autonomics for science and engineering
• Concluding Remarks

Autonomics for Science and Engineering – Application-level Examples
• Autonomics to address complexity in science and engineering
• Autonomics as a paradigm for science and engineering
• Some examples:
  – Autonomic runtime management – multiphysics, adaptive mesh refinement
  – Autonomic data streaming and in-network data processing – coupled simulations
  – Autonomic deployment/scheduling – HPC Grid/Cloud integration
  – Autonomic workflows – simulation based optimization
(Many system level examples not presented here …)

Coupled Fusion Simulations: A Data Intensive Workflow

Autonomic Data Streaming and In-Transit Processing for Data-Intensive Workflows
• Workflow with coupled simulation codes, i.e., the edge turbulence particle-in-cell (PIC) code (GTC) and the microscopic MHD code (M3D) -- run simultaneously on separate HPC resources
• Data streamed and processed en route -- e.g., data from the PIC codes filtered through “noise detection” processes before it can be coupled with the MHD code
• Efficient data streaming between live simulations -- data should arrive just in time -- if it arrives too early, time and resources will have to be wasted to buffer the data, and if it arrives too late, the application would waste resources waiting for the data to come in
• Opportunistic use of in-transit resources
“A Self-Managing Wide-Area Data Streaming Service,” V. Bhat*, M. Parashar, H. Liu*, M. Khandekar*, N. Kandasamy, S. Klasky, and S. Abdelwahed, Cluster Computing: The Journal of Networks, Software Tools, and Applications, Volume 10, Issue 7, pp. 365 – 383, December 2007.
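The just-in-time constraint on the previous slide can be pictured with a small sketch. It is illustrative only and is not the streaming service from the cited paper: the `Block` type, the "needed at" time per block and the tolerance window are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """One chunk of simulation output headed for the coupled code (hypothetical type)."""
    size_mb: float       # amount of data in the block, in megabytes
    needed_at_s: float   # time at which the consumer expects the block, in seconds


def slack_seconds(block: Block, now_s: float, bandwidth_mbps: float) -> float:
    """Time left over if the block were sent right now (negative means late)."""
    transfer_s = block.size_mb * 8.0 / bandwidth_mbps  # MB -> Mb, then divide by Mb/s
    return block.needed_at_s - (now_s + transfer_s)


def classify(block: Block, now_s: float, bandwidth_mbps: float,
             tolerance_s: float = 1.0) -> str:
    """Label the arrival as 'early', 'just-in-time' or 'late' (assumed thresholds)."""
    slack = slack_seconds(block, now_s, bandwidth_mbps)
    if slack > tolerance_s:
        return "early"          # spare time: data would have to be buffered
    if slack < -tolerance_s:
        return "late"           # consumer would sit idle waiting for data
    return "just-in-time"
```

In this toy model, positive slack is the time that could instead be spent on opportunistic processing at in-transit nodes, which is the intuition behind using spare time rather than buffering.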
Autonomic Data Streaming & In-Transit Processing
[Figure: two-level management architecture. Application level (“proactive” management): simulation, LLC controller, slack metric generator, slack metric corrector. In-transit level (“reactive” management): in-transit nodes, budget estimation, slack metric updates and adjustment, data flow and coupling to the sink.]
  – Application level
    • Proactive QoS management strategies using model-based LLC controller
    • Capture constraints for in-transit processing using slack metric
  – In-transit level
    • Opportunistic data processing using dynamic in-transit resource overlay
    • Adaptive run-time management at in-transit nodes based on slack metric generated at application level
  – Adaptive buffer management and forwarding

Autonomics for Coupled Fusion Simulation Workflows

Autonomic Streaming: Implementation/Deployment
[Figure: deployment of the simulation workflow across data producers, in-transit nodes and data consumers (sink); site labels ORNL, NERSC, PPPL and Rutgers University.]
  – SS = Simulation Service (GTC)
  – ADSS = Autonomic Data Streaming Service
  – CBMS = LLC Controller based buffer management service
  – DTS = Data Transfer service
  – DAS = Data Analysis Service
  – SLAMS = Slack Manager Service
  – PS = Processing Service
  – BMS = Buffer Management Service
  – ArchS = Archiving data at sink
• Simulations execute on leadership class machines at ORNL and NERSC
• In-transit nodes located at PPPL and Rutgers

Adaptive Data Transfer
[Figure: data transferred by DTS (MB) and bandwidth (Mb/sec) versus controller interval; series: DTS to WAN, DTS to LAN, Bandwidth, Congestion.]
• No congestion in intervals 1-9
  – Data transferred over WAN
• Congested at intervals 9-19
  – Controller recognizes this congestion and advises the Element Manager, which in turn adapts DTS to transfer data to local storage (LAN).
• Adaptation continues until the network is not congested
  – Data sent to the local storage by the DTS falls to zero at the 19th controller interval.

Adaptation of the Workflow
• Create multiple instances of the Autonomic Data Streaming Service (ADSS)
  – Effective Network Transfer Rate dips below the threshold (in our case around 100 Mb/s)
[Figure: ADSS-0, ADSS-1 and ADSS-2 instances, each with its own buffer and data transfer from the simulation; plot of % network throughput and number of ADSS instances versus data generation rate (Mbps). % Network throughput is the difference between the max and current network transfer rate.]

Reservoir Characterization: EnKF-based History Matching (with S. Jha)
• Black Oil Reservoir Simulator
  – simulates the movement of oil and gas in subsurface formations
• Ensemble Kalman Filter
  – computes the Kalman gain matrix and updates the model parameters of the ensembles
• Heterogeneous, dynamic workflows
• Based on Cactus, PETSc

Experiment Background and Set-Up (2/2)
• Key metrics
  – Total Time to Completion (TTC)
  – Total Cost of Completion (TCC)
• Basic assumptions
  – TG gives the best performance but is a relatively more restricted resource.
  – EC2 is relatively more freely available but is not as capable.
• Note that the motivation of our experiments is to understand each of the usage scenarios and their feasibility, behaviors and benefits, and not to optimize the performance of any one scenario.

Autonomic Integration of HPC Grids & Clouds (with S. Jha)
• Acceleration: Clouds used as accelerators to improve the application time-to-completion
  – alleviate the impact of queue wait times or exploit an additional level of parallelism by offloading appropriate tasks to Cloud resources
• Conservation: Clouds used to conserve HPC Grid allocations, given appropriate runtime and budget constraints
• Resilience: Clouds used to handle unexpected situations
  – handle unanticipated HPC Grid downtime, inadequate allocations or unanticipated queue delays

Objective I: Using Clouds as Accelerators for HPC Grids (1/2)
• Explore how Clouds (EC2) can be used as accelerators for HPC Grid (TG) work-loads
  – 16 TG CPUs (1 node on Ranger)
  – average queuing time for TG was set to 5 and 10 minutes
  – the number of EC2 nodes was varied from 20 to 100 in steps of 20
  – VM start up time was about 160 seconds

Objective I: Using Clouds as Accelerators for HPC Grids (2/2)
The TTC and TCC for Objective I with 16 TG CPUs and queuing times set to 5 and 10 minutes. As expected, the more VMs that are made available, the greater the acceleration, i.e., the lower the TTC. The reduction in TTC is roughly linear, but not perfectly so, because of a complex interplay between the tasks in the work load and resource availability.

Objective II: Using Clouds for Conserving CPU-Time on the TeraGrid
• Explore how to conserve a fixed allocation of CPU hours by offloading tasks that perhaps don’t need the specialized capabilities of the HPC Grid
Distribution of tasks across EC2 and TG, TTC and TCC, as the CPU-minute allocation on the TG is increased.
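The conservation scenario can be sketched as a toy scheduler. This is not the autonomic scheduler used in the experiments: the greedy rule, the EC2 slowdown factor, the per-minute price and the way TTC and TCC are computed are all assumptions made for illustration.

```python
from typing import List, Tuple


def split_tasks(task_minutes: List[float],
                tg_allocation_min: float) -> Tuple[List[int], List[int]]:
    """Greedily keep tasks on the TG while the CPU-minute allocation lasts."""
    tg: List[int] = []
    ec2: List[int] = []
    remaining = tg_allocation_min
    for i, minutes in enumerate(task_minutes):
        if minutes <= remaining:
            tg.append(i)
            remaining -= minutes
        else:
            ec2.append(i)  # offload once the allocation cannot cover the task
    return tg, ec2


def ttc_and_tcc(task_minutes: List[float], tg: List[int], ec2: List[int],
                ec2_slowdown: float = 2.0,
                ec2_price_per_min: float = 0.01) -> Tuple[float, float]:
    """Toy TTC (minutes) and TCC (currency units) for a given split."""
    tg_time = sum(task_minutes[i] for i in tg)
    ec2_time = sum(task_minutes[i] * ec2_slowdown for i in ec2)
    ttc = max(tg_time, ec2_time)        # the two pools are assumed to run side by side
    tcc = ec2_time * ec2_price_per_min  # only Cloud time is billed in this toy model
    return ttc, tcc
```

Sweeping `tg_allocation_min` upward in such a model moves tasks from the EC2 list to the TG list, which is the kind of trade-off between TTC and TCC the slide's figure reports.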
Objective III: Response to Changing Operating Conditions (Resilience) (1/4)
• Explore the situation where resources that were initially planned for become unavailable at runtime, either in part or in entirety
  – How can Cloud services be used to address this situation and allow the system/application to respond to a dynamic change in availability of resources?
• Initially 16 TG CPUs are allocated for 800 minutes. After about 50 minutes of execution (i.e., 3 tasks were completed on the TG), the available CPU time is changed so that only 20 CPU minutes remain

Objective III: Response to Changing Operating Conditions (Resilience) (2/4)
Allocation of tasks to TG CPUs and EC2 nodes for usage mode III. As the 16 allocated TG CPUs become unavailable after only 70 minutes rather than the planned 800 minutes, the bulk of the tasks are completed by EC2 nodes.

Objective III: Response to Changing Operating Conditions (Resilience) (3/4)
Number of TG cores and EC2 nodes as a function of time for usage mode III. Note that the TG CPU allocation goes to zero after about 70 minutes, causing the autonomic scheduler to increase the EC2 nodes by 8.

Objective III: Response to Changing Operating Conditions (Resilience) (4/4)
Overheads of resilience on TTC and TCC.
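One way to picture the scheduler's reaction to a lost allocation is a sizing rule for the Cloud side. The sketch below is a hypothetical model, not the scheduler from the experiments: it assumes equal-length tasks, one task per EC2 node at a time, and a fixed deadline, and its numbers are made up.

```python
import math


def extra_ec2_nodes(remaining_tasks: int, task_minutes_on_ec2: float,
                    minutes_to_deadline: float, current_ec2_nodes: int) -> int:
    """Nodes to add so the remaining tasks can finish by the deadline (toy model)."""
    if minutes_to_deadline < task_minutes_on_ec2:
        raise ValueError("deadline too close for even one task")
    # Number of tasks a single node can run back to back before the deadline.
    rounds = math.floor(minutes_to_deadline / task_minutes_on_ec2)
    needed = math.ceil(remaining_tasks / rounds)
    return max(0, needed - current_ec2_nodes)
```

For example, with 30 tasks left, 25 minutes per task, 100 minutes to the deadline and 4 nodes already running, each node fits 4 tasks, so 8 nodes are needed and 4 must be added.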
Autonomic Formulations/Programming
[Figure: an autonomic element made up of a computational element (functional port, control port, operational port) and an element manager (internal state, contextual state, rules; event generation, actuator invocation, other interface invocation). A composition manager takes the application workflow, application requirements and application strategies and issues interaction rules and behavior rules to the elements.]

The Instrumented Oil Field
• Production of oil and gas can take advantage of installed sensors that will monitor the reservoir’s state as fluids are extracted
• Knowledge of the reservoir’s state during production can result in better engineering decisions
  – economical evaluation; physical characteristics (bypassed oil, high pressure zones); production techniques for safe operating conditions in complex and difficult areas
• Detect and track changes in data during production
• Invert data for reservoir properties
• Detect and track reservoir changes
• Assimilate data & reservoir properties into the evolving reservoir model
• Use simulation and optimization to guide future production, future data acquisition strategy
“Application of Grid-Enabled Technologies for Solving Optimization Problems in Data-Driven Reservoir Studies,” M. Parashar, H. Klie, U. Catalyurek, T. Kurc, V. Matossian, J. Saltz and M. Wheeler, FGCS: The International Journal of Grid Computing: Theory, Methods and Applications, Elsevier Science Publishers, Vol. 21, Issue 1, pp 19-26, 2005.
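The "assimilate data into the evolving reservoir model" step can be pictured with a one-variable Kalman-style update. This is a textbook stand-in chosen for illustration, not the method of the cited work: the single scalar property, the variances and the function names are assumptions.

```python
from typing import Iterable, Tuple


def assimilate(estimate: float, variance: float,
               observation: float, obs_variance: float) -> Tuple[float, float]:
    """One scalar Kalman-style update: blend the model estimate with a sensor reading."""
    gain = variance / (variance + obs_variance)   # weight given to the new reading
    new_estimate = estimate + gain * (observation - estimate)
    new_variance = (1.0 - gain) * variance        # uncertainty shrinks after each update
    return new_estimate, new_variance


def track(initial_estimate: float, initial_variance: float,
          readings: Iterable[float], obs_variance: float) -> Tuple[float, float]:
    """Fold a stream of sensor readings into the running estimate."""
    estimate, variance = initial_estimate, initial_variance
    for z in readings:
        estimate, variance = assimilate(estimate, variance, z, obs_variance)
    return estimate, variance
```

Each reading pulls the estimate toward the observed value and reduces its variance; in the loop on the slide, the updated estimate would then feed the simulation and optimization step.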
Effective Oil Reservoir Management: Well Placement/Configuration
• Why is it important
  – Better utilization/cost-effectiveness of existing reservoirs
  – Minimizing adverse effects to the environment
[Figure: bad management (much bypassed oil) versus better management (less bypassed oil)]

Autonomic Reservoir Management: “Closing the Loop” using Optimization
[Figure: closed loop between a dynamic decision system and dynamic data-driven assimilation, running on processing middleware, autonomic Grid middleware and Grid data management.]
• Dynamic Decision System
  – Management decision
  – Optimize
    • Economic revenue
    • Environmental hazard
    • …
  – Based on the present subsurface knowledge and numerical model
• Dynamic Data-Driven Assimilation
  – Data assimilation
  – Subsurface characterization
  – Improve knowledge of subsurface to reduce uncertainty
  – Acquire remote sensing data
  – Update knowledge of model
  – Improve numerical model
• Experimental design
  – Plan optimal data acquisition

An Autonomic Well Placement/Configuration Workflow
[Figure: workflow built on the AutoMate Programming System/Grid Middleware, with sensor/context data (oil prices, weather, etc.) and history/archived data in a MySQL database.]
• Start parallel IPARS instances
• Instance connects to DISCOVER
• DISCOVER notifies clients
• Clients interact with IPARS
• Optimization Service (VFSA, SPSA, Exhaustive Search) generates guesses and sends them to the IPARS Factory
• If guess in DB: send response to clients and get new guess from optimizer
• If guess not in DB: instantiate IPARS with guess as parameter

Autonomic Oil Well Placement/Configuration
[Figure: permeability; pressure contours (3 wells, 2D profile); contours of NEval(y,z,500)(10).]
Requires NYxNZ (450) evaluations. Minimum appears here.
VFSA solution: “walk”: found after 20 (81) evaluations

Autonomic Oil Well Placement/Configuration (VFSA)
“A Reservoir Framework for the Stochastic Optimization of Well Placement,” V. Matossian, M. Parashar, W. Bangerth, H. Klie, M.F. Wheeler, Cluster Computing: The Journal of Networks, Software Tools, and Applications, Kluwer Academic Publishers, Vol. 8, No. 4, pp 255 – 269, 2005.
“Autonomic Oil Reservoir Optimization on the Grid,” V. Matossian, V. Bhat, M. Parashar, M. Peszynska, M. Sen, P. Stoffa and M. F. Wheeler, Concurrency and Computation: Practice and Experience, John Wiley and Sons, Volume 17, Issue 1, pp 1 – 26, 2005.

Summary
• CI and emerging computational ecosystems
  – Unprecedented opportunity
    • new thinking, practices in science and engineering
  – Unprecedented research challenges
    • scale, complexity, heterogeneity, dynamism, reliability, uncertainty, …
• Autonomic Computing can address complexity and uncertainty
  – Separation + Integration + Automation
• Experiments with Autonomics for science and engineering
  – Autonomic data streaming and in-transit data manipulation, Autonomic Workflows, Autonomic Runtime Management, …
• However, there are implications
  – Added uncertainty
  – Correctness, predictability, repeatability
  – Validation

Thank You!
Email: parashar@rutgers.edu