A Matter of Life and Death: Practical and Ethical Autopsy Tool

advertisement

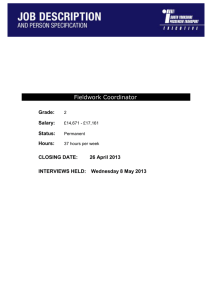

Session: Clinical Settings
CHI 2013: Changing Perspectives, Paris, France

A Matter of Life and Death: Practical and Ethical Constraints in the Development of a Mobile Verbal Autopsy Tool

Jon Bird1, Peter Byass2,3,4, Kathleen Kahn2,3,4, Paul Mee2,3,4 and Edward Fottrell2,5

1 UCL Interaction Centre, University College London, UK, jon.bird@ucl.ac.uk
2 Umeå Centre for Global Health Research, Umeå University, Sweden, peter.byass@epiph.umu.se
3 MRC/Wits Rural Public Health and Health Transitions Research Unit (Agincourt), School of Public Health, Faculty of Health Sciences, University of the Witwatersrand, South Africa, kathleen.kahn@wits.ac.za
4 INDEPTH Network, Accra, Ghana, paul.mee@agincourt.co.za
5 UCL Institute for Global Health, University College London, UK, e.fottrell@ucl.ac.uk

ABSTRACT
Verbal autopsy (VA) involves interviewing relatives of the deceased to identify the probable cause of death and is typically used in settings where there is no official system for recording deaths or their causes. After the interview, the physician assessment that determines the probable cause can take several years to complete. The World Health Organization (WHO) recognizes that there is a pressing need for a mobile device that combines direct data capture and analysis if this technique is to become part of routine health surveillance. We conducted a field test in rural South Africa to evaluate a mobile system that we designed to meet WHO requirements (namely, simplicity, feasibility, adaptability to local contexts, cost-effectiveness and program relevance). If desired, this system can provide immediate feedback to respondents about the probable cause of death at the end of a VA interview.
We assessed the ethical implications of this technological development by interviewing all the stakeholders in the VA process (respondents, fieldworkers, physicians, population scientists, data managers and community engagement managers), and we highlight the issues that this community needs to debate and resolve.

Author Keywords
Verbal autopsy; mobile devices; ethics; HCI4D.

ACM Classification Keywords
H.5.m. Information interfaces and presentation (e.g., HCI): Miscellaneous.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. CHI 2013, April 27–May 2, 2013, Paris, France. Copyright © 2013 ACM 978-1-4503-1899-0/13/04...$15.00.

INTRODUCTION
Currently, the causes of two thirds of the world’s deaths are not recorded [13], and the World Health Organization (WHO) receives reliable cause-of-death statistics from only 31 of its 193 member states. This lack of knowledge about mortality patterns is not only a concern for academic population health scientists: national and local health managers also recognize that this information deficit affects their ability to plan and evaluate public health interventions and to prioritize resources [3].

Verbal autopsy (VA) [15] is the process of interviewing relatives or other close caregivers of a deceased person to elicit the symptoms and circumstances of their death. At present, trained VA fieldworkers use paper-based questionnaires to ask respondents about the signs and symptoms of the terminal illness, typically including an ‘open history’ in which the respondent describes in their own words the circumstances and sequence of events leading to death.
These data are later assessed by two or three physicians to identify the probable cause of death, in a process known as physician-coded verbal autopsy (PCVA). VA remains the best available approach for assessing causes of death in communities in which most deaths occur at home [16]. PCVA is usually the most time-consuming step in the VA process. Although in simple cases a physician might need only a few minutes to identify the probable cause of death from a VA questionnaire, physicians typically code VAs in their spare time rather than as a full-time job. For example, in Agincourt, South Africa, the location of the field study described in this paper, it takes at least one year, and occasionally several years, from the respondent interview to the completion of a PCVA.

InterVA (http://www.interva.net) is well-established software that can automatically and rapidly assign probable causes of death to VA data that have been converted into a digital format. Based on the answers to a series of yes/no questions (with relevant skip patterns depending on the age of the deceased and responses to filter questions), InterVA uses Bayesian principles to identify probable causes of death. Not only is the software much quicker than PCVA at identifying probable causes of death (seconds compared to years), but there is also some evidence that physician death certification can be highly variable and imprecise [11]. InterVA, by contrast, like any mathematical model, is consistent and reproducible, enabling causes of death from different times and locations to be compared. To date, VA has predominantly been applied in research settings by population health scientists.
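To make the Bayesian principle concrete, the following minimal Python sketch ranks causes of death from yes/no answers. It is illustrative only and is not InterVA's implementation: the causes, symptoms and probabilities are hypothetical toy values, and it assumes, as a naive Bayes simplification, that symptoms are independent given the cause. As with InterVA-4, only ‘yes’ answers update the probabilities.

```python
# Minimal sketch of an InterVA-style Bayesian ranking of causes of death.
# All causes, symptoms and probabilities are hypothetical toy values,
# not the InterVA-4 probability table.

# Hypothetical prior probability of each cause.
PRIORS = {"malaria": 0.2, "tuberculosis": 0.3, "road injury": 0.5}

# Hypothetical P(symptom reported | cause) for each symptom question.
LIKELIHOODS = {
    "fever": {"malaria": 0.9, "tuberculosis": 0.5, "road injury": 0.05},
    "chronic cough": {"malaria": 0.1, "tuberculosis": 0.8, "road injury": 0.01},
}


def probable_causes(answers, priors=PRIORS, likelihoods=LIKELIHOODS):
    """Return (cause, posterior probability) pairs, most probable first.

    Only 'yes' answers update the probabilities; 'no' and "don't know"
    answers leave them unchanged. Symptoms are treated as independent
    given the cause (a naive Bayes simplification).
    """
    posterior = dict(priors)
    for symptom, answer in answers.items():
        if answer != "yes" or symptom not in likelihoods:
            continue
        # Bayes' rule: weight each cause by P(symptom | cause) ...
        for cause in posterior:
            posterior[cause] *= likelihoods[symptom][cause]
        # ... then renormalise so the probabilities sum to 1.
        total = sum(posterior.values())
        posterior = {cause: p / total for cause, p in posterior.items()}
    return sorted(posterior.items(), key=lambda item: item[1], reverse=True)


ranked = probable_causes({"fever": "yes", "chronic cough": "yes", "injury": "no"})
print(ranked[0][0])  # tuberculosis
```

Because each ‘yes’ answer multiplies in a fixed likelihood, the same answers always produce the same ranking, which is the consistency and reproducibility property noted above.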
There is a strong desire among WHO, the Health Metrics Network, the US Centers for Disease Control and other major health organizations to extend VA methods beyond research settings to routine health surveillance [16]. This has led WHO to revise its standard VA questionnaires and to define a short but useful list of diagnoses that can realistically be ascertained with a limited number of questions. InterVA-4 has been designed to align with these new WHO instruments and integrates insights from previous versions of InterVA with expert consensus. Coinciding with these developments in automated interpretation, WHO further recognizes the pressing need to develop mobile devices that facilitate the application of VA for the routine registration of deaths:

“Users of VA have identified the need for simpler data collection instruments coupled with IT (e.g. mobile phones or hand-held devices). These large scale users of VA have a perspective different from that (sic) one of researchers, giving priority to the VA instrument’s simplicity, feasibility and adaptability to local contexts, cost-effectiveness and program relevance. A simplified VA instrument coupled with automated methods to ascertain causes of death can be a stepping-stone to increase the coverage of operational and representative civil and vital registration systems.” [13]

Combining the ability to record, analyse and store VA data in a single mobile device has a number of potential benefits, most notably a substantial reduction in the time taken to collect, analyse and present the data. This is primarily because using analysis software to assign the probable cause of death automatically removes the need for the typically lengthy and unstandardized PCVA stage.
Moving away from paper questionnaires to direct electronic data capture also removes the need to convert paper-based VA questionnaires into digital form, further reducing data processing time and cost and removing the potential for transcription errors at this stage. Data collection can also be standardized, and errors reduced, by automatically ensuring that all and only the appropriate questions are asked. A final potential benefit is that large numbers of paper questionnaires, which can be a significant overhead, no longer need to be stored.

The development of a mobile, automated VA system also creates unique opportunities for feeding probable diagnoses back to respondents in what are traditionally one-way encounters. VA data collected during an interview are typically recorded on paper at the respondent’s home and then taken back to an office, where they are entered into a computer and later analysed. The results of the analysis are not usually fed back to individual respondents, although aggregated results are communicated at community level [8]. A mobile VA system incorporating analysis software could compute the probable cause of death at the end of the interview. Fieldworkers could then feed this back to respondents and, where appropriate, provide them with relevant health information. For example, if the probable cause of death of a respondent’s spouse indicated a lifestyle disease, such as type 2 diabetes or chronic obstructive pulmonary disease (COPD), the respondent could potentially benefit from information about a healthy diet or the risks of smoking, assuming that they share a similar lifestyle to the deceased. However, providing such information needs considerable thought, particularly when the information may be sensitive or cause the respondent distress.
This could occur, for example, if the probable cause of death is stigmatized, such as HIV, or if it is an infectious disease, such as TB, and the respondent worries that they too are infected. The precise means and ethics of providing respondents with the probable cause of death and health information have received very little consideration in the population health research community. This is partly because scientists collecting population mortality statistics typically see their role as one of measurement rather than public health intervention. Another reason, as mentioned above, is that the current VA process can take a year or more to determine the probable cause of death after the interview. As a result, providing immediate cause of death and health information to individual respondents has remained a hypothetical issue.

Our initial goal in this study was to assess the data capture, analysis and storage capabilities of a novel mobile InterVA system (known as MIVA) against the characteristics that WHO has identified as necessary if VA is to become part of routine health surveillance: namely, simplicity, system feasibility, adaptability to local context, cost-effectiveness and program relevance. However, by combining real-time electronic data capture with InterVA in a mobile device, we created a system that can compute the probable cause of death at the end of the VA interview. One contribution of this paper is to highlight the new opportunities that arise from recording and analysing health data on mobile phones. Another is to discuss how field testing MIVA turned the ethical implications of providing cause of death and health information to respondents into a very real and pressing concern. We present the views of various stakeholders on the risks and benefits of providing direct feedback on cause of death to respondents, and on whether, given the technical ability, this should be done.
We identify the ethical issues, made apparent by the field study, that the VA community should debate in order to clarify the role that MIVA-like systems will play in health surveillance and intervention. More generally, our study identifies ethical issues that are relevant to the design of mobile devices that both collect and analyse health data. A final contribution is our discussion of some of the challenges of recording ‘open histories’ on mobile devices. These findings are relevant to the design of mobile forms, in particular highlighting the challenge of collecting unstructured data.

RELATED WORK
Since the emergence of Human-Computer Interaction for Development (HCI4D) in the late 1990s, improving healthcare has been the focus of many projects [2, 6, 10]. PDAs have often been used to improve the efficiency and effectiveness of medical data collection [7, 11]. The only previous study we are aware of that used a PDA to collect and analyse VA data was carried out in 2008 in Burkina Faso and Indonesia [4]. The system was used as part of a one-off research project that evaluated a safe-motherhood programme and therefore focused only on the deaths of women of reproductive age. The implementation was successful: hundreds of VA interviews were completed over six months and assigned a cause of death. However, the issues raised by feeding cause of death information directly back to respondents were explored only superficially; the study established that respondents are extremely likely to agree with the InterVA diagnosis of cause of death simply because it is derived from a computer. More recently, mobile phones, typically lower-end devices chosen for their low cost, long battery life and robustness, have increasingly been used for health data collection in the developing world.
For example, CommCare [14] runs on a Nokia C2-01 (~USD75); in a longitudinal study in rural India it was found to be more effective than a paper-based system and was preferred by health workers, both because of the status derived from using the device and because they could use the phone outside of a work context. However, smartphones are falling in price, becoming more robust and offer greater processing power, larger screens and touch interfaces. We chose a low-specification smartphone for our field study because it could both collect and analyse data and had a longer battery life than more expensive devices. Choosing an appropriate technology is one of the key characteristics of HCI4D projects that have successfully designed technologies to fit local contexts [9]. Two other key factors are: first, building strong relationships with local partners and carefully identifying all of the stakeholders involved (some of whom may have conflicting requirements); and second, allowing users to participate, to varying extents, in the design process. These findings about relationships, participation and appropriate technology choice have informed our approach, as we detail in the following sections, influencing our choice of field study site, design approach and mobile device. In the next section we describe the design of MIVA and how it was shaped by the needs of multiple users and the practical constraints set out by WHO.

SYSTEM DESIGN
Our goal in developing MIVA was to build a mobile application for the direct data capture, rapid interpretation, presentation and storage of cause of death information for use in low-income settings. The software was designed to integrate the latest WHO VA standard questions with the InterVA-4 analysis software.

Users
The system has a number of different users, each with different needs, all of whom place practical constraints on the design.
First are the fieldworkers, who take the device into the field to interview relatives of the deceased. Second are the local data managers, whose job is to ensure that the VA data collected by the fieldworkers are securely stored in a form that makes them easy to analyse and present. Third are the respondents, who are interviewed about the death of a close relative. Fourth are local, national and global public health managers, who need to plan and evaluate public health interventions and allocate resources. Fifth are population scientists, who require mortality data for their research.

We used a ‘weak’ participatory design approach, purposely leaving parts of the design incomplete so that they could be finished with the help of fieldworkers and data managers during the field study. Our goal was to foster the fieldworkers’ sense of ownership of the system and thereby encourage them to provide useful feedback on the design, which can be difficult to elicit in developing world contexts [1]. Two researchers conducted the field study: an experienced VA researcher (EF) and an HCI researcher and programmer (JB) who had developed the software and could make rapid changes in response to feedback.

WHO VA standard questions
VA cannot ascertain all causes of death and does not perform equally well for all causes. Given these limitations, WHO has developed standard questionnaires that ascertain 63 causes of death with reasonable accuracy from a VA interview. An expert review group has created three standard questionnaires, covering the death of: a child aged under 4 weeks; a child aged 4 weeks to 14 years; and a person aged 15 years and above. Each begins with a ‘general information’ module that records socio-demographic information and information for data management, such as the time, date and location of the interview and a unique ID. The subsequent modules include questions to ascertain the signs and symptoms that help determine the probable cause of death.
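The age bands of the three WHO questionnaires can be expressed as a small selection function. This Python sketch is hypothetical: it assumes exact dates of birth and death are known and takes 4 weeks as 28 days, whereas MIVA itself asks for the age group of the deceased as its first question (Figure 1).

```python
from datetime import date
from enum import Enum


class Questionnaire(Enum):
    """The three WHO VA standard questionnaires, split by age at death."""
    NEONATE = "death of a child aged under 4 weeks"
    CHILD = "death of a child aged 4 weeks to 14 years"
    ADULT = "death of a person aged 15 years and above"


def completed_years(birth: date, death: date) -> int:
    """Age at death in whole years (one less if the birthday was not yet reached)."""
    years = death.year - birth.year
    if (death.month, death.day) < (birth.month, birth.day):
        years -= 1
    return years


def select_questionnaire(birth: date, death: date) -> Questionnaire:
    """Pick the questionnaire for a death, assuming exact dates are known."""
    if (death - birth).days < 28:  # under 4 weeks, taking 4 weeks = 28 days
        return Questionnaire.NEONATE
    if completed_years(birth, death) < 15:  # 4 weeks up to and including 14 years
        return Questionnaire.CHILD
    return Questionnaire.ADULT


print(select_questionnaire(date(2000, 6, 15), date(2015, 6, 14)).name)  # CHILD
```

Working in completed years keeps the boundary cases explicit: a death the day before the 15th birthday still uses the child questionnaire.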
Some questions relating to accidents and systemic signs and symptoms (for example, fever and intestinal symptoms) are always asked; other questions are asked depending on the age and sex of the deceased. Our focus was on designing a system that displays only the relevant signs and symptoms questions, given the age and sex of the deceased and the answers to previous questions.

InterVA-4
The existing InterVA-4 software runs on Windows in batch mode. The answers to the 246 WHO VA signs and symptoms questions are stored in a text file and, based on the ‘yes’ answers, the most probable cause or causes of death are calculated using a Bayesian approach informed by a table of probabilities associating symptoms with causes. The MIVA software was designed to reuse as much of the existing InterVA-4 software as possible, in particular three spreadsheet files which specify: a) the order of questions; b) the probabilities associating symptoms with causes (derived by consulting experts); and c) the question skip patterns. These files are used to configure the software each time it loads, whereas the well-established Bayesian algorithm is hard-coded into the application.

Platform
Mobile devices, particularly phones, are the most widely used technology in HCI4D [9], owing to their widespread use in the developing world, their low cost, their portability and their low energy consumption compared with laptops. Our goal was to develop software that could be used on a wide range of mobile phones. We therefore decided to develop software that runs in HTML5 browsers, a technology that is found on many mobile devices and is cost-effective, as all major browsers are free to download. The software consists of an HTML page stored locally on the mobile device, with JavaScript implementing the data capture and storage functions.
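The following Python sketch illustrates how configuration files loaded at start-up can drive the skip patterns described above; MIVA itself does this in JavaScript. The CSV layouts, question IDs and wording are hypothetical stand-ins for the spreadsheet exports (the probability file is omitted), and each skip rule is assumed to be a simple ‘ask this question only if an earlier question had this answer’ condition. The pruning step handles corrections: changing an earlier answer can alter which later questions apply.

```python
import csv
import io

# Hypothetical CSV exports of two of the three configuration spreadsheets.
ORDER_CSV = """question_id,text
sex,What was the sex of the deceased?
age_group,What was the age group of the deceased?
fever,Did (s)he have a fever?
fever_severe,Was the fever severe?
pregnant,Was she pregnant at the time of death?
"""

SKIP_CSV = """question_id,depends_on,required_answer
fever_severe,fever,yes
pregnant,sex,female
pregnant,age_group,adult
"""


def load_config(order_csv, skip_csv):
    """Parse the question order and the skip conditions for each question."""
    order = [row["question_id"] for row in csv.DictReader(io.StringIO(order_csv))]
    conditions = {}
    for row in csv.DictReader(io.StringIO(skip_csv)):
        conditions.setdefault(row["question_id"], []).append(
            (row["depends_on"], row["required_answer"]))
    return order, conditions


def is_relevant(question_id, answers, conditions):
    """A question is asked only if every condition on it is met by earlier answers."""
    return all(answers.get(dep) == required
               for dep, required in conditions.get(question_id, []))


def next_question(answers, order, conditions):
    """Return the first relevant unanswered question, or None when finished."""
    for question_id in order:
        if question_id not in answers and is_relevant(question_id, answers, conditions):
            return question_id
    return None


def prune_irrelevant(answers, order, conditions):
    """Drop answers to questions that a corrected earlier answer made irrelevant."""
    kept = {}
    for question_id in order:  # earlier questions are checked first, so changes cascade
        if question_id in answers and is_relevant(question_id, kept, conditions):
            kept[question_id] = answers[question_id]
    return kept


order, conditions = load_config(ORDER_CSV, SKIP_CSV)
answers = {"sex": "female", "age_group": "adult", "fever": "yes"}
print(next_question(answers, order, conditions))  # fever_severe
```

Keeping the rules in data rather than code is what allows non-programmers to change the skip patterns from a spreadsheet.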
Some browsers, for example Firefox, have add-ons that enable the browser to run in full-screen mode without navigation bars, which is useful because users then cannot inadvertently navigate away from the system. For the field study reported in this paper we installed the software on four Sony Xperia U smartphones. These were chosen for their small size, good battery life (more than one day in offline mode) and low price (GBP160). They can run the major HTML5 browsers, including Firefox, Chrome and Opera, and are therefore a good platform for testing the software on multiple browsers. During the field study, the phones were used in silent mode and auto-rotate was switched off, as users found it both confusing and annoying when the screen switched from portrait to landscape during a VA interview.

Adaptability and maintenance
The software was designed so that non-programmers could easily maintain it and adapt it to local contexts: the key parameters of the system can be changed and updated using only a spreadsheet application. When the software is loaded in a browser, it reads the three locally stored configuration files described in the previous section, all of which can be generated from spreadsheets using a script supplied with MIVA. For example, localization involves translating the spreadsheet containing the English version of the WHO VA questions into the local language, running the script that converts the spreadsheet into a text file and then uploading the file to the mobile phone via a USB connection. Updating the VA questions, InterVA probabilities or question skip patterns is similarly straightforward.

Interface
We purposefully designed the interface to be very simple, with as few on-screen navigation controls as possible. Each question is presented, in turn, on a single screen, without any need for scrolling.
The system runs best in full-screen mode, without any browser controls or phone utility bars visible (which can be achieved using freely available browser add-ons). The interface consists of radio buttons and two rectangular navigation buttons, previous and next, whose functions are communicated by symbols rather than text (Figure 1). There are two types of question: multiple-choice questions, with up to seven exclusive and exhaustive options, each selected by a radio button; and simple questions with three exclusive answer options: ‘yes’, ‘no’ and ‘don’t know’. All of the questions are closed and involve selecting one option. When a question screen is first presented, none of the radio buttons are checked. If a user tries to navigate to the next or previous question before selecting an answer, the navigation button turns red and the user cannot move to another question. Once an answer has been selected, the navigation buttons turn grey again and the user can move to the next or previous question. When all of the relevant VA questions have been asked, a small green circle appears at the bottom of the screen between the navigation buttons. When this button is pressed, MIVA stores the question responses in the browser’s local storage and takes the fieldworker to a finish screen.

At this point MIVA could display the results of the InterVA-4 analysis and show the calculated probable cause of death. However, in the study reported in this paper we decided to withhold the cause of death information, for two reasons: the research and community engagement managers first needed to better understand any associated risks, and the VA fieldworkers using MIVA recognized that they did not have the training needed to answer all the questions that a respondent might ask about the cause of death and its possible implications.
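The answer-gated navigation rules can be modelled as a small state machine. This Python sketch is a hypothetical illustration, not MIVA's JavaScript: it assumes the list of relevant questions is already known (in practice it changes with the skip patterns) and represents button colours as plain strings.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewSession:
    """Hypothetical model of MIVA-style answer-gated navigation."""
    questions: list                      # relevant question ids, in order
    answers: dict = field(default_factory=dict)
    index: int = 0                       # question currently on screen
    nav_colour: str = "grey"             # colour of the navigation buttons

    def select(self, option):
        """Record the radio-button choice for the question on screen."""
        self.answers[self.questions[self.index]] = option
        self.nav_colour = "grey"

    def _move(self, step):
        if self.questions[self.index] not in self.answers:
            # No option selected yet: refuse to move and turn the button red.
            self.nav_colour = "red"
            return False
        target = self.index + step
        if not 0 <= target < len(self.questions):
            return False  # e.g. 'previous' on the first question
        self.index = target
        return True

    def go_next(self):
        return self._move(1)

    def go_previous(self):
        return self._move(-1)

    @property
    def finish_visible(self):
        """The green finish button shows once every relevant question is answered."""
        return all(q in self.answers for q in self.questions)


session = InterviewSession(["age_group", "fever", "cough"])
print(session.go_next(), session.nav_colour)  # False red
```

Because every screen must be answered before moving on, the finish button can only appear once the whole relevant questionnaire has been completed.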
To assess the ethical implications of providing respondents with a probable cause of death at the end of the interview, our field study not only evaluated MIVA but also included interviews with the different stakeholders in the VA process (including respondents, fieldworkers, physicians, population scientists, data managers and community engagement managers) to find out their views.

FIELD STUDY
A preliminary evaluation was designed to document and assess the process of localizing the MIVA software, the ability to train local fieldworkers in its use, the feasibility and utility of MIVA for routine VAs, and the opportunities and risks in data storage, transmission and presentation. The preliminary evaluation of MIVA took place in the Agincourt Health and Socio-demographic Surveillance System (AHDSS), South Africa. Part of the INDEPTH international network of HDSSs (www.indepth-network.org), the Agincourt study site constitutes a sub-district of Bushbuckridge district, Mpumalanga Province, in the rural northeast of South Africa. Since 1992, annual household surveys have provided basic information on vital events, namely births, deaths and migrations. All identified deaths are followed up with a VA interview administered by a trained fieldworker using a paper questionnaire.

Figure 1. MIVA screen shots. Left: recording the age group of the deceased; the previous-question navigation button is greyed out as this is the first question. Right: the final screen, showing a typical ‘Yes/No/Don’t know’ question and the green finish button. The images show the questions in English, but for the field study they were translated into Shangaan, the local language in the Agincourt field study site in rural South Africa.

Figure 2. Two fieldworkers conducting a verbal autopsy interview using mobile phones running MIVA.
AHDSS currently covers approximately 16,000 households in 27 villages, representing a population of approximately 90,000 individuals [8]. The Agincourt site was selected as an ideal location for the field study because of the population’s and staff’s extensive experience of VA processes since 1993, which made them well placed to compare MIVA with existing systems and to provide feedback on MIVA and the WHO questions it incorporates. A further benefit of conducting the study in the AHDSS was the ability to capture the views of a range of end-users who routinely work with, or are exposed to, VA. Field observations and qualitative data captured through unstructured interviews with all stakeholder groups complemented basic quantitative data on interview duration and interview completion rates.

Over a period of seven days spread over two weeks, we worked directly with four AHDSS VA fieldworkers, all of whom had at least one year’s experience of carrying out VAs and previous experience of fieldwork on other surveys; one of them was a VA supervisor. The fieldworkers (3 female, 1 male) live in the community where they carry out VA interviews, have completed secondary school education and are aged in their 30s and 40s. An initial focus group discussion on the challenges and experiences of VA fieldwork provided an opportunity to build rapport with the fieldworkers, which was considered crucial if they were to provide honest and critical feedback. One driver (who takes fieldworkers to respondents’ homes), one data typist (who had also been a VA respondent the previous year) and the data manager also participated. MIVA was then introduced as prototype software whose design the fieldworkers could potentially influence. An initial translation of the WHO VA questions and causes of death into Shangaan, the local language, was completed by a data typist.
This translation formed the basis for training the fieldworkers on the questions they were expected to ask and for assessing their comprehension of all the questions and of the causes of death that InterVA/MIVA may diagnose. During training, fieldworkers were encouraged to verify the translations and to correct any mistranslations or ambiguities. These corrections were subsequently incorporated into MIVA, demonstrating to the fieldworkers their ability to influence its design and heightening their sense of participation.

Following this initial three-day training, the translations were loaded onto four mobile phones and the fieldworkers were trained to use MIVA on the phones; this was the first time the fieldworkers had seen the mobile devices. Initially, the fieldworkers were shown a version of the software on a laptop and given a demonstration of how the interface worked. They then carried out role-play interviews, first with the MIVA developers and then among themselves, practising asking questions one at a time and entering the answers. During this process, fieldworkers also learned how to correct data entered into MIVA and how corrections might change the skip patterns of subsequent questions. The role-play revealed further translation issues, which were rapidly corrected in the software.

From days 4 to 7, fieldworkers conducted a total of 10 real VA interviews (Figure 2) with bereaved respondents who had consented to a VA interview during the routine AHDSS processes in the preceding year. Cases for interview were selected from a list of all completed VAs in Agincourt village (the closest village to the field office), with both male and female respondents of varying ages (late teens to over 70 years) and deceased individuals ranging from babies to the elderly.
All respondents were provided with details of the current study and signed a consent form to be re-interviewed and included in the current evaluation of MIVA. For the first six interviews, two fieldworkers attended each interview: one asked the questions while both entered the answers into MIVA, and each fieldworker had at least one opportunity to ask the questions. The final four interviews were carried out by individual fieldworkers on their own.

RESULTS

Ten VA interviews were successfully completed over a period of four days. The mean interview time using MIVA was 22 minutes (s.d. 5 minutes), and interview times became shorter as the fieldworkers gained experience. The mobile phone batteries lasted all day and were charged each evening when the fieldworkers returned to the AHDSS office after conducting their VA interviews. The display accounts for most of the power consumption; this can be reduced by dimming it to 50% brightness, a level at which the high-contrast interface can still be read clearly. At this setting, MIVA could be used for several days in the field before the phone needs recharging. Each completed VA interview generates less than 2 KB of data to be stored on the device or transmitted to a database, so several hundred VA interviews can easily be stored on the mobile phone. None of the fieldworkers felt that carrying and using the mobile phones in the field posed a security risk to themselves or to the devices, although they recognized that this would not necessarily be the case in other settings, such as urban centers.

Findings from the field study are presented under headings that represent the key desirable features of a mobile VA device as described by WHO.

Simplicity

Although the text on the mobile phone screen was quite small, none of the fieldworkers reported difficulty reading it. Two of the four fieldworkers had previous experience with a touch-screen device, and all owned and were familiar with mobile phones. After a short training session, all of the fieldworkers were able to use the touch interface to select a question response and navigate through the questionnaire.

Feedback on the MIVA interface was received from the fieldworkers as well as the AHDSS data team, who suggested ‘yes’, ‘no’ and ‘don’t know’ options for each question, even though the InterVA-4 analysis algorithm uses only affirmative answers to derive the cause of death. It was felt that having three exhaustive options, one of which had to be selected before the VA interview could progress, would reassure fieldworkers who might feel uncomfortable leaving a question without a definitive response. Requiring an answer before progressing also ensures that all necessary questions are asked. The three options and the response requirement were added to MIVA before field testing. However, during field testing the fieldworkers suggested a further option, "refused to answer", as they felt that the three existing options could not satisfactorily capture a respondent’s unwillingness to disclose information. Fieldworkers also wanted a facility to suspend an interview, saving all entered data in a retrievable format, should the respondent wish to postpone completing the interview to a later date.

The data management team suggested pre-populating the MIVA system with basic background information about the deceased. Such an option may be suitable for pre-enumerated populations, such as those in health and demographic surveillance systems, where information on the basic background and demographic characteristics of the deceased is already stored in a central database. Pre-populated software could be used to allocate interviews to fieldworkers and may simplify data management, quality assurance and the coordination of field activities. However, fieldworkers felt that this would reduce the flexibility and autonomy they have over how they manage their workload. A more suitable solution might be to store the basic background information on the mobile device and to fill in the corresponding MIVA fields (for example, age and sex) when the fieldworker enters the unique identifier for a particular case. This would give the fieldworker an opportunity to verify the data in the database and to confirm that they are gathering data for the correct individual.

System Feasibility and Acceptability

Overall, all of the fieldworkers gave extremely positive feedback on the MIVA system, describing it as better than the paper-based questionnaires they typically use to carry out VAs. One feature that all of the fieldworkers liked was that the system automatically skipped irrelevant questions while making it impossible to miss relevant ones. They felt that this helped the interview flow more naturally and efficiently. The portability of the mobile phone and the digital storage of data were also considered major advantages, removing the need to transport and securely store numerous paper questionnaires. The mobile phones were considered discreet (Figure 2), and during field observations it was apparent that the phone did not intrude on the dialogue between fieldworker and respondent; it was perhaps less intrusive than multi-page A4 paper questionnaires.
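The questionnaire behaviour described above (three or four exhaustive answer options, an answer required before moving on, automatic skipping of irrelevant questions, only affirmative answers passed to the analysis, and background fields filled in from a case identifier) can be illustrated with a small model. This is a minimal sketch under stated assumptions, not MIVA's implementation, which ran in an HTML5 browser: the class names, the `ask_if` predicate used to express skip rules, the `prefill` helper and the `registry` dictionary are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class Answer(Enum):
    YES = "yes"
    NO = "no"
    DONT_KNOW = "don't know"
    REFUSED = "refused to answer"  # extra option suggested by fieldworkers


AnswerMap = Dict[str, Answer]


@dataclass
class Question:
    qid: str
    text: str
    # Hypothetical skip rule: ask this question only if the predicate holds for
    # the answers recorded so far (default: always ask). Rules should refer only
    # to earlier questions.
    ask_if: Callable[[AnswerMap], bool] = lambda answers: True


@dataclass
class Interview:
    questions: List[Question]
    answers: AnswerMap = field(default_factory=dict)

    def next_question(self) -> Optional[Question]:
        """First unanswered question whose skip rule allows it, or None when done."""
        for q in self.questions:
            if q.qid not in self.answers and q.ask_if(self.answers):
                return q
        return None

    def record(self, qid: str, answer: Answer) -> None:
        # Answers are accepted only for the current question, so the interview
        # cannot move past a question that has not been answered.
        current = self.next_question()
        if current is None or current.qid != qid:
            raise ValueError(f"{qid!r} is not the current question")
        self.answers[qid] = answer

    def indicators(self) -> List[str]:
        # Only affirmative answers are passed on to the cause-of-death analysis.
        return [qid for qid, a in self.answers.items() if a is Answer.YES]


def prefill(case_id: str, registry: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Copy background fields (here age and sex) stored on the device for a case ID."""
    if case_id not in registry:
        raise KeyError(f"unknown case identifier {case_id!r}")
    return {k: v for k, v in registry[case_id].items() if k in ("age", "sex")}
```

For example, a follow-up question such as "Did the fever last two weeks or more?" would carry `ask_if=lambda a: a.get("fever") is Answer.YES`, so answering "no" to the fever question moves the interview straight to the next relevant question. Keeping the skip rule as data attached to each question, rather than hard-coding branches, is one way the questionnaire could be localized or extended without changing the navigation logic.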
During focus-group discussions and informal qualitative interviews that we conducted with the VA fieldworkers before introducing the MIVA system, the fieldworkers described their work as “very challenging”, mainly because respondents can become very emotional during the VA interview, particularly if the deceased died suddenly or unexpectedly. According to the fieldworkers, the paper-based interviews that they carry out in AHDSS take between one and two hours to complete, and fieldworkers can conduct up to four interviews per day. The AHDSS VA tool begins with an “open history” section in which the respondent describes in their own words the circumstances and sequence of events leading to death. VA fieldworkers are trained to prompt respondents to provide as much detail as possible and to report events in the sequence in which they occurred. The fieldworker translates the open history into English and transcribes it in longhand during the interview. Fieldworkers reported that this is the most time-consuming step of the interview, and also described it as the part in which respondents can become very emotional and in which they themselves consequently feel most “uncomfortable”.

Within this context, the fieldworkers recognized the speed of interviews using MIVA as a major strength and reported that it would enable them to complete more interviews per day. Part of the reason for the short interview time, compared with the paper-based VA questionnaire currently used by AHDSS, was the absence of a free-text, open-history section in MIVA. In addition to reducing the time, fieldworkers felt that the exclusive use of closed questions kept the interview fact-based and less emotional for the respondents. None of the ten interview respondents were displeased or distracted by the use of mobile phones for data collection, perhaps reflecting the ubiquity of mobile telephony in rural South Africa.
Indeed, two of the respondents were holding their own mobile phones during the interviews and one accepted a phone call. No problems arose in asking any of the questions presented in the ten interviews. One respondent was observed to become uncomfortable and slightly upset when asked about the total costs of care incurred during the deceased’s terminal illness, having evidently suffered a heavy financial burden. Another respondent, the adult son of a deceased woman, found questions relating to his mother’s menopause strange and felt it was unrealistic to expect him to know such personal details about her. Nevertheless, neither of these respondents took offence at the questions, which reflects the skill and experience of the fieldworkers. Despite the closed-question design of all MIVA questions, respondents did not always give simple ‘yes’, ‘no’ or ‘don’t know’ responses; they often gave longer explanations and described situations that ultimately led to a clear, definitive answer. After the field study, an international health organization expressed a desire to build into MIVA a facility to capture longer narratives or make open-ended notes. Although such a facility is not necessary for deriving a cause of death from MIVA, and could be challenging to use on small devices with touch-screen keyboards, it was recognized as being particularly important to clinicians and those involved in PCVA.

Adaptability to Local Context

Translation and localization of the WHO questions and MIVA took approximately one and a half days; training fieldworkers to use MIVA on the mobile phones took approximately one day. It is important to note that the VA fieldworkers were highly trained and experienced. It was beneficial to have an imperfect, first-pass translation of the questions from English to Shangaan before fieldworker training.
This forced the fieldworkers to think carefully about the meaning of each question and increased their familiarity with the questions. Correcting the translations, which were entered directly into a spreadsheet and subsequently updated in MIVA, also enhanced their feelings of ownership over the design process and the MIVA tool. None of the fieldworkers felt that any of the WHO VA questions were too sensitive to ask, although they recognized a need to ask some questions sensitively. This demonstrates the importance of having highly skilled fieldworkers and reflects the collective experience of the fieldworkers in this study, each of whom had completed several hundred VA interviews. Fieldworkers identified some questions that were inappropriate or not applicable to their setting. For example, they felt that describing vomited coagulated blood as “coffee grounds”, or a cyanosed baby as “blue”, would not be recognized by respondents in their population. Nevertheless, the meanings of such indicators were easily explained to the fieldworkers, who felt that, with appropriate training, they would be able to convey the meaning of all the WHO VA questions to respondents.

Fieldworkers’ interpretation and understanding of the MIVA cause-of-death list was limited for the majority of causes. Where fieldworkers felt that they did understand a specific cause, their understanding was often shown to be limited or incorrect when probed for further details. This has important implications for providing direct feedback on the cause of death to respondents, and the fieldworkers themselves recognized that they would need further training before they could do this. The fieldworkers were able to understand the idea of multiple causes contributing to a death. Furthermore, they felt that the community in which they lived and worked would be able to understand this idea if it were explained carefully, although further investigation is needed to verify this.
Cost Effectiveness

Whilst a rigorous evaluation of cost-effectiveness was beyond the scope of this study, the reduced interview time observed, the potential for more interviews per day, the increasing affordability of suitable mobile phone technology and the reduced need for a data entry stage all suggest that MIVA is likely to be cost-effective. It may have a cost advantage over traditional paper-based methods, especially over the long term, as storing data digitally would cost less than storing paper-based questionnaires.

Program Relevance

In this context, program relevance relates to how well tools meet the needs of those who want cause-of-death data. However, there is a wide range of end-users and, within the field of VA research, it is becoming increasingly apparent that no single tool is likely to satisfy the needs of all of them. While program relevance typically refers to the end-users of the data, there are several other key stakeholders for whom MIVA has programmatic relevance, not least those directly involved in gathering, processing and storing the data. The perceptions of the fieldworkers have already been described: their overwhelming view is that the MIVA system could greatly facilitate their work, although they noted the possible improvements described above. Data managers, who are tasked with processing VA data and packaging it in a format useful to researchers and health program implementers, also praised MIVA for the opportunities it offers to simplify data processing and enhance data quality. This group of stakeholders identified data security as a priority issue.
They felt that MIVA data, which are currently stored only in the browser’s local memory, should also be backed up onto encrypted SD cards on the phone and, given suitable connectivity, uploaded as frequently as possible to a central database. Within the AHDSS, which is essentially an academic research site for population health and socio-demographic surveillance, the MIVA system offers timely, standardized data capture and analysis as well as opportunities for more cost-effective field procedures. The standardized nature of MIVA-derived cause-of-death results is likely to appeal to researchers wishing to explore mortality trends over time and space. Such features are also likely to address the needs of local health managers, such as those tasked with prioritizing services for their community; MIVA could enable rapid, low-cost estimation of the burden of the major causes of death. Such data could subsequently be passed upwards to inform national and global health authorities, such as the WHO. Those involved in health promotion or public health interventions may be more attracted by the potential for direct feedback of the cause of death to VA respondents. Similarly, those involved in community engagement felt that the timeliness of results from MIVA was a significant advantage over paper-based methods and that direct feedback of the cause of death to respondents was a positive development. In this way, the MIVA approach has blurred the boundaries between health measurement and health promotion, raising a number of operational and ethical issues that are explored further in the Discussion section. These issues relate directly to the final stakeholder group, the VA respondents, who are potentially the group most affected by the opportunities MIVA offers.

DISCUSSION

We begin by discussing two operational issues relating to MIVA and then consider the ethical issues it raises.
First, because MIVA uses only closed questions and has no open history section, fieldworkers were able to complete VA interviews using the mobile system far more quickly than with the paper-based questionnaires used in the AHDSS (around 20 minutes for a MIVA interview compared with one to two hours using the paper questionnaire). As reported above, fieldworkers liked the fact that respondents did not become emotional when answering the closed MIVA questions. This raises the question of how useful an open history, and open questions more generally, are for VAs. Do open responses provide a reliable source of data? We interviewed one of the designers of the AHDSS questionnaire about their motivation for including an open history section; she argued that it not only provided data that could help the process of PCVA, but was also emotionally valuable for respondents, as it gave them an opportunity to talk about the death of their relative. Although an open history is redundant for InterVA analysis, WHO is keen for some optional open text fields to be added to MIVA, which could be used to record other observations at the end of a VA interview. Although it is feasible to add these to the interface, it would be difficult for a fieldworker to enter large amounts of text quickly using a small touch-screen keyboard. For example, it would probably not be possible to listen to an open history in Shangaan, translate it into English and record it in real time, as is currently done on the paper-based VA questionnaires in the AHDSS. One possible compromise is to write down open histories, responses to other open questions and extra observations in longhand on paper, and to link these records to the digital data generated by MIVA using a unique interview ID. Another possible approach is to use audio recordings, but this would require respondents to wear lapel microphones if the recording quality is to be sufficiently high.
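The compromise of keeping longhand notes on paper and linking them to MIVA records through a unique interview ID amounts to a keyed join, with some checking for IDs that do not match. The sketch below is illustrative only; the record classes, field names and the `link_notes` function are hypothetical and assume the paper notes have already been transcribed.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class MivaRecord:
    interview_id: str
    indicators: List[str]


@dataclass
class PaperNote:
    interview_id: str  # the same ID, written by hand on the paper sheet
    text: str          # open history or observations, transcribed later


def link_notes(
    records: List[MivaRecord], notes: List[PaperNote]
) -> Tuple[Dict[str, Optional[str]], List[str]]:
    """Attach transcribed paper notes to digital interview records by ID.

    Returns a mapping from every record ID to its note text (None if no note
    was written) and a sorted list of note IDs that match no digital record,
    which may indicate a mistyped ID that needs checking.
    """
    notes_by_id: Dict[str, str] = {}
    for note in notes:
        if note.interview_id in notes_by_id:
            raise ValueError(f"two notes share the ID {note.interview_id!r}")
        notes_by_id[note.interview_id] = note.text

    record_ids = {r.interview_id for r in records}
    linked = {r.interview_id: notes_by_id.get(r.interview_id) for r in records}
    orphans = sorted(set(notes_by_id) - record_ids)
    return linked, orphans
```

Reporting unmatched notes, rather than silently dropping them, reflects the main risk of this compromise: a handwritten ID can be copied incorrectly, and the paper record then loses its connection to the interview it describes.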
Furthermore, automatically labelling and storing audio recordings could not be done from within an HTML5 browser and would require dedicated application software.

MIVA can potentially reduce the cost of carrying out VAs by decreasing the time taken by three stages of the process: conducting the interview; analysing the responses to generate the probable cause of death; and entering the interview data into a database. A second operational issue, raised by some AHDSS programme managers, was that MIVA might offer a further saving by removing the need for more highly skilled, and more highly paid, VA fieldworkers to carry out interviews, since only closed questions need to be asked and the system automatically determines which ones to ask. However, we do not think that MIVA replaces trained VA fieldworkers; rather, we argue that the mobile system is effective only because of the trained interviewer using it. For example, interviewers have to interpret respondents’ answers when these do not follow the ‘yes’, ‘no’ or ‘don’t know’ format, and it is crucial that they are sensitive to the emotional needs of respondents.

A final and important issue raised by the MIVA field study is that, unlike the traditional one-way VA interview, it provides the opportunity to feed information directly back to interview respondents. This blurs the boundary between health surveillance and opportunities for health promotion, and presents pressing ethical challenges that need to be debated by the VA community. We explored the ethics of providing respondents with a probable cause of death by interviewing different stakeholders in the VA process to find out their opinions on this issue, which has not been considered in previous ethical discussions of VA [5].
All of the relatives of the deceased whom we asked (9 of the 10 respondents interviewed) told us that they would like to know the probable cause of death of their relative after the VA interview. VA fieldworkers reported that respondents often ask them during interviews whether they can tell them the cause of death of their relative. All four fieldworkers said they would be prepared to give a diagnosis if the respondents wanted to know. They thought that people would believe a diagnosis from the mobile phone because they regard phones as a type of computer and therefore trust them. Fieldworkers acknowledged that it could be difficult to inform respondents of the probable cause of death, particularly if it involved a stigmatized disease such as HIV. They recognized that they would need extra training so that they could answer respondents’ questions about causes of death and related health issues. For example, when translating the WHO VA questions from English into Shangaan, we found that dementia and chronic obstructive pulmonary disease (COPD) were new medical concepts for them. Some of the fieldworkers also showed a limited understanding of causes of death; for example, one thought that congenital malformation in a baby was the consequence of a sexually transmitted infection (STI). Although this can be the case, it is not necessarily so, and this example illustrates how limited medical knowledge could result in misleading and unnecessarily traumatic feedback being given to respondents. Fieldworkers also thought that people in their community could deal with multi-causality, that is, understand that MIVA might generate more than one probable cause of death, and that categories such as high, medium and low are a better way of conveying probability than numbers.
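The fieldworkers’ preference for verbal categories over numbers suggests a simple presentation layer on top of the analysis output. The sketch below assumes the analysis returns a likelihood between 0 and 1 for each candidate cause; the cut-offs of 0.5 and 0.2, the function names and the choice to show at most three causes are hypothetical and are not taken from InterVA-4 or MIVA.

```python
from typing import Dict, List, Tuple

# Hypothetical cut-offs for illustration only; the paper does not define any.
BANDS: List[Tuple[float, str]] = [(0.5, "high"), (0.2, "medium"), (0.0, "low")]


def band(probability: float) -> str:
    """Translate a probability in [0, 1] into a verbal likelihood band."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    for cutoff, label in BANDS:
        if probability >= cutoff:
            return label
    raise AssertionError("unreachable: the lowest cut-off is 0")


def describe(causes: Dict[str, float], top_n: int = 3) -> List[Tuple[str, str]]:
    """List up to top_n causes, most likely first, each with its verbal band."""
    ranked = sorted(causes.items(), key=lambda kv: kv[1], reverse=True)
    return [(cause, band(p)) for cause, p in ranked[:top_n]]
```

Returning several ranked causes, each with its own band, is one way of expressing the multi-causality the fieldworkers felt their community could understand. Where the cut-offs should lie, and whether individual-level feedback is appropriate at all, are exactly the questions the stakeholders disagreed on.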
In contrast to VA respondents and fieldworkers, the majority of the population research scientists we interviewed at AHDSS were of the opinion that respondents should not be told the probable cause of death. They recognize the importance of the issue, and it concerns them for two main reasons. First, they worry that InterVA tells us about the pattern of probable causes of death in a population but cannot reliably provide this information for an individual case. We call this the ‘scale’ issue: can InterVA provide a reliable probable cause of death for an individual? Second, some population scientists are concerned that providing respondents with feedback on the probable cause of death moves their work “out of the comfort zone of surveillance systems” and into the complex realm of public health interventions. We refer to this as the ‘intervention’ issue. For example, one population scientist questioned the extent to which researchers are responsible for the health of the families they survey. He gave the example of the probable cause of death being an infectious disease such as TB, and asked whether researchers would then be responsible for finding and informing the deceased’s recent close contacts. A further concern he raised was who would finance this activity.

Another stakeholder we interviewed about giving respondents information on the probable cause of death was a medical practitioner who carried out PCVA. She was concerned that the confidentiality rights of the deceased could be compromised, and explained that doctors have responsibilities to patients even after they have died, and that they would withhold some medical information from a deceased person’s family if it was not relevant to the cause of death. One example she gave was not informing a husband that his deceased wife had undergone an abortion if this had not led to her death. We label this the ‘confidentiality’ issue.
A final stakeholder we interviewed was a WHO VA expert, who acknowledged that there is no simple answer to the question of whether respondents should be informed about the deceased’s probable cause of death and that it is an important issue that needs careful consideration. In his opinion, the information should be given only to the immediate family, and it should be conveyed by a medically trained person, for example a health visitor or nurse, rather than by a fieldworker at the end of a VA interview. However, this again raises the question of how this would be funded.

In order to make progress on the ethics of providing respondents with a probable cause of death, a useful first step would be for the VA community to debate the ‘scale’ issue. Our stakeholder interviews revealed three differing opinions on this issue. The first is that InterVA is a population tool and is not reliable for individual cases. If this is correct, the ethical debate is resolved, because MIVA could not provide a reliable probable cause of death. The second viewpoint is that a validation process needs to be carried out, for example by comparing the results of PCVA and InterVA case by case and measuring the extent to which they agree. If there is sufficient agreement, it is argued, then InterVA is validated and the ethical challenge remains. The third opinion was that InterVA could be used for individual cases, but that when doing VA, whether by PCVA or InterVA, “there is an inherent uncertainty that you can’t get away from”. This population scientist referred us to published studies comparing hospital doctors’ diagnoses of the cause of death with the cause determined by pathologists, in which there was disagreement in 30% of cases [11]. He argued that comparing InterVA with PCVA is therefore not a good way of validating the analysis software. Given these diverging opinions, a key practical challenge for the VA community is determining the validity and scope of InterVA.
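The case-by-case comparison proposed in the second viewpoint could be summarized with standard agreement statistics. The paper does not name a metric; the sketch below uses raw percent agreement and Cohen’s kappa (which corrects for agreement expected by chance) as a swapped-in, commonly used choice, and assumes each case has a single cause label from each method. As the third viewpoint warns, a high kappa would show only that the two methods agree, not that either is correct, since PCVA itself carries uncertainty.

```python
from collections import Counter
from typing import Sequence


def percent_agreement(pcva: Sequence[str], interva: Sequence[str]) -> float:
    """Fraction of cases where both methods assign the same cause."""
    if len(pcva) != len(interva) or not pcva:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(a == b for a, b in zip(pcva, interva)) / len(pcva)


def cohens_kappa(pcva: Sequence[str], interva: Sequence[str]) -> float:
    """Agreement corrected for the agreement expected by chance alone."""
    p_observed = percent_agreement(pcva, interva)
    n = len(pcva)
    counts_a, counts_b = Counter(pcva), Counter(interva)
    # Chance agreement: both methods independently pick the same cause.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both methods used one and the same cause for every case
    return (p_observed - p_expected) / (1.0 - p_expected)
```

With four hypothetical cases labelled `["malaria", "malaria", "TB", "pneumonia"]` by PCVA and `["malaria", "TB", "TB", "pneumonia"]` by InterVA, raw agreement is 0.75 but kappa is about 0.64, because some of that agreement would be expected by chance given how often each cause is assigned.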
If InterVA can provide reliable probable causes of death for individual cases, then two key ethical challenges are to resolve the ‘intervention’ and ‘confidentiality’ issues. As stated earlier, some VA stakeholders, for example many population scientists, see their role as gathering demographic data rather than engaging in public health interventions, and therefore do not think that they are responsible for giving respondents probable cause of death information after a VA interview. However, the distinction between observation and intervention is not always clear-cut. For example, one of the VA fieldworkers we worked with explained that he had been trained to tell respondents that participating in VAs is valuable for their community because their purpose is “To help the health system and those who are still living”. In this case, it might be argued that, regardless of the cost and irrespective of whether scientists want the responsibility, they do have an ethical obligation to provide the community they are observing with information that improves health systems. Whether this responsibility is fulfilled by providing feedback in the form of aggregated cause-of-death statistics at annual community meetings (as is currently done in AHDSS [8]) or by providing relatives with an individual’s probable cause of death is a key ethical issue to be debated by the VA community. The existing medical ethics literature may provide insights into how the ‘confidentiality’ issue could be resolved.

CONCLUSION

The field study of MIVA in rural South Africa has demonstrated that this system meets practical WHO requirements for a mobile verbal autopsy tool that can be used in low-income settings. It also raised two operational issues: the value of open questions for VAs, and the importance of trained fieldworkers for using MIVA effectively. By incorporating VA data capture and InterVA functionality on a mobile phone, MIVA can provide a probable cause of death at the end of an interview. This raises pressing ethical issues for the VA community, with different stakeholders holding different views on whether respondents should be informed and, if so, by whom. Some population scientists question the validity of InterVA for analysing an individual’s probable cause of death (the ‘scale’ issue); furthermore, this stakeholder group sees its role as doing research rather than running public health projects (the ‘intervention’ issue). Doctors are concerned that the rights of the deceased should not be compromised (the ‘confidentiality’ issue). Respondents and fieldworkers were in favour of reporting the probable cause of death to relatives of the deceased, as they believe this information will benefit individuals and their wider community. They view this issue as being as much a matter of saving lives as one of recording deaths.

ACKNOWLEDGEMENTS

This work was supported by the UCL Institute for Global Health, the UCL computer science strategic research fund and a Wellcome Trust Strategic Award (085417ma /Z/08/Z). The Wellcome Trust, UK, has funded the Agincourt verbal autopsies (058893/Z/99/A; 069683/Z/02/Z; 085477/Z/08/Z).

REFERENCES

1. Anokwa, Y. et al. Stories from the field: Reflections on HCI4D experiences. Information Technologies and International Development 5(4) (2009), 101-115.
2. Braa, J. et al. Participatory health information systems development in Cuba: The challenge of addressing multiple levels in a centralized setting. Proc. PD (2004), 53-64.
3. Byass, P. Who needs cause-of-death data? PLoS Medicine 4(11) (2007), e333. doi:10.1371/journal.pmed.0040333
4. Byass, P. et al. Direct data capture using hand-held computers in rural Burkina Faso: Experiences, benefits and lessons learnt. Tropical Medicine and International Health 13(1) (2008), 25-30.
5. Chandramohan, D. et al. Ethical issues in the application of verbal autopsies in mortality surveillance systems. Tropical Medicine and International Health 10(11) (2005), 1087-1089.
6. DeRenzi, B. et al. E-IMCI: Improving paediatric health care in low-income countries. Proc. CHI (2008), 753-762.
7. Grisedale, S., Graves, M. and Grünsteidl, A. Designing a graphical interface for healthcare workers in rural India. Proc. CHI (1997), 471-478.
8. Kahn, K., Collinson, M. A., Gómez-Olivé, F. X. et al. Profile: Agincourt Health and Socio-demographic Surveillance System. International Journal of Epidemiology 41 (2012), 988-1001.
9. Ho, M., Smyth, T. N., Kam, M. and Dearden, A. M. Human-Computer Interaction for development: The past, present, and future. Information Technologies and International Development 5(4) (2009), 1-18.
10. Ho, M., Owusu, E. K. and Aoki, P. M. Claim Mobile: Engaging conflicting stakeholder requirements in healthcare in Uganda. Proc. ICTD (2009), 35-45.
11. Lahti, R. A. and Penttilä, A. Cause-of-death query in validation of death certification by expert panel: Effects on mortality statistics in Finland. Forensic Science International 131 (2003), 113-124.
12. Luk, R., Ho, M. and Aoki, P. M. Asynchronous remote medical consultation for Ghana. Proc. CHI (2008), 743-752.
13. Mathers, C. D. et al. Counting the dead and what they died from: An assessment of the global status of cause of death data. Bulletin of the World Health Organization 83 (2005), 171-177.
14. Medhi, I., Jain, M., Tewari, A. et al. Combating rural child malnutrition through inexpensive mobile phones. Proc. NordiCHI (2012).
15. Soleman, N. et al. Verbal autopsy: Current practices and challenges. Bulletin of the World Health Organization 84 (2006), 239-245.
16. Verbal Autopsy Standards: The 2012 WHO Verbal Autopsy Standard (2012).