A Review of Supervised Learning Based Classification for Text to Speech System


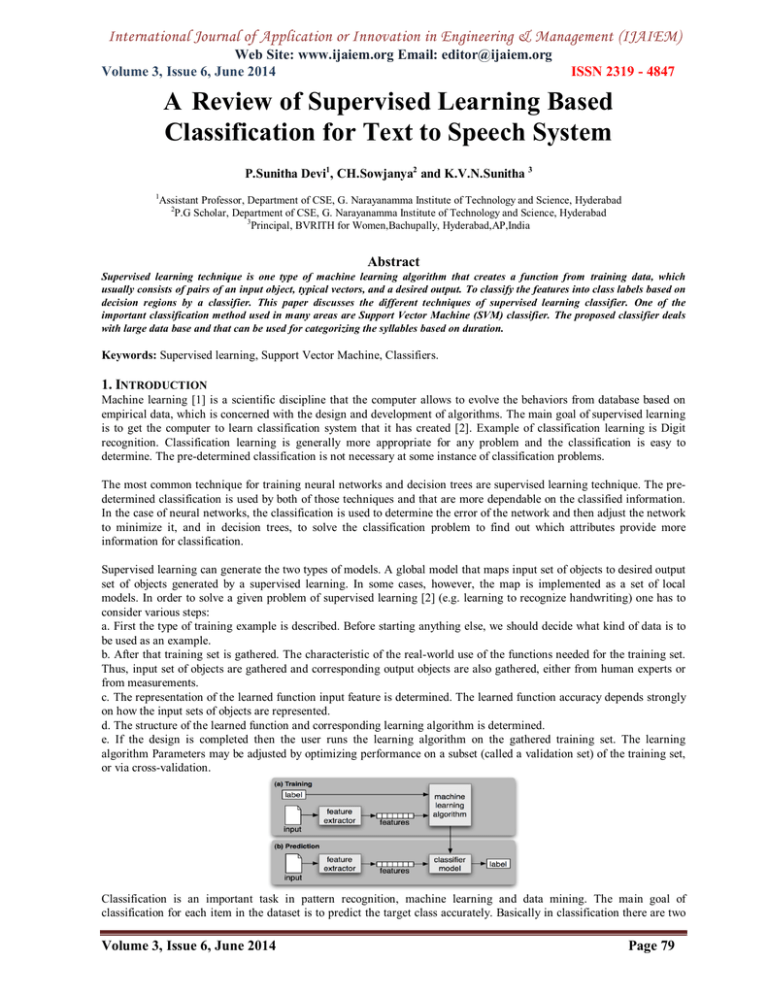

International Journal of Application or Innovation in Engineering & Management (IJAIEM)
Web Site: www.ijaiem.org Email: editor@ijaiem.org
Volume 3, Issue 6, June 2014, ISSN 2319 - 4847

P. Sunitha Devi (1), CH. Sowjanya (2) and K.V.N. Sunitha (3)

(1) Assistant Professor, Department of CSE, G. Narayanamma Institute of Technology and Science, Hyderabad
(2) P.G. Scholar, Department of CSE, G. Narayanamma Institute of Technology and Science, Hyderabad
(3) Principal, BVRITH for Women, Bachupally, Hyderabad, AP, India

Abstract

Supervised learning is a machine learning technique that infers a function from training data, which usually consist of pairs of an input object (typically a vector) and a desired output. A classifier assigns features to class labels based on decision regions. This paper discusses the different techniques of supervised learning classification. One of the most important classification methods, used in many areas, is the Support Vector Machine (SVM) classifier. The proposed classifier deals with large databases and can be used for categorizing syllables based on duration.

Keywords: Supervised learning, Support Vector Machine, Classifiers.

1. INTRODUCTION

Machine learning [1] is a scientific discipline concerned with the design and development of algorithms that allow computers to evolve behaviors based on empirical data, such as data held in databases. The main goal of supervised learning is to get the computer to learn a classification system that has been defined in advance [2]. Digit recognition is an example of classification learning. Classification learning is generally appropriate for any problem in which the classification is easy to determine. In some instances of a classification problem, a predetermined classification is not necessary. Supervised learning is the most common technique for training neural networks and decision trees.
Both of these techniques depend heavily on the information given by the predetermined classifications. In the case of neural networks, the classification is used to determine the error of the network and then adjust the network to minimize it. In decision trees, the classifications are used to determine which attributes provide the most information for solving the classification problem.

Supervised learning can generate two types of models. Most commonly, it generates a global model that maps input objects to desired outputs. In some cases, however, the map is implemented as a set of local models. In order to solve a given problem of supervised learning [2] (e.g. learning to recognize handwriting), one has to consider the following steps:

a. Determine the type of training example. Before doing anything else, we should decide what kind of data is to be used as an example.
b. Gather a training set. The training set needs to be characteristic of the real-world use of the function. Thus, a set of input objects is gathered, and the corresponding outputs are also gathered, either from human experts or from measurements.
c. Determine the input feature representation of the learned function. The accuracy of the learned function depends strongly on how the input objects are represented.
d. Determine the structure of the learned function and the corresponding learning algorithm.
e. Once the design is complete, run the learning algorithm on the gathered training set. The parameters of the learning algorithm may be adjusted by optimizing performance on a subset of the training set (called a validation set), or via cross-validation.

Classification is an important task in pattern recognition, machine learning and data mining. The main goal of classification is to predict the target class accurately for each item in the data set.
Basically, classification has two phases: a training phase and a validation phase. In the training phase, a training set is used to construct a classifier. Each record in the training set has several attributes, one of which is the class label attribute, which indicates the class to which that record belongs. In the validation phase, a test set is used to test the correctness of the classifier. The classifier, once built, tested and certified, can be used to predict the class label of new records that do not yet have a class label attribute value.

The support vector machine (SVM) is a highly desirable classification method, well known for its excellent classification accuracy, generalization and compact model. It seeks the hyperplane that represents the largest separation (or margin) between the two classes [3]. It minimizes the empirical classification error and maximizes the geometric margin. However, this kind of maximum-margin hyperplane may not exist because of class overlap or mislabeled examples. In the linearly separable case the hyperplane is easy to compute; in the general case it is necessary to use a soft-margin SVM by introducing slack variables. In this way it is possible to find a hyperplane that splits the examples as cleanly as possible. In order to find a separating hyperplane, it is necessary to solve a Quadratic Programming Problem (QPP). This is computationally expensive: it has $O(n^3)$ time and $O(n^2)$ space complexity for n data points [4]. The standard SVM is therefore infeasible for large data sets [5].
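To give a feel for the quadratic space cost mentioned above, the following minimal sketch estimates the memory needed just to hold a dense n x n kernel matrix. It assumes 8-byte floating-point entries; the function name `kernel_matrix_bytes` is hypothetical and is used only for illustration.

```python
def kernel_matrix_bytes(n: int, bytes_per_entry: int = 8) -> int:
    """Memory needed to store a dense n x n kernel (Gram) matrix.

    Assumes every entry is stored explicitly (no sparsity, no caching tricks).
    """
    return n * n * bytes_per_entry


if __name__ == "__main__":
    # Growing n by a factor of 10 grows the storage by a factor of 100.
    for n in (1_000, 10_000, 100_000):
        gib = kernel_matrix_bytes(n) / 2**30
        print(f"n = {n:>7,d}: {gib:,.2f} GiB")
```

Under these assumptions, 100,000 training points already require about 80 GB for the kernel matrix alone, which is one reason data selection and decomposition methods are attractive for large data sets.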
Many researchers have tried to find methods for applying SVM classification to large data sets. These methods can be divided into four types: a) reducing the training data set (data selection) [6], b) using geometric properties of SVM [1], c) modifying SVM classifiers [7], d) decomposition [5].

Data selection methods choose the objects that are likely to be support vectors, and only these data are used for training the SVM classifier. Generally, the support vectors are a small subset of the whole data [11]. Clustering is another effective tool for reducing the data set size, for example hierarchical clustering [8] and parallel clustering [9]. The geometric properties of SVM can also be used to reduce the training data. In the separable case, finding the maximum-margin hyperplane is equivalent to finding the nearest points of the convex hulls of the two classes [10]. The random sampling method [6] is simple and commonly used for large data sets. However, it needs to be applied several times, and the results obtained are not repeatable. The goals of this type of method are: a) fast detection of the support vectors that define the optimal separating hyperplane, b) removal of the data that cannot be support vectors, c) obtaining similar accuracy using the reduced data set.

The Decision Tree (DT) classifier has been used as a preprocessing step for SVM in recent years. Generally, the classification accuracy of SVM is better than that of DT. Different techniques have been discussed for classification, such as decision trees, naive Bayesian classifiers, neural networks, K-nearest neighbours, radial basis function networks, Gaussian mixture models and support vector machines.
Among these models, support vector machine models are widely used for classification.

2. CLASSIFICATION METHODS:

2.1 Decision Tree:

Decision tree learning is a method commonly used in data mining, mainly for classification. It uses the "divide and conquer" strategy and is an example of a supervised machine learning algorithm [12]. A decision tree classifier is a hierarchical structure where at each level a test is applied to one or more attribute values that may have one of two outcomes. The outcome may be a leaf, which allocates a class, or a decision node, which specifies a further test on the attribute values and forms a branch or sub-tree of the tree. Decision trees produce models that are comprehensible to human experts. Among the advantages of decision trees over other classification methods are robustness to noise, the ability to deal with redundant attributes, generalization ability via a post-pruning strategy, and a low computational cost; decision trees can also be trained using data sets with nominal attributes.

When a data set X is large, the computational burden is heavy. We first use a decision tree to separate X into several subsets. A decision tree is a classifier whose model resembles a tree structure T; this structure is built from a labeled data set X. A decision tree is composed of nodes and of edges that connect the nodes. There are two types of nodes: internal and terminal. An internal node has branches that connect it to other nodes, called its sons. A terminal node does not have any sons. A terminal node is called a leaf L of T.
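The node structure described above can be sketched as a small data type. This is only an illustration under stated assumptions: each internal node applies a binary threshold test to a single numeric attribute, and the names `Node`, `classify` and the example labels are hypothetical, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    # Internal node: tests attribute `feature` against `threshold`
    # (an assumed binary test, chosen here for simplicity).
    feature: Optional[int] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None    # son followed when the test holds
    right: Optional["Node"] = None   # son followed when the test fails
    # Terminal node (leaf): carries a class label and has no sons.
    label: Optional[str] = None

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def classify(node: Node, x: Sequence[float]) -> str:
    """Walk from the root to a leaf and return the class stored at that leaf."""
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label


# A hand-built tree, for illustration only (no training is performed here).
tree = Node(
    feature=0, threshold=0.5,
    left=Node(label="short"),
    right=Node(
        feature=1, threshold=2.0,
        left=Node(label="medium"),
        right=Node(label="long"),
    ),
)
```

With this tree, `classify(tree, [0.9, 1.0])` follows the right branch at the root and the left branch at the second test, so it returns the label stored at that leaf.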
In order to explain the general training process of decision trees on numeric attributes, let us represent the training data set as in (1).

$$X = \{(x_i, y_i)\}_{i=1}^{M} \qquad (1)$$

such that $x_i \in R^d$ and $y_i \in \{1, \dots, L\}$, with:

X: training set
$x_i$: a vector that represents an example or object in X
$y_i$: the category or class of $x_i$
M: number of examples in the training set
d: number of features
L: number of classes
R: relation

The general methodology to build a decision tree is as follows: beginning from the root node (which contains X), split the data into two or more smaller disjoint subsets. Each subset should contain all or most of its elements with the same class, although this is not strictly necessary. The input data are thus partitioned into pure regions with respect to a certain measurement of impurity. The measurements are defined in terms of the distribution of instances in the region being split. The impurity measurements followed in this paper are entropy, misclassification and Gini (2).

$$\mathrm{Entropy}(X) = -\sum_{t=1}^{CL} p_t \log_2 p_t, \qquad \mathrm{Misclassification}(X) = 1 - \max_{t} p_t, \qquad \mathrm{Gini}(X) = 1 - \sum_{t=1}^{CL} p_t^2 \qquad (2)$$

where CL is the number of classes and $p_t$ is the probability of an example being of class t, which is the number of examples in class t divided by the size of the set X, i.e., $p_t = |X_t| / |X|$. A test comparing an attribute $x_i$ with a value $b$ is used to create partitions in the input space, where $x_i$ is an attribute of the training set X and $b$ is a value of the same type as attribute i.

2.2 Naive Bayesian Classification:

Consider a relation R(k, A1, ..., An, C), where k is the key of the relation R, A1, ..., An are different attributes and C is the class label attribute. Given an unclassified data sample (which has values for all the attributes except the class label C), a classification technique will predict the class label C for the given sample and thus determine its class. The naive Bayesian classification is as follows [13]: Let X be a data sample whose class label is unknown.
Let H be the hypothesis that X belongs to class C. P(H|X) is the posterior probability of H given X, and P(H) is the prior probability of H. Then, according to Bayes' theorem,

$$P(H|X) = \frac{P(X|H)\,P(H)}{P(X)}.$$

The naive Bayesian classification uses this theorem in the following way. Each data sample is represented by a feature vector $X = (X_1, \dots, X_n)$ depicting the measurements made on the sample from $A_1, \dots, A_n$. Given classes $C_1, \dots, C_m$, the naive Bayesian classifier will predict the class label $C_j$ that has the highest posterior probability conditioned on X, i.e. $P(C_j|X) > P(C_i|X)$ for all $i \neq j$. P(X) is constant for all the classes, so $P(X|C_j)\,P(C_j)$ is to be maximized. The naive assumption of "class conditional independence of values" (given the class, the value of an attribute is independent of the value of any other attribute in the feature space) is made to reduce the computational complexity. Thus,

$$P(X|C_i) = \prod_{k=1}^{n} P(X_k|C_i).$$

For categorical attributes, $P(X_k|C_i) = S_{iX_k} / S_i$, where $S_i$ is the number of training samples in class $C_i$ and $S_{iX_k}$ is the number of training samples of class $C_i$ whose attribute $A_k$ has the value $X_k$.

Bayesian classification is simple in nature due to its strong conditional independence assumption, which allows each attribute to contribute independently to the classification process. This makes the classifier computationally efficient and attractive for various applications.

2.3 K-Nearest Neighbor Classification (KNN):

K-nearest neighbour is a method for classifying objects based on the closest training examples in the feature space. An object is classified by a majority vote of its neighbours, with the object being assigned to the class most common amongst its K nearest neighbours (K is a positive integer, typically small). If K = 1, then the object is simply assigned to the class of its nearest neighbour [14][15].
The classification process is as follows:

a. Determine a suitable distance metric, for example the Manhattan distance, the Euclidean distance or the Minkowski distance.
b. Find the K nearest neighbours using the selected distance metric.
c. Find the plurality class of the K nearest neighbours by voting on their class labels.
d. Assign that class to the sample to be classified.

Some of the distance metrics between points X and Y are summarized as follows:

$$d_{\mathrm{Minkowski}}(X, Y) = \Big(\sum_{i=1}^{m} |X_i - Y_i|^r\Big)^{1/r}, \qquad d_{\mathrm{Euclidean}}(X, Y) = \sqrt{\sum_{i=1}^{m} (X_i - Y_i)^2}, \qquad d_{\mathrm{Manhattan}}(X, Y) = \sum_{i=1}^{m} |X_i - Y_i|$$

Here $X = (X_1, X_2, \dots, X_m)$ and $Y = (Y_1, Y_2, \dots, Y_m)$ are the feature vectors of X and Y respectively, and r in the Minkowski distance is the order to which the distance is calculated. The Euclidean distance is simply the Minkowski distance of order 2, and the Manhattan distance is the Minkowski distance of order 1.

The training examples are vectors in a multidimensional feature space, each with a class label. The training phase of the algorithm consists only of storing the feature vectors and class labels of the training samples. In the classification phase, K is a user-defined constant, and an unlabelled vector (a query or test point) is classified by assigning the label that is most frequent among the K training samples nearest to that query point. Usually the Euclidean distance is used as the distance metric; however, this is only applicable to continuous variables. In cases such as text classification, another metric such as the overlap metric (or Hamming distance) can be used.

Assumptions in KNN: KNN assumes that the data lie in a feature space, that is, the data points are located in a metric space. The data can be scalars or multidimensional vectors. Since the points are located in a feature space, they have a notion of distance.
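The classification process and the Minkowski family of distances described above can be sketched in a few lines of plain Python. This is a minimal illustration, not an optimized implementation: the function names `minkowski` and `knn_classify` and the toy training data are assumptions made for the example.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, class label)


def minkowski(x: Sequence[float], y: Sequence[float], r: float = 2.0) -> float:
    """Minkowski distance of order r (r=1: Manhattan, r=2: Euclidean)."""
    return sum(abs(a - b) ** r for a, b in zip(x, y)) ** (1.0 / r)


def knn_classify(train: List[Sample], query: Sequence[float],
                 k: int = 3, r: float = 2.0) -> str:
    """Label `query` by a plurality vote among its k nearest training samples."""
    # "Training" is just storing the samples; all work happens at query time.
    neighbours = sorted(train, key=lambda s: minkowski(s[0], query, r))[:k]
    votes = Counter(label for _, label in neighbours)
    # On a tied vote, Counter returns the label it encountered first.
    return votes.most_common(1)[0][0]


# Toy data, for illustration only.
train = [
    ((1.0, 1.0), "C1"), ((1.2, 0.8), "C1"), ((0.9, 1.1), "C1"),
    ((3.0, 3.0), "C2"), ((3.2, 2.9), "C2"),
]
```

Calling `knn_classify(train, (1.1, 1.0), k=3)` finds three neighbours that all belong to C1, while `knn_classify(train, (3.1, 3.0), k=3)` finds two C2 neighbours and one C1 neighbour and therefore votes for C2. Sorting the whole training set for each query keeps the sketch short but costs O(n log n) per query, which reflects the slow classification step discussed later in the paper.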
In this technique the number K plays a key role. It decides how many nearest neighbours (where a neighbour is defined by the distance metric being used) influence the classification. K is usually taken as an odd number when there are two classes, so that the vote cannot be tied. The behavior of the algorithm as a function of K is as follows.

Case 1: K = 1, or Nearest Neighbour rule: This is the simplest scenario. Let X be the point to be labeled. Find the point Y that is closest to X. The Nearest Neighbour rule simply assigns the label of Y to X.

Case 2: K > 1, or K-Nearest Neighbour rule: This is a straightforward extension of the 1-NN case: find the K nearest neighbours and perform a majority vote. Suppose K = 7 and the neighbours comprise 4 instances of class C1 and 3 instances of class C2. In this case, KNN labels the new point as C1, as it forms the majority. A similar argument applies when there are multiple classes. Increasing K tends to reduce the effect of noisy samples, but it also blurs the boundaries between classes and increases the computation cost, so accuracy does not necessarily improve with larger K.

2.4 The RBF network classifier:

The radial basis function (RBF) network is a neural network with one hidden layer and several forms of radial basis activation functions [16]. The most common one is the Gaussian function, defined by

$$f_j(x) = \exp\left(-\frac{\|x - \mu_j\|^2}{2\sigma_j^2}\right)$$

where $\sigma_j$ is the width parameter, $\mu_j$ is the vector determining the center of basis function $f_j$, and x is the d-dimensional input vector. In an RBF network, a neuron of the hidden layer is activated whenever the input vector is close enough to its center vector. There are several techniques and heuristics for optimizing the parameters of the basis functions and determining the number of hidden neurons needed to achieve the best classification [17].
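As an illustration of how a hidden neuron responds to its input, the sketch below evaluates a single Gaussian basis function. It assumes the usual Gaussian form exp(-||x - c||^2 / (2 s^2)) with center c and width s; the function name `gaussian_rbf` and its parameter names are hypothetical.

```python
import math
from typing import Sequence


def gaussian_rbf(x: Sequence[float], center: Sequence[float], width: float) -> float:
    """Activation of one Gaussian RBF hidden neuron.

    Returns 1.0 when x coincides with the center and decays towards 0
    as x moves away; `width` controls how quickly the activation decays.
    """
    sq_dist = sum((a - c) ** 2 for a, c in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))
```

An input equal to the center gives the maximum activation of 1, and inputs several widths away from the center give activations close to 0, which matches the statement that a hidden neuron fires only when the input vector is close enough to its center vector.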
The work in [16] discusses two training algorithms: forward selection (FS) and the Gaussian mixture model (GMM). The first one allocates one neuron to each group of faces of each individual; if different faces of the same individual are not close to each other, more than one neuron will be necessary. The second training method regards the basis functions as the components of a mixture density model, whose parameters $\mu$ and $\sigma$ are to be optimized by maximum likelihood [8].

2.5 Neural Network classifier:

A neural network consists of a set of neurons distributed across different layers. The input layer has one neuron for each value of past observation and one neuron for the predicted value. The other layers are the output layer and, between the input and output layers, the hidden layers. In the training phase, inputs and weights are fed into the neural network and the desired output is drawn at a learning rate of p. For time series analysis, a neural network captures temporal patterns in the data in the form of past memory associated with the model, and then uses this to predict future behavior. Neural networks are mainly applied to modeling nonlinear relationships among data without any prior assumption about the relationship.

2.6 Gaussian Mixture Models:

The Gaussian Mixture Model (GMM) classifier can be used to classify a wide variety of N-dimensional signals. It is a basic but useful supervised learning classification algorithm [19]. Mixture models are used to make statistical inferences about the properties of sub-populations given only observations on the pooled population. A Gaussian mixture model can be Bayesian or non-Bayesian. A variety of estimation approaches exist, such as maximum likelihood estimation (MLE) via expectation maximization (EM), or maximum a posteriori (MAP) estimation.
In the expectation step, a partial membership of each data point in each constituent distribution is computed by calculating expectation values for the membership variables of that data point. In the maximization step, the mixing coefficients and the component model parameters are recomputed from these memberships.

2.7 Support Vector Machine:

SVM classification can be grouped into two types: linearly separable and linearly inseparable cases. The nonlinear separation can be transformed into the linear case via a kernel mapping. In the linearly inseparable case, the convex hulls intersect. Convex hull based methods do not work well for SVM, because support vectors (SVs) are generally located on the exterior boundaries of the data distribution. On the other hand, the vertices of the convex-concave hull are the border points, and they are likely to be support vectors [20].

The Support Vector Machine (SVM) is based on the statistical learning theory developed by Vapnik. It classifies data by determining a set of support vectors, which are members of the set of training inputs that outline a hyperplane in feature space. The training set X is given as

$$X = \{(x_i, y_i)\}_{i=1}^{n} \qquad (1)$$

where $x_i \in \mathbb{R}^d$ and $y_i \in \{1, \dots, CL\}$. CL is the number of classes; in the two-class formulation below, the labels are taken as $y_i \in \{-1, +1\}$. SVM classifies data sets with an optimal separating hyperplane, which is given by

$$w^T \varphi(x_i) + b = 0 \qquad (2)$$

This hyperplane is obtained by solving the following quadratic programming problem

$$\min_{w,\,b,\,\xi} \; J(w, \xi) = \frac{1}{2} w^T w + C \sum_{i=1}^{n} \xi_i \qquad (3)$$

such that $y_i\,(w^T \varphi(x_i) + b) \ge 1 - \xi_i$ and $\xi_i \ge 0$, where $\xi_i$ are the slack variables that tolerate misclassifications and $C > 0$ is a regularization parameter. Problem (3) is equivalent to the following dual problem with the Lagrange multipliers $\alpha_i$:

$$\max_{\alpha} \; \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j K(x_i, x_j) \qquad (4)$$

such that $\sum_{i=1}^{n} \alpha_i y_i = 0$ and $0 \le \alpha_i \le C$, with the coefficients $\alpha_i$ corresponding to $x_i$. All $x_i$ with nonzero $\alpha_i$ are called support vectors. The function $K(x_i, x_j) = \varphi(x_i)^T \varphi(x_j)$ is the kernel, which must satisfy the Mercer condition [4]. The resulting optimal decision function is

$$f(x) = \mathrm{sign}\Big(\sum_{i=1}^{n} \alpha_i y_i K(x_i, x) + b\Big) \qquad (5)$$

where $x_i$ is the training input data, $x$ is the input to be classified and $\alpha_i$ are the Lagrange multipliers. A previously unseen sample x can be classified by (5). There is a Lagrangian multiplier $\alpha$ for each training point.
When the maximum-margin hyperplane is found, only the points closest to the hyperplane satisfy $\alpha > 0$. These points are called support vectors (SV); the other points satisfy $\alpha = 0$, so the solution is sparse. Here b is determined by the Karush-Kuhn-Tucker conditions: for any support vector $x_i$ with $0 < \alpha_i < C$,

$$y_i \Big(\sum_{j} \alpha_j y_j K(x_j, x_i) + b\Big) = 1 \qquad (6)$$

The basic idea behind the support vector machine is illustrated with the example shown in Figure 1.

Figure 1: An example of two separating hyperplanes, B1 and B2

In this example the data are assumed to be linearly separable. Therefore, there exists a linear hyperplane (or decision boundary) that separates the points into two different classes. In the two-dimensional case, the hyperplane is simply a straight line. In principle, there are infinitely many hyperplanes that can separate the training data. Figure 1 shows two such hyperplanes, B1 and B2. Both hyperplanes divide the training examples into their respective classes without committing any misclassification errors. Although the training time of even the fastest SVMs can be extremely slow, they are highly accurate, owing to their ability to model complex nonlinear decision boundaries. They are much less prone to overfitting than other methods.

3. Proposed Method: SVM Classification:

A study of all the classification techniques above suggests that SVM is the better approach for classification. The major disadvantage of naive Bayesian classification is that, because of its strong independence assumption, it cannot model the joint effect of two or more attributes. The main disadvantage of decision tree classification is that it consumes a lot of time and space for building, training and storing the decision tree.
It can be computationally expensive for certain applications and may not be suitable for applications with real-time constraints. The major disadvantage of K-nearest neighbour classification is that it consumes a lot of memory, and its classification process is slow. One limitation of the GMM algorithm is that, for computational reasons, it can fail to work if the dimensionality of the problem is too high. If this is the case with the user's data, the user might want to try the SVM classification algorithm instead. Another limitation is that the user must set the number of mixture models that the algorithm will try to fit to the training data set. In many instances the user will not know how many mixture models should be used, and may have to experiment with a number of different mixture models in order to find the number that works best for the classification problem.

According to the geometric properties of SVM, the separating hyperplane is determined by the support vectors, which are a small subset of the whole data. The support vectors are close to their opposite class, i.e., they lie near the class boundaries. The aim is therefore to find the data that are near the support vectors. In the proposed method, support vector machine models are used for predicting the durations of syllables and for improving the prediction accuracy of syllable duration modeling for Telugu text to speech. The proposed method works as follows:

a) The framework starts with a training phase, in which a set of phonological, positional and contextual features is extracted from the training text as input to the SVM, and a model is constructed by machine learning based on transformed versions of these features.
b) The syllables are categorized based on duration by using an SVM classification model.
c) The durations of the syllables are predicted by using an SVM regression model.
d) The model is evaluated objectively by computing the mean absolute error, standard deviation and linear correlation coefficient between predicted and actual syllable durations.

The proposed classification strategy can be described in the following steps:

a. Discover all regions that contain all or most of their examples with the same label. This label is the majority class for that region.
b. Determine all adjacent or neighbouring regions whose majority class is the opposite one, because this detects the data that are located near the opposite label.
c. Search for the data with the shortest distances.

4. CONCLUSION AND FUTURE SCOPE:

This paper introduces the supervised learning method and describes the different supervised learning classifiers. Support vector machines are nowadays the most popular supervised machine learning approach. Some aspects of SVMs are covered that reflect why they are suitable for classification. The proposed classifier is used to classify syllables based on duration. Several other interesting classifier systems are also described briefly. A similar analysis can be carried out with a larger database that includes more syllables, to increase the word coverage and thereby improve the performance of speech systems.

REFERENCES:

[1] T. G. Dietterich, "Machine Learning," in Rob Wilson and Frank Keil (Eds.), The MIT Encyclopedia of the Cognitive Sciences, MIT Press, pp. 497-498, 1999.
[2] S. Abney, Supervised Learning for Computational Linguistics, Chapman & Hall/CRC, 2007.
[3] N. Cristianini and J. Shawe-Taylor, An Introduction to Support Vector Machines and Other Kernel-based Learning Methods, Cambridge University Press, Mar. 2000.
[4] C. M. Bishop, Pattern Recognition and Machine Learning, Secaucus, NJ, USA: Springer-Verlag New York, Inc., 2006.
[5] J. Platt, "Fast training of support vector machines using sequential minimal optimization," Advances in Kernel Methods: Support Vector Machines, pp. 185–208, 1998.
[6] Y. Lee and O. L. Mangasarian, "RSVM: Reduced support vector machines," Data Mining Institute, Computer Sciences Department, University of Wisconsin, 2001, pp. 100–107.
[7] J. A. K. Suykens and J. Vandewalle, "Least squares support vector machine classifiers," Neural Processing Letters, vol. 9, no. 3, Jun. 1999, pp. 293–300.
[8] X. Li, J. Cervantes and W. Yu, "Fast Classification for Large Data Sets via Random Selection Clustering and Support Vector Machines," Intelligent Data Analysis, vol. 16, no. 6, pp. 897-914, 2012.
[9] C. Pizzuti and D. Talia, "P-AutoClass: Scalable Parallel Clustering for Mining Large Data Sets," IEEE Trans. Knowledge and Data Eng., vol. 15, no. 3, pp. 629-641, 2003.
[10] K. P. Bennett and E. J. Bredensteiner, "Duality and Geometry in SVM Classifiers," 17th International Conference on Machine Learning, San Francisco, CA, 2000.
[11] D. M. J. Tax and R. P. W. Duin, "Support vector data description," Mach. Learn., vol. 54, no. 1, pp. 45–66, Jan. 2004.
[12] Asdrubal Lopez Chau, Xiaoou Li and Wen Yu, "Data Selection Using Decision Tree for SVM Classification," 2012 IEEE 24th International Conference on Tools with Artificial Intelligence.
[13] Y. Yang and X. Liu, "A re-examination of text categorization methods," Annual ACM Conference on Research and Development in Information Retrieval, USA, 1999, pp. 42-49.
[14] Saravanan Thirumuruganathan, "A Detailed Introduction to K-Nearest Neighbor (KNN) Algorithm," http://saravananthirumuruganathan.wordpress.com/2010/05/17/a-detailed-introduction-to-k-nearestneighbor-knn-algorithm/
[15] Website link: http://www.fon.hum.uva.nl/praat/manual/kNN_classifiers_1__What_is_a_kNN_classifier_.html
[16] Carlos Eduardo Thomaz, Raul Queiroz Feitosa and Alvaro Veiga, "Design of Radial Basis Function Network as Classifier in Face Recognition Using Eigenfaces," SBRN'98 - Symposium Brasileiro de Redes Neurais, Belo Horizonte, Minas Gerais, dezembro de 1998.
[17] C. M. Bishop, Neural Networks for Pattern Recognition, Oxford, England: Oxford Press, 1996.
[18] K. Roy, C. Chaudhuri, M. Kundu, M. Nasipuri and D. K. Basu, "Comparison of the Multi Layer Perceptron and the Nearest Neighbor Classifier for Handwritten Numeral Recognition," Journal of Information Science and Engineering 21, 1247-1259 (2005).
[19] Eoin M. Thomas, Andriy Temko, Gordon Lightbody, William P. Marnane and Geraldine B. Boylan, "A Gaussian mixture model based statistical classification system for neonatal seizure detection," 978-1-4244-4948-4/09 © 2009 IEEE.
[20] A. Moreira and M. Y. Santos, "Concave Hull: A K-nearest Neighbours Approach for the Computation of the Region Occupied by a Set of Points," GRAPP (GM/R), pp. 61–68, 2007.

AUTHOR PROFILE

Dr. K.V.N. Sunitha, currently working as Principal, BVRIT Hyderabad College of Engineering for Women, Nizampet, Hyderabad, did her B.Tech in ECE at Nagarjuna University and her M.Tech in Computer Science at REC Warangal. She completed her Ph.D at JNTU, Hyderabad in 2006. She has 21 years of teaching experience and has worked at various engineering colleges. She received the "Academic Excellence Award" from the management of G. Narayanamma Institute of Technology & Science on 18th September 2005.
She also received the "Best computer Science engineering Teacher award for the year 2007" from the Indian Society for Technical Education (ISTE). She has been recognized and invited by AICTE as an NBA expert evaluator. Her biography was included in "Marquis Who is Who in the World", 28th edition 2011, since August 2012. She has authored four text books: "Programming in UNIX and Compiler design" (BS Publications), "Formal Languages and Automata Theory" (Tata McGraw Hill), "Theory of Computation" (TMH, 2011) and "Compiler Construction" (Pearson India Pvt Ltd). She is an academic advisory member and Board of Studies member for other engineering colleges. She has published more than 75 papers in international and national journals and conferences, and she is a reviewer for many national and international journals. She is a fellow of the Institute of Engineers, a senior member of IEEE and of the international association CSIT, and a life member of many technical associations such as CSI and ACM.

Mrs. P. Sunitha Devi is presently working as Assistant Professor in the CSE Department, G. Narayanamma Institute of Technology & Science, Hyderabad. She completed her M.Tech at JNTU Hyderabad and is currently pursuing research in the area of Telugu text to speech synthesis. She is a life member of CSI.

CH. Sowjanya is a P.G. Scholar in the Department of CSE, G. Narayanamma Institute of Technology and Science, Hyderabad. She obtained her B.Tech degree in the CSE stream from TKR College of Engineering and Technology, Medbowli, Meerpet, in 2012.