Generalized pCN Metropolis algorithms for Bayesian inference in Hilbert spaces

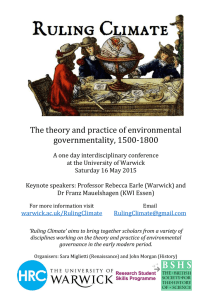

advertisement

Generalized pCN Metropolis algorithms

for Bayesian inference in Hilbert spaces

Oliver Ernst, Daniel Rudolf, Björn Sprungk

Data Assimilation and Inverse Problems (U Warwick)

23rd February, 2016

FAKULTÄT FÜR MATHEMATIK

Warwick, Feb 2016 · B. Sprungk

1 / 28

Outline

1. Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

2. The gpCN-Metropolis algorithm

3. Convergence

Warwick, Feb 2016 · B. Sprungk

2 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Next

1. Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

2. The gpCN-Metropolis algorithm

3. Convergence

Warwick, Feb 2016 · B. Sprungk

3 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Motivation: uncertainty quantification in groundwater flow modelling

Groundwater flow modelling:

I

PDE model for groundwater pressure head p, e.g.,

−∇ · (eκ(x) ∇p(x)) = 0

I

Noisy observations of κ and p at locations xj , j = 1, . . . , J

I

Interested in functional f of flux u(x) = −eκ(x) ∇p(x)

(e.g., breakthrough time of pollutants)

UQ approach:

I

Model unknown coefficient κ as (Gaussian) random function

I

Employ observational data to fit stochastic model for κ

I

Compute expectations or probabilities for resulting random f

Warwick, Feb 2016 · B. Sprungk

4 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Motivation: uncertainty quantification in groundwater flow modelling

Groundwater flow modelling:

I

PDE model for groundwater pressure head p, e.g.,

−∇ · (eκ(x) ∇p(x)) = 0

I

Noisy observations of κ and p at locations xj , j = 1, . . . , J

I

Interested in functional f of flux u(x) = −eκ(x) ∇p(x)

(e.g., breakthrough time of pollutants)

UQ approach:

I

Model unknown coefficient κ as (Gaussian) random function

I

Employ observational data to fit stochastic model for κ

I

Compute expectations or probabilities for resulting random f

Warwick, Feb 2016 · B. Sprungk

4 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Obtain stochastic model for κ via Bayes

I

Random function in Hilbert space H with CONS {φm }m∈N

X

κ(x, ω) =

ξm (ω) φm (x),

m≥1

where ξ = (ξm )m∈N random vector in `2

I

Employ measurements of κ to fit Gaussian prior µ0 for κ resp. ξ:

ξ ∼ µ0 = N (0, C0 )

I

on `2

Conditioning prior µ0 on noisy observations p ∈ RJ of p

p = G(ξ) + ε,

(ξ, ε) ∼ µ0 ⊗ N (0, Σ)

results in posterior via Bayes’ rule [Stuart, 2010]

µ(dξ) ∝ exp (−Φ(ξ)) µ0 (dξ),

Warwick, Feb 2016 · B. Sprungk

5 / 28

1

Φ(ξ) = |p − G(ξ)|2Σ−1 .

2

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Obtain stochastic model for κ via Bayes

I

Random function in Hilbert space H with CONS {φm }m∈N

X

κ(x, ω) =

ξm (ω) φm (x),

m≥1

where ξ = (ξm )m∈N random vector in `2

I

Employ measurements of κ to fit Gaussian prior µ0 for κ resp. ξ:

ξ ∼ µ0 = N (0, C0 )

I

on `2

Conditioning prior µ0 on noisy observations p ∈ RJ of p

p = G(ξ) + ε,

(ξ, ε) ∼ µ0 ⊗ N (0, Σ)

results in posterior via Bayes’ rule [Stuart, 2010]

µ(dξ) ∝ exp (−Φ(ξ)) µ0 (dξ),

Warwick, Feb 2016 · B. Sprungk

5 / 28

1

Φ(ξ) = |p − G(ξ)|2Σ−1 .

2

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Obtain stochastic model for κ via Bayes

I

Random function in Hilbert space H with CONS {φm }m∈N

X

κ(x, ω) =

ξm (ω) φm (x),

m≥1

where ξ = (ξm )m∈N random vector in `2

I

Employ measurements of κ to fit Gaussian prior µ0 for κ resp. ξ:

ξ ∼ µ0 = N (0, C0 )

I

on `2

Conditioning prior µ0 on noisy observations p ∈ RJ of p

p = G(ξ) + ε,

(ξ, ε) ∼ µ0 ⊗ N (0, Σ)

results in posterior via Bayes’ rule [Stuart, 2010]

µ(dξ) ∝ exp (−Φ(ξ)) µ0 (dξ),

Warwick, Feb 2016 · B. Sprungk

5 / 28

1

Φ(ξ) = |p − G(ξ)|2Σ−1 .

2

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Markov chain Monte Carlo (MCMC) sampling

Eµ [f ] =

R

I

Employ MCMC sampling to compute

I

Construct Markov chain (ξ k )k∈N in H = `2 with transition kernel

H

f (ξ) µ(dξ).

M (η, A) := P(ξ k+1 ∈ A|ξ k = η)

which is µ-reversible:

M (ξ, dη) µ(dξ) = M (η, dξ) µ(dη)

I

Then µ stationary measure of chain and (under suitable conditions)

n

1X

f (ξ k )

n

n→∞

−−−→

k=1

Warwick, Feb 2016 · B. Sprungk

6 / 28

Eµ [f ]

a.s.

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Efficiency of Markov chain Monte Carlo

I

µ-reversible Markov chain geometrically ergodic and ξ 1 ∼ µ, then

!

n

√

1X

d

n

f (ξ k ) − Eµ [f ]

−−→ N (0, σf2 ),

n

k=1

see [Roberts & Rosenthal, 1997], where

σf2

∞

X

=

γf (k),

γf (k) = Cov f (ξ 1 ), f (ξ 1+k )

k=−∞

I

Rapid decay of autocovariance function γf yields high statistical

efficiency.

I

Common measure of efficiency is effective sample size:

ESSf (n) = n

Warwick, Feb 2016 · B. Sprungk

7 / 28

σf2

Varµ (f )

.

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

The Metropolis-Hastings (MH) algorithm

Metropolis-Hastings algorithm for generating state ξ k+1 of Markov chain

1. Draw a new state η from proposal kernel P (ξ k , dη):

η ∼ P (ξ k ).

2. Accept proposal η with acceptance probability α(ξ k , η), i.e., draw

a ∼ Uni[0, 1] and set

(

η, a ≤ α(ξ k , η),

ξ k+1 =

ξ k , otherwise.

Transition kernel of resulting chain is called Metropolis kernel and reads

Z

M (ξ, dη) = α(ξ, η)P (ξ, dη) + 1 − α(ξ, ζ) P (ξ, dζ) δξ (dη).

Warwick, Feb 2016 · B. Sprungk

8 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

The Metropolis-Hastings (MH) algorithm

Metropolis-Hastings algorithm for generating state ξ k+1 of Markov chain

1. Draw a new state η from proposal kernel P (ξ k , dη):

η ∼ P (ξ k ).

2. Accept proposal η with acceptance probability α(ξ k , η), i.e., draw

a ∼ Uni[0, 1] and set

(

η, a ≤ α(ξ k , η),

ξ k+1 =

ξ k , otherwise.

Transition kernel of resulting chain is called Metropolis kernel and reads

Z

M (ξ, dη) = α(ξ, η)P (ξ, dη) + 1 − α(ξ, ζ) P (ξ, dζ) δξ (dη).

Warwick, Feb 2016 · B. Sprungk

8 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

MH algorithms in Hilbert space

To ensure µ-reversibility of Metropolis kernel we need to choose

dν >

α(ξ k , η) = min 1,

(ξ , η) ,

dν k

where ν(dξ, dη) := P (ξ, dη) µ(dξ) and ν > (dξ, dη) := ν(dη, dξ).

Since µ and µ0 are equivalent (Bayes’ rule), a µ0 -reversible proposal P ,

P (ξ, dη) µ0 (dξ) = P (η, dξ) µ0 (dη),

will yield

dµ

dν >

(ξ, η) =

(η)

dν

dµ0

Warwick, Feb 2016 · B. Sprungk

−1

dµ

(ξ)

= exp Φ(ξ) − Φ(η) .

dµ0

9 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

MH algorithms in Hilbert space

To ensure µ-reversibility of Metropolis kernel we need to choose

dν >

α(ξ k , η) = min 1,

(ξ , η) ,

dν k

where ν(dξ, dη) := P (ξ, dη) µ(dξ) and ν > (dξ, dη) := ν(dη, dξ).

Since µ and µ0 are equivalent (Bayes’ rule), a µ0 -reversible proposal P ,

P (ξ, dη) µ0 (dξ) = P (η, dξ) µ0 (dη),

will yield

dν >

dµ

(ξ, η) =

(η)

dν

dµ0

Warwick, Feb 2016 · B. Sprungk

−1

dµ

(ξ)

= exp Φ(ξ) − Φ(η) .

dµ0

9 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Examples

Gaussian random walk-proposal:

P (ξ) = N (ξ, s2 C0 )

I

s ∈ R+ is a stepsize parameter typically tuned such that acceptance

rate

Z Z

ᾱ :=

α(ξ, η) P (ξ, dη) µ(dξ) ≈ 0.234.

I

This proposal P is not µ0 reversible.

H

H

√

Preconditioned Crank-Nicolson-proposal: P (ξ) = N ( 1 − s2 ξ, s2 C0 )

I

Derived from a CN-scheme for (the drift of) the SDE

√

dξ t = (0 − ξ t ) dt + 2 dBt

where Bt is C0 -Brownian motion in H , see [Cotter et al., 2013].

I

This proposal is µ0 reversible.

Warwick, Feb 2016 · B. Sprungk

10 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Examples

Gaussian random walk-proposal:

P (ξ) = N (ξ, s2 C0 )

I

s ∈ R+ is a stepsize parameter typically tuned such that acceptance

rate

Z Z

ᾱ :=

α(ξ, η) P (ξ, dη) µ(dξ) ≈ 0.234.

I

This proposal P is not µ0 reversible.

H

H

√

Preconditioned Crank-Nicolson-proposal: P (ξ) = N ( 1 − s2 ξ, s2 C0 )

I

Derived from a CN-scheme for (the drift of) the SDE

√

dξ t = (0 − ξ t ) dt + 2 dBt

where Bt is C0 -Brownian motion in H , see [Cotter et al., 2013].

I

This proposal is µ0 reversible.

Warwick, Feb 2016 · B. Sprungk

10 / 28

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Implications for MCMC algorithm performance

Problem: Bayesian inference in 2D groundwater flow model.

Acceptance rate vs. stepsize s for different dimensions M of ξ ∈ RM .

Random walk-proposal P (ξ) = N (ξ, s2 C0 )

1

0.9

Average acceptance rate

0.8

0.7

0.6

0.5

M = 10

0.4

M = 40

0.3

M = 160

M = 640

0.2

0.1

0 −3

10

−2

−1

10

10

stepsize s

Warwick, Feb 2016 · B. Sprungk

11 / 28

0

10

Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

Implications for MCMC algorithm performance

Problem: Bayesian inference in 2D groundwater flow model.

Acceptance rate vs. stepsize s for different dimensions M of ξ ∈ RM .

√

pCN-proposal P (ξ) = N ( 1 − s2 ξ, s2 C0 )

1

0.9

Average acceptance rate

0.8

0.7

0.6

0.5

0.4

M = 10

M = 40

0.3

M = 160

0.2

M = 640

0.1

0 −3

10

−2

−1

10

10

stepsize s

Warwick, Feb 2016 · B. Sprungk

11 / 28

0

10

The gpCN-Metropolis algorithm

Next

1. Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

2. The gpCN-Metropolis algorithm

3. Convergence

Warwick, Feb 2016 · B. Sprungk

12 / 28

The gpCN-Metropolis algorithm

Motivation

Observation:

Higher statistical efficiency when proposal employs same covariance as

target measure µ, see [Tierney, 1994], [Roberts & Rosenthal, 2001], . . .

Example: µ = N (0, C ) in 2D, MH with different proposal covariances

1

1

0.5

0.5

0

0

−0.5

−0.5

−1

−1.5

−1

−0.5

0

0.5

1

−1

−1.5

1.5

P (ξ) = N (ξ, s2 I )

Warwick, Feb 2016 · B. Sprungk

−1

−0.5

0

0.5

P (ξ) = N (ξ, s2 C )

13 / 28

1

1.5

The gpCN-Metropolis algorithm

Motivation

Observation:

Higher statistical efficiency when proposal employs same covariance as

target measure µ, see [Tierney, 1994], [Roberts & Rosenthal, 2001], . . .

Example: µ = N (0, C ) in 2D, MH with different proposal covariances

1

1

0.5

0.5

0

0

−0.5

−0.5

−1

−1.5

−1

−0.5

0

0.5

1

−1

−1.5

1.5

P (ξ) = N (ξ, s2 I )

Warwick, Feb 2016 · B. Sprungk

−1

−0.5

0

0.5

P (ξ) = N (ξ, s2 C )

13 / 28

1

1.5

The gpCN-Metropolis algorithm

Motivation

Observation:

Higher statistical efficiency when proposal employs same covariance as

target measure µ, see [Tierney, 1994], [Roberts & Rosenthal, 2001], . . .

Example: µ = N (0, C ) in 2D, MH with different proposal covariances

ACRF

ACRF

1

1

0.9

X

0.9

X1

0.8

X2

0.8

X

1

0.7

0.7

0.6

0.6

0.5

0.5

0.4

0.4

0.3

0.3

0.2

0.2

0.1

0

0

2

0.1

50

100

0

0

150

lag

100

2

P (ξ) = N (ξ, s I )

Warwick, Feb 2016 · B. Sprungk

50

lag

2

P (ξ) = N (ξ, s C )

13 / 28

150

The gpCN-Metropolis algorithm

How to approximate posterior covariance in advance

I

If forward map G were linear,then

µ = N (m, C) ,

I

C = (C0−1 + G∗ Σ−1 G)−1 .

Idea: Linearization of nonlinear G at ξ 0

e

G(ξ) ≈ G(ξ)

:= G(ξ 0 ) + Jξ,

J = ∇G(ξ 0 )

yields approximation to posterior covariance

e = (C −1 + J ∗ Σ−1 J)−1 .

C≈C

0

I

Good choice for ξ 0 might be maximum a posteriori estimate:

−1/2

ξ MAP = argmin |p − G(ξ)|2Σ−1 + kC0 ξk2 .

ξ

Warwick, Feb 2016 · B. Sprungk

14 / 28

The gpCN-Metropolis algorithm

How to approximate posterior covariance in advance

I

If forward map G were linear,then

µ = N (m, C) ,

I

C = (C0−1 + G∗ Σ−1 G)−1 .

Idea: Linearization of nonlinear G at ξ 0

e

G(ξ) ≈ G(ξ)

:= G(ξ 0 ) + Jξ,

J = ∇G(ξ 0 )

yields approximation to posterior covariance

e = (C −1 + J ∗ Σ−1 J)−1 .

C≈C

0

I

Good choice for ξ 0 might be maximum a posteriori estimate:

−1/2

ξ MAP = argmin |p − G(ξ)|2Σ−1 + kC0 ξk2 .

ξ

Warwick, Feb 2016 · B. Sprungk

14 / 28

The gpCN-Metropolis algorithm

How to approximate posterior covariance in advance

I

If forward map G were linear,then

µ = N (m, C) ,

I

C = (C0−1 + G∗ Σ−1 G)−1 .

Idea: Linearization of nonlinear G at ξ 0

e

G(ξ) ≈ G(ξ)

:= G(ξ 0 ) + Jξ,

J = ∇G(ξ 0 )

yields approximation to posterior covariance

e = (C −1 + J ∗ Σ−1 J)−1 .

C≈C

0

I

Good choice for ξ 0 might be maximum a posteriori estimate:

−1/2

ξ MAP = argmin |p − G(ξ)|2Σ−1 + kC0 ξk2 .

ξ

Warwick, Feb 2016 · B. Sprungk

14 / 28

The gpCN-Metropolis algorithm

Generalized pCN-proposals

I

Class of admissible proposal covariances:

CΓ = (C0−1 + Γ)−1 ,

I

Γ positive, self-adjoint and bounded

Define generalized pCN-proposal kernel as

PΓ (ξ) = N (AΓ ξ, s2 CΓ ),

cf. operator weighted proposals [Law, 2013] and [Cui et al., 2014]

I

Enforcing µ0 -reversibility of PΓ – as for pCN-proposal P0 – yields

1/2

AΓ = C 0

I

p

−1/2

I − s2 (I + HΓ )−1 C0 ,

Boundedness of AΓ on H not obvious

Warwick, Feb 2016 · B. Sprungk

15 / 28

1/2

1/2

HΓ := C0 ΓC0 .

The gpCN-Metropolis algorithm

Generalized pCN-proposals

I

Class of admissible proposal covariances:

CΓ = (C0−1 + Γ)−1 ,

I

Γ positive, self-adjoint and bounded

Define generalized pCN-proposal kernel as

PΓ (ξ) = N (AΓ ξ, s2 CΓ ),

cf. operator weighted proposals [Law, 2013] and [Cui et al., 2014]

I

Enforcing µ0 -reversibility of PΓ – as for pCN-proposal P0 – yields

1/2

AΓ = C 0

I

p

−1/2

I − s2 (I + HΓ )−1 C0 ,

Boundedness of AΓ on H not obvious

Warwick, Feb 2016 · B. Sprungk

15 / 28

1/2

1/2

HΓ := C0 ΓC0 .

The gpCN-Metropolis algorithm

Generalized pCN-Metropolis

gpCN Metropolis

The Metropolis algorihtm with the gpCN-proposal kernel

PΓ (ξ) = N (AΓ ξ, s2 CΓ ),

where s ∈ (0, 1), and the acceptance probability

α(ξ, η) = min {1, exp(Φ(ξ) − Φ(η))}

is well-defined and yields a µ-reversible Markov chain in H .

It is called gpCN Metropolis and its Metropolis kernel denoted by MΓ .

Note, for Γ = 0 we recover the pCN Metropolis algorithm with kernel M0 .

Warwick, Feb 2016 · B. Sprungk

16 / 28

The gpCN-Metropolis algorithm

Numerical experiment

I

Setting

dp

dx (x)

I

1D model:

d

dx

I

Observations:

4

p = p(0.2j) j=1 + ε,

I

Prior:

κ Brownian bridge on [0, 1], i.e.,

eκ(x)

√

= 0,

p(0) = 0, p(1) = 2

ε ∼ N (0, σε2 I)

M

2 X

ξm (ω) sin(mπx),

ξm ∼ N (0, m−2 )

π m=1

R1

Quantity of interest: f (ξ) = 0 exp(κ(x, ξ)) dx

κ(x, ξ(ω)) ≈

I

I

Proposals for MH-MCMC

I

Results

Warwick, Feb 2016 · B. Sprungk

17 / 28

The gpCN-Metropolis algorithm

Numerical experiment

I

Setting

I

Proposals for MH-MCMC

pCN:

P1 (ξ) = N (ξ, s2 C0 )

√

P2 (ξ) = N ( 1 − s2 ξ, s2 C0 )

Gauss-Newton RW (GN-RW):

P3 (ξ) = N (ξ, s2 CΓ )

gpCN:

P4 (ξ) = N (AΓ ξ, s2 CΓ )

Gaussian random walk (RW):

Γ = σε−2 JJ > ,

I

Results

Warwick, Feb 2016 · B. Sprungk

17 / 28

J = ∇G(ξ MAP )

The gpCN-Metropolis algorithm

Numerical experiment

I

Setting

I

Proposals for MH-MCMC

I

Results

Autocorrelation for M = 50, σε = 0.1

1

RW

pCN

GN-RW

gpCN

0.8

0.6

0.4

0.2

0

100

102

lag

Warwick, Feb 2016 · B. Sprungk

17 / 28

The gpCN-Metropolis algorithm

Numerical experiment

I

Setting

I

Proposals for MH-MCMC

I

Results

Autocorrelation for M = 400, σε = 0.01

1

RW

pCN

GN-RW

gpCN

0.8

0.6

0.4

0.2

0

100

102

lag

Warwick, Feb 2016 · B. Sprungk

17 / 28

The gpCN-Metropolis algorithm

Numerical experiment

I

Setting

I

Proposals for MH-MCMC

I

Results

Effective sample size vs. dimension

ESS

105

104

103

RW

pCN

GN-RW

gpCN

102

103

dimension M

Warwick, Feb 2016 · B. Sprungk

17 / 28

The gpCN-Metropolis algorithm

Numerical experiment

I

Setting

I

Proposals for MH-MCMC

I

Results

Effective sample size vs. noise variance

ESS

105

104

103

10-4

RW

pCN

GN-RW

gpCN

10-3

noise variance

Warwick, Feb 2016 · B. Sprungk

17 / 28

10-2

σ2ǫ

Convergence

Next

1. Problem setting and Metropolis-Hastings algorithms in Hilbert spaces

2. The gpCN-Metropolis algorithm

3. Convergence

Warwick, Feb 2016 · B. Sprungk

18 / 28

Convergence

Geometric ergodicity and spectral gaps

Consider Markov chain {ξ k }k∈N0 with µ-reversible transition kernel M .

I

Kernel M is L2µ -geometrically ergodic if r > 0 exists such that

kµ − νM k kTV ≤ Cν exp(−r k)

I

∀ν :

dν

∈ L2µ (H ).

dµ

Define associated Markov operator M : L2µ → L2µ by

Z

Mf (ξ) :=

f (η) M (ξ, dη).

H

I

L2µ -spectral gap of operator M:

I

M is L2µ -geometrically ergodic iff gapµ (M) > 0.

Warwick, Feb 2016 · B. Sprungk

19 / 28

gapµ (M) := 1 − kM − Eµ kL2µ →L2µ

Convergence

Proving geometric ergodicity of gpCN-Metropolis

I

For pCN-Metropolis kernel M0 an L2µ -spectral gap was proven in

dµ

[Hairer et al., 2014] under certain conditions on dµ

.

0

I

Our Strategy:

Take a comparative approach and relate gapµ (MΓ ) to gapµ (M0 ) in

order to prove

0 < gapµ (M0 )

I

=⇒

0 < gapµ (MΓ ).

Idea behind:

If kernels M0 and MΓ do not “differ” too much, then (maybe) they

inherit convergence properties from each other.

Warwick, Feb 2016 · B. Sprungk

20 / 28

Convergence

Comparison result

Theorem (Comparison of spectral gaps)

For i = 1, 2 let Mi be µ-reversible Metropolis kernels with proposals Pi

and acceptance probability α.

Assume that

1. the associated Markov operators Mi are positive,

2. the Radon-Nikodym derivative ρ(ξ; η) :=

3. and for a β > 1 we have

R R

sup

µ(A)∈(0, 21 ]

A Ac

dP1 (ξ)

dP2 (ξ) (η)

ρβ (ξ; η) P2 (ξ, dη) µ(dξ)

< ∞,

µ(A)

Then

gapµ (M1 )2β ≤ Kβ gapµ (M2 )β−1 .

Warwick, Feb 2016 · B. Sprungk

exists,

21 / 28

Convergence

Application to pCN-/gpCN-Metropolis

Lemma (Positivity of Markov operators)

The Markov operator MΓ associated to gpCN-Metropolis is positive for

any admissible Γ.

Lemma (Absolute continuity, integrability of density)

There exists a density between the pCN- and gpCN-proposal

ρΓ (ξ; η) :=

dP0 (ξ)

(η)

dPΓ (ξ)

and constants b, K < ∞ such that for β < 1 +

Z

H

Warwick, Feb 2016 · B. Sprungk

ρβΓ (ξ; η) PΓ (ξ, dη) ≤ K exp

22 / 28

1

2kHΓ k

b2

2

kξk2 .

Convergence

Restricted measures and Markov chains

I

For R > 0 set BR := {ξ ∈ H : kξk < R} and define

µR (dξ) :=

I

1

1B (ξ) µ(dξ).

µ(BR ) R

For Metropolis kernel M with proposal P and acceptance probability

α set

Z

MR (ξ, dη) := αR (ξ, η)P (ξ, dη)+ 1 −

αR (ξ, ζ)P (ξ, dζ) δξ (dη)

H

where αR (ξ, η) := 1BR (η)α(ξ, η).

Lemma

If M is self-adjoint and positive on L2µ (H ), then so is MR on L2µR (H )

and

c

gapµR (MR ) ≥ gapµ (M) − sup M (ξ, BR

).

ξ∈BR

Warwick, Feb 2016 · B. Sprungk

23 / 28

Convergence

Restricted measures and Markov chains

I

For R > 0 set BR := {ξ ∈ H : kξk < R} and define

µR (dξ) :=

I

1

1B (ξ) µ(dξ).

µ(BR ) R

For Metropolis kernel M with proposal P and acceptance probability

α set

Z

MR (ξ, dη) := αR (ξ, η)P (ξ, dη)+ 1 −

αR (ξ, ζ)P (ξ, dζ) δξ (dη)

H

where αR (ξ, η) := 1BR (η)α(ξ, η).

Lemma

If M is self-adjoint and positive on L2µ (H ), then so is MR on L2µR (H )

and

c

gapµR (MR ) ≥ gapµ (M) − sup M (ξ, BR

).

ξ∈BR

Warwick, Feb 2016 · B. Sprungk

23 / 28

Convergence

Main result

Theorem (Spectral gap of gpCN-Metropolis for restricted measure)

If

gapµ (M0 ) > 0,

then for any admissible Γ and any > 0 there exists a number R < ∞

such that

kµ − µR kTV < and gapµR (MΓ,R ) > 0.

Proof employs previous lemmas and comparison theorem to show

gapµ (M0 ) > 0

Warwick, Feb 2016 · B. Sprungk

=⇒

gapµR (M0,R ) > 0

24 / 28

=⇒

gapµR (MΓ,R ) > 0.

Convergence

Outlook: gpCN-Metropolis with local proposal covariance

Density ρΓ (ξ) =

I

dP0 (ξ)

dPΓ (ξ)

allows for state-dependent proposal covariances:

Let ξ 7→ Γ(ξ) be measurable and define proposal kernel

Ploc (ξ) := N (AΓ(ξ) ξ, s2 CΓ(ξ) ).

I

Following an approach as in [Beskos et al., 2008] we get

Ploc (ξ, dη) µ0 (dξ) =

ρΓ(η) (η, ξ)

Ploc (η, dξ) µ0 (dη).

ρΓ(ξ) (ξ, η)

I

Obtain µ-reversible MH algorithm with proposal Ploc and

ρΓ(ξ) (ξ, η)

αloc (ξ, η) := min 1, exp(Φ(η) − Φ(ξ))

ρΓ(η) (η, ξ)

I

Same approach works for

Warwick, Feb 2016 · B. Sprungk

√

0 (ξ) := N ( 1 − s2 ξ, s2 C

Ploc

Γ(ξ) ).

25 / 28

Convergence

Numerical experiment revisited

Same problem setting as before, but now also consider “local” proposals

√

local pCN:

P5 (ξ) = N ( 1 − s2 ξ, s2 CΓ(ξ) )

local gpCN:

P6 (ξ) = N (AΓ(ξ) ξ, s2 CΓ(ξ) )

with Γ(ξ) = ∇G(ξ)> Σ−1 ∇G(ξ).

ESS

Effective sample size vs. dimension

104

pCN

gpCN

local pCN

local gpCN

103

102

dimension M

Warwick, Feb 2016 · B. Sprungk

26 / 28

Convergence

Numerical experiment revisited

Same problem setting as before, but now also consider “local” proposals

√

local pCN:

P5 (ξ) = N ( 1 − s2 ξ, s2 CΓ(ξ) )

local gpCN:

P6 (ξ) = N (AΓ(ξ) ξ, s2 CΓ(ξ) )

with Γ(ξ) = ∇G(ξ)> Σ−1 ∇G(ξ).

Effective sample size vs. noise variance

ESS

104

pCN

gpCN

local pCN

local gpCN

102

10-4

10-3

noise variance σ2ǫ

Warwick, Feb 2016 · B. Sprungk

26 / 28

10-2

Conclusions

I

Generalized pCN-Metropolis allows to beneficially employ

approximation of posterior covariance for proposal kernel

I

gpCN seems to perform independent of dimension and noise variance

I

Geometric ergodicity of gpCN-Metropolis proven by general

framework for relating spectral gaps of Metropolis algorithms

I

gpCN-Metropolis with state-dependent proposal covariance possible

Some open issues:

I

Proof of higher efficiency of gpCN-Metropolis

I

Proof and theoretical understanding of variance-independence

Warwick, Feb 2016 · B. Sprungk

27 / 28

Conclusions

I

Generalized pCN-Metropolis allows to beneficially employ

approximation of posterior covariance for proposal kernel

I

gpCN seems to perform independent of dimension and noise variance

I

Geometric ergodicity of gpCN-Metropolis proven by general

framework for relating spectral gaps of Metropolis algorithms

I

gpCN-Metropolis with state-dependent proposal covariance possible

Some open issues:

I

Proof of higher efficiency of gpCN-Metropolis

I

Proof and theoretical understanding of variance-independence

Warwick, Feb 2016 · B. Sprungk

27 / 28

References

A. Beskos, G. Roberts, A. Stuart, and J. Voss.

MCMC methods for diffusion bridges.

Stoch. Dynam., 8(3):319–350, 2008.

S. Cotter, G. Roberts, A. Stuart, and D. White.

MCMC methods for functions: Modifying old algorithms to make them faster.

Stat. Sci., 28(3):283–464, 2013.

T. Cui, K. Law, and Y. Marzouk.

Dimension-independent likelihood-informed MCMC.

arXiv:1411.3688, 2014.

M. Hairer, A. Stuart, and S. Vollmer.

Spectral gaps for a Metropolis-Hastings algorithm in infinite dimensions.

Ann. Appl. Probab., 24(6):2455–2490, 2014.

D. Rudolf, B. Sprungk.

On a generalization of the preconditioned Crank-Nicolson Metropolis algorithm.

arXiv:1504.03461, 2015.

A. Stuart.

Inverse problems: a Bayesian perspective.

Acta Numer., 19:451–559, 2010.

Warwick, Feb 2016 · B. Sprungk

28 / 28