2011 International Conference on Telecommunication Technology and Applications

Proc. of CSIT vol. 5 (2011) © (2011) IACSIT Press, Singapore

Pushout with Differentiated Dropping for High-Speed Networks

Jui-Pin Yang 1 and Yuan-Sun Chu 2

1 Department of Information Technology and Communication, Shih-Chien University

2 Department of Electrical Engineering, National Chung-Cheng University

Abstract. The pushout (PO) queue management scheme achieves fair buffer utilization and good loss performance, but it has high implementation complexity and protects lightly loaded flows poorly against short-term burstiness. To address these drawbacks, this paper proposes a simple but efficient scheme, pushout with differentiated dropping (PDD). We design a weight function that estimates the weight of each active flow from its traffic intensity, which provides discriminative packet treatment when the buffer is full. The PDD maintains two records: the maximum and sub-maximum products of queue length and weight value. By comparing both with the product of each arriving packet, the PDD decides whether to accept the new arrival, admit it by pushing out resident packets, or discard it. Simulation results show that the PDD supports fairer bandwidth sharing than the PO.

Keywords: pushout, differentiated dropping, queue management

1. Introduction

When a network router is equipped with an adequate queue management scheme, low packet loss probability and high throughput are achievable. Such a scheme also improves fair bandwidth sharing and makes congestion control mechanisms easier to design [1-2]. Queue management schemes can be roughly classified into three categories: static threshold, dynamic threshold and pushout. In the static threshold category [3-4], one or more fixed thresholds are assigned to limit the growth of queue lengths. Adapting the thresholds dynamically to traffic variations gives better performance, which motivated the dynamic threshold category [5-6]. Both threshold-based categories are simple to implement, but they perform well only under specific traffic conditions. Consequently, their suitability for high-speed networks with high performance requirements has been questioned.

The well-known pushout (PO) category and several related variants have been proposed [7-8]. The main idea behind the PO is that the whole buffer is shared by all queues, and no limit is imposed on buffer usage until the buffer is full. When an arriving packet encounters a full buffer, a resident packet belonging to the longest queue is pushed out to make room for the new arrival. The arriving packet is discarded directly if it is destined for the longest queue. Many previous studies point out that the PO supports higher throughput and lower packet loss than the threshold-based categories.
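The PO behaviour described above can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the paper: the class name `PushoutBuffer`, measuring queue length in bytes, dropping from the head of the longest queue, and discarding the arrival when the longest queue cannot free enough room are all assumptions made for the example.

```python
from collections import deque


class PushoutBuffer:
    """Shared buffer with classic pushout (PO); all sizes are in bytes."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.used = 0
        self.queues = {}  # flow id -> deque of resident packet sizes

    def queue_bytes(self, flow):
        return sum(self.queues.get(flow, ()))

    def _store(self, flow, size):
        self.queues.setdefault(flow, deque()).append(size)
        self.used += size

    def enqueue(self, flow, size):
        """Return 'accept', 'pushout' or 'discard' for one arriving packet."""
        # No per-queue limit applies while the shared buffer has room.
        if self.used + size <= self.capacity:
            self._store(flow, size)
            return "accept"
        # Buffer full: find the longest queue (ties go to the first one found).
        longest = max(self.queues, key=self.queue_bytes, default=None)
        needed = self.used + size - self.capacity
        # An arrival destined for the longest queue is discarded directly.
        # Assumption: it is also discarded if the longest queue is too short.
        if longest is None or longest == flow or self.queue_bytes(longest) < needed:
            return "discard"
        # Assumption: push out from the head of the longest queue.
        victim = self.queues[longest]
        while self.used + size > self.capacity:
            self.used -= victim.popleft()
        if not victim:
            del self.queues[longest]
        self._store(flow, size)
        return "pushout"
```

With a 3000-byte buffer holding two 1000-byte packets of flow "a" and one of flow "b", a further arrival for "b" pushes out one packet of "a", whereas a further arrival for "a" would be discarded.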

In this paper, we principally discuss the performance metric of fair bandwidth sharing. We first estimate the traffic intensity of active flows and then use a weight function to calculate their weight values. By comparing the product of queue length and weight value of each active flow with two approximate maximum and sub-maximum products, each arriving packet is classified for acceptance, pushout or discard. We name this scheme pushout with differentiated dropping (PDD) because it differentiates the treatment of arriving packets according to their products. Various applications require different qualities of service. By marking a parameter in the packet headers of a specific flow, the PDD can support different degrees of bandwidth sharing, which the PO cannot do.

1 Corresponding author. Tel.: +886-7-6678888 ext. 6404; fax: +886-7-6679999. E-mail address: juipin.yang@msa.hinet.net

2. Pushout with Differentiated Dropping Scheme

We define a flow as a stream of packets that have the same source and destination IP addresses. We calculate the average packet arrival rate of each active flow as its intensity estimate. The arriving packets of an active flow with large intensity have a higher probability of being discarded. The intensity estimate is an exponentially weighted moving average, similar to that used in random early detection (RED) [9]. Let $m_{j,k}$ be the total size of the packets of active flow $j$ that arrive during time interval $k$. The duration of a time interval is $RT$ and the link capacity is $C$. Furthermore, $w_p$ is a parameter that controls how strongly the estimate depends on short-term versus long-term traffic conditions. In particular, we modify the rule that maintains the active flow records in the ActiveList of the deficit round robin (DRR) scheduler. When active flow $j$ has an empty queue, we do not remove flow $j$ from the ActiveList unless $m_{j,k} = 0$. This reduces how often records are inserted into or removed from the ActiveList. $I_{j,k}$ denotes the intensity estimate of active flow $j$ during time interval $k$, which is computed as:

$$I_{j,k} = w_p \cdot \frac{m_{j,k-1}}{C \cdot RT} + (1 - w_p) \cdot I_{j,k-1} \tag{1}$$
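As a rough illustration of Equation (1), the following Python sketch applies the moving-average update once per time interval. The function name `update_intensity`, the choice of bits as the unit for the arrived volume, and the constant 2 Mbps test flow are assumptions made for the example; the parameter values are those used in the simulation section.

```python
def update_intensity(prev_intensity, arrived_bits, w_p, capacity_bps, interval_s):
    """One step of Equation (1): EWMA of the arrival volume normalized by C * RT."""
    normalized = arrived_bits / (capacity_bps * interval_s)
    return w_p * normalized + (1.0 - w_p) * prev_intensity


C = 10e6     # link capacity in bit/s (10 Mbps)
RT = 0.016   # interval duration in seconds (16 ms)
W_P = 0.3    # averaging parameter

# Hypothetical flow that sends at a constant 2 Mbps, starting from zero intensity.
intensity = 0.0
history = []
for _ in range(20):
    intensity = update_intensity(intensity, 2e6 * RT, W_P, C, RT)
    history.append(intensity)
```

Under these assumptions the estimate rises from 0.06 after the first interval towards 0.2, the flow's share of the link capacity. A larger `w_p` makes the estimate follow short-term changes more quickly.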

After flow intensity estimation, we design a weight function. The main idea of the PDD is that flows with lower intensity are assigned lower weight values. There are many possible ways to assign the weight values. The simplest approach is to make the weight value of each flow increase linearly with its flow intensity. However, this may lead to insufficient discrimination between flows. Therefore, a suitable weight function can greatly improve the fairness of bandwidth sharing.

Based on our simulations and analysis, we design a non-linear weight function in which active flows with lower intensity are given relatively smaller weight values, while active flows with higher intensity are given relatively larger weight values. We select the particular weight function in Equation (2) because it can differentiate active flows. Here, $\alpha$ is a parameter and $WF_{j,k}$ is the weight value of active flow $j$ during time interval $k$.

$$WF_{j,k} = \begin{cases} \log_{\alpha}\bigl(1 + (\alpha - 1) \cdot I_{j,k}\bigr), & 0 \le I_{j,k} \le 1 \\ I_{j,k}, & I_{j,k} > 1 \end{cases} \tag{2}$$
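Equation (2) translates directly into a short function. The sketch below is illustrative only: the name `weight` and the rejection of negative intensities are assumptions, and the default `alpha` of 10 is the value used in the simulation section.

```python
import math


def weight(intensity, alpha=10.0):
    """Weight value of Equation (2) for a given flow intensity."""
    if intensity < 0:
        raise ValueError("intensity must be non-negative")
    if intensity <= 1.0:
        # Logarithmic branch: maps intensity 0 to weight 0 and intensity 1 to weight 1.
        return math.log(1.0 + (alpha - 1.0) * intensity, alpha)
    # Above an intensity of 1 the weight equals the intensity itself.
    return intensity
```

The two branches meet at an intensity of 1, where both give a weight of 1, so the function is continuous. With `alpha = 10`, an intensity of 0.1 maps to a weight of about 0.279.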

Next, the PDD maintains two records, held in the variables max and submax, for the flows with the maximum and sub-maximum products of queue length and weight value. When the buffer is full, the PDD computes the product of queue length and weight value for the flow of the arriving packet. By comparing this product with those of flows max and submax, the PDD takes one of three actions on the arrival: acceptance, pushout or discard. Equation (3) means that at least $h$ resident packets of the flow with product max are pushed out (from the head of the queue of flow max) in order to make sufficient room for the arriving packet of size $L$. The same rule applies to the flow with product submax.

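$$\min\Bigl\{ \sum_{i=1}^{h} S_{\max,i} \Bigr\} \ge L \tag{3}$$

where $S_{\max,i}$ is the size of the $i$-th resident packet of the flow with product max; that is, $h$ is the smallest number of head packets whose total size is at least $L$.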
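The packet count h in Equation (3) can be found by accumulating packet sizes from the head of the queue. This is a minimal sketch under assumptions: the function name `packets_to_push_out`, the list representation of the queue, and returning `None` when the queue cannot free enough room are illustrative choices, not taken from the paper.

```python
def packets_to_push_out(head_sizes, arrival_size):
    """Smallest h such that the first h packet sizes sum to at least arrival_size.

    head_sizes lists the resident packet sizes from the head of the queue.
    Returns None if even the whole queue would not free enough room.
    """
    freed = 0
    for h, size in enumerate(head_sizes, start=1):
        freed += size
        if freed >= arrival_size:
            return h
    return None
```

For example, with resident packets of 400, 300, 500 and 1000 bytes and an arrival of 1000 bytes, the first three packets (1200 bytes in total) have to be pushed out. When all packets have the same size, as in the simulations, h is always 1.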

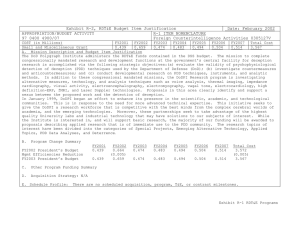

Finally, the complete pseudocode of the PDD is given in Figure 1.

Fig. 1: Pseudocode of the PDD scheme

3. Simulation Results

We consider a single congested link between two routers. Both routers have the same configuration, which consists of PDD (or PO, for comparison) queue management and DRR scheduling. All network links have the same link capacity C = 10 Mbps. The other parameters are set as follows: N = 10 flows, buffer size B = 20 Kbytes, $w_p$ = 0.3, RT = 16 ms and α = 10. Each flow generates packets according to an ON-OFF traffic model and has infinite data to transmit. To simplify the simulations, we assume that all packets have the same size of 1 Kbyte. The total simulation time is 200 seconds and the quantum size in the DRR is 1 Kbyte. We compare the fairness of bandwidth sharing between the PDD and the PO. Our previous study showed that the PO performs better than threshold-based schemes [6]. Consequently, only the PO is compared with the PDD in all simulations. A pushout operation may push out one or more resident packets, which incurs additional overhead. Therefore, a low packet pushout probability is preferred. Unless otherwise specified, these parameters apply to all simulations.

In Figure 2, we plot the normalized bandwidth ratio of each flow under different buffer sizes. There are 10 flows, indexed from 1 to 10, and flows transmit up to 10 times the max-min fair rate. The average traffic loads of the flows are [1 2 3 4 5 6 7 8 9 10] Mbps. The normalized bandwidth ratio is defined as the attained bandwidth divided by the max-min fair rate. Under max-min fairness, each flow should obtain an average bandwidth of 1 Mbps. Therefore, the ideal normalized bandwidth ratio for each flow is 1.0.
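The max-min fair rates quoted above can be checked with the standard water-filling procedure. The sketch below is illustrative: the function names `max_min_fair` and `normalized_ratio` are assumptions, and the code is not part of the simulator used in this paper.

```python
def max_min_fair(demands, capacity):
    """Max-min fair allocation of capacity among flows with the given demands."""
    alloc = [0.0] * len(demands)
    remaining = float(capacity)
    # Serve flows in order of increasing demand (water-filling).
    unsatisfied = sorted(range(len(demands)), key=lambda i: demands[i])
    while unsatisfied:
        share = remaining / len(unsatisfied)
        i = unsatisfied[0]
        if demands[i] <= share:
            # The smallest demand fits within an equal share: grant it in full.
            alloc[i] = demands[i]
            remaining -= demands[i]
            unsatisfied.pop(0)
        else:
            # Every remaining flow wants more than an equal share: split evenly.
            for j in unsatisfied:
                alloc[j] = share
            break
    return alloc


def normalized_ratio(attained_rate, fair_rate):
    """Attained bandwidth divided by the max-min fair rate."""
    return attained_rate / fair_rate


# Loads of 1 to 10 Mbps sharing the 10 Mbps link.
fair_rates = max_min_fair([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 10)
```

Because every flow offers at least 1 Mbps and the ten flows share a 10 Mbps link, each entry of `fair_rates` is 1 Mbps, which matches the value stated above.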

Fig. 2: Normalized bandwidth ratio per flow under different buffer sizes, with average traffic loads from 1 Mbps to 10 Mbps

When B is set at 10 Kbytes, the fair buffer share of each flow is 1 Kbyte, so the buffer is severely insufficient. This case produces the most unfair bandwidth sharing for both the PDD and the PO. Although DRR is capable of fair bandwidth sharing, some flows lack resident packets to be dequeued. Consequently, an excellent scheduling algorithm may perform poorly without an adequate queue management scheme. The PDD supports much better fairness than the PO in this situation because it allows the queues of lightly loaded flows to grow during short-term traffic bursts. Conversely, the PDD gives a higher probability of discarding arriving packets of heavily loaded flows, because those flows can rapidly resupply packets to the DRR. When B increases, both the PDD and the PO achieve better fairness for the lightly loaded flows (flow 1 and flow 2). Across the different buffer sizes, the PDD always provides better fairness than the PO.

The simulation results also show that the PO favors heavily loaded flows. For example, flow 10 obtains the largest normalized bandwidth ratio, near 1.15, when B is set at 10 Kbytes. This may encourage network users to violate congestion control mechanisms because doing so lets them occupy more bandwidth, which may greatly aggravate congestion. In the PDD, flow 8 obtains the largest normalized bandwidth ratio, near 1.08. Higher traffic intensity results in relatively higher weights, so flow 10 obtains a nearly ideal normalized bandwidth ratio of about 1.02. When B is set at 60 Kbytes, both schemes have sufficient buffer space and each achieves approximately its best fairness. The simulations show that the PDD not only achieves good fairness but also benefits congestion control mechanisms.

In Figure 3, we discuss the effect of a different set of average traffic loads, [1 1.1 1.2 1.3 1.4 1.5 1.6 1.7 1.8 1.9] Mbps. All flows have similar average traffic loads, so their weights are close to one another. In the PO, flow 10 obtains the largest normalized bandwidth ratio, near 1.21, when B is set at 10 Kbytes. In the PDD, flow 10 obtains the largest normalized bandwidth ratio, near 1.12, when B is set at 10 Kbytes. In addition, flow 1 obtains better fairness than in Figure 2 under the various buffer sizes. The reason is that the probability of a full buffer becomes lower, so more arriving packets of flow 1 are admitted to the buffer. Figure 2 and Figure 3 show that the PDD provides better fairness than the PO under different traffic conditions.

Fig. 3: Normalized bandwidth ratio per flow under different buffer sizes, with average traffic loads from 1 Mbps to 1.9 Mbps

In Figure 4, we change the configuration to a different number of flows and different average traffic loads. There are 30 flows, indexed from 1 to 30, and flows transmit up to 30 times the max-min fair rate. The average traffic loads of the flows are [0.3333 0.6667 1.0 1.3333 1.6667 2.0 ... 10] Mbps. According to max-min fairness, each flow should obtain an average bandwidth of 0.3333 Mbps. In the PO, flow 30 obtains the largest normalized bandwidth ratio, near 1.03, when B is set at 30 Kbytes. In the PDD, flow 30 obtains a smaller normalized bandwidth ratio, near 0.87, when B is set at 30 Kbytes. The reason for this phenomenon in the PDD is the same as that of Figure 3. However, flow 30 fares worse here because more flows have average traffic loads close to that of flow 30. The PDD provides flow 1 with much better fairness than the PO when B is set at 30 Kbytes. The PO always allocates more bandwidth to the heavily loaded flows, whereas the PDD protects the lightly loaded flows and penalizes the heavily loaded flows by giving them less bandwidth. In short, the PDD performs much better than the PO in terms of both fairness and congestion control.

Fig. 4: Normalized bandwidth ratio per flow under different buffer sizes, with average traffic loads from 0.3333 Mbps to 10 Mbps

4. Conclusions

In this paper, we proposed the PDD scheme, which is not only as simple as the threshold-based schemes but also performs much better than the PO. The simulation results show that the PDD provides fairer bandwidth sharing, with lower implementation complexity and overhead, than the PO under a wide variety of traffic conditions. In the future, we would like to extend the PDD to support different packet loss priorities while keeping its simplicity and efficiency. We would also like to modify the current weight function to provide better and more stable performance. In short, the PDD is a simple but effective queue management scheme suitable for high-speed networks.

5. Acknowledgements

The authors acknowledge support under NSC Grant NSC 99-2221-E-158-003, Taiwan, R.O.C.

6. References

[1] B. Braden. Recommendations on queue management and congestion avoidance in the Internet. RFC 2309.

[2] A. Mankin, K. Ramakrishnan. Gateway congestion control survey. RFC 1254.

[3] M. Irland. Buffer management in a packet switch. IEEE Trans. Commun. COM-26, 1978: 328-337.

[4] F. Kamoun, L. Kleinrock. Analysis of shared finite storage in a computer node environment under general traffic conditions. IEEE Trans. Commun. COM-28, 1980: 992-1003.

[5] A. K. Choudhury, E. L. Hahne. Dynamic queue length thresholds for shared-memory packet switches. IEEE/ACM Trans. Netw. 6 (2), 1998: 130-140.

[6] J. P. Yang. Performance analysis of threshold-based selective drop mechanism for high performance packet switches. Performance Evaluation 57, 2004: 89-103.

[7] S. X. Wei, E. J. Coyle, M-T. T. Hsiao. An optimal buffer management policy for high-performance packet switching. In: Proceedings of GLOBECOM'91, 1991: 924-928.

[8] I. Cidon, L. Georgiadis, R. Guerin. Optimal buffer sharing. IEEE J. Sel. Areas Commun. 13 (7), 1995: 1229-1239.

[9] S. Floyd, V. Jacobson. Random early detection for congestion avoidance. IEEE/ACM Trans. Netw. 1 (4), 1993: 397-413.
