CHAPTER 16: Physical Tamper Resistance

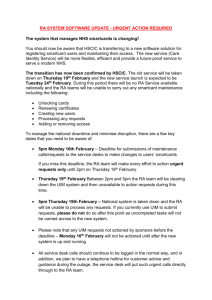
