

GeoMig: Online Multiple VM Live Migration

Flavio Esposito

Walter Cerroni

Advanced Technology Group

Exegy Inc., St. Louis, MO

DEI - University of Bologna, Italy

Abstract—The Cloud computing paradigm enables innovative

and disruptive services by allowing enterprises to lease computing, storage and network resources from physical infrastructure

owners, to offer a persistently available service. This shift

in infrastructure management responsibility has brought new

revenue models and new challenges to Cloud providers. One of

those challenges is to efficiently migrate multiple virtual machines

(VMs) within the hosting infrastructure, since these migrations

are often required to be “live”, i.e., without noticeable service

interruptions. In this paper we propose a geometric programming

model and an online multi-VM live migration algorithm based on

such model. The goal of the geometric program is to minimize the

total migration time via optimal bit-rate assignments. By solving

our geometric program we gained qualitative and quantitative

insights into the design of efficient solutions for multi-VM live

migrations. We found that transferring merely a few rounds

of dirty memory pages is enough to significantly lower the

total migration time. We also demonstrated that, under realistic

settings, the proposed method converges sharply to an optimal

bit-rate assignment, making our approach a viable solution for

improving current live-migration implementations.

I. INTRODUCTION

Resource virtualization is one of the key technologies for

an efficient deployment of Cloud computing services [1].

Decoupling service instances from the underlying processing,

storage and communication hardware allows flexible deployment of any application on any server within any data center,

independently of the specific operating system and platform

being used. In particular, the use of virtual machines (VMs) to

implement end-user services enables flexible workload management operations, such as server consolidation and multi-tenant isolation [2]. Live migration is an additional feature that

allows VMs to move from one host to another, with minimal

disruption to end-user service availability [3].

Most of the (virtual) services hosted today within a VM

are based on multi-tier applications [4]. Typical examples

include front-end, business logic and back-end tiers of e-commerce services, or clustered MapReduce computing environments. Joint deployment of multiple correlated VMs is also

envisioned by the emerging Network Function Virtualization

paradigm, which is radically changing the way network operators plan to develop and build future network infrastructures,

adopting a Cloud-oriented approach [5]. In general, a Cloud

customer should be considered as a tenant running multiple

VMs which could be either strictly or loosely correlated, and

exchange significant amounts of traffic. Therefore, moving a

given tenant’s workload often means migrating a group of

VMs, as well as the virtual networks used to interconnect

them. On the other hand, migrating a set of tenants for physical

machine maintenance requires moving multiple virtual machines running uncorrelated processes.

Related Work. Although existing Cloud products do offer a

range of solutions for joint management of multiple VMs running multi-tier applications [6], they do not allow simultaneous

VM live migration, do not consider potential future failures,

or do not optimize the migration bandwidth allocated to

each memory migration round, leading to unnecessarily longer

service disruption times. After the seminal work on live-migration [3], a significant number of implementations and

research efforts were carried out with the idea of moving VMs

with minimal service interruption [2], [7]–[9]. Most of the

existing work deals with single VM migration; few solutions, however, have considered the issue of migrating groups of correlated VMs, such as those executing multi-tier applications. In

particular, VMFlockMS [10] focuses on the migration of large

VM disk images across different data centers. Differently from

our work, this approach is intended mainly for non-live VM

migrations and the optimal allocation of inter-data center link

bandwidth is not considered. Ye et al. [11] experimentally

attempted to assess the role of different resource reservation

techniques and migration strategies on the live-migration of

multiple VMs. Kikuchi et al. [12] investigated the performance

of concurrent live-migrations in both VM consolidation and

dispersion experiments. Differently from our approach which

considers an optimal bit-rate allocation, these solutions do

not capture the significant impact of network resources on

live-migration performance. Other implementation-based studies were carried out to experiment with simultaneous live-migration under different assumptions and pursuing different

objectives [13]–[16] but they do not optimize bandwidth

allocation for each memory transfer round. To our knowledge,

the only online algorithm designed for VM live migration was

proposed in [17], where VM placement heuristics consider

server workload, VM performance degradation and energy but

do not capture network topology and bandwidth allocation for

server interconnection, as we do in our approach. Furthermore,

their migration model does not capture memory dirtying rates.

Our Contribution. In this paper, we dissect the impact of

the multi-VM live migration downtime and propose GeoMig,

a randomized online algorithm that uses a novel geometric

program to optimize each memory transfer round during the

migration of multiple VMs. We derive GeoMig’s competitive

ratio from known k-server problem results. We first propose

a geometric program [18] to optimize a set of bandwidth


allocation variables, each of them representing the allocated

bandwidth of a given migration round. As a consequence, the

optimal bandwidth values minimize the migration latency. In

contrast to earlier contributions in this area, e.g. [17], our

model also captures the multi-commodity flow costs of the

underlying physical network hosting the memory transfers.

Our model provides a quantitative analysis of the memory pre-copy phase, and the resulting service downtime. GeoMig’s

objective aims at limiting two conflicting metrics: the service

interruption (downtime), and the time of VM memory pre-copy. We quantify the tussle between the downtime and the

pre-copy in Section IV. GeoMig’s objective also controls the

iterative transfer algorithm used in live migration.

With our evaluation, we are able to address qualitative design hypotheses, such as whether the stop-and-copy procedure always increases the migration time when transferring multiple VMs, and quantitative questions, such as how many dirty page transferring rounds are necessary to minimize the total migration time. We also empirically demonstrate that, under realistic

settings, the proposed geometric program converges sharply

to an optimal bit-rate assignment solution, making it a viable

contribution to develop advanced Cloud management tools.

Paper Organization. The rest of the paper is organized as

follows: we introduce our optimization model in Section II

and the GeoMig algorithm in Section III; we then discuss performance and other insights in Section IV; finally, Section V

concludes our work.

II. OPTIMAL MIGRATION OF MULTIPLE VMS

In this section we present our optimization model for multiple VM migrations. We start with a quantitative analysis of the live-migration process, then we introduce the optimal formulation of migration rate allocation, and finally we discuss the geometric programming approach used to solve the problem.

A. Optimization Model

The two key parameters which quantify the performance of VM live-migration are the downtime and the total migration time. These two quantities tend to have opposite behaviors, and should be carefully balanced. In fact, the downtime measures the impact of the migration on the end-user’s perceived quality of service, whereas the total migration time measures the impact on the Cloud network infrastructure. The effect of a generic pre-copy algorithm on VM migration timings has already been modeled, starting with the simple case of a single VM [19]. Then the same model has been generalized, extending it to multiple VMs and evaluating how the performance parameters depend on VMs migration scheduling and mutual interactions when providing services to the end-user [20]. In this section we leverage the aforementioned multiple VMs migration performance model to formulate a geometric programming optimization methodology, whose goal is to find a tradeoff between downtime and migration time.

We adopt the following parameter definitions:

• M is the number of VMs to be migrated in a batch;
• $V_{mem,j}$ is the memory size of the j-th VM to be migrated, ∀j ∈ J = {1, . . . , M};
• $D_{i,j}$ is the memory dirtying rate during round i in the push (i.e., pre-copy) phase of the j-th VM;
• $V_{i,j}$ is the amount of dirty memory of VM j copied during round i;
• $T_{i,j}$ is the time needed to transfer $V_{i,j}$;
• $n_j$ is the number of rounds in the push phase of VM j;
• R is the total bit-rate of the migration channel;
• $r_{i,j}$ is the channel bit-rate reserved in round i to migrate VM j;
• $\tau_{down}$ is the VM maximum allowed downtime.

The equations that rule the live-migration process of VM j are [20]:

$$V_{0,j} = V_{mem,j} = r_{0,j}\, T_{0,j} \tag{1}$$

$$V_{i,j} = D_{i-1,j}\, T_{i-1,j} = r_{i,j}\, T_{i,j}, \qquad i \in I_j = \{1, \ldots, n_j\} \tag{2}$$

The last transfer round, i.e. the stop-and-copy phase, starts as soon as i reaches the smallest value $n_j$ such that:

$$V_{n_j,j} = D_{n_j-1,j}\, T_{n_j-1,j} \le r_{n_j,j}\, \tau_{down} \tag{3}$$

Writing equations (1) and (2) recursively results in:

$$T_{0,j} = \frac{V_{mem,j}}{r_{0,j}} \tag{4}$$

$$T_{i,j} = \frac{V_{i,j}}{r_{i,j}} = \frac{V_{mem,j}}{r_{0,j}} \prod_{h=1}^{i} \frac{D_{h-1,j}}{r_{h,j}}, \qquad i \in I_j \tag{5}$$

Note how Equation 5 is a posynomial function [21]. To simplify the formulation of the optimization model, we fix the size of the problem to be solved, i.e., we assume a fixed number of rounds n̄ common to all VM migrations:

$$n_j = \bar{n}, \qquad I_j = I = \{1, \ldots, \bar{n}\} \qquad \forall j \in J \tag{6}$$

Depending on the choice of n̄, the assumed number of rounds can be sufficient or not to reach the stop condition. This means that, for a given set of input parameters (namely $V_{mem,j}$, $D_{i,j}$, and R), the chosen value of n̄ could be different from the smallest which satisfies inequality (3). Under this assumption we are able to quantify the behavior of the pre-copy algorithm using n̄ as a control parameter and understand the role of the number of rounds on live-migration performance.

From equations (4) and (5) we can compute two objective functions when n̄ rounds are executed in the push phase: the total pre-copy time, computed as the sum of the duration of the push phase of each VM:

$$T_{pre}(\bar{n}, r) = \sum_{j=1}^{M} \frac{V_{mem,j}}{r_{0,j}} \left( 1 + \sum_{i=1}^{\bar{n}-1} \prod_{h=1}^{i} \frac{D_{h-1,j}}{r_{h,j}} \right) \tag{7}$$

and the total downtime, computed as the sum of the duration of the stop-and-copy phase of each VM:

$$T_{down}(\bar{n}, r) = \sum_{j=1}^{M} \frac{V_{mem,j}}{r_{0,j}} \prod_{h=1}^{\bar{n}} \frac{D_{h-1,j}}{r_{h,j}} \tag{8}$$

The expression in (7), which increases with the number of

rounds n̄, quantifies the amount of time required to bring all

migrating VMs to the stop-and-copy phase. On the other hand,

the second objective function in (8) measures the period during

which any VM is inactive, so it is suitable to quantify the

downtime of the services provided by the migrating VMs. This

expression does not include the duration of the resume phase,

which typically has a fixed value determined by technology.

If the allocated migration bit-rate ri,j is always greater than

the dirtying rate Di−1,j , the expression in (8) decreases when

n̄ increases. Therefore, with these two objective functions we

intend to capture the opposite trend of migration time and

downtime.
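The opposite trends of (7) and (8) can be checked numerically. The following is a minimal sketch, assuming per-round rates and dirtying rates are given as plain Python lists; the function names and the example VM (about 1 GB of memory, a 1 Gbps allocation, a 0.1 Gbps dirtying rate) are hypothetical and not taken from the paper.

```python
from math import prod


def round_times(v_mem, dirty, rates, n):
    """Durations T_0..T_n of the transfer rounds of one VM, per Eqs. (4)-(5).

    v_mem : memory size V_mem (bits)
    dirty : dirty[i] is the dirtying rate D_i during round i (bit/s)
    rates : rates[i] is the bit-rate r_i allocated to round i (bit/s)
    n     : index of the last (stop-and-copy) round, n >= 1
    """
    t0 = v_mem / rates[0]
    return [
        t0 * prod(dirty[h - 1] / rates[h] for h in range(1, i + 1))
        for i in range(n + 1)
    ]


def pre_copy_time(vms, n):
    """Eq. (7): push-phase duration (rounds 0..n-1), summed over all VMs."""
    return sum(sum(round_times(v, d, r, n)[:n]) for v, d, r in vms)


def downtime(vms, n):
    """Eq. (8): stop-and-copy duration (round n), summed over all VMs."""
    return sum(round_times(v, d, r, n)[n] for v, d, r in vms)


def rounds_to_stop(v_mem, dirty, rates, tau_down):
    """Smallest n >= 1 with T_n <= tau_down, i.e. inequality (3); None if not reached."""
    max_n = min(len(dirty), len(rates) - 1)
    times = round_times(v_mem, dirty, rates, max_n)
    return next((n for n in range(1, max_n + 1) if times[n] <= tau_down), None)


# Hypothetical VM: 8e9 bits of memory, constant 1e9 bit/s rate, 1e8 bit/s dirtying rate.
vm = (8e9, [1e8] * 5, [1e9] * 6)
vms = [vm, vm]
for n in range(1, 6):
    print(n, pre_copy_time(vms, n), downtime(vms, n))
```

With these assumed values the pre-copy time grows by a smaller amount at each additional round while the downtime shrinks by a factor D/r per round, which is the tradeoff the two objective functions are meant to capture.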

At each round i of the migration of each VM j, given

Vmem,j and Di−1,j , we wish to allocate the bit-rates ri,j so

that a combination of the two objective functions

$$T_{mig}(\bar{n}, r) = C_{pre}\, T_{pre}(\bar{n}, r) + C_{down}\, T_{down}(\bar{n}, r) \tag{9}$$

which we call the total migration time, is minimized.¹ Given a flow network, that is, a directed graph G = (V, E) with source s ∈ V and sink t ∈ V, where edge (u, v) ∈ E has capacity C(u, v) > 0, flow $f_{i,j}(u, v) \ge 0$ is the number of bits flowing over edge (u, v) for migration round i and VM j. Formally, we have the following geometric program:

$$\min_{r_{i,j}} \; T_{mig}(\bar{n}, r) \tag{10a}$$

$$\text{s.t.} \quad D_{i-1,j} < r_{i,j} \qquad \forall i \in I \;\; \forall j \in J \tag{10b}$$

$$\sum_{j=1}^{M} r_{i,j} \le R \qquad \forall i \in I \tag{10c}$$

$$\sum_{j=1}^{M} f_{i,j}(u, v) \le C(u, v) \qquad \forall (u, v) \in V \;\; \forall i \tag{10d}$$

$$\sum_{w \in V} f_{i,j}(u, w) = 0 \qquad \forall i \;\; \forall j \;\; \forall u \ne s_{i,j}, t_{i,j} \tag{10e}$$

$$f_{i,j}(u, v) = -f_{i,j}(v, u) \qquad \forall i \;\; \forall j \;\; \forall (u, v) \in V \tag{10f}$$

$$\sum_{w \in V} f_{i,j}(s_{i,j}, w) = \sum_{w \in V} f_{i,j}(w, t_{i,j}) = d_{i,j} \qquad \forall s_{i,j}, t_{i,j} \tag{10g}$$

$$D_{i,j} \ge 0 \qquad \forall i \in I \;\; \forall j \in J \tag{10h}$$

$$r_{i,j} > 0 \qquad \forall i \in I \;\; \forall j \in J \tag{10i}$$

¹ Note that, in the case of a single VM migration (M = 1) and choosing Cpre = Cdown = 1, the expression in (9) gives exactly the time needed to perform the push and stop-and-copy phases, i.e., the actual VM transfer time.

The page dirtying rate constraints (10b) ensure that the VM migration is sustainable, i.e., during the entire migration process, we can transfer (consume) memory faster than the memory to be transferred is produced. The set of constraints (10c) ensures that at each memory transfer round we do not allocate more bit-rate than is available at any time (R). Equations (10d)-(10g) are the flow conservation constraints and ensure that the net flow on each physical link is zero, except for the source $s_{i,j}$ and the destination $t_{i,j}$, and that each flow demand $d_{i,j}$, i.e., the bit-rate associated with the migration of VM j during round i, is guaranteed on each physical link along the path from $s_{i,j}$ to $t_{i,j}$.
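As an illustration of the rate constraints only, the sketch below checks a candidate allocation against (10b), (10c) and (10i). It is a hypothetical helper, not part of GeoMig: the flow constraints (10d)-(10g) are left out, and applying the channel cap to round 0 as well is an assumption made here, since (10c) is stated for i ∈ I.

```python
def check_rates(rates, dirty, total_rate):
    """List the rate constraints of program (10) violated by a candidate allocation.

    rates[i][j] : bit-rate r_{i,j} for round i = 0..n and VM j = 0..M-1
    dirty[i][j] : dirtying rate D_{i,j} for round i = 0..n-1
    total_rate  : channel bit-rate R
    Each violation is reported as (constraint label, round, VM or None).
    """
    violations = []
    for i, row in enumerate(rates):
        # (10c): rates allocated in one round must fit in the channel
        # (assumption: the cap is also enforced for round 0).
        if sum(row) > total_rate:
            violations.append(("10c", i, None))
        for j, r in enumerate(row):
            # (10i): every allocated rate must be strictly positive.
            if r <= 0:
                violations.append(("10i", i, j))
            # (10b): round i must drain memory faster than round i-1 dirtied it.
            if i >= 1 and not dirty[i - 1][j] < r:
                violations.append(("10b", i, j))
    return violations


# Hypothetical example: 2 VMs, 1 push round plus stop-and-copy, R = 1 Gbps.
feasible = check_rates([[5e8, 5e8], [6e8, 4e8]], [[1e8, 1e8]], 1e9)
infeasible = check_rates([[5e8, 6e8], [5e7, 4e8]], [[1e8, 1e8]], 1e9)
print(feasible)
print(infeasible)
```

A check of this kind can be run on a solver's output before the rates are applied, since (10b) is a strict inequality that numerical solvers typically only satisfy up to a tolerance.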

III. ONLINE MULTI-VM LIVE-MIGRATION WITH GeoMig

So far we have shown how minimizing the migration time

depends on the bandwidth allocation for each dirty memory

page transfer. In this section, we argue that the destination

physical machine and the route that leads to it are also

important aspects to consider when designing a multi-VM live-migration algorithm. We consider a realistic (online) setting in which the migration algorithm is unaware of the future multi-VM migration requests, since the application behavior or the

network failures are unknown.

Historically, the performance of online algorithms has been evaluated using competitive analysis, where the online algorithm is compared to the optimal offline algorithm that knows the (migration) request sequence in advance. Given an input sequence σ, if we denote the cost incurred by the online algorithm A as $C_A(\sigma)$, and the cost incurred by the optimal offline algorithm opt as $C_{opt}(\sigma)$, then A is called c-competitive if there exists a constant a such that $C_A(\sigma) \le c \cdot C_{opt}(\sigma) + a$ for all request sequences σ. The factor c is called the competitive ratio of A. In this section, we present GeoMig, a multi-VM live-migration online algorithm that leverages our geometric program (10), and we show that the following competitive ratio inequality holds: $c \le \frac{5}{4} M \cdot 2^{M} - 2M$, where M is the number of VMs to be simultaneously migrated.

GeoMig Intuition. It is perhaps counterintuitive at first that

a greedy algorithm which migrates in an online fashion a set

of VMs to their closest physical machines, i.e., with smallest

cost, does not minimize the total migration cost over time. The

migration cost may be minimal if we consider a single (multi-VM) live-migration request, but it may not be for a sequence

of multiple migration requests. Note that we do not propose

to migrate a set of VMs to a generic random point, but to a

random point in the feasible set of hosting machines.

For example, all physical machines reachable within a given

delay, or in a given geolocation within a data-center.

Example 1. (Knowing future failures would help). Assume

that a VM which has to migrate from a source physical

node (PN) S experiences the lowest cost when moving to

a PN D1 , with a downtime (cost) of 5 seconds, but a less

preferred path would lead the VM to a destination physical

machine D2 with a downtime of 6 seconds. Assume also that

immediately after the migration to D1 is complete, PN D1

becomes unavailable. The VM needs to migrate again e.g.,

going back to PN S, hence experiencing another 5 seconds

of downtime. The total downtime over the longer time period

is hence cost(S → D1 ) + cost(D1 → S) = 10 seconds, but

it would have been merely cost(S → D2 ) = 6 seconds if we

had known about the subsequent D1 failure.

For the reasons explained in Example 1, it is well known

that, in general, randomized algorithms may improve the competitive ratio of online algorithms. This is because, intuitively,


an adaptive adversary cannot predict the online algorithm

moves as they are unknown.

Migrating k-servers. We observe that, given a physical network, the problem of selecting the lowest cost hosting physical

machines to migrate multiple VMs to k = M distinct physical

machines can be reduced from the k-server problem. The k-server problem is that of planning the movement of k servers

on the vertices of a graph G under a sequence of requests.

Each request consists of the name of a vertex, and is satisfied

by placing a server at the requested vertex (migrating the VM

to one of the k servers). The requests must be satisfied in

their order of occurrence. The cost of satisfying a sequence

of requests is the distance moved by the servers. We map

the cost function of moving servers in the Euclidean space to

the precomputed pairwise migration costs resulting from our

geometric program (10).

Based on the above observations, we designed GeoMig

leveraging Harmonic [22] — a known randomized algorithm

designed for the online k-server problem. The reduction from

the k-server problem led us to the following results:

Proposition III.1. Let R be a randomized online algorithm

that manages the migration problem of multiple VMs to k

distinct servers in any physical network, modeled as an undirected graph. Then the competitive ratio against an adaptive

online adversary is $C_R^{aon} \ge k$.

Proof. (sketch) In the subsection Migrating k-servers we have informally described the reduction from the online k-server to the online migration to k servers. The result is then an immediate corollary of Theorem 13.7 of [23].

Theorem III.1. Given k potentially hosting physical machines, the competitive ratio of GeoMig is $c \le \frac{5}{4} k \cdot 2^{k} - 2k$.

Proof. (sketch) Let $d_i$ be the distance between the i-th server managed by the Harmonic algorithm and the requested point, for 1 ≤ i ≤ k. The Harmonic algorithm [22] chooses, independently of the past, the i-th server with probability $p_i = \frac{1/d_i}{\sum_{j=1}^{k} 1/d_j}$. Because Grove [24] showed that the competitive ratio of the Harmonic algorithm is bounded by $\frac{5k}{4} \cdot 2^{k} - 2k$, we have the claim.

GeoMig uses randomized choices to subvert the high cost

induced by a non-oblivious adaptive adversary. Let us

denote with x the ID of the physical machine currently hosting

the VM to be migrated, and with yi the identifier of the new

candidate physical machine, where i belongs to the index set of

all feasible physical machines. Then, the destination physical

machine yi is chosen with probability:

$$p_i = \frac{1/d(x, y_i)}{\sum_{j=1}^{k} 1/d(x, y_j)}, \tag{11}$$

where d(x, yi ) is the migration cost for moving a VM from

physical machine x to yi . The smaller the cost to migrate

a VM to physical machine yi , the higher the probability to

pick yi as a new hosting physical machine (among the k

feasible). With this randomized strategy, an adversary that

picks the VM to be migrated closest to the next failing physical

node cannot harm the overall long term service downtime,

as it cannot deterministically predict the GeoMig destination

physical machine.
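The selection rule in (11) can be sketched in a few lines. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, the migration costs d(x, y_i) are assumed to be precomputed (for instance from the geometric program) and strictly positive, and the example reuses the two destinations of Example 1.

```python
import random


def harmonic_probabilities(costs):
    """Eq. (11): probability of each candidate host, proportional to 1/d(x, y_i).

    costs maps a candidate host identifier y_i to its migration cost d(x, y_i).
    """
    if any(c <= 0 for c in costs.values()):
        raise ValueError("migration costs must be strictly positive")
    inverse = {host: 1.0 / cost for host, cost in costs.items()}
    total = sum(inverse.values())
    return {host: weight / total for host, weight in inverse.items()}


def pick_destination(costs, rng=random):
    """Draw one destination host at random according to Eq. (11)."""
    probs = harmonic_probabilities(costs)
    hosts = list(probs)
    return rng.choices(hosts, weights=[probs[h] for h in hosts], k=1)[0]


# Costs from Example 1: 5 s of downtime towards D1, 6 s towards D2.
example_costs = {"D1": 5.0, "D2": 6.0}
example_probs = harmonic_probabilities(example_costs)
chosen = pick_destination(example_costs, random.Random(42))
print(example_probs, chosen)
```

With the costs of Example 1, D1 is selected with probability 6/11 and D2 with probability 5/11, so the cheaper host is favored but the slightly more expensive one remains a likely outcome that an adversary cannot rule out.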

IV. PERFORMANCE EVALUATION

In this section, we first show how GeoMig performs with respect to a greedy migration heuristic. Then, by solving our geometric program across a wide range of parameter settings, we answer a set of qualitative design questions, such as whether live-migration should always be used when transferring multiple VMs, and quantitative questions, such as how many dirty page transferring rounds are enough to minimize the total migration time.

A. Simulation Environment

To validate our model and gain insights into the performance

of (online) multi-VM live-migration, we built our own simulator leveraging the geometric program solver GGPLAB [25].

GGPLAB uses the primal-dual interior point method [26] to solve the convex version of the original (nonlinear)

geometric program. We were able to obtain similar results

across a wide range of the parameter space, but we present

only a significant representative subset that summarizes our

messages. We generate our physical network topologies using

the BRITE [27] topology generator and we were able to

obtain similar results using physical “fat-trees”, Barabasi-Albert, and Waxman connectivity models, with a wide range of

physical network sizes. All our results are obtained with 95%

confidence intervals.
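For readers unfamiliar with the "convex version" of a geometric program, the following sketch shows the standard logarithmic change of variables applied to the rate part of Problem (10). It assumes strictly positive dirtying rates so that the logarithms exist; the index m, the exponent vectors a_m and the offsets b_m are notation introduced here, and whether the solver performs exactly these steps internally is not stated in the text.

```latex
% Change of variables, valid because r_{i,j} > 0 by (10i):
\[ y_{i,j} = \log r_{i,j} \]

% Each round duration in (5) is a monomial in r, hence the exponential
% of an affine function of y:
\[ T_{i,j} = \exp\Big( \log V_{mem,j} + \sum_{h=1}^{i} \log D_{h-1,j}
             - \sum_{h=0}^{i} y_{h,j} \Big) \]

% The objective (9) is a positively weighted sum of such monomials
% (one term per index m), so its logarithm is a log-sum-exp, convex in y:
\[ \log T_{mig} = \log \sum_{m} \exp\big( a_m^{\top} y + b_m \big) \]

% Rate constraints in the new variables
% (the strict inequality (10b) is relaxed to a non-strict one):
\[ \log D_{i-1,j} - y_{i,j} \le 0, \qquad
   \log \sum_{j=1}^{M} \exp\big( y_{i,j} - \log R \big) \le 0 \]
```

Here each a_m has entries in {0, -1} and each b_m collects the logarithm of the corresponding coefficient (the weight Cpre or Cdown, the memory size, and the dirtying rates). The flow constraints (10d)-(10g) are not covered by this sketch.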

B. Simulation Results

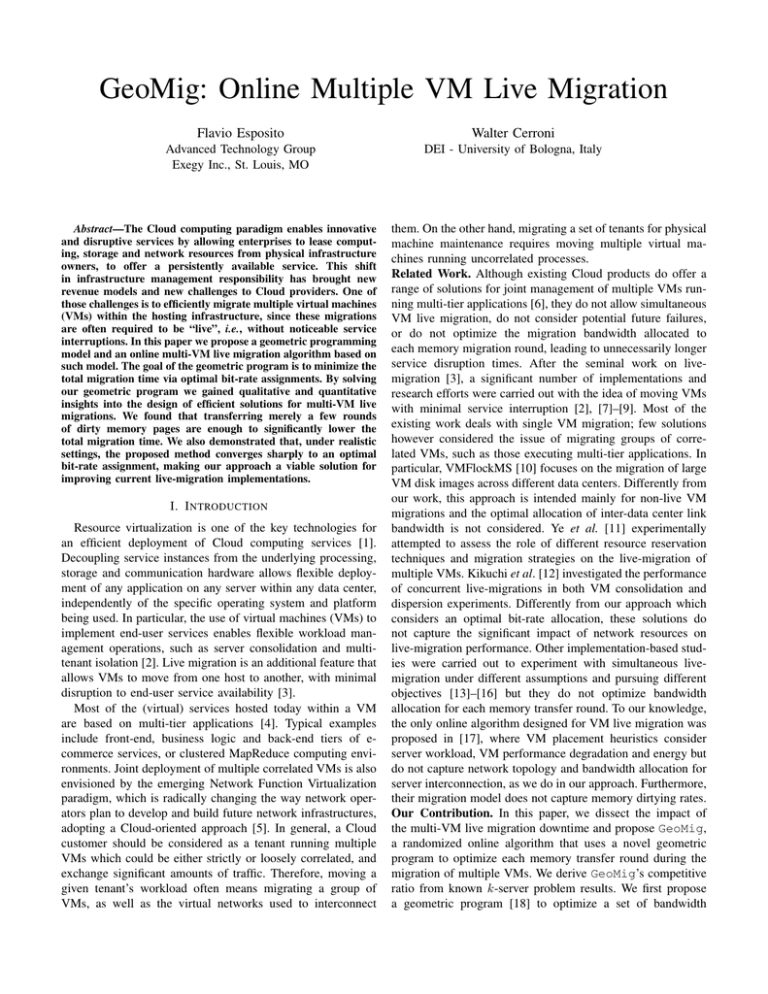

(1) GeoMig reduces the cost of migrating multiple VMs by up to 47%. We found that, independently of the size and the connectivity model, in a physical network with up to 10% of random physical node failures, a greedy alternative which always selects the cheapest (e.g., closest) destination physical machines has a higher average migration cost (Figures 1a and 1b). To render our results agnostic to the chosen bandwidth, we normalize our costs (migration times) by dividing them by the average hop-to-hop cost over the physical network.

Fig. 1. (a-b) The GeoMig online multi-VM live-migration strategy has a lower (normalized) migration cost than a Greedy heuristic that always selects the destination physical machines with the lowest cost. Costs were computed using our geometric program on a physical network following (a) Barabasi-Albert (100 physical machines) and (b) Waxman (200 physical machines) connectivity models. (c) Downtime is reduced by two orders of magnitude with only a few more rounds. (d) The pre-copy time reaches a saturation point after a few dirty page transferring rounds. [Panels (a) and (b) plot the normalized migration cost against the number of live-migrated VMs for Greedy, GeoMig, and Optimal; panels (c) and (d) plot times in seconds against the number of memory transferring rounds for mean VM sizes of 1, 2, and 4 GB.]

Fig. 2. The downtime significantly decreases when increasing the number of rounds: (a) Impact of total migration time during the live-migration of 6 VMs. (b) Convergence time of the geometric program solved with an iterative method. (c) After only 10 iterations, the primal-dual interior point method that solves our geometric program finds rates very close to the optimal. (d) Impact of the weight α in the “tradeoff” objective function α · Tpre + (1 − α) · Tdown. [Panel (c) plots the duality gap against the number of iterations; panel (d) plots the migration time in seconds against the objective weight α for nj = 0, 1, 3, 5, 7, 9.]

(2) Independently of the migration strategy used (online or offline), the downtime can be reduced by up to two orders of magnitude by increasing the number of transferring rounds. In Figure 1c we show the impact on the downtime when simultaneously migrating 6 VMs. The maximum rate was set to 1 Gbps, and the values of minimum migration time are obtained by solving Problem (10) with Cpre = 0 and Cdown = 1. The size of the VMs is chosen from a Gaussian distribution with mean given in the legend and standard deviation 300 MB. The results are shown in semi-logarithmic scale to clarify that the downtime may diminish by two orders of magnitude as we increase the number of rounds. The same two-orders-of-magnitude results were obtained when sampling a smaller and a higher number of VMs with sizes from a uniform and a bimodal distribution where 20% of the time the sampled VM has size 10 times bigger (results not shown).

Although a diminishing delay is expected when the number of allowed rounds increases, quantifying such downtime

decrease is important to gain insights on how to tune or re-architect the QEMU-KVM hypervisor migration functionalities. For example, delay-sensitive applications like online gaming or high-frequency trading may require memory migrations

with the maximum possible number of transferring rounds.

For bandwidth-sensitive applications instead, e.g., peer-to-peer

applications, it may be preferable to reduce the migration bit-rate, tolerating a longer service interruption as opposed to a

prolonged phase with a lower bit-rate. We have also tested

our model with different values of maximum bit-rate, as well

as different dirtying rates, but we did not find any significant

qualitative difference and hence we omit such results.

(3) The total migration time improvement diminishes as we

increase the number of transferring rounds. This diminishing

effect is a direct consequence of the diminishing duration of

each subsequent transferring round. This result gives insights

on the importance of allocating enough bandwidth to guarantee a given quality of service to VMs running memory-intensive applications or with a high dirtying rate.
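The diminishing effect can be made explicit in a simplified special case. The derivation below is a sketch under the assumption, made here only for illustration, that a single VM sees a constant dirtying rate D and a constant allocated rate r > D in every round; in that case Equation (5) becomes a geometric sequence.

```latex
\begin{align*}
T_{i} &= \frac{V_{mem}}{r}\,\rho^{\,i}, \qquad \rho = \frac{D}{r} < 1 \\
\sum_{i=0}^{\bar{n}-1} T_{i} &= \frac{V_{mem}}{r}\cdot\frac{1-\rho^{\bar{n}}}{1-\rho}
  \;\xrightarrow[\bar{n}\to\infty]{}\; \frac{V_{mem}}{r-D} \\
T_{\bar{n}} &= \frac{V_{mem}}{r}\,\rho^{\,\bar{n}}
\end{align*}
```

For example, with hypothetical values of 8 Gb of memory, r = 1 Gbps and D = 0.1 Gbps, the pre-copy time after three rounds is 8 · 0.999 / 0.9 = 8.88 s against a limit of about 8.89 s, while the stop-and-copy round shrinks from 8 s with no pre-copy to 0.008 s. Each extra round therefore adds little to the pre-copy time and removes a factor D/r from the downtime, and the benefit vanishes as D approaches r.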

The values of minimum migration time were obtained

solving GeoMig (and so Problem 10) when Cpre = 1 and

Cdown = 0. In Figure 1d, the impact of the pre-copy time

only is computed for 3 VMs at a maximum available rate of

0.5 Gbps. The size of the 6 VMs is chosen from a Gaussian

distribution with mean as shown in the legend and standard

deviation 300 MB.

(4) Few transferring rounds are enough to minimize the total

migration time. In this experiment, we evaluate GeoMig with

a full posynomial function representing the total migration

time Tmig (n̄, r), i.e., when both downtime and pre-copy time

have equal weight Cdown = Cpre = 1 in the geometric

program objective function. Note that, even after the change of

variables, the posynomial function Tmig (n̄, r) is a summation

of two non-linear functions, so we should not expect to see

the total migration time as merely a summation of the two

values obtained solving separately the two subproblems with

Cdown = 0 and Cpre = 0, respectively. For this experiment,

we selected the results with 6 VMs chosen from a uniform

distribution with average size indicated by the legend, and

standard deviation of 300 MB (Figure 2a); similar results were

obtained with VM sizes chosen from a Gaussian and a bimodal

distribution.

(5) GeoMig has a rapid convergence time and near optimal

values of bit-rate are reached after only a few iterations. To the

total migration time we also have to add the convergence time

of the iterative algorithm which solves the geometric program.

If this time is too high, it may be inconvenient to wait for an

optimal solution. In Figure 2b and 2c we show that this is not


the case: the GeoMig convergence time is bounded by roughly

60 ms per round using a machine with standard processing

power (Intel core i3 CPU at 1.4 GHz and 4 GB).

(6) The stop-and-copy procedure always increases the migration time when transferring multiple VMs. To gain additional

insights on the impact of the two different components of the

total migration time, we solve GeoMig with different weights

on the two objective function coefficients, the pre-copy time

and the downtime. In particular, we set Cpre = α where

α ∈ [0, 1] and Cdown = (1 − α), and assess the impact of

the migration time for different transferring rounds n̄ as we

increase the value of α from 0 to 1 (Figure 2d). Here nj = n̄ is a

parameter of the figure, while α represents how much weight

we assign to the pre-copy phase during the bit-rate assignment.

Migrating the VMs with a single stop-and-copy phase

means setting n̄ = nj = 0. In this case, the total migration

time is equivalent to the pre-copy time, which is equivalent to

the downtime. As soon as the number of rounds increases

(parameter n̄ > 0) the total migration time drops. This

suggests that we should never transfer (multiple) VMs with

merely a stop-and-copy procedure.

(7) Increasing the number of transferring rounds does not help

after the first few rounds. Increasing the number of rounds

leads to a diminishing marginal improvement in the total

migration time as shown by the different gradients (or slopes)

of the curves with n̄ > 0 in Figure 2d. Another interesting

observation from Figure 2d is that, as we assign more weight

to the pre-copy time component of the objective function, the

resulting total migration time increases.

V. C ONCLUSIONS

In this paper we studied the live-migration of multiple virtual machines, a fundamental problem in networked Cloud service management. First, we built a live-migration model, and a

geometric programming formulation that, when solved, returns

the minimum total migration time by optimally allocating the

bit-rates across the multiple VMs to be migrated. Then we

proposed a novel randomized online multi-VM live-migration

algorithm, GeoMig, and showed that the competitive ratio of

GeoMig is $c \le \frac{5}{4} k \cdot 2^{k} - 2k$, where k is the number of

potentially hosting physical machines which satisfy the set

of migration constraints given by our geometric program. The

optimization problem used by GeoMig aims at simultaneously

limiting both the service interruption (downtime), and the

time of VM pre-copy, along with a proper control of the

iterative memory copying algorithm used in live-migration.

By solving the geometric program across a wide range of

parameter settings, we were able to answer qualitative and

quantitative design questions. We have also shown that, under

realistic settings, the proposed geometric program converges

sharply to an optimal bit-rate assignment, making it a viable

and useful solution for improving the current live-migration

implementations.

REFERENCES

[1] R. Buyya, J. Broberg, and A. M. Goscinski, Eds., Cloud Computing:

Principles and Paradigms. New York: Wiley, 2011.

[2] V. Medina and J. M. García, “A survey of migration mechanisms of

virtual machines,” ACM Comp. Surveys, Jan 2014.

[3] C. Clark, K. Fraser, S. Hand, J. G. Hansen, E. Jul, C. Limpach,

I. Pratt, and A. Warfield, “Live migration of virtual machines,” in

Proceedings of the 2nd USENIX Symposium on Networked Systems

Design & Implementation (NSDI), Boston, MA, May 2005.

[4] X. Wang, Z. Du, Y. Chen, and S. Li, “Virtualization-based autonomic

resource management for multi-tier web applications in shared data

center,” Journal of Systems and Software, vol. 81, no. 9, pp. 1591–1608,

September 2008.

[5] “Network Functions Virtualisation (NFV): Network operator perspectives on industry progress,” ETSI White Paper, The European Telecommunications Standards Institute, 2013.

[6] S. J. Bigelow. (2014, Nov.) How can an enterprise benefit from using a VMware vApp? [Online]. Available: http://searchvmware.techtarget.com/answer/How-can-an-enterprise-benefit-from-using-a-VMware-vApp

[7] D. Kapil, E. Pilli, and R. Joshi, “Live virtual machine migration

techniques: Survey and research challenges,” in Advance Computing

Conference (IACC), 2013 IEEE 3rd International, Feb 2013, pp. 963–969.

[8] S. Acharya and D. A. D’Mello, “A taxonomy of live virtual machine

(VM) migration mechanisms in cloud computing environment,” in Proc.

of ICGCE, Chennai, India, Dec. 2013.

[9] R. Boutaba, Q. Zhang, and M. F. Zhani, “Virtual machine migration:

Benefits, challenges and approaches,” in Communication Infrastructures for Cloud Computing: Design and Applications, H. Mouftah and

B. Kantarci, Eds. IGI-Global, 2013.

[10] S. Al-Kiswany, D. Subhraveti, P. Sarkar, and M. Ripeanu, “VMFlock:

Virtual machine co-migration for the cloud,” in Proceedings of the

20th International ACM Symposium on High-Performance Parallel and

Distributed Computing (HPDC ’11), San Jose, CA, June 2011.

[11] K. Ye, X. Jiang, D. Huang, J. Chen, and B. Wang, “Live migration of

multiple virtual machines with resource reservation in cloud computing

environments,” in Proceedings of IEEE International Conference on

Cloud Computing (CLOUD 2011), July 2011.

[12] S. Kikuchi and Y. Matsumoto, “Performance modeling of concurrent live

migration operations in cloud computing systems using PRISM probabilistic model checker,” in Proceedings of the 4th IEEE International

Conference on Cloud Computing (CLOUD 2011), Washington, DC, July

2011.

[13] U. Deshpande, X. Wang, and K. Gopalan, “Live gang migration of

virtual machines,” in Proceedings of the 20th International ACM Symposium on High Performance Parallel and Distributed Computing (HPDC

’11), San Jose, CA, June 2011.

[14] U. Deshpande et al., “Inter-rack live migration of multiple virtual

machines,” in Proc. of VTDC, Delft, The Netherlands, June 2012.

[15] K. Ye, X. Jiang, R. Ma, and F. Yan, “VC-migration: Live migration of

virtual clusters in the cloud,” in Proceedings of the 13th ACM/IEEE

International Conference on Grid Computing (GRID 2012), Beijing,

China, September 2012.

[16] E. Keller, S. Ghorbani, M. Caesar, and J. Rexford, “Live migration of

an entire network (and its hosts),” in Proc. of HotNets-XI, 2012.

[17] A. Beloglazov and R. Buyya, “Optimal online deterministic algorithms

and adaptive heuristics for energy and performance efficient dynamic

consolidation of virtual machines in cloud data centers,” Conc. and

Comp.: Practice and Experience, Sept. 2012.

[18] R. J. Duffin, E. L. Peterson, and C. M. Zener, Geometric Programming:

Theory and Application. New York: Wiley, 1967.

[19] H. Liu et al., “Performance and energy modeling for live migration of

virtual machines,” Cluster Computing, vol. 16, no. 2, June 2013.

[20] W. Cerroni and F. Callegati, “Live migration of virtual network functions

in cloud-based edge networks,” in Proc. of ICC, June 2014.

[21] S. Boyd and L. Vandenberghe, Convex Optimization. New York:

Cambridge University Press, 2004.

[22] P. Raghavan and M. Snir, “Memory versus randomization in on-line

algorithms,” IBM Journal of Research and Development, vol. 38, no. 6,

pp. 683–707, November 1994.

[23] R. Motwani and P. Raghavan, Randomized Algorithms. New York, NY,

USA: Cambridge University Press, 1995.

[24] E. F. Grove, “The harmonic online k-server algorithm is competitive,” in

Proceedings of the Twenty-third Annual ACM Symposium on Theory of

Computing, ser. STOC ’91. New York, NY, USA: ACM, 1991, pp. 260–266.

[Online]. Available: http://doi.acm.org/10.1145/103418.103448

[25] [Online]. Available: http://stanford.edu/~boyd/ggplab/

[26] S. J. Wright, Primal-Dual Interior-Point Methods. Philadelphia: Society

for Industrial and Applied Mathematics (SIAM), 1997.

[27] A. Medina et al., “BRITE: An approach to universal topology generation,” in Proc. of MASCOT, 2001, pp. 346–.