Expert Information and Nonparametric Bayesian Inference of Rare Events Hwan-sik Choi November 2012

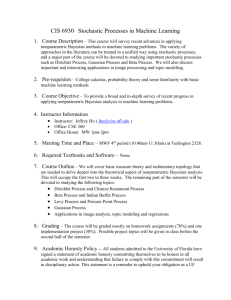

advertisement

Expert Information and Nonparametric

Bayesian Inference of Rare Events

Hwan-sik Choi∗

November 2012

Abstract

Prior distributions are important in Bayesian inference of rare events because historical data

information is scarce, and experts are an important source of information for elicitation of a

prior distribution. We propose a method to incorporate expert information into nonparametric

Bayesian inference on rare events when expert knowledge is elicited as moment conditions on a

finite dimensional parameter θ only. We generalize the Dirichlet process mixture model to merge

expert information into the Dirichlet process (DP) prior to satisfy expert’s moment conditions.

Among all the priors that comply with expert knowledge, we use the one that is closest to the

original DP prior in the Kullback-Leibler information criterion. The resulting prior distribution

is given by exponentially tilting the DP prior along θ. We provide a Metropolis-Hastings

algorithm to implement our approach to sample from posterior distributions with exponentially

tilted DP priors. Our method combines prior information from an econometrician and an expert

by finding the least-informative prior given expert information.

JEL: C11, C14, C15, G11, G31

Keywords: Dirichlet process mixture, defaults, Kullback-Leibler information criterion, maximum entropy, Metropolis-Hastings algorithm.

∗ hwansik@purdue.edu. Department of Consumer Science, Purdue University, 812 W State St. West Lafayette,

IN 47907, USA.

1

1

Introduction

We develop a nonparametric Bayesian framework that incorporates expert information for inference

of rare events. Inference of rare events such as defaults in a high grade portfolio, extreme losses, and

other catastrophic events is critical in measuring credit risk and systemic risk, which are important

in risk management for optimal hedging and economic capital calculations. But inference of rare

events is difficult because of lack of historical data information. Therefore, it is important to use

all sources of information including non-data information, and the Bayesian method provides a

natural and coherent mechanism of combining all available information. However, the use of noninformative or objective priors in a Bayesian model for rare events is often not satisfactory because

of the scarcity of information in data. Kiefer (2009, 2010) proposes to use expert information for

default estimation as an additional source of information, and argues that the Bayesian approach is

a natural theoretical framework for inference on rare events. He uses expert information to elicit the

Beta distribution prior or a mixture of the Beta distributions for a default probability. Although

Kiefer’s approach is illustrated with the binomial sampling distribution for the number of defaults

in a portfolio, it can be easily extended to any general parametric models.

In this paper, we argue that nonparametric models are useful for rare events, and show that

expert information can be effectively incorporated in nonparametric models too. Consider the

following situation for motivation of using nonparametric models for rare events. Rare events are

often associated with the tails of sampling distributions. For this reason, the rare events are also

called the tail-risk events. For a parametric model, a finite number of parameters would determine

the entire distribution, and inference of tail probabilities can be done from inference of the finite

dimensional parameter. In this case, the problem of scarce tail-risk events is remedied by the use

of the parametric assumption, which is a strong form of non-data information. Consequently, a

parametric model improves the efficiency of using data information. However, when there is a

concern for misspecification, the impact of misspecification can be serious for inference of tail-risk

events. Since frequent events would dominate the data information, they would drive estimation

of the parameters as well. But frequent events may not be relevant to tail-risk events if the model

2

is misspecified. Moreover, it is difficult to check the adequacy of a parametric model specifically

for tail probabilities because of the scarcity of rare events. For example, the maximum likelihood

(ML) estimator for the mean parameter of normal distributions with a known variance would be the

sample mean, which is a sufficient statistic. All inference can be done with the sufficient statistic.

But if the normal distributions are misspecified, it would be better to do inference on the tail

probabilities with emphasizing the samples far away from the sample mean since they are more

relevant. For this reason, using a nonparametric model can be appealing for rare events when we

do not have strong confidence in a parametric model.

Of course, an important disadvantage of nonparametric models is reduction in efficiency of

using data. Therefore, additional information becomes very desirable, and expert information

is particularly useful as complementary information in this setting. However, elicitation of expert

opinion becomes difficult when the dimension of a model parameter increases. Practically, it is easier

for an expert to talk about a few aspects of rare events rather than the entire shape of sampling

distributions. Our approach is to use expert information on a finite dimensional parameter, and

to incorporate the expert information in the nonparametric Bayesian model. In particular, we

consider the Dirichlet process mixture (DPM) model. The sampling distribution of the DPM

model is an infinite mixture of a family of distributions, and the mixing distribution works as an

infinite dimensional parameter of the model. The DPM model uses the Dirichlet process as a prior

distribution over the mixing distributions. The Dirichlet process (DP) is a random measure over

a space of distributions. Since the development of the DP by Freedman (1963), its theoretical

properties and usefulness in Bayesian analysis are shown by Ferguson (1973), Ferguson (1974),

Blackwell and MacQueen (1973), and Antoniak (1974). Especially, the DP is widely used as a prior

distribution of nonparametric Bayesian models (Lo (1984)).

The DPM model has become popular with the development of the Markov Chain Monte Carlo

(MCMC) methods such as Escobar (1994), Escobar and West (1995), MacEachern and Müller

(1998), Neal (2000), and Ishwaran and Zarepour (2000) for sampling posterior distributions with

DP priors. In economics, Hirano (2002) studies semiparametric Bayesian inference for a panel

model with a countable mixture of normally distributed errors with mixing weights drawn from

3

the DP. Griffin and Steel (2011) consider a serially correlated sequence of DPs with applications to

financial time series and GDP data, and Jensen and Maheu (2010) use the DP for the distribution

of errors that are multiplied by the volatility process in a stochastic volatility model. Taddy and

Kottas (2010) apply the DPM model for inference in quantile regressions.

In the DPM model, a mixing distribution drawn from the DP determines the sampling distribution F that is a member of the space F of all sampling distribution functions of a nonparametric

model. Therefore, the DP gives a distribution over the functional space F. Given a sampling

distribution function F , we consider a finite dimensional vector θ of functionals of F related to the

rare events that an expert has knowledge on. We elicit expert opinion about the distribution of θ.

Elicitation of expert information requires a careful design. Generally, it is easier for an expert to

think about probabilities or quantiles than moments. Kadane and Wolfson (1998) and Garthwaite,

Kadane, and O’Hagan (2005) discuss the issues in elicitation of expert opinions. Kadane, Chan,

and Wolfson (1996) study elicitation of a subjective prior for Bayesian unit root models. Chaloner

and Duncan (1983) develops a computer scheme to elicit expert information from a predictive

distribution.

In our approach, expert information is in the form of moment conditions on θ that the DP prior

may not satisfy. We combine the expert information and the DP prior by modifying the DP prior

to satisfy the moment conditions. Among all such modifications, we find the prior distribution

that is closest to the original DP prior in the Kullback-Leibler information criterion. The resulting prior distribution is given by exponentially tilting the DP prior along θ. We also provide a

Metropolis-Hastings algorithm to implement our approach to draw samples from the exponentially

tilted DP prior. Our method gives a simple way to combine the prior information from an econometrician and an expert by finding the least-informative prior given expert information, based on

an econometrician’s prior.

4

2

Expert information in nonparametric Bayesian inference

2.1

Dirichlet process mixture models

Consider the DPM model for n observations {yi }ni=1 given by

yi | ξi ∼ Kξi

ξi | G ∼ G

G ∼ P,

where X ∼ F means the distribution of X is F , Kξi = K(·|ξi ) is a distribution function with a

parameter ξi ∈ Ξ ⊂ Rd , and ξi is a random draw from a mixing measure G defined on a probability

space (Ξ, B), where B is a σ-field of subsets of Ξ. Let G be the space of all mixing measures on Ξ.

In the DPM model, a measure G ∈ G is thought to be an infinite dimensional parameter, and the

prior distribution of G is given by a Dirichlet process P = DP(αG0 ) with a concentration parameter

α > 0 and a base measure G0 ∈ G. The mean of DP(αG0 ) is G0 , so we can write

EG = G0 ,

(2.1)

where

Z

EG =

G P(dG).

(2.2)

d

The concentration parameter α is inversely related to the variance of DP(αG0 ), and we have G → G0

as α → ∞. Any finite dimensional distribution of DP(αG0 ) is a Dirichlet distribution, i.e. for an

arbitrary finite measurable partition (B1 , B2 , . . . , Br ) of Ξ, we have

(G(B1 ), G(B2 ), . . . , G(Br )) ∼ Dirichlet(αG0 (B1 ), αG0 (B2 ), . . . , αG0 (Br )),

and EG(B) = G0 (B) for all B ∈ B. From

Pr

j=1

αG0 (Bj ) = αG0 (Ξ) = α, α is also called a total

mass parameter. See Ferguson (1973) and Ferguson (1974) for the detailed development of the

5

DP prior and its properties, and Ghosh and Ramamoorthi (2003) and Hjort, Holmes, Müller, and

Walker (2010) for an overview of nonparametric Bayesian methods.

For notational simplicity, we omit the subscript i. The distribution function F (y|G) of y given

G can be written in a mixture form as

Z

F (y|G) =

K(y|ξ) G(dξ),

(2.3)

where K(y|ξ) is used as a mixing kernel. For convenience of exposition of our main idea, we also

assume that the probability density function of K(y|ξ) exists for all ξ. Then we can write the

sampling density of y given G as

Z

f (y|G) =

k(y|ξ) G(dξ),

(2.4)

where the mixing kernel k(y|ξ) is the probability density function of K(y|ξ). The DPM model

has become popular in Bayesian nonparametric modeling because of its flexibility compared to a

parametric model or a mixture model with a fixed number of mixing components. To generate a

posterior distribution for the DPM model, various sampling schemes were developed. Blackwell and

MacQueen (1973) develops Pólya urn schemes for DP priors of Ferguson (1973). Escobar (1994)

and Escobar and West (1995) provide generalized Pólya urn Gibbs samplers for the DPM model.

Neal (2000), Ishwaran and Zarepour (2000) and Ishwaran and James (2001) also provide alternative

sampling methods for a general class of priors that include DP priors.

In our framework, experts have information on a k-dimensional parameter of interest

θ = ϕ(F (y|G)),

(2.5)

where ϕ(·) is a vector of functionals of the sampling distribution F (y|G) of y. Note that the expected

value of θ can be written as

Z

Eθ =

ϕ(F (y|G)) P(dG).

6

The functional ϕ can be a linear functional such as moments of F (y|G), or a value of the distribution

function F (c|G) at a fixed point y = c. But it can also be a nonlinear functional such as quantiles,

inter-quantile ranges, hazard functions of F (y|G), or the quantities regarding the distribution of

the maximum or minimum of N samples. See Gelfand and Mukhopadhyay (1995) and Gelfand and

Kottas (2002) for more examples of ϕ and their properties. When ϕ is linear, from (2.3), we can

simplify θ to a mixture of ϕ(K(y|ξ)),

Z

θ=

ϕ(K(y|ξ)) G(dξ),

(2.6)

and using (2.1), the expected value of θ becomes

Z

Eθ =

ϕ(K(y|ξ)) G0 (dξ).

(2.7)

An important class of a linear functional ϕ is given by

Z

ϕ(F (y|G)) =

h(y)f (y|G) dy,

where h : R → Rk . Note that if h(y) = y p , then θ becomes a p-th order moment of F (y|G), and if

h(y) = I{y ≤ c}, where I(·) is an indicator function, then θ is the cumulative distribution function

evaluated at c. From (2.4), we can easily prove (2.6) by

Z

Z

θ=

h(y) k(y|ξ) G(dξ) dy

ZZ

=

h(y)k(y|ξ) dy G(dξ)

Z

= ϕ(K(y|ξ)) G(dξ).

But for a general nonlinear functional ϕ, (2.6) or (2.7) would not hold. As suggested by Kadane

and Wolfson (1998), elicitation of expert information should include questions about quantiles or

probabilities rather than moments, and we consider asking experts about quantiles (nonlinear ϕ)

7

and values of distribution functions at fixed points (linear ϕ) in this paper to illustrate our approach

with an example.

In our framework, expert’s information is in the form of l moment restrictions on θ such that

Eg(θ) = 0,

(2.8)

where g(·) is a function g : Rk → Rl . From (2.5), we can write (2.8) as

Eϕ̃(F (y|G)) = 0,

where ϕ̃ = g ◦ ϕ is an l-dimensional functional. If both g and ϕ are linear, ϕ̃ is a linear functional

and Eg(θ) is simplified to

ZZ

Eg(θ) =

ϕ̃(K(y|ξ)) G(dξ) P(dG)

Z

=

ϕ̃(K(y|ξ)) G0 (dξ).

(2.9)

This implies that Eg(θ) depends on G0 only, when ϕ̃ is linear and G is from DP(αG0 ).

Let

D(Q k P) =

Z

log(dQ/dP) dQ

be the Kullback-Leibler information criterion (KLIC, Kullback and Leibler (1951), White (1982))

from a measure Q to P. The goal of our paper is to merge the expert information (2.8) into the

DP prior P by finding a new measure Q that is closest to P in the KLIC by solving

min D(Q k P),

(2.10)

Q = {Q : EQ g(θ) = 0},

(2.11)

Q∈Q

where

R

and EQ g(θ) = g(θ) Q(dG) is the expectation of g(θ) with respect to Q. The solution Q∗ to (2.10)

8

is given by the Gibbs canonical density π ∗ given by

π∗ =

dQ∗

exp(λ0∗ g(θ))

= P

,

dP

E exp(λ0∗ g(θ))

(2.12)

where λ∗ is the solution of the unconstrained convex problem

min EP exp(λ0 g(θ)),

λ

(2.13)

and the minimum KLIC with Q∗ is given by

D(Q∗ k P) = − log(EP exp(λ0∗ g(θ))).

See Csiszár (1975) and Cover and Thomas (2006) for an information theoretic treatment of this

problem, and Kitamura and Stutzer (1997) for a discussion of the optimization problem in the

context of semiparametric estimation. Intuitively, the solution Q∗ gives the least informative Q ∈ Q

that satisfies (2.11). Geometrically speaking, the KLIC from Q to P in (2.10) is −1-divergence of

Amari (1982) among the class of α-divergence (|α| ≤ 1) from P to Q. In Amari’s terminology, we

get the minimizer Q∗ ∈ Q of −1-divergence by “−1-projection” of P onto the space Q, and the

projection is given by exponential tilting of P (Kitamura and Stutzer (1997)). In this paper, we

develop a practical method to find Q∗ and implement nonparametric Bayesian inference with Q∗

as a prior distribution.

2.2

Semiparametric models and geometric interpretation

In this section, we consider an extension of our approach to a semiparametric model defined with

a set of moment conditions. For simplicity of exposition, let us assume y ∼ G without mixing,

but the discussion below can be easily applied to a mixture model. Suppose we have the moment

conditions

EG m(y, θ) = 0,

9

Priors

Ḡ ⊂ G

Θ

G∈G

P∗

−1-projection

θ

−1-projection

Q0

Q∗

(Expert)

Gθ

G∗θ

P (DP)

Q

Figure 1: Geometry of exponential tilting of DP priors for semiparametric models. A random

draw G from DP(αG0 ) is projected onto the space Ḡ that satisfies moment conditions to get the

projection G∗θ . The projection is done by −1-projection of Amari (1982) by minimizing Amari’s

−1-divergence. This projection process defines a degenerated distribution P ∗ . Then we incorporate

expert information by applying −1-projection again to get Q∗ . The whole process is shown as the

solid path P → G → G∗θ → P ∗ → Q∗ .

where θ ∈ Θ is a parameter of interest, and EG is the expectation with respect to G. Consider the

space Gθ = {G ∈ G|EG m(y, θ) = 0} of distributions that satisfies the moment condition given θ,

and denote Ḡ = ∪θ∈Θ Gθ as the union of all Gθ . Then for each G ∈ Ḡ, we can define θ = {θ|G ∈ Gθ },

and θ = ∅ for G ∈ (G − Ḡ). We assume that θ is fully identified, i.e. Gθ ∩ Gθ0 = ∅ for θ 6= θ0 .

In our approach, the parameter vector θ is the object on which we elicit expert information. We

first begin with the DP prior DP(αG0 ). A random draw G ∈ G from DP(αG0 ) is projected onto

the sub-space Ḡ by solving

min min

D(G0 k G).

0

θ

G ∈Gθ

(2.14)

Note that the solution G∗θ to the problem minG0 ∈Gθ D(G0 k G) is given by

dG∗θ

exp(γ∗0 m(y, θ))

= G

,

dG

E exp(γ∗0 m(y, θ))

where γ∗ solves minγ EG exp(γ 0 m(y, θ)), and we also have D(G∗θ k G) = − log(EG exp(γ∗0 m(y, θ))).

Therefore, we can rewrite (2.14) as maxθ minγ log(EG exp(γ 0 m(y, θ))) or maxθ log(EG exp(γ∗0 m(y, θ))).

See Kitamura and Stutzer (1997) and Newey and Smith (2004) for the details of dual convexity of

10

this optimization. Geometrically, the solution G∗θ is derived by Amari’s −1-projection of G onto Ḡ.

This projection defines a degenerated distribution of P on Ḡ, and we denote it as P ∗ . Once we get

the prior P ∗ , then we can incorporate expert information by applying Amari’s −1-projection of P ∗

onto Q again.

Figure 1 illustrates the geometry of our approach with semiparametric models. The solid path

P → G → G∗θ → P ∗ → Q∗ represents the process of incorporating moment conditions and expert

information starting from the DP prior P. The first three steps (P → G → G∗θ → P ∗ ) of this

path represents incorporation of moment conditions to get the degenerated distribution P ∗ through

−1-projection (G → G∗θ ). The last step (P ∗ → Q∗ ) is incorporating expert information with another

−1-projection. Essentially, this approach uses the information in moment conditions followed by

expert information. We may call this method ET-ETD-DP (exponential tilting of exponentially

tilted draws of Dirichlet process).

Alternatively, we can use the method of Kitamura and Otsu (2011) for the first three steps

(P → G → G∗θ → P ∗ ). They start with a marginal prior distribution p(θ) on θ. Given θ, they

derive the conditional distribution p(G|θ) on Gθ by −1-projection of G ∼ DP(αG0 ) onto Gθ . This

approach would be useful when an econometrician has a marginal distribution of θ at hand. Once

we apply their method to get P ∗ , expert information can be incorporated (P ∗ → Q∗ ) as usual.

If Ḡ = G, it is not necessary to project G onto Ḡ. We can use the simple method P → Q0 → G

shown as the dashed path in Figure 1. This method applies expert information first (P → Q0 )

on the DP prior, then then draw G from Q0 . This method would not have to assume a marginal

distribution p(θ) of θ, and does not require the projection G → G∗θ of Kitamura and Otsu (2011).

We call this method ETDP (exponentially tilted Dirichlet process). Of course, we still can use

the method of Kitamura and Otsu (2011) by assuming p(θ) and use Q0 in place of P to get the

conditional distribution p(G|θ). But in this case, expert information is not fully incorporated in

the distribution of θ because of the marginal distribution p(θ) used in their method. Essentially,

this method uses expert information first to replace P with Q0 before implementing Kitamura and

Otsu (2011). We may call this method as ETD-ETDP (exponentially tilted draws of exponentially

tilted Dirichlet process).

11

In the following sections, we will focus on nonparametric models only, because the discussion

above regarding semiparametric models is conceptually straightforward and does not give additional

insights in presenting the main idea of this paper. We plan to consider semiparametric models in

a follow-up paper in detail.

3

Exponential tiling of Dirichlet process prior

3.1

Estimation of Gibbs measure

We find Q∗ by solving λ∗ from the sample version of (2.13) using simulated θ. To simulate θ, we

first generate G from DP(αG0 ) using the stick-breaking process of Sethuraman (1994). A random

measure G from the DP prior is discrete almost surely, and can be written in a countable sum,

G=

∞

X

wj δξj ,

(3.1)

j=1

where wj are random weights independent of ξj , and δξ is the distribution concentrated at a random

point ξ. An important consequence of the almost sure discreteness of G is that the samples from G

show clustering. If α is small, the clustering becomes more severe, and there will be a fewer number

of distinct clusters. The stick-breaking process defines the DP through (3.1) by

iid

ξj ∼ G0 ,

(3.2)

w1 = V1 , and

wj = Vj

j−1

Y

(1 − Vk ), for j = 2, 3, . . .

(3.3)

k=1

iid

where Vk ∼ Beta(1, α). Intuitively, the stick-breaking scheme starts with a stick with length 1,

Qj−1

and keeps cutting the fraction Vj of the remaining stick of length k=1 (1 − Vk ). Then wj are the

lengths of the pieces cut.

In fact, the discrete measure in (3.1) gives more general classes of random measures than DP

12

priors. By generalizing the probability distribution of Vk , the stick-breaking method can generate

other classes of priors such as the two parameter Beta process of Ishwaran and Zarepour (2000) and

the two-parameter Poisson-Dirichlet process (Pitman-Yor process) of Pitman and Yor (1997). The

priors from the generalized stick-breaking schemes applied to (3.1) are called the stick-breaking

priors which include DP priors as a simple special case. For posterior sampling with the stickbreaking priors, Ishwaran and Zarepour (2000) and Ishwaran and James (2001) develop generalized

Pólya urn Gibbs samplers and blocked Gibbs samplers. We consider DP priors only in this paper,

but the main idea can be easily extended to other stick-breaking priors.

Once we have G, we can calculate θ from (2.5). Since G defined in (3.1) is an infinite sum, we

would have to approximate G by the truncated stick-breaking method. The method is given by a

finite sum

GN̄ =

N̄

X

wj δξj ,

(3.4)

j=1

where wj and ξj are from (3.2) and (3.3) except that VN̄ = 1. Let PN̄ be the distribution of (3.4)

generated by the truncated stick-breaking method. Ishwaran and Zarepour (2002) shows that, as

N̄ → ∞,

ZZ

f (x)G(dx)PN̄ (dG) →

ZZ

f (x)G(dx)P(dG),

for any bounded continuous real function f . Ishwaran and Zarepour (2000) shows that the approximation error of using PN̄ can be substantial when α is large, and N̄ should increase as α

increases. When the mixing kernel K(·|ξ) is a normal distribution, Theorem 1 of Ishwaran and

R

James (2002) shows that the distance |fPN̄ (y) − fP (y)|dy between the marginal densities fPN̄ (y)

of y from PN̄ and fP (y) from P is proportional to exp(−(N̄ − 1)/α) for large N̄ . This implies that

N̄ should increase proportionally as α increases to get the same level of asymptotic approximation.

Considering their results, we choose N̄ = 20 × α or 50 if (20 × α) < 50 to make the approximation

error sufficiently small for all examples in this paper.

PN̄

N̄ M

N̄

Let {θm

}m=1 , where θm

= j=1 wmj ϕ(K(y|ξmj )), be M random samples of θ calculated from

N̄ M

N̄

GN̄ in (3.4). Consider a discrete probability measure {πm

}m=1 on the points {θm

} such that

P

M

N̄

N̄

N̄

N̄

0 ≤ πm

≤ 1 and m=1 πm

= 1. Although {πm

} and {θm

} depend on N̄ , we drop the superscript

13

N̄ for notational simplicity. Then we solve the maximum entropy problem

max

M

X

(π1 ,...,πM )

πm log(1/πm )

(3.5)

m=1

such that

M

X

πm = 1

m=1

M

X

πm g(θm ) = 0.

m=1

The solution {π̂m } to (3.5) is given by

π̂m

exp λ̂0M g(θm )

,

=P

M

0

m=1 exp λ̂M g(θm )

where λ̂M is an (l × 1) Lagrange multiplier vector of the constraints, and the multiplier can be

obtained from

λ̂M = argmin M −1

λ∈Λ

M

X

exp(λ0 g(θm )),

(3.6)

m=1

where Λ is a compact subset of Rl . The solution π̂m is the exponential tilting estimator of Kitamura

and Stutzer (1997). Exponential tilting estimators are a special case of the generalized empirical

PM

−1

0

likelihood estimators of Newey and Smith (2004). Let LN̄

M (λ) = M

m=1 exp(λ g(θm )) be the

estimating function from (3.6), and L(λ) = EP exp(λ0 g(θ)) be the objective function from (2.13).

a.s.

Noting that λ̂M = argminλ∈Λ LN̄

M (λ) and λ∗ = argminλ L(λ), we prove λ̂M → λ∗ as M, N̄ → ∞.

Assumption 3.1. The solution λ∗ = argminλ EP exp(λ0 g(θ)) of (2.13) is unique in Λ.

a.s.

Assumption 3.2. LN̄

M (λ) → L(λ) uniformly as M, N̄ → ∞.

Assumption 3.3. L(λ) is continuous on Λ.

Theorem 3.4. Let π̂ M be the empirical distribution of {π̂m }, and π ∗ be the change of measure in

14

a.s.

(2.12). If Assumptions 3.1-3.3 hold, as M, N̄ → ∞, we have λ̂M → λ∗ and

π̂ M ⇒ π ∗ .

Proof. We use the argument in Theorem 4.2.1. of Bierens (1994). We first show

a.s.

L(λ̂M ) → L(λ∗ ).

We have

N̄

0 ≤ L(λ̂M ) − L(λ∗ ) = L(λ̂M ) − LN̄

M (λ̂M ) + LM (λ̂M ) − L(λ∗ )

N̄

≤ L(λ̂M ) − LN̄

M (λ̂M ) + LM (λ∗ ) − L(λ∗ )

a.s.

≤ 2 sup |LN̄

M (λ) − L(λ)| → 0,

(3.7)

λ∈Λ

N̄

N̄

N̄

as M, N̄ → ∞, from LN̄

M (λ̂M ) ≤ LM (λ∗ ) and almost sure uniform convergence of LM (λ) to LM (λ).

a.s.

Since L(λ) is continuous and λ∗ is unique, we have λ̂M → λ∗ from (3.7).

3.2

Implementation with a Metropolis-Hastings algorithm

Let y = (y1 , . . . , yn ) be data and

Ψ(dG|y) ∝

n

Y

i=1

f (yi |G)P(dG),

be the density Ψ of the posterior distribution of G in the DPM model. With expert information,

the posterior becomes

Ψ∗ (dG|y) ∝

n

Y

i=1

f (yi |G)π ∗ (θ)P(dG) =

n

Y

i=1

f (yi |G)Q∗ (dG).

We use an MCMC method to get samples from the posterior distribution Ψ∗ . We implement our

approach with the independence chain Metropolis-Hastings (M-H) algorithm (Chib and Greenberg

15

(1994), Chib and Greenberg (1995)). Considering the relationship

Ψ∗ (dG|y)

= π∗ ,

Ψ(dG|y)

we use the posterior distribution Ψ with the original DP prior P as the proposal distribution of the

independence chain M-H algorithm. Then the acceptance of t-th sample G(t) in the MCMC chain

depends on the quantity

π ∗ (θ(t) )

,

π ∗ (θ(t−1) )

(3.8)

which is approximated by

exp λ̂0M g(θ(t) )

,

exp λ̂0M g(θ(t−1) )

with the estimated λ̂M from the previous section. The t-th MCMC sample of G(t) is accepted with

probability

exp λ̂0M g(θ(t) )

,

min 1,

exp λ̂0 g(θ(t−1) )

M

or G(t) = G(t−1) if rejected.

For sampling from the proposal distribution Ψ, we use the blocked Gibbs algorithm of Ishwaran

and James (2001). The implementation of our approach combines the blocked Gibbs algorithm

and the independence chain M-H algorithm above. The posterior simulation cycles through the

∗

∗ n

N̄

following steps. Let ξ N̄ = {ξj }N̄

= {wj }N̄

j=1 and w

j=1 from GN̄ in (3.4). Let ξ = {ξi }i=1 be n

i.i.d. samples of ξi∗ ∼ GN̄ which are used to draw yi |ξi∗ ∼ Kξi∗ . Since GN̄ is discrete, ξi∗ are drawn

from the elements of ξ N̄ . Therefore, some ξi∗ may be same and some elements of ξ N̄ may not be

drawn at all. For each j = 1, . . . , N̄ , define the set Ij = {i : ξi∗ = ξj } of the indices of ξi∗ that is

equal to ξj . When ξj was not drawn at all, then Ij = ∅.

1. Updating ξ N̄ given wN̄ , ξ ∗ , and y: For j = 1, 2, . . . , N̄ , simulate ξj ∼ G0 for all j with Ij = ∅,

16

and draw ξj for Ij 6= ∅ from the density

p(ξj |ξ ∗ , wN̄ , y) ∝ G0 (dξj )

Y

i∈Ij

k(yi |ξj ),

where k is the density of the kernel function K given in (2.3) and (2.4).

2. Updating ξ ∗ given ξ N̄ , wN̄ , and y: Draw (ξi∗ |ξ N̄ , wN̄ , y) independently from

ξi∗ |(ξ N̄ , wN̄ , y) ∼

N̄

X

wj δξj .

j=1

3. Updating wN̄ given ξ ∗ , ξ N̄ , and y: Draw w1 = V1 , and

wj = Vj

j−1

Y

(1 − Vk ), for j = 2, 3, . . . , N̄ − 1

k=1

iid

where VN̄ = 1, Vk ∼ Beta(1 + Card(Ik ), α +

PN̄

k+1

Card(Ik )) for k = 1, . . . , N̄ − 1, and

Card(Ik ) represents the cardinality of the set Ik .

4. Calculate the t-th MCMC sample g(θ(t) ) from (2.5) replacing G with GN̄ defined from

(ξ N̄ , wN̄ ). The t-th sample(ξ ∗ , ξ N̄ , wN̄ ) is accepted with probability

exp λ̂0M g(θ(t) )

,

min 1,

exp λ̂0 g(θ(t−1) )

M

or replaced by the previous sample.

In Step 1, the updating of ξ N̄ is simple if the base measure G0 and the kernel function K are

conjugate. For this reason, we use normal-gamma conjugate distributions for our examples and

empirical applications. Step 1-3 are the original blocked Gibber sampler, and our approach simply

adds Step 4 for exponential tilting.

17

4

Dirichlet process mixture of normal distributions

To demonstrate our theory, we consider the DPM model with the family of normal distributions as

a kernel function. A normal mixture model is very flexible and popularly used because a locationscale mixture of normal distributions can approximate any distribution on the real line (Lo (1984),

Escobar and West (1995)) or on Rd (Müller, Erkanli, and West (1996)). We define the kernel

function

K(y|ξ) = Φ(y|µ, 1/τ ),

where ξ = (µ, τ ) is defined on Ξ = R × R+ , and Φ(·|µ, 1/τ ) is a normal distribution function

with mean µ and variance 1/τ , where τ is a precision parameter. The prior DP(αG0 ) on G is

given by a concentration parameter α and a base measure G0 on (µ, τ ) ∈ R × R+ distributed as a

normal-gamma distribution G0 = NG(µ0 , n0 , ν0 , σ02 ) in which

µ|τ ∼ N(µ0 , (n0 τ )−1 ),

and

τ ∼ Γ(ν0 /2, ν0 σ02 /2),

where Γ(a, b) is a gamma distribution with a shape parameter a and a rate parameter b. Therefore,

DP(αG0 ) is defined with 5 parameters (α, µ0 , n0 , ν0 , σ02 ). The normal-gamma distribution is a

convenient choice for the DPM of normal distribution because of its conjugacy with the normal

kernel function. Also, it is easy to generalized this example to consider hyper-parameters by randomizing the 5 parameters. A popular choice is to put a gamma hyper-prior on α, n0 , σ0−2 , and a

normal hyper-prior on µ0 . Note that α represents the concentration of G around G0 , n0 is related

to the concentration of µ around µ0 of G0 , and ν0 is for the concentration of τ in G0 . Therefore,

(α, n0 , ν0 ) jointly determines the effective sample size of the prior distribution, and smaller values

of these parameters imply more diffuse priors.

In our numerical example, we assume a mixture of two normal distributions as the true distri2

2

bution. We set n = 20 and generate i.i.d. data {yi }20

i=1 from the mixture of N(10, 5 ) and N(20, 5 )

18

Posterior Density of θ

Posterior CDF of θ

70

1.0

Expert

60

Expert

0.8

50

Prior (DP)

0.6

40

DP

DP

30

0.4

20

0.2

Prior (DP)

10

0

0.0

0.00

0.01

0.02

0.03

θ

0.04

0.05

0.06

0.00

0.01

0.02

0.03

θ

0.04

0.05

0.06

Figure 2: The left panel shows the prior distribution of θ from DP(αG0 ) (Prior (DP)), the posterior

distribution of θ with DP(αG0 ) (DP), and the posterior distribution of θ with expert information

(Expert). The right panel shows the cumulative distribution functions of θ.

with equal weights. Specifically, y1 , . . . , y20 are calculated from

yi = Wi Y1i + (1 − Wi )Y2i ,

(4.1)

with Wi = 1 or 0 with an equal probability and Y1i ∼ N(10, 52 ) and Y2i ∼ N(20, 52 ). We set the

DP prior DP(αG0 ) with α = 10, µ0 = 15, n0 = 1, ν0 = 6, σ02 = 20. Based on the discussion in the

previous section, we use N̄ = 20 × α = 200 for GN̄ to approximate G. The parameter of interest θ

is the left-tail probability θ = P{yi ≤ 0} given G, which would represent the probability of default

if yi is an equity value. For estimation of λ̂M and π̂ M , we first set M = 1, 000, 000 and simulate

N̄ M

{θm

}m=1 by using the truncated stick-breaking process in (3.4). The dashed line “Prior (DP)” in

the first panel in Figure 2 shows the prior density of θ from DP(αG0 ).

We assume that the expert information is P{θ ≤ 0.01} = 50% and P{θ ≤ 0.005} = 25%. This

means g(θ) = (I{θ ≤ 0.01} − 0.5, I{θ ≤ 0.005} − 0.25)0 for the moment condition Eg(θ) = 0. To

implement the exponential tilting, we find the Lagrange multiplier used in the maximum entropy

N̄ M

problem in (3.6) to get λ̂0M = (1.010, 1.285) from the simulated {θm

}m=1 . The positive values of

λ̂M imply applying higher weights on the events I{θ ≤ 0.01} and I{θ ≤ 0.005} in the moment

19

Predictive Density of y

Predictive CDF of y

0.06

1.0

0.05

0.8

Data

0.04

0.6

Data

0.03

0.4

0.02

DP

0.2

0.01

DP

Expert

Expert

0.00

0.0

0

10

20

y

30

40

0

10

20

y

30

40

R

R

Figure 3: Predictive density φ(yi |G)Ψ∗ (dG|y) and cumulative distribution Φ(yi |G)Ψ∗ (dG|y)

of yi , where Ψ∗ (dG|y) is the posterior distribution of G, with expert information (Expert) and

without expert information (DP). The vertical lines on the left panel represent the values of n = 20

samples, and the step function (Data) on the right panel is the CDF of the samples.

conditions. Therefore, the exponential tilting of DP(αG0 ) would tilt the prior distribution of θ

toward zero, which tells that the expert information emphasizes that θ should be smaller than what

DP(αG0 ) would generate. Consequently, the prior that complies with the expert information favors

distributions with smaller left-tail probabilities than DP(αG0 ).

Given the simulated data from (4.1), and with the expert information, we draw samples from the

posterior distribution. For the MCMC procedure, we use 101, 000 MCMC iterations, discard the

first 1, 000 iterations for burn-in, and use every 50th state for thinning after the burn-in period. On

the left panel in Figure 2, the line labeled “Expert” represents the posterior density with the expert

information and the line with “DP” is the posterior density from the ordinary DPM model without

the expert information. The right panel shows the distribution functions of the distributions on the

left panel. We can see that the posterior distribution of θ is tilted toward zero.

R

Figure 6 shows the predictive density φ(yi |G)Ψ∗ (dG|y) of yi , and the right panel shows the

predictive distribution function of yi , with expert information (Expert) and without expert information (DP). The vertical lines on the left panel represent the values of n = 20 samples and the

curved labeled as Data is the density of the samples. The step function (Data) on the right panel is

20

Posterior Density of H120

Posterior CDF of H120

5

1.0

Expert

4

0.8

DP

3

0.6

2

0.4

Expert

DP

1

0.2

0

0.0

0.0

0.1

0.2

H120

0.3

0.4

0.0

0.1

0.2

H120

0.3

0.4

Figure 4: Posterior distribution of the probability H120 to observe one default event (yi < 0) out of

20 samples.

the CDF of the samples. The predictive density with the expert information (Expert) has slightly

thinner left-tail than the the predictive density without the expert information (DP). This coincides

with our intuition that the positive λ̂M implies the prior with the expert information favors the

distributions with small left-tail probabilities.

An interesting quantity in an analysis of a rare event is the number of occurrences of the rare

event given a sample size. For the probability of default θ, the probability that we observe k defaults

out of n samples is given by the binomial distribution

Hkn

n k

=

θ (1 − θ)k .

k

(4.2)

We can study the posterior distribution of Hkn given n and k. For the probability H120 of

observing one default out of 20 samples, in Figure 4, we calculate the density (left panel) and

the distribution functions (right panel) of H120 with and without the expert information. Since

the expert information favors the distributions with small left-tail probabilities, we can see that

the distribution of the probability H120 with the expert information (Expert) is tilted toward zero

compared to the distribution without the expert information (DP).

21

Company

Aetna Inc

Archer-Daniels-Midland Co

Chubb Corp/The

Humana Inc

Kohl’s Corp

Marsh & McLennan Cos Inc

Mylan Inc/PA

Northrop Grumman Corp

Return (%)

Company

−12.1

−2.1

16.3

−3.8

−9.0

3.3

−13.4

−8.0

Raytheon Co

Republic Services Inc

Staples Inc

Stryker Corp

Symantec Corp

Thermo Fisher Scientific Inc

WellPoint Inc

Zimmer Holdings Inc

Return (%)

13.5

−14.2

−17.4

−6.1

−25.9

−19.4

−19.0

1.8

Table 1: Annual returns ((p1 − p0 )/p0 ) of 16 companies in a homogeneous portfolio from Jun

30, 2011 to Jun 29, 2012. Max= 16.3%, Min= −25.9%, Mean= −7.21%, and Median= −8.53%,

Standard deviation= 11.75%.

5

Application to tail probabilities of stock returns

We consider inference of tail probabilities for annual stock returns of a homogeneous portfolio of

high grade companies. We build the homogeneous portfolio from the companies included in the S&P

500 index. Among the 500 companies, we select the companies with the following characteristics.

Market beta: 0.8 ∼ 1, Price to book value per share: 1 ∼ 4, and Market Capitalization: $10 ∼

30MM. Using data on 6/30/2011 from Bloomberg, we find that there are 16 companies that belong

to all of these categories. Their returns are calculated by (p1 − p0 )/p0 , where p0 is the stock prices

on 6/30/2011 and p1 is the stock prices on 6/29/2012. The maximum return is 16.3%, the minimum

is −25.9%, the mean is −7.21%, the median is −8.53%, and the standard deviation of the returns

is 11.75%. All observations are assumed to be i.i.d. given a mixing measure G. We could assume

correlation in data, but it does not add much insight for illustration of our approach. Also, the

homogeneity of the portfolio would reduce the concerns of misspecification such as a fixed effect of

an individual asset.

Our inference is focused on the rare event that the annual return falls below −30%. Let θ =

P{yi < −30%} be the probability of the rare event given G. We assume that a hypothetical expert

has the following information. P{θ < 0.02} = 50% and P{θ < 0.01} = 25%. As before, we use

the DPM of normal distributions. The DP prior DP(αG0 ) used for this empirical application is

from α = 10, and the normal-gamma base measure G0 = NG(µ0 , n0 , ν0 , σ02 ) with µ0 = 0, n0 = 1,

22

Posterior Density of θ

Posterior CDF of θ

25

1.0

DP

20

15

0.8

0.6

Expert

10

Expert

0.4

DP

5

0.2

0

0.0

0.00

0.02

0.04

θ

0.06

0.08

0.00

0.02

0.04

θ

0.06

0.08

Figure 5: The left panel shows the posterior distribution of θ with DP(αG0 ) (DP), and the posterior

distribution of θ with expert information (Expert). The right panel shows the posterior cumulative

distribution functions of θ.

ν0 = 6, σ02 = 100. We also set N̄ = 20 × α = 200 for GN̄ , and M = 1, 000, 000 for estimation of λ̂M

as in the simulation study of the previous section. We find λ̂0M = (0.535, 1.315) from (3.6).

For the simulation of the posterior distribution, we use 101, 000 MCMC iterations, 1, 000 iterations for burn-in, and every 50th state for thinning after the burn-in as before. As shown in Figure

5, the posterior density and distribution functions of θ with the expert information (Expert) shows

tilting toward zero compared to the distributions without the expert information (DP). This tells

us that the left-tail probabilities are smaller than those implied by the DP prior in the expert’s

opinion.

The predict density and distribution function of yi shown in Figure 6 also show the results that

support the argument above. The predictive distribution with the expert information (Expert) has

a slightly thinner left-tail than the one without the expert information (DP). Finally, in Figure 7,

we show the posterior distribution of the probability H116 , as defined in (4.2), to observe one asset

with the annual return below −30% out of 16 assets. From the results, it is predicted that it is more

likely to have small values of H116 with the expert information (Expert) than without the expert

information (DP).

23

Predictive Density of y

Predictive CDF of y

1.0

0.035

Data

0.030

0.8

Data

0.025

0.6

0.020

0.015

0.010

0.4

DP

0.2

0.005

DP

Expert

Expert

0.0

0.000

-40

-30

-20

-10

y

0

10

20

30

-40

-30

-20

-10

y

0

10

20

30

R

R

Figure 6: Predictive density φ(yi |G)Ψ∗ (dG|y) and cumulative distribution Φ(yi |G)Ψ∗ (dG|y) of

yi , where Ψ∗ (dG|y) is the posterior distribution of G.

Posterior Density of H116

Posterior CDF of H116

8

1.0

0.8

6

0.6

4

0.4

Expert

2

Expert

0.2

DP

DP

0

0.0

0.0

0.1

0.2

H116

0.3

0.4

0.0

0.1

0.2

H116

0.3

0.4

Figure 7: Posterior distributions of the probability to observe one asset with the return below −30%

out of 16 assets (H116 ) with expert information (Expert) and without expert information (DP).

24

6

Conclusion

Our method deals with the problem of misspecification and the scarcity of data information for inference of rare events by using nonparametric models and incorporating expert information. Elicitation

of expert information can be easily done with carefully designed interviews, and the implementation

of our method can be done with the straightforward M-H method we propose. Therefore, when

historical data are scarce and it is hard to find a reasonable parametric model, our approach could

be an attractive choice.

New nonparametric Bayesian methods are developing rapidly. There is a growing interest in

considering more general priors than the Dirichlet process such as the general stick-breaking processes. Doss (1994) considers a mixture of Dirichlet processes, and Teh, Jordan, Beal, and Blei

(2006) provide an extension to hierarchical Dirichlet processes. We may generalize our method to a

hierarchical Dirichlet processes. Also, there are new MCMC methods for the DPM model such as

the retrospective MCMC sampling of Papaspiliopoulos and Roberts (2008), and the slice sampling

of Walker and Mallick (1997) which is generalized in Kalli, Griffin, and Walker (2011) to general

stick-breaking processes. In a future study, we can consider these methods to improve the MCMC

method presented in this paper and compare the performances of the alternative MCMC schemes.

We also believe that our method can be applied to semiparametric models naturally. Semiparametric models defined by a set of moment conditions are popular in economics and finance. The

moment conditions provide information which reduces the dimension of model space. The resulting

method would merge expert information, moment information, and econometrician’s priors with

data information all together. Another interesting topic is to consider covariates in our approach.

For example, we can consider implementing our method to Bayesian density regressions in which

DP priors can depend on covariates.

25

References

Amari, S. I. (1982): “Differential Geometry of Curved Exponential Families - Curvatures and

Information Loss,” Annals of Statistics, 10(2), 357–385.

Antoniak, C. (1974): “Mixtures of Dirichlet processes with applications to Bayesian nonparametric problems,” Annals of Statistics, 2(6), 1152–1174.

Bierens, H. (1994): Topics in Advanced Econometrics: Estimation, Testing, and Specification of

Cross-Section and Time Series Models. Cambridge University Press.

Blackwell, D., and J. MacQueen (1973): “Ferguson distributions via Pólya urn schemes,”

Annals of Statistics, 1(2), 353–355.

Chaloner, K., and G. Duncan (1983): “Assessment of a beta prior distribution: PM elicitation,”

The Statistician, 32(1/2), 174–180.

Chib, S., and E. Greenberg (1994): “Bayes inference in regression models with ARMA (p, q)

errors,” Journal of Econometrics, 64(1-2), 183–206.

(1995): “Understanding the Metropolis-Hastings algorithm,” The American Statistician,

49(4), 327–335.

Cover, T., and J. Thomas (2006): Elements of Information Theory. John Wiley & Sons, 2nd

edn.

Csiszár, I. (1975): “I-divergence geometry of probability distributions and minimization problems,” Annals of Probability, 3, 146–158.

Doss, H. (1994): “Bayesian nonparametric estimation for incomplete data via successive substitution sampling,” Annals of Statistics, 22(4), 1763–1786.

Escobar, M. (1994): “Estimating normal means with a Dirichlet process prior,” Journal of the

American Statistical Association, 89(425), 268–277.

26

Escobar, M., and M. West (1995): “Bayesian density estimation and inference using mixtures,”

Journal of the American Statistical Association, 90(430), 577–588.

Ferguson, T. (1973): “A Bayesian analysis of some nonparametric problems,” Annals of Statistics,

1(2), 209–230.

(1974): “Prior distributions on spaces of probability measures,” Annals of Statistics, 2(4),

615–629.

Freedman, D. (1963): “On the asymptotic behavior of Bayes’ estimates in the discrete case,”

Annals of Mathematical Statistics, 34(4), 1386–1403.

Garthwaite, P., J. Kadane, and A. O’Hagan (2005): “Statistical methods for eliciting probability distributions,” Journal of the American Statistical Association, 100(470), 680–701.

Gelfand, A., and A. Kottas (2002): “A computational approach for full nonparametric Bayesian

inference under Dirichlet process mixture models,” Journal of Computational and Graphical

Statistics, 11(2), 289–305.

Gelfand, A., and S. Mukhopadhyay (1995): “On nonparametric Bayesian inference for the

distribution of a random sample,” Canadian Journal of Statistics, 23(4), 411–420.

Ghosh, J., and R. Ramamoorthi (2003): Bayesian nonparametrics. Springer Verlag.

Griffin, J., and M. Steel (2011): “Stick-breaking autoregressive processes,” Journal of Econometrics, 162(2), 383–396.

Hirano, K. (2002): “Semiparametric Bayesian inference in autoregressive panel data models,”

Econometrica, 70(2), 781–799.

Hjort, N. L., C. Holmes, P. Müller, and S. G. Walker (eds.) (2010): Bayesian Nonparametrics. Cambridge University Press.

Ishwaran, H., and L. James (2001): “Gibbs sampling methods for stick-breaking priors,” Journal

of the American Statistical Association, 96(453), 161–173.

27

(2002): “Approximate Dirichlet Process computing in finite normal mixtures,” Journal of

Computational and Graphical Statistics, 11(3), 508–532.

Ishwaran, H., and M. Zarepour (2000): “Markov chain Monte Carlo in approximate Dirichlet

and beta two-parameter process hierarchical models,” Biometrika, 87(2), 371–390.

(2002): “Exact and approximate sum representations for the Dirichlet process,” Canadian

Journal of Statistics, 30(2), 269–283.

Jensen, M., and J. Maheu (2010): “Bayesian semiparametric stochastic volatility modeling,”

Journal of Econometrics, 157(2), 306–316.

Kadane, J., N. Chan, and L. Wolfson (1996): “Priors for unit root models,” Journal of

Econometrics, 75(1), 99–111.

Kadane, J., and L. Wolfson (1998): “Experiences in elicitation,” The Statistician, 47(1), 3–19.

Kalli, M., J. Griffin, and S. Walker (2011): “Slice sampling mixture models,” Statistics and

computing, 21(1), 93–105.

Kiefer, N. (2009): “Default estimation for low-default portfolios,” Journal of Empirical Finance,

16(1), 164–173.

(2010): “Default estimation and expert information,” Journal of Business and Economic

Statistics, 28(2), 320–328.

Kitamura, Y., and T. Otsu (2011): “Bayesian analysis of moment condition models using nonparametric priors,” Working Paper, Yale University.

Kitamura, Y., and M. Stutzer (1997): “An information-theoretic alternative to generalized

method of moments estimation,” Econometrica, 65(4), 861–874.

Kullback, S., and R. Leibler (1951): “On information and sufficiency,” Annals of Mathematical

Statistics, 22(1), 79–86.

28

Lo, A. (1984): “On a class of Bayesian nonparametric estimates: I. Density estimates,” Annals of

Statistics, 12(1), 351–357.

MacEachern, S., and P. Müller (1998): “Estimating mixture of Dirichlet process models,”

Journal of Computational and Graphical Statistics, 7(2), 223–238.

Müller, P., A. Erkanli, and M. West (1996): “Bayesian curve fitting using multivariate normal

mixtures,” Biometrika, 83(1), 67–79.

Neal, R. (2000): “Markov chain sampling methods for Dirichlet process mixture models,” Journal

of Computational and Graphical Statistics, 9(2), 249–265.

Newey, W. K., and R. J. Smith (2004): “Higher order properties of GMM and generalized

empirical likelihood estimators,” Econometrica, 72(1), 219–255.

Papaspiliopoulos, O., and G. Roberts (2008): “Retrospective Markov chain Monte Carlo methods for Dirichlet process hierarchical models,” Biometrika, 95(1), 169–186.

Pitman, J., and M. Yor (1997): “The two-parameter Poisson-Dirichlet distribution derived from

a stable subordinator,” Annals of Probability, 25(2), 855–900.

Sethuraman, J. (1994): “A constructive definition of Dirichlet priors,” Statistica Sinica, 4, 639–

650.

Taddy, M., and A. Kottas (2010): “A Bayesian nonparametric approach to inference for quantile

regression,” Journal of Business and Economic Statistics, 28(3), 357–369.

Teh, Y., M. Jordan, M. Beal, and D. Blei (2006): “Hierarchical Dirichlet processes,” Journal

of the American Statistical Association, 101(476), 1566–1581.

Walker, S., and B. Mallick (1997): “Hierarchical generalized linear models and frailty models

with Bayesian nonparametric mixing,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 59(4), 845–860.

White, H. (1982): “Maximum likelihood estimation of misspecified models,” Econometrica, 50(1),

1–26.

29