CEPA Working Paper No. 15-14

Experimental Evidence

Eric Bettinger

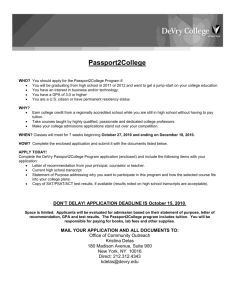
