Introductory Seminar on Research CIS5935 Fall 2008 Ted Baker


Outline

• Introduction to myself

– My past research

– My current research areas

• Technical talk: RT MP EDF Scheduling

– The problem

– The new results

– The basis for the analysis

– Why a better result might be possible

Past Research

• Relative computability

– Relativizations of the P=NP? question (1975-1979)

• Algorithms

– N-dim pattern matching (1978)

– extended LR parsing (1981)

• Compilers & PL implementation

– Ada compiler and runtime systems (1979-1998)

• Real-time runtime systems, multi-threading

– FSU Pthreads & other RT OS projects (1985-1998)

• Real-time scheduling & synch.

– Stack Resource Policy (1991)

– Deadline Sporadic Server (1995)

• RT Software standards

– POSIX, Ada (1987-1999)

Recent/Current Research

• Multiprocessor real-time scheduling (1998-…)

– how to guarantee deadlines for task systems scheduled on multiprocessors?

with M. Cirinei & M. Bertogna (Pisa), N. Fisher & S. Baruah (UNC)

• Real-time device drivers (2006-…)

– how to support schedulability analysis with an operating system?

– how to get predictable I/O response times?

with A. Wang & Mark Stanovich (FSU)

A Real-Time Scheduling Problem

Will a set of independent sporadic tasks miss any deadlines if scheduled using a global preemptive Earliest-Deadline-First (EDF) policy on a set of identical processors?

Background & Terminology

• job = schedulable unit of computation, with

– arrival time

– worst-case execution time (WCET)

– deadline

• task = sequence of jobs

• task system = set of tasks

• independent tasks: can be scheduled without consideration of interactions, precedence, coordination, etc.

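Sporadic Task τ_i

• T_i = minimum inter-arrival time

• C_i = worst-case execution time

• D_i = relative deadline

[Figure: a job of τ_i is released, executes for at most C_i, and completes within its scheduling window of length D_i, which ends at the job's deadline; the next release comes at least T_i after the previous one.]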
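The task model above can be written down as a small data structure. This is a minimal Python sketch; the class and function names (`SporadicTask`, `Job`, `release_jobs`) are hypothetical and chosen only for illustration. It assumes each job requests its full WCET and checks only the sporadic constraint that consecutive releases are at least T apart.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SporadicTask:
    C: float  # worst-case execution time (WCET)
    D: float  # relative deadline
    T: float  # minimum inter-arrival time

    def __post_init__(self):
        if self.C <= 0 or self.D <= 0 or self.T <= 0:
            raise ValueError("C, D and T must all be positive")


@dataclass(frozen=True)
class Job:
    task: int        # index of the task that released the job
    arrival: float   # release time
    wcet: float      # execution requirement, here taken to be the full WCET
    deadline: float  # absolute deadline = arrival + D


def release_jobs(task_index, task, arrivals):
    """Build the jobs of one sporadic task from a sorted list of release times,
    rejecting releases that come closer together than T."""
    jobs = []
    for k, r in enumerate(arrivals):
        if k > 0 and r - arrivals[k - 1] < task.T:
            raise ValueError("releases closer together than the minimum inter-arrival time")
        jobs.append(Job(task_index, r, task.C, r + task.D))
    return jobs
```

For example, `release_jobs(0, SporadicTask(C=2, D=5, T=7), [0, 7, 20])` gives jobs with absolute deadlines 5, 12 and 25; releases may be farther apart than T, but never closer.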

Multiprocessor Scheduling

• m identical processors (vs. uniform/hetero.)

• shared memory (vs. distributed)

• preemptive (vs. non-preemptive)

• on-line (vs. off-line)

• EDF

– earlier deadline higher priority

• global (vs. partitioned)

– single queue

– tasks can migrate between processors
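The dispatching rule described by this list (one shared queue, earlier deadline means higher priority, m identical processors, free migration) can be sketched at a single scheduling instant. This Python sketch is illustrative only; `global_edf_select` is a hypothetical name, and breaking deadline ties by job id is an arbitrary choice made here.

```python
import heapq


def global_edf_select(ready_jobs, m):
    """Pick the jobs that run now under global preemptive EDF.

    ready_jobs: (absolute_deadline, job_id) pairs for every ready job, held in
    one queue shared by the whole platform. m: number of identical processors.
    Returns up to m job ids with the earliest deadlines. Any other ready job is
    preempted or waits, and may later resume on any processor (migration).
    Deadline ties are broken by job_id, an arbitrary choice in this sketch.
    """
    return [job_id for _, job_id in heapq.nsmallest(m, ready_jobs)]
```

For example, with ready jobs `[(10, "a"), (4, "b"), (7, "c")]` and m = 2, jobs `"b"` and `"c"` run and `"a"` waits.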

Questions

• Is a given system schedulable by global-EDF?

• How good is global-EDF at finding a schedule?

– How does it compare to optimal?

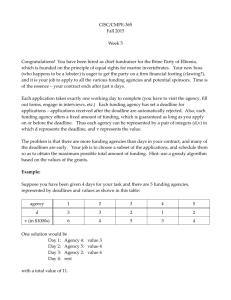

Schedulability Testing

Global-EDF schedulability for sporadic task systems can be decided by brute-force state-space enumeration (in exponential time) [Baker, OPODIS 2007], but we don’t have any practical algorithm.

We do have several practical sufficient conditions.

Sufficient Conditions for Global EDF

• Varying degrees of complexity and accuracy

• Examples:

• Goossens, Funk, Baruah: density test (2003)

• Baker: analysis of λ-busy interval (2003)

• Bertogna, Cirinei: iterative slack time estimation (2007)

• Difficult to compare quality, except by experimentation

• All tests are very conservative

Density Test for Global EDF

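Sporadic task system τ is schedulable on m unit-capacity processors if

$$\delta_{\mathrm{sum}}(\tau) \le m - (m-1)\,\delta_{\max}(\tau)$$

where

$$\delta_i = \frac{C_i}{\min(D_i, T_i)}, \qquad \delta_{\mathrm{sum}}(\tau) = \sum_{i=1}^{n} \delta_i, \qquad \delta_{\max}(\tau) = \max_{1 \le i \le n} \delta_i$$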
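The density test is direct arithmetic on the task parameters. A minimal Python sketch, with tasks given as hypothetical `(C, D, T)` tuples; as a sufficient condition, a `False` result means only that this test cannot certify the system, not that it misses deadlines.

```python
def density(C, D, T):
    """delta_i = C_i / min(D_i, T_i)."""
    return C / min(D, T)


def density_test(tasks, m):
    """Sufficient test for global EDF on m unit-capacity processors:
    delta_sum <= m - (m - 1) * delta_max. tasks: iterable of (C, D, T)."""
    deltas = [density(C, D, T) for C, D, T in tasks]
    delta_sum = sum(deltas)
    delta_max = max(deltas)
    return delta_sum <= m - (m - 1) * delta_max
```

For example, tasks (1, 4, 4), (1, 4, 4), (2, 5, 10) on m = 2 have δ_sum = 0.9 and δ_max = 0.4, so the test requires 0.9 ≤ 1.6 and passes.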

A more precise load metric

$$\mathrm{DBF}(\tau_i, t) = \max\left(0,\ \left(\left\lfloor \frac{t - D_i}{T_i} \right\rfloor + 1\right) C_i\right)$$

= maximum demand of jobs of τ_i that arrive in and have deadlines within any interval of length t

$$\mathrm{LOAD}(\tau) = \max_{t > 0} \frac{\sum_{i=1}^{n} \mathrm{DBF}(\tau_i, t)}{t}$$

= maximum fraction of processor demanded by jobs of τ that arrive in and have deadlines within any time interval
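DBF and LOAD can be evaluated numerically. A Python sketch under one stated assumption: the maximum over t > 0 is searched only up to a caller-chosen `horizon` (a hypothetical parameter), which must be large enough to contain the maximizing t. The sum of DBFs only steps up at t = D_i + k·T_i and is constant in between, so the ratio can only peak at those step points.

```python
import math


def dbf(C, D, T, t):
    """DBF(tau_i, t) = max(0, (floor((t - D) / T) + 1) * C)."""
    return max(0, (math.floor((t - D) / T) + 1) * C)


def load(tasks, horizon):
    """LOAD(tau), searched over the step points t = D_i + k * T_i <= horizon.

    Between step points the numerator is constant while t grows, so the ratio
    falls; only the step points need checking. horizon must be >= some D_i.
    """
    points = set()
    for C, D, T in tasks:
        t = D
        while t <= horizon:
            points.add(t)
            t += T
    return max(sum(dbf(C, D, T, t) for C, D, T in tasks) / t for t in points)
```

For tasks (1, 2, 4) and (2, 5, 5), the largest ratio up to t = 20 occurs at t = 10, where the total demand is 3 + 4 = 7, giving LOAD = 0.7 (slightly above the utilization 0.65). Integer parameters avoid floating-point drift in `t += T`.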

Rationale for DBF

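[Figure: jobs of τ_i, each needing C_i, released T_i apart within an interval of length t, each with relative deadline D_i]

$$\mathrm{DBF}(\tau_i, t) = \max\left(0,\ \left(\left\lfloor \frac{t - D_i}{T_i} \right\rfloor + 1\right) C_i\right)$$

Single-processor analysis uses a maximal busy interval, which has no “carried-in” jobs.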
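The rationale can be checked by brute force: count only jobs that both arrive in and have deadlines within a window (no carried-in jobs), under the densest sporadic release pattern, and compare with the DBF formula. A Python sketch assuming integer parameters and strictly periodic releases at 0, T, 2T, ...; the helper names are hypothetical.

```python
import math


def dbf(C, D, T, t):
    """DBF(tau_i, t) = max(0, (floor((t - D) / T) + 1) * C)."""
    return max(0, (math.floor((t - D) / T) + 1) * C)


def demand_in_window(C, D, T, start, length):
    """Work of jobs released at 0, T, 2T, ... that arrive in [start, start + length]
    and also have deadlines within it. Jobs released before 'start' are ignored."""
    end = start + length
    total = 0
    r = 0
    while r <= end:
        if r >= start and r + D <= end:
            total += C
        r += T
    return total


def check_dbf(C, D, T, max_length):
    """Compare DBF with the worst window demand for integer lengths 0..max_length.
    Integer offsets 0..T-1 cover every alignment of the window with the releases."""
    return all(
        max(demand_in_window(C, D, T, s, t) for s in range(T)) == dbf(C, D, T, t)
        for t in range(max_length + 1)
    )
```

The worst case is the window that starts exactly at a release; the check covers both D_i ≤ T_i and D_i > T_i.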

Load-based test: Theorem 3

Sporadic task system τ is global-EDF schedulable on m unit-capacity processors if

$$\mathrm{LOAD}(\tau) \le \mu - (\lceil \mu \rceil - 1)\,\delta_{\max}(\tau), \qquad \text{where } \mu = m - (m-1)\,\delta_{\max}(\tau)$$
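Once LOAD(τ) and δ_max(τ) are known, the Theorem 3 condition is a one-line check. A Python sketch; `theorem3_test` is a hypothetical name, and LOAD(τ) is taken as an input computed elsewhere (for example by searching the DBF step points).

```python
import math


def theorem3_test(load_value, delta_max, m):
    """Sufficient global-EDF test of Theorem 3 on m unit-capacity processors:
    LOAD(tau) <= mu - (ceil(mu) - 1) * delta_max, with mu = m - (m - 1) * delta_max.
    load_value: LOAD(tau); delta_max: max over tasks of C_i / min(D_i, T_i)."""
    mu = m - (m - 1) * delta_max
    return load_value <= mu - (math.ceil(mu) - 1) * delta_max
```

For example, with m = 4 and δ_max = 0.5, μ = 2.5 and ⌈μ⌉ = 3, so the test accepts any system with LOAD(τ) ≤ 2.5 − 2 × 0.5 = 1.5.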

Optimality

• There is no optimal on-line global scheduling algorithm for sporadic tasks [Fisher, 2007]

→ global EDF is not optimal

– so we can’t compare to an optimal on-line algorithm

+ but we can compare it to an optimal clairvoyant scheduler

Speed-up Factors, used in Competitive Analysis

A scheduling algorithm has a processor speedup factor f ≥ 1 if

• for any task system that is feasible on a given multiprocessor platform,

• the algorithm schedules it to meet all deadlines on a platform in which each processor is faster by a factor f.

EDF Job Scheduling Speedup

Any set of independent jobs that can be scheduled to meet all deadlines on m unit-speed processors will meet all deadlines if scheduled using global EDF on m processors of speed 2 − 1/m.

[Phillips et al., 1997]

But how do we tell whether a sporadic task system is feasible?

Sporadic EDF Speed-up

If τ is feasible on m processors of speed x, then it will be correctly identified as global-EDF schedulable on m unit-capacity processors by Theorem 3 if

$$x \le \frac{(3m - 1) - \sqrt{5m^2 - 2m + 1}}{2(m - 1)}$$
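One way to see where this threshold comes from, sketched here as a reconstruction rather than quoted from the slides: feasibility on m speed-x processors forces δ_max(τ) ≤ x and LOAD(τ) ≤ mx. Because ⌈μ⌉ − 1 ≤ μ, the right-hand side of Theorem 3 is at least μ(1 − δ_max), and that product decreases as δ_max grows on [0, 1]. So it suffices that mx ≤ (m − (m−1)x)(1 − x), which for m ≥ 2 holds whenever x is at most the smaller root of the resulting quadratic:

$$
\begin{aligned}
&\mu - (\lceil \mu \rceil - 1)\,\delta_{\max}(\tau) \;\ge\; \mu\,\bigl(1 - \delta_{\max}(\tau)\bigr) \;\ge\; \bigl(m - (m-1)x\bigr)(1 - x)\\
&m x \le \bigl(m - (m-1)x\bigr)(1 - x) \iff (m-1)x^2 - (3m-1)x + m \ge 0\\
&\Longleftarrow\; x \le \frac{(3m-1) - \sqrt{(3m-1)^2 - 4m(m-1)}}{2(m-1)} = \frac{(3m-1) - \sqrt{5m^2 - 2m + 1}}{2(m-1)}
\end{aligned}
$$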

Corollary 2

The processor speedup factor of the global-EDF schedulability test of Theorem 3 is bounded above by

$$\frac{3 + \sqrt{5}}{2} \approx 2.618$$
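The corollary follows from the speed threshold x(m) of the previous slide: the speedup factor is 1/x(m), which grows with m toward (3 + √5)/2. A short Python sketch evaluating it; the function names are hypothetical.

```python
import math


def feasible_speed_threshold(m):
    """x(m) = ((3m - 1) - sqrt(5m^2 - 2m + 1)) / (2(m - 1)), for m >= 2."""
    return ((3 * m - 1) - math.sqrt(5 * m * m - 2 * m + 1)) / (2 * (m - 1))


def speedup(m):
    """Processor speedup factor implied by the threshold: 1 / x(m)."""
    return 1 / feasible_speed_threshold(m)


LIMIT = (3 + math.sqrt(5)) / 2  # the bound of Corollary 2, about 2.618
```

For example, `speedup(2)` is about 2.28, `speedup(4)` about 2.44 and `speedup(16)` about 2.57, each below `LIMIT`.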

Interpretation

The processor speed-up of (3 + √5)/2 compensates for both

1. non-optimality of global EDF

2. pessimism of our schedulability test

There is no penalty for allowing post-period deadlines in the analysis

(Makes sense, but not borne out by prior analyses, e.g., of partitioned EDF)

Steps of Analysis

• lower bound on load to miss deadline

• lower bound on length of μ-busy window

• downward closure of μ-busy window

• upper bound on carried-in work per task

• upper bound on per-task contribution to load, in terms of DBF

• upper bound on DBF, in terms of density

• upper bound on number of tasks with carry-in

• sufficient condition for schedulability

• derivation of speed-up result

[Figure: timeline in which the problem job arrives, other jobs execute, the problem job executes, and the first missed deadline occurs]

Consider the “problem job”: the first job that misses its deadline.

What must be true for this to happen?

Details of the First Step

What is a lower bound on the load needed to miss a deadline?

[Figure: the problem job arrives while the previous job of the problem task is still running; the problem job becomes ready later; first missed deadline]

The problem job is not ready to execute until the preceding job of the same task completes.

[Figure: the problem window runs from when the problem job becomes ready to the first missed deadline]

Restrict consideration to the “problem window”, during which the problem job is eligible to execute.

[Figure: problem window [t_a, t_d); other tasks execute and the problem task executes]

The ability of the problem job to complete within the problem window depends on its own execution time and interference from jobs of other tasks.

[Figure: problem window [t_a, t_d) with carried-in jobs and jobs with deadline > t_d]

The interfering jobs are of two kinds:

(1) local jobs: arrive in the window and have deadlines in the window

(2) carried-in jobs: arrive before the window and have deadlines in the window

[Figure: in [t_a, t_d), other tasks interfere while the problem task executes]

Interference only occurs when all processors are busy executing jobs of other tasks.


Therefore, we can get a lower bound on the necessary interfering demand by considering only “blocks” of interference.


The total amount of block interference is not affected by where it occurs within the window.


The total demand with deadline ≤ t_d includes the problem job and the interference.

[Figure: in [t_a, t_d), processors busy executing jobs with deadline ≤ that of the problem job; interference (blocks) approximated by demand (formless)]

ω(t_a) = average competing workload in [t_a, t_d)

From this, we can find the average workload with deadline ≤ t_d that is needed to cause a missed deadline.

[Figure: the previous job of the problem task and its deadline, and the problem job arriving at least T_k later; the problem window [t_a, t_d) has length at least min(D_k, T_k)]

The minimum inter-arrival time and the deadline give us a lower bound on the length of the problem window: t_d − t_a ≥ min(D_k, T_k).
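A short derivation of this bound, written with r for the problem job's arrival time (a symbol introduced here, not from the slides) and assuming, as the analysis does, that every deadline before t_d was met. The previous job of τ_k arrived at or before r − T_k, so it completes by its deadline, at most r − T_k + D_k; the problem job is therefore ready no later than the larger of r and that deadline:

$$t_a \le \max(r,\; r - T_k + D_k), \qquad t_d = r + D_k \;\;\Longrightarrow\;\; t_d - t_a \ge \min(D_k,\; T_k)$$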

The WCET of the problem job and the number of processors allow us to find a lower bound on the average competing workload.

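If the problem job misses its deadline, it executes for less than C_k within the problem window, and at every other instant of the window all m processors are busy with jobs whose deadlines are ≤ t_d. Writing ω(t_a) for the average workload with deadline ≤ t_d (including the problem job) in [t_a, t_d), and δ_k = C_k / min(D_k, T_k):

$$\omega(t_a) > \frac{m\,(t_d - t_a) - (m-1)\,C_k}{t_d - t_a} \ge m - (m-1)\,\frac{C_k}{\min(D_k, T_k)} = m - (m-1)\,\delta_k \ge m - (m-1)\,\delta_{\max}(\tau)$$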
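The chain of inequalities can be evaluated numerically. A Python sketch with hypothetical helper names: `average_work_bound` is the middle expression for a given window length, `miss_threshold` the per-task bound m − (m−1)δ_k, and `mu` the single threshold m − (m−1)δ_max that lies at or below every task's bound.

```python
def average_work_bound(window, C_k, m):
    """m - (m - 1) * C_k / window: the average workload a problem window of this
    length must exceed if the problem job misses its deadline."""
    return m - (m - 1) * C_k / window


def miss_threshold(C_k, D_k, T_k, m):
    """m - (m - 1) * delta_k, with delta_k = C_k / min(D_k, T_k); this is the
    bound above evaluated at the shortest possible window, min(D_k, T_k)."""
    delta_k = C_k / min(D_k, T_k)
    return m - (m - 1) * delta_k


def mu(tasks, m):
    """mu = m - (m - 1) * delta_max, a threshold valid for every task.
    tasks: iterable of (C, D, T)."""
    delta_max = max(C / min(D, T) for C, D, T in tasks)
    return m - (m - 1) * delta_max
```

For tasks (1, 4, 4) and (2, 5, 10) on m = 2, the per-task thresholds are 1.75 and 1.6, and μ = 1.6. A window of length 8 for the second task gives `average_work_bound(8, 2, 2)` = 1.75, which is at least its threshold, as the inequality chain requires.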

What we have shown

There can be no missed deadline unless there is a μ-busy problem window, where μ = m − (m − 1)δ_max(τ).

The Rest of the Analysis

• [lower bound on load to miss deadline]

• lower bound on length of μ-busy window

• downward closure of μ-busy window

• upper bound on carried-in work per task

• upper bound on per-task contribution to load, in terms of DBF

• upper bound on DBF, in terms of density

• upper bound on number of tasks with carry-in

• sufficient condition for schedulability

• derivation of speed-up result

Key Elements of the Rest of the Analysis

• Observe length of μ-busy interval ≥ min(D_k, T_k); this covers the case D_k > T_k

• Observe # tasks with carried-in jobs ≤ m − 1

• Use this to bound the carried-in load in terms of δ_max

• Derive speed-up bounds

[Figure: the previous job of the problem task, with relative deadline D_k, and the problem job arriving T_k later; the problem window [t_a, t_d) has length at least min(D_k, T_k)]

Observe length of μ-busy interval ≥ min(D_k, T_k). This covers both cases, D_k ≤ T_k and D_k > T_k.

[Figure: the average competing workload ω(t) over [t, t_d), for t = t_a]

To minimize the contributions of carried-in jobs, we can extend the problem window downward until the competing load falls below μ.

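[Figure: the problem window extended downward from t_a to t_o, where the average competing workload ω(t_o) over [t_o, t_d) is still at least μ; [t_o, t_d) is the maximal μ-busy interval, and at most ⌈μ⌉ − 1 carried-in jobs reach into it]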
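The downward extension can be sketched in discrete time. In this Python sketch, `work[t]` (a hypothetical input) is the amount of work with deadline ≤ t_d executed in slot [t, t+1), between 0 and m. "Maximal" is assumed here to mean the smallest t_o ≤ t_a for which the average over [t_o, t_d) is still at least μ; the function name is also hypothetical.

```python
def maximal_busy_start(work, t_a, t_d, mu):
    """Return t_o for the maximal mu-busy interval [t_o, t_d) in discrete time.

    omega(t) = sum(work[t:t_d]) / (t_d - t) is the average workload with
    deadline <= t_d over [t, t_d). The first step of the analysis guarantees
    omega(t_a) >= mu when a deadline is missed; this sketch checks that.
    """
    def omega(t):
        return sum(work[t:t_d]) / (t_d - t)

    if omega(t_a) < mu:
        raise ValueError("the problem window is not mu-busy")
    # Smallest t <= t_a whose interval [t, t_d) is still mu-busy.
    return min(t for t in range(0, t_a + 1) if omega(t) >= mu)
```

For example, with m = 2, μ = 1.5, `work = [0, 0, 1, 2, 2, 2, 1, 2]`, t_a = 4 and t_d = 8, the averages for t = 4, 3, 2, 1, 0 are 1.75, 1.8, 1.67, 1.43 and 1.25, so the interval extends down to t_o = 2.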

Observe # tasks with carried-in jobs ≤ m − 1

Use this to bound the carried-in load in terms of δ_max

Summary

• New speed-up bound for global EDF on sporadic tasks with arbitrary deadlines

• Based on bounding number of tasks with carried-in jobs

• Tighter analysis may be possible in future work

Where analysis might be tighter

• approximation of interference (blocks) by demand (formless)

• bounding δ_i by δ_max (only considering one value of μ)

• bounding the DBF of each carry-in task by δ_max times the length of its extended interval

• double-counting work of carry-in tasks

[Figure: bounding the contribution of a carry-in task τ_i, whose carried-in job arrives at t_i before t_o, by its DBF over the extended interval; C_i, D_i, T_i and y_i marked]

[Figure: double-counting internal load from tasks with carried-in jobs; carry-in cases and non-carry-in cases, with the combined bound (⌈μ⌉ − 1)δ_max(τ) + LOAD(τ)]

Some Other Fundamental Questions

• Is the underlying MP model realistic?

• Can reasonably accurate WCETs be found for MP systems? (How do we deal with memory and L2 cache interference effects?)

• What is the preemption cost?

• What is the task migration cost?

• What is the best way to implement it?

The End

questions?

[Backup figures: the maximal μ-busy interval [t_o, t_d) with at most ⌈μ⌉ − 1 carried-in jobs; a carried-in job of τ_i arriving at t_i, with C_i and y_i marked]