The stochastic nature of stellar population modelling

Miguel Cerviño

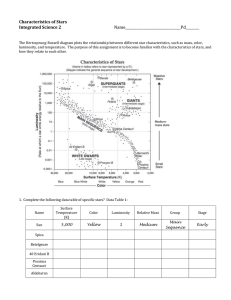
