Math 6010 Solutions to homework 3

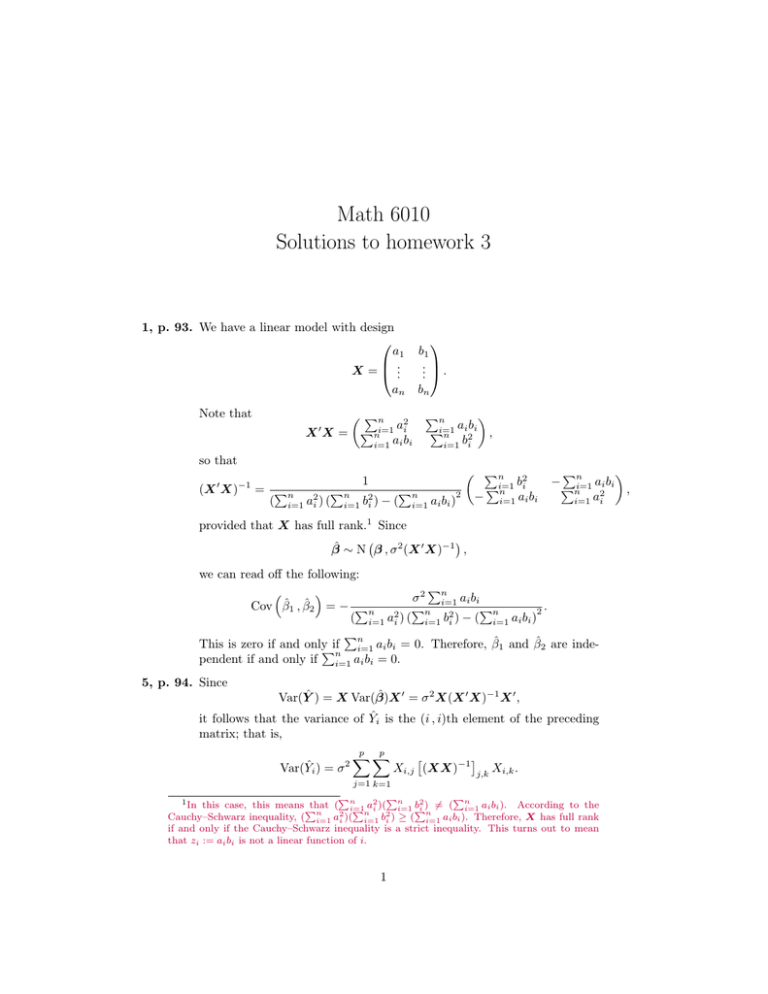

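1, p. 93. We have a linear model with design matrix

$$
X = \begin{pmatrix} a_1 & b_1 \\ \vdots & \vdots \\ a_n & b_n \end{pmatrix}.
$$

Note that

$$
X'X = \begin{pmatrix} \sum_{i=1}^n a_i^2 & \sum_{i=1}^n a_i b_i \\ \sum_{i=1}^n a_i b_i & \sum_{i=1}^n b_i^2 \end{pmatrix},
$$

so that

$$
(X'X)^{-1} = \frac{1}{\left(\sum_{i=1}^n a_i^2\right)\left(\sum_{i=1}^n b_i^2\right) - \left(\sum_{i=1}^n a_i b_i\right)^2}
\begin{pmatrix} \sum_{i=1}^n b_i^2 & -\sum_{i=1}^n a_i b_i \\ -\sum_{i=1}^n a_i b_i & \sum_{i=1}^n a_i^2 \end{pmatrix},
$$

provided that $X$ has full rank.¹ Since $\hat\beta \sim N\left(\beta,\ \sigma^2 (X'X)^{-1}\right)$, we can read off the following:

$$
\operatorname{Cov}(\hat\beta_1, \hat\beta_2) = -\frac{\sigma^2 \sum_{i=1}^n a_i b_i}{\left(\sum_{i=1}^n a_i^2\right)\left(\sum_{i=1}^n b_i^2\right) - \left(\sum_{i=1}^n a_i b_i\right)^2}.
$$

This is zero if and only if $\sum_{i=1}^n a_i b_i = 0$. Because $\hat\beta_1$ and $\hat\beta_2$ are jointly normal, zero covariance is equivalent to independence. Therefore, $\hat\beta_1$ and $\hat\beta_2$ are independent if and only if $\sum_{i=1}^n a_i b_i = 0$.

¹ In this case, full rank means that $\left(\sum_{i=1}^n a_i^2\right)\left(\sum_{i=1}^n b_i^2\right) \neq \left(\sum_{i=1}^n a_i b_i\right)^2$. According to the Cauchy–Schwarz inequality, $\left(\sum_{i=1}^n a_i^2\right)\left(\sum_{i=1}^n b_i^2\right) \ge \left(\sum_{i=1}^n a_i b_i\right)^2$. Therefore, $X$ has full rank if and only if the Cauchy–Schwarz inequality is a strict inequality. Equality holds exactly when the vectors $(a_1, \dots, a_n)$ and $(b_1, \dots, b_n)$ are linearly dependent, so $X$ has full rank if and only if neither column is a scalar multiple of the other.

5, p. 94. Since $\operatorname{Var}(\hat Y) = X \operatorname{Var}(\hat\beta) X' = \sigma^2 X (X'X)^{-1} X'$, it follows that the variance of $\hat Y_i$ is the $(i, i)$th element of the preceding matrix; that is,

$$
\operatorname{Var}(\hat Y_i) = \sigma^2 \sum_{j=1}^p \sum_{k=1}^p X_{i,j} \left[(X'X)^{-1}\right]_{j,k} X_{i,k}.
$$

Because

$$
\sum_{i=1}^n X_{i,j} X_{i,k} = [X'X]_{j,k},
$$

it follows that

$$
\sum_{i=1}^n \operatorname{Var}(\hat Y_i) = \sigma^2 \sum_{j=1}^p \sum_{k=1}^p \left[(X'X)^{-1}\right]_{j,k} [X'X]_{j,k} = \sigma^2 \operatorname{tr}\left((X'X)^{-1}(X'X)\right),
$$

where the last equality uses the symmetry of $X'X$. Because $(X'X)^{-1}(X'X) = I_{p \times p}$, its trace is $p$, so $\sum_{i=1}^n \operatorname{Var}(\hat Y_i) = \sigma^2 p$; this does the job.

12, p. 95. Because $\sum_{i=1}^n (Y_i - \hat Y_i)^2 = \|(I - H)Y\|^2$ and

$$
\bar Y \, 1_{n \times 1} = \begin{pmatrix} \bar Y \\ \vdots \\ \bar Y \end{pmatrix} = \frac{1}{n}\, 1_{n \times 1} 1_{n \times 1}' \, Y,
$$

it suffices to prove that $\bar Y \, 1_{n \times 1}$ and $(I - H)Y$ are independent. Since $\bar Y \, 1_{n \times 1}$ and $(I - H)Y$ are both linear transformations of $Y$, and hence of the normal errors $\varepsilon_i$ up to a constant shift, they are jointly multivariate normal. Therefore, it suffices to show that the covariance matrix between $1_{n \times 1} 1_{n \times 1}' Y$ and $(I - H)Y$ is the zero matrix. But

$$
\operatorname{Cov}\left(1_{n \times 1} 1_{n \times 1}' Y,\ (I - H)Y\right) = 1_{n \times 1} 1_{n \times 1}' \operatorname{Var}(Y) (I - H)' = \sigma^2 \, 1_{n \times 1} 1_{n \times 1}' (I - H)'.
$$

Now recall that, in the regression model which we are studying here, the first column of the design matrix is all ones. In particular, $1_{n \times 1} \in C(X)$, and $I - H$ is the projection onto $[C(X)]^\perp$, so $(I - H) 1_{n \times 1} = 0$. Because $I - H$ is symmetric, this gives $1_{n \times 1}' (I - H)' = \left((I - H) 1_{n \times 1}\right)' = 0$. Since every row of $1_{n \times 1} 1_{n \times 1}'$ is $1_{n \times 1}'$, it follows that

$$
\operatorname{Cov}\left(1_{n \times 1} 1_{n \times 1}' Y,\ (I - H)Y\right) = 0,
$$

as desired.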
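The three identities used above can be checked numerically on a small example. The following is a minimal sketch, not part of the original solutions: it assumes a tiny $n \times 2$ design (so $p = 2$), made-up data, and hypothetical helper names, and it uses only the Python standard library. It evaluates the off-diagonal entry of $(X'X)^{-1}$ from Problem 1, the trace of $H = X(X'X)^{-1}X'$ from Problem 5, and the row vector $1'(I - H)$ from Problem 12.

```python
# Sketch: numerical checks for Problems 1, 5 and 12 on an n x 2 design.
# All data and function names here are illustrative assumptions.

def inverse_gram(a, b):
    """(X'X)^{-1} for the design with columns a and b, via the 2 x 2 formula."""
    saa = sum(x * x for x in a)
    sab = sum(x * y for x, y in zip(a, b))
    sbb = sum(y * y for y in b)
    det = saa * sbb - sab ** 2  # zero exactly when Cauchy-Schwarz is an equality
    if det == 0:
        raise ValueError("X does not have full rank")
    return [[sbb / det, -sab / det], [-sab / det, saa / det]]

def cov_beta(a, b, sigma2=1.0):
    """Cov(beta1_hat, beta2_hat) = sigma^2 times the off-diagonal of (X'X)^{-1}."""
    return sigma2 * inverse_gram(a, b)[0][1]

def hat_matrix(a, b):
    """H = X (X'X)^{-1} X' as a list of rows."""
    inv = inverse_gram(a, b)
    rows = list(zip(a, b))
    return [[sum(ri[j] * inv[j][k] * rk[k] for j in range(2) for k in range(2))
             for rk in rows] for ri in rows]

def trace(m):
    """Sum of the diagonal entries of a square matrix."""
    return sum(m[i][i] for i in range(len(m)))

def ones_times_residual_maker(h):
    """The row vector 1'(I - H), i.e. the column sums of I - H."""
    n = len(h)
    return [sum((1.0 if i == j else 0.0) - h[i][j] for i in range(n))
            for j in range(n)]

ones = [1.0, 1.0, 1.0, 1.0]          # intercept column
centered = [-1.5, -0.5, 0.5, 1.5]    # orthogonal to the intercept column
uncentered = [1.0, 2.0, 3.0, 4.0]    # not orthogonal to the intercept column

print("Problem 1, orthogonal columns:", cov_beta(ones, centered))
print("Problem 1, non-orthogonal columns:", cov_beta(ones, uncentered))
print("Problem 5, tr(H):", trace(hat_matrix(ones, uncentered)))
print("Problem 12, 1'(I - H):", ones_times_residual_maker(hat_matrix(ones, uncentered)))
```

With $\sigma^2 = 1$, the covariance vanishes only for the orthogonal pair of columns, the trace of $H$ agrees with $p = 2$ up to rounding, and every entry of $1'(I - H)$ is zero up to rounding because the design contains the column of ones. The exact test `det == 0` is adequate for this toy data; a tolerance would be safer for real floating-point inputs.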
