Hopfield Network (Lecture 20)

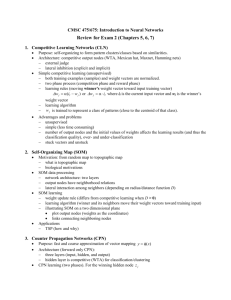


Part 3: Autonomous Agents

11/1/07

Lecture 20

Hopfield Network

• Symmetric weights: wij = wji
• No self-action: wii = 0
• Zero threshold: θ = 0
• Bipolar states: si ∈ {–1, +1}
• Discontinuous bipolar activation function:

$$
\sigma(h) = \operatorname{sgn}(h) =
\begin{cases}
-1, & h < 0 \\
+1, & h > 0
\end{cases}
$$

What to do about h = 0?

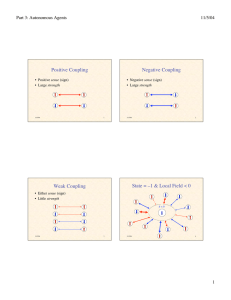

• There are several options:
  – σ(0) = +1
  – σ(0) = –1
  – σ(0) = –1 or +1 with equal probability
  – hi = 0 ⇒ no state change (si′ = si)
• Not much difference, but be consistent
• Last option is slightly preferable, since symmetric

Positive Coupling

• Positive sense (sign)
• Large strength

Weak Coupling

• Either sense (sign)
• Little strength

Negative Coupling

• Negative sense (sign)
• Large strength

State = –1 & Local Field < 0 (h < 0)

State = –1 & Local Field > 0 (h > 0)

State Reverses (h > 0)

State = +1 & Local Field > 0 (h > 0)

State = +1 & Local Field < 0 (h < 0)

State Reverses (h < 0)
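The four state/local-field cases can be sketched in a few lines of Python. This is a minimal illustration, assuming the "hi = 0 ⇒ no state change" convention; the function names and the 3-neuron weight matrix are hypothetical and are not taken from the lecture or from the NetLogo models.

```python
def local_field(W, s, k):
    """Local field h_k = sum over j != k of w_kj * s_j (zero threshold)."""
    return sum(W[k][j] * s[j] for j in range(len(s)) if j != k)


def updated_state(W, s, k):
    """Return the new state of neuron k given the current state vector s."""
    h = local_field(W, s, k)
    if h > 0:
        return +1
    if h < 0:
        return -1
    return s[k]  # assumed tie rule: h = 0 leaves the state unchanged


# Hypothetical example network: symmetric weights, zero diagonal.
W = [[0, 2, -1],
     [2, 0, 1],
     [-1, 1, 0]]
s = [-1, +1, +1]

for k in range(len(s)):
    h = local_field(W, s, k)
    new = updated_state(W, s, k)
    verdict = "reverses" if new != s[k] else "stays"
    print(f"neuron {k}: state {s[k]:+d}, local field {h:+d}, {verdict}")
```

Each neuron is evaluated here against the same unchanged state vector `s`, so the loop only reports what each neuron would do if it were the next one selected for update.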

NetLogo Demonstration of Hopfield State Updating

Run Hopfield-update.nlogo

Hopfield Net as Soft Constraint Satisfaction System

• States of neurons as yes/no decisions
• Weights represent soft constraints between decisions
  – hard constraints must be respected
  – soft constraints have degrees of importance
• Decisions change to better respect constraints
• Is there an optimal set of decisions that best respects all constraints?

Demonstration of Hopfield Net Dynamics I

Run Hopfield-dynamics.nlogo

Demonstration of Hopfield Net Dynamics II

Run initialized Hopfield.nlogo

Convergence

• Does such a system converge to a stable state?
• Under what conditions does it converge?
• There is a sense in which each step relaxes the “tension” in the system
• But could a relaxation of one neuron lead to greater tension in other places?

Quantifying “Tension”

• If wij > 0, then si and sj want to have the same sign (si sj = +1)
• If wij < 0, then si and sj want to have opposite signs (si sj = –1)
• If wij = 0, their signs are independent
• Strength of interaction varies with |wij|
• Define disharmony (“tension”) Dij between neurons i and j:
  Dij = – si wij sj
  Dij < 0 ⇒ they are happy
  Dij > 0 ⇒ they are unhappy
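The sign convention for disharmony is easy to check by hand or in code. The sketch below is only an illustration: the function name, the sample weights, and the label "indifferent" for Dij = 0 are assumptions made here, not part of the slides.

```python
def disharmony(w_ij, s_i, s_j):
    """D_ij = -s_i * w_ij * s_j for bipolar states s_i, s_j in {-1, +1}."""
    return -s_i * w_ij * s_j


# (weight, s_i, s_j) triples chosen to cover every sign combination.
cases = [
    (+1.5, +1, +1),   # positive coupling, same signs
    (+1.5, +1, -1),   # positive coupling, opposite signs
    (-0.5, +1, -1),   # negative coupling, opposite signs
    (-0.5, -1, -1),   # negative coupling, same signs
    (0.0, +1, -1),    # no coupling
]

for w, si, sj in cases:
    d = disharmony(w, si, sj)
    mood = "happy" if d < 0 else "unhappy" if d > 0 else "indifferent"
    print(f"w={w:+.1f}, si={si:+d}, sj={sj:+d}: D={d:+.1f} ({mood})")
```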

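Total Energy of System

The “energy” of the system is the total “tension” (disharmony) in it, summed over pairs ⟨ij⟩ of neurons:

$$
\begin{aligned}
E\{\mathbf{s}\} &= \sum_{\langle ij \rangle} D_{ij} = -\sum_{\langle ij \rangle} s_i w_{ij} s_j \\
&= -\tfrac{1}{2} \sum_i \sum_{j \ne i} s_i w_{ij} s_j \\
&= -\tfrac{1}{2} \sum_i \sum_j s_i w_{ij} s_j \\
&= -\tfrac{1}{2}\, \mathbf{s}^T W \mathbf{s}
\end{aligned}
$$

Review of Some Vector Notation

$$
\mathbf{x} = \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix} = (x_1 \ \cdots \ x_n)^T
\qquad \text{(column vectors)}
$$

$$
\mathbf{x}^T \mathbf{y} = \sum_{i=1}^{n} x_i y_i = \mathbf{x} \cdot \mathbf{y}
\qquad \text{(inner product)}
$$

$$
\mathbf{x}\mathbf{y}^T = \begin{pmatrix} x_1 y_1 & \cdots & x_1 y_n \\ \vdots & \ddots & \vdots \\ x_m y_1 & \cdots & x_m y_n \end{pmatrix}
\qquad \text{(outer product)}
$$

$$
\mathbf{x}^T M \mathbf{y} = \sum_{i=1}^{m} \sum_{j=1}^{n} x_i M_{ij} y_j
\qquad \text{(quadratic form)}
$$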
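As a rough check that the pairwise sum of disharmonies and the quadratic form –½ sᵀWs give the same number, the following sketch computes both for a small network. The weight matrix, the state vector, and all function names are hypothetical choices for illustration; the equality relies on W being symmetric with a zero diagonal, as assumed throughout the lecture.

```python
from itertools import combinations


def energy_pairs(W, s):
    """E = sum of D_ij = -s_i * w_ij * s_j over unordered pairs i < j."""
    n = len(s)
    return sum(-s[i] * W[i][j] * s[j] for i, j in combinations(range(n), 2))


def quadratic_form(x, M, y):
    """x^T M y = sum_i sum_j x_i * M_ij * y_j."""
    return sum(x[i] * M[i][j] * y[j]
               for i in range(len(x)) for j in range(len(y)))


def energy_matrix(W, s):
    """E = -1/2 * s^T W s; matches energy_pairs when W is symmetric
    and has a zero diagonal."""
    return -0.5 * quadratic_form(s, W, s)


# Hypothetical example network: symmetric weights, zero diagonal.
W = [[0, 2, -1],
     [2, 0, 1],
     [-1, 1, 0]]
s = [+1, +1, -1]

print(energy_pairs(W, s), energy_matrix(W, s))
```

The factor ½ appears in the matrix form because the double sum counts each pair twice, once as (i, j) and once as (j, i).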

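Another View of Energy

The energy measures how strongly the neurons' states are in disharmony with their local fields (i.e. of opposite sign):

$$
\begin{aligned}
E\{\mathbf{s}\} &= -\tfrac{1}{2} \sum_i \sum_j s_i w_{ij} s_j \\
&= -\tfrac{1}{2} \sum_i s_i \sum_j w_{ij} s_j \\
&= -\tfrac{1}{2} \sum_i s_i h_i \\
&= -\tfrac{1}{2}\, \mathbf{s}^T \mathbf{h}
\end{aligned}
$$

Do State Changes Decrease Energy?

• Suppose that neuron k changes state
• Change of energy:

$$
\begin{aligned}
\Delta E &= E\{\mathbf{s}'\} - E\{\mathbf{s}\} \\
&= -\sum_{\langle ij \rangle} s'_i w_{ij} s'_j + \sum_{\langle ij \rangle} s_i w_{ij} s_j \\
&= -\sum_{j \ne k} s'_k w_{kj} s_j + \sum_{j \ne k} s_k w_{kj} s_j \\
&= -(s'_k - s_k) \sum_{j \ne k} w_{kj} s_j \\
&= -\Delta s_k h_k \\
&< 0
\end{aligned}
$$

The last step holds because a neuron changes state only toward the sign of its local field, so Δsk and hk have the same sign.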
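The identity ΔE = –Δsk hk can be checked exhaustively on a small network. The sketch below is an illustration under stated assumptions: the 3-neuron weight matrix and the function names are hypothetical, and every possible single-neuron flip is tested, including flips the update rule would never make.

```python
from itertools import product


def local_field(W, s, k):
    """h_k = sum over j != k of w_kj * s_j."""
    return sum(W[k][j] * s[j] for j in range(len(s)) if j != k)


def energy(W, s):
    """E{s} = -1/2 * sum_i sum_j s_i * w_ij * s_j."""
    n = len(s)
    return -0.5 * sum(s[i] * W[i][j] * s[j]
                      for i in range(n) for j in range(n))


def flip_deltas(W):
    """For every state s and neuron k, pair the directly computed energy
    change of flipping s_k with the predicted value -delta_s_k * h_k."""
    n = len(W)
    rows = []
    for s in product([-1, +1], repeat=n):
        for k in range(n):
            flipped = list(s)
            flipped[k] = -s[k]
            direct = energy(W, flipped) - energy(W, s)
            predicted = -(flipped[k] - s[k]) * local_field(W, s, k)
            rows.append((s, k, direct, predicted))
    return rows


# Hypothetical example network: symmetric weights, zero diagonal.
W = [[0, 2, -1],
     [2, 0, 1],
     [-1, 1, 0]]
rows = flip_deltas(W)

# The identity holds for every flip, whether or not the rule would make it.
print(all(direct == predicted for _, _, direct, predicted in rows))

# Flips the update rule actually makes: the state opposes its local field.
rule_flips = [r for r in rows if r[0][r[1]] * local_field(W, r[0], r[1]) < 0]
print(all(direct < 0 for _, _, direct, _ in rule_flips))
```

Integer weights are used so that the floating-point comparison is exact; with real-valued weights a tolerance would be the safer choice.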

Energy Does Not Increase

• In each step in which a neuron is considered for update:
  E{s(t + 1)} – E{s(t)} ≤ 0
• Energy cannot increase
• Energy decreases if any neuron changes
• Must it stop?

Proof of Convergence in Finite Time

• There is a minimum possible energy:
  – The number of possible states s ∈ {–1, +1}ⁿ is finite
  – Hence Emin = min {E(s) | s ∈ {±1}ⁿ} exists
• Must show it is reached in a finite number of steps

Steps are of a Certain Minimum Size

If hk > 0, then (let hmin = min of possible positive h)

$$
h_k \ge \min \Big\{ h \;\Big|\; h = \sum_{j \ne k} w_{kj} s_j \,\wedge\, \mathbf{s} \in \{\pm 1\}^n \,\wedge\, h > 0 \Big\} =_{\mathrm{df}} h_{\min}
$$

$$
\Delta E = -\Delta s_k h_k = -2 h_k \le -2 h_{\min}
$$

If hk < 0, then (let hmax = max of possible negative h)

$$
h_k \le \max \Big\{ h \;\Big|\; h = \sum_{j \ne k} w_{kj} s_j \,\wedge\, \mathbf{s} \in \{\pm 1\}^n \,\wedge\, h < 0 \Big\} =_{\mathrm{df}} h_{\max}
$$

$$
\Delta E = -\Delta s_k h_k = 2 h_k \le 2 h_{\max}
$$

Conclusion

• If we do asynchronous updating, the Hopfield net must reach a stable, minimum energy state in a finite number of updates
• This does not imply that it is a global minimum
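The conclusion can be observed on a small random network. The following is a sketch only: the network size, the integer weight range, the random visiting order, and the helper names are all assumptions for illustration, and the tie rule "h = 0 ⇒ no state change" is used so that every actual state change strictly lowers the energy.

```python
import random


def local_field(W, s, k):
    """h_k = sum over j != k of w_kj * s_j."""
    return sum(W[k][j] * s[j] for j in range(len(s)) if j != k)


def energy(W, s):
    """E{s} = -1/2 * sum_i sum_j s_i * w_ij * s_j."""
    n = len(s)
    return -0.5 * sum(s[i] * W[i][j] * s[j]
                      for i in range(n) for j in range(n))


def random_symmetric_weights(n, rng):
    """Integer weights with w_ij = w_ji and w_ii = 0."""
    W = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            W[i][j] = W[j][i] = rng.randint(-3, 3)
    return W


def run_async(W, s, rng, max_sweeps=1000):
    """Update one neuron at a time until a full sweep changes nothing.
    Returns the final state and the energy after each state change."""
    s = list(s)
    energies = [energy(W, s)]
    for _ in range(max_sweeps):
        changed = False
        order = list(range(len(s)))
        rng.shuffle(order)  # random visiting order within each sweep
        for k in order:
            h = local_field(W, s, k)
            new = s[k] if h == 0 else (1 if h > 0 else -1)
            if new != s[k]:
                s[k] = new
                changed = True
                energies.append(energy(W, s))
        if not changed:
            return s, energies
    raise RuntimeError("did not settle within max_sweeps")


rng = random.Random(0)
W = random_symmetric_weights(8, rng)
start = [rng.choice([-1, 1]) for _ in range(8)]
final, energies = run_async(W, start, rng)
print("final state:", final)
print("energy trace:", energies)
```

The recorded energies form a strictly decreasing sequence, and the returned state is a fixed point of the update rule. Nothing in the run indicates whether that fixed point is the global minimum; different starting states or visiting orders may settle in different minima.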

Lyapunov Functions

• A way of showing the convergence of discrete- or continuous-time dynamical systems
• For discrete-time system:
  – need a Lyapunov function E (“energy” of the state)
  – E is bounded below (E{s} > Emin)
  – ΔE ≤ (ΔE)max < 0 (energy decreases a certain minimum amount each step)
  – then the system will converge in finite time
• Problem: finding a suitable Lyapunov function

Example Limit Cycle with Synchronous Updating

[Figure: neurons coupled by weights w > 0]
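A two-neuron network is enough to see why the convergence argument needs asynchronous updating. The sketch below assumes two neurons joined by a single positive weight and started in opposite states; this specific configuration and the function names are illustrative assumptions, since only the label w > 0 survives from the slide's figure.

```python
def local_field(W, s, k):
    """h_k = sum over j != k of w_kj * s_j."""
    return sum(W[k][j] * s[j] for j in range(len(s)) if j != k)


def new_state(h, current):
    """Sign of the local field; h = 0 leaves the state unchanged."""
    return current if h == 0 else (1 if h > 0 else -1)


def sync_step(W, s):
    """Synchronous update: every neuron reads the same old state vector."""
    return [new_state(local_field(W, s, k), s[k]) for k in range(len(s))]


def async_sweep(W, s):
    """Asynchronous update: neurons change one at a time, in index order,
    and each one sees the changes made before it."""
    s = list(s)
    for k in range(len(s)):
        s[k] = new_state(local_field(W, s, k), s[k])
    return s


W = [[0, 1],
     [1, 0]]          # positive coupling, w > 0
s0 = [+1, -1]         # the two neurons start in disagreement

s1 = sync_step(W, s0)
s2 = sync_step(W, s1)
print("synchronous: ", s0, "->", s1, "->", s2)

a1 = async_sweep(W, s0)
a2 = async_sweep(W, a1)
print("asynchronous:", s0, "->", a1, "->", a2)
```

Under synchronous updating both neurons flip at once, so they keep disagreeing and the state alternates with period 2. Under asynchronous updating the first neuron to move adopts its partner's sign and the pair settles.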

The Hopfield Energy Function is

Even

• A function f is odd if f (–x) = – f (x),

for all x

• A function f is even if f (–x) = f (x),

for all x

• Observe:

11/1/07

28

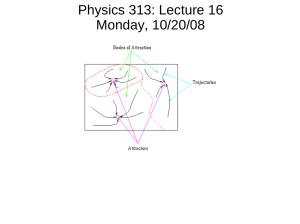

Conceptual

Picture of

Descent on

Energy

Surface

E {"s} = " 12 ("s) T W("s) = " 12 sT Ws = E {s}

11/1/07

29

11/1/07

(fig. from Solé & Goodwin)

30

!

5

Part 3: Autonomous Agents

11/1/07

Energy Surface

(fig. from Haykin Neur. Netw.)

Energy Surface + Flow Lines

(fig. from Haykin Neur. Netw.)

Flow Lines

Basins of Attraction

(fig. from Haykin Neur. Netw.)