
Partial solutions to assignment 5

Math 5080-1, Spring 2011

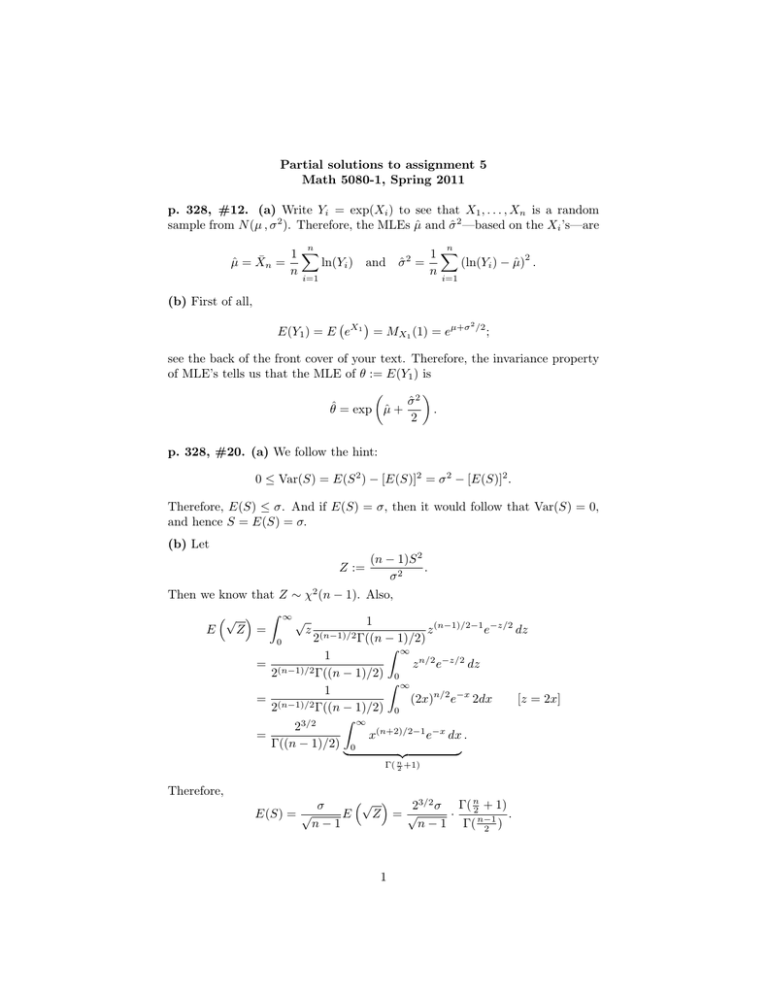

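p. 328, #12. (a) Write $Y_i = \exp(X_i)$ to see that $X_1, \ldots, X_n$ is a random sample from $N(\mu, \sigma^2)$. Therefore, the MLEs $\hat\mu$ and $\hat\sigma^2$, based on the $X_i$'s, are

$$\hat\mu = \bar X_n = \frac{1}{n}\sum_{i=1}^n \ln(Y_i) \qquad\text{and}\qquad \hat\sigma^2 = \frac{1}{n}\sum_{i=1}^n \left(\ln(Y_i) - \hat\mu\right)^2.$$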

(b) First of all,

$$E(Y_1) = E\left(e^{X_1}\right) = M_{X_1}(1) = e^{\mu + \sigma^2/2};$$

see the back of the front cover of your text. Therefore, the invariance property of MLEs tells us that the MLE of $\theta := E(Y_1)$ is

$$\hat\theta = \exp\left(\hat\mu + \frac{\hat\sigma^2}{2}\right).$$
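The two parts of #12 can be sketched numerically. This is only an illustration: the function name `lognormal_mle` and the three data values are hypothetical and do not come from the text.

```python
import math

def lognormal_mle(ys):
    """Return (mu_hat, sigma2_hat, theta_hat) for a lognormal sample ys."""
    logs = [math.log(y) for y in ys]           # X_i = ln(Y_i) is N(mu, sigma^2)
    n = len(logs)
    mu_hat = sum(logs) / n                     # sample mean of the logs
    sigma2_hat = sum((x - mu_hat) ** 2 for x in logs) / n  # divisor n, not n - 1
    theta_hat = math.exp(mu_hat + sigma2_hat / 2)          # invariance of the MLE
    return mu_hat, sigma2_hat, theta_hat

# Hypothetical data, chosen so that the logs are 0, 1, 2.
sample = [1.0, math.e, math.e ** 2]
mu_hat, sigma2_hat, theta_hat = lognormal_mle(sample)
print(mu_hat, sigma2_hat, theta_hat)
```

For this sample the logs are 0, 1, 2, so the estimates should be close to 1, 2/3 and exp(4/3) respectively, up to floating-point rounding.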

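p. 328, #20. (a) We follow the hint. Because $S^2$ is unbiased for $\sigma^2$,

$$0 \le \operatorname{Var}(S) = E(S^2) - [E(S)]^2 = \sigma^2 - [E(S)]^2.$$

Therefore, $E(S) \le \sigma$. And if $E(S) = \sigma$, then it would follow that $\operatorname{Var}(S) = 0$, and hence $S = E(S) = \sigma$ with probability one. That is impossible because $S$ is not a constant, so in fact $E(S) < \sigma$; that is, $S$ is biased for $\sigma$.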

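(b) Let

$$Z := \frac{(n-1)S^2}{\sigma^2}.$$

Then we know that $Z \sim \chi^2(n-1)$. Also,

$$\begin{aligned}
E\sqrt{Z} &= \int_0^\infty \sqrt{z}\,\frac{1}{2^{(n-1)/2}\,\Gamma((n-1)/2)}\, z^{(n-1)/2-1} e^{-z/2}\,dz \\
&= \frac{1}{2^{(n-1)/2}\,\Gamma((n-1)/2)} \int_0^\infty z^{n/2-1} e^{-z/2}\,dz \\
&= \frac{1}{2^{(n-1)/2}\,\Gamma((n-1)/2)} \int_0^\infty (2x)^{n/2-1} e^{-x}\,2\,dx \qquad [z = 2x] \\
&= \frac{\sqrt{2}}{\Gamma((n-1)/2)} \underbrace{\int_0^\infty x^{n/2-1} e^{-x}\,dx}_{\Gamma(n/2)}.
\end{aligned}$$

Therefore,

$$E(S) = \frac{\sigma}{\sqrt{n-1}}\,E\sqrt{Z} = \frac{\sqrt{2}\,\sigma}{\sqrt{n-1}} \cdot \frac{\Gamma(n/2)}{\Gamma((n-1)/2)}.$$

Therefore, the answer is

$$c = \frac{\sqrt{n-1}\;\Gamma((n-1)/2)}{\sqrt{2}\;\Gamma(n/2)}.$$

As a check, $n = 2$ gives $E(S) = \sigma\sqrt{2/\pi} < \sigma$, in agreement with part (a).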

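(c) First of all, we need to know what the 95th percentile $q$ of the distribution of $X$ is: We wish to find $q$ such that $P\{X \le q\} = 0.95$; therefore,

$$0.95 = \Phi\left(\frac{q-\mu}{\sigma}\right) \;\Rightarrow\; \frac{q-\mu}{\sigma} = 1.65 \;\Rightarrow\; q = \mu + 1.65\sigma.$$

Because $E(\bar X) = \mu$ and $E(cS) = \sigma$, an unbiased estimator of $q$ is

$$\hat q = \bar X + 1.65\,cS,$$

where $c$ is from the previous part.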
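Parts (b) and (c) can be evaluated numerically. The sketch below assumes the constant $c = \sqrt{(n-1)/2}\;\Gamma((n-1)/2)/\Gamma(n/2)$, which is the value for which $E(cS) = \sigma$ under normal sampling. The function names and the data are hypothetical; `math.lgamma` is used so that the gamma ratio does not overflow for large $n$.

```python
import math

def unbiasing_constant(n):
    """Constant c with E(c * S) = sigma for a normal sample of size n >= 2."""
    log_c = (0.5 * math.log((n - 1) / 2)
             + math.lgamma((n - 1) / 2)
             - math.lgamma(n / 2))
    return math.exp(log_c)

def percentile_estimate(xs, z=1.65):
    """Estimate q = mu + z * sigma by X-bar + z * c * S (z = 1.65 as in the text)."""
    n = len(xs)
    mean = sum(xs) / n
    s = math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1))  # sample std. dev.
    return mean + z * unbiasing_constant(n) * s

# c exceeds 1 (S underestimates sigma on average) and approaches 1 as n grows.
for n in (2, 3, 10, 100):
    print(n, unbiasing_constant(n))

# Hypothetical data, for illustration only.
print(percentile_estimate([1.0, 2.0, 3.0]))
```

For $n = 2$ the constant is $\sqrt{\pi/2}$ and for $n = 3$ it is $2/\sqrt{\pi}$, which gives a quick hand check of the formula.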

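p. 328, #21. (a) First of all, the common probability mass function of $X_1, \ldots, X_n$ is

$$f(x, p) = p\,I\{x = 1\} + (1-p)\,I\{x = 0\}.$$

Therefore,

$$E\left[\left(\frac{\partial}{\partial p}\ln f(X, p)\right)^2\right] = p\left(\frac{\partial}{\partial p}\ln p\right)^2 + (1-p)\left(\frac{\partial}{\partial p}\ln(1-p)\right)^2 = \frac{1}{p} + \frac{1}{1-p} = \frac{1}{p(1-p)}.$$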

By the Cramér–Rao lower bound, if $T$ is unbiased for $\tau(p) = p$ then

$$\operatorname{Var}(T) \ge \frac{1}{n/[p(1-p)]} = \frac{p(1-p)}{n}.$$

Because $\bar X$ achieves this bound with equality, it follows that $\bar X$ is the UMVUE for $p$. This answers part (c).

(b) Now $\tau(p) = p(1-p)$; therefore $\tau'(p) = 1 - 2p$, and hence if $T$ is unbiased for $p(1-p)$, then

$$\operatorname{Var}(T) \ge \frac{(1-2p)^2}{n/[p(1-p)]} = \frac{(1-2p)^2\,p(1-p)}{n}.$$
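The bounds in #21 can be checked by summing the squared score over the two possible values of $X$. This is a minimal sketch; the helper names `fisher_information` and `crlb`, and the values $p = 0.3$, $n = 10$, are assumptions made for illustration.

```python
def fisher_information(p):
    """E[(d/dp ln f(X, p))^2] for one Bernoulli(p) observation."""
    score_at_1 = 1.0 / p            # d/dp ln p, taken with probability p
    score_at_0 = -1.0 / (1.0 - p)   # d/dp ln(1 - p), taken with probability 1 - p
    return p * score_at_1 ** 2 + (1.0 - p) * score_at_0 ** 2

def crlb(p, n, tau_prime=1.0):
    """Cramer-Rao lower bound [tau'(p)]^2 / (n * I(p))."""
    return tau_prime ** 2 / (n * fisher_information(p))

p, n = 0.3, 10                        # hypothetical values
bound_for_p = crlb(p, n)              # should match p(1 - p)/n = Var(X-bar)
bound_for_pq = crlb(p, n, 1 - 2 * p)  # bound for unbiased estimators of p(1 - p)
print(bound_for_p, p * (1 - p) / n)
print(bound_for_pq, (1 - 2 * p) ** 2 * p * (1 - p) / n)
```

Each printed pair should agree up to rounding, since the second number in each line is the closed form derived in the solution.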

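p. 328, #23. (a) The mean is known. Therefore, the MLE is

$$\hat\theta = \frac{1}{n}\sum_{i=1}^n X_i^2.$$

A quick computation shows that $\hat\theta$ is unbiased for $\theta$ [the variance]: because the mean is zero, $E(X_i^2) = \operatorname{Var}(X_i) = \theta$ for every $i$.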

(b) Because

$$\operatorname{Var}(\hat\theta) = \frac{\operatorname{Var}(X_1^2)}{n},$$

we need the variance of $X_1^2$. But $X_1^2 = \theta Z^2$, where $Z \sim N(0, 1)$. Therefore, the variance of $X_1^2$ is $\theta^2$ times the variance of a $\chi^2(1)$; i.e., $2\theta^2$ [see the front cover of your text].

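Now,

$$\begin{aligned}
E\left[\left(\frac{\partial}{\partial\theta}\ln f(X_1, \theta)\right)^2\right] &= \operatorname{Var}\left(\frac{\partial}{\partial\theta}\ln f(X_1, \theta)\right) \qquad \text{(recall that the score has mean zero)} \\
&= \operatorname{Var}\left(\frac{\partial}{\partial\theta}\left[-\ln\sqrt{2\pi\theta} - \frac{X_1^2}{2\theta}\right]\right) \\
&= \operatorname{Var}\left(\frac{X_1^2}{2\theta^2}\right) = \frac{\operatorname{Var}(X_1^2)}{4\theta^4} = \frac{2\theta^2}{4\theta^4} = \frac{1}{2\theta^2}.
\end{aligned}$$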

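Therefore, any unbiased estimator $T$ of $\theta$ satisfies

$$\operatorname{Var}(T) \ge \frac{1}{n/(2\theta^2)} = \frac{2\theta^2}{n}.$$

The rightmost term is $\operatorname{Var}(\hat\theta)$; therefore, $\hat\theta$ is the UMVUE of $\theta$.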
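A seeded Monte Carlo run gives a rough sanity check that $\hat\theta$ is unbiased and that its variance sits at the bound $2\theta^2/n$. This is a sketch only: the function name, the values $\theta = 2$ and $n = 5$, the number of replications, and the seed are all arbitrary choices, and the simulated figures match the theory only up to sampling error.

```python
import math
import random

def simulate_theta_hat(theta, n, reps, seed=0):
    """Monte Carlo mean and variance of theta-hat = (1/n) * sum(X_i^2), X_i ~ N(0, theta)."""
    rng = random.Random(seed)            # fixed seed so that runs are repeatable
    sd = math.sqrt(theta)                # theta is the variance, so sd = sqrt(theta)
    estimates = []
    for _ in range(reps):
        xs = [rng.gauss(0.0, sd) for _ in range(n)]
        estimates.append(sum(x * x for x in xs) / n)
    mean = sum(estimates) / reps
    var = sum((e - mean) ** 2 for e in estimates) / (reps - 1)
    return mean, var

theta, n = 2.0, 5                        # hypothetical values
mc_mean, mc_var = simulate_theta_hat(theta, n, reps=20000)
bound = 2 * theta ** 2 / n               # Cramer-Rao bound 2 * theta^2 / n
print(f"mean ~ {mc_mean:.3f} (theta = {theta}); variance ~ {mc_var:.3f} (bound = {bound:.3f})")
```

The simulated mean should be near $\theta$ and the simulated variance near the bound; a simulation cannot prove the equality, it only makes an algebra slip easier to spot.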

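p. 328, #24. (a) We compute

$$E(\hat\theta) = \sum_{i=0}^\infty e^{-i}\,\frac{e^{-\mu}\mu^i}{i!} = e^{-\mu}\sum_{i=0}^\infty \frac{(\mu/e)^i}{i!} = e^{-\mu}\cdot e^{\mu/e} = e^{-\mu[1-e^{-1}]}.$$

Therefore, $\hat\theta$ is biased for $e^{-\mu}$.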

(b) Because $u(x) = I\{x = 0\}$,

$$E(\tilde\theta) = \sum_{i=0}^\infty u(i)\,\frac{e^{-\mu}\mu^i}{i!} = e^{-\mu}.$$

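(c) First of all,

$$E(\hat\theta^2) = \sum_{i=0}^\infty e^{-2i}\,\frac{e^{-\mu}\mu^i}{i!} = e^{-\mu[1-e^{-2}]}.$$

Therefore,

$$\operatorname{Var}(\hat\theta) = e^{-\mu[1-e^{-2}]} - e^{-2\mu[1-e^{-1}]}.$$

Also, because $\tilde\theta$ and its square are equal,

$$\operatorname{Var}(\tilde\theta) = E(\tilde\theta) - \left[E(\tilde\theta)\right]^2 = e^{-\mu} - e^{-2\mu}.$$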

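When $\mu = 1$,

$$\operatorname{Var}(\tilde\theta) = e^{-1} - e^{-2} \approx 0.2325, \qquad \operatorname{Var}(\hat\theta) = e^{-[1-e^{-2}]} - e^{-2[1-e^{-1}]} \approx 0.1387.$$

Also

$$\operatorname{Bias}(\tilde\theta) = 0, \qquad \operatorname{Bias}(\hat\theta) = e^{-[1-e^{-1}]} - e^{-1} \approx 0.164.$$

Therefore,

$$\operatorname{MSE}(\tilde\theta) \approx 0.2325, \qquad \operatorname{MSE}(\hat\theta) \approx 0.164^2 + 0.1387 \approx 0.165.$$

Therefore, $\hat\theta$ does a better job, although it is biased.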

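When $\mu = 2$,

$$\operatorname{Var}(\tilde\theta) = e^{-2} - e^{-4} \approx 0.117, \qquad \operatorname{Var}(\hat\theta) = e^{-2[1-e^{-2}]} - e^{-4[1-e^{-1}]} \approx 0.098.$$

Also

$$\operatorname{Bias}(\tilde\theta) = 0, \qquad \operatorname{Bias}(\hat\theta) = e^{-2[1-e^{-1}]} - e^{-2} \approx 0.147.$$

Therefore,

$$\operatorname{MSE}(\tilde\theta) \approx 0.117, \qquad \operatorname{MSE}(\hat\theta) \approx 0.147^2 + 0.098 \approx 0.119.$$

Therefore, for $\mu = 2$, $\tilde\theta$ does a better job than $\hat\theta$ [the roles are reversed!]. This is an example where we have two estimators, neither of which is better than the other for all possible choices of the unknown parameter $\mu$.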
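The comparison in part (c) is easy to reproduce from the closed forms above. The sketch below assumes, as the sums in parts (a) and (b) indicate, that $\hat\theta = e^{-X}$ and $\tilde\theta = I\{X = 0\}$ for a single $X \sim \text{Poisson}(\mu)$; the function names are hypothetical.

```python
import math

def mse_theta_hat(mu):
    """MSE of theta-hat = exp(-X), X ~ Poisson(mu), as an estimator of exp(-mu)."""
    mean = math.exp(-mu * (1 - math.exp(-1)))           # E(theta-hat)
    second_moment = math.exp(-mu * (1 - math.exp(-2)))  # E(theta-hat^2)
    variance = second_moment - mean ** 2
    bias = mean - math.exp(-mu)
    return variance + bias ** 2

def mse_theta_tilde(mu):
    """MSE of theta-tilde = I{X = 0}; it is unbiased, so MSE equals the variance."""
    return math.exp(-mu) - math.exp(-2 * mu)

for mu in (1, 2):
    hat, tilde = mse_theta_hat(mu), mse_theta_tilde(mu)
    better = "theta-hat" if hat < tilde else "theta-tilde"
    print(f"mu = {mu}: MSE(theta-hat) = {hat:.4f}, MSE(theta-tilde) = {tilde:.4f}, better: {better}")
```

Evaluating the two functions at other values of $\mu$ shows where the ranking changes, which is the point of the exercise: neither estimator dominates the other.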
