
Ambiguity-Based Multiclass Active Learning

Ran Wang, Member, IEEE, Chi-Yin Chow, Member, IEEE, and Sam Kwong, Fellow, IEEE

Abstract—Most existing works on active learning (AL) focus

on binary classification problems, which limits their applicability

in various real-world scenarios. One solution to multiclass AL

(MAL) is evaluating the informativeness of unlabeled samples

by an uncertainty model, and selecting the most uncertain

one for query. In this paper, an ambiguity-based strategy is

proposed to tackle this problem by applying the possibility approach.

First, the possibilistic memberships of unlabeled samples in the

multiple classes are calculated from the one-against-all (OAA)-based support vector machine (SVM) model. Then, by employing

fuzzy logic operators, these memberships are aggregated into a

new concept named k-order ambiguity, which estimates the risk

of labeling a sample among k classes. Afterwards, the k-order

ambiguities are used to form an overall ambiguity measure to

evaluate the uncertainty of the unlabeled samples. Finally, the

sample with the maximum ambiguity is selected for query, and

a new MAL strategy is developed. Experiments demonstrate the

feasibility and effectiveness of the proposed method.

Index Terms—Active learning, ambiguity, fuzzy sets and fuzzy

logic, possibility approach, multiclass.

I. INTRODUCTION

ACTIVE learning (AL) [1], known as a revised supervised

learning scheme, adopts the selective sampling manner

to collect a sufficiently large training set. It iteratively selects

the informative unlabeled samples for query, and constructs

a high-performance classifier by labeling as few samples as

possible. A widely used base classifier in AL is support

vector machine (SVM) [2], which is a binary classification

technique based on statistical learning theory. Under binary

settings, many successful AL strategies have been proposed

for SVM, such as uncertainty reduction [3], [4], version space

(VS) reduction [5], minimum expected model loss [6], etc.

However, extending these strategies to multiclass problems is

still a challenging issue due to the following two reasons.

• Traditional SVMs are binary classifiers. In order to realize multiclass AL (MAL), it is necessary to construct an effective multiclass SVM model.
• Designing a sample selection criterion for multiple classes is much more complicated than for two classes. For instance, the size of the VS for a binary SVM is easy to calculate, while the size of the VS for multiple SVMs is hard to define.

Existing SVM-based multiclass models, such as one-against-all (OAA) [7] or one-against-one (OAO) [8], decompose the multiclass problem into a set of binary problems. These models evaluate the informativeness of unlabeled samples by aggregating the output decision values of the binary SVMs, or by estimating the class probabilities of the samples.

Possibility theory [9], as an extension of fuzzy sets and fuzzy logic [10], is a commonly used technique for dealing with vagueness and imprecision in the given information. It has great potential for solving AL problems, especially for SVM under multiclass environments. First, the possibility approach is able to evaluate the uncertainty of unlabeled samples by aggregating a set of class memberships. This is intrinsically compatible with MAL. Besides, the memberships in possibility theory can be independent. Under this condition, an SVM-based model can compute the memberships by decomposing a multiclass problem into a set of binary problems, with one for each class. Second, the possibility approach is based on an ordering structure rather than an additive structure. This feature makes it less restrictive in measuring the unlabeled samples for an SVM-based model. For instance, the probability approach has to consider the pairwise relations of all the classes to satisfy the additive property. The possibility approach, however, can relax the additive property and enable SVM to compute the memberships in a simpler way.

To the best of our knowledge, the application of the possibility

approach to AL has not been investigated. In this paper, a new

pool-based MAL strategy is proposed. First, the possibilistic

memberships of unlabeled samples are calculated from an OAA-based SVM model. Then, fuzzy logic operators [11], [12],

[13] are employed to aggregate the memberships into a new

concept named k-order ambiguity, which estimates the risk

of labeling a sample among k classes. Finally, a new uncertainty measurement is proposed by integrating the k-order

ambiguities. It is noteworthy that possibility approach has no

difference with probability approach for binary SVM, since the

positive and negative memberships are always complementary.

However, possibility approach is more flexible for multiclass

SVM, since it defines an ordering structure with independent

memberships.

This work is partially supported by Hong Kong RGC General Research

Fund GRF grant 9042038 (CityU 11205314); the National Natural Science

Foundation of China under the Grant 61402460; and China Postdoctoral

Science Foundation Funded Project under Grant 2015M572386.

R. Wang is with the Department of Computer Science, City University

of Hong Kong, Hong Kong, and also with Shenzhen Institutes of Advanced

Technology, Chinese Academy of Sciences, Shenzhen 518055, China (e-mail:

ranwang3-c@my.cityu.edu.hk; wangran@siat.ac.cn).

C.-Y. Chow and S. Kwong are with the Department of Computer Science,

City University of Hong Kong, Hong Kong (e-mail: chiychow@cityu.edu.hk,

CSSAMK@cityu.edu.hk).

The remainder of this paper is organized as follows. In

Section II, we present some related works. In Section III,

we design the ambiguity measure and prove its basic properties, then we establish the ambiguity-based multiclass active

learning strategy. In Section IV, we conduct extensive experimental comparisons to show the feasibility and effectiveness

of the proposed method. Finally, conclusions and future work

directions are given in Section V.


II. BACKGROUNDS AND RELATED WORKS

Researchers have made some efforts to realize SVM-based

MAL. A number of works adopt OAA approach to construct

the base models [5], [14], [15], [16], and design the sample

selection criterion by aggregating the output decision values of

the binary SVMs. Specifically, Tong [5] proposed to evaluate

the uncertainty of an unlabeled sample by aggregating its

distances to all the SVM hyperplanes. Later, he proposed

to evaluate the model loss by aggregating the VS areas of

the binary SVMs after having queried an unlabeled sample

and received a certain label. This method was also discussed

in [15] and [16] by designing a more effective approximation

method for the VS areas. Moreover, Hospedales et al. [14]

proposed to evaluate the unlabeled samples by both generative

and discriminative models, in order to achieve both accurate

prediction and class discovery. In addition to OAA approach,

OAO approach is also effective to construct multiclass SVM

models. For instance, Joshi et al. [17] proposed a scalable AL

strategy for multiclass image classification, which estimates

the pairwise probabilities of the images by OAO approach,

and selects the one with the highest value-of-information. This

work also stated that entropy might not be a good sample

selection criterion, since the entropy value is highly affected

by the probabilities of unimportant classes. Thus, the best vs.

second best (BvSB) method was developed, which only makes

use of the two largest probabilities.

Possibility approach, different from the above techniques,

is an uncertainty analysis tool with imprecise probabilities.

It is driven by the minimal specificity principle, which states

that any hypothesis not known to be impossible cannot be

ruled out. Given a C-class problem, assume $\mu_i(x)$ is the membership of sample x in the i-th class (i = 1, . . . , C), then the memberships are said to be possibilistic/fuzzy if $\mu_i(x) \in [0, 1]$, and further probabilistic if $\sum_{i=1}^{C} \mu_i(x) = 1$.

In the context of AL, if the memberships are possibilistic,

then

1) µi (x) = 0 means that class i is rejected as impossible

for x;

2) µi (x) = 1 means that class i is totally possible for x;

3) at least one class is totally possible for x, i.e.,

$\max_{i=1,\ldots,C}\{\mu_i(x)\} = 1$.

With a normalisation process, condition 3 can be modified as $\max_{i=1,\ldots,C}\{\mu_i(x)\} \neq 0$.

There are two schemes to handle possibilistic memberships:

1) transform them to probabilities and apply probability approaches; or 2) aggregate them by fuzzy logic operators. It is

stated in [18] that transforming possibilities to probabilities or

conversely can be useful in many cases. However, they are not

equivalent representations. Probabilities are based on an additive

structure, while possibilities are based on an ordering structure

and are more explicit in handling imprecision. Thus, we only

focus on the second scheme in this paper.

On the other hand, Wang et al. [19] proposed a concept of

classification ambiguity to measure the non-specificity of a set,

and applied it to the induction of fuzzy decision tree. Given

a set R with a number of labeled samples, its classification

ambiguity is defined as:

$$\mathrm{Ambiguity}(R) = \sum_{i=1}^{C} \left(p_i^{*} - p_{i+1}^{*}\right) \log i, \tag{1}$$

where $(p_1, \ldots, p_C)$ is the class frequency in R, and $(p_1^{*}, \ldots, p_C^{*}, p_{C+1}^{*})$ is the normalisation of $(p_1, \ldots, p_C, 0)$ with descending order, i.e., $1 = p_1^{*} \geq \ldots \geq p_{C+1}^{*} = 0$. Later,

Wang et al. [20] applied the same measure to the induction

of extreme learning machine tree, and used it for attribute

selection during the induction process. It is noteworthy that

both [19] and [20] applied the ambiguity measure to a set

of probabilities. However, applying this concept to a set of

possibilities might be more effective. Besides, other than

measuring the non-specificity of a set, it also has the potential to

measure the uncertainty of an unlabeled sample.
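As an illustration of Eq. (1), the following minimal sketch computes the classification ambiguity of a set from its class frequencies. The function name `classification_ambiguity` and the use of the natural logarithm are assumptions made for this example; the source does not state the base of the logarithm.

```python
import math


def classification_ambiguity(freqs):
    """Eq. (1): sum_i (p*_i - p*_{i+1}) * log(i) on normalised, sorted frequencies."""
    top = max(freqs)
    if top <= 0:
        raise ValueError("at least one class frequency must be positive")
    # Normalise by the largest frequency, sort in descending order,
    # and append p*_{C+1} = 0 as required by Eq. (1).
    p = sorted((f / top for f in freqs), reverse=True) + [0.0]
    # p[i - 1] holds p*_i, so the sum runs over i = 1, ..., C.
    return sum((p[i - 1] - p[i]) * math.log(i) for i in range(1, len(freqs) + 1))


if __name__ == "__main__":
    print(classification_ambiguity([5, 5]))      # two equally frequent classes
    print(classification_ambiguity([7, 0, 0]))   # a pure set
```

Under these assumptions a pure set has ambiguity 0, and a set with C equally frequent classes has ambiguity log C, which matches the intuition that non-specificity grows with the number of equally plausible classes.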

Motivated by the above statements, in this paper, we will

apply the possibility approach to MAL, and develop an ambiguity-based strategy for SVM.

III. AMBIGUITY-BASED MULTICLASS ACTIVE LEARNING

A. Ambiguity Measure

In fuzzy theory, fuzzy logic provides functions for aggregating fuzzy sets and fuzzy relations. These functions are called

aggregation operators. In MAL, if we treat the possibilistic

memberships of unlabeled samples as fuzzy sets, then the

aggregation operators in fuzzy logic could be used to evaluate

the informativeness of these samples.

Given a set of possibilistic memberships $\{\mu_1, \ldots, \mu_C\}$ where $\mu_i \in [0, 1]$, $i = 1, \ldots, C$, Frélicot and Mascarilla [11] proposed the fuzzy OR-2 aggregation operator $\triangledown^{(2)}$ as:

$$\triangledown^{(2)}_{i=1,\ldots,C}\, \mu_i = \triangledown_{i=1,\ldots,C} \Big( \mu_i \,\triangle\, \big( \triangledown_{j \neq i}\, \mu_j \big) \Big), \tag{2}$$

where $(\triangle, \triangledown)$ is a dual pair of t-norm and t-conorm.

It is demonstrated in [12] that when the standard t-norm $a \,\triangle\, b = \min\{a, b\}$ and standard t-conorm $a \,\triangledown\, b = \max\{a, b\}$ are selected, Eq. (2) has the property of $\triangledown^{(2)}_{i=1,\ldots,C}\, \mu_i = \mu'_2$, where $\mu'_2$ is the second largest membership among $\{\mu_1, \ldots, \mu_C\}$. Based on this property, they proposed a specific fuzzy OR-2 operator, i.e., $\triangledown^{(2)}_{i=1,\ldots,C}\, \mu_i = \triangle_{i=1,\ldots,C}\, \triangledown_{j \neq i}\, \mu_j$, and proved that it is continuous, monotonic, and symmetric under some boundary conditions.

Mascarilla et al. [13] further extended Eq. (2) to a generalized version of k-order fuzzy OR operator. Let $\mathcal{C} = \{1, \ldots, C\}$, $\mathcal{P}(\mathcal{C})$ be the power set of $\mathcal{C}$, and $\mathcal{P}_k = \{A \in \mathcal{P}(\mathcal{C}) : |A| = k\}$ where $|A|$ is the cardinality of subset A, the k-order fuzzy OR operator $\triangledown^{(k)}$ is defined as:

$$\triangledown^{(k)}_{i=1,\ldots,C}\, \mu_i = \triangle_{A \in \mathcal{P}_{k-1}}\, \triangledown_{j \in \mathcal{C} \setminus A}\, \mu_j, \tag{3}$$

where $(\triangle, \triangledown)$ is a dual pair of t-norm and t-conorm, and $k \in \{2, \ldots, C\}$.

By theoretical proof, they also demonstrated that when the standard t-norm $a \,\triangle\, b = \min\{a, b\}$ and standard t-conorm $a \,\triangledown\, b = \max\{a, b\}$ are selected, Eq. (3) has the property of $\triangledown^{(k)}_{i=1,\ldots,C}\, \mu_i = \mu'_k$, where $\mu'_k$ is the k-th largest membership among $\{\mu_1, \ldots, \mu_C\}$.
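The property stated for Eq. (3) can be checked numerically. The sketch below evaluates the k-order fuzzy OR literally, by enumerating every subset A of k − 1 classes, with the standard t-norm (min) and t-conorm (max). The function name `fuzzy_or_k` is chosen for this example only; the enumeration is exponential in C and is meant for illustration rather than efficient use.

```python
from itertools import combinations


def fuzzy_or_k(mu, k):
    """Eq. (3) with the standard t-norm (min) and standard t-conorm (max)."""
    C = len(mu)
    if not 2 <= k <= C:
        raise ValueError("k must lie in {2, ..., C}")
    indices = range(C)
    # For every subset A of k - 1 class indices, take the largest membership
    # outside A (t-conorm), then combine these values with min (t-norm).
    return min(
        max(mu[j] for j in indices if j not in A)
        for A in combinations(indices, k - 1)
    )


if __name__ == "__main__":
    mu = [0.2, 0.9, 0.5, 0.7]
    for k in range(2, len(mu) + 1):
        print(k, fuzzy_or_k(mu, k), sorted(mu, reverse=True)[k - 1])
```

For each k the two printed values coincide, so in practice the operator can be replaced by sorting the memberships and reading off the k-th largest one.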

It is noteworthy that there are various combinations of aggregation operators and t-norms, but the study on them is not the focus of this work. For simplicity, we adopt the standard t-norm and standard t-conorm with the aforementioned aggregation operators.

In decision theory, ambiguity indicates the risk of classifying a sample based on its memberships. Furthermore, a larger membership has a higher influence on the risk. Obviously, the larger the ambiguity, the higher the difficulty in classifying the sample. In this section, we will design an ambiguity measure to achieve this purpose. For the sake of clarity, we start from a set of axioms. Given a sample x with a set of possibilistic memberships $\{\mu_1(x), \ldots, \mu_C(x)\}$, where $\mu_i(x) \in [0, 1]$ (i = 1, . . . , C) is the possibility of x in the i-th class, the ambiguity measure on x, denoted as $A_C(x) = A(\mu_1(x), \ldots, \mu_C(x))$, is a continuous mapping $[0, 1]^C \rightarrow [0, a]$ (where $a \in \mathbb{R}^{+}$) satisfying the following three axioms:

1) Symmetry: $A_C(x)$ is a symmetric function of $\mu_1(x), \ldots, \mu_C(x)$.
2) Monotonicity: $A_C(x)$ is monotonically decreasing in $\max\{\mu_i(x)\}$, and is monotonically increasing in the other $\mu_i(x)$.
3) Boundary condition: $A_C(x) = a$ when $\mu_1(x) = \mu_2(x) = \ldots = \mu_C(x) \neq 0$; $A_C(x) = 0$ when $\max\{\mu_i(x)\} \neq 0$ and $\mu_i(x) = 0$ otherwise.

According to Axiom 1, the ambiguity value remains the same with regard to any permutation of the memberships. According to Axiom 2, the increase of the greatest membership and the decrease of the other memberships will lead to a smaller ambiguity, i.e., the classification risk on a sample is lower when the differences between its greatest membership and the other memberships are larger. According to Axiom 3, the classification of a sample is most difficult when it equally belongs to all the classes, and is easiest when only the greatest membership is not zero.

Frélicot et al. [12] applied the fuzzy OR-2 operator to the classification problem, and proposed the 2-order ambiguity to evaluate the classification risk of x, which is defined as:

$$A_C^{(2)}(x) = \frac{\triangledown^{(2)}_{i=1,\ldots,C}\, \mu_i(x)}{\triangledown_{i=1,\ldots,C}\, \mu_i(x)}. \tag{4}$$

Eq. (4) reflects the risk of labeling x between two classes, i.e., class $i \in \mathcal{C}$ and the mostly preferred class from the others $\mathcal{C} \setminus i$. By applying the standard t-norm and standard t-conorm, Eq. (4) is equal to making a comparison between the largest membership and the second largest membership. In this paper, we extend the 2-order ambiguity measure to a generalized version of k-order ambiguity measure as:

$$A_C^{(k)}(x) = \frac{\triangledown^{(k)}_{i=1,\ldots,C}\, \mu_i(x)}{\triangledown_{i=1,\ldots,C}\, \mu_i(x)}. \tag{5}$$

Similarly, Eq. (5) reflects the risk of labeling x among k classes, i.e., classes $A \in \mathcal{P}(\mathcal{C}) : |A| = k - 1$ and the mostly preferred class from the others $\mathcal{C} \setminus A$. By applying the standard t-norm and standard t-conorm, Eq. (5) is equal to making a comparison between the largest membership and the k-th largest membership.

Fig. 1: Values of $\frac{(\log k - \log(k-1))^{\gamma}}{(\log 2 - \log 1)^{\gamma}}$ with different γ (curves for γ = 1, . . . , 5; horizontal axis: k = 2, . . . , 11).

In order to get the precise uncertainty information of x, we have to consider all the ambiguities, i.e., $A_C^{(k)}(x)$, k = 2, . . . , C. The most efficient way is to aggregate them by weighted sum. As a result, we propose an overall ambiguity measure as Definition 1.

Definition 1: (Ambiguity) Given a sample x with a set of possibilistic memberships $\{\mu_1(x), \ldots, \mu_C(x)\}$, where $\mu_i(x) \in [0, 1]$ (i = 1, . . . , C) is the possibility of x in the i-th class, then, the ambiguity of x is defined as:

$$A_C(x) = \sum_{k=2}^{C} w_k\, A_C^{(k)}(x), \tag{6}$$

where $w_k$ is the weight for the k-order ambiguity.

It is a consensus that in classifying a sample, the large

memberships are critical, and the small memberships are less

important. With standard t-norm and standard t-conorm, the korder ambiguity is proportional to the k-th largest membership.

As a result, the 2-order ambiguity should be given the highest

weight, and the C-order ambiguity should be given the lowest

weight. In this case, we propose to use a nonlinear weight

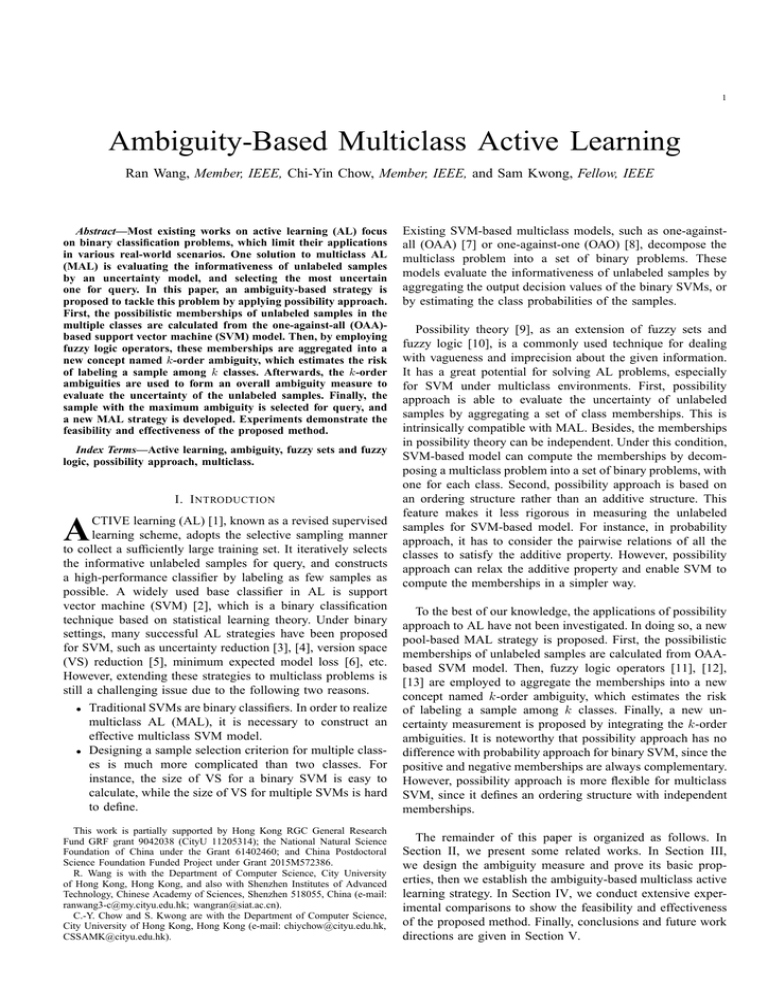

function wk = (log k − log(k − 1))γ , since 1) it is positive

decreasing in [0, +∞], and 2) it can give higher importance to

the large memberships. In this weight function, the scale factor

γ is a real positive

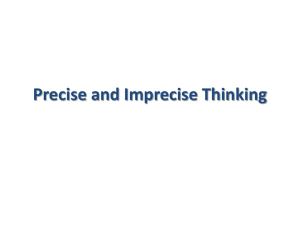

integer. Fig. 1 demonstrates the values of

(log k−log(k−1))γ

when

γ = 1, . . . , 5, which equals to getting

γ

(log 2−log 1)

the normalised weights with w1 = 1. Obviously, a larger γ

can further magnify the importance of the large memberships,

i.e., the 2-order ambiguity will become even more important

and the C-order ambiguity will become even less important.
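To make Definition 1 concrete, the following sketch evaluates Eq. (6) under the standard t-norm and t-conorm, in which case the k-order ambiguity reduces to the ratio of the k-th largest membership to the largest one. The function name `ambiguity` and the natural logarithm are assumptions of this example.

```python
import math


def ambiguity(mu, gamma=1):
    """Eq. (6) with w_k = (log k - log(k - 1))**gamma and min/max operators."""
    ordered = sorted(mu, reverse=True)   # mu'_1 >= ... >= mu'_C
    top = ordered[0]
    if top <= 0:
        raise ValueError("the largest membership must be positive")
    total = 0.0
    for k in range(2, len(ordered) + 1):
        weight = (math.log(k) - math.log(k - 1)) ** gamma
        # k-order ambiguity: k-th largest membership over the largest one.
        total += weight * ordered[k - 1] / top
    return total


if __name__ == "__main__":
    print(ambiguity([0.9, 0.8, 0.1]))           # two strong competitors
    print(ambiguity([0.9, 0.2, 0.1]))           # one clear winner
    print(ambiguity([0.9, 0.8, 0.1], gamma=5))  # larger gamma stresses the top two
```

With γ = 1 and all memberships equal, the weights telescope to log C, which is the maximum of the measure; a sample with a single nonzero membership has ambiguity 0.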

B. Properties of the Ambiguity Measure

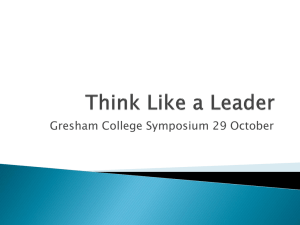

Fig. 2 demonstrates the ambiguity value when C = 2

and γ = 1. Under these conditions, the ambiguity has

several common features. It is symmetric about µ1 (x) =

µ2 (x), strictly decreasing in max{µ1 (x), µ2 (x)}, strictly increasing in min{µ1 (x), µ2 (x)}, and concave. Besides, it attains the maximum at µ1 (x) = µ2 (x) and minimum at

min{µ1 (x), µ2 (x)} = 0. Having these observations, we further give some general properties of the ambiguity measure by

relaxing the conditions of C = 2 and γ = 1. For the sake of

clarity, we denote µi (x) as µi in short, and let µ′1 ≥ . . . ≥ µ′C

be the sequence of µ1 , . . . , µC in descending order.

Theorem 1: AC (x) is a symmetric function of µ1 , . . . , µC .

Proof: Let $\mu'_1 \geq \ldots \geq \mu'_C$ be the sequence of $\mu_1, \ldots, \mu_C$ in descending order, then

$$\begin{aligned}
A_C(x) &= \sum_{k=2}^{C} A_C^{(k)}(x)\,(\log k - \log(k-1))^{\gamma} \\
&= \sum_{k=2}^{C} \frac{\triangledown^{(k)}_{i=1,\ldots,C}\, \mu_i}{\triangledown_{i=1,\ldots,C}\, \mu_i}\,(\log k - \log(k-1))^{\gamma} \\
&= \sum_{k=2}^{C} \frac{\mu'_k}{\mu'_1}\,(\log k - \log(k-1))^{\gamma} \\
&= \frac{\mu'_2}{\mu'_1}(\log 2 - \log 1)^{\gamma} + \frac{\mu'_3}{\mu'_1}(\log 3 - \log 2)^{\gamma} + \ldots + \frac{\mu'_C}{\mu'_1}(\log C - \log(C-1))^{\gamma}.
\end{aligned} \tag{7}$$

Given any permutation of $\mu_1, \ldots, \mu_C$, the order $\mu'_1 \geq \ldots \geq \mu'_C$ remains unchanged. Based on Eq. (7) and the definition of symmetric function, the proof is straightforward.

Theorem 2: $A_C(x)$ will decrease when $\mu'_1$ increases and all the others are unchanged; $A_C(x)$ will increase when $\mu'_i$, $i \in \{2, \ldots, C\}$ increases and all the others are unchanged.

Proof: Following the expression in Eq. (7), when i = 1,

$$\frac{\partial A_C(x)}{\partial \mu'_1} = -\frac{\mu'_2}{\mu'^{2}_1}(\log 2 - \log 1)^{\gamma} - \frac{\mu'_3}{\mu'^{2}_1}(\log 3 - \log 2)^{\gamma} - \ldots - \frac{\mu'_C}{\mu'^{2}_1}(\log C - \log(C-1))^{\gamma} < 0;$$

when i = 2, . . . , C,

$$\frac{\partial A_C(x)}{\partial \mu'_i} = \frac{1}{\mu'_1}(\log i - \log(i-1))^{\gamma} > 0.$$

Theorem 3: $A_C(x)$ attains its maximum at $\mu'_1 = \ldots = \mu'_C \neq 0$, and its minimum at $\mu'_1 \neq 0$, $\mu'_i = 0$ for $i = 2, \ldots, C$.

Proof: When $\mu'_1 = \ldots = \mu'_C \neq 0$, it is clear that $\frac{\mu'_2}{\mu'_1} = \ldots = \frac{\mu'_C}{\mu'_1} = 1$; when $\mu'_1 \neq 0$ and $\mu'_i = 0$ for $i = 2, \ldots, C$, it is clear that $\frac{\mu'_2}{\mu'_1} = \ldots = \frac{\mu'_C}{\mu'_1} = 0$. Since $\mu'_1 \geq \ldots \geq \mu'_C$, following the expression in Eq. (7), the proof is straightforward.

Theorem 4: When γ is set to 1, $A_C(x)$ has the same form as Eq. (1).

Proof: Following the expression in Eq. (7), suppose $\mu'_{C+1} = 0$, when γ = 1 we have:

$$\begin{aligned}
A_C(x) &= \frac{\mu'_2}{\mu'_1}(\log 2 - \log 1) + \frac{\mu'_3}{\mu'_1}(\log 3 - \log 2) + \ldots + \frac{\mu'_C}{\mu'_1}(\log C - \log(C-1)) \\
&= -\frac{\mu'_2}{\mu'_1}\log 1 + \Big(\frac{\mu'_2}{\mu'_1} - \frac{\mu'_3}{\mu'_1}\Big)\log 2 + \ldots + \Big(\frac{\mu'_{C-1}}{\mu'_1} - \frac{\mu'_C}{\mu'_1}\Big)\log(C-1) + \frac{\mu'_C}{\mu'_1}\log C \\
&= \Big(\frac{\mu'_1}{\mu'_1} - \frac{\mu'_2}{\mu'_1}\Big)\log 1 + \Big(\frac{\mu'_2}{\mu'_1} - \frac{\mu'_3}{\mu'_1}\Big)\log 2 + \ldots + \Big(\frac{\mu'_C}{\mu'_1} - \frac{\mu'_{C+1}}{\mu'_1}\Big)\log C \\
&= \sum_{i=1}^{C} \left(\mu^{*}_i - \mu^{*}_{i+1}\right)\log i,
\end{aligned}$$

where $\mu^{*}_i = \mu'_i / \mu'_1$.

Theorems 1∼3 show that the three basic axioms given in Section III-A are satisfied by the proposed ambiguity measure, and Theorem 4 demonstrates that the proposed measure is a generalized and extended version of Eq. (1) proposed in [19].

Fig. 2: Ambiguity value when C = 2 and γ = 1 (axes: $\mu_1(x)$, $\mu_2(x)$, and $A_2(x)$).

C. Fuzzy Memberships of Unlabeled Sample

Given a binary training set $\{(x_i, y_i)\}_{i=1}^{n} \in \mathbb{R}^m \times \{+1, -1\}$, the SVM hyperplane is defined as $w^T x + b = 0$, where $w \in \mathbb{R}^m$ and $b \in \mathbb{R}$. The linearly separable case is formulated as $\min_{w,b} \frac{1}{2} w^T w$, s.t. $y_i(w^T x_i + b) \geq 1$, $i = 1, \ldots, n$. The nonlinearly separable case could be handled by soft-margin SVM, which transforms the formulation into $\min_{w,b,\xi} \frac{1}{2} w^T w + \theta \sum_{i=1}^{n} \xi_i$, s.t. $y_i(w^T x_i + b) \geq 1 - \xi_i$, $\xi_i \geq 0$, $i = 1, \ldots, n$, where $\xi_i$ is the slack variable introduced to $x_i$, and θ is a trade-off between the maximum margin and minimum training error. Besides, the kernel trick [2] is adopted, which maps the samples into a higher dimensional feature space via $\phi : x \rightarrow \phi(x)$, and expresses the inner product of the feature space as a kernel function $K : \langle \phi(x), \phi(x_i) \rangle = K(x, x_i)$. By the Lagrange method, the decision function is obtained as $h(x) = \sum_{i=1}^{n} y_i \alpha_i K(x, x_i) + b$, where $\alpha_i$ is the Lagrange multiplier of $x_i$, and the final classifier is $f(x) = \mathrm{sign}(h(x))$.
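The decision function above can be sketched in a few lines once the multipliers are known. This example does not solve the SVM optimisation problem: the support vectors, labels, multipliers `alphas` and bias `b` used in the demo are made-up toy values, and the RBF kernel form is the one the paper adopts later in its experimental design.

```python
import math


def rbf_kernel(x, z, sigma=1.0):
    """K(x, z) = exp(-||x - z||^2 / (2 * sigma^2))."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def decision_value(x, support_vectors, labels, alphas, b, kernel=rbf_kernel):
    """h(x) = sum_i y_i * alpha_i * K(x, x_i) + b."""
    return sum(
        y * alpha * kernel(x, sv)
        for sv, y, alpha in zip(support_vectors, labels, alphas)
    ) + b


def classify(x, support_vectors, labels, alphas, b):
    """f(x) = sign(h(x)); a point exactly on the boundary is mapped to +1 here."""
    return 1 if decision_value(x, support_vectors, labels, alphas, b) >= 0 else -1


if __name__ == "__main__":
    # Toy values, not the result of training.
    svs = [(0.0, 0.0), (2.0, 0.0)]
    ys = [+1, -1]
    alphas = [1.0, 1.0]
    print(decision_value((0.5, 0.0), svs, ys, alphas, b=0.0))
    print(classify((1.8, 0.0), svs, ys, alphas, b=0.0))
```

The sign of h(x) gives the predicted label, while its magnitude is the quantity that the membership function in this section converts into a possibility.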

SVM-based multiclass models decompose a multiclass

problem into a set of binary problems. Among the solutions,

OAA approach is one of the most effective and efficient methods. It constructs C binary classifiers for a C-class problem, where $f_q(x) = \mathrm{sign}(h_q(x))$, $q = 1, \ldots, C$, separates class q from the remaining C − 1 classes. For a testing sample $\hat{x} \in \mathbb{R}^m$, the output class is determined as $\hat{y} = \arg\max_{q=1,\ldots,C} h_q(\hat{x})$.

In a binary SVM, the absolute decision value of a sample

is proportional to its distance to the SVM hyperplane, and

a larger distance represents a higher degree of certainty in

its label. In the OAA-based SVM model, the membership of x in class q could be computed by the logistic function [21], i.e., $\mu_q(x) = \frac{1}{1 + e^{-h_q(x)}}$, which has the following properties:

• When $h_q(x) > 0$, $\mu_q(x) \in (0.5, 1]$, and $\mu_q(x)$ will increase with the increase of $|h_q(x)|$;
• When $h_q(x) < 0$, $\mu_q(x) \in [0, 0.5)$, and $\mu_q(x)$ will decrease with the increase of $|h_q(x)|$;
• When $h_q(x) = 0$, x lies on the decision boundary, and $\mu_q(x) = 0.5$.

Obviously, each membership is independently defined for a specific class. Since the memberships are possibilistic, the problem is suitable to be handled by possibility theory.
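The properties listed above can be verified with a short sketch of the logistic membership. The function names are chosen for this example, and the sign-based branching is an implementation detail added here to avoid floating-point overflow for large negative decision values.

```python
import math


def membership(h):
    """Logistic membership mu_q(x) = 1 / (1 + exp(-h_q(x)))."""
    # Branch on the sign so that exp() never receives a large positive argument.
    if h >= 0:
        return 1.0 / (1.0 + math.exp(-h))
    e = math.exp(h)
    return e / (1.0 + e)


def memberships(decision_values):
    """Map the C one-against-all decision values of one sample to memberships."""
    return [membership(h) for h in decision_values]


if __name__ == "__main__":
    mu = memberships([2.0, 1.0, -3.0])
    print(mu)
    print(sum(mu))  # not constrained to equal 1
```

Because each membership depends only on its own decision value, the memberships of a sample need not sum to one, which is what distinguishes them from class probabilities.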

D. Algorithm Description

By applying the ambiguity measure and the fuzzy membership calculation, the ambiguity-based MAL strategy is

depicted as Algorithm 1.
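A compact sketch of the query loop may help in reading the strategy. It is not the authors' implementation: `train_oaa` (returns one decision function per class) and `oracle` (returns the label of a queried sample) are hypothetical callables supplied by the user, and a fixed labeling `budget` stands in for the unspecified stop criterion.

```python
import math


def membership(h):
    """Numerically stable logistic function."""
    if h >= 0:
        return 1.0 / (1.0 + math.exp(-h))
    e = math.exp(h)
    return e / (1.0 + e)


def ambiguity(mu, gamma=1):
    """Eq. (6) with min/max operators: weighted ratios mu'_k / mu'_1."""
    s = sorted(mu, reverse=True)
    return sum(
        (s[k - 1] / s[0]) * (math.log(k) - math.log(k - 1)) ** gamma
        for k in range(2, len(s) + 1)
    )


def select_query(pool, decision_fns, gamma=1):
    """Return the index of the pool sample with the maximum ambiguity."""
    def score(x):
        return ambiguity([membership(h(x)) for h in decision_fns], gamma)
    return max(range(len(pool)), key=lambda j: score(pool[j]))


def active_learning(labeled, pool, train_oaa, oracle, budget, gamma=1):
    """Query up to `budget` labels, retraining after every query."""
    labeled, pool = list(labeled), list(pool)
    decision_fns = train_oaa(labeled)          # hypothetical OAA trainer
    for _ in range(budget):
        if not pool:
            break
        x = pool.pop(select_query(pool, decision_fns, gamma))
        labeled.append((x, oracle(x)))         # hypothetical labeling oracle
        decision_fns = train_oaa(labeled)
    return decision_fns, labeled
```

Any model that exposes per-class decision values can be plugged in through `train_oaa`; retraining all C classifiers after each query mirrors the update step of the strategy and dominates the running time.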

Algorithm 1: Ambiguity-Based MAL
Input:
  • Initial labeled set L = {(x_i, y_i)}, i = 1, . . . , l, in R^m × {1, . . . , C};
  • Unlabeled pool U = {x_j}, j = 1, . . . , u, in R^m;
  • Scale factor γ and parameters for training base-classifiers.
Output:
  • Classifiers f_1, . . . , f_C trained on the final labeled set.
 1  Learn C SVM hyperplanes h_1, . . . , h_C on L by the OAA approach, one for each class;
 2  while U is not empty do
 3      if stop criterion is met then
 4          Let f_1 = sign(h_1), . . . , f_C = sign(h_C);
 5          return f_1, . . . , f_C;
 6      else
 7          for each x_j ∈ U do
 8              Calculate its decision values by the SVMs, i.e., h_q(x_j), q = 1, . . . , C;
 9              Calculate its fuzzy memberships in the classes, i.e., µ_q(x_j) = 1 / (1 + e^{−h_q(x_j)}), q = 1, . . . , C;
10              Calculate its ambiguity based on Eq. (6), i.e., A_C(x_j);
11          end
12          Find the unlabeled sample with the maximum ambiguity, i.e., x* = argmax_{x_j} A_C(x_j);
13          Query the label of x*, denoted by y*;
14          Let U = U \ x*, and L = L ∪ (x*, y*);
15          Update h_1, . . . , h_C based on L;
16      end
17  end
18  return f_1, . . . , f_C;

We now give an analysis on the time complexity of selecting one sample in Algorithm 1. Given an iteration, suppose the number of labeled training samples is l, and the number of unlabeled samples is u. Based on [22], training a radial basis function (RBF) kernel-based SVM has the highest complexity of $O(s^3)$, and making a prediction for one testing sample has the complexity of $O(sm)$, where s is the number of support vectors (SVs) and m is the input dimension. Thus, training the C binary SVMs (line 1) leads to the highest complexity of $O(Cl^3)$, calculating the decision values of the u unlabeled samples (line 8) leads to the highest complexity of $O(uClm)$, and calculating the ambiguity values of the u unlabeled samples (lines 9∼10) leads to the highest complexity of $O(uC^2)$. Furthermore, finding the sample with the maximum ambiguity (line 12) leads to a complexity of $O(u)$. Finally, the complexity for selecting one sample in Algorithm 1 is computed as $O(Cl^3) + O(uClm) + O(uC^2) + O(u) \approx O(Cl^3) + O(uClm) = O(Cl(l^2 + um))$. It is noteworthy that this complexity is the highest possible one when all the training samples are supposed to be the SVs.

IV. EXPERIMENTAL COMPARISONS

A. Comparative Methods

Five MAL strategies are used in this paper to compare with the proposed algorithm (Ambiguity).

1) Random Sampling (Random): During each iteration, the learner randomly selects an unlabeled sample for query. The OAA approach is adopted to train the base classifiers.

2) Margin-Based Strategy (Margin) [5]: With the OAA approach, the margin values of the C binary SVMs are aggregated into an overall margin, i.e., $m(x) = \prod_{q=1}^{C} |h_q(x)|$, and the learner selects the one with the minimum aggregated margin, i.e., $x^{*} = \arg\min_{x} m(x)$.

3) Version Space Reduction (VS Reduction) [15], [16]: With the OAA approach, assume the original VS area of $h_q$ is $\mathrm{Area}(V^{(q)})$, and the new area after querying sample x is $\mathrm{Area}(V_x^{(q)})$, then an approximation method is applied, i.e., $\mathrm{Area}(V_x^{(q)}) \approx \left(\frac{|h_q(x)| + 1}{2}\right) \mathrm{Area}(V^{(q)})$. Finally, the sample is selected by $x^{*} = \arg\min_{x} \prod_{q=1}^{C} \mathrm{Area}(V_x^{(q)})$.

4) Entropy-Based Strategy (Entropy) [17]: This method is based on probability theory. The OAO approach is adopted, which constructs C(C − 1)/2 binary classifiers, and each one is for a pair of classes. The classifier of class q against class g is defined as $h_{q,g}(x)$ when q < g, where x belongs to class q if $h_{q,g}(x) > 0$ and class g if $h_{q,g}(x) < 0$. Besides, $h_{q,g}(x) = -h_{g,q}(x)$ when q > g. The pairwise probabilities of x regarding classes q and g are derived as $r_{q,g}(x) = \frac{1}{1 + e^{-h_{q,g}(x)}}$ when q < g, and $r_{q,g}(x) = 1 - r_{g,q}(x)$ when q > g. The probability of x in class q is calculated as $p_q(x) = \frac{2 \sum_{g \neq q,\, g=1}^{C} r_{q,g}(x)}{C(C-1)}$. Obviously, $\sum_{q=1}^{C} p_q(x) = 1$. Finally, the sample with the maximum entropy is selected, i.e., $x^{*} = \arg\max_{x} -\sum_{q=1}^{C} p_q(x) \log p_q(x)$.

5) Best vs. Second Best (BvSB) [17]: This method applies the same probability estimation process as Entropy, and only makes use of the two most important classes. Assume the largest and second largest class probabilities of sample x are $p_1^{*}(x)$ and $p_2^{*}(x)$ respectively, then the most informative sample is selected by $x^{*} = \arg\min_{x} (p_1^{*}(x) - p_2^{*}(x))$.

B. Experimental Design

The experiments are first conducted on 12 multiclass UCI

machine learning datasets as listed in Table I. Since the

testing samples are not available for Glass, Cotton, Libras,

Dermatology, Ecoli, Yeast, and Letter, in order to have a

sufficiently large unlabeled pool, 90% of the data are randomly selected as the training set and the remaining 10% as the testing set. Each input feature is normalised to [0,1]. The initial training set is formed by two randomly chosen labeled samples from each class, and the learning stops after 100 new samples have been labeled or the selective pool becomes empty. To reduce the effect of randomness, 50 trials are conducted on the datasets with fewer than 2,000 samples, and 10 trials are conducted on the larger datasets. Finally, the average results are recorded.

For fair comparison, θ is fixed as 100 for SVM, and the RBF kernel $K(x, x_i) = \exp\left(-\frac{\|x - x_i\|^2}{2\sigma^2}\right)$ with σ = 1 is adopted.

Besides, γ in Eq. (6) is treated as a parameter, and tuned

on the training set. More specifically, the training set X is

divided into two subsets, i.e., X1 and X2 , with equal size.

Active learning is conducted on X1 by fixing γ = 1, 2, . . . , 10,

then, the models are validated on X2 and the best γ value is

selected. The selected γ values for the 12 datasets are listed

in the last column of Table I.

6

TABLE I: Selected datasets for performance comparison

#Feature

Feature Type

#Class

Among the six strategies, Random is a baseline, Margin

and VS Reduction directly utilize the output decision values

of the SVMs, Entropy and BvSB are probability approaches

based on the OAO model, and Ambiguity is a possibility

approach based on the OAA model.

Fig. 3 demonstrates the average testing accuracy and standard deviation of different trials for the six strategies.

The average results on the 12 datasets are shown in Fig. 4.

It is clear from these results that Ambiguity has obtained

satisfactory performances on all the datasets except Vowel. In

fact Vowel is a difficult problem that all the methods fail to

achieve an accuracy higher than 50% after the learning stops.

Besides, Ambiguity has achieved very similar performance

with BvSB in some cases (e.g., datasets Dermatology, Yeast

and Letter). This could be due to the fact that when the

scale factor γ is large enough, the ambiguity measure can be

regarded as only considering the 2-order ambiguity. Since the

2-order ambiguity is just decided by the largest and the second

largest memberships, Ambiguity is intrinsically the same with

BvSB in this case. Furthermore, Ambiguity has achieved low

standard deviation on most datasets. However, all the methods

have shown fluctuant standard deviations on datasets Letter

and Pen. This could be caused by the large size of the datasets

and the small number of trials on them.

Another typical phenomenon observed from Fig. 3 is that

Entropy, which is an effective uncertainty measurement for

many problems, has performed worse than Ambiguity and

even worse than Random in many cases. In order to find

the reason, we investigate the learning process on the Ecoli dataset. Fig. 5 shows the class possibilities and probabilities of three unlabeled samples in one iteration. The ambiguity values computed from the possibilities are 1.722, 0.966 and 1.040 for the three samples. Sample 1 is clearly the most beneficial to the learning, and it is the one selected by Ambiguity. The entropy values computed from the probabilities are 2.953, 2.981 and 2.951, so Sample 2 is selected by Entropy. However, this might not be a good selection, since the advantage of Sample 2 over Samples 1 and 3 is trivial. This example suggests that the rigorous computation of class probabilities may weaken the differences in uncertainty among the samples: when the number of classes is large, the entropy value is strongly affected by the probabilities of unimportant classes. In the context of active learning, the possibility approach might therefore be more effective in distinguishing unlabeled samples.

Fig. 5: Class memberships of 3 samples. (a) Sample 1. (b) Sample 2. (c) Sample 3. Horizontal axis: Class (1 to 8); vertical axis: Membership; series: Possibility, Probability.

C. Empirical Studies

The experiments are performed in MATLAB 7.9.0 with the “svmtrain” and “svmpredict” functions of libsvm, on a computer with a 3.16-GHz Intel Core 2 Duo CPU, 4 GB of memory, and a 64-bit Windows 7 system.

| Dataset | #Train | #Test | #Feature | Feature Type | #Class | Class Distribution in Training Set | γ |
|---|---|---|---|---|---|---|---|
| Glass | 214 | 0 | 10 | Real+Integer | 6 | 70 / 76 / 17 / 13 / 9 / 29 | 5 |
| Cotton | 356 | 0 | 21 | Real | 6 | 55 / 49 / 30 / 118 / 77 / 27 | 6 |
| Libras | 360 | 0 | 90 | Real | 15 | 24 × 15 | 7 |
| Dermatology | 366 | 0 | 34 | Real+Integer | 6 | 112 / 61 / 72 / 49 / 52 / 20 | 7 |
| Ecoli | 336 | 0 | 7 | Real+Integer | 8 | 143 / 77 / 2 / 2 / 35 / 20 / 5 / 52 | 8 |
| Yeast | 1,484 | 0 | 8 | Real+Integer | 10 | 244 / 429 / 463 / 44 / 51 / 163 / 35 / 30 / 20 / 5 | 7 |
| Letter | 20,000 | 0 | 16 | Real | 26 | Note | 10 |
| Soybean | 307 | 376 | 35 | Real+Integer | 19 | 10 × 9 / 40 × 4 / 20 × 2 / 6 × 2 / 4 / 1 | 2 |
| Vowel | 528 | 462 | 10 | Real | 11 | 48 × 11 | 10 |
| Optdigits | 3,823 | 1,797 | 64 | Real+Integer | 10 | 376 / 389 / 380 / 389 / 387 / 376 / 377 / 387 / 380 / 382 | 10 |
| Satellite | 4,435 | 2,000 | 36 | Real | 6 | 1072 / 479 / 961 / 415 / 470 / 1038 | 6 |
| Pen | 7,494 | 3,498 | 16 | Real+Integer | 10 | 780 / 779 / 780 / 719 / 780 / 720 / 720 / 778 / 719 / 719 | 8 |

Note: The class distribution of dataset “Letter” is 789 / 766 / 736 / 805 / 768 / 775 / 773 / 734 / 755 / 747 / 739 / 761 / 792 / 783 / 753 / 803 / 783 / 758 / 748 / 796 / 813 / 764 / 752 / 787 / 786 / 734.

Fig. 4: Average result on the 12 UCI datasets. (a) Testing Accuracy. (b) Standard Deviation. Horizontal axis: Number of New Training Samples (0 to 100); curves: Random, Margin, VS Reduction, Entropy, BvSB, Ambiguity.
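The remark about the Ecoli example, that entropy is dominated by the probabilities of unimportant classes when there are many classes, can be illustrated numerically. The sketch below is only an illustration under stated assumptions: the two 8-class probability vectors are hypothetical (they are not the values plotted in Fig. 5), and base-2 logarithms are assumed because the reported entropies lie just below log2(8) = 3.

```python
import math

def entropy_bits(p):
    """Shannon entropy (in bits) of a probability vector."""
    return -sum(q * math.log2(q) for q in p if q > 0)

def top_two_gap(p):
    """Difference between the largest and second-largest probability."""
    first, second = sorted(p, reverse=True)[:2]
    return first - second

def tail_share(p, k=2):
    """Fraction of the entropy contributed by all but the k largest probabilities."""
    tail = sorted(p, reverse=True)[k:]
    return entropy_bits_terms(tail) / entropy_bits(p)

def entropy_bits_terms(values):
    """Sum of the entropy terms -q*log2(q) for the given (partial) list."""
    return -sum(q * math.log2(q) for q in values if q > 0)

# Hypothetical 8-class probability vectors (assumptions, not data from the paper).
# `split`: two classes compete for the label; `peaked`: one class clearly leads.
split = [0.30, 0.30, 0.07, 0.07, 0.07, 0.07, 0.06, 0.06]
peaked = [0.44] + [0.08] * 7

h_split, h_peaked = entropy_bits(split), entropy_bits(peaked)
print(f"entropy: split={h_split:.3f}, peaked={h_peaked:.3f}")
print(f"top-two gap: split={top_two_gap(split):.2f}, peaked={top_two_gap(peaked):.2f}")
print(f"tail share of entropy: split={tail_share(split):.2f}, peaked={tail_share(peaked):.2f}")
```

Although the two vectors describe very different situations (a two-way tie versus a clear leader), their entropies differ by less than 0.05 bits, because more than half of each entropy value comes from the six low-probability classes.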

Table II reports the mean accuracy and standard deviation over the 100 learning iterations, as well as the final accuracy and the average time for selecting one sample. Ambiguity achieves the highest mean accuracy on 11 of the 12 datasets and the highest final accuracy on 9 of them. In addition, Ambiguity is much faster than Entropy and BvSB, and only slightly slower than Margin and VS Reduction. It is noteworthy that in a real active learning process, labeling a sample usually takes much more time than selecting one. For instance, labeling a sample may take several seconds to several minutes, while selecting one takes from about ten milliseconds to under one second (Table II).
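The counts quoted above (11 and 9 datasets out of 12) can be checked directly against the accuracies in Table II. The following sketch transcribes the mean and final accuracies from Table II and counts the datasets on which Ambiguity reaches the column maximum; counting ties as wins is an assumption of this sketch (BvSB and Ambiguity tie on the Letter mean accuracy at the reported precision).

```python
# Accuracies (%) transcribed from Table II, in the dataset order: Glass, Cotton,
# Libras, Dermatology, Ecoli, Yeast, Letter, Soybean, Vowel, Optdigits, Satellite, Pen.
MEAN = {
    "Random":       [85.16, 81.60, 64.93, 95.87, 77.67, 48.66, 45.32, 84.93, 38.47, 84.16, 78.92, 84.77],
    "Margin":       [88.99, 85.03, 66.38, 96.51, 79.47, 48.02, 45.47, 85.87, 37.79, 74.30, 79.33, 88.36],
    "VS Reduction": [89.41, 84.67, 66.41, 96.97, 79.86, 47.51, 46.05, 86.35, 39.24, 75.36, 80.14, 85.07],
    "Entropy":      [87.87, 81.17, 66.85, 96.26, 76.94, 43.64, 39.32, 82.13, 38.63, 82.64, 76.98, 84.99],
    "BvSB":         [88.53, 83.89, 67.96, 96.75, 79.55, 49.66, 46.53, 88.89, 43.94, 82.23, 80.99, 89.52],
    "Ambiguity":    [90.62, 86.44, 70.77, 97.17, 80.43, 50.16, 46.53, 90.04, 40.03, 89.53, 81.17, 90.18],
}
FINAL = {
    "Random":       [91.71, 87.22, 74.94, 97.14, 80.76, 54.51, 53.00, 89.65, 44.85, 90.82, 81.00, 90.03],
    "Margin":       [94.57, 91.56, 80.06, 97.68, 82.06, 52.51, 52.31, 92.65, 44.11, 81.03, 82.21, 95.01],
    "VS Reduction": [94.86, 92.11, 80.22, 97.84, 81.88, 51.45, 53.31, 92.58, 44.64, 80.96, 83.34, 92.60],
    "Entropy":      [93.62, 87.11, 75.11, 96.54, 79.29, 49.74, 41.80, 86.19, 42.34, 92.37, 79.79, 89.56],
    "BvSB":         [93.05, 89.78, 79.28, 97.78, 81.18, 54.77, 54.51, 92.89, 50.26, 93.96, 83.41, 95.35],
    "Ambiguity":    [94.57, 92.72, 82.78, 97.73, 82.29, 55.00, 55.53, 93.29, 46.63, 95.29, 84.09, 96.48],
}

def count_best(table, method):
    """Number of datasets on which `method` attains the best value (ties count)."""
    n = len(table[method])
    return sum(
        1 for i in range(n)
        if table[method][i] == max(values[i] for values in table.values())
    )

mean_wins = count_best(MEAN, "Ambiguity")
final_wins = count_best(FINAL, "Ambiguity")
print(mean_wins, final_wins)  # 11 9
```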

Fig. 3: Experimental comparisons on the selected UCI datasets. (a)∼(l) Testing accuracy. (m)∼(x) Standard deviation. Panels (a)∼(l) and (m)∼(x) follow the same dataset order: Glass, Cotton, Libras, Dermatology, Ecoli, Yeast, Letter, Soybean, Vowel, Optdigits, Satellite, Pen. Horizontal axis: Number of New Training Samples (0 to 100); vertical axes: Testing Accuracy (%) and Standard Deviation (%); curves: Random, Margin, VS Reduction, Entropy, BvSB, Ambiguity.


TABLE II: Performance comparison on the selected datasets: mean accuracy (%), standard deviation, final accuracy (%), and average time for selecting one sample (seconds)

Mean accuracy (%) ± standard deviation

| Dataset | Random | Margin | VS Reduction | Entropy | BvSB | Ambiguity |
|---|---|---|---|---|---|---|
| Glass | 85.16±6.05 | 88.99±7.22 | 89.41±7.56 | 87.87±4.84 | 88.53±4.91 | **90.62±6.78** |
| Cotton | 81.60±5.02 | 85.03±6.64 | 84.67±7.38 | 81.17±4.79 | 83.89±5.68 | **86.44±6.80** |
| Libras | 64.93±6.89 | 66.38±8.96 | 66.41±9.28 | 66.85±7.95 | 67.96±8.31 | **70.77±8.62** |
| Dermatology | 95.87±1.01 | 96.51±1.35 | 96.97±1.36 | 96.26±1.10 | 96.75±1.64 | **97.17±1.14** |
| Ecoli | 77.67±2.69 | 79.47±2.69 | 79.86±2.66 | 76.94±2.66 | 79.55±2.78 | **80.43±2.58** |
| Yeast | 48.66±5.36 | 48.02±4.41 | 47.51±4.20 | 43.64±4.12 | 49.66±5.11 | **50.16±4.84** |
| Letter | 45.32±5.10 | 45.47±4.49 | 46.05±4.97 | 39.32±1.83 | **46.53±5.95** | **46.53±5.92** |
| Soybean | 84.93±4.54 | 85.87±5.54 | 86.35±5.81 | 82.13±2.32 | 88.89±5.13 | **90.04±4.42** |
| Vowel | 38.47±4.97 | 37.79±4.46 | 39.24±4.74 | 38.63±2.92 | **43.94±5.36** | 40.03±5.46 |
| Optdigits | 84.16±6.35 | 74.30±3.64 | 75.36±2.79 | 82.64±10.63 | 82.23±11.95 | **89.53±6.15** |
| Satellite | 78.92±2.82 | 79.33±2.53 | 80.14±3.74 | 76.98±2.28 | 80.99±2.69 | **81.17±3.18** |
| Pen | 84.77±5.12 | 88.36±6.49 | 85.07±6.14 | 84.99±4.93 | 89.52±6.21 | **90.18±6.94** |
| Avg. | 72.54±4.66 | 72.96±4.87 | 73.09±5.05 | 71.45±4.20 | 74.87±5.48 | 76.09±5.23 |

Final accuracy (%)

| Dataset | Random | Margin | VS Reduction | Entropy | BvSB | Ambiguity |
|---|---|---|---|---|---|---|
| Glass | 91.71 | 94.57 | **94.86** | 93.62 | 93.05 | 94.57 |
| Cotton | 87.22 | 91.56 | 92.11 | 87.11 | 89.78 | **92.72** |
| Libras | 74.94 | 80.06 | 80.22 | 75.11 | 79.28 | **82.78** |
| Dermatology | 97.14 | 97.68 | **97.84** | 96.54 | 97.78 | 97.73 |
| Ecoli | 80.76 | 82.06 | 81.88 | 79.29 | 81.18 | **82.29** |
| Yeast | 54.51 | 52.51 | 51.45 | 49.74 | 54.77 | **55.00** |
| Letter | 53.00 | 52.31 | 53.31 | 41.80 | 54.51 | **55.53** |
| Soybean | 89.65 | 92.65 | 92.58 | 86.19 | 92.89 | **93.29** |
| Vowel | 44.85 | 44.11 | 44.64 | 42.34 | **50.26** | 46.63 |
| Optdigits | 90.82 | 81.03 | 80.96 | 92.37 | 93.96 | **95.29** |
| Satellite | 81.00 | 82.21 | 83.34 | 79.79 | 83.41 | **84.09** |
| Pen | 90.03 | 95.01 | 92.60 | 89.56 | 95.35 | **96.48** |
| Avg. | 77.97 | 78.81 | 78.82 | 76.12 | 80.52 | 81.37 |

Average time for selecting one sample (seconds)

| Dataset | Random | Margin | VS Reduction | Entropy | BvSB | Ambiguity |
|---|---|---|---|---|---|---|
| Glass | 0.0074* | 0.0105 | 0.0112 | 0.0367 | 0.0369 | 0.0112 |
| Cotton | 0.0139* | 0.0191 | 0.0200 | 0.0869 | 0.0873 | 0.0200 |
| Libras | 0.0381* | 0.0724 | 0.0706 | 0.2391 | 0.2341 | 0.0803 |
| Dermatology | 0.0146* | 0.0208 | 0.0224 | 0.0946 | 0.0933 | 0.0243 |
| Ecoli | 0.0084* | 0.0132 | 0.0137 | 0.0658 | 0.0656 | 0.0141 |
| Yeast | 0.0206* | 0.0362 | 0.0384 | 0.3970 | 0.3984 | 0.0432 |
| Letter | 0.0465* | 0.7616 | 0.7852 | 10.455 | 10.625 | 0.9271 |
| Soybean | 0.0262* | 0.0606 | 0.0633 | 0.2305 | 0.2261 | 0.0634 |
| Vowel | 0.0179* | 0.0238 | 0.0247 | 0.1557 | 0.1547 | 0.0275 |
| Optdigits | 0.0328* | 0.5581 | 0.5012 | 1.4646 | 1.4666 | 0.6073 |
| Satellite | 0.0110* | 0.1476 | 0.1527 | 0.9483 | 0.9027 | 0.1778 |
| Pen | 0.0175* | 0.1162 | 0.1170 | 1.8034 | 1.7915 | 0.1440 |
| Avg. | 0.0212* | 0.1533 | 0.1517 | 1.3314 | 1.3402 | 0.1783 |

Note: For each dataset, the highest mean accuracy and final accuracy are in bold face, the minimum time for selecting one sample is marked with *.

TABLE III: Paired Wilcoxon’s signed rank tests (p-values)

| Method | Accuracy | Margin | VS Reduction | Entropy | BvSB | Ambiguity |
|---|---|---|---|---|---|---|
| Random | mean | 0.1514 | 0.0923 | 0.2036 | 0.0049† | 0.0005† |
| Random | final | 0.2334 | 0.1763 | 0.0923 | 0.0005† | 0.0005† |
| Margin | mean | –– | 0.1763 | 0.0923 | 0.0122† | 0.0005† |
| Margin | final | –– | 0.5186 | 0.0342† | 0.2661 | 0.0010† |
| VS Reduction | mean | –– | –– | 0.0522 | 0.0425† | 0.0005† |
| VS Reduction | final | –– | –– | 0.0269† | 0.3013 | 0.0024† |
| Entropy | mean | –– | –– | –– | 0.0010† | 0.0005† |
| Entropy | final | –– | –– | –– | 0.0010† | 0.0005† |
| BvSB | mean | –– | –– | –– | –– | 0.0269† |
| BvSB | final | –– | –– | –– | –– | 0.0425† |

Note: In each comparison, the rows labeled mean and final are respectively the p-values of the Wilcoxon’s signed rank tests on the mean accuracy and final accuracy. For each test, † represents that the two referred methods are significantly different with the significance level 0.05.

The selection time of Ambiguity is therefore acceptable in practice.

Finally, Table III reports the p-values of the paired Wilcoxon signed-rank tests conducted on the accuracies listed in Table II. We adopt the significance level 0.05, i.e., if the p-value is smaller than 0.05, the two compared methods are considered statistically different. Ambiguity is statistically different from all the other methods in terms of both the mean accuracy and the final accuracy.
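As a rough illustration of how one entry of Table III can be obtained, the sketch below implements an exact two-sided paired Wilcoxon signed-rank test in plain Python and applies it to the mean accuracies of Ambiguity and Random from Table II. The section does not state which variant of the test was used, so the details here are assumptions: zero differences are dropped, tied absolute differences receive average ranks, and the p-value is computed by enumerating all sign patterns. Because Table II is rounded to two decimals, only comparisons with an unambiguous sign pattern can be expected to match Table III.

```python
from itertools import product

def exact_wilcoxon_signed_rank(x, y):
    """Exact two-sided paired Wilcoxon signed-rank test.

    Returns (w, p), where w is the smaller of the positive and negative
    rank sums. Zero differences are dropped and ties get average ranks
    (both are assumptions of this sketch).
    """
    diffs = [round(a - b, 6) for a, b in zip(x, y)]
    diffs = [d for d in diffs if d != 0]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(ranks) - w_plus
    w = min(w_plus, w_minus)
    # Under the null hypothesis every sign pattern is equally likely.
    count = sum(
        1 for signs in product((False, True), repeat=n)
        if sum(r for r, s in zip(ranks, signs) if s) <= w
    )
    p = min(1.0, 2 * count / 2 ** n)
    return w, p

# Mean accuracies (%) from Table II, same dataset order for both methods.
ambiguity = [90.62, 86.44, 70.77, 97.17, 80.43, 50.16, 46.53, 90.04, 40.03, 89.53, 81.17, 90.18]
random_sampling = [85.16, 81.60, 64.93, 95.87, 77.67, 48.66, 45.32, 84.93, 38.47, 84.16, 78.92, 84.77]

w, p = exact_wilcoxon_signed_rank(ambiguity, random_sampling)
print(w, round(p, 4))
```

Ambiguity has the higher mean accuracy on all 12 datasets, so the negative rank sum is 0 and the exact two-sided p-value is 2/4096, which rounds to the 0.0005 reported for Random versus Ambiguity in Table III.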

D. Handwritten Digits Image Recognition Problem

We further conduct experiments on the MNIST handwritten digit image recognition problem¹, which aims to distinguish the handwritten digits 0 ∼ 9, as shown in Fig. 6(a). This dataset contains 60,000 training samples and 10,000 testing samples from approximately 250 writers, with a relatively balanced class distribution. We use the gradient-based method [23] to extract 2,172 features for each sample, and select 68 of them with WEKA. Different from the previous experiments, we apply batch-mode active learning on this dataset, which selects multiple samples with high diversity during each iteration. We combine the ambiguity measure with two diversity criteria proposed in [24], namely angle-based diversity (ABD) and enhanced clustering-based diversity (ECBD), and realize two batch-mode active learning strategies, i.e., Ambiguity-ABD and Ambiguity-ECBD. We compare them with batch-mode random sampling (Random), the ambiguity strategy without diversity criteria (Ambiguity), and two strategies in [24] that combine multiclass-level uncertainty (MCLU) with ABD and ECBD, i.e., MCLU-ABD and MCLU-ECBD.

The initial training set contains two randomly chosen samples from each class. During each iteration, the learner considers the 40 most informative samples, and selects the five most diverse ones among them. The learning stops after 60 iterations, γ is tuned as 9 for all the ambiguity-based strategies, and 10 trials are conducted. The mean accuracy and standard deviation are shown in Figs. 6(b)∼(c). The initial accuracy is only slightly higher than 50%. After 300 new samples (0.5% of the whole set) have been queried, the accuracy has improved by about 30%. Moreover, Ambiguity-ECBD achieves the best performance, which demonstrates the potential of combining the ambiguity measure with the ECBD criterion.

¹ http://yann.lecun.com/exdb/mnist
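The two-stage batch selection described above (shortlist the 40 most informative samples, then keep the five most diverse) can be sketched as follows. This is a simplified stand-in and not the ABD or ECBD criterion of [24], whose exact formulations are not reproduced in this section: diversity is measured here by the cosine of the angle between raw feature vectors, and the batch is built greedily. The function name `select_batch` and its parameters are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def select_batch(samples, scores, m=40, h=5):
    """Pick h sample indices from the m highest-scoring (most uncertain) samples.

    Step 1: shortlist the m samples with the largest uncertainty score.
    Step 2: start from the most uncertain one, then repeatedly add the
            shortlisted sample whose largest cosine similarity to the
            already chosen samples is smallest (greedy diversity).
    """
    shortlist = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:m]
    if not shortlist:
        return []
    chosen = [shortlist[0]]
    while len(chosen) < min(h, len(shortlist)):
        remaining = [i for i in shortlist if i not in chosen]
        nxt = min(
            remaining,
            key=lambda i: max(cosine(samples[i], samples[j]) for j in chosen),
        )
        chosen.append(nxt)
    return chosen

# Toy usage with hypothetical 2-D features and uncertainty scores.
toy_samples = [(1.0, 0.0), (0.99, 0.1), (0.0, 1.0), (-1.0, 0.0), (0.5, 0.5)]
toy_scores = [0.9, 0.8, 0.7, 0.6, 0.1]
print(select_batch(toy_samples, toy_scores, m=4, h=3))
```

In the toy call, the second sample is nearly parallel to the first and is skipped in favor of samples pointing in other directions, while the last sample never enters the shortlist because its uncertainty score is too low.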

V. CONCLUSIONS AND FUTURE WORKS

This paper proposed an ambiguity-based MAL strategy by applying the possibility approach, and implemented it for the OAA-based SVM model. This strategy relaxes the additive property of the probability approach. Thus, it computes the memberships in a more flexible way, and evaluates unlabeled samples less rigorously. Experimental results demonstrate that the proposed strategy can achieve satisfactory performance on various multiclass problems. Future developments regarding this work are listed as follows. 1) In the experiments, we treat the scale factor γ as a model parameter and tune it empirically. In the future, it might be useful to discuss how to obtain the optimal γ based on the characteristics of the dataset. 2) It might be interesting to apply the proposed ambiguity measure to base classifiers other than SVMs. 3) If we transform a possibility vector into a probability vector or conversely, the existing possibilistic and probabilistic models can be realized in a more flexible way. How to realize an effective and efficient transformation between possibility and probability for MAL will also be one of the future research directions.

Fig. 6: Batch-mode active learning results on MNIST dataset. (a) Samples in MNIST dataset. (b) Testing Accuracy. (c) Standard Deviation. In (b) and (c), the horizontal axis is Number of New Training Samples (0 to 300), and the curves are Random, MCLU-ABD, MCLU-ECBD, Ambiguity, Ambiguity-ABD, Ambiguity-ECBD.

REFERENCES

[1] D. Cohn, L. Atlas, and R. Ladner, “Improving generalization with active learning,” Mach. Learn., vol. 15, no. 2, pp. 201–221, 1994.

[2] V. N. Vapnik, The nature of statistical learning theory. Springer Verlag, 2000.

[3] R. Wang, D. Chen, and S. Kwong, “Fuzzy rough set based active learning,” IEEE Trans. Fuzzy Syst., vol. 22, no. 6, pp. 1699–1704, 2014.

[4] R. Wang, S. Kwong, and D. Chen, “Inconsistency-based active learning for support vector machines,” Pattern Recogn., vol. 45, no. 10, pp. 3751–3767, 2012.

[5] S. Tong, “Active learning: theory and applications,” Ph.D. dissertation, Citeseer, 2001.

[6] M. Li and I. K. Sethi, “Confidence-based active learning,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 28, no. 8, pp. 1251–1261, 2006.

[7] R. Rifkin and A. Klautau, “In defense of one-vs-all classification,” J. Mach. Learn. Res., vol. 5, pp. 101–141, 2004.

[8] T.-F. Wu, C.-J. Lin, and R. C. Weng, “Probability estimates for multiclass classification by pairwise coupling,” J. Mach. Learn. Res., vol. 5, pp. 975–1005, 2004.

[9] D. Dubois and H. Prade, Possibility theory. Springer, 1988.

[10] G. J. Klir and B. Yuan, Fuzzy sets and fuzzy logic: theory and applications. Prentice Hall New Jersey, 1995.

[11] C. Frélicot and L. Mascarilla, “A third way to design pattern classifiers with reject options,” in Proc. 21st Int. Conf. of the North American Fuzzy Information Processing Society. IEEE, 2002, pp. 395–399.

[12] C. Frélicot, L. Mascarilla, and A. Fruchard, “An ambiguity measure for pattern recognition problems using triangular-norms combination,” WSEAS Trans. Syst., vol. 8, no. 3, pp. 2710–2715, 2004.

[13] L. Mascarilla, M. Berthier, and C. Frélicot, “A k-order fuzzy OR operator for pattern classification with k-order ambiguity rejection,” Fuzzy Sets Syst., vol. 159, no. 15, pp. 2011–2029, 2008.

[14] T. M. Hospedales, S. Gong, and T. Xiang, “Finding rare classes: Active learning with generative and discriminative models,” IEEE Trans. Knowl. Data Eng., vol. 25, no. 2, pp. 374–386, 2013.

[15] R. Yan and A. Hauptmann, “Multi-class active learning for video semantic feature extraction,” in Proc. 2004 IEEE Int. Conf. Multimedia and Expo, vol. 1. IEEE, 2004, pp. 69–72.

[16] R. Yan, J. Yang, and A. Hauptmann, “Automatically labeling video data using multi-class active learning,” in Proc. 9th ICCV. IEEE, 2003, pp. 516–523.

[17] A. J. Joshi, F. Porikli, and N. P. Papanikolopoulos, “Scalable active learning for multiclass image classification,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 34, no. 11, pp. 2259–2273, 2012.

[18] D. Dubois, H. Prade, and S. Sandri, “On possibility/probability transformations,” in Fuzzy logic, 1993, pp. 103–112.

[19] X. Z. Wang, L. C. Dong, and J. H. Yan, “Maximum ambiguity-based sample selection in fuzzy decision tree induction,” IEEE Trans. Knowl. Data Eng., vol. 24, no. 8, pp. 1491–1505, 2012.

[20] R. Wang, Y.-L. He, C.-Y. Chow, F.-F. Ou, and J. Zhang, “Learning ELM-tree from big data based on uncertainty reduction,” Fuzzy Sets Syst., vol. 258, pp. 79–100, 2015.

[21] S. C. H. Hoi, R. Jin, J. Zhu, and M. R. Lyu, “Batch mode active learning and its application to medical image classification,” in Proc. 23rd ICML. ACM, 2006, pp. 417–424.

[22] L. Bottou and C.-J. Lin, “Support vector machine solvers,” Large Scale Kernel Machines, pp. 301–320, 2007.

[23] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

[24] B. Demir, C. Persello, and L. Bruzzone, “Batch-mode active-learning methods for the interactive classification of remote sensing images,” IEEE Trans. Geosci. Remote Sens., vol. 49, no. 3, pp. 1014–1031, 2011.

Ran Wang (S’09-M’14) received her B.Eng. degree in computer science from the College of Information Science and Technology, Beijing Forestry University, China, in 2009, and the Ph.D. degree from City University of Hong Kong, in 2014. She is currently a Postdoctoral Senior Research Associate at the Department of Computer Science, City University of Hong Kong. Since 2014, she has also been an Assistant Researcher at the Shenzhen Key Laboratory for High Performance Data Mining, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, China. Her current research interests include pattern recognition, machine learning, fuzzy sets and fuzzy logic, and their related applications.

Chi-Yin Chow received the M.S. and Ph.D. degrees

from the University of Minnesota-Twin Cities in

2008 and 2010, respectively. He is currently an assistant professor in Department of Computer Science,

City University of Hong Kong. His research interests include spatio-temporal data management and

analysis, GIS, mobile computing, and location-based

services. He is the co-founder and co-organizer of

ACM SIGSPATIAL MobiGIS 2012, 2013, and 2014.

Sam Kwong (M’93-SM’04-F’13) received the B.Sc. and M.S. degrees in electrical engineering from the State University of New York at Buffalo in 1983 and the University of Waterloo, ON, Canada, in 1985, respectively, and the Ph.D. degree from the University of Hagen, Germany, in 1996. From 1985 to 1987, he was a Diagnostic Engineer with Control Data Canada. He joined Bell Northern Research Canada as a Member of Scientific Staff. In 1990, he became a Lecturer in the Department of Electronic Engineering, City University of Hong Kong, where he is currently a Professor and Head in the Department of Computer Science. His main research interests include evolutionary computation, video coding, pattern recognition, and machine learning.

Dr. Kwong is an Associate Editor of the IEEE Transactions on Industrial

Electronics, the IEEE Transactions on Industrial Informatics, and the Information Sciences Journal.