A Language-Based Approach to Categorical Analysis by

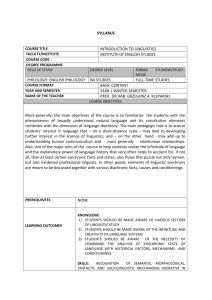
