Assessment of Storage IO performance Ole W. Saastad USIT, University of Oslo Version:

advertisement

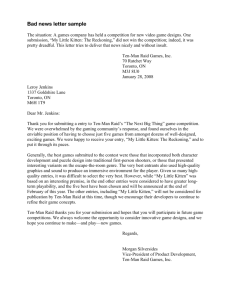

Assessment of Storage IO performance
Ole W. Saastad
USIT, University of Oslo
Version: 0.7
Date: 17. Oct. 2007

Introduction

This benchmark report presents results from an assessment of storage IO performance for a range of storage options. These range from local cached disk IO, through a single NFS file server, to a cluster of file servers using clustered file systems.

For single-node storage performance, both hardware RAID (Redundant Array of Inexpensive Disks) and single disks have been tested. In addition, tests show the effect of cached disk IO, which occurs when the data fit into main memory.

For the cluster file system and cluster file server, a range of options has been tested. These include different RAID solutions, the effect of the cluster setup, hardware RAID, software RAID, and letting the cluster file system manage all the disks without any RAID.

All measurements have been made using the IOZone benchmark. A block size of 64 kiloBytes and an IOZone temporary file of 5 GigaBytes have been used unless clearly stated otherwise in the text, table head, caption or legend. The file size must be significantly larger than the total memory in the node used. See the appendix for more details about IOZone.

The following naming convention has been used: a capital letter B means Bytes, where one byte is 8 bits. The term bits is avoided where possible.

All measurements are presented as measured in the lab. They have been verified by two, three or in some cases more runs. However, I can give no guarantee that another tester would see the same numbers. My numbers represent what a user might experience when setting up the system.

Hardware and Software

Hardware was provided by Dell, HP and other sources. Additional information about the hardware and software is found in the appendices.
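The requirement that the IOZone file be much larger than node memory can be checked before a run. The Python sketch below reads MemTotal from the Linux file /proc/meminfo and compares it with the planned file size. The function names and the factor of two are assumptions made for illustration; they are not part of the report's method.

```python
"""Sketch: check that an IOZone test file is large enough to defeat the page cache.

Assumption (not from the report): "significantly larger" is taken to mean at
least twice the node's physical memory, a common rule of thumb.
"""

GIB = 1024 ** 3


def mem_total_bytes(meminfo_text: str) -> int:
    """Return MemTotal in bytes from the text of /proc/meminfo (Linux)."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            value_kib = int(line.split()[1])  # /proc/meminfo reports units of 1024 bytes
            return value_kib * 1024
    raise ValueError("MemTotal not found")


def size_defeats_cache(file_bytes: int, mem_bytes: int, factor: float = 2.0) -> bool:
    """True if the test file is at least `factor` times the physical memory."""
    return file_bytes >= factor * mem_bytes


# Usage on a Linux node (reads the real /proc/meminfo):
#   with open("/proc/meminfo") as f:
#       mem = mem_total_bytes(f.read())
#   print(size_defeats_cache(5 * GIB, mem))
```

With a 5 GB file, this check passes on a node with up to 2.5 GB of memory.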
Results

Single node performance

Many scientific and technical applications very often need local temporary storage. This is usually provided by a scratch or temporary space on a local file system. The local disk is normally the fastest secondary storage device available, and it has the advantage that it scales perfectly with the number of nodes added. A networked storage device may provide more bandwidth to a single node, but it usually cannot match the combined bandwidth of all the local disks together. If the application does IO on only one node at a time, however, IO over the network might be faster.

Another point is that the IO will be cached if the total working set of the application fits in main memory. The working set includes both application memory and scratch files. When the IO is cached, IO performance rises by orders of magnitude. Hence the omnipresent need for more memory.

A typical IOZone command is:

/opt/iozone/bin/iozone -r 64 -s 5g -i 0 -i 1 -i 2 -w -f /temp/iozone.tmp

The PowerEdge 1750 nodes have two CPUs. To simulate a normal application run, the IO performance was therefore also tested with two concurrently running IOZone instances. This puts far more strain on the IO subsystem, as two jobs are trying to share the already limited bandwidth of the disk subsystem. The IOZone command was the same apart from using two different temporary files.

All reported numbers in tables are in MegaBytes per second (MB/s).

Arrangement of disks in a single node: RAID 1 versus RAID 0

Multiple disks in a single node can be arranged as RAID 0 (striping) or RAID 1 (mirroring) using the built-in PERC hardware RAID controller. This test used Vega node v5, a Dell PowerEdge 1750 with dual SCSI 36 GB 10 kRPM disks and the built-in PERC RAID controller. Default values were used for the advanced options in the PERC controller's setup of the RAID (see appendix for hardware and software details).
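The single and dual IOZone runs described above can be scripted. The following is a minimal Python sketch. It assumes IOZone is installed at the path used in the command above. The helper names and the per-instance file names (iozone0.tmp, iozone1.tmp) are hypothetical. The sketch only builds and starts the commands, using the same flags as this report.

```python
import shlex
import subprocess
from typing import List

IOZONE = "/opt/iozone/bin/iozone"  # path taken from the command line above


def iozone_cmd(tmpfile: str, record_kb: int = 64, size: str = "5g") -> List[str]:
    """Build the IOZone argument list used in this report.

    -r record size (kB), -s file size, -i 0 / -i 1 / -i 2 select
    write/rewrite, read/reread and random read/write, -w keeps the
    temporary file, -f names it.
    """
    return [IOZONE, "-r", str(record_kb), "-s", size,
            "-i", "0", "-i", "1", "-i", "2", "-w", "-f", tmpfile]


def concurrent_cmds(directory: str, n: int) -> List[List[str]]:
    """One command per instance, each with its own (hypothetical) temp file name."""
    return [iozone_cmd(f"{directory}/iozone{i}.tmp") for i in range(n)]


def as_shell(cmd: List[str]) -> str:
    """Render an argument list as a copy-pasteable shell command."""
    return shlex.join(cmd)


def run_concurrently(cmds: List[List[str]]) -> List[int]:
    """Start all instances at once, wait for them, and return their exit codes."""
    procs = [subprocess.Popen(c) for c in cmds]
    return [p.wait() for p in procs]
```

For example, `run_concurrently(concurrent_cmds("/temp", 2))` would start two instances sharing one directory, as in the dual-IOZone tests. Starting the processes together matters: if they ran one after the other, they would not compete for the disk.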
For this test the temporary disk was formatted with the Linux Ext2 file system, and the system was running RedHat EL4. A single IOZone instance was run to measure the performance.

Table 1: RAID 0 Striping versus RAID 1 Mirroring (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 0 | 77.4 | 67.5 | 92.6 | 92.8 | 14.6 | 42.9 |
| RAID 1 | 40.7 | 33.4 | 44.2 | 45.7 | 14.8 | 13.8 |

Figure 1: RAID 0 Striping versus RAID 1 Mirroring (bar chart, kB/s per IOZone test).

File system performance – Ext2 versus Ext3

This test used Vega node v3, a Dell PowerEdge 1750 with dual SCSI 36 GB 10 kRPM disks. The built-in PERC RAID controller was set to stripe the two disks as RAID 0. Default values were used for the advanced options in the PERC controller's setup of the RAID (see appendix for hardware and software details). The temporary disk was formatted with the Linux Ext2 and Ext3 file systems, and the system was running RedHat EL4.

Table 2: Ext2 versus Ext3 running RedHat EL4 (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Ext2 | 77.4 | 76.5 | 92.6 | 92.8 | 14.6 | 42.9 |
| Ext3 | 78.9 | 61.0 | 84.4 | 85.4 | 14.3 | 34.9 |

Figure 2: Ext2 versus Ext3 file system running RedHat EL4 (bar chart, kB/s per IOZone test).

The Ext2 file system seems to perform somewhat better than Ext3 in this single IOZone test.

File system test – RedHat versus Suse

RedHat only supports Ext2 and Ext3 as its native file systems. Suse supports these, and in addition the Reiser file system (ReiserFS) and XFS.
One PowerEdge 1750 with dual SCSI 36 GB 10 kRPM disks was used. The built-in PERC RAID controller was set to stripe the two disks as RAID 0. Default values were used for the advanced options in the PERC controller's setup of the RAID (see appendix for hardware and software details). Again, a single IOZone benchmark was used to assess the performance. See the appendix for more details of the RedHat and Suse installations.

Table 3: RedHat EL4 versus Suse SLES 9 SP2 using the Ext3 file system (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RedHat | 78.9 | 61.0 | 84.4 | 85.4 | 14.3 | 34.9 |
| Suse | 76.5 | 74.3 | 92.2 | 93.5 | 13.3 | 24.9 |

The results show minor differences, with no conclusive performance gain for either of the two distributions on the same file system.

Figure 3: RedHat EL4 versus Suse SLES 9, Ext3 file system (bar chart, kB/s per IOZone test).

File system test – Suse SLES 9 Ext3, Reiser and XFS

Suse SLES 9 supports a range of file systems. Each of these has characteristics that might be an advantage for high performance computing, which normally reads and writes large files. A test was performed running a single IOZone instance on several different file systems. The same Dell PE 1750 as above was used, with the same RAID 0 settings.

Table 4: Ext3, Reiser and XFS file systems running Suse SLES 9 (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Ext3 | 76.5 | 74.3 | 92.2 | 93.5 | 13.3 | 24.9 |
| Reiser | 93.6 | 90.5 | 82.6 | 80.3 | 14.7 | 48.7 |
| XFS | 118.3 | 93.2 | 98.9 | 100.9 | 14.0 | 33.3 |
Figure 4: File system test, Ext3 and Reiser versus XFS running Suse SLES 9 (bar chart, kB/s per IOZone test).

Tests using two concurrently running IOZone benchmarks

A typical use of the dual-CPU nodes is to run two processes on each node in order to use both CPUs. This strains both memory bandwidth and file IO. To simulate such usage and assess the performance, two concurrent IOZone benchmarks were launched. The two benchmarks used two different IOZone temporary files in a common temporary directory. This common directory was mounted on disks in a RAID 0 or RAID 1 configuration and formatted with different file systems.

The average performance of the two IOZones is reported. The result of one instance is sometimes significantly lower than that of the other. This happens because one IOZone instance can lock the disk for long periods of time and hence get better results. Reporting the average gives a view of the typical performance of an application, as these differences tend to cancel out over time. If the resources are shared equally, twice the reported performance should equal the performance of a single IOZone.

RAID 1 using two concurrently running IOZones

This test shows the performance measured using one and two concurrently running IOZones. It was run using RedHat EL4 and the Ext3 file system.

Table 5: RAID 1 Mirroring, Ext3, with one and two concurrently running IOZones (MB/s).

| # IOZones | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| One | 40.7 | 33.4 | 44.2 | 45.7 | 14.8 | 13.8 |
| Two | 18.1 | 15.5 | 39.5 | 40.6 | 6.6 | 11.0 |
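The fair-share rule stated above says that twice the per-instance average should equal the single-instance result. That rule can be expressed as a sharing efficiency. The Python sketch below applies it to the values in Table 5; the function name is illustrative, not taken from the report.

```python
TESTS = ["Write", "Rewrite", "Read", "Reread", "Random Read", "Random Write"]

# Table 5 (RAID 1, Ext3), MB/s per IOZone instance
ONE = [40.7, 33.4, 44.2, 45.7, 14.8, 13.8]
TWO_AVG = [18.1, 15.5, 39.5, 40.6, 6.6, 11.0]


def sharing_efficiency(single: float, avg_per_instance: float, n: int = 2) -> float:
    """Aggregate throughput of n concurrent instances relative to one instance.

    1.0 means the disk delivers the same total bandwidth when shared;
    below 1.0 the concurrent jobs together get less than one job alone,
    above 1.0 they together get more.
    """
    return n * avg_per_instance / single


efficiency = {t: round(sharing_efficiency(s, a), 2)
              for t, s, a in zip(TESTS, ONE, TWO_AVG)}
```

With these numbers, write, rewrite and random read fall below 1.0 (about 0.89 to 0.93). Read, reread and random write exceed 1.0 (about 1.6 to 1.8).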
Figure 5: RAID 1 performance using one and two concurrently running IOZones (bar chart, kB/s per IOZone test).

Each IOZone process clearly sees far lower performance than a single IOZone instance does when running alone. This can have a dramatic impact on applications that rely on local disk IO for scratch files during a run, and such an impact has been observed for some applications. Changing to RAID 0 might improve disk IO performance.

RAID 0 using two concurrently running IOZones

RAID 0 yields better bandwidth than RAID 1 in most tests. This holds both with one IOZone running alone and with two concurrently running IOZones (see Table 8 for the exceptions). This test shows the performance measured using one and two concurrently running IOZones. It was run using RedHat EL4 and the Ext3 file system.

Table 6: RAID 0 Striping, Ext3, with one and two concurrently running IOZones (MB/s).

| # IOZones | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| One | 78.9 | 61.0 | 84.4 | 85.4 | 14.3 | 34.9 |
| Two | 41.1 | 26.1 | 19.0 | 19.2 | 5.6 | 31.4 |

Figure 6: RAID 0 performance using one and two concurrently running IOZones (bar chart, kB/s per IOZone test).

File system Ext2 versus Ext3 when running two concurrent IOZones

The two file systems, Ext2 and the journaling file system Ext3, perform differently when two IOZones are run concurrently. This test shows the performance measured using two concurrently running IOZones. It was run using RedHat EL4 with the Ext2 and Ext3 file systems.

Table 7: RAID 0 Striping, Ext2 versus Ext3 with two concurrently running IOZones (MB/s).
| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Ext2 | 32.1 | 17.5 | 14.9 | 15.0 | 6.0 | 25.1 |
| Ext3 | 41.1 | 26.1 | 19.0 | 19.2 | 5.6 | 31.4 |

Figure 7: Ext2 versus Ext3 on RAID 0 when running two concurrent IOZones (bar chart, kB/s per IOZone test).

Ext3 outperforms Ext2 in all tests except random read when two instances of IOZone run concurrently. How does RAID 0 compare to RAID 1 when running two instances of IOZone?

RAID 0 versus RAID 1 running two IOZones concurrently

Table 8: RAID 1 versus RAID 0 using Ext3 with two concurrently running IOZones (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 0 | 41.1 | 26.1 | 19.0 | 19.2 | 5.6 | 31.4 |
| RAID 1 | 18.1 | 15.5 | 39.5 | 40.6 | 6.5 | 11.0 |

Figure 8: RAID 0 versus RAID 1 when running two concurrent IOZones using the Ext3 file system (bar chart of throughput per IOZone test).

RAID 0 performs better than RAID 1 in all tests except the read tests (read, reread and random read).

A relevant question is how the Reiser file system and XFS perform when running two instances of IOZone concurrently. The tests below shed light on this question.

Two concurrently running IOZones using Ext3, Reiser and XFS file systems

This test used a PE 1750 with dual SCSI 36 GB 10 kRPM disks. The built-in PERC RAID controller was set to stripe the two disks as RAID 0. Default values were used for the advanced options in the PERC controller's setup of the RAID (see appendix for hardware and software details). Suse SLES 9 SP2 was installed, and a partition of the disk was formatted as Ext3, Reiser or XFS. The same partition was used each time to remove any effect of disk geometry.
Table 9: Two concurrently running IOZones using Ext3, Reiser and XFS file systems, Suse SLES 9 (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Ext3 | 34.2 | 4.3 | 16.6 | 16.7 | 4.2 | 4.6 |
| Reiser | 87.5 | 85.5 | 22.0 | 28.5 | 5.7 | 45.1 |
| XFS | 84.6 | 52.4 | 24.8 | 28.3 | 59.5 | 31.1 |

Figure 9: File system Ext3 and Reiser versus XFS when running two concurrent IOZones (bar chart, kB/s per IOZone test).

Both ReiserFS and XFS perform far better than Ext3 when running two IOZones concurrently. ReiserFS seems to perform slightly better than XFS.

What kind of disks? ATA/SATA or SCSI?

SATA/ATA disks are inexpensive compared to SCSI disks, while SCSI disks are perceived to perform better. A number of tests have been undertaken to assess the performance of these different disks, comparing two systems:

- one Dell PowerEdge 1750 with dual SCSI 36 GB 10 kRPM disks (see appendix for hardware and software details);
- one HP ProLiant DL 140 with dual ATA 250 GB 7.2 kRPM disks.

No RAID controller was configured for RAID operation; only one disk was used in each node. Each disk was accessed as a device through the kernel, as /dev/sda and /dev/hda respectively. Both nodes were installed with RedHat EL4 and ran the Ext3 file system.

Table 10: ATA disks versus SCSI disks (MB/s).

| Disk type | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| ATA | 26.9 | 21.8 | 55.0 | 55.7 | 7.6 | 4.6 |
| SCSI | 40.7 | 33.4 | 44.2 | 45.7 | 14.8 | 13.8 |

Figure 10: ATA disk (hda1) versus SCSI disk (sda3) using IOZone (bar chart, kB/s per test).
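The factor quoted for random access can be read directly from Table 10 as a ratio for each test. Below is a short Python sketch with the table values copied in. A ratio above 1 favours the SCSI disk, and a ratio below 1 favours the ATA disk.

```python
TESTS = ["Write", "Rewrite", "Read", "Reread", "Random Read", "Random Write"]

# Table 10, MB/s
ATA = [26.9, 21.8, 55.0, 55.7, 7.6, 4.6]
SCSI = [40.7, 33.4, 44.2, 45.7, 14.8, 13.8]


def ratios(numerator, denominator):
    """Per-test ratio numerator/denominator, rounded to two decimals."""
    return [round(x / y, 2) for x, y in zip(numerator, denominator)]


scsi_over_ata = dict(zip(TESTS, ratios(SCSI, ATA)))
```

This gives about 1.95 for random read and 3.0 for random write. For sequential read and reread the ratios fall below 1, because the ATA disk is faster there.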
For sequential access there is no clear picture of which disk gives the best performance. For random access, however, the SCSI disk outperforms the ATA disk by a factor of 2 to 3. Depending on the access pattern of the application, the SCSI disk's advantage might therefore range from none to a factor of three.

Typical high performance computing usage differs from running databases, print servers, web servers and other service-oriented work, where random access is the norm. Scientific and technical applications tend to use large scratch files and hence need high bandwidth for sequential IO. For this kind of access the ATA/SATA disk shows performance close to that of the more expensive SCSI disks.

RAID of ATA/SATA disks

An HP ProLiant DL 140 with dual ATA 250 GB 7.2 kRPM disks was used for this test. No hardware RAID controller is present in this node, so software RAID through LVM was used instead. The following command was issued to create the software RAID:

lvcreate -i 2 -I 64k -L 400G -n lustrevol VolGroup00

This stripes over two disks with a 64 kB stripe size and a total size of 400 GB. The node was installed with RedHat EL4 and was running the Ext3 file system.

Table 11: Single ATA disk versus LVM software RAID using ATA disks (MB/s).

| Disk type | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Single ATA | 26.9 | 21.8 | 55.0 | 55.7 | 7.6 | 4.6 |
| RAID 0 ATA | 54.4 | 44.6 | 71.2 | 71.2 | 7.6 | 24.0 |

Figure 11: Single ATA disk versus LVM RAID 0 using two ATA disks (bar chart, kB/s per IOZone test).

The measured performance is as expected. Write performance doubles, while the read operations improve less. For random write a very large increase in performance is observed.
The test was rerun to check this, and the results are consistent. Running two ATA disks in this kind of node is an efficient way of achieving higher disk bandwidth, and the low price of ATA/SATA disks makes this a cost-effective alternative. ATA/SATA disks are also generally larger than SCSI disks.

Price performance issue – SCSI versus ATA/SATA

SCSI disks are 3 to 4 times more costly than ATA disks. Using ATA disks in a RAID 0 configuration will therefore in many cases yield a more cost-effective solution.

Figure 12: Two ATA disks in RAID 0 configuration versus a single SCSI disk (bar chart, kB/s per IOZone test).

Figure 12 shows how the performance of two ATA disks in RAID 0 compares with that of a single SCSI disk. In all tests except random read, the two ATA disks clearly outperform a far more expensive SCSI disk. Using two disks in RAID 0 yields a higher failure rate. However, disks local to a cluster node usually hold no data that is not already stored on the cluster's main disk storage, which in turn is backed up on tape.

The effect of memory

The total amount of memory strongly affects the performance of a system. What is less well known is that the total amount of memory required by an application is given by its so-called working set. The working set is the memory the application needs to run plus the scratch files that are normally written to temporary disk space (secondary storage). If the complete working set can fit in main memory, performance will increase tremendously. To illustrate this, a special IOZone test was performed using a temporary file of just half a GigaByte. The command looked like this:

iozone -r 64 -s 500M -i 0 -i 1 -i 2 -w -f /tmp/iozone.tmp
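The working-set argument can be written as a simple check. This is a sketch under stated assumptions. The amount of memory reserved for the kernel and other processes (`reserved_gb`) is a hypothetical figure. In practice the page cache may also hold less than all of the free memory. The function names are illustrative.

```python
def working_set_gb(app_memory_gb: float, scratch_gb: float) -> float:
    """Working set as defined in the text: application memory plus scratch files."""
    return app_memory_gb + scratch_gb


def io_likely_cached(app_memory_gb: float, scratch_gb: float,
                     ram_gb: float, reserved_gb: float = 0.5) -> bool:
    """True if the whole working set fits in the RAM left over for caching.

    `reserved_gb` (kernel and other processes) is an assumed value, not a
    measured one.
    """
    return working_set_gb(app_memory_gb, scratch_gb) <= ram_gb - reserved_gb
```

Take a hypothetical node with 2 GB of RAM and an IOZone process that uses almost no memory. Under these assumptions a 0.5 GB scratch file would be cached, while a 5 GB file would not.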
Linux will try to use any unused physical memory to cache the file system, so the file system cache grows as memory is added. If the complete working set can be kept in main memory, it will be.

This test used the same node as the ATA/SATA versus SCSI disk test above. The cached results are from the 0.5 GB temporary file, while the non-cached results are from a 5 GB temporary file. All performance data in the table are in MegaBytes per second.

Table 12: The effect of memory, cached versus non-cached IO (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Cached | 122.0 | 123.9 | 1409.0 | 1444.1 | 1373.6 | 514.2 |
| Non-cached | 26.9 | 21.8 | 55.0 | 55.7 | 7.6 | 4.6 |

Figure 13: Cached (0.5 GB file) versus non-cached (5 GB file) IO (bar chart, kB/s per IOZone test).

Reproducibility

Are the numbers obtained for one server reproducible, and how large is the variance in the numbers quoted? Several runs were performed for each test. The numbers are reported in MegaBytes per second as the average of 3 runs. Each average is followed by its standard deviation, expressed as a percentage of the average.

Table 13: Reproducibility of IOZone measurements on a single node using a single IOZone instance (MB/s, mean ± relative standard deviation).

| | Write | Rewrite | Read | Reread |
|---|---|---|---|---|
| Ext3 | 79.6 ± 5.9% | 67.0 ± 11.7% | 87.3 ± 4.1% | 91.5 ± 0.6% |
| Reiser | 92.8 ± 2.2% | 91.5 ± 1.0% | 78.0 ± 0.9% | 77.6 ± 0.7% |
| XFS | 110.0 ± 1.6% | 87.4 ± 1.4% | 91.3 ± 2.4% | 64.7 ± 74.5% |

Some of the tests show significant deviations: 11.7 percent and 74.5 percent. These two results come from two anomalies in the data. A possible cause is that the scheduler put the IOZone process into a sleep or wait state.
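A mean and relative standard deviation like those in Table 13 can be computed with Python's `statistics` module. The report does not say whether it used the sample (n - 1) or the population estimator; this sketch assumes the sample estimator. The run values below are invented for illustration; they are not measurements from this report.

```python
import statistics
from typing import Sequence, Tuple


def mean_rsd(runs: Sequence[float]) -> Tuple[float, float]:
    """Mean and relative standard deviation (percent of the mean).

    Uses the sample standard deviation (n - 1 in the denominator), which is
    an assumption about how the table was produced.
    """
    mean = statistics.mean(runs)
    rsd = 100.0 * statistics.stdev(runs) / mean
    return mean, rsd


# Hypothetical runs, not measured data: one steady series and one with a
# single anomalous run, as might happen if the process is descheduled.
steady = [91.0, 92.0, 91.5]
with_outlier = [90.0, 92.0, 12.0]
```

With only three runs, a single anomalous value (the 12.0 in `with_outlier`) pushes the relative standard deviation to about 70 percent. This is the size of effect seen for XFS reread.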
The data do not seem to follow a normal distribution, so statistical methods and tests based on normally distributed data cannot be used. However, with only three runs it is hard to assess either the distribution or the standard deviation.

Two typical servers for storage

For small amounts of storage, built-in disks can be used.

Dell PowerEdge 2850

Dual Xeon 2.8 GHz CPUs and 4 x SCSI Ultra320 73 GB Maxtor ATLAS 10 kRPM disks.

Table 14: RAID options for the PE 2850 server (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Single disk | 53.5 | 53.0 | 60.2 | 63.9 | 13.8 | 24.8 |
| RAID 5 | 86.8 | 68.7 | 22.3 | 22.2 | 9.1 | 8.9 |
| RAID 0 | 160.2 | 137.9 | 88.1 | 92.7 | 15.8 | 95.7 |

HP ProLiant DL 585

HP DL 585 with 4 x 1.8 GHz Opteron CPUs and 4 x HP 72.8 GB 10,000 RPM Wide Ultra320 SCSI hard drives.

Table 15: RAID options for the DL 585 server (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Single disk | 55.0 | 33.6 | 60.7 | 62.7 | 15.1 | 37.8 |
| RAID 5 | 29.9 | 20.4 | 107.6 | 109.2 | 15.0 | 21.9 |
| RAID 0 | 82.1 | 51.3 | 138.0 | 139.6 | 16.3 | 93.9 |
| LVM RAID 0 | 71.6 | 72.2 | 135.4 | 148.4 | 22.0 | 32.1 |

Small RAID solutions

DELL / EMC – AX100i

A Dell/EMC RAID AX100i has been tested. The AX100i is connected to a Dell PE 2850 file server using iSCSI over Gigabit Ethernet. The EMC AX100i uses SATA disks configured as RAID 5. Its 12 disks are configured in two RAID groups, each with 5 disks and a single hot spare.

Table 16: AX100i performance seen over iSCSI over Gigabit Ethernet (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 5 | 44.4 | 46.7 | 36.8 | 36.8 | 9.6 | 16.3 |

This performance is close to what others report for this kind of test and application usage.

Nexsan InfiniSAN

A RAID of 8 Seagate 17.5 GB, 15 kRPM, Ultra160 SCSI disks was tested. The RAID had a 1 Gbit/s Fibre Channel interface.
Both RAID 5 and RAID 0 were tested. The RAID was connected to a Dell PowerEdge 1650 server through a QLogic qla2200 HCA.

Table 17: Open System RAID performance using a 1 Gbit/s Fibre Channel interface (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 5 | 89.4 | 78.9 | 39.7 | 39.8 | 9.6 | 19.6 |
| RAID 0 | 97.7 | 84.6 | 40.3 | 40.4 | 9.8 | 78.5 |

This RAID solution performed close to wire speed for write, and less so for read operations. For a 1 Gbit/s link, wire speed is about 100 MB/s.

Nexsan SATABeast

A Nexsan SATABeast was set up with the following configuration: 28 Hitachi Deskstar 7500 rpm 500 GB SATA disks and two RAID controllers. Each controller has two 2 Gbit/s Fibre Channel ports, for a total of four 2 Gbit/s FC channels. The cache settings on the SATABeast were set to optimize sequential access.

The SATABeast was configured as follows:

- four RAID groups, each comprising 7 disks in a RAID 5 configuration;
- four logical volumes, each mapped to a single Fibre Channel port, so that each volume had exclusive access to its own Fibre Channel link.

On the server side, four logical partitions were configured using LVM. The four physical devices (/dev/sda through /dev/sdd) were mapped to four logical volumes, which in turn were mounted as four directories. With this setup each directory was assumed to run over its own Fibre Channel link, and tests on each individual directory confirmed this.

The actual test ran four IOZone instances concurrently, each using its own directory (mounted as /beast1 through /beast4). The numbers reported are aggregate values for all four concurrent IOZone runs.

Test using HP DL 140

This test used an HP DL 140 server with dual 3.2 GHz Xeon processors and 1 GB of memory. One quad-port 2 Gbit/s Fibre Channel card (QLogic QLA 2344) was installed in the single 133 MHz PCI-X slot.
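The wire-speed figure of about 100 MB/s per 1 Gbit/s link follows from the Fibre Channel line rate and its 8b/10b encoding. The Python sketch below makes that arithmetic explicit. It also compares the four 2 Gbit/s links on the QLA 2344 with the theoretical peak of a 64-bit, 133 MHz PCI-X slot. Frame and protocol overhead are ignored, so real throughput will be lower. The function names are illustrative.

```python
# Nominal line rates (Gbaud) of 1, 2 and 4 Gbit/s Fibre Channel.
LINE_RATE_GBAUD = {1: 1.0625, 2: 2.125, 4: 4.25}


def fc_payload_mb_s(line_rate_gbaud: float) -> float:
    """Upper bound on Fibre Channel data rate in MB/s (10^6 bytes/s).

    These FC generations use 8b/10b encoding, so each data byte costs
    10 line bits. Frame headers and protocol overhead are ignored.
    """
    return line_rate_gbaud * 1e9 / 10 / 1e6


def pcix_mb_s(clock_mhz: float = 133.33, width_bits: int = 64) -> float:
    """Theoretical peak of a PCI-X bus in MB/s: clock times bus width in bytes."""
    return clock_mhz * width_bits / 8


one_link = fc_payload_mb_s(LINE_RATE_GBAUD[1])           # about 106 MB/s
four_2g_links = 4 * fc_payload_mb_s(LINE_RATE_GBAUD[2])  # about 850 MB/s
pcix_slot = pcix_mb_s()                                   # about 1067 MB/s
```

Under these assumptions the four links top out near 850 MB/s, below the roughly 1067 MB/s PCI-X peak. The single slot is therefore not the first theoretical limit in this setup.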
Red Hat AS 4 U2, Ext 3 file system

The server was running RedHat AS 4 Update 2, and the directories were formatted with the Ext 3 file system.

Table 18: Nexsan SATABeast RAID performance using four 2 Gbit/s Fibre Channel interfaces, RedHat AS 4 U2 with Ext 3 file system (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 5 | 267.2 | 316.1 | 372.6 | 383.5 | 27.24 | 51.94 |
| RAID 0 | 269.5 | 314.7 | 369.9 | 386.1 | 27.81 | 193.8 |

Figure 14: Nexsan SATABeast IOZone performance using an HP DL 140 server, RedHat AS 4 U2, Ext 3 file system. Four IOZones run concurrently (bar chart, kB/s for write, read, random read and random write).

Suse SLES 9 SP 3, Reiser file system

The server was running Suse SLES 9 Service Pack 3, and the directories were formatted with the Reiser file system. These runs also tested the effect of HyperThreading, as four IOZone runs share just two CPUs. Since there are more threads than CPUs, this should be a good case for testing the effect of HyperThreading. The RAIDs were configured as RAID 0 (striping).

Table 19: Nexsan SATABeast RAID performance using four 2 Gbit/s Fibre Channel interfaces, Suse SLES 9 SP 3, Reiser file system (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| HT off | 364.5 | 526.5 | 373.2 | 539.4 | 23.23 | 79.88 |
| HT on | 425.0 | 414.3 | 388.5 | 520.1 | 23.07 | 95.58 |

Figure 15: Nexsan SATABeast IOZone performance using an HP DL 140 server, Suse SLES 9, Reiser file system. HyperThreading performance test, HT off versus HT on. Four IOZones run concurrently.

File system: RH AS 4 U2 Ext 3 vs. Suse SLES 9 Reiser FS

This test used the same server (DL 140) and the same disk RAID configuration. The only differences are the operating system and the file system. Two parameters have changed simultaneously, but this case shows what to expect when a user chooses either RedHat or Suse. The file systems are the default file systems of the two Linux distributions.

Table 20: Nexsan SATABeast in RAID 0 configuration using four 2 Gbit/s Fibre Channel interfaces, RedHat AS 4 U2 Ext 3 vs. Suse SLES 9 SP 3 Reiser file system (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| Ext 3 FS | 269.5 | 314.7 | 369.9 | 386.1 | 27.81 | 193.8 |
| Reiser FS | 364.5 | 526.5 | 373.2 | 539.4 | 23.23 | 79.88 |

Test using HP DL 585

This test used an HP DL 585 server with quad 1.8 GHz Opteron processors and 4 GB of memory. One quad-port 2 Gbit/s Fibre Channel card (QLogic QLA 2344) was installed in the single 133 MHz PCI-X slot. This machine has a separate CPU for each IOZone instance, so server performance should be adequate for testing. However, read performance is lower than write performance, and there is no obvious explanation for this.

Red Hat AS 4 U2, Ext 3 file system

The server was running RedHat AS 4 Update 2, and the directories were formatted with the Ext 3 file system. Four IOZone tests were run concurrently, and the values shown are aggregated.

Table 21: Nexsan SATABeast RAID performance using four 2 Gbit/s Fibre Channel interfaces, RedHat AS4 Update 2, Ext 3 file system (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 5 | 511.4 | 309.8 | 253.6 | 334.5 | 31.50 | 112.7 |

Figure 16: Nexsan SATABeast IOZone performance using an HP DL 585 server, RedHat AS 4 U2, Ext 3 file system.
Four IOZones run concurrently.

Infortrend EONstor A16F-G2422

Two Infortrend EONstor A16F-G2422 units were set up. Each had 16 Hitachi Deskstar 7500 rpm 500 GB SATA disks and one RAID controller with two 4 Gbit/s Fibre Channel ports.

The EONstors were configured as follows:

- two RAID groups, each comprising 8 disks in a RAID 5 configuration;
- one logical drive per RAID group.

For tests using a single EONstor, each logical drive was mapped to its own 4 Gbit/s FC link. For tests using two EONstors, the two logical drives shared the 4 Gbit/s FC link.

The 4 Gbit/s Fibre Channel host adapters (HCAs) were PCI Express cards, installed in a Dell PowerEdge 2850 with PCI Express slots. A single card was put in the 8X PCI Express slot. When two cards were installed, one went in the 8X slot and the other in the 4X slot.

Single EONstor connected to a single server

Suse SLES 9 SP 3, XFS file system

For this test, two RAID 5 groups of 8 disks each were configured. On each RAID a logical drive was configured and mapped to an individual Fibre Channel link. The devices showed up on the server as /dev/sdc and /dev/sdd. Each device was formatted as XFS directly, without LVM logical volumes, and the two devices were mounted as two directories. Two instances of IOZone were run, each on its own CPU, since the server has two CPUs.

Table 22: Infortrend EONstor RAID performance using two 4 Gbit/s Fibre Channel interfaces, SLES 9 SP3, XFS file system (MB/s).

| | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| RAID 5 A | 181.1 | 172.6 | 186.1 | 200.7 | 8.27 | 23.8 |
| RAID 5 B | 179.7 | 171.2 | 219.6 | 235.0 | 8.03 | 22.4 |
| SUM | 360.9 | 343.8 | 405.8 | 435.7 | 16.3 | 46.2 |

The last row (SUM) adds the two IOZone results and so shows the total throughput available from the EONstor.
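The SUM row comes from adding the results of the concurrently running instances column by column. The Python sketch below does this with the rounded values from Table 22; the helper name is illustrative. Summing the rounded values gives 360.8 and 405.7 rather than the table's 360.9 and 405.8. Presumably the table was summed from unrounded measurements.

```python
TESTS = ["Write", "Rewrite", "Read", "Reread", "Random Read", "Random Write"]

# Table 22: one IOZone instance per RAID 5 group, MB/s (rounded as published)
RAID5_A = [181.1, 172.6, 186.1, 200.7, 8.27, 23.8]
RAID5_B = [179.7, 171.2, 219.6, 235.0, 8.03, 22.4]


def aggregate(*instances):
    """Column-wise sum of concurrently running instances (total throughput)."""
    return [round(sum(values), 2) for values in zip(*instances)]


total = dict(zip(TESTS, aggregate(RAID5_A, RAID5_B)))
```

The same helper applies to the four-instance SATABeast runs, if their per-instance values are available.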
A write performance of 361 MegaBytes per second and a read performance of 406 MegaBytes per second is respectable and shows the potential of this storage solution.

Figure 17: Infortrend IOZone performance using a Dell PE2850 server, SLES 9 SP3, XFS file system. Two IOZones run concurrently using two directories (bar chart, kB/s for write, read, random read and random write).

Two EONstors connected to a single server

In these tests a single PE 2850 server was equipped with two dual-port 4 Gbit/s FC cards, one in the 8X PCI Express slot and the other in the 4X slot. Two FC cables were connected from each HCA to the EONstor boxes. Each EONstor was configured with two RAID 5 groups of 8 disks each. Each RAID was mapped as a logical drive to its own 4 Gbit/s FC link, so a total of four 4 Gbit/s Fibre Channel links were used. All tests used a Dell PE 2850 server with two CPUs running SLES 9 SP 3, and the disks were formatted as XFS.

This storage can be set up for this kind of test in at least three ways: with one, two or four directories.

- One directory: all four devices, /dev/sdc through /dev/sdf, were striped into one logical volume using LVM at the OS level on the server.
- Two directories: the same was done with the devices belonging to the same EONstor box.
- Four directories: XFS was formatted directly on each device.

In all cases XFS was used. Default values were used for the cache and other settings, and sequential access was selected.

Table 23: Infortrend EONstor RAID performance using two 4 Gbit/s Fibre Channel interfaces, SLES 9 SP3, XFS file system. One, two and four directories, with correspondingly one, two and four IOZone instances run concurrently (MB/s).
Directories and IOZones  Write  Rewrite  Read   Reread  Random Read  Random Write
One                      400.7  370.8    359.1  370.9   11.4         149.3
Two                      458.1  450.7    458.5  470.7   19.7         106.3
Four                     516.7  548.9    688.4  749.0   25.7          96.2

[Figure 18 panel: IOZone - Infotrend 2 x Eonstor; Performance [kB/sec] per IOZone test (Write, Read, Random Read, Random Write)]
Figure 18: Two Infotrend EONstors IOZone performance using a Dell PE2850 server, SLES 9 SP3, XFS file system. Four IOZones run concurrently using four directories.

[Figure 19 panel: IOZone Performance - EONstor x 2; series One dir./IOZ., Two dirs./IOZs. and Four dirs./IOZs.; Performance per IOZone test (Write, Rewrite, Read, Reread, Random Read, Random Write)]
Figure 19: Two Infotrend EONstors IOZone performance using a Dell PE2850 server, SLES 9 SP3, XFS file system. One, two and four IOZones run concurrently using one, two and four directories respectively.

File system performance

Some initial tests were performed using a single dual 4 Gbits/s FC card, so only a single FC channel from each EONstor box to the server was possible. 8 drives were configured as a single RAID 5 and mapped as a logical drive, giving two such logical drives per EONstor box. LVM was used to stripe the logical drives into a single device, and this device was formatted with the file system in question.

Table 24: File system performance, SLES 9 SP3, Ext3, ReiserFS or XFS file system. Two IOZones were run concurrently. Two EONstor boxes. Single 4 Gb/s FC link per EONstor box.
File system  Write  Read   Random Read  Random Write
Ext 3        283.1  527.8  18.29        69.35
Reiser       399.8  528.3  16.41        55.47
XFS          428.1  564.7  18.43        119.5

[Figure 20 panel: IOZone performance - Infotrend 2 x Eonstor; series Ext 3, ReiserFS and XFS; Performance [kB/sec] per IOZone test (Write, Read, Random Read, Random Write)]
Figure 20: File system performance, SLES 9 SP3, Ext3, ReiserFS or XFS file system. Two IOZones were run concurrently. Two EONstor boxes. Single 4 Gb/s FC link per EONstor box.

Single Node file (NFS) server

The most common file server for a cluster of compute nodes has been a single NFS file server. Its capacity is limited, as the tests below will show. A single NFS server can keep up as long as two conditions hold: the number of client nodes is kept low, and their demand for IO storage bandwidth is low.

To assess NFS server performance, a cluster of Dell PowerEdge 1750 compute nodes accessed an NFS server (details of the NFS server and compute nodes are found in the appendices). The two disks in the NFS server were configured as an LVM RAID 0 with 16 kB stripes. Table 25 shows the disk bandwidth measured locally on the NFS server.

Table 25: NFS server local disk performance.
            Write  Rewrite  Read  Reread  Random Read  Random Write
LVM RAID 0  83.2   100.0    93.6  98.6    9.4          28.7

IOZone was used to test performance, with the IOZone temporary file stored in a common NFS-mounted directory on the NFS server. The IOZone commands were issued using the Scali parallel shell, scash. The temporary IOZone file was 5 Giga Bytes.
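The NFS server's two disks were combined with LVM into a RAID 0 set with 16 kB stripes (Table 25). The sketch below shows what round-robin striping does with IOZone's 64 kB records. It is a generic model, not LVM's actual code, and the function names `locate` and `disks_touched` are hypothetical. It assumes two disks and fixed-size stripes placed alternately on them, and maps a logical byte offset to a disk and an offset on that disk:

```python
STRIPE_SIZE = 16 * 1024  # 16 kB stripes, as configured on the NFS server
NUM_DISKS = 2            # two disks in the LVM RAID 0 set

def locate(offset, stripe_size=STRIPE_SIZE, num_disks=NUM_DISKS):
    """Map a logical byte offset to (disk index, byte offset on that disk),
    assuming simple round-robin RAID 0 striping."""
    stripe_index = offset // stripe_size          # which stripe holds the byte
    disk = stripe_index % num_disks               # stripes alternate between disks
    stripe_on_disk = stripe_index // num_disks    # earlier stripes on that disk
    return disk, stripe_on_disk * stripe_size + offset % stripe_size

def disks_touched(offset, length, stripe_size=STRIPE_SIZE, num_disks=NUM_DISKS):
    """Set of disks involved in a request of `length` bytes at `offset`."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return {s % num_disks for s in range(first, last + 1)}

if __name__ == "__main__":
    # One 64 kB IOZone record (-r 64) spans four 16 kB stripes.
    print(disks_touched(0, 64 * 1024))  # {0, 1}
    print(locate(40 * 1024))            # (0, 24576)
```

With `-r 64`, every record covers two stripes on each disk, so both disks share the work of every sequential record. This is why striping can add up the bandwidth of the member disks for large sequential IO.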
Command used to launch the IOZone test:

scash -p -n "v1 v2 v3 v4 v5 v6 v7 v8" '/opt/iozone/bin/iozone -r 64 -s 5g -i 0 -i 1 -i 2 -w -f /scratch/$HOSTNAME\-iozone.tmp > /scratch/$HOSTNAME\-iozone.log '

This command launches one instance of IOZone on each compute node and logs the results to a file unique to each compute node.

Table 26: Average client performance in MB/sec using an NFS file server serving N clients.
# Clients  Write  Rewrite  Read  Reread  Random Read  Random Write
1          33.5   37.4     35.2  36.0    10.4         5.8
2          18.4   8.1      4.3   4.5     2.4          4.5
4          9.0    2.8      2.4   2.2     0.89         3.2
8          4.4    1.5      1.3   1.0     0.54         1.5

[Figure 21 panels: IOZone Write, IOZone Read, IOZone Random Write and IOZone Random Read; Write, Read, Random Write and Random Read kB/sec vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 21: A single NFS server serving N clients running IOZone. Disk-server bandwidth is 83 MB/sec write and 94 MB/sec read.

Figure 22 shows that the total write utilization of the NFS server is stable at around 35 MB/sec. This is well below both the wire speed (117 MB/sec) and the disk bandwidth measured at 83 MB/sec. Even so, sustaining 35 MB/sec with many clients is quite acceptable for such an NFS server.
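Figure 22 is described as showing the total utilization of the NFS server, that is, the aggregate bandwidth it delivers. The script below recomputes that aggregate from Table 26. It assumes the aggregate is the average per-client bandwidth multiplied by the number of concurrent clients, which is an assumption about how the figure was derived:

```python
# Average per-client bandwidth in MB/sec from Table 26 (NFS server).
TABLE_26 = {
    # clients: (write, rewrite, read, reread, random read, random write)
    1: (33.5, 37.4, 35.2, 36.0, 10.4, 5.8),
    2: (18.4, 8.1, 4.3, 4.5, 2.4, 4.5),
    4: (9.0, 2.8, 2.4, 2.2, 0.89, 3.2),
    8: (4.4, 1.5, 1.3, 1.0, 0.54, 1.5),
}
COLUMNS = ("write", "rewrite", "read", "reread", "random read", "random write")

def server_throughput(table, column):
    """Aggregate MB/sec delivered by the server for one IOZone test:
    average per-client bandwidth times the number of concurrent clients."""
    idx = COLUMNS.index(column)
    return {n: round(n * row[idx], 1) for n, row in sorted(table.items())}

if __name__ == "__main__":
    print(server_throughput(TABLE_26, "write"))  # {1: 33.5, 2: 36.8, 4: 36.0, 8: 35.2}
    print(server_throughput(TABLE_26, "read"))   # {1: 35.2, 2: 8.6, 4: 9.6, 8: 10.4}
```

The write aggregate stays between roughly 33 and 37 MB/sec for every client count, matching the plateau described above. The read aggregate drops sharply after the single-client case.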
[Figure 22 panels: IOZone - write - Utilization NFS server and IOZone - read - Utilization NFS server; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 22: NFS server utilization, one disk in one node. Disk-server bandwidth is 83 MB/sec write and 94 MB/sec read.

For read the picture is cloudy, overshadowed by the very large read performance when only one client uses the NFS server. With more clients, the performance stays constant as the number of clients increases. The results show that it is possible to calculate the performance attainable with NFS. They also show when NFS stops being a feasible option and a cluster file server using a parallel file system like LUSTRE must be used.

Cluster file server I – LUSTRE parallel file system

A small cluster of 5 servers running LUSTRE from CFS was set up: one meta data server and four storage servers. Each storage server contains two 250 GB ATA disks. Earlier tests (see table 10) show that approximately 27 MB/sec for write and 55 MB/sec for read is feasible per disk. With one disk per server, this yields 108 MB/sec for write and 220 MB/sec for read across all four servers. With two disks per server, twice these numbers is expected, namely 216 MB/sec for write and 440 MB/sec for read. Given enough disk bandwidth behind the storage server, a bandwidth equal to wire speed is achievable; for Gigabit Ethernet this is approximately 115-117 MB/second. For ATA disks with a typical bandwidth of the order of 30 MB/sec, each server needs at least 3 SATA disks, or an attached RAID storage with at least the same bandwidth. The file server cluster scales with the number of servers.
In the current setup with 4 servers, a total bandwidth of 460 MB/second (4 x 115 MB/second) is possible. A cluster of 8 compute nodes running IOZone was used to assess the cluster file server. Only one IOZone was run per compute node, as one IOZone can saturate the network bandwidth; running two IOZones per node would produce more overhead per compute node.

One ATA disk per file server.

To achieve higher performance and higher bandwidth from storage, more than one file server can be employed. A cluster of file servers running a cluster file system like LUSTRE can yield scalable file server performance. The achievable bandwidth depends on the disk storage behind or within the file servers, and on the interconnect between the file servers and the clients. The typical interconnect is Gigabit Ethernet, which has a theoretical limit of 125 Mega Bytes per second. Any higher bandwidth requirement will need a high-performance interconnect like InfiniBand.

A LUSTRE file server cluster using 5 nodes was set up: one meta data server and four file servers. HP ProLiant DL140 servers were used (see appendix for details). Each server contains two ATA disks of 250 Giga Bytes each. For the first test only one disk per server was used, which makes the disk-to-server bandwidth the bottleneck. An earlier test (see table 10 and figure 10 on page 16) shows the bandwidth obtained when running IOZone locally. The LUSTRE file system was mounted as a common directory on all compute nodes in the cluster. IOZone was started concurrently on all 8 compute nodes using a parallel shell command:

scash -p -n "v1 v2 v3 v4 v5 v6 v7 v8" '/opt/iozone/bin/iozone -r 64 -s 5g -i 0 -i 1 -i 2 -w -f /lustredata/$HOSTNAME\-iozone.tmp > /scratch/$HOSTNAME\-iozone.log '

Table 27: Average client performance in MB/sec using cluster file system LUSTRE file servers serving N clients.
# Clients  Write  Rewrite  Read   Reread  Random Read  Random Write
1          117.0  117.0    113.1  114.3   1.6          56.7
2          71.8   70.4     62.1   61.7    1.4          24.5
4          20.8   25.3     41.1   58.6    1.1          14.7
8          8.7    9.8      14.3   22.4    0.5          4.9

The performance data show that four LUSTRE servers can feed wire speed into one client (117 MB/s for write and 113 MB/s for read). This is 29 MB/s and 28 MB/s per file server, within the server-disk bandwidth capability. Two clients each get 72 MB/s for write and 62 MB/s for read. Combined, this is 144 and 124 MB/s, or 36 MB/s per server for write and 31 MB/s for read (local measurements show 27 MB/s for write and 55 MB/s for read, see table 10). LUSTRE is apparently able to utilize the disk better than IOZone: 36 MB/s is more than the 27 MB/s disk-to-server write rate measured locally with IOZone. Figure 23 illustrates this.

Using more clients requires more disk-to-server bandwidth. We therefore see a transition from being limited by Ethernet wire speed to being limited by disk bandwidth. Running two clients at full wire speed requires 57 MB/s for write and 56 MB/s for read per file server, which exceeds the bandwidth to and from the SATA disks in the file servers. With 4 and 8 clients this becomes increasingly clear. 8 clients running at the full Ethernet wire speed of 115 MB/s would require 920 MB/s of disk-to-server bandwidth. The limiting factor for this file server cluster setup is disk bandwidth, and only more disks can solve this. A test using both disks in the four file servers should show higher bandwidth for the file server cluster.

The figure shows the effect of limited disk bandwidth within the file server nodes. Two clients saturate the disk bandwidth while the Gigabit Ethernet still has spare capacity. Given enough disk bandwidth, 4 clients should be able to run at full wire speed, and performance should not degrade until more than 4 clients are used.
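The reasoning above is a simple bottleneck argument. The aggregate bandwidth cannot be much higher than the smaller of two totals: what the clients' Gigabit Ethernet links can carry, and what the server disks can deliver. The sketch below computes that estimate. It assumes 115 MB/sec per client link and the per-disk figures from table 10 (27 MB/sec write, 55 MB/sec read); the function name `estimate` is illustrative only:

```python
WIRE_SPEED = 115.0  # MB/sec per client link, approximate Gigabit Ethernet wire speed
DISK_BW = {"write": 27.0, "read": 55.0}  # MB/sec per ATA disk, local IOZone (table 10)

def estimate(clients, disks, op, wire=WIRE_SPEED):
    """Return (estimated aggregate MB/sec, limiting resource).

    Data must cross both the client network links and the server disks,
    so the slower of the two totals sets the achievable aggregate."""
    network = clients * wire
    disk = disks * DISK_BW[op]
    if network <= disk:
        return network, "network"
    return disk, "disk"

# Average per-client bandwidth in MB/sec from Table 27: clients -> (write, read)
TABLE_27 = {1: (117.0, 113.1), 2: (71.8, 62.1), 4: (20.8, 41.1), 8: (8.7, 14.3)}

if __name__ == "__main__":
    disks = 4  # four file servers with one disk each, so per disk == per server
    for n, (write, read) in TABLE_27.items():
        for op, per_client in (("write", write), ("read", read)):
            bound, limit = estimate(n, disks, op)
            total = n * per_client
            print(f"{n} client(s), {op}: measured {total:.1f} MB/sec "
                  f"({total / disks:.1f} per server), "
                  f"estimate {bound:.0f} MB/sec ({limit}-limited)")
```

For one client writing, the sketch predicts a disk limit of 108 MB/sec, while 117 MB/sec was measured. This agrees with the observation above that LUSTRE gets more out of the disks than local IOZone runs suggest, so the model should be read as an estimate rather than a hard upper bound.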
[Figure 23 panels: IOZone - write - Utilization LUSTRE servers and IOZone - read - Utilization LUSTRE servers; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 23: LUSTRE servers utilization when using one disk and one LUSTRE server process per node. Combined disk-server bandwidth is 108 MB/sec for write and 220 MB/sec for read.

Figure 23 shows the LUSTRE file server utilization of the disk-server bandwidth. As described earlier, LUSTRE evidently utilizes the disks better, obtaining higher disk-server bandwidth than IOZone run directly on the server node.

Two ATA disks per file server, two LUSTRE servers per server node.

This test used the same setup as described above, except that both disks in each server were used by running two LUSTRE servers on each node. The nodes have two CPUs, and one CPU was used per LUSTRE server. Each LUSTRE server uses a number of threads, so hyperthreading (HT) could also be turned on to test how effective HT is at handling a large number of LUSTRE threads.

The IO performance of one disk in each storage server is shown in table 10 on page 16. This is the performance of one disk alone running a single IOZone benchmark; performance with two LUSTRE servers, one per disk, will differ somewhat from this. The LUSTRE file system was mounted as a common directory on all compute nodes in the cluster. IOZone was started concurrently on all 8 compute nodes using a parallel shell command:

scash -p -n "v1 v2 v3 v4 v5 v6 v7 v8" '/opt/iozone/bin/iozone -r 64 -s 5g -i 0 -i 1 -i 2 -w -f /lustredata/$HOSTNAME\-iozone.tmp > /scratch/$HOSTNAME\-iozone.log '

Table 28 shows the results of running 4 LUSTRE file servers with two disks and two LUSTRE servers per node, with HyperThreading disabled.
Table 28: Average client performance in MB/sec using cluster file system LUSTRE file servers serving N clients.
# Clients  Write  Rewrite  Read  Reread  Random Read  Random Write
1          114.3  114.8    68.6  68.6    1.5          33.4
2          99.6   97.9     68.0  68.1    1.4          15.6
4          48.3   40.0     49.7  51.0    1.2          0.8
8          3.3    6.0      17.5  18.5    0.6          13.6

There are 8 disks in total, each with a bandwidth of 27 MB/sec for write and 55 MB/sec for read. The combined disk bandwidth is therefore 27 x 8 = 216 MB/sec for write and 55 x 8 = 440 MB/sec for read. For one client, wire speed is the limiting factor. For two clients the combined bandwidth is 200 MB/sec for write and 136 MB/sec for read. For write, both wire speed and disk bandwidth may be limiting, but for read the results are lower than expected. For 4 clients the results are similar to those for 2, with a combined bandwidth of 193 MB/sec for write and 199 MB/sec for read. This is most probably a disk bandwidth limitation. For 8 clients the results are poor, and the utilization of the file server cluster is unexpectedly low.

[Figure 24 panels: IOZone write w. LUSTRE, IOZone Read w. LUSTRE, IOZone Random write w. LUSTRE and IOZone Random Read w. LUSTRE; Write, Read, Random Write and Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 24: Four LUSTRE file server nodes serving N clients running IOZone, two disks and two LUSTRE server processes per dual CPU node. Combined disk-server bandwidth is 216 MB/sec for write and 440 MB/sec for read.

Figure 24 shows that 8 clients running 8 IOZone benchmarks concurrently yield very poor utilization of the LUSTRE servers for the write benchmark. For the read benchmark the utilization is better, but still below that seen with 4 clients.
With a larger number of clients, utilization might become even worse in this kind of configuration. This is an open question that should be investigated further.

[Figure 25 panels: IOZone - write - Utilization LUSTRE servers and IOZone - read - Utilization LUSTRE servers; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 25: Utilization of LUSTRE file servers when using two disks and two LUSTRE servers per node. Combined disk-server bandwidth is 216 MB/sec for write and 440 MB/sec for read.

The effect of HyperThreading on file server nodes

The measured effect of HyperThreading was small. Only the write test showed any difference; figure 26 shows the results. For read, random read and random write the differences were negligible.

[Figure 26 panel: IOZone write; series HT Off and HT On; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 26: Effect of HyperThreading on the file server nodes.

Two ATA disks per file server, configured in RAID 0.

This test used the same setup as described above, except that each node used software RAID: LVM striped storage over the two ATA disks in each node. The resulting disk IO performance is shown in table 11 on page 17. One LUSTRE server ran on each file server node using the LVM RAID 0 disk, and HyperThreading was turned off. For details, see the text preceding table 11 on page 17.

Table 29: Average client performance in MB/sec using cluster file system LUSTRE file servers serving N clients.
# Clients  Write  Rewrite  Read  Reread  Random Read  Random Write
1          116.7  116.8    69.6  69.6    1.8          20.8
2          89.5   91.1     69.5  69.5    1.7          10.8
4          45.3   46.6     58.5  58.7    1.4          15.8
8          22.8   23.3     28.6  29.9    1.0          12.5

[Figure 27 panels: IOZone write w. LUSTRE, IOZone Read w. LUSTRE, IOZone Random write w. LUSTRE and IOZone Random Read w. LUSTRE; Write, Read, Random Write and Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 27: Four LUSTRE file server nodes serving N clients running IOZone, two disks in LVM RAID 0 per node, one LUSTRE server per node. Combined disk-server bandwidth is 218 MB/sec for write and 285 MB/sec for read.

[Figure 28 panels: IOZone - write - Utilization LUSTRE servers and IOZone - read - Utilization LUSTRE servers; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 28: LUSTRE file servers utilization when using two disks in LVM RAID 0 configuration and one LUSTRE process per node. Combined disk-server bandwidth is 218 MB/sec for write and 285 MB/sec for read.

Figure 28 shows that the write utilization of the disk bandwidth is constant at about 175 MB/sec; the single-client number is limited by network bandwidth. 175 MB/sec is somewhat lower than four times the measured disk bandwidth of one LVM RAID 0, which is 218 MB/sec using the numbers in table 11. For read, the utilization seems to become constant once the number of clients equals or exceeds the number of file servers.
Optimal configuration of LUSTRE servers

[Figure 29 panels: IOZone write, IOZone read, IOZone Random write and IOZone Random read - Lustre server disk config. options; series One disk, two servers two disks and two disks RAID 0; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 29: Comparison of one disk per server, two disks per server using two LUSTRE servers, or two disks in LVM RAID 0 using one LUSTRE server process per node.

How should the disks be configured, and how should LUSTRE be set up, to use the available disk bandwidth and capacity for optimal performance? The extra bandwidth gained by using two disks per node shows up clearly in the write test. For the other tests the picture is less clear when the number of clients is equal to or smaller than the number of servers. The typical setup for real-life clusters has more clients than servers, and in that situation the extra bandwidth gained by using two disks shows up.

It is open to discussion whether to run two LUSTRE server processes per server with one disk each, or to use LVM to set up a RAID 0. In the majority of tests with 8 clients, LVM RAID 0 seems to perform somewhat better. File server disk bandwidth is a limiting factor throughout this test.
How much of the available disk-to-file-server bandwidth is utilized?

The maximum disk bandwidth is taken from table 11, where the single node disk bandwidth was measured. The RAID 0 numbers serve as reference numbers. This is not strictly correct for the case where only one disk in each server is used, but the servers do have two disks. For 4 servers, the combined disk-server bandwidth is 218 MB/sec for write and 285 MB/sec for read. These reference bandwidths were measured with IOZone, and LUSTRE might well use the server disks more efficiently than the IOZone benchmark does. Numbers over 100% are therefore quite possible, but none of the measurements show such high utilization.

[Figure 30 panels: IOZone write - Utilization of server disk bw. and IOZone read - Utilization of server disk bw.; series One disk, two servers two disks and two disks RAID 0; Fraction of combined server disk bw. [%] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 30: Percent of measured disk bandwidth utilized by the LUSTRE clusters using different configurations.

Figure 30 shows the percent utilization measured for the different configurations of LUSTRE and the associated servers. The best utilization of the LUSTRE servers is clearly obtained with a single LUSTRE server per node and the ATA disks configured in LVM RAID 0 (striping).

The single-client numbers are strongly affected by the limited wire speed of Gigabit Ethernet (125 MB/sec theoretical maximum and 117 MB/sec maximum in typical measurements). For two clients, twice the wire speed (234 MB/sec) is about enough to saturate the disk-server bandwidth.
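The percentages in Figure 30 can be approximated from Tables 27, 28 and 29. Multiply the average per-client bandwidth by the number of clients, then divide by the combined reference bandwidth of 218 MB/sec (write) or 285 MB/sec (read). The sketch below assumes this is how the figure was produced. The rounded percentages are recomputed from the tables, not read off the plot:

```python
# Average per-client bandwidth in MB/sec, clients -> (write, read),
# from Tables 27 (one disk), 28 (two servers two disks) and 29 (RAID 0).
CONFIGS = {
    "one disk": {1: (117.0, 113.1), 2: (71.8, 62.1), 4: (20.8, 41.1), 8: (8.7, 14.3)},
    "two servers two disks": {1: (114.3, 68.6), 2: (99.6, 68.0), 4: (48.3, 49.7), 8: (3.3, 17.5)},
    "two disks RAID 0": {1: (116.7, 69.6), 2: (89.5, 69.5), 4: (45.3, 58.5), 8: (22.8, 28.6)},
}
REFERENCE = (218.0, 285.0)  # combined disk-server MB/sec, 4 x LVM RAID 0 (table 11)

def utilization(per_client, reference=REFERENCE):
    """Percent of the reference disk-server bandwidth used, per client count."""
    write_ref, read_ref = reference
    return {
        n: (round(100 * n * w / write_ref), round(100 * n * r / read_ref))
        for n, (w, r) in per_client.items()
    }

if __name__ == "__main__":
    for name, table in CONFIGS.items():
        print(f"{name:22s}", utilization(table))
    # Rank configurations by combined write + read utilization at 8 clients.
    best = max(CONFIGS, key=lambda name: sum(utilization(CONFIGS[name])[8]))
    print("best at 8 clients:", best)
```

At 8 clients the RAID 0 configuration uses roughly 84% (write) and 80% (read) of the reference bandwidth, well ahead of the other two. This is consistent with the conclusion drawn from Figure 30.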
Two IOZone tests per compute node client

A test running two IOZone processes per compute node was performed. One IOZone alone can saturate the Gigabit Ethernet, so an extra IOZone test per compute node will not increase utilization of either network or disk-server bandwidth. It is, however, a test of server cluster scalability.

Figure 31 shows the cluster file server utilization when running one through 16 concurrent IOZone tests on 8 compute node clients, for the write and read tests. The total disk-server bandwidth is limited to 218 MB/sec for write and 285 MB/sec for read. These are the numbers measured on the storage servers multiplied by the number of storage servers (table 11). This is the limiting factor for total utilization of the file server cluster; the compute node to file server bandwidth is not a limiting factor. Performance drops slightly when going from one to two IOZones per compute node client. There are two possible causes: the extra overhead of running two IOZones per compute node, and the file servers having to handle 8 extra clients. Which of these matters most is unknown, but the latter affects file server cluster scalability. A larger test using more compute node clients would be interesting.

[Figure 31 panels: IOZone Utilization - Write and IOZone Utilization - Read; Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8, 16)]
Figure 31: File Server Cluster Utilization. 1 through 16 IOZone concurrent tests running on 8 compute node clients. Combined disk-server bandwidth is 218 MB/sec for write and 285 MB/sec for read.
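Running two IOZone instances per node requires each instance to have its own temporary file and log. The sketch below builds such a command, reusing the IOZone options and scash flags shown earlier. Several parts are assumptions, not the exact commands used in this test: the helper names, the `-1`/`-2` file-name suffixes, and the use of `&` and `wait` to run both instances concurrently in the remote shell.

```python
import shlex

IOZONE = "/opt/iozone/bin/iozone"
NODES = [f"v{i}" for i in range(1, 9)]  # compute nodes v1 ... v8, as in the text

def iozone_instance(index, data_dir="/lustredata", log_dir="/scratch"):
    """One IOZone run with a temp file and log unique to host and instance.
    $HOSTNAME is left unexpanded for the remote shell on each node."""
    tag = f"$HOSTNAME-{index}-iozone"
    return (f"{IOZONE} -r 64 -s 5g -i 0 -i 1 -i 2 -w "
            f"-f {data_dir}/{tag}.tmp > {log_dir}/{tag}.log")

def remote_command(per_node):
    """Start `per_node` instances concurrently and wait for all of them."""
    runs = [iozone_instance(i) for i in range(1, per_node + 1)]
    if per_node == 1:
        return runs[0]
    return " & ".join(runs) + " & wait"

def scash_command(nodes, per_node):
    """Full scash invocation, quoted so the local shell passes it unchanged."""
    return " ".join(["scash", "-p", "-n", shlex.quote(" ".join(nodes)),
                     shlex.quote(remote_command(per_node))])

if __name__ == "__main__":
    print(scash_command(NODES, per_node=2))
```

A `-` cannot be part of a shell variable name, so `$HOSTNAME-1` expands correctly without the backslash used in the commands above.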
Cluster file server I – IBRIX parallel file system

A small cluster of 5 servers running IBRIX software from IBRIX Inc. was set up: one fusion manager server and four storage servers. Each storage server contains two 250 GB ATA disks. Earlier tests (see table 10) show that approximately 27 MB/sec for write and 55 MB/sec for read is feasible per disk. With one disk per server, this yields 108 MB/sec for write and 220 MB/sec for read across all four servers. With two disks per server, twice these numbers is expected, namely 216 MB/sec for write and 440 MB/sec for read. Given enough disk bandwidth behind the storage server, a bandwidth equal to wire speed is achievable; for Gigabit Ethernet this is approximately 115-117 MB/second. For ATA disks with a typical bandwidth of the order of 30 MB/sec, each server needs at least 3 SATA disks, or an attached RAID storage with at least the same bandwidth. The file server cluster scales with the number of servers. In the current setup with 4 servers, a total bandwidth of 460 MB/second is possible.

A cluster of 8 compute nodes running IOZone was used to assess the cluster file server. Only one IOZone was run per compute node, as one IOZone can saturate the network bandwidth; running two IOZones per node would produce more overhead per compute node.

[Figure 32 panels: IOZone write w. IBRIX, IOZone Read w. IBRIX, IOZone Random write w. IBRIX and IOZone Random Read w. IBRIX; Write, Read, Random Write and Performance [kB/sec] vs. # Concurrent Tests (1, 2, 4, 8)]
Figure 32: Four IBRIX file servers serving N clients running IOZone. Combined disk-server bandwidth is 108 MB/sec for write and 220 MB/sec for read.

[Figure 33 panels: File server cluster IBRIX - Write utilisation and File server cluster IBRIX - Read utilisation; Performance [MB/sec] vs. # IOZone clients (1, 2, 4, 8, 16)]
Figure 33: File Server Cluster Utilization. 1 through 16 IOZone concurrent tests running on 8 compute node clients. Combined disk-server bandwidth is 218 MB/sec for write and 285 MB/sec for read.

Cluster file server II – LUSTRE file system

A cluster of 26 nodes in total was set up: 8 file server nodes (Dell PE1750), 16 client/compute nodes (Dell PE2650), one meta data server (Dell PE650) and a front end server (Dell PE1550). Two 24-port Gigabit Ethernet switches were used. 23 nodes (all 16 compute/client nodes and 7 file server nodes) were connected to one switch, and a single Ethernet cable connects the two switches. The second switch has one file server node, the meta data server and the front end. All paths that carry data have full bandwidth, so the switch configuration introduces no bottlenecks. The storage used was the disks inside the storage servers, which limits both capacity and bandwidth. The limited size constrains how many clients can be served by the file server cluster, since each client needs 5 GBytes of disk space.
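The capacity constraint can be expressed as a simple rule. The caption of Table 31 states a limit of 8 clients per file server, with each client writing a 5 GByte temporary file. Assuming that limit, the sketch below reproduces which server/client combinations in Tables 31 and 32 are marked n.d.; the function names are illustrative:

```python
CLIENTS_PER_SERVER = 8   # limit stated in the caption of Table 31
FILE_SIZE_GB = 5         # size of each IOZone temporary file (-s 5g)

def feasible(servers, clients, per_server=CLIENTS_PER_SERVER):
    """True if the file servers have room for every client's temporary file."""
    return clients <= servers * per_server

def space_needed_gb(clients, file_size_gb=FILE_SIZE_GB):
    """Total scratch space the IOZone clients need on the cluster file system."""
    return clients * file_size_gb

if __name__ == "__main__":
    clients = (1, 2, 4, 8, 16, 32)
    for servers in (1, 2, 4, 8):
        row = ["ok" if feasible(servers, c) else "n.d." for c in clients]
        print(servers, row)
```

The output marks 16 and 32 clients as n.d. for one server and 32 clients as n.d. for two servers, which matches the gaps in the tables.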
Figure 34: Scali Manage view of the cluster comprising 26 nodes: 16 compute nodes (r01-r16), 8 file servers (v01-v08), 1 meta data server (v09) and 1 front end node (rigel).

The scalability tests below used up to 32 IOZone clients on 16 compute nodes, with one through eight file servers. The 32-client case used two processes on each two-processor node.

Individual file server performance

To assess the maximum total disk performance, one IOZone client was run on each of the file servers. This measures the total disk-server bandwidth and is referred to as the EP (Embarrassingly Parallel) test. Figure 35 shows the typical performance of each file server. The boxes mark the average, while the lines show the variation in performance. The variation is clearly large, which might affect the aggregated performance when these servers are used as building blocks for a file server cluster.

[Figure 35 panel: IOZone - EP - 8 x PE1750, 2 SCSI disks RAID 0; Performance [kB/sec] per IOZone test (Write, Rewrite, Read, Reread, Random Read, Random Write)]
Figure 35: Disk-server bandwidth for each file server in the file server cluster. Each server has two SCSI disks in RAID 0 configuration. Typical performance is somewhat over 80 MBytes/sec for write and somewhat over 110 MBytes/sec for read.

Aggregated together, these bandwidths form the total disk bandwidth available to the Lustre file system. The total aggregated bandwidth is the sum of the bandwidths measured on all the file server nodes, shown in table 30 and figure 36.

Table 30: Total aggregated disk-server bandwidth.
               Write  Rewrite  Read   Reread  Random Read  Random Write
EP aggregated  653.6  648.9    844.4  861.8   102.2        187.9

[Figure 36 panel: IOZone - Aggregated bw. EP - 8 x PE1750 RAID 0; Performance [MB/sec] per IOZone test (Write, Rewrite, Read, Reread, Random Read, Random Write)]
Figure 36: Total aggregated disk-server bandwidth, obtained by running an IOZone client on each file server.

File server cluster performance

Table 31 shows the aggregated disk write bandwidth as seen from the compute node clients running IOZone. The total disk bandwidth available for write using 8 file servers is 654 MB/sec (table 30). The measured maximum in this test is 450 MB/sec.

Table 31: Aggregated client write performance obtained from a cluster file server, employing 1 through 8 file servers. The limited disk capacity limits the number of clients to 8 per file server.
# Cluster file servers (rows) vs. # IOZone clients doing write (columns)
              1      2      4      8     16     32
1          64.8   68.7   67.8   58.7   n.d.   n.d.
2          90.1   98.7   96.5   88.0   85.0   n.d.
4          94.5  155.6  150.4  139.8  139.8  177.5
8          64.8  186.6  372.5  374.2  384.4  451.2

[Figure 37 panel: IOZone - Total client Bandwidth - write; series 1, 2, 4, 8, 16 and 32 clients; Aggregated Performance [MB/sec] vs. # Cluster file servers (1, 2, 4, 8)]
Figure 37: Total aggregated write bandwidth using 1 through 8 file servers and 1 through 32 IOZone clients on 16 compute nodes.

Table 32 shows the aggregated disk read bandwidth as seen from the compute node clients running IOZone. The total disk bandwidth available for read using 8 file servers is 844 MB/sec (table 30). The measured maximum in this test is 363 MB/sec.

Table 32: Aggregated client read performance obtained from a cluster file server, employing 1 through 8 file servers. The limited disk capacity limits the number of clients per file server.
# Cluster file servers (rows) vs. # IOZone clients doing read (columns)
              1      2      4      8     16     32
1          54.5   78.5   74.0   62.4   n.d.   n.d.
2          52.8   96.4   99.6   91.9   86.2   n.d.
4          51.7  101.5  190.7  191.1  185.6  189.5
8          54.5  104.4  204.0  363.1  363.7  344.6

[Figure 38 panel: IOZone - Total client Bandwidth - read; series 1, 2, 4, 8, 16 and 32 clients; Aggregated Performance [MB/sec] vs. # Cluster file servers (1, 2, 4, 8)]
Figure 38: Total aggregated read bandwidth using 1 through 8 file servers and 1 through 32 IOZone clients on 16 compute nodes.

The measurements clearly show the potential to build scalable file server clusters. Even with modest disk-to-server performance, the compute cluster can achieve a disk bandwidth in excess of 300 MBytes/sec. With 8 file servers and 16 compute nodes running 32 IOZone clients, the bandwidth per file server is only a modest 56 MBytes/sec for write and 43 MBytes/sec for read. A disk subsystem such as a low cost RAID delivering 150 MB/sec would nearly double these numbers. The only limit would then be the 115 MBytes/sec imposed by Gigabit Ethernet.

[Figure 39 panels: Cluster File server Performance - Write / Lustre and Cluster File server Performance - Read / Lustre; Performance [MB/sec] vs. # Lustre Clients (1, 2, 4, 8, 16, 32 (2 ppn))]
Figure 39: File Server Cluster Utilization. 1 through 32 IOZone concurrent tests running on 16 compute nodes served by 8 file servers. Combined disk-server bandwidth is 654 MB/sec for write and 844 MB/sec for read.

Another way of conveying the aggregated total cluster file server bandwidth is shown in figure 39, where the individual performance measurements are plotted together.
Figure 39 shows that somewhat more clients than servers are needed to utilize the total cluster file server potential.

[Figure 40 plot: "Cluster file server performance", Aggregated Bandwidth [MB/sec] for write and read versus # File servers / # Clients (1/2, 2/4, 4/8, 8/16, 8/32).]

Figure 40: Aggregated bandwidth for write and read as measured with IOZone using different numbers of file servers in the file server cluster.

Figures 40 and 41 show the scaling associated with a cluster of file servers and clearly show the scaling potential of adding more file servers. A two-to-one ratio between clients and servers seems to be adequate for the scaling measurements. The scaling is not only a function of the number of file servers; it also depends on the back-end storage. In this case the back-end storage is the built-in disks in the file servers, and a higher-performing disk RAID might show different scaling.

[Figure 41 plot: "File server cluster Scalability", Speedup [Relative to one server] for write and read bandwidth versus # File servers (1, 2, 4, 8).]

Figure 41: File server cluster scalability using a number of file servers and two IOZone clients per server.

Figure 41 shows the scaling using 2, 4, 8 and 16 IOZone clients accessing the same file system, served by the number of file servers shown on the horizontal axis. Both read and write operations show good scaling. It also shows that any given bandwidth requirement (within limits) can be met by adding more file servers.

The two following tables show the performance of each individual file server. This performance is limited by the modest disk-server bandwidth: each file server has only two striped SCSI disks inside. A sustained performance in excess of 40 MBytes/sec is acceptable, even if the performance measured locally on each node is higher (see figure 35). With an external RAID this performance will increase.

Table 33: Individual file server performance serving write requests.
Serving 1 through 32 IOZone clients. The values [MB/sec] are the aggregated numbers divided by the number of file servers.

| # Cluster file servers / # IOZone clients doing write | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| 1 | 54.5 | 78.5 | 74.0 | 62.4 | n.d. | n.d. |
| 2 | 26.4 | 48.2 | 49.8 | 46.0 | 43.1 | n.d. |
| 4 | 12.9 | 25.4 | 47.7 | 47.8 | 46.4 | 47.4 |
| 8 | 6.8 | 13.1 | 25.5 | 45.4 | 45.5 | 43.1 |

Table 34: Individual file server performance serving read requests. Serving 1 through 32 IOZone clients. The values [MB/sec] are the aggregated numbers divided by the number of file servers.

| # Cluster file servers / # IOZone clients doing read | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| 1 | 54.4 | 78.5 | 74.0 | 62.4 | n.d. | n.d. |
| 2 | 26.4 | 48.2 | 49.8 | 45.6 | 43.1 | n.d. |
| 4 | 13.0 | 25.4 | 47.7 | 47.8 | 46.4 | 47.4 |
| 8 | 6.8 | 13.1 | 25.5 | 45.5 | 45.5 | 43.1 |

Reproducibility

All measurements are by their nature subject to random and systematic errors. To assess the reproducibility and deviation associated with the measurements shown above, the same tests were run 10 times. Table 35 shows the results for a range of IOZone client counts, and figure 42 gives a more compact view. The data show that write performance deviates somewhat more than the comparable read operations. Since the deviations are relative, a given absolute variation weighs more heavily on low bandwidth numbers than on high ones: the per-client bandwidth is lower with 32 clients than with 1 client, and the relative deviations are correspondingly higher.

Another issue is that many clients should be served fairly, but not all clients are equal; some are more equal than others. This leads to differences in performance, which show up as deviation in the combined results. Although this is not a random, normally distributed error, it is nevertheless what is observed and should be taken into account. There is no reason to assume that this deviation is normally distributed, so confidence intervals based on these numbers (which assume a normal distribution) should be avoided.
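The relative standard deviation used in Table 35 and figure 42 is the standard deviation of the repeated runs divided by their mean. The Python sketch below computes it and, in line with the advice above to avoid normal-based confidence intervals, also reports a distribution-free summary (minimum, median, maximum). The report does not say whether the sample or the population standard deviation was used; the sketch assumes the sample form (`statistics.stdev`). The function names and the run values are hypothetical illustrations, not data from this report.

```python
"""Relative standard deviation (RSD) for repeated IOZone runs.

Assumption: the sample (n-1) standard deviation is used; the report does
not state which form produced Table 35.
"""
from statistics import mean, median, stdev


def relative_std_dev(runs):
    """Sample standard deviation as a percentage of the mean."""
    if len(runs) < 2:
        raise ValueError("need at least two runs")
    return 100.0 * stdev(runs) / mean(runs)


def distribution_free_summary(runs):
    """Minimum, median and maximum: no normality assumption required."""
    ordered = sorted(runs)
    return {"min": ordered[0], "median": median(ordered), "max": ordered[-1]}


if __name__ == "__main__":
    # Hypothetical bandwidths [MB/sec] from 10 runs; NOT measured data.
    runs = [40.0, 38.0, 42.0, 35.0, 44.0, 39.0, 41.0, 37.0, 43.0, 36.0]
    print(f"RSD: {relative_std_dev(runs):.1f} %")
    print(distribution_free_summary(runs))
```

Reporting the median and range alongside the RSD conveys the spread seen between runs without implying a normal error model.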
Table 35: Measured IOZone bandwidth reproducibility [MB/sec], with the deviation given as the relative standard deviation of 10 consecutive runs for each number of IOZone clients (1 through 32) using 16 compute nodes.

| # IOZone clients | Write | Rewrite | Read | Reread | Random Read | Random Write |
|---|---|---|---|---|---|---|
| 1 | 93.7 ± 1% | 87.2 ± 1% | 52.4 ± 1% | 53.7 ± 1% | 2.6 ± 7% | 86.6 ± 3% |
| 2 | 93.7 ± 1% | 86.3 ± 1% | 51.8 ± 1% | 52.9 ± 1% | 2.6 ± 1% | 62.2 ± 4% |
| 4 | 83.2 ± 7% | 71.0 ± 11% | 49.3 ± 2% | 52.9 ± 1% | 2.4 ± 1% | 34.4 ± 20% |
| 8 | 39.2 ± 11% | 32.2 ± 12% | 45.8 ± 1% | 49.6 ± 1% | 2.0 ± 1% | 33.9 ± 11% |
| 16 | 19.0 ± 12% | 15.6 ± 11% | 23.4 ± 1% | 24.8 ± 1% | 1.2 ± 1% | 30.6 ± 11% |
| 32 | 11.3 ± 13% | 10.1 ± 11% | 10.6 ± 3% | 15.6 ± 2% | 0.5 ± 1% | 37.0 ± 13% |

[Figure 42 plot: "IOZone - File server performance - analysis of deviation", Relative Std. dev. [%] per IOZone test (Write, Rewrite, Read, Reread, Random Read, Random Write).]

Figure 42: Deviation of file server performance for 1 through 32 IOZone clients. The spread is shown as the relative standard deviation in percent.

Appendix A

The IOZone application was used for all the tests, version $Revision: 3.247$. The same RPM package was installed on all nodes tested. The IOZone command used is (unless clearly stated otherwise in the relevant section):

/opt/iozone/bin/iozone -r 64 -s 5g -i 0 -i 1 -i 2 -w -f /temp/iozone.tmp

The options used are:
- -r 64: 64 KB record size
- -s 5g: test file size 5 GBytes
- -i 0: test Write and Rewrite
- -i 1: test Read and Reread
- -i 2: test Random Read and Random Write
- -w: do not unlink the temporary file
- -f file: use file as the test file

Appendix B

Table 36: Dell PowerEdge 1750 Hardware, x8 Vega cluster.
- Node: Dell PowerEdge 1750
- Memory: 2 GBytes RAM
- Processor x 2: Intel(R) Xeon(TM) CPU 2.80GHz, HyperThreading off
- Disk x 2: Fujitsu ULTRA 320 SCSI 36 GB 10 kRPM
- RAID controller: PERC RAID controller (megaraid cmm: 2.20.2.4, Release Date: Thu Dec 9 19:10:23 EST 2004)

Table 37: Dell PowerEdge 1750 Software
- Operating System: RedHat EL4
- Operating System: Suse SLES 9 SP2
- LUSTRE: 1.4.1

Appendix C

Table 38: HP Proliant DL 140 Hardware
- Node: HP Proliant DL 140
- Memory: 1 GByte RAM
- Processor x 2: Intel(R) Xeon(TM) CPU 2.40GHz
- Disk x 2: Maxtor 250GB UDMA/133 7200RPM 8MB

Table 39: HP Proliant DL 140 Software
- Operating System: RedHat EL4
- LUSTRE: 1.4.1

Appendix D

Table 40: Dell PowerEdge 1650 NFS server
- Node: Dell PowerEdge 1650
- Memory: 256 MByte RAM
- Processor x 2: Pentium III (Coppermine) 1 GHz
- Disk: IBM 146GB U320 88 PIN SCSI WIDE 10K RPM

Table 41: Dell PowerEdge 1650 NFS server Software
- Operating System: RedHat ES3

Appendix E

Large cluster setup.

Table 42: Dell PowerEdge 26 node testing cluster.
- Node storage server x 8: Dell PowerEdge 1750
- Node client x 16: Dell PowerEdge 2650
- Node front end: Dell PowerEdge 1550
- Node Metadata server: Dell PowerEdge 650
- Memory storage servers: 2 GBytes RAM
- Memory clients: 2 and 3 GBytes RAM
- Processor x 2 storage servers: Intel(R) Xeon(TM) CPU 2.80GHz, HyperThreading off
- Processor x 2 clients: Intel(R) Xeon(TM) CPU 2.40GHz, HyperThreading off
- Disk x 2 storage servers: Fujitsu ULTRA 320 SCSI 36 GB 10 kRPM
- RAID controller: PERC RAID controller (megaraid cmm: 2.20.2.4, Release Date: Thu Dec 9 19:10:23 EST 2004)

Table 43: Software 26 node testing cluster
- Operating System: RedHat EL4
- Operating System: Suse SLES 9 SP2
- LUSTRE: 1.4.1