Incremental Closure of Variable Splitting Tableaux


Christian Mahesh Hansen, Roger Antonsen, and Arild Waaler

Department of Informatics, University of Oslo

Abstract. Incremental closure is a proof search technique for free variable analytic tableaux where partial solutions are updated along with tableau expansion. In this paper we address possible adaptations of the incremental closure technique to variable splitting tableaux. Variable splitting tableaux provide a sound way to instantiate occurrences of the same variable in different branches differently by imposing additional constraints on closing substitutions. We show how these constraints can be formulated as graph theoretical problems and suggest incremental methods for calculating solutions.

1 Introduction

Traditionally, search strategies for free variable tableaux for first-order logic implement a rule selection strategy triggered by the dominating logical symbols of unexpanded formulas. The free variables constrain this strategy: in order to avoid unnecessary redundancies in the derivations, the application of γ-rules must be postponed as much as possible. As the traditional γ-rules for free variable tableaux select a fresh free variable for each application, one will typically opt for a β-expansion before a γ-expansion. This application order creates one separate copy of the γ-formula in each branch, each of which generates a unique free variable. If, on the other hand, we select the γ-formula for expansion before the β-formula, the γ-expansion generates a free variable that subsequently occurs in both premisses of the β-inference, thus creating an unnecessary dependency in the derivation.

However, constraining expansion order in this way will in most situations be an obstacle to the implementation of goal-directed search procedures (typically driven by connections). Variable splitting is a technique that eliminates the need for constraints on expansion order without increasing the size of derivations. The splitting technique provides us with full flexibility with respect to expansion order, and thus supports the formulation of goal-directed search strategies.

Another kind of redundancy that pertains to traditional free variable tableaux is caused by iterative deepening: if a derivation D cannot be closed, one will typically expand D further, up to a limit, say to D′, and then try to close D′. The problem with this approach is that the partial solutions found for D are not reused in the search for a closure of D′. In general, a significant amount of computation must then be repeated. To remedy this, Giese proposed the incremental closure technique [4–6]. Trading space for time, the idea is to keep part of the derivation in memory along with the solutions of all its subderivations. When, say, D is extended by one new expansion to D1, two operations are performed.

– The connections in the new leaves of D1 that are not already identified as connections in D give rise to new equations. These equations are solved.
– The new solutions sink toward the root in the proof construction in an attempt to close as many subderivations of D1 as possible along the way.

The crucial operation is to merge solutions for two distinct subderivations joined by a single β-inference. If a new solution reaches the root, D1 is closed, and the procedure terminates with success.

The method relies on a monotonicity property: any solution for a subderivation of D is also a solution for the corresponding subderivation of D1. The procedure utilizes in an essential way that each γ-expansion introduces a fresh free variable.

This paper reports work in progress that attempts to adapt the incremental closure technique to a system with variable splitting. We suggest two different approaches to this problem. To present the main underlying ideas, we first need to say a few words about the splitting calculus.

The idea behind the splitting system is to identify conditions under which two occurrences of the same variable can be taken as independent, and hence treated as distinct variables. Technically, variables that occur in the leaf of a branch are labelled with the name of the branch; the branch is said to color the variables that occur in its leaf. The admissibility condition for proofs is formulated in terms of a relation on the nodes of the formula trees, called the reduction ordering. A solution is admissible if the associated reduction ordering is acyclic. For a given set of equations, there will in general be more than one admissible solution.

To incrementally compute solutions for a derivation in the splitting system, it is hence not sufficient just to solve equations as we do for free variable tableaux without splitting. We must also compute the associated reduction orderings, since it is this relation that conveys information about which occurrences of a given variable are distinct and which are identical. In this paper we shall mainly focus on the problem of characterizing such solutions for all subderivations of a given derivation, starting from the leaf sequents and proceeding downwards toward the root. This includes the merging of solutions, which is one of the core operations of incremental closure.

We shall propose two distinct approaches to this problem. One approach is, at each stage, to compute all the maximal admissible reduction orderings and use each of these to generate equations that identify variables. These identifying equations are then added to the equation sets. The main difficulty lies in the merging operation: when two reduction orderings are merged, a simple union of them may be cyclic. Should this be the case, we have to compute its maximal acyclic subrelations and proceed with each of them separately. As a side effect, new identifying equations will in general be generated for each maximal subrelation. Since we assume that differently colored variables are distinct unless explicitly identified in the process of merging, this approach is called identifying by need.

We also address a complementary approach, splitting by need, which is reminiscent of an idea first proposed by Bibel [3]. In this approach we try to solve the initial equation set. If this fails, we use failure information to increase the reduction ordering. The idea is hence to start with an empty reduction ordering and gradually increase the reduction ordering when needed. This will, if successful, produce a solution with a minimal reduction ordering. While merging of solutions is now straightforward, the problem with this approach is to efficiently extract splitting information from failed attempts to solve equations, and to integrate this information without unnecessary backtracking.

The splitting system is introduced in Sect. 2. The material in that section is mostly taken from [1]. In Sect. 3 we characterize splitting proofs from the perspective of equation solving. This is the basis for the incremental closure proposals addressed in the rest of the paper. Identification by need is addressed in Sect. 4, while splitting by need is addressed in Sect. 5. The emphasis is put on concepts and examples. In Sect. 6 we summarize the main contributions of the paper and formulate the main problems that we currently address.

2 Variable Splitting Tableaux

Variable splitting is a technique applicable to free variable tableaux, sequent calculi, and matrix characterizations that exploits a relationship between β- and γ-rules. Using contextual information to differentiate between occurrences of the same free variable in different branches, the technique admits conditions under which these occurrences may safely be assigned different values by substitutions. We will here present only the basic terminology for variable splitting. The reader is referred to [1] for more details.

A first-order language is defined in the standard way from a nonempty set of relation symbols and a set of function symbols. Formulas are defined from terms and relation symbols by means of the logical symbols ¬, ∧, ∨, →, ∀ and ∃, and terms are defined from quantification variables and function symbols. A term is ground if it contains no variables, and a formula is closed if every variable occurrence is bound by a quantifier. A signed formula is a formula with a polarity, ⊤ or ⊥.

We adopt an indexing system for formulas similar to that used by Wallen [8]

and Otten and Kreitz [7], which is spelled out in detail in [1]. Think of an indexed

formula tree as a representation of a signed formula as a tree, where all copies

of γ-formulas are made explicit and all nodes are marked with an index (for

the purpose of this presentation, indices can be taken to be natural numbers).

If a formula ϕ has polarity P and index i, we write ϕPi for the corresponding

indexed formula. Furthermore, all variables in atomic indexed formulas are replaced either with the index of a γ-formula or the Skolem term corresponding

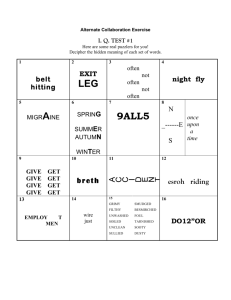

to a δ-formula. For example, the indexed formula tree for the signed formula

(¬∃xP x ∨ ∃yQy)⊥ is given in Fig. 1 (where only three copies of the γ-formula

are displayed). For each index there is a unique indexed formula and vice versa.

For example, index 7 in Fig. 1 corresponds to the indexed formula (∃yQy)⊥

7.

The index ordering ≪ is a partial ordering on indices, defined as the transitive closure of $\ll_1$, where $i \ll_1 j$ holds if $\psi_j$ is an immediate subformula or a copy of $\varphi_i$. Intuitively, the indexed formulas occurring in the root goal of a derivation always have the least indices with respect to the index ordering.
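To make the index ordering concrete, here is a minimal Python sketch that computes ≪ as the transitive closure of $\ll_1$ for the tree of Fig. 1. It assumes that each copy of the γ-formula hangs off the formula it copies (5 ≪₁ 7 ≪₁ 9); the names `IMMEDIATE`, `transitive_closure` and `INDEX_ORDER` are illustrative, not taken from the paper.

```python
from itertools import product

# ≪₁ for Fig. 1 as pairs (i, j) meaning i ≪₁ j (assumption: copies are 5 ≪₁ 7 ≪₁ 9).
IMMEDIATE = {(1, 2), (1, 5), (2, 3), (3, 4), (5, 6), (5, 7), (7, 8), (7, 9), (9, 10)}


def transitive_closure(pairs):
    """Return the least transitive relation containing the given pairs."""
    closure = set(pairs)
    while True:
        new = {(a, d) for (a, b), (c, d) in product(closure, repeat=2) if b == c}
        if new <= closure:
            return closure
        closure |= new


INDEX_ORDER = transitive_closure(IMMEDIATE)  # the index ordering ≪
print((1, 10) in INDEX_ORDER, (2, 5) in INDEX_ORDER)  # True False
```

The root index 1 ends up below every other index, while indices in different subtrees, such as 2 and 5, stay unrelated.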

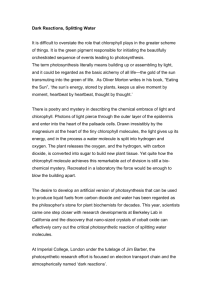

A nonatomic indexed formula $\varphi_i$ has a type (α, β, γ or δ) determined by its polarity and outermost connective; see the leftmost columns in Fig. 2. The indexed formulas β1 and β2 of a β-formula have the secondary type β′. The type symbols are frequently used as metavariables denoting any indexed formula of that type. In the case of γ- and δ-formulas, $\varphi[x/t]$ denotes the indexed formula ϕ where all free occurrences of x have been replaced with t. The index i of γ is called an instantiation variable, and the indexed formula γ′ is called a copy of γ. For simplicity, the letters u, v, w are used for instantiation variables. Thus, if γ has index u, its instance is denoted γ1(u). The symbol $f_i$ is called a Skolem function symbol, and $\vec{u}$ consists of exactly the instantiation variables in δ. The set of instantiation terms is the least set that contains the instantiation variables and is closed under function and Skolem function symbols. An instantiation term is ground if it does not contain any instantiation variables. From now on, “formula” means “indexed formula”, “variable” means “instantiation variable” and “term” means “instantiation term”.

A set of formulas is called a goal. If all formulas in a goal are closed, it is called a root goal. In examples, goals are displayed in the sequent notation Γ ⊢ Δ, where Γ is the set of formulas with polarity ⊤ and Δ is the set of formulas with polarity ⊥. Selected indices are displayed below the root sequents.

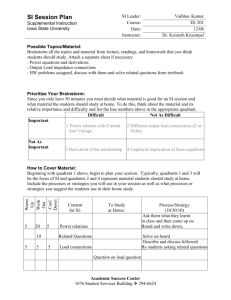

Definition 1. Let Γ be a root goal. A derivation of Γ is a finite tree, with root node Γ, obtained by iteratively applying the derivation rules given in Figure 3. The rules should be read bottom-up; for example, if Γ, α is the leaf goal of a derivation, then Γ, α1, α2 is a new goal added above Γ, α. The formulas α, β, γ, and δ below the horizontal line are said to be expanded in the derivations.

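[Figure: the root ∨⊥ (index 1) has the subformulas ¬⊥ (2) and ∃y⊥ (5); ¬⊥ (2) leads to ∃x⊤ (3) and $Pf_3^{\top}$ (4); the γ-formula ∃y⊥ (5) has the instance Q5⊥ (6) and the copy ∃y⊥ (7), which in turn has the instance Q7⊥ (8) and the copy ∃y⊥ (9) with instance Q9⊥ (10).]

Fig. 1. Indexed formula tree.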

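$$
\begin{array}{lll}
\alpha & \alpha_1 & \alpha_2\\ \hline
(\varphi\land\psi)^{\top} & \varphi^{\top} & \psi^{\top}\\
(\varphi\lor\psi)^{\bot} & \varphi^{\bot} & \psi^{\bot}\\
(\varphi\to\psi)^{\bot} & \varphi^{\top} & \psi^{\bot}\\
(\neg\varphi)^{\top} & \varphi^{\bot} & \varphi^{\bot}\\
(\neg\varphi)^{\bot} & \varphi^{\top} & \varphi^{\top}
\end{array}
\qquad
\begin{array}{lll}
\beta & \beta_1 & \beta_2\\ \hline
(\varphi\land\psi)^{\bot} & \varphi^{\bot} & \psi^{\bot}\\
(\varphi\lor\psi)^{\top} & \varphi^{\top} & \psi^{\top}\\
(\varphi\to\psi)^{\top} & \varphi^{\bot} & \psi^{\top}
\end{array}
$$

$$
\begin{array}{lll}
\gamma & \gamma' & \gamma_1(i)\\ \hline
(\forall x\varphi)^{\top}_{i} & (\forall x\varphi)^{\top}_{i'} & \varphi[x/i]^{\top}\\
(\exists x\varphi)^{\bot}_{i} & (\exists x\varphi)^{\bot}_{i'} & \varphi[x/i]^{\bot}
\end{array}
\qquad
\begin{array}{ll}
\delta & \delta_1(f_i(\vec{u}))\\ \hline
(\forall x\varphi)^{\bot}_{i} & \varphi[x/f_i(\vec{u})]^{\bot}\\
(\exists x\varphi)^{\top}_{i} & \varphi[x/f_i(\vec{u})]^{\top}
\end{array}
$$

Fig. 2. Types and notation for indexed formulas.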

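$$
\frac{\Gamma, \alpha_1, \alpha_2}{\Gamma, \alpha}
\qquad
\frac{\Gamma, \beta_1 \qquad \Gamma, \beta_2}{\Gamma, \beta}
\qquad
\frac{\Gamma, \gamma', \gamma_1(u)}{\Gamma, \gamma}
\qquad
\frac{\Gamma, \delta_1(f_i(\vec{u}))}{\Gamma, \delta}
$$

Fig. 3. Derivation rules. The variable u is the index of γ, i is the index of δ and $\vec{u}$ consists of all the variables in δ.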

For each branch of a derivation there is a set Φ of all the formulas contained in it, and we use the set of indices of all β′-formulas in Φ as a name of the branch. In the rest of this article, a branch refers to a set of β′-indices obtained in this way. For example, if (ϕ ∨ ψ)⊤ is expanded in a derivation, one branch contains the index of ϕ and the other contains the index of ψ. If D is a derivation and θ is a type or a secondary type, θ(D) denotes the set of indices of expanded θ-formulas in D. Hence, γ(D) is the set of all variables that occur in a derivation D.

If s is the greatest lower bound of $t_1$ and $t_2$ with respect to ≪, that is, $s \ll \{t_1, t_2\}$ or $s = t_1 = t_2$, and there is no s′ such that $s \ll s'$ and $s' \ll \{t_1, t_2\}$, then s is called the meet of $t_1$ and $t_2$, written $t_1 \sqcap t_2$. If $t_1$ and $t_2$ are neither equal nor ≪-related, that is, $t_1 \not\ll t_2$ and $t_2 \not\ll t_1$, and $t_1 \sqcap t_2$ is of type β, then $t_1$ and $t_2$ are called β-related, written $t_1 \vartriangle t_2$. If s is immediately below $t_1$ and $t_2$, that is, $s \ll_1 \{t_1, t_2\}$, and $t_1$ and $t_2$ are different, then $t_1$ and $t_2$ are called dual and s is denoted $(t_1 \Delta t_2)$. (For example, in Fig. 1, we have that 4 is β-related to all indices from 5 to 10, but that only 2 and 5 are dual.) A set of indices is called dual-free if it does not contain dual indices.
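The meet and duality notions can be sketched on the tree of Fig. 1, stored as a parent map. This is an illustrative sketch that again assumes copies are children of the γ-formula they copy; `PARENT`, `ancestors`, `meet` and `dual` are hypothetical names, and for ≪-comparable indices `meet` simply returns the lower one.

```python
# Parent map for Fig. 1 (assumption: the copies 7 and 9 hang off 5 and 7).
PARENT = {2: 1, 5: 1, 3: 2, 4: 3, 6: 5, 7: 5, 8: 7, 9: 7, 10: 9}


def ancestors(i):
    """Return [i, parent(i), ..., root], i.e. i followed by every s with s ≪ i."""
    chain = [i]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def meet(t1, t2):
    """Greatest common lower bound t1 ⊓ t2 with respect to ≪."""
    below_t2 = set(ancestors(t2))
    # the first common element on t1's path toward the root is the greatest one
    return next(s for s in ancestors(t1) if s in below_t2)


def dual(t1, t2):
    """t1 and t2 are dual if they are distinct and share an immediate predecessor."""
    parent = PARENT.get(t1)
    return t1 != t2 and parent is not None and parent == PARENT.get(t2)


print(meet(4, 6), meet(8, 10))  # 1 7
print(dual(2, 5), dual(4, 6))   # True False
```

Whether two indices are β-related then only needs the type of `meet(t1, t2)`, which a fuller encoding would store alongside the parent map.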

A splitting set for a derivation D is a dual-free subset S of β′(D). In particular, a branch is a splitting set. In examples, splitting sets are written as sequences of natural numbers; since we never display more than ten indices, this does not cause any ambiguity.

Let D be a derivation. A colored variable for D is a variable u together with a splitting set S for D, written $u^S$. If ϕ is a formula in the leaf goal of a branch B, then $\hat{\varphi}$ denotes the colored formula where all variables u have been replaced by $u^B$. The coloring operator $\widehat{(\cdot)}$ is extended to goals and sets of goals in the obvious way. We will write the colored variable $u^{\emptyset}$ as u.

A substitution is a partial function σ from the set of colored variables for D to the set of ground terms. The application of σ to an argument u is written in postfix notation as uσ. Substitutions are extended to terms and indexed formulas in the standard way. A substitution σ closes a colored leaf goal $\hat{\Gamma}$ if there are formulas ϕ and ψ in $\hat{\Gamma}$ with opposite polarities such that ϕσ = ψσ (up to indices and polarities), and it is closing for D if it closes every colored leaf goal of D.

Obviously, not all closing substitutions are admissible. In order to characterize admissibility, we define a reduction ordering on β-indices by means of two auxiliary relations on indices. Let D be a derivation and σ a substitution.
– A splitting relation is a binary relation ⊏ from β(D) to γ(D). It is called a σ-ordering if $u^S\sigma \neq u^T\sigma$ implies that there are elements s ∈ S and t ∈ T such that $(s \Delta t) \sqsubset u$.
– If a variable u occurs in both β1 and β2, for a formula β, then u is called critical for β, written $u \lessdot \beta$.

Definition 2. The reduction ordering induced from a splitting relation ⊏ is a relation ◁ on β(D) such that $a \lhd b$ holds if either $a \ll b$ or there is a γ-index u such that $a \sqsubset u$ and $u \lessdot b$. If the transitive closure of ◁ is irreflexive, then ⊏ is called admissible. We say that σ is admissible if there is an admissible σ-ordering.
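Admissibility thus amounts to a cycle check on ◁. The sketch below builds ◁ for the derivation D1 of Fig. 4 (Sect. 3) from a splitting relation and tests acyclicity by repeatedly removing β-indices without incoming edges. It encodes our reading of the examples as assumptions: β-indices are named after their dual pairs, $u \lessdot (1\Delta 2)$, $v \lessdot (3\Delta 4)$, $\sqsubset_2 = \{(3\Delta 4) \sqsubset u\}$ and $\sqsubset_3 = \{(1\Delta 2) \sqsubset v\}$. All identifiers are hypothetical.

```python
from itertools import product

BETAS = {"1Δ2", "3Δ4"}                   # β(D1), named after their dual β′-indices
INDEX_ORDER = set()                       # the two β-formulas of D1 are ≪-unrelated
CRITICAL = {("u", "1Δ2"), ("v", "3Δ4")}  # u ⋖ (1Δ2) and v ⋖ (3Δ4)


def reduction_ordering(splitting):
    """a ◁ b iff a ≪ b, or a ⊏ u and u ⋖ b for some variable u."""
    order = {(a, b) for (a, b) in INDEX_ORDER if a in BETAS and b in BETAS}
    order |= {(a, b) for (a, u), (w, b) in product(splitting, CRITICAL) if u == w}
    return order


def is_admissible(splitting):
    """Admissible iff ◁ is acyclic (its transitive closure is irreflexive)."""
    edges = reduction_ordering(splitting)
    nodes = set(BETAS)
    while True:  # strip sources; anything that cannot be stripped lies on a cycle
        sources = {n for n in nodes if not any(a in nodes and b == n for a, b in edges)}
        if not sources:
            return not nodes
        nodes -= sources


SPLIT_2 = {("3Δ4", "u")}  # (3Δ4) ⊏ u
SPLIT_3 = {("1Δ2", "v")}  # (1Δ2) ⊏ v
SPLIT_4 = SPLIT_2 | SPLIT_3
print(is_admissible(SPLIT_2), is_admissible(SPLIT_3), is_admissible(SPLIT_4))
# True True False
```

In $\sqsubset_4$ the two β-indices precede each other, so ◁ has a cycle; a pair such as $(1\Delta 2) \sqsubset u$ with $u \lessdot (1\Delta 2)$ would even give a self-loop.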

Definition 3. Let D be a derivation of Γ and σ be a substitution that is closing and admissible for D. Then $\langle D, \sigma\rangle$ is called a refutation of Γ. When we display the goals in the sequent notation, we call $\langle D, \sigma\rangle$ a proof.

3 Solving equations

Definition 4. The set of colored terms is the set of terms constructed from colored variables, function and Skolem function symbols. An equation is a pair $\langle t_1, t_2\rangle$, written $t_1 \approx t_2$, where $t_1$ and $t_2$ are colored terms. An identity set I is a set of equations of the form $u^S \approx u^T$ where $u^S$ and $u^T$ are colored variables. A substitution σ solves $t_1 \approx t_2$ if $t_1\sigma = t_2\sigma$, and σ satisfies a set of equations E if σ solves every equation in E. Two colored terms t and t′ are unifiable if the set {t ≈ t′} is satisfiable.
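Satisfiability of an equation set over colored terms can be decided by syntactic unification, treating $u^S$ and $u^T$ as different variables whenever S ≠ T. Below is a minimal Robinson-style sketch with an occur check; the `CVar` class and the encoding of non-variable terms as tuples `(symbol, arg, ...)` are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CVar:
    """A colored variable u^S (hypothetical encoding: name plus splitting set)."""
    name: str
    color: frozenset = frozenset()


def walk(t, subst):
    """Follow bindings until an unbound variable or a non-variable term is reached."""
    while isinstance(t, CVar) and t in subst:
        t = subst[t]
    return t


def occurs(x, t, subst):
    """Does the variable x occur in t under the current bindings?"""
    t = walk(t, subst)
    if t == x:
        return True
    return not isinstance(t, CVar) and any(occurs(x, a, subst) for a in t[1:])


def unify_all(equations):
    """Return a most general unifier (a dict) for the equations, or None."""
    subst, todo = {}, list(equations)
    while todo:
        s, t = todo.pop()
        s, t = walk(s, subst), walk(t, subst)
        if s == t:
            continue
        if isinstance(t, CVar):
            s, t = t, s  # make sure a variable, if any, is on the left
        if isinstance(s, CVar):
            if occurs(s, t, subst):
                return None  # occur check failure
            subst[s] = t
        elif s[0] != t[0] or len(s) != len(t):
            return None  # symbol clash
        else:
            todo.extend(zip(s[1:], t[1:]))
    return subst


u1, u2 = CVar("u", frozenset({1})), CVar("u", frozenset({2}))
v1, v2 = CVar("v", frozenset({1})), CVar("v", frozenset({2}))
E = [(u1, ("f", v1)), (v2, ("g", u2))]
print(unify_all(E) is not None)                     # True
print(unify_all(E + [(u1, u2), (v1, v2)]) is None)  # True: occur check failure
```

Differently colored occurrences of u and v are independent, so the first set is satisfiable; once they are identified, the occur check fails.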

The extension of a splitting set S with a β′-index i is written S + i. We extend the +-operator to terms, equations and sets of equations in the obvious way, e.g. $\{v^{3} \approx a, v^{4} \approx b\} + 1 = \{v^{13} \approx a, v^{14} \approx b\}$.
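The +-operator only rewrites the colors of variables. A small sketch, assuming constants are strings, compound terms are tuples `(symbol, arg, ...)` and `CVar` is a hypothetical colored-variable class:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CVar:
    """A colored variable u^S (hypothetical encoding)."""
    name: str
    color: frozenset = frozenset()


def plus_term(t, i):
    """t + i: add the β′-index i to the color of every variable in t."""
    if isinstance(t, CVar):
        return CVar(t.name, t.color | {i})
    if isinstance(t, str):  # constants are plain strings
        return t
    symbol, *args = t       # compound terms are tuples (symbol, arg, ...)
    return (symbol, *(plus_term(a, i) for a in args))


def plus(equations, i):
    """E + i for a set of equations given as pairs (lhs, rhs)."""
    return {(plus_term(lhs, i), plus_term(rhs, i)) for (lhs, rhs) in equations}


E = {(CVar("v", frozenset({3})), "a"), (CVar("v", frozenset({4})), "b")}
print(plus(E, 1) == {(CVar("v", frozenset({1, 3})), "a"),
                     (CVar("v", frozenset({1, 4})), "b")})  # True
```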

Definition 5. Let D be a derivation, and let Γ be a goal in D. The restriction of D to Γ, written D|Γ, is the subtree of D with root Γ. A connection is a subset ϕ ⊢ ψ of a leaf goal such that ϕ and ψ are atomic formulas with identical predicate symbols. A set of connections is spanning for D|Γ if it contains exactly one connection from each leaf goal of D|Γ.

Remark. Note that the subtree obtained when restricting a derivation is not necessarily a derivation. The root node of such a subtree might contain formulas which are not closed.

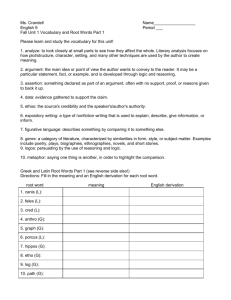

Example 1. The restrictions of D1 in Fig. 4 to Γ1 and Γ2, respectively, are the following trees.

$$
D_1|_{\Gamma_1}:\ \dfrac{Pua \vdash Pav \qquad Pua \vdash Pbv}{Pua \vdash Pav \land Pbv}
\qquad\qquad
D_1|_{\Gamma_2}:\ \dfrac{Pub \vdash Pav \qquad Pub \vdash Pbv}{Pub \vdash Pav \land Pbv}
$$

Each leaf goal of D1 contains a connection, e.g. L1 contains Pua ⊢ Pav, and L2 contains Pua ⊢ Pbv.

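$$
\dfrac{\dfrac{\dfrac{\dfrac{L_1: Pua \vdash Pav \qquad L_2: Pua \vdash Pbv}{\Gamma_1: Pua \vdash Pav \land Pbv} \qquad \dfrac{L_3: Pub \vdash Pav \qquad L_4: Pub \vdash Pbv}{\Gamma_2: Pub \vdash Pav \land Pbv}}{Pua \lor Pub \vdash Pav \land Pbv}}{Pua \lor Pub \vdash \exists x(Pax \land Pbx)}}{\forall x(Pxa \lor Pxb) \vdash \exists x(Pax \land Pbx)}
$$

Selected indices below the root sequent: u, 1, 2, v, 3, 4.

Fig. 4. A derivation D1. Some goals are labelled in order to refer to them in the text.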

Definition 6. Let Γ be a goal in a derivation D. A splitting relation for D|Γ is a splitting relation for D that is restricted to elements in β(D|Γ).

Example 2. Let D1 be the derivation in Fig. 4. Fig. 5 shows four splitting relations. When we represent a splitting relation ⊏ as a graph, we draw $b \sqsubset u$ as an edge with u placed above b. The relations $\sqsubset_1$, $\sqsubset_2$ and $\sqsubset_3$ are admissible, but $\sqsubset_4$ is not.

Definition 7. Let Γ be a goal in a derivation D, and let ⊏ be a splitting relation for D|Γ. We say that two colored variables $u^S$ and $u^T$ are identified by ⊏ if there are no two β′-indices i ∈ S and j ∈ T such that $(i \Delta j) \sqsubset u$. If E is an equation set, then the identity set for E (with respect to ⊏) is the least I such that if $u^S$ and $u^T$ occur in E and are identified by ⊏, then either $u^S \approx u^T \in I$ or $u^T \approx u^S \in I$.

Example 3. Let $\sqsubset_1$ be the splitting relation from Fig. 5. The colored variables $v^{3}$ and $v^{4}$ are identified by $\sqsubset_1$, but $u^{3}$ and $u^{4}$ are not, since $(3 \Delta 4) \sqsubset_1 u$. The set $\{v^{3} \approx v^{4}\}$ is therefore an identity set for the equation set $\{u^{3} \approx a, u^{4} \approx b, v^{3} \approx a, v^{4} \approx a\}$.
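Definition 7 and Example 3 can be checked mechanically. The sketch assumes that the dual β′-indices of D1 (Fig. 4) are known and that a splitting relation is a set of pairs `(b, u)` meaning $b \sqsubset u$; `DUAL`, `identified` and `identity_set` are illustrative names only.

```python
from itertools import product

# Dual β′-indices of D1 (Fig. 4), mapped to the β-index (iΔj) they split.
DUAL = {frozenset({1, 2}): "1Δ2", frozenset({3, 4}): "3Δ4"}


def identified(S, T, u, splitting):
    """u^S and u^T are identified unless some i ∈ S, j ∈ T have (iΔj) ⊏ u."""
    for i, j in product(S, T):
        beta = DUAL.get(frozenset({i, j}))
        if beta is not None and (beta, u) in splitting:
            return False
    return True


def identity_set(colored_vars, splitting):
    """Identity set of Def. 7: one unordered equation u^S ≈ u^T per identified pair."""
    return {frozenset({(u, S), (w, T)})
            for (u, S), (w, T) in product(colored_vars, repeat=2)
            if u == w and S != T and identified(S, T, u, splitting)}


# Example 3: the colored variables of {u^3 ≈ a, u^4 ≈ b, v^3 ≈ a, v^4 ≈ a} and ⊏1.
VARS = {(x, frozenset({k})) for x in "uv" for k in (3, 4)}
SPLIT_1 = {("3Δ4", "u")}
print(identity_set(VARS, SPLIT_1) ==
      {frozenset({("v", frozenset({3})), ("v", frozenset({4}))})})  # True
```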

[Figure: graphs of $\sqsubset_1$ for D1|Γ1 (over u, v and (3Δ4)) and of $\sqsubset_2$, $\sqsubset_3$ and $\sqsubset_4$ for D1 (over u, v, (1Δ2) and (3Δ4)).]

Fig. 5. Four splitting relations for the derivation in Fig. 4.

Definition 8. Let D be a derivation, and let Γ be a goal in D. A solution ς for D|Γ is a triple ⟨E, I, ⊏⟩ where E is a set of equations, I is an identity set for E (with respect to ⊏), and ⊏ is a splitting relation for D|Γ such that
– E corresponds to a spanning set of connections for D|Γ, and
– E ∪ I is satisfiable, and
– ⊏ is admissible.

Example 4. Let D be the derivation in Fig. 4, and let $\sqsubset_1$ be the splitting relation from Fig. 5. The following is a solution for D|Γ1:

$$\langle \{u^{3} \approx a, u^{4} \approx b, v^{3} \approx a, v^{4} \approx a\}, \{v^{3} \approx v^{4}\}, \sqsubset_1 \rangle$$

Definition 9. Let ⊏ be a splitting relation, and let E and E′ be sets of equations. The set $\mathrm{Id}_{\sqsubset}(E, E')$ is the smallest set containing all equations $u^S \approx u^T$ such that $u^S$ occurs in E, $u^T$ occurs in E′, and $u^S$ and $u^T$ are identified by ⊏.
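$\mathrm{Id}_{\sqsubset}(E, E')$ only compares variables across the two equation sets. A sketch under a hypothetical encoding (colored variables as `(name, splitting set)` pairs, splitting relations as sets of `(b, u)`), applied to the variables of $E_j + 1$ and $E_k + 2$ from the D1 merge in Sect. 4 and to $\sqsubset_3 = \{(1\Delta 2) \sqsubset v\}$, which is our reading of Fig. 5:

```python
from itertools import product

# Dual β′-indices of D1 (Fig. 4), mapped to the β-index (iΔj) they split.
DUAL = {frozenset({1, 2}): "1Δ2", frozenset({3, 4}): "3Δ4"}


def identified(S, T, u, splitting):
    """u^S and u^T are identified unless some i ∈ S, j ∈ T have (iΔj) ⊏ u."""
    for i, j in product(S, T):
        beta = DUAL.get(frozenset({i, j}))
        if beta is not None and (beta, u) in splitting:
            return False
    return True


def id_between(vars_e, vars_f, splitting):
    """Id⊏(E, E′): equations u^S ≈ u^T with u^S from E and u^T from E′."""
    return {((u, S), (w, T))
            for (u, S), (w, T) in product(vars_e, vars_f)
            if u == w and identified(S, T, u, splitting)}


LEFT = {(x, frozenset({1, k})) for x in "uv" for k in (3, 4)}   # variables of Ej + 1
RIGHT = {(x, frozenset({2, k})) for x in "uv" for k in (3, 4)}  # variables of Ek + 2
SPLIT_3 = {("1Δ2", "v")}
IDS = id_between(LEFT, RIGHT, SPLIT_3)
print(len(IDS), {eq[0][0] for eq in IDS})  # 4 {'u'}
```

Since v is split at (1Δ2), only the four cross identities for u remain.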

4 Identifying by need

Definition 10. Let Γ be a goal in a derivation D, and let ⊏ be a splitting relation for D|Γ which is not admissible. A maximal subset ⊏′ of ⊏ such that ⊏′ is admissible is called a cycle reduction of ⊏. We denote by CycleRed(⊏) the set of all cycle reductions of ⊏. If ⊏ is admissible, then CycleRed(⊏) = {⊏}.

Example 5. Let $\sqsubset_2$, $\sqsubset_3$ and $\sqsubset_4$ be the splitting relations from Fig. 5. Then $\mathrm{CycleRed}(\sqsubset_4) = \{\sqsubset_2, \sqsubset_3\}$.
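For small relations, CycleRed can be computed by brute force: enumerate the subsets of ⊏, keep the admissible ones and discard those strictly contained in another admissible subset (maximality is with respect to inclusion, so cycle reductions may differ in size). The sketch below is exponential and only meant to illustrate Example 5; it hard-codes the data of D1 under our reading of the examples ($u \lessdot (1\Delta 2)$, $v \lessdot (3\Delta 4)$), and all names are hypothetical.

```python
from itertools import combinations, product

BETAS = {"1Δ2", "3Δ4"}
CRITICAL = {("u", "1Δ2"), ("v", "3Δ4")}  # u ⋖ (1Δ2) and v ⋖ (3Δ4) in D1
INDEX_ORDER = set()                       # the β-indices of D1 are ≪-unrelated


def is_admissible(splitting):
    """True iff the reduction ordering ◁ induced by the splitting relation is acyclic."""
    edges = set(INDEX_ORDER)
    edges |= {(a, b) for (a, u), (w, b) in product(splitting, CRITICAL) if u == w}
    nodes = set(BETAS)
    while True:  # strip sources; whatever cannot be stripped lies on a cycle
        sources = {n for n in nodes if not any(a in nodes and b == n for a, b in edges)}
        if not sources:
            return not nodes
        nodes -= sources


def cycle_reductions(splitting):
    """CycleRed(⊏): the inclusion-maximal admissible subsets of ⊏ (brute force)."""
    splitting = frozenset(splitting)
    admissible = [frozenset(c) for k in range(len(splitting) + 1)
                  for c in combinations(splitting, k) if is_admissible(c)]
    return {s for s in admissible if not any(s < t for t in admissible)}


SPLIT_4 = {("3Δ4", "u"), ("1Δ2", "v")}
print(cycle_reductions(SPLIT_4) == {frozenset({("3Δ4", "u")}), frozenset({("1Δ2", "v")})})
# True
```

Because admissibility is preserved under taking subsets, an efficient algorithm could search downward from ⊏ instead; designing one is exactly the open problem mentioned in Sect. 6.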

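Definition 11. Let D be a derivation and let

$$\dfrac{\Gamma_j \qquad \Gamma_k}{\Gamma}$$

be a β-inference in D where $(j \Delta k) \in \Gamma$ is the expanded formula. Let $\varsigma_j = \langle E_j, I_j, \sqsubset_j\rangle$ and $\varsigma_k = \langle E_k, I_k, \sqsubset_k\rangle$ be solutions for $D|_{\Gamma_j}$ and $D|_{\Gamma_k}$, respectively. The merging of $\sqsubset_j$ and $\sqsubset_k$, written $\sqsubset_j \otimes \sqsubset_k$, is defined as CycleRed(⊏′), where $b \sqsubset' u$ if and only if
– $b \sqsubset_j u$ and $b \sqsubset_k u$, or
– $b \sqsubset_j u$ and b is not expanded in $D|_{\Gamma_k}$, i.e. $b \notin \beta(D|_{\Gamma_k})$, or
– $b \sqsubset_k u$ and b is not expanded in $D|_{\Gamma_j}$, i.e. $b \notin \beta(D|_{\Gamma_j})$, or
– $b = (j \Delta k)$, and there is a colored variable $u^S$ in $E_j$ or $E_k$ such that u is not critical for $(j \Delta k)$.
The merging of $\varsigma_j$ and $\varsigma_k$, written $\varsigma_j \otimes \varsigma_k$, is the set of solutions ⟨E, I, ⊏⟩ for D|Γ such that
– $\sqsubset \in (\sqsubset_j \otimes \sqsubset_k)$, and
– $E = (E_j + j) \cup (E_k + k)$, and
– $I = (I_j + j) \cup (I_k + k) \cup \mathrm{Id}_{\sqsubset}(E_j + j, E_k + k)$.
We extend the ⊗-operator to sets of solutions $S_1$ and $S_2$ as follows.

$$S_1 \otimes S_2 = \bigcup_{\varsigma_1 \in S_1,\ \varsigma_2 \in S_2} \varsigma_1 \otimes \varsigma_2$$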
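The construction of ⊏′ in Def. 11 is a handful of set operations. The sketch below leaves out the cycle reduction step; splitting relations are sets of pairs `(b, u)`, critical pairs are `(u, b)`, and the function name and parameters are hypothetical. The usage lines check the merges of D2 and D1 discussed next, under our reading of Fig. 5.

```python
def merge_relation(rel_j, rel_k, betas_j, betas_k, beta, variables, critical):
    """Build the relation ⊏′ of Def. 11 (before cycle reduction).

    rel_j, rel_k: splitting relations of the premisses, as sets of pairs (b, u)
    betas_j, betas_k: the β-indices expanded in D|Γj and D|Γk
    beta: the index (jΔk) of the β-formula expanded in the inference
    variables: the variables u with a colored occurrence in Ej or Ek
    critical: pairs (u, b) such that u is critical for b
    """
    merged = rel_j & rel_k                                      # in both premisses
    merged |= {(b, u) for (b, u) in rel_j if b not in betas_k}  # b not expanded right
    merged |= {(b, u) for (b, u) in rel_k if b not in betas_j}  # b not expanded left
    merged |= {(beta, u) for u in variables if (u, beta) not in critical}
    return merged


# D2 (Fig. 6): empty premiss relations, and u is not critical for (1Δ2).
print(merge_relation(set(), set(), set(), set(), "1Δ2", {"u"}, set()))
# {('1Δ2', 'u')}

# D1 (Fig. 4): both premisses carry ⊏1 = {(3Δ4) ⊏ u}.
SPLIT_1 = {("3Δ4", "u")}
CRITICAL_D1 = {("u", "1Δ2"), ("v", "3Δ4")}
MERGED = merge_relation(SPLIT_1, SPLIT_1, {"3Δ4"}, {"3Δ4"}, "1Δ2", {"u", "v"}, CRITICAL_D1)
print(MERGED == {("3Δ4", "u"), ("1Δ2", "v")})  # True: this is ⊏4
```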

Consider the derivation D2 in Fig. 6. The solutions for the leaf goals L1 and L2 are $\varsigma_1 = \langle \{u \approx a\}, \emptyset, \emptyset\rangle$ and $\varsigma_2 = \langle \{u \approx b\}, \emptyset, \emptyset\rangle$. The solution $\varsigma_1 \otimes \varsigma_2$ for D2 is calculated as follows. First, we construct the set CycleRed(⊏), where ⊏ is constructed from the empty splitting relations of $\varsigma_1$ and $\varsigma_2$, and the expanded formula (1Δ2) of the inference we are merging in. Since u occurs in both $\varsigma_1$ and $\varsigma_2$, and is not critical for (1Δ2), we get $(1 \Delta 2) \sqsubset u$. This splitting relation is admissible, so we get CycleRed(⊏) = {⊏}. Since CycleRed(⊏) is a singleton set, $\varsigma_1 \otimes \varsigma_2$ contains only the solution $\langle \{u^{1} \approx a, u^{2} \approx b\}, \emptyset, \sqsubset\rangle$. The existence of a solution for the whole derivation ensures that the derivation is closable with an admissible substitution.

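$$
\dfrac{\dfrac{L_1: Pu \vdash Pa \qquad L_2: Pu \vdash Pb}{Pu \vdash Pa \land Pb}}{\forall x Px \vdash Pa \land Pb}
$$

Selected indices below the root sequent: u, 1, 2.

Fig. 6. A derivation D2.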

We now consider the derivation D1 in Fig. 4. The equation sets for the connections in the leaf goals are {u ≈ a, v ≈ a} for L1, {u ≈ b, v ≈ a} for L2, {u ≈ a, v ≈ b} for L3, and {u ≈ b, v ≈ b} for L4. Let $\varsigma_1$, $\varsigma_2$, $\varsigma_3$ and $\varsigma_4$ be the corresponding solutions where the identity sets and splitting relations are all empty. First, we calculate the solution $\varsigma_1 \otimes \varsigma_2$ for D1|Γ1, and $\varsigma_3 \otimes \varsigma_4$ for D1|Γ2. The splitting relation $\sqsubset_1$ from Fig. 5 is admissible and is the only resulting splitting relation in both cases. Hence, we get for $\varsigma_1 \otimes \varsigma_2$ the solution

$$\varsigma' = \langle \{u^{3} \approx a, v^{3} \approx a, u^{4} \approx b, v^{4} \approx a\}, \{v^{3} \approx v^{4}\}, \sqsubset_1 \rangle,$$

and for $\varsigma_3 \otimes \varsigma_4$ the solution

$$\varsigma'' = \langle \{u^{3} \approx a, v^{3} \approx b, u^{4} \approx b, v^{4} \approx b\}, \{v^{3} \approx v^{4}\}, \sqsubset_1 \rangle.$$

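We now calculate $\varsigma' \otimes \varsigma''$. First, we construct CycleRed(⊏), where ⊏ is constructed as follows. Since $(3 \Delta 4) \sqsubset_1 u$ in both ς′ and ς″, we also have $(3 \Delta 4) \sqsubset u$. In addition, v is not critical for (1Δ2), so we get $(1 \Delta 2) \sqsubset v$. This is exactly the same splitting relation as $\sqsubset_4$ in Fig. 5, and it is not admissible. But, as we have seen, there are two maximal and admissible subrelations of ⊏, namely $\sqsubset_2$ and $\sqsubset_3$ from Fig. 5. Let $I' = \{v^{13} \approx v^{14}, v^{23} \approx v^{24}\}$. Then, these two splitting relations give rise to the candidates

$$I' \cup \{v^{13} \approx v^{23}, v^{13} \approx v^{24}, v^{14} \approx v^{23}, v^{14} \approx v^{24}\} \quad \text{with } \sqsubset_2,$$
$$I' \cup \{u^{13} \approx u^{23}, u^{13} \approx u^{24}, u^{14} \approx u^{23}, u^{14} \approx u^{24}\} \quad \text{with } \sqsubset_3$$

for membership in $\varsigma' \otimes \varsigma''$, where both have

$$E = \{u^{13} \approx a, v^{13} \approx a, u^{14} \approx b, v^{14} \approx a, u^{23} \approx a, v^{23} \approx b, u^{24} \approx b, v^{24} \approx b\}$$

in common. However, for both candidates, the union of the equation set and the identity set is unsatisfiable (in the first, $v^{13} \approx a$, $v^{13} \approx v^{23}$ and $v^{23} \approx b$ force a ≈ b; in the second, $u^{13} \approx a$, $u^{13} \approx u^{24}$ and $u^{24} \approx b$ do the same), and hence there are no solutions for the derivation D1.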
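Since every equation here relates colored variables and constants, satisfiability of E ∪ I reduces to a union-find check: the set is unsatisfiable exactly when a and b end up in the same class. A minimal sketch, assuming for illustration that colored variables are encoded as strings such as `"u13"` for $u^{13}$:

```python
CONSTANTS = {"a", "b"}


def satisfiable(equations):
    """Decide a set of equations between colored variables and constants.

    Without function symbols, no occur check is needed: the set is unsatisfiable
    iff two distinct constants are forced into the same equivalence class.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for left, right in equations:
        parent[find(left)] = find(right)
    constants = {c for eq in equations for c in eq if c in CONSTANTS}
    return len({find(c) for c in constants}) == len(constants)


E = {("u13", "a"), ("v13", "a"), ("u14", "b"), ("v14", "a"),
     ("u23", "a"), ("v23", "b"), ("u24", "b"), ("v24", "b")}
I0 = {("v13", "v14"), ("v23", "v24")}  # I′
CAND_2 = I0 | {("v13", "v23"), ("v13", "v24"), ("v14", "v23"), ("v14", "v24")}
CAND_3 = I0 | {("u13", "u23"), ("u13", "u24"), ("u14", "u23"), ("u14", "u24")}
print(satisfiable(E | CAND_2), satisfiable(E | CAND_3))  # False False
print(satisfiable(E))  # True: without identities every colored variable is free
```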

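$$
\dfrac{\dfrac{\dfrac{L: Pu \vdash Pv, Qa \land Qb \qquad \dfrac{L_3: Qv \vdash Qa \qquad L_4: Qv \vdash Qb}{\Gamma: Qv \vdash Qa \land Qb}}{Pu, Pv \to Qv \vdash Qa \land Qb}}{Pu, \forall x(Px \to Qx) \vdash Qa \land Qb}}{\forall x Px, \forall x(Px \to Qx) \vdash Qa \land Qb}
$$

Selected indices below the root sequent: u, v, 1, 2, 3, 4.

Fig. 7. A derivation D3.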

As a last example, we look at the derivation D3 in Fig. 7. For the leaf goals we have the equation sets {u ≈ v} for L, {v ≈ a} for L3, and {v ≈ b} for L4. Let ς, $\varsigma_3$ and $\varsigma_4$ be the corresponding solutions where identity sets and splitting relations are empty. First, we calculate $\varsigma_3 \otimes \varsigma_4$. For the splitting relation ⊏ we get $(3 \Delta 4) \sqsubset v$, which is admissible. Hence, $\varsigma_3 \otimes \varsigma_4$ contains the solution

$$\langle \{v^{3} \approx a, v^{4} \approx b\}, \emptyset, \sqsubset \rangle.$$

Let $\varsigma' = \varsigma_3 \otimes \varsigma_4$; we now calculate $\varsigma \otimes \varsigma'$. In the resulting splitting relation ⊏′ we have (1Δ2) related to u, and (3Δ4) related to both u and v. This splitting relation is admissible, and we get

$$\langle \{u^{1} \approx v^{1}, v^{23} \approx a, v^{24} \approx b\}, \{v^{1} \approx v^{23}, v^{1} \approx v^{24}\}, \sqsubset' \rangle$$

as candidate for membership in $\varsigma \otimes \varsigma'$. The union of the equations and the identity set is, however, not satisfiable, since $v^{1}$ would have to equal both a and b. The set $\varsigma \otimes \varsigma'$ is empty, and hence the derivation is not closable.

In the derivation D3′ in Fig. 8 we have expanded the β-formula Qa ∧ Qb of L. This expansion produces no new connections in any of the new leaf goals, but it turns out that there is now a solution for D3′. We call this phenomenon context splitting: an expansion of a β-formula in the context makes further variable splitting possible. The solutions calculated for the branch L of D3 are no longer valid for D3′, so we need some sort of update procedure that goes into action every time we expand a β-formula.

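$$
\dfrac{\dfrac{\dfrac{\dfrac{L_1: Pu \vdash Pv, Qa \qquad L_2: Pu \vdash Pv, Qb}{L: Pu \vdash Pv, Qa \land Qb} \qquad \dfrac{L_3: Qv \vdash Qa \qquad L_4: Qv \vdash Qb}{\Gamma: Qv \vdash Qa \land Qb}}{Pu, Pv \to Qv \vdash Qa \land Qb}}{Pu, \forall x(Px \to Qx) \vdash Qa \land Qb}}{\forall x Px, \forall x(Px \to Qx) \vdash Qa \land Qb}
$$

Selected indices below the root sequent: u, v, 1, 2, 3, 4.

Fig. 8. A derivation D3′ obtained by one expansion of the derivation D3 in Fig. 7.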

5 Splitting by need

Splitting by need is distinguished from the identifying by need approach of Sect. 4 by the merging operation. As in Def. 11, let D be a derivation and let

$$\dfrac{\Gamma_j \qquad \Gamma_k}{\Gamma}$$

be a β-inference in D where $(j \Delta k) \in \Gamma$ is the expanded formula. Let $\varsigma_j = \langle E_j, I_j, \sqsubset_j\rangle$ and $\varsigma_k = \langle E_k, I_k, \sqsubset_k\rangle$ be solutions for $D|_{\Gamma_j}$ and $D|_{\Gamma_k}$, respectively. To compute the merging of $\varsigma_j$ and $\varsigma_k$ in the splitting by need approach we take ⟨E, I, ⊏⟩ as the point of departure, where
– $\sqsubset = \sqsubset_j \cup \sqsubset_k$,
– $E = (E_j + j) \cup (E_k + k)$, and
– I is an identity set for E with respect to ⊏.
We then check if ⟨E, I, ⊏⟩ is a solution.

– If it is a solution, the merging returns ⟨E, I, ⊏⟩.
– If the set E ∪ I is not satisfiable (due to a unification failure), we try to increase the splitting ordering ⊏ incrementally in order to resolve the conflict. Normally, there are several ways to do this, and all solutions must be recorded.
– When it is impossible to increase the splitting ordering without creating a cyclic reduction ordering, the merging simply fails.

Note that when ⊏ is increased, I must in general decrease. To get an idea of the merging, assume that $\{u^{1} \approx a, u^{2} \approx b\}$ is the resulting equation set and that the splitting relation is empty. Then the identity set is $\{u^{1} \approx u^{2}\}$, and the result is not a solution. If the resulting equation set is $\{u^{1} \approx f(v^{1}), v^{2} \approx g(u^{2})\}$, it is not a solution either (due to an occur check failure).

Such inconsistencies can be removed by adding elements to the splitting ordering. This is similar in spirit to Bibel’s original approach called “splitting by need”. In the examples above, note that both are clearly solutions if $\langle (1 \Delta 2), u\rangle$ is added to ⊏. This will remove the equation $u^{1} \approx u^{2}$ and sanction separate treatment of the two colored variables.

A way of achieving this more efficiently could be to represent the terms as directed acyclic graphs (dags) and to realize the equation sets as equivalence relations on the nodes of the graph, similar to the approach in, for example, [2] for ordinary unification. The idea is originally due to Huet, and such relations are referred to as unification relations or closures. In order to check if a given dag corresponds to a solution, one must typically check for symbol clashes and cycles.
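The dag-based check can be sketched in the spirit of Huet's unification closure: merge equivalence classes with union-find, detect symbol clashes whenever two classes that both contain function nodes meet, and test the graph of classes for cycles at the end (a delayed occur check). This is a sketch for ordinary nodes only; the names are ours, and labelling edges with splitting sets is not modelled. The usage lines replay the occur check example from this section.

```python
class Node:
    """A dag node: a variable (symbol is None) or a function symbol applied to child nodes."""

    def __init__(self, symbol=None, args=(), name=None):
        self.symbol, self.args, self.name = symbol, list(args), name
        self.rep = self  # union-find parent


def find(n):
    """Representative of n's class, with path halving."""
    while n.rep is not n:
        n.rep = n.rep.rep
        n = n.rep
    return n


def acyclic(nodes):
    """No cycle in the graph: class of f(...) -> classes of its arguments."""
    succ = {}
    for n in nodes:
        if n.symbol is not None:
            succ.setdefault(find(n), set()).update(find(a) for a in n.args)
    state = {}  # 1 = on the DFS stack, 2 = finished

    def visit(r):
        state[r] = 1
        for s in succ.get(r, ()):
            if state.get(s) == 1 or (s not in state and not visit(s)):
                return False
        state[r] = 2
        return True

    return all(state.get(r) == 2 or visit(r) for r in list(succ))


def unify(nodes, equations):
    """Return True iff the equations (pairs of nodes) have a unifier."""
    for n in nodes:
        n.rep = n
    schema = {n: n for n in nodes if n.symbol is not None}  # a function node per class
    todo = list(equations)
    while todo:
        a, b = (find(x) for x in todo.pop())
        if a is b:
            continue
        sa, sb = schema.get(a), schema.get(b)
        a.rep = b  # merge the class of a into the class of b
        if sa is not None and sb is not None:
            if sa.symbol != sb.symbol or len(sa.args) != len(sb.args):
                return False  # symbol clash
            todo.extend(zip(sa.args, sb.args))
        elif sa is not None:
            schema[b] = sa
    return acyclic(nodes)  # delayed occur check


def var(name):
    return Node(name=name)


def app(symbol, *args):
    return Node(symbol, args)


u1, u2, v1, v2 = (var(n) for n in ("u1", "u2", "v1", "v2"))
f_v1, g_u2 = app("f", v1), app("g", u2)
NODES = [u1, u2, v1, v2, f_v1, g_u2]
E = [(u1, f_v1), (v2, g_u2)]                   # u^1 ≈ f(v^1), v^2 ≈ g(u^2)
print(unify(NODES, E))                         # True
print(unify(NODES, E + [(u1, u2), (v1, v2)]))  # False: identification creates a cycle
```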

If the terms (and the equations) are represented as dags, then the merging of two equation sets, typically from two branches, will result in a new dag, which must be checked for symbol clashes and cycles, and if such are found, then the reduction ordering must be extended to compensate for this. Thus, the problem is reduced to two purely graph theoretical problems, both involving a cycle check.

The exact nature of the relations between nodes has not been investigated in detail yet. Perhaps one way to do it is to label the edges between nodes with splitting sets, and to carefully define what cycles are with respect to this.

Example 6. We close this section by addressing a splitting by need attempt to close the derivation D3 in Fig. 7. Consider first the merging of the equations from L3 and L4 in an attempt to close D3|Γ. Since the splitting relations of $\varsigma_3$ and $\varsigma_4$ are empty, their union generates the identity $v^{3} \approx v^{4}$, which in turn results in a unification failure (a ≈ b). Adding $\langle (3 \Delta 4), v\rangle$ to ⊏ solves the problem and gives an admissible solution. Note that $v^{3} \approx v^{4}$ must now be removed from the identity set.

Proceeding toward the root, we must try to solve the equations $\{u^{1} \approx v^{1}, v^{23} \approx a, v^{24} \approx b\}$ under the identities $\{v^{1} \approx v^{23}, v^{1} \approx v^{24}\}$. This also generates a symbol clash. The only possible remedy is to add $\langle (1 \Delta 2), v\rangle$ to ⊏, but since v is critical for (1Δ2), this makes the reduction ordering cyclic ($(1 \Delta 2) \lhd (1 \Delta 2)$), and the merging operation terminates with failure.

6 Conclusion and Future Work

The paper addresses the formulation of incremental closure for free variable tableaux with variable splitting. Two complementary approaches are identified: identification by need and splitting by need. To this end we introduce new concepts and definitions, and use these to give precise formulations of the merging operations for both approaches. Both approaches are illustrated by detailed examples.

This paper reports work in progress. In a short term perspective we shall concentrate on unresolved challenges related to three core operations:
– the cycle reduction in identifying by need,
– the merging in splitting by need,
– the update procedure for incremental closure.

The cycle reduction operation in the identifying by need approach needs to be characterized and analyzed. We are currently addressing the design of efficient algorithms for computing cycle reductions. There is reason to believe that the efficiency of the “id by need” approach is crucially dependent on the cycle reduction operation.

A computationally smooth formulation of the splitting by need approach seems to require operations on dags and tree unification. We are currently pursuing this idea further.

The update operation is invoked when a new leaf is expanded. It is this operation that integrates the current work into a proper incremental closure procedure. Formulating the update operation is challenging since it seems to require a solution to the context splitting problem. But besides this, the update operation seems straightforward.

In a longer perspective we will implement and test the algorithms. An object-oriented implementation has recently been initiated. We will also seek to clarify the precise relationship between splitting by need and identification by need, both in theory and through benchmarking.

The design of an incremental closure procedure for the splitting system is technically complex and the algorithms are likely to be intractable in the limit cases. However, the complexity of these algorithms should not be taken as a bad sign; in fact, the overhead caused by the algorithms is likely to be quite small compared to the benefits of using the splitting calculus. Two facts support this claim. First, proofs in the splitting calculus can be much shorter than proofs without splitting; in the limit case they can even be exponentially shorter [1]. Second, the splitting system admits goal-directed search. For large inputs, goal-directed procedures will, in many cases, significantly outperform procedures driven by dominating logical symbols.

References

1. Roger Antonsen and Arild Waaler. Liberalized variable splitting. Journal of Automated Reasoning, 2007. DOI: 10.1007/s10817-006-9055-9.

2. Franz Baader and Wayne Snyder. Unification theory. In A. Robinson and A. Voronkov, editors, Handbook of Automated Reasoning, volume I, chapter 8, pages 445–532. Elsevier Science, 2001.

3. Wolfgang Bibel. Automated Theorem Proving. 2nd edition. Vieweg Verlag, 1987.

4. Martin Giese. Proof search without backtracking using instance streams, position paper. In Peter Baumgartner and Hantao Zhang, editors, 3rd Int. Workshop on First-Order Theorem Proving (FTP), St. Andrews, Scotland, TR 5/2000 Univ. of Koblenz, pages 227–228, 2000.

5. Martin Giese. Incremental Closure of Free Variable Tableaux. In Proc. Intl. Joint Conf. on Automated Reasoning, Siena, Italy, number 2083 in LNCS, pages 545–560. Springer-Verlag, 2001.

6. Martin Giese. Proof Search without Backtracking for Free Variable Tableaux. PhD thesis, Fakultät für Informatik, Universität Karlsruhe, July 2002.

7. Christoph Kreitz and Jens Otten. Connection-based theorem proving in classical and non-classical logics. Journal of Universal Computer Science, 5(3):88–112, 1999.

8. Lincoln A. Wallen. Automated deduction in nonclassical logics. MIT Press, 1990.