C R S A

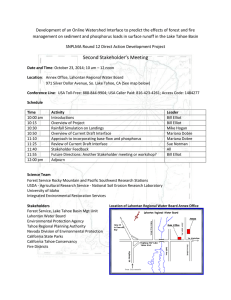
