Stat 407 Exam 1 (Fall 2001) SOLUTION


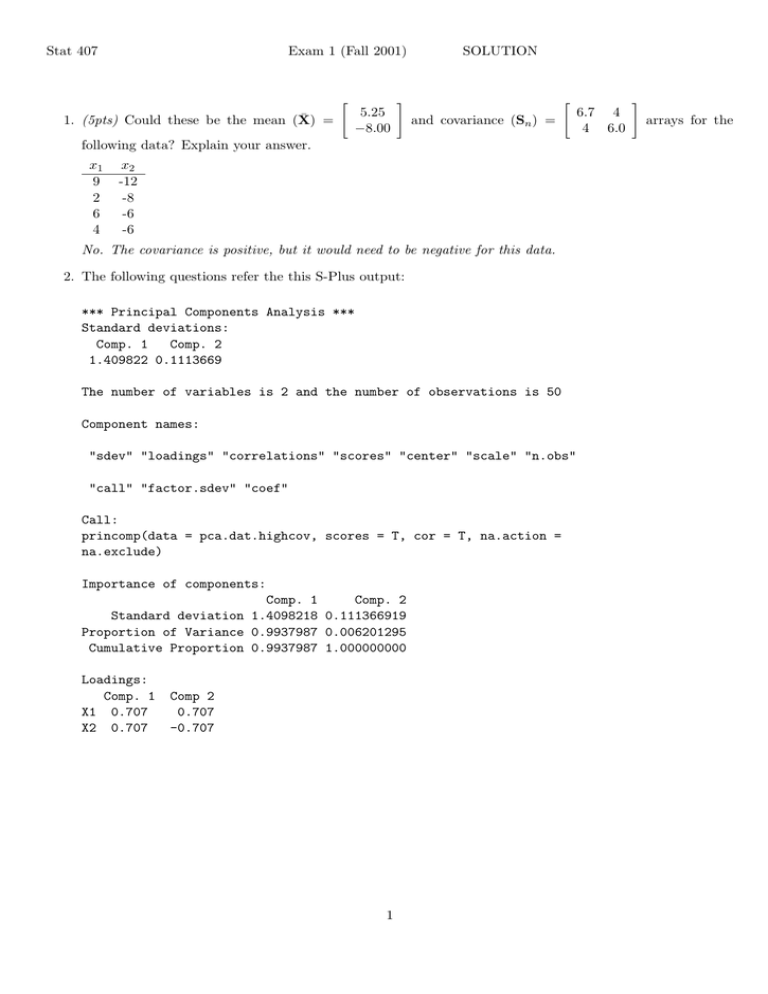

1. (5pts) Could these be the mean $\bar{X} = \begin{bmatrix} 5.25 \\ -8.00 \end{bmatrix}$ and covariance $S_n = \begin{bmatrix} 6.7 & 4 \\ 4 & 6.0 \end{bmatrix}$ arrays for the following data? Explain your answer.

| x1 | x2 |
|---|---|
| 9 | -12 |
| 2 | -8 |
| 6 | -6 |
| 4 | -6 |

No. The covariance is positive, but it would need to be negative for this data: x2 tends to decrease as x1 increases, and the sample covariance is −4, not 4.

2. The following questions refer to this S-Plus output:

    *** Principal Components Analysis ***

    Standard deviations:
      Comp. 1   Comp. 2
     1.409822 0.1113669

    The number of variables is 2 and the number of observations is 50

    Component names:
    "sdev" "loadings" "correlations" "scores" "center" "scale" "n.obs" "call" "factor.sdev" "coef"

    Call:
    princomp(data = pca.dat.highcov, scores = T, cor = T, na.action = na.exclude)

    Importance of components:
                             Comp. 1     Comp. 2
    Standard deviation     1.4098218 0.111366919
    Proportion of Variance 0.9937987 0.006201295
    Cumulative Proportion  0.9937987 1.000000000

    Loadings:
       Comp. 1 Comp. 2
    X1   0.707   0.707
    X2   0.707  -0.707

(a) (5pts) Write down the equation to generate the first principal component, that is, of the form $Y_1 = a_1 X_1 + a_2 X_2$.

$Y_1 = 0.707 X_1 + 0.707 X_2$

(b) (5pts) Draw the variable axes on the following plot of the first two principal components, thus creating a bi-plot. (Note that the standard deviations on the output are the square roots of the eigenvalues. The factor loadings are obtained as eigenvector times $\sqrt{\text{eigenvalue}}$.)

The variable axes are drawn for variable 1 as $(1.41 \times 0.707,\ 0.11 \times 0.707) \approx (1.00,\ 0.08)$ and for variable 2 as $(1.41 \times 0.707,\ 0.11 \times (-0.707)) \approx (1.00,\ -0.08)$.

3. The following questions refer to measurements made on the size of the carapace and gender of painted turtles (Jolicoeur and Mosimann, 1960). The variables, in order, are Length, Width and Height (in mm), and gender (1 = Female, 2 = Male).

| Variable | Min | Max |
|---|---|---|
| Length | 93 | 177 |
| Width | 74 | 132 |
| Height | 35 | 67 |
| Sex | 1 | 2 |

(a) (5pts) As accurately as possible, plot the point $X_0 = (98, 81, 60, 1)$ on the parallel coordinate plot. Is this case unusual? Why or why not?

This case is unusual: it has a high value of Height (60, near the maximum of 67) even though its Length and Width are near their minimums.
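The answer to part (a) can be checked numerically. The sketch below is not part of the original solution; it assumes each parallel-coordinate axis is scaled linearly from the variable's minimum (0) to its maximum (1), which is a common convention but is not stated on the exam. The names `ranges`, `x0` and `axis_position` are illustrative.

```python
# Min-max scale each coordinate of X0 onto a [0, 1] parallel-coordinate axis.
# Assumption: every axis runs from the variable's minimum (0) to its maximum (1).
ranges = {
    "Length": (93, 177),
    "Width": (74, 132),
    "Height": (35, 67),
    "Sex": (1, 2),
}
x0 = {"Length": 98, "Width": 81, "Height": 60, "Sex": 1}


def axis_position(value, lo, hi):
    """Return where `value` falls between lo (0.0) and hi (1.0)."""
    return (value - lo) / (hi - lo)


positions = {name: axis_position(x0[name], *ranges[name]) for name in ranges}

for name, pos in positions.items():
    print(f"{name:>6}: {pos:.2f}")
```

Under that scaling, Length and Width sit close to the bottom of their axes (about 0.06 and 0.12) while Height sits at about 0.78, so the line for this case rises sharply between Width and Height.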
(b) (5pts) Using the classification tree below, predict the class (1 or 2) of this case $X_0 = (165, 121, 52)$.

Class 1, because Height is greater than 47.5.

(c) (1pts) The confusion matrix for the classification tree is as follows. Compute the apparent error rate.

| Actual \ Pred | 1 | 2 |
|---|---|---|
| 1 | 22 | 2 |
| 2 | 2 | 22 |

Apparent error rate is 4/48 = 0.083.

(d) (5pts) Below is the S-Plus output from running the linear discriminant analysis method.

    *** Discriminant Analysis ***

    Call:
    discrim(Sex ~ Length + Width + Height, data = turtles, family = Classical(cov.structure = "homoscedastic"), na.action = na.omit, prior = "proportional")

    Group means:
        Length     Width   Height  N Priors
    1 136.0417 102.58333 52.04167 24    0.5
    2 113.3750  88.29167 40.70833 24    0.5

    Covariance Structure: homoscedastic
             Length    Width   Height
    Length 295.1431 175.0607 101.6649
    Width           110.8868  61.7491
    Height                    37.9982

    Constants:
            1         2
    -66.11596 -55.19621

    Linear Coefficients:
                   1         2
    Length -1.437461 -1.164668
    Width   3.051991  3.188469
    Height  0.255887 -0.994019

    Plug-in classification table:
             1  2     Error Posterior.Error
    1       20  4 0.1666667       0.2362568
    2        0 24 0.0000000      -0.0426450
    Overall       0.0833333       0.0968059
    (from=rows,to=columns)

Write down the linear discriminant rule for two sexes as simply as possible based on quantities in the output. (This will be equivalent to the equation from class:

$$(\bar{X}_1 - \bar{X}_2)' S_{pooled}^{-1} X_0 - \frac{1}{2} (\bar{X}_1 - \bar{X}_2)' S_{pooled}^{-1} (\bar{X}_1 + \bar{X}_2) \ge 0$$

but with the numbers delineating the linear projection of the data and the constant term.)

Classify as sex 1 if the linear function for class 1 exceeds the one for class 2:

$$-1.44L + 3.05W + 0.26H - 66 - (-1.16L + 3.19W - 0.99H - 55) > 0$$

which simplifies to

$$-0.28L - 0.14W + 1.25H - 11 > 0$$

(e) (1pts) From the above S-Plus output, calculate the apparent error rate.

Apparent error rate is 4/48 = 0.083.

(f) (3pts) Compare the results of the classification tree rule and the results from linear discriminant analysis, in terms of confusion between groups and importance of variables, as much as is possible with the information at hand.

The errors are different between the two methods.
The classification tree has 2 errors in each of class 1 and 2, but LDA only confuses class 1 cases. Both methods put emphasis on Height as a main difference between the two sexes. It is the first variable in the classification tree. In LDA, the coefficients for Height differ most between the two sexes and are opposite in sign.

4. (10pts) Multiple choice questions:

(a) Which of the following do you learn by examining the summary statistics (mean, variance-covariance matrix) of multivariate data? (a) Location, (b) Outlier, (c) Cluster Structure, (d) None of the previous options.

(b) When there is heterogeneous variance between classes (groups) in a data set, which of the following methods is likely to provide the WORST solution? (a) quadratic discriminant analysis, (b) classification trees, (c) support vector machines, (d) linear discriminant analysis.

(c) Which of the following methods for imputing missing values in multivariate data generates a random value? (a) variable mean value, (b) regression on non-missing variables, (c) multiple imputation, (d) none of the previous options.

(d) Which of the following are effective ways to incorporate categorical variable information into a plot of continuous variables? (a) Color, (b) Symbol, (c) Conditioning, (d) All of the previous options.

(e) When using a linear discriminant rule that incorporates a cost function, the linear boundary (a) shifts towards the group which has the highest cost of classification error, (b) shifts away from the group which has the highest cost of classification error, (c) stays halfway between the two groups, (d) none of the previous options.

5. (10pts) True or False questions:

(a) Feed-forward neural networks provide a flexible way to generalize linear regression functions. (T).

(b) The main drawback of classification trees is that they don't effectively use the variance-covariance structure in the data. (T).

(c) Linear discriminant analysis assumes heterogeneous variance. (F).
(d) Shape of the data is related to the joint distribution of the variables. (T).

(e) PCA on the correlation matrix is the same as first standardizing the variables. (T).

6. (5pts) The following two data sets, each containing two variables, both have loadings for the first principal component equal to (0.707, 0.707). Why is this?

The direction of maximum variance is in the x = y direction for both data sets, despite the difference in spread of data.
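The answer to Question 6 can be illustrated with a small sketch. The two data sets from the exam figure are not available here, so the covariance values below are made up for illustration: both use equal variances for the two variables and a positive covariance, with one "tight" and one "loose" around the line x = y. The helper `first_pc_direction` is a hypothetical name; it uses the closed-form principal-axis angle of a symmetric 2×2 matrix rather than any particular PCA routine.

```python
import math


def first_pc_direction(a, b, d):
    """Unit eigenvector for the larger eigenvalue of [[a, b], [b, d]].

    For a symmetric 2x2 matrix the principal axis makes the angle
    theta = 0.5 * atan2(2b, a - d) with the first coordinate axis.
    """
    theta = 0.5 * math.atan2(2 * b, a - d)
    return (math.cos(theta), math.sin(theta))


# Hypothetical covariance matrices: equal variances, different covariances.
tight = first_pc_direction(1.0, 0.95, 1.0)  # points close to the line x = y
loose = first_pc_direction(1.0, 0.30, 1.0)  # points spread widely around x = y

print(tight)
print(loose)
```

In both cases the first loading vector is (0.707, 0.707) to three decimals. With equal variances and positive covariance, the amount of spread changes the eigenvalues (here 1 ± covariance) but not the direction of the first component.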