Extracting Rich Event Structure from Text Nate Chambers Models and Evaluations

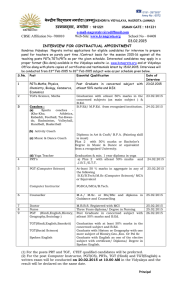

advertisement


Clustering Models

Nate Chambers

US Naval Academy

A First Approach

Entity-focused

Clustering

Mutual Information


The Protagonist

protagonist (noun):

1. the principal character in a drama or other literary work
2. a leading actor, character, or participant in a literary work or real event


Inducing Narrative Relations

Chambers and Jurafsky. Unsupervised Learning of Narrative Event Chains. ACL-08

Narrative Coherence Assumption

Verbs sharing coreferring arguments are semantically connected by virtue of narrative discourse structure.

1. Dependency parse a document.
2. Run coreference to cluster entity mentions.
3. Count pairs of verbs with coreferring arguments.
4. Use pointwise mutual information to measure relatedness.
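A minimal Python sketch of steps 3 and 4, assuming steps 1 and 2 have already turned each document into hypothetical `(verb, dependency, entity_id)` triples, where `entity_id` names the coreference chain of the argument. The function names and input format are illustrative, not the original system's code; an "event" here is a (verb, dependency) pair.

```python
import math
from collections import Counter
from itertools import combinations


def count_coref_pairs(documents):
    """Step 3: count unordered pairs of (verb, dependency) events whose
    arguments belong to the same coreference chain in the same document.

    Assumed (hypothetical) input: one list of (verb, dependency, entity_id)
    triples per document, produced by a parser plus a coreference system.
    """
    pair_counts = Counter()
    for doc in documents:
        # Group this document's events by coreference chain (entity_id).
        chains = {}
        for verb, dep, entity in doc:
            chains.setdefault(entity, set()).add((verb, dep))
        # Every pair of events sharing an entity counts once per document.
        for events in chains.values():
            for pair in combinations(sorted(events), 2):
                pair_counts[pair] += 1
    return pair_counts


def pmi(pair_counts, x, y):
    """Step 4: log p(x, y) / (p(x) p(y)), estimated from the pair counts."""
    joint = pair_counts.get(tuple(sorted((x, y))), 0)
    if joint == 0:
        return float("-inf")
    total = sum(pair_counts.values())
    # Marginal count of an event = number of counted pairs it appears in.
    marginal = Counter()
    for (a, b), c in pair_counts.items():
        marginal[a] += c
        marginal[b] += c
    norm = sum(marginal.values())
    p_xy = joint / total
    return math.log(p_xy / ((marginal[x] / norm) * (marginal[y] / norm)))


# Hand-built toy events read off the Macondo well example paragraph.
doc = [
    ("stop", "subj", "oil"), ("spew", "obj", "oil"),
    ("gush", "from", "well"), ("cap", "obj", "well"),
    ("plug", "obj", "well"), ("spew", "subj", "well"),
    ("work", "subj", "engineers"), ("plug", "subj", "engineers"),
]
counts = count_coref_pairs([doc])
print(pmi(counts, ("work", "subj"), ("plug", "subj")))
```

Counting each pair once per document (via the per-chain sets) is a design choice of this sketch; a single document gives meaningless PMI values, so real use needs a large corpus.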

Example Text

The oil stopped gushing from BP’s ruptured well in the Gulf of Mexico when it was capped on July 15 and engineers have since been working to permanently plug it. The damaged Macondo well has spewed about 4.9m barrels of oil into the gulf after an explosion on April 20 aboard the Deepwater Horizon rig which killed 11 people. BP said on Monday that its costs for stopping and cleaning up the spill had risen to $6.1bn.


The oil stopped
gushing from BP’s ruptured well
it capped
engineers working
engineers plug
plug it
The damaged Macondo well spewed
spewed 4.9m barrels of oil
spewed into the gulf
killed 11 people
BP said
risen to $6.1bn


gushing from BP’s ruptured well / it capped
The oil stopped / spewed 4.9m barrels of oil
The damaged Macondo well spewed / plug it
engineers working / engineers plug


$$\mathrm{pmi}(x, y) = \log \frac{p(x, y)}{p(x)\,p(y)} \cdot \frac{\min(C(x), C(y))}{\min(C(x), C(y)) + 1}$$
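The discount on this slide scales PMI by min(C(x), C(y)) / (min(C(x), C(y)) + 1), which pulls the scores of rarely seen events toward zero. A small Python sketch, assuming the caller supplies raw counts plus the normalisers for the joint and marginal probabilities (all parameter names are hypothetical):

```python
import math


def discounted_pmi(c_xy, c_x, c_y, total_pairs, total_events):
    """PMI scaled by the discount min(C(x),C(y)) / (min(C(x),C(y)) + 1).

    c_xy         -- joint count of x and y (e.g., shared coreferring argument)
    c_x, c_y     -- marginal counts C(x) and C(y)
    total_pairs  -- normaliser for the joint probability p(x, y)
    total_events -- normaliser for the marginals p(x) and p(y)
    """
    if c_xy == 0:
        return float("-inf")  # PMI undefined; treat the pair as unrelated
    p_xy = c_xy / total_pairs
    p_x = c_x / total_events
    p_y = c_y / total_events
    m = min(c_x, c_y)
    return math.log(p_xy / (p_x * p_y)) * m / (m + 1)


# Both calls have the same raw PMI (log 32); only the discount differs.
print(discounted_pmi(1, 1, 1, 8, 16))        # discount factor 1/2
print(discounted_pmi(50, 50, 50, 400, 800))  # discount factor 50/51
```

With a minimum count of 1 the raw PMI is halved; with a minimum count of 50 it keeps 50/51 of its value.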


Chain Example


Schema Example

Police, Agent, Authorities

Prosecutor, Attorney

Plea, Guilty, Innocent

Judge, Official

Suspect, Criminal, Terrorist, …

Narrative Schemas

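$N = (E, C)$, where $E$ is the set of events and $C$ is the set of participant chains.

$E = \{\text{arrest}, \text{charge}, \text{plead}, \text{convict}, \text{sentence}\}$

$C = \{C_1, C_2, C_3\}$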


Add a Verb to a Schema

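$$\max_{v \in V} \mathrm{narsim}(N, v)$$

$$\mathrm{narsim}(N, v) = \sum_{d \in D_v} \max\Big(\beta,\ \max_{c \in C} \mathrm{chainsim}(c, \langle v, d \rangle)\Big)$$

$$\mathrm{chainsim}(c, \langle v, d \rangle) = \max_{a \in Args} \Big(\mathrm{score}(c, a) + \sum_{i=1}^{n} \mathrm{sim}(\langle e_i, d_i \rangle, \langle v, d \rangle, a)\Big)$$

$$\mathrm{sim}(\langle e, d' \rangle, \langle v, d \rangle, a) = \mathrm{pmi}(\langle e, d' \rangle, \langle v, d \rangle) + \lambda \log C(\langle e, d' \rangle, \langle v, d \rangle, a)$$

Here $D_v$ is the set of syntactic dependencies (slots) of verb $v$, $C$ is the set of chains in schema $N$, each chain $c$ holds events $\langle e_1, d_1 \rangle, \dots, \langle e_n, d_n \rangle$, $C(\cdot, \cdot, a)$ counts how often the two events share argument head $a$, and $\lambda$ weights that count. The constant $\beta$ lets a slot start a new chain when no existing chain scores above it.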
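A sketch of the greedy verb-addition step in Python, written from the formulas on this slide. The `pmi` and `count` lookups are caller-supplied functions, and the add-one inside the log, the defaults for `lam` and `beta`, and the toy PMI table are assumptions for illustration, not learned values.

```python
import math
from itertools import combinations


def sim(e1, e2, a, pmi, count, lam):
    """pmi(e1, e2) + lam * log C(e1, e2, a); the +1 (add-one) inside the
    log is an assumption of this sketch that avoids log 0."""
    return pmi(e1, e2) + lam * math.log(count(e1, e2, a) + 1)


def score(chain, a, pmi, count, lam):
    """score(c, a): summed similarity of every event pair already in chain c."""
    return sum(sim(ei, ej, a, pmi, count, lam) for ei, ej in combinations(chain, 2))


def chainsim(chain, vd, args, pmi, count, lam):
    """max over arguments a of score(c, a) + sum_i sim(<e_i,d_i>, <v,d>, a)."""
    return max(
        score(chain, a, pmi, count, lam)
        + sum(sim(e, vd, a, pmi, count, lam) for e in chain)
        for a in args
    )


def narsim(chains, v, deps, args, pmi, count, lam, beta):
    """Sum over v's dependencies d of max(beta, best chainsim over chains)."""
    return sum(
        max([beta] + [chainsim(c, (v, d), args, pmi, count, lam) for c in chains])
        for d in deps
    )


def best_verb(chains, candidates, args, pmi, count, lam=0.5, beta=0.0):
    """argmax over candidate verbs of narsim; candidates maps verb -> D_v."""
    return max(candidates,
               key=lambda v: narsim(chains, v, candidates[v], args, pmi, count, lam, beta))


# Toy schema: one chain (the arrested person); the PMI values are made up.
TOY_PMI = {
    frozenset({("arrest", "obj"), ("charge", "obj")}): 3.0,
    frozenset({("arrest", "obj"), ("convict", "obj")}): 2.0,
    frozenset({("charge", "obj"), ("convict", "obj")}): 2.5,
}


def toy_pmi(x, y):
    return TOY_PMI.get(frozenset({x, y}), -1.0)


def toy_count(x, y, a):
    return 0  # no argument-head counts in this toy


chains = [[("arrest", "obj"), ("charge", "obj")]]
candidates = {"convict": ["obj"], "eat": ["obj"]}
print(best_verb(chains, candidates, ["suspect"], toy_pmi, toy_count))  # convict
```

Raising `beta` above every chainsim value makes a verb's slots start new chains instead of joining existing ones, which is the trade-off the constant controls.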


Learning Schemas

$$\mathrm{narsim}(N, v) = \sum_{d \in D_v} \max\Big(\beta,\ \max_{c \in C} \mathrm{chainsim}(c, \langle v, d \rangle)\Big)$$

Argument Induction

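• Induce semantic roles by scoring argument head words.

Candidate heads a: criminal, suspect, man, student, immigrant, person

$$\mathrm{score}(c, a) = \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \Big[(1 - \lambda)\,\mathrm{pmi}(e_i, e_j) + \lambda \log \mathrm{freq}(e_i, e_j, a)\Big]$$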
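A Python sketch of ranking candidate heads with the argument score. The PMI term does not depend on a, so it shifts every candidate equally and the ranking is decided by the frequency term. The +1 inside the log, the value of `lam`, and the toy counts are assumptions of this sketch.

```python
import math


def score(chain, a, pmi, freq, lam=0.5):
    """sum over i < j of (1 - lam) * pmi(e_i, e_j) + lam * log freq(e_i, e_j, a).
    The +1 inside the log is an assumption of this sketch to avoid log 0."""
    total = 0.0
    for i in range(len(chain) - 1):
        for j in range(i + 1, len(chain)):
            ei, ej = chain[i], chain[j]
            total += (1 - lam) * pmi(ei, ej) + lam * math.log(freq(ei, ej, a) + 1)
    return total


def rank_heads(chain, heads, pmi, freq, lam=0.5):
    """Order candidate argument heads by score(c, a), best first."""
    return sorted(heads, key=lambda a: score(chain, a, pmi, freq, lam), reverse=True)


# Hypothetical counts: how often each head fills the shared slot of a verb pair.
HEAD_COUNTS = {"criminal": 10, "suspect": 5, "student": 0}


def toy_pmi(ei, ej):
    return 1.0  # constant here, so only the freq term separates the heads


def toy_freq(ei, ej, a):
    return HEAD_COUNTS.get(a, 0)


chain = ["arrest", "charge", "convict"]
print(rank_heads(chain, ["student", "suspect", "criminal"], toy_pmi, toy_freq))
```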

Learned Example: Viral

virus, disease, bacteria, cancer, toxoplasma, strain

mosquito, aids, virus, tick, catastrophe, disease

Learned Example: Authorship

book, report, novel, article, story, letter, magazine

company, author, group, year, microsoft, magazine

Database of Schemas

• 1813 base verbs
• 596 unique schemas
• Various sizes of schemas (6, 8, 10, 12)
• Temporal ordering data
– Available online: http://www.usna.edu/Users/cs/nchamber/data/schemas/acl09

So What?


Information Extraction (MUC)

• Message Understanding Conference – 1992

• Semantic representation (messages) of a situation (incident) and its key attributes.


2. INCIDENT: DATE                   11 JAN 90
3. INCIDENT: LOCATION               BOLIVIA: LA PAZ (CITY)
4. INCIDENT: TYPE                   BOMBING
5. INCIDENT: STAGE OF EXECUTION     ATTEMPTED
6. INCIDENT: INSTRUMENT ID          "BOMB"
7. INCIDENT: INSTRUMENT TYPE        BOMB: "BOMB"
8. PERP: INCIDENT CATEGORY          TERRORIST ACT
9. PERP: INDIVIDUAL ID
10. PERP: ORGANIZATION ID           "ZARATE WILLKA LIBERATION ARMED FORCES"
11. PERP: ORGANIZATION CONFIDENCE   CLAIMED OR ADMITTED: "ZARATE WILLKA LIBERATION ARMED FORCES"
12. PHYS TGT: ID                    "GOVERNMENT HOUSE"
13. PHYS TGT: TYPE                  GOVERNMENT OFFICE OR RESIDENCE: "GOVERNMENT HOUSE"
14. PHYS TGT: NUMBER                1: "GOVERNMENT HOUSE"
15. PHYS TGT: FOREIGN NATION
16. PHYS TGT: EFFECT OF INCIDENT    SOME DAMAGE: "GOVERNMENT HOUSE"
17. PHYS TGT: TOTAL NUMBER
18. HUM TGT: NAME
19. HUM TGT: DESCRIPTION            "CABINET MEMBERS" / "CABINET MINISTERS"
20. HUM TGT: TYPE                   GOVERNMENT OFFICIAL: "CABINET MEMBERS" / "CABINET MINISTERS"
21. HUM TGT: NUMBER                 PLURAL: "CABINET MEMBERS" / "CABINET MINISTERS"
22. HUM TGT: FOREIGN NATION
23. HUM TGT: EFFECT OF INCIDENT
24. HUM TGT: TOTAL NUMBER           -

MUC-4 Contains “Structure”

• Focus on the core attributes.
– Type of incident
– Main agent (perpetrator)
– Affected entities (targets, both physical and human)

BOMBING
DATE                11 JAN 90
LOCATION            BOLIVIA: LA PAZ (CITY)
INSTRUMENT TYPE     BOMB: "BOMB"
PERP                "ZARATE WILLKA LIBERATION ARMED FORCES"
PHYS TGT            "GOVERNMENT HOUSE"
PHYS TGT: EFFECT    SOME DAMAGE
HUM TGT             "CABINET MINISTERS"

Labeled Template Schemas

Templates:
1. Attack
2. Bombing
3. Kidnapping
4. Arson

Roles: Perpetrator, Victim, Target, Instrument

• Assume these template schemas are unknown.

• Assume documents containing templates are unknown.


Expanding the dataset

• MUC is a small dataset: 1300 documents

– The protagonist is too sparse.


• Instead, first cluster words based on proximity:
– kidnap, release, abduct, kidnapping, ransom, robbery
– detonate, blow up, plant, hurl, stage, launch, detain, suspect, set off
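The slide does not specify the proximity measure. One plausible Python sketch, assuming a fixed token window in which each co-occurrence adds 1/distance, is below; the window size, the weighting, and the toy sentences are all assumptions, and it handles single tokens only (so "blow up" is reduced to "blow").

```python
from collections import Counter


def proximity_scores(docs, vocab, window=10):
    """Score word pairs by how close they occur: each co-occurrence within
    `window` tokens adds 1/distance. Window and weighting are assumptions."""
    scores = Counter()
    for tokens in docs:
        # Positions of the words we want to cluster, in text order.
        hits = [(i, t) for i, t in enumerate(tokens) if t in vocab]
        for a, (i, wi) in enumerate(hits):
            for j, wj in hits[a + 1:]:
                if j - i > window:
                    break  # later hits are even farther away
                if wi != wj:
                    scores[tuple(sorted((wi, wj)))] += 1.0 / (j - i)
    return scores


docs = [
    "gunmen kidnap the mayor and demand a ransom".split(),
    "rebels detonate a bomb and blow up a bridge".split(),
]
vocab = {"kidnap", "ransom", "detonate", "blow"}
print(proximity_scores(docs, vocab).most_common())
```

The resulting pair scores could then feed any standard clustering method.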


Information Retrieval

Retrieve new documents

83 MUC documents

kidnap, release, abduct, kidnapping, board

(Ji and Grishman, 2008)

NYT Gigaword

1.1 billion tokens

3954 documents

Cluster the Syntactic Slots?

• How do we learn the slots?

• Could just use PMI as before, but we still have low document counts.

Solution

- Represent events (e.g., subject/throw) as word vectors and cluster based on vector distance.


Cluster the Syntactic Slots?

Novel Idea

Create a narrative vector of protagonist connections.

Each event is a vector over the other events with which it shares a coreferring argument; each value is the frequency of that coreference.

Compare two events x and y:

$$\cos(x_{nar}, y_{nar})$$
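A Python sketch of narrative vectors and their cosine, assuming coreferring-pair counts are available as a dictionary keyed by event pairs. The events and counts below are hypothetical.

```python
import math
from collections import Counter, defaultdict


def narrative_vectors(pair_counts):
    """One sparse vector per event: x's vector maps every event y that shared
    a coreferring argument with x to the frequency of that coreference."""
    vectors = defaultdict(Counter)
    for (x, y), c in pair_counts.items():
        vectors[x][y] += c
        vectors[y][x] += c
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(c * v[k] for k, c in u.items() if k in v)
    nu = math.sqrt(sum(c * c for c in u.values()))
    nv = math.sqrt(sum(c * c for c in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


# Hypothetical counts of events whose arguments corefer.
pairs = {
    (("detonate", "subj"), ("arrest", "obj")): 3,
    (("plant", "subj"), ("arrest", "obj")): 2,
    (("detonate", "subj"), ("plant", "subj")): 1,
}
vec = narrative_vectors(pairs)
print(cosine(vec[("detonate", "subj")], vec[("plant", "subj")]))
```

Two slots score high when their fillers tend to corefer with the same other events (here, both with the object of "arrest"), even if they rarely corefer with each other.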


Selectional Preferences

Borrowed Idea

Create a selectional preferences vector.

Katrin Erk, ACL 2007.

Bergsma et al., ACL 2008.

Zapirain et al., ACL 2009.

Calvo et al., MICAI/CIARP 2009.

Ritter et al., ACL 2010.

Christian Scheible, LREC 2010.

Walde, LREC 2010.

• Subject of Detonate

– Man, member, person, suspect, terrorist

• Object of Set off

– Dynamite, bomb, truck, explosive, device


cosine similarity

Cluster the Syntactic Functions

• Agglomerative clustering, average link scoring

• Two types of cosine similarity

– Selectional preferences

– Narrative protagonist relations

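$$\mathrm{sim}(x, y) = \max\big(\cos(x_{selpref}, y_{selpref}),\ \cos(x_{nar}, y_{nar})\big)$$

or, as a weighted combination:

$$\mathrm{sim}(x, y) = \lambda \cos(x_{selpref}, y_{selpref}) + (1 - \lambda) \cos(x_{nar}, y_{nar})$$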
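A Python sketch of average-link agglomerative clustering over syntactic slots, using either the max of the two cosines or the interpolated variant. The stopping `threshold`, the slot naming scheme such as `"detonate:subj"`, and the toy vectors are assumptions; the toy has no coreference evidence, so only selectional preferences drive the merge.

```python
import math
from collections import Counter
from itertools import combinations


def cosine(u, v):
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(c * v[k] for k, c in u.items() if k in v)
    nu = math.sqrt(sum(c * c for c in u.values()))
    nv = math.sqrt(sum(c * c for c in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


def slot_sim(x, y, selpref, nar, lam=None):
    """max of the two cosines; if lam is given, use the interpolation
    lam * cos_selpref + (1 - lam) * cos_nar instead."""
    s = cosine(selpref[x], selpref[y])
    n = cosine(nar[x], nar[y])
    return max(s, n) if lam is None else lam * s + (1 - lam) * n


def average_link(items, sim, threshold):
    """Repeatedly merge the two clusters with the highest average pairwise
    similarity until that average drops below threshold."""
    clusters = [[x] for x in items]
    while len(clusters) > 1:
        best, pair = None, None
        for a, b in combinations(range(len(clusters)), 2):
            total = sum(sim(x, y) for x in clusters[a] for y in clusters[b])
            avg = total / (len(clusters[a]) * len(clusters[b]))
            if best is None or avg > best:
                best, pair = avg, (a, b)
        if best < threshold:
            break
        a, b = pair  # a < b, so deleting b leaves index a valid
        clusters[a].extend(clusters[b])
        del clusters[b]
    return clusters


selpref = {
    "detonate:subj": Counter(man=3, terrorist=2),
    "plant:subj": Counter(man=2, terrorist=3),
    "destroy:obj": Counter(house=4, car=1),
}
nar = {k: Counter() for k in selpref}  # no coreference evidence in this toy
slots = list(selpref)
print(average_link(slots, lambda x, y: slot_sim(x, y, selpref, nar), threshold=0.5))
```

The brute-force pair search is quadratic per merge, which is fine for a sketch but would need caching for thousands of slots.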


Constrain the Argument Classes

• Use WordNet (Fellbaum, 1998) to constrain types

– Person/Organization

– Physical Object

– Other

• The subject of detonate (Person)

– Man, member, person, suspect, terrorist

• The object of detonate (Physical Object)

– Dynamite, bomb, truck, explosive

• Only cluster events with the same type.


Learned Templates

Learned Template

explode, detonate, blow up, explosion, damage, cause…

Person: X detonate, X blow up, X plant, X hurl, X stage

Phys Object: destroy X, damage X, explode at X, throw at X, …

Phys Object: explode X, hurl X, X cause, X go off, plant X, …

Person: X raid, X question, X investigate, X defuse, X arrest


Learned Templates

Bombing

explode, detonate, blow up, explosion, damage, cause…

Perpetrator:

Person who detonates, blows up, plants, hurls, stages, is detained, is suspected, is blamed on, launches

Target:

Object that is damaged, is destroyed, is exploded at, is thrown at, is hit, is struck

Instrument:

Object that is exploded, explodes, is hurled, causes, goes off, is planted, damages, is set off, is defused

Police:

Person who raids, questions, investigates, defuses, arrests, …


Learned Templates

Kidnapping

kidnap, release, abduct, kidnapping, board

Perpetrator:

Person who releases, kidnaps, abducts, ambushes, holds, forces, captures, frees

Victim:

Person who is kidnapped, is released, is freed, escapes, disappears, travels, is harmed


New Templates

Elections

choose, favor, turns out, pledges, unites, blame, deny…

Voter: Person who chooses, is intimidated, favors, is appealed to, turns out

Government: Org. that authorizes, is chosen, blames, denies

Candidate: Person who resigns, unites, advocates, manipulates, pledges, is blamed

Smuggling

smuggle, transport, seize, confiscate, detain, capture…

Perpetrator: Person who smuggles, is seized from, is captured, is detained

Police: Person who raids, seizes, captures, confiscates, detains, investigates

Instrument: Object that is smuggled, is seized, is confiscated, is transported


Evaluate Templates

Templates:
1. Attack
2. Bombing
3. Kidnapping
4. Arson

Roles: Perpetrator, Victim, Target, Instrument, ??, Police

Precision: 14 of 16 (88%)

Recall: 12 of 13 (92%)

Induction Summary

1. Learned the original templates.

– Attack, Bombing, Kidnapping, Arson

2. Learned a new role (Police)

3. Learned new structures, not annotated by humans.

– Elections, Smuggling

• Now we can perform the standard extraction task.


Extraction Approach

Kidnapping

kidnap, release, abduct, kidnapping, board

Perpetrator: X kidnap, X release, X abduct

Victim: kidnap X, release X, kidnapping of X, release of X, X’s release

They announced the initial release of the villagers last weekend.
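Once a template's roles are lists of syntactic patterns, extraction can be sketched as pattern lookup. This assumes a hypothetical upstream step that maps each argument in a parsed sentence to a pattern string such as `"release of X"`; the dictionary copies the Kidnapping patterns from this slide (with a straight apostrophe), and `extract` is an illustrative name, not the original system's function.

```python
# Hand-copied version of the learned Kidnapping template patterns.
KIDNAPPING = {
    "Perpetrator": {"X kidnap", "X release", "X abduct"},
    "Victim": {"kidnap X", "release X", "kidnapping of X", "release of X", "X's release"},
}


def extract(template, matches):
    """Fill template roles from (pattern, head word) matches in a document;
    a head is added to every role whose pattern set contains its pattern."""
    filled = {role: [] for role in template}
    for pattern, head in matches:
        for role, patterns in template.items():
            if pattern in patterns:
                filled[role].append(head)
    return filled


# Matches a parser might produce for:
# "They announced the initial release of the villagers last weekend."
matches = [("X announce", "they"), ("release of X", "villagers")]
print(extract(KIDNAPPING, matches))
```

"X announce" is not a Kidnapping pattern, so "they" is ignored, while "release of X" fills the Victim role with "villagers".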


Evaluation

• Training: 1300 documents

– Learned template structure

– Developed extraction algorithm

• Testing: 200 documents

– Extracted slot fillers (perpetrator, target, etc.)

• Metric: F1-Score

– Standard metric; balances precision and recall

$$F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$
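The F1 formula as a small Python function, with a guard for the case where precision and recall are both zero:

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Role-matching precision (14 of 16) and recall (12 of 13) from the template evaluation.
print(round(f1(14 / 16, 12 / 13), 3))
```

Those template-evaluation numbers combine to an F1 of about 0.90; because F1 is a harmonic mean, it stays close to the lower of the two scores.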


MUC Example

4. INCIDENT: TYPE               BOMBING
7. INCIDENT: INSTRUMENT TYPE    BOMB: "BOMB"
9. PERP: INDIVIDUAL ID
10. PERP: ORGANIZATION ID       "ZARATE WILLKA LIBERATION ARMED FORCES"
12. PHYS TGT: ID                "GOVERNMENT HOUSE"
19. HUM TGT: DESCRIPTION        "CABINET MEMBERS" / "CABINET MINISTERS"

Two bomb attacks were carried out in La Paz last night, one in front of Government House following the message to the nation over a radio and television network by president Jaime Paz Zamora.
The explosions did not cause any serious damage but the police were mobilized, fearing a wave of attacks.
The self-styled "Zarate Willka Liberation Armed Forces" sent simultaneous written messages to the media, calling on the people to oppose the government…
The second attack occurred at 2335 (0335 GMT on 12 January), just after the cabinet members had left Government House where they had listened to the presidential message.
A bomb was placed outside Government House in the parking lot that is used by cabinet ministers.
The police placed the bomb in a nearby flower bed, where it went off.
The shock wave shattered some windows in Government House and street lamps in the Plaza Murillo.
As of 0500 GMT today, the police had received reports of two other explosions in two La Paz neighborhoods, but these have not yet been confirmed.


F1 Scores of Extraction Systems

• Rule-based systems (Chinchor et al. 93, Rau et al. 92)
• Supervised systems (Patwardhan/Riloff 2007, 2009; Huang 2011)
• Event Schemas Unsupervised (2011)

[Figure: bar chart of slot-filling F1 (0 to 1.0) for the rule-based, supervised, and unsupervised systems, with a marker labeled "Latest performance".]

Summary

• Extracted without knowing what needed to be extracted.

• 0.40 F1, within range of more-informed approaches

• The first results on MUC-3 without schema knowledge


Shortly after…

• More Progress!

– Jans et al. (EACL 2012)

– Balasubramanian et al. (NAACL 2013)

– Pichotta and Mooney (EACL 2014)

• Semantic Role Labeling

– Gerber and Chai (ACL 2010), Best Paper Award

• Coreference

– Irwin et al. (CoNLL 2011), Rahman and Ng (EMNLP 2012)

• Generative Models

– Cheung et al. (NAACL 2013)
– Bamman et al. (ACL 2013)
– Chambers (EMNLP 2013)
– Nguyen et al. (ACL 2015)


Coming up

• Generative Models

– Cheung et al. (NAACL 2013)
– Bamman et al. (ACL 2013)
– Chambers (EMNLP 2013)
– Nguyen et al. (ACL 2015)
