
Semiparametric Estimation of Partially Linear Varying Coefficient Models with Time Trend and Nonstationary Regressors

Yichen Gao∗

Zheng Li†

Zhongjian Lin‡

August 18, 2014

Abstract

This paper extends the partially linear varying coefficient model to contain a time trend and nonstationary variables as regressors. We use the profile likelihood method to estimate both the time trend coefficient in the linear component and the functional coefficients in the nonlinear component, and we establish their asymptotic distributions. Monte Carlo simulations are conducted to investigate the finite sample performance of the proposed estimators.

Keywords: Partially linear varying coefficient model; time trend; integrated time series.

JEL: C14; C22; C51.

∗ Corresponding Author, International School of Economics and Management, Capital University of Economics and Business, Beijing 100070, China, yichengao@gmail.com

† Department of Economics, Texas A&M University, College Station, TX 77843, lzrain@tamu.edu

‡ Department of Economics, Emory University, Atlanta, GA 30322, zhongjian.lin@emory.edu


1 Introduction

Recently, econometricians and statisticians have paid increasing attention to nonparametric regression models with non-stationary covariates. Karlsen, Myklebust and Tjøstheim (2007), Wang and Phillips (2009a,b), Kasparis and Phillips (2012) and Liang, Lin and Hsiao (2013) consider nonparametric cointegration models of the form

$$y_t = g(x_t) + u_t, \quad t = 1, \ldots, n, \tag{1}$$

where $x_t$ follows a driftless unit root I(1) process, the functional form of $g(\cdot)$ is not specified, and $u_t$ is a zero-mean stationary I(0) error term. Model (1) extends the standard nonparametric regression model with independent or weakly dependent data to the case of strongly dependent nonstationary data. One restrictive feature of model (1) is that the dimension of $x_t$ cannot be greater than two. When the dimension of $x_t$ is greater than two, the unit root process becomes non-recurrent, so the number of data points falling inside a shrinking interval $[x - h, x + h]$, where $h = h_n \to 0$ as $n \to \infty$, does not increase to $\infty$ as $n \to \infty$. Hence, the nonparametric kernel method cannot consistently estimate $g(x)$ if the dimension of $x$ is greater than two.

To avoid the above problem while still allowing for flexible functional forms in a nonparametric regression model with I(1) covariates, Cai, Li and Park (2009) and Xiao (2009) suggest using a varying coefficient framework to model the relationship among non-stationary variables:

$$y_t = x_t'\beta(z_t) + u_t, \quad t = 1, \ldots, n, \tag{2}$$

where $x_t$ is a $d \times 1$ vector of driftless I(1) processes, $z_t$ and $u_t$ are scalar weakly dependent stationary I(0) variables, and the functional form of $\beta(\cdot)$ is unspecified. Sun and Li (2011) study the asymptotic behavior of the bandwidth $h$ selected by the data-driven least squares cross-validation method. Sun, Cai and Li (2013) further extend model (2) to the case where both $x_t$ and $z_t$ are driftless I(1) variables.

Li et al. (2013) consider a partially linear varying coefficient model:

$$y_t = x_{1t}'\gamma + x_{2t}'\beta(z_t) + u_t, \quad t = 1, \ldots, n, \tag{3}$$

where $x_{1t}$ and $x_{2t}$ are $d_1 \times 1$ and $d_2 \times 1$ vectors of I(1) non-stationary variables, $\gamma$ is a $d_1 \times 1$ vector of constant parameters, $\beta(z_t)$ is a $d_2 \times 1$ vector function of $z_t$, and $z_t$ and $u_t$ are scalar stationary variables. Li et al. (2013) suggest using a $q$th-order local polynomial method to estimate $\gamma$ and $\beta(z)$. They show that $\gamma$ can be estimated at the parametric rate $O_p(n^{-1})$, while the estimator of $\beta(z)$ has the nonparametric rate of convergence $O_p\big(h^{q+1} + (n\sqrt{h})^{-1}\big)$.

Juhl and Xiao (2005) consider the following partially linear model with an integrated covariate:

$$y_t = \gamma y_{t-1} + g(x_t) + u_t, \quad t = 1, \ldots, n, \tag{4}$$

where $\gamma$ equals one or is very close to one, and $x_t$ and $u_t$ are stationary I(0) variables.

One common feature of the above works is that all the non-stationary cointegrated variables (i.e., the I(1) covariates) are driftless unit root processes. Hence, these models cannot capture the time trend behavior that is often observed in macroeconomic and financial variables.

Juhl and Xiao (2009) consider a nonparametric time trend model, but they normalize the time trend variable as $\tau = \tau_n = t/n$ for $t = 1, \ldots, n$, so the regression model has the form

$$y_t = g(\tau_n) + u_t = g(t/n) + u_t, \quad t = 1, \ldots, n, \tag{5}$$

where $g(\cdot)$ is a smooth function of unspecified form. Since $\tau$ lies inside the unit interval $[0, 1]$ and $g(\cdot)$ is smooth, $\sup_{\tau \in [0,1]} |g(\tau)| \le C$ for some finite positive constant $C$. Hence, model (5) cannot be used to model unbounded upward trending behavior.

Liang and Li (2012) consider a time trend varying coefficient model of the form

$$y_t = t\gamma + x_t'\beta(z_t) + u_t, \quad t = 1, \ldots, n, \tag{6}$$

where $\gamma$ is an unknown constant parameter so that $y_t$ is a unit root or a near unit root process, $\beta(z)$ is a smooth but otherwise unspecified function of $z$, and $x_t$, $z_t$ and $u_t$ are weakly dependent stationary variables. Although Liang and Li (2012) explicitly allow for a time trend variable in their semiparametric varying coefficient model, their model does not contain any non-stationary I(1) covariates.

Li and Li (2013) consider a time trend varying coefficient model of the following form:

$$y_t = t\gamma(z_t) + x_t'\beta(z_t) + u_t, \quad t = 1, \ldots, n, \tag{7}$$

where $\gamma(z)$ and $\beta(z)$ are both smooth, unspecified functions. Li and Li (2013) derive rate of convergence results. Let $\hat\gamma(z)$ and $\hat\beta(z)$ denote the local constant kernel estimators of $\gamma(z)$ and $\beta(z)$, respectively. Li and Li (2013) establish that $\hat\beta(z) - \beta(z) = O_p(\sqrt{nh^2} + \sqrt{h})$ and $\hat\gamma(z) - \gamma(z) = O_p(\sqrt{nh^2} + \sqrt{h})$. However, they do not provide the asymptotic distributions of $\hat\gamma(z)$ and $\hat\beta(z)$.

In this paper we consider the problem of estimating a time trend partially linear varying coefficient model of the form given in (6), except that, unlike in model (6), we allow $x_t$ to be a non-stationary I(1) regressor. Specifically, we extend the partially linear varying coefficient model to contain a time trend as the linear component and non-stationary variables with varying coefficient functions. We show that the estimator of the parametric component has the same rate of convergence as in the case where the function $\beta(z)$ is known, and we derive its asymptotic distribution. Once the fast rate of convergence of the parametric component estimator is established, the asymptotic behavior of the estimator of the nonparametric function $\beta(z)$ follows from the existing work of Cai, Li and Park (2009) and Xiao (2009).

The remainder of the paper is organized as follows. In Section 2, we consider the problem of estimating a semiparametric time trend varying coefficient model. We use the profile likelihood method to estimate both the time trend coefficient and the functional coefficients of the nonparametric component, and we establish their asymptotic distributions. Section 3 reports Monte Carlo simulation results on the finite sample performance of the proposed estimators. We conclude the paper in Section 4. Mathematical proofs are relegated to the Appendix.

2 The Model, Estimators and Their Asymptotic Distributions

We consider the following partially linear varying coefficient model, in which a time trend component enters the model linearly while some I(1) regressors enter the model semiparametrically, in the sense that their coefficients are smooth functions of a stationary variable:

$$y_t = t\gamma + x_t'\beta(z_t) + u_t, \quad t = 1, \ldots, n, \tag{8}$$

where $t$ is the time trend, $\gamma$ is an unknown constant coefficient associated with the time trend variable, and $x_t$ is a $p$-dimensional vector of I(1) regressors. That is, $x_t = x_{t-1} + v_t$, with $v_t$ being a $p \times 1$ vector of zero-mean, weakly dependent I(0) variables. The prime denotes the transpose of a matrix. $\beta(\cdot)$ is a $p$-dimensional unspecified varying coefficient function, $z_t$ is a scalar I(0) variable¹, and $u_t$ is a stationary error term satisfying $E(u_t \mid x_t, z_t) = 0$.

We propose estimating the unknown parameter $\gamma$ and the unknown function $\beta(\cdot)$ by the profile least squares method. First, treating $\gamma$ as if it were known, we rewrite (8) as

$$y_t - t\gamma = x_t'\beta(z_t) + u_t. \tag{9}$$

Then we estimate $\beta(z_t)$ by a $q$th-order local polynomial kernel method. Let $e \equiv (I_p, N_{p \times pq})$ and $G_h \equiv \mathrm{diag}(1, h, \ldots, h^q) \otimes I_p$, where $h$ is the smoothing parameter, $I_p$ is the $p \times p$ identity matrix, $N_{p \times pq}$ is the $p \times pq$ null matrix (a matrix whose elements are all zero), and $\otimes$ denotes the Kronecker product. Define

$$z_{st,h} \equiv (z_s - z_t)/h, \qquad K_{st} \equiv h^{-1}K(z_{st,h}), \qquad Q_{st} \equiv [1, (z_s - z_t), \ldots, (z_s - z_t)^q]',$$

where $K(\cdot)$ is a kernel function. Then the estimator of $\beta(z_t)$ is given by

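$$
\begin{aligned}
\tilde\beta(z_t) &= e\Big[\sum_{s=1}^n K_{st}(Q_{st}Q_{st}' \otimes x_s x_s')\Big]^{-1}\sum_{s=1}^n K_{st}(Q_{st} \otimes x_s)(y_s - s\gamma)\\
&= eG_h^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}Q_{st}' \otimes x_s x_s')G_h^{-1}\Big]^{-1}\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st} \otimes x_s)(y_s - s\gamma)\\
&= e\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}Q_{st}' \otimes x_s x_s')G_h^{-1}\Big]^{-1}\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st} \otimes x_s)(y_s - s\gamma)\\
&= A_{1t} - A_{2t}\gamma,
\end{aligned}
\tag{10}
$$

where

$$A_{1t} = eS_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st} \otimes x_s)y_s\Big], \qquad A_{2t} = eS_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st} \otimes x_s)s\Big],$$

$$S_t = \frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}Q_{st}' \otimes x_s x_s')G_h^{-1}.$$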
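The matrices $e$ and $G_h$ only select and rescale blocks, which is why identities such as $eG_h^{-1} = e$ and $e(Q_{st} \otimes x_s) = x_s$ (used in the Appendix) hold. The sketch below checks both numerically for an arbitrary illustrative choice of $p = 2$, $q = 2$, $h = 0.3$, $z_s - z_t = 0.7$ and $x_s = (1.5, -2.0)'$. The helper names `kron`, `matmul` and `identity` are ours, and matrices are plain lists of rows.

```python
def kron(a, b):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]

def matmul(a, b):
    """Ordinary matrix product of two list-of-rows matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def identity(m):
    return [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]

p, q, h = 2, 2, 0.3
# e = (I_p, N_{p x pq}) is a p x p(q+1) selection matrix.
e = [row + [0.0] * (p * q) for row in identity(p)]
# G_h^{-1} = diag(1, 1/h, ..., 1/h^q) kron I_p.
scale_inv = [[h ** (-i) if i == j else 0.0 for j in range(q + 1)] for i in range(q + 1)]
g_inv = kron(scale_inv, identity(p))
# Q_st kron x_s for an illustrative z_s - z_t and x_s.
dz, x_s = 0.7, [[1.5], [-2.0]]
q_st = [[dz ** i] for i in range(q + 1)]

print(matmul(e, g_inv) == e)              # e G_h^{-1} = e
print(matmul(e, kron(q_st, x_s)) == x_s)  # e (Q_st kron x_s) = x_s
```

Both lines print `True`: $e$ keeps only the first $p$ coordinates, and these are untouched by $G_h^{-1}$ and equal to $x_s$ in $Q_{st} \otimes x_s$.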

¹ It is straightforward to extend the scalar $z_t$ to the multivariate case. For notational simplicity, we only consider scalar $z_t$ in this paper.

Note that $\tilde\beta(z_t)$ in (10) is not a feasible estimator of $\beta(z_t)$ because it depends on the unknown parameter $\gamma$. To obtain feasible estimators of $\gamma$ and $\beta(\cdot)$, we add and subtract $x_t'\tilde\beta(z_t)$ in (8); rearranging terms then gives

$$y_t - x_t'A_{1t} = (t - x_t'A_{2t})\gamma + \varepsilon_t, \tag{11}$$

where $\varepsilon_t = u_t + x_t'[\beta(z_t) - \tilde\beta(z_t)]$. The ordinary least squares (OLS) estimator of $\gamma$ based on (11) is given by

$$\hat\gamma = \Big[\sum_{t=1}^n (t - x_t'A_{2t})^2\Big]^{-1}\sum_{t=1}^n (t - x_t'A_{2t})(y_t - x_t'A_{1t}). \tag{12}$$

Note that $\hat\gamma$ defined in (12) is a feasible estimator of $\gamma$ because $A_{1t}$ and $A_{2t}$ depend only on observed variables and are therefore computable. Replacing $\gamma$ by $\hat\gamma$ in (10), one obtains a feasible local polynomial estimator of $\beta(z_t)$.
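The two-step construction in (10) to (12) can be sketched compactly. This is a minimal illustration, not the authors' code, under simplifying assumptions: scalar $x_t$ ($p = 1$), local linear smoothing ($q = 1$), an Epanechnikov kernel, and a hypothetical helper `simulate_dgp1` that draws from DGP1 of Section 3 with $x_0 = z_0 = 0$. Because $eG_h^{-1} = e$, the normalizations $1/n^2$ and $G_h$ (and the $h^{-1}$ in $K_{st}$) drop out of the first component, so $A_{1t}$ and $A_{2t}$ are computed as the intercepts of kernel-weighted regressions of $y_s$ and of $s$ on $(x_s, d_{st}x_s)$ with $d_{st} = (z_s - z_t)/h$.

```python
import random

def epanechnikov(u):
    # Bounded density with compact support, in the spirit of Assumption 5.
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0

def first_component(a11, a12, a22, b1, b2):
    # First element of [[a11, a12], [a12, a22]]^{-1} [b1, b2]. If the local
    # design is (numerically) singular, fall back to the local constant fit.
    det = a11 * a22 - a12 * a12
    if det <= 1e-10 * a11 * a22:
        return b1 / a11
    return (a22 * b1 - a12 * b2) / det

def profile_gamma(y, x, z, h):
    """Profile least squares estimate (12) for scalar x_t and q = 1."""
    n = len(y)
    a1, a2 = [], []
    for t in range(n):
        a11 = a12 = a22 = by1 = by2 = bs1 = bs2 = 0.0
        for s in range(n):
            d = (z[s] - z[t]) / h  # scaling the slope regressor leaves the intercept unchanged
            k = epanechnikov(d)
            if k == 0.0:
                continue
            time_s = s + 1  # the trend regressor s runs from 1 to n
            a11 += k * x[s] * x[s]
            a12 += k * d * x[s] * x[s]
            a22 += k * d * d * x[s] * x[s]
            by1 += k * x[s] * y[s]
            by2 += k * d * x[s] * y[s]
            bs1 += k * x[s] * time_s
            bs2 += k * d * x[s] * time_s
        a1.append(first_component(a11, a12, a22, by1, by2))  # A_{1t}
        a2.append(first_component(a11, a12, a22, bs1, bs2))  # A_{2t}
    num = den = 0.0
    for t in range(n):
        r = (t + 1) - x[t] * a2[t]        # t - x_t' A_{2t}
        num += r * (y[t] - x[t] * a1[t])  # (t - x_t' A_{2t})(y_t - x_t' A_{1t})
        den += r * r
    return num / den

def simulate_dgp1(n, gamma=1.0, seed=0):
    # Hypothetical helper: DGP1 of Section 3, assuming x_0 = z_0 = 0.
    rng = random.Random(seed)
    y, x, z = [], [], []
    xt = zt = 0.0
    for t in range(1, n + 1):
        xt += rng.gauss(0.0, 1.0)
        zt = 0.5 * zt + rng.uniform(-1.0, 1.0)
        y.append(t * gamma + xt * (1.0 + zt) + rng.gauss(0.0, 1.0))
        x.append(xt)
        z.append(zt)
    return y, x, z

y, x, z = simulate_dgp1(400, seed=1)
gamma_hat = profile_gamma(y, x, z, h=0.3)
print(gamma_hat)
```

With $n = 400$ the estimate should be very close to $\gamma = 1$, in line with the $n^{-3/2}$ rate in Theorem 1. The bandwidth $h = 0.3$ is an arbitrary illustrative value, not the rule used in Section 3, and the $O(n^2)$ double loop is chosen for transparency rather than speed.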

The use of local polynomial estimation with $q \ge 1$ is needed to ensure that $\hat\gamma$ attains the parametric rate $\hat\gamma - \gamma = O_p(n^{-3/2})$. When estimating $\beta(z)$, however, there is no need to use a high-order local polynomial method: local linear or even local constant methods can be used to estimate $\beta(z)$ consistently. We suggest the local linear method because, as shown in Sun and Li (2011), for a varying coefficient model with an I(1) regressor $x_t$, the local linear estimator of $\beta(z)$ has a faster rate of convergence than the local constant estimator. The local linear estimator of $\beta(z)$, together with an estimator of the derivative $\beta^{(1)}(z) = d\beta(z)/dz$, is given by

$$
\begin{pmatrix}\hat\beta(z)\\ \hat\beta^{(1)}(z)\end{pmatrix}
= \left[\sum_{s=1}^n \begin{pmatrix} x_s x_s' & (z_s - z)x_s x_s'\\ (z_s - z)x_s x_s' & (z_s - z)^2 x_s x_s'\end{pmatrix} K_{h,sz}\right]^{-1}
\sum_{s=1}^n \begin{pmatrix} x_s\\ (z_s - z)x_s\end{pmatrix}(y_s - s\hat\gamma)K_{h,sz},
\tag{13}
$$

where $K_{h,sz} = h^{-1}K\big((z_s - z)/h\big)$. Before establishing the asymptotic distributions of $\hat\gamma$ and $\hat\beta(z)$, we need some regularity assumptions. Recall that $x_t = x_{t-1} + v_t$, where $v_t$ is a stationary, weakly dependent random vector process specified below. Also, let $\|\cdot\|$ denote the Euclidean norm.
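Once $\hat\gamma$ is available, (13) is a kernel-weighted least squares fit of $y_s - s\hat\gamma$ on $(x_s, (z_s - z)x_s)$. The sketch below is illustrative only and assumes scalar $x_t$, an Epanechnikov kernel, and a hypothetical simulator `simulate_dgp1` for DGP1 of Section 3 ($\beta(z) = 1 + z$, with $x_0 = z_0 = 0$). To stay self-contained it plugs in the true $\gamma = 1$ in place of $\hat\gamma$. The slope regressor is scaled by $1/h$ for numerical stability, and the derivative estimate is rescaled back at the end.

```python
import random

def epanechnikov(u):
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0

def local_linear_beta(y, x, z, gamma_hat, z0, h):
    """Return (beta_hat(z0), beta_hat_1(z0)) from (13) for scalar x_s."""
    a11 = a12 = a22 = b1 = b2 = 0.0
    for s in range(len(y)):
        d = (z[s] - z0) / h                 # (z_s - z0) / h
        k = epanechnikov(d)                 # K_{h,sz} up to the common 1/h factor
        if k == 0.0:
            continue
        resid = y[s] - (s + 1) * gamma_hat  # y_s - s * gamma_hat, with s = 1, ..., n
        xx = k * x[s] * x[s]
        a11 += xx
        a12 += xx * d
        a22 += xx * d * d
        b1 += k * x[s] * resid
        b2 += k * d * x[s] * resid
    det = a11 * a22 - a12 * a12
    level = (a22 * b1 - a12 * b2) / det
    slope = (a11 * b2 - a12 * b1) / det / h  # undo the 1/h scaling of the slope
    return level, slope

def simulate_dgp1(n, gamma=1.0, seed=0):
    # Hypothetical helper: DGP1 of Section 3, assuming x_0 = z_0 = 0.
    rng = random.Random(seed)
    y, x, z = [], [], []
    xt = zt = 0.0
    for t in range(1, n + 1):
        xt += rng.gauss(0.0, 1.0)
        zt = 0.5 * zt + rng.uniform(-1.0, 1.0)
        y.append(t * gamma + xt * (1.0 + zt) + rng.gauss(0.0, 1.0))
        x.append(xt)
        z.append(zt)
    return y, x, z

y, x, z = simulate_dgp1(400, seed=2)
beta0, beta0_prime = local_linear_beta(y, x, z, gamma_hat=1.0, z0=0.0, h=0.5)
print(beta0, beta0_prime)
```

For DGP1 the true values at $z = 0$ are $\beta(0) = 1$ and $\beta^{(1)}(0) = 1$. Because $\beta$ is linear, the local linear fit has no smoothing bias here, so the remaining error comes from $u_t$ alone.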

Assumption 1. Let $\nu_t = (v_t', u_t, z_t)'$. $\{\nu_t\}$ is a strictly stationary $\alpha$-mixing process with mixing coefficients $\alpha(m) = O(\rho^m)$ for some $0 < \rho < 1$, and $E[\|\nu_t\|^4] < \infty$. Also, let $\xi_t = (v_t', u_t)'$. Then $\xi_t$ has zero mean and $\mathrm{Var}(\xi_t) = \Sigma$, and $n^{-1/2}\sum_{t=1}^{[nr]}\xi_t \Rightarrow B_{v,u}(r) = (B_v(r)', B_u(r))'$, where $[a]$ denotes the integer part of $a$, $\Rightarrow$ denotes weak convergence, and $B_{v,u}(r)$ is a Brownian motion with covariance matrix $\Sigma$.

Assumption 2. Let $(u_t, \mathcal{F}_{nt}, 1 \le t \le n)$ be a martingale difference sequence with $E(u_t \mid \mathcal{F}_{n,t-1}) = 0$ a.s. and $E(u_t^2 \mid \mathcal{F}_{n,t-1}) = \sigma_u^2$ a.s., where $\mathcal{F}_{nt} = \sigma\{x_{s_1}, z_{s_1}, u_{s_2} : 1 \le s_1 \le n, 1 \le s_2 \le t\}$.

Assumption 3. The function $\beta(z)$ has continuous $(q+1)$th-order derivatives for $z \in S_z$, where $S_z$ is the compact support of $z_t$.

Assumption 4. The density function of $z_t$, $f(z)$, is positive and bounded away from zero and infinity on $S_z$, and has a continuous second-order derivative on $S_z$. Furthermore, the joint density function of $(z_t, z_s)$ is bounded for all $s > t$.

Assumption 5. $K(\cdot)$ is a bounded, continuous probability density function with compact support.

Assumption 6. $nh^{q+1} \to 0$ and $nh/\ln n \to \infty$ as $n \to \infty$.

We present the asymptotic distributions of $\hat\gamma$ and $\hat\beta(z)$ below and defer the proofs to the Appendix.

Theorem 1. Under Assumptions 1 to 6, we have

$$n^{3/2}(\hat\gamma - \gamma) \xrightarrow{d} W_1^{-1}W_2,$$

where

$$W_1 = \frac{1}{3} - \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr$$

and

$$W_2 = \int_0^1 r\,dB_u(r) - \int_0^1 B_x(r)'\,dB_u(r)\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr.$$

Theorem 1 shows that $\hat\gamma - \gamma = O_p(n^{-3/2})$, which is the same fast rate of convergence as in a parametric time trend model (i.e., when $\beta(z_t)$ is known). This result is expected: it is well known that, in semiparametric models, the parametric components can usually be estimated at the same rate of convergence as in the corresponding parametric models (where the nonparametric functions are known).
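To get a feel for the limit in Theorem 1, one can simulate $W_1$ and $W_2$ by replacing the Brownian motions with scaled Gaussian random walks. The sketch below is only an illustration under assumptions that the theorem does not impose: $p = 1$, and $B_x$ and $B_u$ are independent standard Brownian motions (so $\sigma_u = 1$). Integrals are approximated by left-endpoint sums (with the discrete analogue $\sum_i r_i^2\,\Delta r$ in place of $1/3$), and stochastic integrals by Itô sums. In this special case, conditional on $B_x$, $W_2$ is normal with mean zero and variance $\int_0^1 (r - cB_x(r))^2 dr = W_1$, where $c = [\int_0^1 B_x(r)^2 dr]^{-1}\int_0^1 rB_x(r)\,dr$. The limit $W_1^{-1}W_2$ is therefore mixed normal, and $W_2/\sqrt{W_1}$ is standard normal; the discretized draws inherit this property exactly.

```python
import math
import random

def draw_w1_w2(n_steps, rng):
    """One draw of (W1, W2) from Theorem 1 with p = 1 and independent B_x, B_u."""
    dr = 1.0 / n_steps
    sd = math.sqrt(dr)
    bx = 0.0
    s_rr = s_rb = s_bb = 0.0  # approximations of int r^2, int r B_x, int B_x^2
    grid = []                 # (r, B_x(r), increment of B_u) at left end-points
    for i in range(n_steps):
        r = i * dr
        s_rr += r * r * dr
        s_rb += r * bx * dr
        s_bb += bx * bx * dr
        grid.append((r, bx, rng.gauss(0.0, sd)))
        bx += rng.gauss(0.0, sd)  # B_x increment, independent of the B_u increment
    c = s_rb / s_bb
    w1 = s_rr - s_rb * c
    w2 = sum((r - b * c) * du for r, b, du in grid)  # int r dB_u - c * int B_x dB_u
    return w1, w2

rng = random.Random(2014)
draws = [draw_w1_w2(300, rng) for _ in range(2000)]
limit_draws = [w2 / w1 for w1, w2 in draws]              # draws of W1^{-1} W2
standardized = [w2 / math.sqrt(w1) for w1, w2 in draws]  # approximately N(0, 1)
mean = sum(standardized) / len(standardized)
var = sum((v - mean) ** 2 for v in standardized) / (len(standardized) - 1)
print(round(mean, 3), round(var, 3))
```

The printed mean and variance should be close to 0 and 1. Under correlated $B_x$ and $B_u$, which the paper allows, this mixed normal shortcut no longer applies and the covariance $\Sigma$ would have to be simulated explicitly.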

With Theorem 1 in hand, the asymptotic distribution of $\hat\beta(z)$ is easy to establish. We first introduce some notation. Let $\beta^{(2)}(z) = d^2\beta(z)/dz^2$, $\mu_2 = \int v^2K(v)\,dv$ and $\nu_0 = \int K^2(v)\,dv$. Also, let $S = \int_0^1 B_v(r)B_v(r)'\,dr$. The following theorem gives the asymptotic distribution of $\hat\beta(z)$.

Theorem 2. Under Assumptions 1 to 6, we have

$$n\sqrt{h}\Big(\hat\beta(z) - \beta(z) - \frac{h^2\mu_2\beta^{(2)}(z)}{2}\Big) \xrightarrow{d} MN\big(\Sigma_\beta(z)\big),$$

where $MN(\Sigma_\beta(z))$ is a mixed normal distribution with mean zero and conditional covariance matrix $\Sigma_\beta(z) = \sigma_u^2\nu_0S^{-1}/f(z)$. We report simulation results in the next section.
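The constants $\mu_2$ and $\nu_0$ in Theorem 2, and the moments $\kappa_j = \int v^jK(v)\,dv$ used in the Appendix, depend only on the kernel. As a small worked example, assume the Epanechnikov kernel $K(v) = 0.75(1 - v^2)$ on $[-1, 1]$; the paper does not fix a particular kernel. Exact integration gives $\mu_2 = 0.75(2/3 - 2/5) = 1/5$ and $\nu_0 = 0.5625(2 - 4/3 + 2/5) = 3/5$, and the sketch below checks these values with composite Simpson quadrature.

```python
def epanechnikov(v):
    # Epanechnikov kernel on [-1, 1] (an illustrative choice of K).
    return 0.75 * (1.0 - v * v) if abs(v) <= 1.0 else 0.0

def simpson(f, a, b, m=2000):
    """Composite Simpson rule on [a, b] with an even number m of subintervals."""
    step = (b - a) / m
    total = f(a) + f(b)
    for i in range(1, m):
        total += (4.0 if i % 2 else 2.0) * f(a + i * step)
    return total * step / 3.0

def kappa(j):
    """kappa_j = int v^j K(v) dv over the support of K."""
    return simpson(lambda v: v ** j * epanechnikov(v), -1.0, 1.0)

mu2 = kappa(2)                                            # mu_2 in Theorem 2
nu0 = simpson(lambda v: epanechnikov(v) ** 2, -1.0, 1.0)  # nu_0 in Theorem 2
print(round(mu2, 6), round(nu0, 6))  # 0.2 0.6
```

With this kernel, the asymptotic bias in Theorem 2 becomes $h^2\beta^{(2)}(z)/10$ and the conditional covariance becomes $0.6\,\sigma_u^2S^{-1}/f(z)$.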

3 Monte Carlo Simulations

In this section we use Monte Carlo simulations to investigate the finite sample performance of the proposed estimators $\hat\gamma$ and $\hat\beta(z_t)$. We consider the following data generating process:

$$y_t = t\gamma + x_t'\beta(z_t) + u_t,$$

where $\gamma = 1$, $x_t = x_{t-1} + v_t$ with $v_t$ i.i.d. $N(0, 1)$, and $u_t$ is i.i.d. $N(0, 1)$. We consider four choices of $\beta(\cdot)$:

DGP1: $\beta(z) = 1 + z$

DGP2: $\beta(z) = 1 + 0.5\sin(\pi z)$

DGP3 :

β ( z ) = e z = z2

DGP4: $\beta(z) = \Phi(z)$

where $\Phi(\cdot)$ is the standard normal cumulative distribution function. Liang and Li (2012) used DGP1 and DGP2 in their simulations, while DGP3 is used by Li and Li (2013). The variable $z_t$ follows an AR(1) process, $z_t = 0.5z_{t-1} + \varepsilon_t$, with $\varepsilon_t$ i.i.d. uniform on $[-1, 1]$. The sequences $\{v_t\}$, $\{\varepsilon_t\}$ and $\{u_t\}$ are mutually independent. The smoothing parameter is $h = c_0 z_{sd} n^{-1/5}$, where $c_0 = 0.75, 1, 1.25$ and $1.5$, and $z_{sd}$ is the sample standard deviation of $\{z_t\}_{t=1}^n$. We consider sample sizes $n = 100, 200, 400$ and $800$, and the number of replications is $M = 2000$. The mean squared errors (MSE) are calculated as follows:

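$$\mathrm{MSE}_{\hat\gamma} = \frac{1}{M}\sum_{j=1}^M(\hat\gamma_j - \gamma)^2, \qquad \mathrm{MSE}_{\hat\beta} = \frac{1}{M}\sum_{j=1}^M\frac{1}{n}\sum_{t=1}^n\big[\hat\beta_j(z_t) - \beta(z_t)\big]^2,$$

where $j$ refers to the $j$th simulation replication.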
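The simulation design and the two MSE formulas can be sketched as follows. These are hypothetical helpers, not the authors' code. They assume starting values $x_0 = z_0 = 0$ (not stated in the paper) and write $\Phi(z) = \tfrac{1}{2}[1 + \mathrm{erf}(z/\sqrt{2})]$ for DGP4. DGP3 is omitted because its formula is not legible in this copy of the paper. The MSE functions take the estimates from any estimator, for example the local linear or local constant fits.

```python
import math
import random
import statistics

# beta(.) for the DGPs of Section 3; DGP3 is omitted (formula not legible here).
BETA = {
    "DGP1": lambda z: 1.0 + z,
    "DGP2": lambda z: 1.0 + 0.5 * math.sin(math.pi * z),
    "DGP4": lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0))),  # Phi(z)
}

def simulate(n, beta, rng, gamma=1.0):
    """Draw (y, x, z) from y_t = t*gamma + x_t*beta(z_t) + u_t; x_0 = z_0 = 0 assumed."""
    y, x, z = [], [], []
    xt = zt = 0.0
    for t in range(1, n + 1):
        xt += rng.gauss(0.0, 1.0)               # x_t = x_{t-1} + v_t, v_t ~ N(0, 1)
        zt = 0.5 * zt + rng.uniform(-1.0, 1.0)  # z_t = 0.5 z_{t-1} + eps_t
        y.append(t * gamma + xt * beta(zt) + rng.gauss(0.0, 1.0))
        x.append(xt)
        z.append(zt)
    return y, x, z

def bandwidth(z, c0):
    """h = c0 * z_sd * n^(-1/5), with z_sd the sample standard deviation of z."""
    return c0 * statistics.stdev(z) * len(z) ** (-0.2)

def mse_gamma(gamma_hats, gamma=1.0):
    """MSE of gamma_hat over the M replications."""
    return sum((g - gamma) ** 2 for g in gamma_hats) / len(gamma_hats)

def mse_beta(beta_hat_paths, z_paths, beta):
    """MSE of beta_hat: beta_hat_paths[j][t] estimates beta(z_paths[j][t])."""
    total = 0.0
    for b_hat, z in zip(beta_hat_paths, z_paths):
        total += sum((b - beta(zt)) ** 2 for b, zt in zip(b_hat, z)) / len(z)
    return total / len(beta_hat_paths)

rng = random.Random(7)
y, x, z = simulate(200, BETA["DGP2"], rng)
h = bandwidth(z, c0=1.0)
print(round(h, 4))
```

A full replication loop would call `simulate` $M$ times for each $(c_0, n)$ pair, estimate $\gamma$ and $\beta(\cdot)$ on each draw, and pass the collected estimates to `mse_gamma` and `mse_beta`.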

When estimating $\gamma$, we use the local linear ($q = 1$) kernel method. Given $\hat\gamma$, we estimate $\beta(\cdot)$ using both the local constant (LC) and local linear (LL) methods, because both methods give consistent estimates of $\beta(\cdot)$. However, as shown in Sun and Li (2011), $\mathrm{MSE}_{\hat\beta_{LL}} = O\big((n^2h)^{-1} + h^4\big) = O(n^{-8/5})$ if $h \sim n^{-2/5}$, and $\mathrm{MSE}_{\hat\beta_{LC}} = O\big((n^2h)^{-1} + h/n\big) = O(n^{-3/2})$ if $h \sim n^{-1/2}$. Hence, $\hat\beta_{LL}(\cdot)$ has a faster rate of convergence than $\hat\beta_{LC}(\cdot)$.
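The bandwidth rates above come from equating the exponents of the variance and squared-bias terms. Writing $h = n^{-a}$, the variance term $(n^2h)^{-1}$ is $n^{-2+a}$, the local linear bias term $h^4$ is $n^{-4a}$, and the local constant term $h/n$ is $n^{-1-a}$. The short sketch below solves for $a$ with exact fractions; the function name `balance` is ours.

```python
from fractions import Fraction

def balance(term1, term2):
    """Each term is (c0, c1), i.e. of order n**(c0 + c1 * a) when h = n**(-a).
    Return the a that equates the two exponents and the common MSE exponent."""
    (c0, c1), (d0, d1) = term1, term2
    a = Fraction(d0 - c0, c1 - d1)
    return a, c0 + c1 * a

variance = (-2, 1)  # (n^2 h)^{-1} = n^{-2 + a}
bias_ll = (0, -4)   # h^4 = n^{-4a}
bias_lc = (-1, -1)  # h / n = n^{-1 - a}

a_ll, rate_ll = balance(variance, bias_ll)
a_lc, rate_lc = balance(variance, bias_lc)
print(a_ll, rate_ll)  # 2/5 -8/5
print(a_lc, rate_lc)  # 1/2 -3/2
```

As a consistency check on the tables below, the ratio of the $n^{8/5}$ and $n^{3/2}$ columns for $\hat\beta_{LL}$ should equal $n^{1/10}$. For example, $100^{1/10} \approx 1.585$, which matches $96.00/60.57$ in the first row of Table 1.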

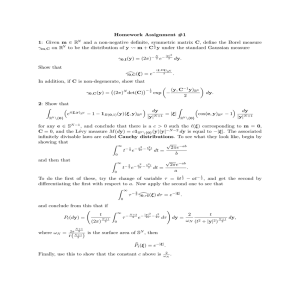

Tables 1 and 2 report the Monte Carlo simulation results for the four DGPs. We use $n^3\,\mathrm{MSE}_{\hat\gamma}$ and $n^{8/5}\,\mathrm{MSE}_{\hat\beta_{LL}}$ to verify the rates of convergence of $\hat\gamma$ and $\hat\beta_{LL}$ derived in Theorems 1 and 2 in Section 2. The values of $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LC}}$ in the last column of both tables are also reported to compare the convergence rates of the local linear and local constant estimators. To facilitate the comparison of $\mathrm{MSE}_{\hat\beta_{LL}}$ and $\mathrm{MSE}_{\hat\beta_{LC}}$, we also report $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LL}}$.

From the third and fourth columns of Tables 1 and 2, we find that the simulation results tend to stabilize when $n$ is large. This numerically confirms the derived rates of convergence of $\mathrm{MSE}_{\hat\gamma}$ and $\mathrm{MSE}_{\hat\beta_{LL}}$; specifically, $\mathrm{MSE}_{\hat\gamma} = O(n^{-3})$ and $\mathrm{MSE}_{\hat\beta_{LL}} = O(n^{-8/5})$.

Also, the numbers in the sixth column support the $n^{-3/2}$ rate of convergence of the MSE of the local constant estimator. Finally, the fifth and sixth columns allow us to compare the MSEs of the local linear and local constant estimators. The results are mixed: in some cases the local linear estimator has the smaller estimated MSE, while in others the local constant estimator does. Even though the local linear estimator has a faster rate of convergence than the local constant estimator, in finite samples the local constant estimator can have a smaller estimated MSE. For sufficiently large sample sizes, the local linear estimator will dominate the local constant estimator, as the asymptotic theory predicts.

DGP1 is a linear regression model; hence, as expected, the performance of the local linear method improves as $c_0$ increases. DGP2 exhibits the most nonlinearity among the four DGPs. The $c_0$ values considered in the simulations seem to be too large for the local constant estimator for this DGP, and the local linear estimator has a smaller MSE than the local constant estimator for DGP2. For DGP3, the local constant estimator has a smaller (larger) MSE than the local linear estimator for small (large) values of $c_0$ (or $h$). The normal cumulative distribution function in DGP4 is monotone, and for this DGP the local constant estimator has a smaller MSE than the local linear estimator for the sample sizes we considered.

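Table 1: Monte Carlo Simulation Results

DGP1

| $c_0$ | $n$ | $n^3\,\mathrm{MSE}_{\hat\gamma}$ | $n^{8/5}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LC}}$ |
|---|---|---|---|---|---|
| 0.75 | 100 | 83.38 | 96.00 | 60.57 | 20.15 |
| 0.75 | 200 | 79.53 | 88.46 | 52.07 | 15.13 |
| 0.75 | 400 | 88.49 | 48.30 | 26.53 | 13.66 |
| 0.75 | 800 | 94.15 | 56.51 | 28.96 | 15.44 |
| 1 | 100 | 81.16 | 38.13 | 24.06 | 14.21 |
| 1 | 200 | 83.98 | 33.91 | 19.96 | 13.20 |
| 1 | 400 | 81.97 | 26.08 | 14.33 | 13.97 |
| 1 | 800 | 83.08 | 18.53 | 9.49 | 17.32 |
| 1.25 | 100 | 93.42 | 28.67 | 18.09 | 15.21 |
| 1.25 | 200 | 89.58 | 21.48 | 12.65 | 15.67 |
| 1.25 | 400 | 98.11 | 17.13 | 9.41 | 20.14 |
| 1.25 | 800 | 93.52 | 12.76 | 6.54 | 27.70 |
| 1.5 | 100 | 79.76 | 23.82 | 15.03 | 16.84 |
| 1.5 | 200 | 81.77 | 18.00 | 10.60 | 20.37 |
| 1.5 | 400 | 85.64 | 14.52 | 7.97 | 28.07 |
| 1.5 | 800 | 87.04 | 11.06 | 5.67 | 46.33 |

DGP2

| $c_0$ | $n$ | $n^3\,\mathrm{MSE}_{\hat\gamma}$ | $n^{8/5}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LC}}$ |
|---|---|---|---|---|---|
| 0.75 | 100 | 99.35 | 99.24 | 62.74 | 49.73 |
| 0.75 | 200 | 89.91 | 74.51 | 43.87 | 75.82 |
| 0.75 | 400 | 84.38 | 65.46 | 35.96 | 99.30 |
| 0.75 | 800 | 96.53 | 68.96 | 35.34 | 113.27 |
| 1 | 100 | 111.07 | 45.17 | 28.50 | 81.15 |
| 1 | 200 | 115.24 | 37.62 | 22.15 | 121.36 |
| 1 | 400 | 102.11 | 34.85 | 19.14 | 172.53 |
| 1 | 800 | 122.78 | 31.55 | 16.17 | 269.37 |
| 1.25 | 100 | 155.24 | 35.80 | 22.59 | 133.32 |
| 1.25 | 200 | 161.38 | 32.62 | 19.20 | 230.89 |
| 1.25 | 400 | 161.06 | 33.14 | 18.20 | 411.30 |
| 1.25 | 800 | 158.11 | 41.57 | 21.30 | 630.59 |
| 1.5 | 100 | 213.44 | 35.81 | 22.60 | 213.39 |
| 1.5 | 200 | 267.24 | 37.31 | 21.97 | 379.91 |
| 1.5 | 400 | 229.86 | 43.77 | 24.04 | 491.42 |
| 1.5 | 800 | 272.19 | 63.75 | 32.67 | 862.94 |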

Table 2: Monte Carlo Simulation Results

DGP3

| $c_0$ | $n$ | $n^3\,\mathrm{MSE}_{\hat\gamma}$ | $n^{8/5}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LC}}$ |
|---|---|---|---|---|---|
| 0.75 | 100 | 83.38 | 96.00 | 60.57 | 20.15 |
| 0.75 | 200 | 79.53 | 88.46 | 52.07 | 15.13 |
| 0.75 | 400 | 88.49 | 48.30 | 26.53 | 13.66 |
| 0.75 | 800 | 94.15 | 56.51 | 28.96 | 15.44 |
| 1 | 100 | 81.16 | 38.13 | 24.06 | 14.21 |
| 1 | 200 | 83.98 | 33.91 | 19.96 | 13.20 |
| 1 | 400 | 81.97 | 26.08 | 14.33 | 13.97 |
| 1 | 800 | 83.08 | 18.53 | 9.49 | 17.32 |
| 1.25 | 100 | 93.42 | 28.67 | 18.09 | 15.21 |
| 1.25 | 200 | 89.58 | 21.48 | 12.65 | 15.67 |
| 1.25 | 400 | 98.11 | 17.13 | 9.41 | 20.14 |
| 1.25 | 800 | 93.52 | 12.76 | 6.54 | 27.70 |
| 1.5 | 100 | 79.76 | 23.82 | 15.03 | 16.84 |
| 1.5 | 200 | 81.77 | 18.00 | 10.60 | 20.37 |
| 1.5 | 400 | 85.64 | 14.52 | 7.97 | 28.07 |
| 1.5 | 800 | 87.04 | 11.06 | 5.67 | 46.33 |

DGP4

| $c_0$ | $n$ | $n^3\,\mathrm{MSE}_{\hat\gamma}$ | $n^{8/5}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LL}}$ | $n^{3/2}\,\mathrm{MSE}_{\hat\beta_{LC}}$ |
|---|---|---|---|---|---|
| 0.75 | 100 | 99.35 | 99.24 | 62.74 | 49.73 |
| 0.75 | 200 | 89.91 | 74.51 | 43.87 | 75.82 |
| 0.75 | 400 | 84.38 | 65.46 | 35.96 | 99.30 |
| 0.75 | 800 | 96.53 | 68.96 | 35.34 | 113.27 |
| 1 | 100 | 111.07 | 45.17 | 28.50 | 81.15 |
| 1 | 200 | 115.24 | 37.62 | 22.15 | 121.36 |
| 1 | 400 | 102.11 | 34.85 | 19.14 | 172.53 |
| 1 | 800 | 122.78 | 31.55 | 16.17 | 269.37 |
| 1.25 | 100 | 155.24 | 35.80 | 22.59 | 133.32 |
| 1.25 | 200 | 161.38 | 32.62 | 19.20 | 230.89 |
| 1.25 | 400 | 161.06 | 33.14 | 18.20 | 411.30 |
| 1.25 | 800 | 158.11 | 41.57 | 21.30 | 630.59 |
| 1.5 | 100 | 213.44 | 35.81 | 22.60 | 213.39 |
| 1.5 | 200 | 267.24 | 37.31 | 21.97 | 379.91 |
| 1.5 | 400 | 229.86 | 43.77 | 24.04 | 491.42 |
| 1.5 | 800 | 272.19 | 63.75 | 32.67 | 862.94 |

4 Conclusion

In this paper we consider the problem of estimating a semiparametric time trend model in which the time trend component enters the model linearly, while the non-stationary covariate $x_t$ and the stationary covariate $z_t$ enter the model in the varying coefficient form $x_t'\beta(z_t)$. We derive the asymptotic distributions of the finite-dimensional parameter estimator $\hat\gamma$ and the infinite-dimensional function estimator $\hat\beta(\cdot)$. The results of this paper can be generalized in several directions. First, model (8) can be extended to the case where both $x_t$ and $z_t$ are non-stationary I(1) variables. The results of Sun, Cai and Li (2013) should be useful in deriving the asymptotic distributions of the estimators of this extended model. Second, one can develop specification tests of whether $\beta(z)$ has a known parametric functional form. Various nonparametric/semiparametric specification tests have been developed for nonparametric/semiparametric regression models with non-stationary data. For example, testing the null hypothesis that $g(x)$ has a parametric functional form, say that $g(x)$ is linear in $x$, has been considered by Gao et al. (2009) and Wang and Phillips (2012). Gao et al. (2009) consider testing a linear cointegration model, $y_t = \beta_0 + x_t'\beta_1 + u_t$, against a nonlinear cointegration model, $y_t = g(x_t) + u_t$, where $\{x_t\}_{t=1}^n$ is a random walk process independent of $\{u_t\}$. Wang and Phillips (2012) consider a testing problem similar to that of Gao et al. (2009) but relax many of its restrictive assumptions to allow for more general nonstationary processes $\{x_t\}$. For example, Wang and Phillips (2012) do not require $\{x_t\}_{t=1}^n$ to be independent of $\{u_t\}_{t=1}^n$. Sun, Cai and Li (2013) consider testing whether $\beta(z)$ in (2) equals a vector of constant parameters or has a known parametric functional form. The results of Gao et al. (2009) and Sun, Cai and Li (2013) will be useful in developing model specification tests for the partially linear time trend varying coefficient model considered in this paper.

References

Cai, Z. (2007): Trending time-varying coefficient time series models with serially correlated errors, Journal of Econometrics 136, 163-188.

Cai, Z., J. Fan, and Q. Yao (2000): Functional-coefficient regression models for nonlinear time series, Journal of the American Statistical Association 95, 941-956.

Cai, Z., Q. Li, and J.Y. Park (2009): Functional-coefficient models for nonstationary time series data, Journal of Econometrics 148, 101-113.

Gao, J., M. King, Z. Lu, and D. Tjøstheim (2009): Nonparametric specification testing for nonlinear time series with nonstationarity, Econometric Theory 25, 1869-1892.

Hansen, B.E. (1992): Convergence to stochastic integrals for dependent heterogeneous processes, Econometric Theory 8, 489-500.

Hansen, B.E. (2008): Uniform convergence rates for kernel estimation with dependent data, Econometric Theory 24, 1-23.

Juhl, T., and Z. Xiao (2005): Partially linear models with unit roots, Econometric Theory 21, 877-906.

Juhl, T., and Z. Xiao (2009): Tests for changing mean with monotonic power, Journal of Econometrics 148, 14-24.

Karlsen, H.A., T. Myklebust, and D. Tjøstheim (2007): Nonparametric estimation in a nonlinear cointegration type model, Annals of Statistics 35, 252-299.

Kasparis, I., and P.C.B. Phillips (2012): Dynamic misspecification in nonparametric cointegration, Journal of Econometrics 168, 270-284.

Li, K., D. Li, Z. Liang, and C. Hsiao (2013): Estimation of semi-varying coefficient models with nonstationary regressors, Working Paper.

Li, K., and W. Li (2013): Estimation of varying coefficient models with time trend and integrated regressors, Economics Letters 119, 89-93.

Li, Q., C.J. Huang, D. Li, and T. Fu (2002): Semiparametric smooth coefficient models, Journal of Business and Economic Statistics 20, 412-422.

Liang, Z., and Q. Li (2012): Functional coefficient regression models with time trend, Journal of Econometrics 170, 15-31.

Liang, Z., Z. Lin, and C. Hsiao (2013): Local linear estimation of nonparametric cointegration models, forthcoming in Econometric Reviews.

Masry, E. (1996): Multivariate local polynomial regression for time series: uniform strong consistency and rates, Journal of Time Series Analysis 17, 571-599.

Phillips, P.C.B. (2009): Local limit theory and spurious nonparametric regression, Econometric Theory 25, 1466-1497.

Phillips, P.C.B., and P. Perron (1988): Testing for a unit root in time series regression, Biometrika 75, 335-346.

Revuz, D., and M. Yor (2005): Continuous Martingales and Brownian Motion, 3rd edition, Fundamental Principles of Mathematical Sciences 293, New York: Springer-Verlag.

Sun, Y., Z. Cai, and Q. Li (2013): Semiparametric functional coefficient models with integrated covariates, Econometric Theory 29, 659-672.

Sun, Y., and Q. Li (2011): Data-driven method selecting smoothing parameters in semiparametric models with integrated time series data, Journal of Business and Economic Statistics 29, 541-551.

Wang, Q., and P.C.B. Phillips (2009a): Asymptotic theory for local time density estimation and nonparametric cointegrating regression, Econometric Theory 25, 710-738.

Wang, Q., and P.C.B. Phillips (2009b): Structural nonparametric cointegrating regression, Econometrica 77, 1901-1948.

Wang, Q., and P.C.B. Phillips (2012): A specification test for nonlinear nonstationary models, Annals of Statistics 40, 727-758.

Xiao, Z. (2009): Functional coefficient co-integration models, Journal of Econometrics 152, 81-92.

Yoshihara, K. (1976): Limiting behavior of U-statistics for stationary, absolutely regular processes, Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete 35, 237-252.

Appendices

A Proof of Theorems

A.1 Proof of Theorem 1

Following a proof strategy similar to that of Li et al. (2013), we decompose $\hat\gamma - \gamma$ into several terms. Define

$$\bar\beta(z_t) = eS_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st} \otimes x_s)x_s'\beta(z_s)\Big], \qquad \tilde u_t = eS_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st} \otimes x_s)u_s\Big],$$

where $e$ is defined in Section 2; recall that $eG_h^{-1} = e$ and $e(Q_{st} \otimes x_s) = x_s$. By (10), $A_{1t} - A_{2t}\gamma = \tilde\beta(z_t)$, and substituting $y_s - s\gamma = x_s'\beta(z_s) + u_s$ from (8) gives $A_{1t} - A_{2t}\gamma = \bar\beta(z_t) + \tilde u_t$. Then we have

$$
\begin{aligned}
\hat\gamma - \gamma &= \Big[\sum_{t=1}^n (t - x_t'A_{2t})^2\Big]^{-1}\Big[\sum_{t=1}^n (t - x_t'A_{2t})(y_t - x_t'A_{1t}) - \sum_{t=1}^n (t - x_t'A_{2t})^2\gamma\Big]\\
&= \Big[\sum_{t=1}^n (t - x_t'A_{2t})^2\Big]^{-1}\sum_{t=1}^n (t - x_t'A_{2t})\big(y_t - t\gamma - x_t'(A_{1t} - A_{2t}\gamma)\big)\\
&= \Big[\sum_{t=1}^n (t - x_t'A_{2t})^2\Big]^{-1}\sum_{t=1}^n (t - x_t'A_{2t})\big(x_t'\beta(z_t) + u_t - x_t'\bar\beta(z_t) - x_t'\tilde u_t\big)\\
&= \Big[\sum_{t=1}^n (t - x_t'A_{2t})^2\Big]^{-1}\Big[\sum_{t=1}^n (t - x_t'A_{2t})x_t'\big(\beta(z_t) - \bar\beta(z_t)\big) - \sum_{t=1}^n (t - x_t'A_{2t})x_t'\tilde u_t + \sum_{t=1}^n (t - x_t'A_{2t})u_t\Big]\\
&= n^{-3/2}B_{1n}^{-1}\big[B_{2n} - B_{3n} + B_{4n}\big],
\end{aligned}
$$

where

$$B_{1n} = \frac{1}{n^3}\sum_{t=1}^n (t - x_t'A_{2t})^2, \qquad B_{2n} = \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\big(\beta(z_t) - \bar\beta(z_t)\big),$$

$$B_{3n} = \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\tilde u_t, \qquad B_{4n} = \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})u_t.$$

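In Lemma 1 we show that

$$B_{1n} \xrightarrow{d} \frac{1}{3} - \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr \equiv W_1.$$

In Lemmas 2 and 3, we prove that $B_{2n} = o_p(1)$ and $B_{3n} = o_p(1)$. Finally, in Lemma 4, we establish that

$$B_{4n} \xrightarrow{d} \int_0^1 r\,dB_u(r) - \int_0^1 B_x(r)'\,dB_u(r)\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr \equiv W_2.$$

Therefore, since $n^{3/2}(\hat\gamma - \gamma) = B_{1n}^{-1}[B_{2n} - B_{3n} + B_{4n}]$,

$$n^{3/2}(\hat\gamma - \gamma) \xrightarrow{d} W_1^{-1}W_2.$$

This completes the proof of Theorem 1.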

A.2 Proof of Theorem 2

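Proof. Using $\hat\gamma - \gamma = O_p(n^{-3/2})$, we show that replacing $\gamma$ by $\hat\gamma$ does not affect the asymptotic distribution of $\hat\beta(z)$. Since $y_s - s\hat\gamma = (y_s - s\gamma) + s(\gamma - \hat\gamma)$, we can write

$$
\begin{pmatrix}\hat\beta(z)\\ \hat\beta^{(1)}(z)\end{pmatrix}
= \left[\sum_{s=1}^n \begin{pmatrix} x_s x_s' & (z_s - z)x_s x_s'\\ (z_s - z)x_s x_s' & (z_s - z)^2 x_s x_s'\end{pmatrix} K_{h,sz}\right]^{-1}
\sum_{s=1}^n \begin{pmatrix} x_s\\ (z_s - z)x_s\end{pmatrix}(y_s - s\hat\gamma)K_{h,sz}
= D_{1n} + D_{2n},
$$

where

$$
D_{1n} = \left[\sum_{s=1}^n \begin{pmatrix} x_s x_s' & (z_s - z)x_s x_s'\\ (z_s - z)x_s x_s' & (z_s - z)^2 x_s x_s'\end{pmatrix} K_{h,sz}\right]^{-1}
\sum_{s=1}^n \begin{pmatrix} x_s\\ (z_s - z)x_s\end{pmatrix}(y_s - s\gamma)K_{h,sz}
\equiv \begin{pmatrix} D_{1n,1}\\ D_{1n,2}\end{pmatrix}
$$

and

$$
D_{2n} = \left[\sum_{s=1}^n \begin{pmatrix} x_s x_s' & (z_s - z)x_s x_s'\\ (z_s - z)x_s x_s' & (z_s - z)^2 x_s x_s'\end{pmatrix} K_{h,sz}\right]^{-1}
\sum_{s=1}^n \begin{pmatrix} x_s\\ (z_s - z)x_s\end{pmatrix}s(\gamma - \hat\gamma)K_{h,sz}
\equiv \begin{pmatrix} D_{2n,1}\\ D_{2n,2}\end{pmatrix}.
$$

It is straightforward to show that $D_{2n,1} = O_p(n^{-1})$, and from the proof of Theorem 2.1 in Cai, Li and Park (2009), we know that

$$D_{1n,1} = \beta(z) + O_p\Big(h^2 + \frac{1}{n\sqrt{h}}\Big).$$

Hence, combining the above results, we obtain

$$\hat\beta(z) = D_{1n,1} + D_{2n,1} = D_{1n,1} + O_p\Big(\frac{1}{n}\Big) = D_{1n,1} + o_p\Big(\frac{1}{n\sqrt{h}}\Big). \tag{14}$$

Equation (14) implies that the asymptotic distribution of $\hat\beta(z)$ is unchanged when $\gamma$ is replaced by $\hat\gamma$. Hence, Theorem 2 follows from Theorem 2.1 of Cai, Li and Park (2009).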

B Lemmas

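Lemma 1. Under Assumptions 1 to 6, we have

$$B_{1n} \xrightarrow{d} \frac{1}{3} - \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr \equiv W_1.$$

Proof. Recall that

$$B_{1n} = \frac{1}{n^3}\sum_{t=1}^n (t - x_t'A_{2t})^2 = \frac{1}{n^3}\sum_{t=1}^n \big(t^2 - 2tx_t'A_{2t} + (x_t'A_{2t})^2\big) = B_{1n,1} - 2B_{1n,2} + B_{1n,3}.$$

It is easy to see that

$$B_{1n,1} = \frac{1}{n^3}\sum_{t=1}^n t^2 \to \frac{1}{3}. \tag{15}$$

For $B_{1n,2}$, using Lemma 5, we have

$$
\begin{aligned}
B_{1n,2} &= \frac{1}{n^3}\sum_{t=1}^n tx_t'A_{2t} = \frac{1}{n^3}\sum_{t=1}^n tx_t'[A_2 + o_p(1)] = \frac{1}{n^3}\sum_{t=1}^n tx_t'A_2 + o_p(1)\\
&= \Big(\frac{1}{n^3}\sum_{t=1}^n tx_t'\Big)\Big(\frac{1}{n^2}\sum_{s=1}^n x_sx_s'\Big)^{-1}\Big(\frac{1}{n^2}\sum_{s=1}^n sx_s\Big) + o_p(1)\\
&= \Big(\frac{1}{n}\sum_{t=1}^n \frac{t}{n}\frac{x_t'}{\sqrt{n}}\Big)\Big(\frac{1}{n}\sum_{s=1}^n \frac{x_s}{\sqrt{n}}\frac{x_s'}{\sqrt{n}}\Big)^{-1}\Big(\frac{1}{n}\sum_{s=1}^n \frac{s}{n}\frac{x_s}{\sqrt{n}}\Big) + o_p(1)\\
&\xrightarrow{d} \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr,
\end{aligned}
$$

where the second equality follows from Lemma 5. Similarly, we have

$$
\begin{aligned}
B_{1n,3} &= \frac{1}{n^3}\sum_{t=1}^n (x_t'A_{2t})^2 = \frac{1}{n^3}\sum_{t=1}^n (x_t'A_2)^2 + o_p(1) = \frac{A_2'}{\sqrt{n}}\Big(\frac{1}{n}\sum_{t=1}^n \frac{x_t}{\sqrt{n}}\frac{x_t'}{\sqrt{n}}\Big)\frac{A_2}{\sqrt{n}} + o_p(1)\\
&\xrightarrow{d} \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr\\
&= \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr.
\end{aligned}
$$

Therefore, combining the above results, we obtain

$$
\begin{aligned}
B_{1n} &\xrightarrow{d} \frac{1}{3} - 2\int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr + \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr\\
&= \frac{1}{3} - \int_0^1 rB_x(r)'\,dr\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr.
\end{aligned}
$$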

Lemma 2. Under assumptions 1 to 6, we have B2n = o p (1).


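Proof. Define

$$
M_\beta(z_t) = \Big(\beta(z_t)',\ \beta^{(1)}(z_t)',\ \cdots,\ \frac{\beta^{(q)}(z_t)'}{q!}\Big)', \qquad
\widetilde M_\beta(z_t) = S_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}\otimes x_s)x_s'\beta(z_s)\Big];
$$

then we have

$$
\begin{aligned}
\|\widetilde M_\beta(z_t) - M_\beta(z_t)\| &= \Big\|S_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}\otimes x_s)x_s'\big(\beta(z_s) - Q_{st}'M_\beta(z_t)\big)\Big]\Big\|\\
&= \Big\|S_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}\otimes x_s)x_s'\Big(\beta(z_s) - \sum_{i=0}^q\frac{\beta^{(i)}(z_t)}{i!}(z_s - z_t)^i\Big)\Big]\Big\|\\
&\le \|S_t^{-1}\|\cdot\frac{1}{n^2}\sum_{s=1}^n \|G_h^{-1}(Q_{st}\otimes x_s)\|\cdot\|x_s\|\cdot\Big\|\beta(z_s) - \sum_{i=0}^q\frac{\beta^{(i)}(z_t)}{i!}(z_s - z_t)^i\Big\|K_{st}\\
&= O_p(h^{q+1}),
\end{aligned}
$$

where the last equality follows from the results that $\|S_t^{-1}\| = O_p(1)$, $\|x_s\| = O_p(1)$ and

$$
\frac{1}{n^2}\sum_{s=1}^n \|G_h^{-1}(Q_{st}\otimes x_s)\|\cdot E\Big\|\beta(z_s) - \sum_{i=0}^q\frac{\beta^{(i)}(z_t)}{i!}(z_s - z_t)^i\Big\|K_{st} = O_p(h^{q+1}).
$$

Hence, we obtain that, uniformly for $t = 1,\cdots,n$,

$$
\widetilde M_\beta(z_t) - M_\beta(z_t) = O_p(h^{q+1}). \qquad (16)
$$

Using (16), it is easy to show that

$$
\sup_{1\le t\le n}\|\widetilde\beta(z_t) - \beta(z_t)\| = \sup_{1\le t\le n}\|e\widetilde M_\beta(z_t) - eM_\beta(z_t)\| = O_p(h^{q+1}).
$$

Thus, by the definition of $B_{2n}$, we have

$$
\begin{aligned}
B_{2n} &= \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\big(\beta(z_t) - \widetilde\beta(z_t)\big),\\
\|B_{2n}\| &\le \frac{1}{n^{3/2}}\sup_{1\le t\le n}\|\widetilde\beta(z_t) - \beta(z_t)\|\sum_{t=1}^n\|t - x_t'A_{2t}\|\cdot\|x_t\|\\
&= \frac{1}{n^{3/2}}\,O_p(h^{q+1})\cdot n\cdot O_p(n)\cdot O_p(\sqrt n) = O_p(nh^{q+1}) = o_p(1),
\end{aligned}
$$

where the first equality in the last line follows from the facts that $\|t - x_t'A_{2t}\| = O_p(n)$ and $\|x_t\| = O_p(\sqrt n)$, and the last equality is implied by assumption 6, which completes the proof.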
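The $O_p(h^{q+1})$ rate comes from the Taylor remainder of $\beta(z_s)$ around $z_t$ over the kernel window $|z_s - z_t|\le h$. A small sketch, using the hypothetical coefficient function $\beta = \sin$ (not a choice made in the paper) and the point $z_t = 0.3$, shows that the supremum of the remainder over the window shrinks by roughly $2^{q+1}$ when $h$ is halved.

```python
import math

def taylor_remainder_sup(h, q, z=0.3, grid=201):
    """sup over |u| <= h of |beta(z+u) - sum_{i<=q} beta^{(i)}(z) u^i / i!| for beta = sin."""
    # derivatives of sin cycle with period 4: sin, cos, -sin, -cos
    derivs = [math.sin, math.cos, lambda v: -math.sin(v), lambda v: -math.cos(v)]
    worst = 0.0
    for k in range(grid):
        u = -h + 2 * h * k / (grid - 1)   # grid on [-h, h], endpoints included
        approx = sum(derivs[i % 4](z) * u**i / math.factorial(i) for i in range(q + 1))
        worst = max(worst, abs(math.sin(z + u) - approx))
    return worst


for q in (1, 2):
    ratio = taylor_remainder_sup(0.1, q) / taylor_remainder_sup(0.05, q)
    print(q, round(ratio, 2))   # close to 2 ** (q + 1)
```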

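Lemma 3. Under assumptions 1 to 6, we have $B_{3n} = o_p(1)$.

Proof. Note that

$$
\begin{aligned}
B_{3n} &= \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\,\tilde u_t
= \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\,eS_t^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n K_{st}G_h^{-1}(Q_{st}\otimes x_s)u_s\Big]\\
&= \frac{1}{\sqrt n}\sum_{s=1}^n\Big[\frac{1}{n^{5/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\,eS_t^{-1}K_{st}G_h^{-1}\Big(Q_{st}\otimes\frac{x_s}{\sqrt n}\Big)\Big]u_s.
\end{aligned}
$$

Similar to the proof of Lemma 1, we can show that, uniformly in $s = 1,\cdots,n$,

$$
\frac{1}{n^{5/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\,eS_t^{-1}K_{st}G_h^{-1}\Big(Q_{st}\otimes\frac{x_s}{\sqrt n}\Big)
= \frac{1}{n^{5/2}}\sum_{t=1}^n (t - x_t'A_2)x_t'\,eS_t^{-1}K_{st}G_h^{-1}\Big(Q_{st}\otimes\frac{x_s}{\sqrt n}\Big) + o_p(1).
$$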

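Define

$$
V_{ns} \equiv \frac{1}{n^{5/2}}\sum_{t=1}^n (t - x_t'A_2)x_t'\,e\Big[\Delta(\kappa)\otimes\frac{1}{n^2}\sum_{i=1}^n x_ix_i'\Big]^{-1}\Big[\Gamma(\kappa)\otimes\frac{x_s}{\sqrt n}\Big],
$$

$$
\Theta_{ns} \equiv \frac{1}{n^{5/2}}\sum_{t=1}^n (t - x_t'A_{2t})x_t'\,eS_t^{-1}K_{st}G_h^{-1}\Big(Q_{st}\otimes\frac{x_s}{\sqrt n}\Big) - V_{ns},
$$

where

$$
\kappa_j = \int v^jK(v)\,dv,\quad j = 1,\cdots,2q, \qquad \Gamma(\kappa) = (1,\kappa_1,\cdots,\kappa_q)',
$$

$$
\Delta(\kappa) = \begin{pmatrix}
1 & \kappa_1 & \kappa_2 & \cdots & \kappa_q\\
\kappa_1 & \kappa_2 & \kappa_3 & \cdots & \kappa_{q+1}\\
\kappa_2 & \kappa_3 & \kappa_4 & \cdots & \kappa_{q+2}\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
\kappa_q & \kappa_{q+1} & \kappa_{q+2} & \cdots & \kappa_{2q}
\end{pmatrix}.
$$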
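To make these moment objects concrete, here is a sketch for one assumed kernel, the Epanechnikov kernel $K(v) = 0.75(1 - v^2)$ on $[-1, 1]$ (the argument does not depend on this choice). It builds $\Delta(\kappa)$ and $\Gamma(\kappa)$, using $\kappa_0 = \int K(v)\,dv = 1$ for the leading entry, and checks numerically that $\Delta(\kappa)^{-1}\Gamma(\kappa) = (1, 0, \cdots, 0)'$, which holds because $\Gamma(\kappa)$ is the first column of $\Delta(\kappa)$. This is the property used below when $V_{ns}$ and $A_2$ are simplified. The function names are hypothetical.

```python
import numpy as np

def epanechnikov_moments(q):
    """kappa_j = int v^j K(v) dv, j = 0..2q, for K(v) = 0.75 (1 - v^2) on [-1, 1]."""
    # odd moments vanish by symmetry; even moments equal 0.75 * (2/(j+1) - 2/(j+3))
    return np.array([0.0 if j % 2 else 0.75 * (2 / (j + 1) - 2 / (j + 3))
                     for j in range(2 * q + 1)])

def delta_gamma(q):
    kappa = epanechnikov_moments(q)
    idx = np.add.outer(np.arange(q + 1), np.arange(q + 1))
    Delta = kappa[idx]            # Hankel matrix: zero-based (i, j) entry is kappa_{i+j}
    Gamma = kappa[: q + 1]        # (1, kappa_1, ..., kappa_q)', the first column of Delta
    return Delta, Gamma


for q in (1, 2, 3):
    Delta, Gamma = delta_gamma(q)
    print(q, np.round(np.linalg.solve(Delta, Gamma), 12))   # approximately (1, 0, ..., 0)
```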

Then we have

$$
B_{3n} = \frac{1}{\sqrt n}\sum_{s=1}^n V_{ns}u_s + \frac{1}{\sqrt n}\sum_{s=1}^n \Theta_{ns}u_s,
$$

where $\Theta_{ns} = o_p(1)$ uniformly in $s = 1,\cdots,n$.

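For any $\epsilon_1 > 0$ and $\epsilon_2 > 0$, we have

$$
\begin{aligned}
\Pr\Big\{\Big\|\frac{1}{\sqrt n}\sum_{s=1}^n \Theta_{ns}u_s\Big\| > \epsilon_1\Big\}
&= \Pr\Big\{\Big\|\frac{1}{\sqrt n}\sum_{s=1}^n \Theta_{ns}u_s\Big\| > \epsilon_1,\ \max_s\|\Theta_{ns}\| > \epsilon_2\Big\}
+ \Pr\Big\{\Big\|\frac{1}{\sqrt n}\sum_{s=1}^n \Theta_{ns}u_s\Big\| > \epsilon_1,\ \max_s\|\Theta_{ns}\| \le \epsilon_2\Big\}\\
&\le \frac{E\big[\|\sum_{s=1}^n \Theta_{ns}u_s\|^2\,1\{\max_s\|\Theta_{ns}\| \le \epsilon_2\}\big]}{n\epsilon_1^2} + o(1),
\end{aligned}
$$

where the first probability is bounded by $\Pr\{\max_s\|\Theta_{ns}\| > \epsilon_2\} = o(1)$, because $\Theta_{ns} = o_p(1)$ uniformly in $s$, and we apply Chebyshev's inequality to the second. By assumptions 1 and 2, we can prove that

$$
\frac{1}{n}E\Big[\Big\|\sum_{s=1}^n \Theta_{ns}u_s\Big\|^2\,1\{\max_s\|\Theta_{ns}\| \le \epsilon_2\}\Big] = o(1),
$$

so that $\frac{1}{\sqrt n}\sum_{s=1}^n \Theta_{ns}u_s = o_p(1)$.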

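Further, since $x_t'A_2$ is a scalar, so that $x_t'A_2 = A_2'x_t$, we can show that

$$
\begin{aligned}
V_{ns} &= \frac{1}{n^{5/2}}\sum_{t=1}^n (t - x_t'A_2)x_t'\,e\Big[\Delta(\kappa)\otimes\frac{1}{n^2}\sum_{i=1}^n x_ix_i'\Big]^{-1}\Big[\Gamma(\kappa)\otimes\frac{x_s}{\sqrt n}\Big]\\
&= \frac{1}{n^{5/2}}\sum_{t=1}^n \Big[t - x_t'\Big(\frac{1}{n^2}\sum_{i=1}^n x_ix_i'\Big)^{-1}\frac{1}{n^2}\sum_{j=1}^n jx_j\Big]x_t'\Big(\frac{1}{n^2}\sum_{i=1}^n x_ix_i'\Big)^{-1}\frac{x_s}{\sqrt n}\\
&= \Big[\frac{1}{n^{5/2}}\sum_{t=1}^n tx_t' - \frac{1}{n^{5/2}}\sum_{j=1}^n jx_j'\Big(\frac{1}{n^2}\sum_{i=1}^n x_ix_i'\Big)^{-1}\frac{1}{n^2}\sum_{t=1}^n x_tx_t'\Big]\Big(\frac{1}{n^2}\sum_{i=1}^n x_ix_i'\Big)^{-1}\frac{x_s}{\sqrt n}\\
&= 0,
\end{aligned}
$$

where the second equality uses $e[\Delta(\kappa)\otimes M]^{-1}[\Gamma(\kappa)\otimes m] = M^{-1}m$, as at the end of the proof of Lemma 5; the bracket in the third line vanishes because it is the sample normal equation of the least-squares regression of $t$ on $x_t$. Therefore we have

$$
B_{3n} = o_p(1).
$$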
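The cancellation $V_{ns} = 0$ can be checked directly: since $A_2$ is exactly the OLS coefficient of $t$ on $x_t$, $\sum_t (t - x_t'A_2)x_t' = 0$. The sketch below assumes Gaussian random-walk regressors, the $q = 1$ Epanechnikov moments $\Delta(\kappa) = \mathrm{diag}(1, 1/5)$ and $\Gamma(\kappa) = (1, 0)'$, and the selector $e = [I_d, 0]$; it evaluates $V_{ns}$ for every $s$ with explicit Kronecker products, and the result is zero up to floating-point error.

```python
import numpy as np

def max_abs_vns(n=400, d=2, seed=1):
    rng = np.random.default_rng(seed)
    x = np.cumsum(rng.standard_normal((n, d)), axis=0)   # driftless I(1) regressors x_t
    t = np.arange(1, n + 1, dtype=float)
    Sxx = x.T @ x / n**2                                 # (1/n^2) sum_i x_i x_i'
    A2 = np.linalg.solve(Sxx, x.T @ t / n**2)            # A2 as in Lemma 5
    w = (t - x @ A2) @ x / n**2.5                        # (1/n^{5/2}) sum_t (t - x_t'A2) x_t'
    # q = 1 Epanechnikov moments (assumed): Delta = diag(1, 1/5), Gamma = (1, 0)'
    Delta = np.array([[1.0, 0.0], [0.0, 0.2]])
    Gamma = np.array([[1.0], [0.0]])
    e = np.hstack([np.eye(d), np.zeros((d, d))])         # keeps the leading d-block
    core = e @ np.linalg.inv(np.kron(Delta, Sxx))        # e [Delta (x) Sxx]^{-1}
    vns = [(w @ core @ np.kron(Gamma, x[s][:, None] / np.sqrt(n))).item() for s in range(n)]
    return max(abs(v) for v in vns)


print(max_abs_vns())   # of the order of floating-point rounding error
```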

Lemma 4. Under assumptions 1 to 6, we have

$$
B_{4n} \xrightarrow{d} \int_0^1 r\,dB_u(r) - \int_0^1 B_x(r)'\,dB_u(r)\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr.
$$

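Proof.

$$
\begin{aligned}
B_{4n} &= \frac{1}{n^{3/2}}\sum_{t=1}^n (t - x_t'A_{2t})u_t
= \frac{1}{\sqrt n}\sum_{t=1}^n\Big(\frac{t}{n} - \frac{x_t'}{\sqrt n}\frac{A_2}{\sqrt n}\Big)u_t + \frac{1}{n}\sum_{t=1}^n \frac{x_t'}{\sqrt n}(A_2 - A_{2t})u_t\\
&\equiv \frac{1}{\sqrt n}\sum_{t=1}^n U^*_{nt}u_t + \frac{1}{n}\sum_{t=1}^n \Phi^*_{nt}u_t
\equiv B_{4n,1} + B_{4n,2}.
\end{aligned}
$$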

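By Lemma 5 and $\sup_{1\le t\le n}\|x_t/\sqrt n\| = O_p(1)$, we have $\|\Phi^*_{nt}\| = o_p(1)$ uniformly in $t = 1,\cdots,n$. As in the proof of Lemma 3, we have $B_{4n,2} = \frac{1}{n}\sum_{t=1}^n \Phi^*_{nt}u_t = o_p(1)$. And

$$
\begin{aligned}
B_{4n,1} &= \frac{1}{\sqrt n}\sum_{t=1}^n\Big(\frac{t}{n} - \frac{x_t'}{\sqrt n}\frac{A_2}{\sqrt n}\Big)u_t\\
&= \sum_{t=1}^n \frac{t}{n}\frac{u_t}{\sqrt n} - \sum_{t=1}^n \frac{x_t'}{\sqrt n}\frac{u_t}{\sqrt n}\cdot\Big[\frac{1}{n}\sum_{s=1}^n \frac{x_s}{\sqrt n}\frac{x_s'}{\sqrt n}\Big]^{-1}\frac{1}{n}\sum_{s=1}^n \frac{s}{n}\frac{x_s}{\sqrt n}\\
&\xrightarrow{d} \int_0^1 r\,dB_u(r) - \int_0^1 B_x(r)'\,dB_u(r)\Big[\int_0^1 B_x(r)B_x(r)'\,dr\Big]^{-1}\int_0^1 rB_x(r)\,dr,
\end{aligned}
$$

where $\int_0^1 r\,dB_u(r) = B_u(1) - \int_0^1 B_u(r)\,dr$ by integration by parts. This completes the proof of Lemma 4.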
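One way to see the structure of the limit in Lemma 4 is to hold the regressor path fixed. If, purely for this sketch, $u_t$ is i.i.d. $N(0, \sigma^2)$ and independent of $x_t$ (a stronger condition than the lemma requires), then conditional on the $x$ path the statistic $n^{-3/2}\sum_t (t - x_t'A_2)u_t$ is exactly normal with variance $\sigma^2 n^{-3}\sum_t (t - x_t'A_2)^2$, the finite-sample counterpart of the $B_{1n}$ limit. The Monte Carlo below, with hypothetical names and Gaussian random-walk regressors, checks that variance.

```python
import numpy as np

def b4n_variance_check(n=500, d=1, reps=4000, sigma=1.0, seed=2):
    rng = np.random.default_rng(seed)
    x = np.cumsum(rng.standard_normal((n, d)), axis=0)   # one regressor path, held fixed
    t = np.arange(1, n + 1, dtype=float)
    A2 = np.linalg.solve(x.T @ x, x.T @ t)               # n^{-2} factors in A2 cancel
    resid = t - x @ A2                                   # t - x_t'A2
    b1n = resid @ resid / n**3                           # B1n with A2 in place of A2t
    u = sigma * rng.standard_normal((reps, n))           # i.i.d. errors independent of x (sketch assumption)
    b4n = u @ resid / n**1.5                             # one draw of n^{-3/2} sum_t (t - x_t'A2) u_t per row
    return b4n.var(), sigma**2 * b1n


sim_var, theory_var = b4n_variance_check()
print(sim_var / theory_var)   # close to 1
```

Because the conditional variance depends on the path, the unconditional limit is a variance mixture of normals in this special case.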

Lemma 5. Under assumptions 1 to 6, we have

$$
A_{2t} = A_2 + o_p(1) \quad \text{uniformly in } t = 1,\cdots,n,
$$

where

$$
A_2 = \Big(\frac{1}{n^2}\sum_{s=1}^n x_sx_s'\Big)^{-1}\frac{1}{n^2}\sum_{s=1}^n sx_s.
$$

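Proof. We follow the proof strategy of Proposition A.1 in Li et al. (2013). We first consider the first term, $S_t$, in $A_{2t}$. Let $Q_{sz} = [1, (z_s - z), \cdots, (z_s - z)^q]'$, $\tilde K_{h,sz} = K_{h,sz}Q_{sz}Q_{sz}'$ and $\eta_{h,sz} = \tilde K_{h,sz} - E[\tilde K_{h,sz}]$. Then we have

$$
\begin{aligned}
C_n(z) &= G_h^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n \tilde K_{h,sz}\otimes x_sx_s'\Big]G_h^{-1}\\
&= G_h^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n E[\tilde K_{h,sz}]\otimes x_sx_s'\Big]G_h^{-1}
+ G_h^{-1}\Big[\frac{1}{n^2}\sum_{s=1}^n \big(\tilde K_{h,sz} - E[\tilde K_{h,sz}]\big)\otimes x_sx_s'\Big]G_h^{-1}\\
&\equiv C_{1n}(z) + C_{2n}(z).
\end{aligned}
$$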

Under assumptions 4 and 5, we obtain that

$$
\sup_{z\in S_z}\big\|E[\tilde K_{h,sz}] - f(z)\Delta(\kappa)\big\| = o(1),
$$

where $S_z$ is the bounded support of $z_t$. Noting that $f(z)$ is bounded away from zero and infinity for $z\in S_z$, we then have, uniformly for $z\in S_z$,

$$
\frac{1}{f(z)}C_{1n}(z) - \Delta(\kappa)\otimes\frac{1}{n^2}\sum_{s=1}^n x_sx_s' = o_p(1). \qquad (17)
$$
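The kernel-moment approximation behind (17) can be illustrated by simulation. The sketch assumes $z_s \sim U(0, 1)$ (so $f(z) = 1$), an interior point $z_0 = 0.5$, the Epanechnikov kernel with $K_h(u) = K(u/h)/h$, and $q = 1$; it uses the rescaled vector $[1, (z_s - z_0)/h]'$, which corresponds to the $G_h^{-1}$ normalization in $C_{1n}(z)$. The sample mean of $K_h(z_s - z_0)$ times the outer product of that vector should then be close to $f(z_0)\Delta(\kappa)$, i.e. to $\mathrm{diag}(1, 1/5)$ for this kernel. All names and settings are illustrative.

```python
import numpy as np

def kernel_moment_matrix(n=400_000, h=0.05, z0=0.5, seed=3):
    rng = np.random.default_rng(seed)
    z = rng.uniform(0.0, 1.0, n)                                # z_s ~ U(0, 1), so f(z0) = 1
    v = (z - z0) / h
    K = np.where(np.abs(v) <= 1, 0.75 * (1 - v**2), 0.0) / h    # K_h(z_s - z0), Epanechnikov
    Q = np.vstack([np.ones(n), v])                              # rescaled [1, (z_s - z0)/h]'
    return (K * Q) @ Q.T / n                                    # sample mean of K_h Q Q'


print(np.round(kernel_moment_matrix(), 3))   # near f(z0) * Delta(kappa) = [[1, 0], [0, 0.2]]
```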

Next we show that $C_{2n}(z) = o_p(1)$ uniformly for $z\in S_z$. Let $Q^*_{sz} = [1, (z_s - z)/h, \cdots, (z_s - z)^q/h^q]'$ and let $\eta^*_{h,sz}$ be defined as $\eta_{h,sz}$ with $Q_{sz}$ replaced by $Q^*_{sz}$. Then

$$
C_{2n}(z) = \frac{1}{n^2}\sum_{s=1}^n \eta^*_{h,sz}\otimes x_sx_s'.
$$

By an argument similar to that of Theorem 1 of Masry (1996), we can prove that

$$
\sup_{z\in S_z}\sup_{l\ge 0}\mathrm{Var}\Big(\sum_{s=l+1}^{l+m}\eta^*_{h,sz}\Big) = O(m/h)
$$

for all $m\ge 1$. For some $0 < \delta < 1$, set $N = [1/\delta]$, $s_k = [kn/N] + 1$, $s_k^* = s_{k+1} - 1$ and $s_k^{**} = \min\{s_k^*, n\}$. Let $U_{n,s} = x_sx_s'/n$ for any $1\le s\le n$ and $U_n(r) = U_{n,[nr]}$ for any $r\in[0, 1]$.

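Following the proof of Theorem 3.3 of Hansen (1992), we have

$$
\begin{aligned}
\sup_{z\in S_z}\|C_{2n}(z)\| &= \sup_{z\in S_z}\Big\|\frac{1}{n}\sum_{s=1}^n \eta^*_{h,sz}\otimes U_{n,s}\Big\|
= \sup_{z\in S_z}\Big\|\frac{1}{n}\sum_{k=0}^{N-1}\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\otimes U_{n,s}\Big\|\\
&\le \sup_{z\in S_z}\Big\|\frac{1}{n}\sum_{k=0}^{N-1}\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\otimes U_{n,s_k}\Big\|
+ \sup_{z\in S_z}\Big\|\frac{1}{n}\sum_{k=0}^{N-1}\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\otimes (U_{n,s} - U_{n,s_k})\Big\|\\
&\le \frac{1}{n}\sum_{k=0}^{N-1}\sup_{z\in S_z}\Big\|\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\Big\|\cdot\|U_{n,s_k}\|
+ \sup_{z\in S_z}\frac{1}{n}\sum_{k=0}^{N-1}\sum_{s=s_k}^{s_k^{**}}\|\eta^*_{h,sz}\|\cdot\|U_{n,s} - U_{n,s_k}\|\\
&\le \frac{1}{n}\sum_{k=0}^{N-1}\sup_{z\in S_z}\Big\|\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\Big\|\cdot\sup_{0\le r\le 1}\|U_n(r)\|
+ \sup_{|r_1 - r_2|\le\delta}\|U_n(r_1) - U_n(r_2)\|\cdot\sup_{z\in S_z}\frac{1}{n}\sum_{k=0}^{N-1}\sum_{s=s_k}^{s_k^{**}}\|\eta^*_{h,sz}\|\\
&\equiv C_{2n,1} + C_{2n,2}.
\end{aligned}
$$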

Note that $\sup_{0\le r\le 1}\|U_n(r)\| = O_p(1)$ because $U_n(r) \Rightarrow B_x(r)B_x(r)'$ by assumption 1. Besides, by an argument similar to the proof of Theorem 1 of Masry (1996), we obtain

$$
\frac{1}{n}\sum_{k=0}^{N-1}\sup_{z\in S_z}\Big\|\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\Big\|
\le \frac{N}{n}\sup_{0\le k\le N-1}\sup_{z\in S_z}\Big\|\sum_{s=s_k}^{s_k^{**}}\eta^*_{h,sz}\Big\|
\le \sup_{1\le s\le n}\sup_{z\in S_z}\frac{1}{\delta n}\Big\|\sum_{i=s}^{s+\delta n}\eta^*_{h,iz}\Big\| = o_p(1),
$$

which implies that

$$
C_{2n,1} = \sup_{0\le r\le 1}\|U_n(r)\|\cdot o_p(1) = o_p(1).
$$

Since it is easy to show that, uniformly for $z\in S_z$,

$$
\frac{1}{n}\sum_{k=0}^{N-1}\sum_{s=s_k}^{s_k^{**}}\|\eta^*_{h,sz}\| = O_p(1),
$$

we have that

$$
C_{2n,2} = \sup_{|r_1 - r_2|\le\delta}\|U_n(r_1) - U_n(r_2)\|\cdot O_p(1) = o_p(1)
$$

by letting $\delta\to 0$. Therefore $C_{2n}(z) = o_p(1)$ uniformly for $z\in S_z$. Together with equation (17), this gives, uniformly for $z\in S_z$,

$$
\frac{1}{f(z)}C_n(z) - \Delta(\kappa)\otimes\frac{1}{n^2}\sum_{s=1}^n x_sx_s' = o_p(1). \qquad (18)
$$
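The blocking argument shows that the centered kernel weights $\eta^*_{h,sz}$ average out even though they multiply the nonstationary $U_{n,s} = x_sx_s'/n$, which is $O_p(1)$ but does not settle down to a constant. A pointwise sketch for $q = 0$ and a scalar random walk compares $\frac{1}{n}\sum_s \eta^*_{h,sz_0}U_{n,s}$ with the $O_p(1)$ scale $\frac{1}{n}\sum_s U_{n,s}$. The uniform $z_s$, the Epanechnikov kernel, the bandwidth $h = 0.1$ and the point $z_0 = 0.5$ are assumptions of this illustration, and the sketch does not check uniformity over $z\in S_z$.

```python
import numpy as np

def c2n_pointwise(n=100_000, h=0.1, z0=0.5, seed=4):
    rng = np.random.default_rng(seed)
    z = rng.uniform(0.0, 1.0, n)                                # i.i.d. z_s with f = 1 on [0, 1]
    x = np.cumsum(rng.standard_normal(n))                       # scalar I(1) regressor
    v = (z - z0) / h
    K = np.where(np.abs(v) <= 1, 0.75 * (1 - v**2), 0.0) / h    # K_h(z_s - z0)
    eta = K - 1.0                                               # q = 0: E[K_h(z_s - z0)] = 1 at an interior z0
    U = x**2 / n                                                # U_{n,s} = x_s^2 / n
    return np.mean(eta * U), np.mean(U)                         # (C_2n(z0), (1/n^2) sum_s x_s^2)


c2n, scale = c2n_pointwise()
print(c2n / scale)   # small relative to 1
```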

Using a similar argument, we can also prove that, for the second term in $A_{2t}$, uniformly for $z\in S_z$,

$$
\frac{1}{n^2 f(z)}\sum_{s=1}^n K_{h,sz}G_h^{-1}(Q_{sz}\otimes x_s)\,s = \Gamma(\kappa)\otimes\frac{1}{n^2}\sum_{s=1}^n sx_s + o_p(1). \qquad (19)
$$

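With equations (18) and (19), in which the factor $f(z_t)$ cancels, we show that

$$
A_{2t} = A_2 + o_p(1), \quad \text{uniformly in } t = 1,\cdots,n,
$$

where

$$
\begin{aligned}
A_2 &= e\Big[\Delta(\kappa)\otimes\frac{1}{n^2}\sum_{s=1}^n x_sx_s'\Big]^{-1}\Big[\Gamma(\kappa)\otimes\frac{1}{n^2}\sum_{s=1}^n sx_s\Big]\\
&= e\Big[\Delta(\kappa)^{-1}\otimes\Big(\frac{1}{n^2}\sum_{s=1}^n x_sx_s'\Big)^{-1}\Big]\cdot\Big[\Gamma(\kappa)\otimes\frac{1}{n^2}\sum_{s=1}^n sx_s\Big]\\
&= e\Big\{\big[\Delta(\kappa)^{-1}\Gamma(\kappa)\big]\otimes\Big[\Big(\frac{1}{n^2}\sum_{s=1}^n x_sx_s'\Big)^{-1}\frac{1}{n^2}\sum_{s=1}^n sx_s\Big]\Big\}\\
&= \Big(\frac{1}{n^2}\sum_{s=1}^n x_sx_s'\Big)^{-1}\frac{1}{n^2}\sum_{s=1}^n sx_s,
\end{aligned}
$$

where the first element of $\Delta(\kappa)^{-1}\Gamma(\kappa)$ is 1 (indeed $\Delta(\kappa)^{-1}\Gamma(\kappa) = (1, 0, \cdots, 0)'$, since $\Gamma(\kappa)$ is the first column of $\Delta(\kappa)$) and we use the structure of $e$, which picks out the leading block.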
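The last simplification of $A_2$ uses $(A\otimes B)^{-1} = A^{-1}\otimes B^{-1}$, the mixed-product rule $(A\otimes B)(C\otimes D) = AC\otimes BD$, and the fact that $\Delta(\kappa)^{-1}\Gamma(\kappa)$ has first entry 1 and zeros elsewhere. The sketch below checks the resulting identity $e[\Delta(\kappa)\otimes M]^{-1}[\Gamma(\kappa)\otimes m] = M^{-1}m$, with a random positive definite $M$ standing in for $n^{-2}\sum_s x_sx_s'$ and a random $m$ standing in for $n^{-2}\sum_s sx_s$. The Epanechnikov moments with $q = 2$ and the selector $e = [I_d, 0, 0]$ are assumptions of the illustration.

```python
import numpy as np

def kron_reduction_gap(d=3, seed=5):
    rng = np.random.default_rng(seed)
    # Epanechnikov moments for q = 2 (assumed): kappa_0 = 1, kappa_2 = 1/5, kappa_4 = 3/35, odd ones 0
    Delta = np.array([[1.0, 0.0, 0.2], [0.0, 0.2, 0.0], [0.2, 0.0, 3 / 35]])
    Gamma = Delta[:, [0]]                                 # Gamma(kappa) = first column of Delta(kappa)
    B = rng.standard_normal((d, d))
    M = B @ B.T + d * np.eye(d)                           # stands in for (1/n^2) sum_s x_s x_s'
    m = rng.standard_normal((d, 1))                       # stands in for (1/n^2) sum_s s x_s
    e = np.hstack([np.eye(d), np.zeros((d, 2 * d))])      # e = [I_d, 0, 0]
    lhs = e @ np.linalg.solve(np.kron(Delta, M), np.kron(Gamma, m))
    rhs = np.linalg.solve(M, m)                           # M^{-1} m, the closed form of A2
    return np.abs(lhs - rhs).max()


print(kron_reduction_gap())   # of the order of floating-point rounding error
```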