EXPERIENTIAL LEARNING ENVIRONMENTS FOR STRUCTURAL BEHAVIOR By S.M.

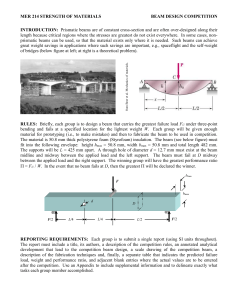
