

CSE 305 Introduction to Programming Languages
Lecture 9 – CFG and Pushdown Automata
CSE @ SUNY-Buffalo, Zhi Yang
Courtesy of Professor Yacov Hel-Or
Courtesy of Dr. David Reed

Notice Board
• First, on June 27, 2013, you will have the midterm exam, which covers lectures 1 to 10.
• Second, on June 20, 2013 (Thursday), you will have the second long quiz.

Our objective
• The first objective of our class is to comprehend a new programming language within a very short time. Because this ability shortens your learning curve, you will be able to manipulate the language with insight.
• The second objective is to engineer your own language!

Review what we've learnt and see what's next
[Figure: course roadmap. Boxes include: number systems (e.g., Egyptian number system; complement numbers); abacus; gate systems, including different underlying devices; 1st-generation language: machine code (e.g., MIPS); 2nd-generation language: assembly code; Fortran; regular expressions; 3rd-generation language: macro functions; basic calculation system; lexer; parser; compiler system; virtual machine; pushdown automata; context-free grammar; type checking; lambda calculus theory.]

Context-Free Language
• Languages that are generated by context-free grammars are context-free languages.
• Context-free grammars are more expressive than finite automata: if a language L is accepted by a finite automaton, then L can be generated by a context-free grammar.
• Beware: the converse is NOT true. For example, {aⁿbⁿ | n ≥ 0} is generated by S → aSb | ε, but no finite automaton accepts it.

Context-Free Grammar
Definition. A context-free grammar is a 4-tuple (Σ, NT, R, S), where:
• Σ is an alphabet (each character in Σ is called a terminal)
• NT is a finite set disjoint from Σ (each element in NT is called a nonterminal)
• R, the set of rules, is a finite subset of NT × (Σ ∪ NT)*; if (α, β) ∈ R, we write the production α → β
• S, the start symbol, is one of the symbols in NT
Any string over Σ ∪ NT that can be derived from S (for example, β in a production S → β) is called a sentential form.
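As a sketch (not part of the original slides), the 4-tuple can be written down directly in Python. The class name `CFG`, its field names, and the requirement that Σ and NT be disjoint are assumptions made for illustration; the checks only enforce the shape of the definition.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Rule = Tuple[str, Tuple[str, ...]]  # (A, beta) stands for the production A -> beta


@dataclass(frozen=True)
class CFG:
    """A context-free grammar as the 4-tuple (Sigma, NT, R, S)."""
    sigma: FrozenSet[str]   # terminals
    nt: FrozenSet[str]      # nonterminals
    rules: FrozenSet[Rule]  # R, a finite subset of NT x (Sigma u NT)*
    start: str              # S, one of the symbols in NT

    def __post_init__(self) -> None:
        # Assumption: terminals and nonterminals are disjoint symbol sets.
        if self.sigma & self.nt:
            raise ValueError("Sigma and NT must be disjoint")
        if self.start not in self.nt:
            raise ValueError("the start symbol must be a nonterminal")
        symbols = self.sigma | self.nt
        for lhs, rhs in self.rules:
            if lhs not in self.nt:
                raise ValueError(f"left side {lhs!r} is not in NT")
            for sym in rhs:
                if sym not in symbols:
                    raise ValueError(f"unknown symbol {sym!r} in a rule for {lhs!r}")


# Example: S -> aSb | epsilon, which generates {a^n b^n | n >= 0}
anbn = CFG(
    sigma=frozenset({"a", "b"}),
    nt=frozenset({"S"}),
    rules=frozenset({("S", ("a", "S", "b")), ("S", ())}),
    start="S",
)
```

An empty tuple encodes the ε right-hand side; a rule whose left side is a terminal raises `ValueError`.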

Alternate Definition: Context-Free Grammars
A grammar G = (V, T, S, P) consists of:
• V: variables
• T: terminal symbols
• S: the start variable
• P: productions of the form A → α, where α is a string of variables and terminals
(Courtesy Costas Busch, RPI)

Properties of PDAs

We had two ways to describe regular languages:
• Regular expressions (syntactic)
• DFA / NFA (computational)
How about context-free languages?
• CFG (syntactic)
• PDA (computational)


CFG = PDA
Theorem: A language is context-free iff some pushdown automaton recognizes it.
Proof:
• CFL → PDA: we show that if L is a CFL, then a PDA recognizes it.
• PDA → CFL: we show that if a PDA recognizes L, then L is a CFL.


From CFG to PDA
Proof idea: use the PDA to simulate leftmost derivations.
• Leftmost derivation: a derivation of a string is a leftmost derivation if at every step the leftmost remaining variable is the one replaced.
• We use the stack to store the part of the current sentential form that has not been matched against the input yet (everything from the leftmost variable on).
• Any terminal symbols appearing before the leftmost variable are matched against the input right away.

Different derivations for the same parse tree
CFG: E → E×E | E+E
     E → 0 | 1 | 2 | … | 9
[Figure: parse tree for 5+3×2. The root E → E×E; the left E → E+E, yielding 5 and 3; the right E yields 2.]
Leftmost derivation:  E ⇒ E×E ⇒ E+E×E ⇒ 5+E×E ⇒ 5+3×E ⇒ 5+3×2
Rightmost derivation: E ⇒ E×E ⇒ E×2 ⇒ E+E×2 ⇒ E+3×2 ⇒ 5+3×2
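The two derivations can be replayed mechanically. This hypothetical `derive` helper assumes one character per grammar symbol and writes `*` for ×; at each step it rewrites the leftmost (or rightmost) variable and rejects a rule that does not apply there.

```python
from typing import List, Sequence, Set, Tuple

Rule = Tuple[str, str]  # (A, alpha); one character per symbol, so strings are sentential forms


def derive(start: str, nonterminals: Set[str], steps: Sequence[Rule],
           leftmost: bool = True) -> List[str]:
    """Apply each rule to the leftmost (or rightmost) variable and
    return every sentential form along the way."""
    forms = [start]
    for lhs, rhs in steps:
        form = forms[-1]
        positions = [i for i, sym in enumerate(form) if sym in nonterminals]
        if not positions:
            raise ValueError(f"{form!r} has no variable left to replace")
        i = positions[0] if leftmost else positions[-1]
        if form[i] != lhs:
            raise ValueError(f"rule {lhs}->{rhs} does not apply at position {i} of {form!r}")
        forms.append(form[:i] + rhs + form[i + 1:])
    return forms


# E -> E*E | E+E | 0 | ... | 9, with '*' standing in for the multiplication sign
V = {"E"}
left = derive("E", V, [("E", "E*E"), ("E", "E+E"), ("E", "5"), ("E", "3"), ("E", "2")])
right = derive("E", V, [("E", "E*E"), ("E", "2"), ("E", "E+E"), ("E", "3"), ("E", "5")],
               leftmost=False)
print(" => ".join(left))   # E => E*E => E+E*E => 5+E*E => 5+3*E => 5+3*2
print(" => ".join(right))  # E => E*E => E*2 => E+E*2 => E+3*2 => 5+3*2
```

Both runs use the same five rules in a different order and end at the same string, which is why they correspond to the same parse tree.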


Example: the PDA simulates the leftmost derivation of 5+3×2
(stack top on the left; $ marks the bottom of the stack)

Action                        Remaining input   Stack
(start)                       5+3×2             E$
expand E → E×E                5+3×2             E×E$
expand E → E+E                5+3×2             E+E×E$
expand E → 5                  5+3×2             5+E×E$
match 5                       +3×2              +E×E$
match +                       3×2               E×E$
expand E → 3                  3×2               3×E$
match 3                       ×2                ×E$
match ×                       2                 E$
expand E → 2                  2                 2$
match 2                       (empty)           $
pop $, go to accept state     (empty)           (empty)

The string '5+3×2' is accepted.


From CFG to PDA
Informally:
1. Place the marker symbol $ and the start variable S on the stack.
2. Repeat the following steps:
   – If the top of the stack is a variable A: choose a rule A → α1…αk and substitute A with α1…αk.
   – If the top of the stack is a terminal a: read the next input symbol and compare it to a. If they don't match, reject (this branch dies).
   – If the top of the stack is $, go to the accept state.
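The informal algorithm is nondeterministic: at a variable, the PDA guesses a rule. One way to run it, sketched below under stated assumptions, is to explore all guesses by depth-first search over (input position, stack) configurations. `pda_accepts` is a hypothetical name; it assumes single-character symbols and a grammar with no ε-rules, so a branch whose stack holds more symbols than there is input left can be pruned, which keeps the search finite.

```python
from typing import Dict, List, Set, Tuple

Grammar = Dict[str, List[str]]  # variable -> right-hand sides (one character per symbol)


def pda_accepts(grammar: Grammar, start: str, word: str) -> bool:
    """Explore the choices of the CFG-to-PDA construction by depth-first search.

    A configuration is (input position, stack), with the stack top at index 0.
    Assumes no epsilon-rules, so every symbol above $ must produce at least one
    input character; that bound makes the set of configurations finite.
    """
    seen: Set[Tuple[int, str]] = set()
    todo: List[Tuple[int, str]] = [(0, start + "$")]  # step 1: S on top of $
    while todo:
        pos, stack = todo.pop()
        if (pos, stack) in seen:
            continue
        seen.add((pos, stack))
        top, rest = stack[0], stack[1:]
        if top == "$":  # accept only if the whole input has been read
            if pos == len(word):
                return True
            continue
        if len(stack) - 1 > len(word) - pos:  # more symbols than input left: dead branch
            continue
        if top in grammar:  # variable: try every rule A -> alpha
            for rhs in grammar[top]:
                todo.append((pos, rhs + rest))
        elif pos < len(word) and word[pos] == top:  # terminal: must match next input
            todo.append((pos + 1, rest))
        # otherwise the terminal does not match and this branch dies
    return False


expr = {"E": ["E*E", "E+E"] + [str(d) for d in range(10)]}
print(pda_accepts(expr, "E", "5+3*2"))
```

A real PDA would make the choices nondeterministically; the explicit search is only one way to simulate that on a deterministic machine.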

From CFG to PDA
For a given CFG G = (V, Σ, S, R), we construct a PDA P = (Q, Σ, Γ, δ, q0, F) where:
– Q = {q_start, q_loop, q_accept}
– Γ = V ∪ Σ ∪ {$}
– q0 = q_start
– F = {q_accept}

From CFG to PDA
We define δ as follows (shorthand notation):
– δ(q_start, ε, ε) = {(q_loop, S$)}
– δ(q_loop, ε, A) = {(q_loop, α1…αk) | for each A → α1…αk in R}
– δ(q_loop, a, a) = {(q_loop, ε)} for each a ∈ Σ
– δ(q_loop, ε, $) = {(q_accept, ε)}
[Figure: state diagram q_start --ε,ε→S$--> q_loop --ε,$→ε--> q_accept, where q_loop has the self-loops {ε,A→α1…αk | for rules A → α1…αk} and {a,a→ε | for all a ∈ Σ}. An edge label a,b→c means: read a, pop b, push c.]

Example
Construct a PDA for the following CFG G:
S → aTb | b
T → Ta | ε
[Figure: the resulting PDA q_start --ε,ε→S$--> q_loop --ε,$→ε--> q_accept, where q_loop has the self-loops ε,S→aTb; ε,S→b; ε,T→Ta; ε,T→ε; a,a→ε; b,b→ε.]
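The transition function can also be generated mechanically. A minimal sketch, assuming single-character grammar symbols, the empty string for ε, and the state names `q_start`, `q_loop`, `q_accept` chosen for illustration; pushed strings are written with the top of the stack first, as in S$. It is applied to the example grammar S → aTb | b, T → Ta | ε.

```python
from typing import Dict, List, Set, Tuple

EPS = ""                    # the empty string stands for epsilon
Key = Tuple[str, str, str]  # (state, input symbol or EPS, popped symbol or EPS)
Move = Tuple[str, str]      # (next state, pushed string with its top symbol first)


def cfg_to_pda_delta(terminals: Set[str], rules: List[Tuple[str, str]],
                     start: str) -> Dict[Key, Set[Move]]:
    """Build delta for the three-state PDA of the CFG-to-PDA construction."""
    delta: Dict[Key, Set[Move]] = {}

    def add(key: Key, move: Move) -> None:
        delta.setdefault(key, set()).add(move)

    add(("q_start", EPS, EPS), ("q_loop", start + "$"))  # push S$
    for lhs, rhs in rules:                                # expand a variable
        add(("q_loop", EPS, lhs), ("q_loop", rhs))
    for a in terminals:                                   # match a terminal
        add(("q_loop", a, a), ("q_loop", EPS))
    add(("q_loop", EPS, "$"), ("q_accept", EPS))          # bottom marker: accept
    return delta


# The example grammar: S -> aTb | b, T -> Ta | epsilon
delta = cfg_to_pda_delta({"a", "b"}, [("S", "aTb"), ("S", "b"), ("T", "Ta"), ("T", EPS)], "S")
for key in sorted(delta):
    print(key, "->", sorted(delta[key]))
```

Each dictionary entry corresponds to one edge group of the state diagram: the expand loops, the match loops, and the two edges into and out of q_loop.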

From PDA to CFG
Goal: given a pushdown automaton, construct an equivalent context-free grammar.
First, we simplify the PDA so that:
– It has a single accept state q_f.
– $ is always popped exactly before accepting.
– Each transition is either a push or a pop, but not both.

From PDA to CFG: a single accept state q_f
[Figure: every old accept state gets an ε,ε→ε transition to the new single accept state q_f.]

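From PDA to CFG: $ is always popped exactly before accepting
[Figure: before reaching q_f, a new state empties the stack with the self-loops {ε,A→ε | A ∈ Γ, A ≠ $} and then pops the marker with ε,$→ε.]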

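From PDA to CFG: each transition is either a push or a pop
[Figure: a transition σ,a→b that both pops and pushes is split through a new state into σ,a→ε followed by ε,ε→b; a transition σ,ε→ε that neither pops nor pushes is split into σ,ε→z followed by ε,z→ε, for some z ∈ Γ.]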

From PDA to CFG
For any word w accepted by a PDA P = (Q, Σ, Γ, δ, q0, q_f), the process starts at q0 with an empty stack and ends at q_f with an empty stack.
Definition: for any two states p, q ∈ Q, we define L_{p,q} to be the language of all words w such that, if we start at p with an empty stack and run on w, we end at q with an empty stack.
For each L_{p,q} we define a variable A_{p,q} such that L_{p,q} = {w | A_{p,q} ⇒* w}.
Note that L(P) = L_{q0,q_f}.

From PDA to CFG
Let P = (Q, Σ, Γ, δ, q0, q_f) be a given PDA. We construct a CFG G = (V, Σ, S, R) as follows*:
• V = {A_{p,q} | p, q ∈ Q}
• S = A_{q0,q_f}
• R is a set of rules constructed as described next.
* Proof of correctness and further reading in the supplementary material.

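From PDA to CFG
Add the following rules to R:
1. For each p, q, r, s ∈ Q, t ∈ Γ, and a, b ∈ Σ ∪ {ε}: if (r, t) ∈ δ(p, a, ε) and (q, ε) ∈ δ(s, b, t), add the rule A_{p,q} → a A_{r,s} b.
   [Figure: p --a,ε→t--> r ... s --b,t→ε--> q: the first move pushes t and the last move pops the same t.]
2. For each p, q, r ∈ Q, add the rule A_{p,q} → A_{p,r} A_{r,q}.
   [Figure: the stack becomes empty at an intermediate state r.]
3. For each p ∈ Q, add the rule A_{p,p} → ε.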
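The three rule schemas translate almost directly into code. This sketch assumes the PDA is already simplified (each transition pushes exactly one symbol or pops exactly one symbol) and encodes transitions as hypothetical tuples (source, read, pop, target, push), with "" for ε. The states q0 to q4 in the usage lines are invented names for the 0ⁿ#1ⁿ example that follows, with q4 standing for the extra state created when #,ε→ε is split into a push and a pop of z.

```python
from itertools import product
from typing import Iterable, Set, Tuple

Transition = Tuple[str, str, str, str, str]  # (source, read, pop, target, push); "" = epsilon
Rule = Tuple[str, Tuple[str, ...]]          # (variable, right-hand side symbols)


def var(p: str, q: str) -> str:
    return f"A[{p},{q}]"


def pda_to_cfg_rules(states: Iterable[str], transitions: Iterable[Transition]) -> Set[Rule]:
    """Rules 1-3 of the PDA-to-CFG construction for a simplified PDA."""
    qs = list(states)
    ts = list(transitions)
    pushes = [(p, a, r, t) for (p, a, pop, r, t) in ts if pop == "" and t != ""]
    pops = [(s, b, t, q) for (s, b, t, q, push) in ts if t != "" and push == ""]
    rules: Set[Rule] = set()
    # Rule 1: a push of t from p to r, matched by a pop of the same t from s to q.
    for (p, a, r, t), (s, b, t2, q) in product(pushes, pops):
        if t == t2:
            rules.add((var(p, q), tuple(x for x in (a, var(r, s), b) if x)))
    # Rule 2: the stack becomes empty at some intermediate state r.
    for p, q, r in product(qs, repeat=3):
        rules.add((var(p, q), (var(p, r), var(r, q))))
    # Rule 3: A_pp -> epsilon (an empty right-hand side).
    for p in qs:
        rules.add((var(p, p), ()))
    return rules


# Hypothetical state names for the simplified 0^n#1^n PDA.
T = [
    ("q0", "", "", "q1", "$"),    # push the bottom marker
    ("q1", "0", "", "q1", "A"),   # one A per 0
    ("q1", "#", "", "q4", "z"),   # #,eps->eps split into a push of z ...
    ("q4", "", "z", "q2", ""),    # ... and a pop of z
    ("q2", "1", "A", "q2", ""),   # one A popped per 1
    ("q2", "", "$", "q3", ""),    # pop the marker and accept
]
R = pda_to_cfg_rules(["q0", "q1", "q2", "q3", "q4"], T)
```

Among the generated rules are A[q0,q3] → A[q1,q2], A[q1,q2] → 0 A[q1,q2] 1 and A[q1,q2] → # A[q4,q4], which together with A[q4,q4] → ε derive exactly 0ⁿ#1ⁿ; most of the |Q|³ type-2 rules are never useful in a derivation.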


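Example
[Figure: a PDA P with L(P) = {0ⁿ#1ⁿ | n ≥ 0}. From the start state, ε,ε→$ pushes the bottom marker; a self-loop 0,ε→A pushes one A per 0; a transition #,ε→ε moves to the second half; a self-loop 1,A→ε pops one A per 1; finally ε,$→ε pops the marker and enters the accept state.]
After simplification, the transition #,ε→ε (neither a push nor a pop) is split into #,ε→z into a new state, followed by ε,z→ε.
[Figure: the productions generated by the construction, including A_{p,p} → ε for every state p, A_{p,q} → A_{p,r} A_{r,q} for all states p, q, r, and type-1 rules that pair each push with the matching pop (for example, the push 0,ε→A together with the pop 1,A→ε gives a rule of the form A_{p,q} → 0 A_{r,s} 1).]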

How to determine whether a language is context-free?
Some languages seem not to be context-free. The question is: which?
The pumping lemma for context-free languages can be used to show that a language is not context-free.

The Pumping Lemma: background
Let L be a CFL and let G be a simple grammar (no unit rules and no ε-rules) generating it.
Let w ∈ L be a long enough word (we will say later what "long" means).
The parse tree of w contains a long path from S to some leaf (terminal).
On this long path some variable R must repeat (remember, w is long).

Divide w into uvxyz according to the parse tree, as in the figure. Each occurrence of R has a subtree under it.
[Figure: parse tree rooted at S with two occurrences of R on one path; the upper R derives vxy and the lower R derives x, so w = uvxyz.]

The upper occurrence of R has a larger subtree and generates vxy.
The lower occurrence of R has a smaller subtree and generates only x.
Both subtrees are generated by the same variable R. That means if we substitute one for the other, we still obtain valid parse trees.
[Figure: three parse trees: the original (uvxyz); the lower R replaced by a copy of the upper subtree (uvvxyyz); the upper R replaced by the lower subtree (uxz).]
Replacing the smaller subtree by the larger one repeatedly generates the string uvⁱxyⁱz for each i > 0.
Replacing the larger subtree by the smaller one generates the string uxz, i.e., uvⁱxyⁱz with i = 0.
Therefore, for all i ≥ 0, wᵢ = uvⁱxyⁱz is also in L.

The Pumping Length
That means that every CFL has a special value called the pumping length such that all strings of length at least the pumping length can be "pumped".
The string can be divided into 5 parts: w = uvxyz.
The second and fourth parts (v and y) can be pumped to produce additional words in L: for all k ≥ 0, wₖ = uvᵏxyᵏz can also be generated by the grammar.

Pumping Lemma for CFL
Lemma: Let L be a context-free language. There is a positive integer p (the pumping length) such that every string w ∈ L with |w| ≥ p can be divided into five pieces w = uvxyz satisfying the following conditions:
1. |vy| > 0
2. |vxy| ≤ p
3. for each i ≥ 0, uvⁱxyⁱz ∈ L
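Conditions 1 to 3 can be checked by brute force on a single word. The sketch below, an illustration that is not in the original slides, enumerates every split w = uvxyz with |vy| > 0 and |vxy| ≤ p and tests a few exponents i; the languages {aⁿbⁿ} and {aⁿbⁿcⁿ} and the function names are assumed examples. Because it tries only one p and finitely many i, a `None` result only illustrates why {aⁿbⁿcⁿ} resists pumping; it is not a proof.

```python
from typing import Callable, Optional, Sequence, Tuple

Split = Tuple[str, str, str, str, str]


def in_anbn(s: str) -> bool:
    n = len(s) // 2
    return s == "a" * n + "b" * n


def in_anbncn(s: str) -> bool:
    n = len(s) // 3
    return s == "a" * n + "b" * n + "c" * n


def pumpable_split(w: str, p: int, member: Callable[[str], bool],
                   exponents: Sequence[int] = (0, 1, 2, 3)) -> Optional[Split]:
    """Return a split (u, v, x, y, z) of w that meets conditions 1 and 2 and whose
    pumped strings u v^i x y^i z are in the language for every tested i, else None."""
    n = len(w)
    for i in range(n + 1):                  # u = w[:i]
        for j in range(i, n + 1):           # v = w[i:j]
            for k in range(j, n + 1):       # x = w[j:k]
                for m in range(k, n + 1):   # y = w[k:m], z = w[m:]
                    u, v, x, y, z = w[:i], w[i:j], w[j:k], w[k:m], w[m:]
                    if not (v or y) or len(v) + len(x) + len(y) > p:  # conditions 1, 2
                        continue
                    if all(member(u + v * e + x + y * e + z) for e in exponents):
                        return (u, v, x, y, z)
    return None


print(pumpable_split("aaabbb", 3, in_anbn))
print(pumpable_split("aaabbbccc", 3, in_anbncn))
```

For aⁿbⁿcⁿ, any window vxy of length at most 3 touches at most two of the three letter blocks, so pumping down changes some counts but not all of them.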

Proof: value of p
First we find out the value of p.
Let G be a CFG for the CFL L.
Let b be the maximum number of symbols in the right side of any rule in G.
So in any parse tree of G, a node can't have more than b children.
So if the height of a parse tree for w ∈ L is h, then |w| ≤ b^h (equivalently, h ≥ log_b |w|).
[Figure: a rule A → α1α2α3…αb yields a node with at most b children, so each level of the tree multiplies the number of leaves by at most b.]

Let |V| be the number of variables in G.
We set p = b^(|V|+2), so that log_b p = |V|+2.
Then for any string of length at least p, the parse tree requires height at least |V|+2 (note that b > 1, since there are no unit rules).
Given a string w ∈ L such that |w| ≥ p: since G has only |V| variables, at least one of the variables repeats on a longest root-to-leaf path (a path of height |V|+2 contains at least |V|+1 variables plus a terminal).
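The bound can be computed directly from a grammar. A minimal sketch using the slide's choice p = b^(|V|+2); the dict encoding (one character per symbol) and the function name are assumptions, and the grammar is assumed to have no unit rules and no ε-rules.

```python
from typing import Dict, List


def pumping_length(grammar: Dict[str, List[str]]) -> int:
    """p = b^(|V|+2), where b is the longest right-hand side and |V| the
    number of variables (assumes no unit rules and no epsilon-rules)."""
    b = max(len(rhs) for rhss in grammar.values() for rhs in rhss)
    return b ** (len(grammar) + 2)


expr = {"E": ["E*E", "E+E"] + [str(d) for d in range(10)]}
print(pumping_length(expr))  # b = 3, |V| = 1, so p = 3**3 = 27
```

The value is an upper bound chosen to make the proof work; the lemma only needs some such p to exist, and smaller pumping lengths may also be valid.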

W.l.o.g., assume this variable is R.

Proof: condition 1
To prove condition 1 (|vy| > 0), we have to show that it is impossible for both v and y to be ε.
We use a grammar without unit rules.
But the only way to have v = y = ε is to have a rule R → R, which is a unit rule. Contradiction.
So condition 1 is satisfied.
[Figure: parse tree rooted at S with the two occurrences of R; w = uvxyz.]

Proof: condition 2
To prove condition 2 (|vxy| ≤ p), we check the height of the subtree rooted at the first (upper) R, i.e., the subtree that generates vxy.
Its height is at most |V|+2 (R was selected as a variable that has two occurrences within the bottom |V|+1 levels of the parse tree).
So it can generate a string of length at most b^(|V|+2).
Since p = b^(|V|+2), condition 2 is satisfied.

Proof: condition 3
[Figure: the original parse tree for uvxyz, and the trees obtained by substituting the subtrees of the two occurrences of R for each other.]
Replacing the smaller subtree by the larger one repeatedly generates the string uvⁱxyⁱz for each i > 0.
Replacing the larger subtree by the smaller one generates the string uxz, i.e., uvⁱxyⁱz with i = 0.
Therefore, for all i ≥ 0, wᵢ = uvⁱxyⁱz is also in L, and condition 3 is satisfied.

Syntax
• syntax: the form of expressions, statements, and program units in a programming language
  – programmers & implementers need a clear, unambiguous description
• formal methods for describing syntax:
  § Backus-Naur Form (BNF)
    – developed to describe ALGOL (originally by Backus, updated by Naur)
    – allowed for a clear, concise ALGOL 60 report
    – (paralleled grammar work by Chomsky: BNF = context-free grammar)
  § Extended BNF (EBNF)
  § syntax graphs

BNF is a meta-language
• a grammar is a collection of rules that define a language
  § rules can be conditional, using | to represent OR
    <IF-STMT> → if <LOGIC-EXPR> then <STMT>
              | if <LOGIC-EXPR> then <STMT> else <STMT>
  § BNF rules define abstractions in terms of terminal symbols and other abstractions
    <ASSIGN> → <VAR> := <EXPRESSION>
  § arbitrarily long expressions can be defined using recursion
    <IDENT-LIST> → <IDENTIFIER>
                 | <IDENTIFIER> , <IDENT-LIST>

Deriving expressions from a grammar
• from ALGOL 60:
  <letter>     → a | b | c | ... | z | A | B | ... | Z
  <digit>      → 0 | 1 | 2 | ... | 9
  <identifier> → <letter>
               | <identifier> <letter>
               | <identifier> <digit>
• we can derive language elements (i.e., substitute definitions for abstractions):
  <identifier> ⇒ <identifier> <digit>
               ⇒ <identifier> <letter> <digit>
               ⇒ <letter> <letter> <digit>
               ⇒ C <letter> <digit>
               ⇒ CU <digit>
               ⇒ CU1
• the above is a leftmost derivation (expand the leftmost abstraction first)
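Because the recursion in <identifier> is on the left, an identifier is just one <letter> followed by any mix of letters and digits. The hypothetical helpers below check that and rebuild the leftmost derivation of CU1 in the same order as above; the ASCII letter set is an assumption about which characters <letter> covers.

```python
import string
from typing import List

LETTERS = set(string.ascii_letters)  # <letter> -> a | ... | z | A | ... | Z
DIGITS = set(string.digits)          # <digit>  -> 0 | ... | 9


def is_identifier(s: str) -> bool:
    """<identifier> -> <letter> | <identifier> <letter> | <identifier> <digit>.
    The left recursion means: one letter, then any letters or digits."""
    return bool(s) and s[0] in LETTERS and all(c in LETTERS or c in DIGITS for c in s[1:])


def leftmost_derivation(s: str) -> List[str]:
    """Sentential forms of the leftmost derivation of identifier s."""
    if not is_identifier(s):
        raise ValueError(f"{s!r} is not an identifier")
    kinds = ["<letter>" if c in LETTERS else "<digit>" for c in s]
    # Grow <identifier> -> <identifier> <letter|digit> until one character is left.
    forms = [" ".join(["<identifier>"] + kinds[k:]) for k in range(len(s), 0, -1)]
    forms.append(" ".join(kinds))  # <identifier> -> <letter>
    # Replace the leftmost abstraction by its character, one at a time.
    forms += [" ".join([s[:j]] + kinds[j:]) for j in range(1, len(s) + 1)]
    return forms


for form in leftmost_derivation("CU1"):
    print(form)
```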

Derivations vs. parse trees
<identifier> ⇒ <identifier> <digit>
             ⇒ <identifier> <letter> <digit>
             ⇒ <letter> <letter> <digit>
             ⇒ C <letter> <digit>
             ⇒ CU <digit>
             ⇒ CU1
• a derivation can be represented hierarchically as a parse tree
  – internal nodes are abstractions
  – leaf nodes are terminal symbols
[Figure: parse tree for CU1. The root <identifier> has children <identifier> and <digit> (→ 1); that <identifier> has children <identifier> and <letter> (→ U); the innermost <identifier> → <letter> → C.]

Ambiguous grammars
• consider a grammar for simple assignments:
  <assign> → <id> := <expr>
  <id>     → A | B | C
  <expr>   → <expr> + <expr>
           | <expr> * <expr>
           | ( <expr> )
           | <id>
• a grammar is ambiguous if there exist sentences with 2 or more distinct parse trees, e.g., A := A + B * C
[Figure: two parse trees for A := A + B * C. In the first, + is at the top: <expr> → <expr> + <expr>, with the right operand expanding to B * C, i.e., A + (B * C). In the second, * is at the top: <expr> → <expr> * <expr>, with the left operand expanding to A + B, i.e., (A + B) * C.]
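One way to see the ambiguity concretely is to count parse trees. This sketch (an illustration, not from the slides) counts, by dynamic programming over token spans, the ways an <expr> without parentheses can be split at a top-level + or *; it assumes single-character tokens, and the function name is hypothetical.

```python
from functools import lru_cache


def count_parse_trees(expr: str) -> int:
    """Count distinct parse trees of an <expr> under the ambiguous rules
    <expr> -> <expr> + <expr> | <expr> * <expr> | <id>, <id> -> A | B | C.
    Assumes single-character tokens and no parentheses."""
    tokens = expr.replace(" ", "")

    @lru_cache(maxsize=None)
    def count(i: int, j: int) -> int:
        # number of ways tokens[i:j] can be derived from <expr>
        if j - i == 1:
            return 1 if tokens[i] in "ABC" else 0
        total = 0
        for k in range(i + 1, j - 1):  # choose the operator at the root
            if tokens[k] in "+*":
                total += count(i, k) * count(k + 1, j)
        return total

    return count(0, len(tokens))


print(count_parse_trees("A + B * C"))
```

Any result above 1 means the sentence is ambiguous under this grammar; with n operators the count grows like the Catalan numbers.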

Ambiguity is bad!
• programmer's perspective: need to know how code will behave
• language implementer's perspective: need to know how the compiler/interpreter should behave
• can build concepts such as operator precedence into grammars
  § introduce a hierarchy of rules: lower level → higher precedence
  <assign> → <id> := <expr>
  <id>     → A | B | C
  <expr>   → <expr> + <term> | <term>
  <term>   → <term> * <factor> | <factor>
  <factor> → ( <expr> ) | <id>
• higher-precedence operators bind tighter, e.g., A+B*C ≡ A+(B*C)
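The layered grammar maps onto a recursive-descent parser, one method per abstraction. Recursive descent cannot follow left-recursive rules literally, so this sketch swaps <expr> → <expr> + <term> for an equivalent loop that still groups left to right. The class and method names are hypothetical, only <expr> (not <assign>) is parsed, and the output is fully parenthesized to show the grouping.

```python
from typing import List


class Parser:
    def __init__(self, src: str) -> None:
        self.tokens: List[str] = [c for c in src if not c.isspace()]
        self.pos = 0

    def peek(self) -> str:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else ""

    def eat(self, tok: str) -> None:
        if self.peek() != tok:
            raise SyntaxError(f"expected {tok!r} at position {self.pos}")
        self.pos += 1

    # <expr> -> <expr> + <term> | <term>, written as a loop (left-associative)
    def expr(self) -> str:
        result = self.term()
        while self.peek() == "+":
            self.eat("+")
            result = f"({result}+{self.term()})"
        return result

    # <term> -> <term> * <factor> | <factor>
    def term(self) -> str:
        result = self.factor()
        while self.peek() == "*":
            self.eat("*")
            result = f"({result}*{self.factor()})"
        return result

    # <factor> -> ( <expr> ) | <id>
    def factor(self) -> str:
        if self.peek() == "(":
            self.eat("(")
            inner = self.expr()
            self.eat(")")
            return inner
        tok = self.peek()
        if tok not in ("A", "B", "C"):
            raise SyntaxError(f"expected an <id> at position {self.pos}")
        self.pos += 1
        return tok


def group(src: str) -> str:
    parser = Parser(src)
    result = parser.expr()
    if parser.peek():
        raise SyntaxError(f"unexpected {parser.peek()!r} at position {parser.pos}")
    return result


print(group("A + B * C"))
print(group("A + B + C"))
```

Because term() is called inside expr(), every * is grouped before the surrounding +, which is the same fact the parse tree shows by placing + above *.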

Operator precedence
<assign> → <id> := <expr>
<id>     → A | B | C
<expr>   → <expr> + <term> | <term>
<term>   → <term> * <factor> | <factor>
<factor> → ( <expr> ) | <id>
Example: A := A + B * C
[Figure: parse tree for A := A + B * C. <expr> → <expr> + <term>; the left <expr> → <term> → <factor> → <id> → A; the right <term> → <term> * <factor>, yielding B and C.]
Note: because of the hierarchy, + must appear above * in the parse tree. If we tried to put * above +, we would not be able to derive + from <term>.
In general, lower-precedence (looser-binding) operators appear above higher-precedence operators in the parse tree.

Operator associativity
When we combine operators to form expressions, the order in which the operators are to be applied may not be obvious. For example, a + b + c can be interpreted as ((a + b) + c) or as (a + (b + c)). We say that + is left-associative if operands are grouped left to right, as in ((a + b) + c). We say it is right-associative if it groups operands in the opposite direction, as in (a + (b + c)). (A.V. Aho & J.D. Ullman 1977, p. 47)
<assign> → <id> := <expr>
<id>     → A | B | C
<expr>   → <expr> + <term> | <term>
<term>   → <term> * <factor> | <factor>
<factor> → ( <expr> ) | <id>
Example: A := A + B + C
[Figure: parse tree for A := A + B + C. The left-recursive rule <expr> → <expr> + <term> places the first + lower in the tree, grouping the operands as ((A + B) + C).]

Right associativity
• suppose we wanted exponentiation ^ to be right-associative
  – need to add a right-recursive level to the grammar hierarchy
<assign> → <id> := <expr>
<id>     → A | B | C
<expr>   → <expr> + <term> | <term>
<term>   → <term> * <factor> | <factor>
<factor> → <exp> ^ <factor> | <exp>
<exp>    → ( <expr> ) | <id>
Example: A := A ^ B ^ C
[Figure: parse tree for A := A ^ B ^ C. <factor> → <exp> ^ <factor>, so the second ^ appears lower, in the right subtree, grouping the operands as (A ^ (B ^ C)).]
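The right-recursive rule can be followed literally by a recursive-descent parser, since its recursion is on the right. This hypothetical `group_power` sketch handles only <factor> → <exp> ^ <factor> | <exp> with <exp> restricted to A, B, C (no parentheses) and returns the grouping it produces.

```python
def group_power(src: str) -> str:
    """Parse <factor> -> <exp> ^ <factor> | <exp>, with <exp> -> A | B | C,
    and return the expression fully parenthesized."""
    tokens = [c for c in src if not c.isspace()]
    pos = 0

    def exp() -> str:
        nonlocal pos
        if pos >= len(tokens) or tokens[pos] not in "ABC":
            raise SyntaxError(f"expected an <id> at position {pos}")
        pos += 1
        return tokens[pos - 1]

    def factor() -> str:
        nonlocal pos
        left = exp()
        if pos < len(tokens) and tokens[pos] == "^":
            pos += 1
            return f"({left}^{factor()})"  # right recursion => right associativity
        return left

    result = factor()
    if pos != len(tokens):
        raise SyntaxError(f"unexpected {tokens[pos]!r} at position {pos}")
    return result


print(group_power("A ^ B ^ C"))
```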

In ALGOL 60…
• <math expr>   → <simple math>
                | <if clause> <simple math> else <math expr>
• <if clause>   → if <boolean expr> then
• <simple math> → <term>
                | <add op> <term>
                | <simple math> <add op> <term>
• <term>        → <factor> | <term> <mult op> <factor>
• <factor>      → <primary> | <factor> ↑ <primary>
• <add op>      → + | −
• <mult op>     → × | / | %
• <primary>     → <unsigned number> | <variable>
                | <function designator> | ( <math expr> )

• precedence? associativity?

Dangling else
• consider the C++ grammar rule:
  <selection stmt> → if ( <expr> ) <stmt>
                   | if ( <expr> ) <stmt> else <stmt>
• potential problems?
  if (x > 0)
      if (x > 100)
          cout << foo << endl;
  else
      cout << bar << endl;
• ambiguity! To which if does the else belong?
• in C++, the ambiguity remains in the grammar rules; it is clarified in the English description (an else matches the nearest if)
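Most hand-written parsers resolve the dangling else without changing the grammar: when the parser for the inner if sees else, it greedily takes it. The sketch below uses a hypothetical token format (a condition is a single token; any token other than if and else is a simple statement) and builds ("if", cond, then, else) tuples.

```python
from typing import List, Optional, Tuple, Union

Stmt = Union[str, Tuple]  # a simple statement, or ("if", cond, then_branch, else_branch)


def parse_stmt(tokens: List[str], pos: int = 0) -> Tuple[Stmt, int]:
    """<stmt> -> if <cond> <stmt> [else <stmt>] | <simple>.
    Returns (tree, next position)."""
    if tokens[pos] != "if":
        return tokens[pos], pos + 1
    cond = tokens[pos + 1]
    then_branch, pos = parse_stmt(tokens, pos + 2)
    else_branch: Optional[Stmt] = None
    # Greedy choice: the innermost if still being parsed consumes the else,
    # which is exactly the "else matches the nearest if" rule.
    if pos < len(tokens) and tokens[pos] == "else":
        else_branch, pos = parse_stmt(tokens, pos + 1)
    return ("if", cond, then_branch, else_branch), pos


tree, end = parse_stmt(["if", "x>0", "if", "x>100", "print_foo", "else", "print_bar"])
print(tree)
```

The else ends up inside the inner if, regardless of how the source code is indented.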

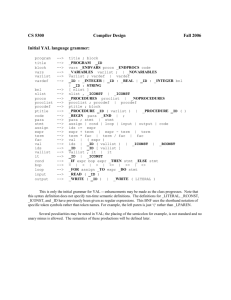

Example 1: Fortran/C/Pascal parse tree
Example 2: Perl number terminal tokens
<number>        ::= ((number_1to999 number_1e6)? (number_1to999 number_1e3)? number_1to999?) | number_0
<number_0>      ::= "zero"
<number_1to9>   ::= "one" | "two" | "three" | "four" | "five" | "six" | "seven" | "eight" | "nine"
<number_10to19> ::= "ten" | "eleven" | "twelve" | "thirteen" | "fourteen" | "fifteen" | "sixteen" | "seventeen" | "eighteen" | "nineteen"
<number_1to999> ::= (number_1to9? number_100)? (number_1to9 | number_10to19 | (number_tens number_1to9))?
<number_tens>   ::= "twenty" | "thirty" | "forty" | "fifty" | "sixty" | "seventy" | "eighty" | "ninety"
<number_100>    ::= "hundred"
<number_1e3>    ::= "thousand"
<number_1e6>    ::= "million"


Recall ……..
• first computers (e.g., ENIAC) were not programmable
  – had to be rewired/reconfigured for different computations
• late '40s / early '50s: coded directly in machine language
  – extremely tedious and error prone
  – machine specific
  – used numeric codes, absolute addressing
011111110100010101001100010001100000000100000010000000010000000000000000000000

000000000000000000000000000000000000000000000000000000000000000001000000000000

001000000000000000000000000000000001000000000000000000000000000000000000000000

000000000000000000000000000000000000000000001010000100000000000000000000000000

000000000000000000110100000000000000000000000000000000000000000000101000000000

000000100000000000000000010000000000101110011100110110100001110011011101000111

001001110100011000010110001000000000001011100111010001100101011110000111010000

000000001011100111001001101111011001000110000101110100011000010000000000101110

011100110111100101101101011101000110000101100010000000000010111001110011011101

000111001001110100011000010110001000000000001011100111001001100101011011000110

000100101110011101000110010101111000011101000000000000101110011000110110111101

101101011011010110010101101110011101000000000000000000000000000000000010011101

111000111011111110010000000100110000000000000000000000001001000000010010011000

000000000000010101000000000000000000000000100100100001001010100000000000000100

000000000000000000000000000000000001000000000000000000000000101000000001000000

000000000010001001000000010000000000000001000000010101000000000000000000000000

100100100001001010100000000000000100000000000000000000000000000000000001000000

000000000000000000101100000001000000000000000100001000000000000000000000100000

000100000000000000000000000010000001110001111110000000001000100000011110100000

000000000000000000000000000000000000000000000001001000011001010110110001101100

011011110111011101101111011100100110110001100100001000010000000000000000000000

000000000000000000000000000000000000000001000000000000000000000000000000000000

000000000000000000000000000000000100000000001111111111110001000000000000000000

000000000000010000000000000000000000000000000000000000000000000000000000000000

000001000000000011111111111100010000000000000000000000000000000000000000000000

000000000000000000000000000000000000000000000000000000001100000000000000000000

001100000000000000000000000000000000000000000000000000000000000000000000000000

000000000000000000000000000000000000000000010000000000000000000000000000000000

000000000000000000000000000000000000000000000000000000000000000000000110000000

000000000000000100000000000000000000000000001101000000000000000000000000000000

000000000000000000000000000000000000001000000000000000000000000000000000000000

000000000000000100010000000000000000000000000000000000000000000000000000000000

000000000010000000000000000000000000000000000000000000000000000010001100000000

000000000000000000000000000000000000000000000000000000000000100000000000000000

000000000000000000000000000000000000101100000000000000000000000000000000000000

000000000000000000000000000000001000000000000000000000000000000000000000000000

000000001101001000000000000000000000000000000000000000000000000000000000100100

000010010000000000000000000000010000000000000000000000000011011100000000000000

000000000000000000000000000000000000000000000000000000100000000000000000000000

000000000000001101000011001010110110001101100011011110010111001100011011100000

111000000000000011001110110001101100011001100100101111101100011011011110110110

101110000011010010110110001100101011001000010111000000000010111110101000101011

111011100010111010001101111011001000000000001011111010111110110110001110011010

111110101111100110111011011110111001101110100011100100110010101100001011011010

101000001000110010100100011011101101111011100110111010001110010011001010110000

101101101010111110101001000110111011011110111001101110100011100100110010101100

001011011010000000001011111010111110110110001110011010111110101111100110111011

011110111001101110100011100100110010101100001011011010101000001000011011000110

000000001100101011011100110010001101100010111110101111101000110010100100011011

101101111011100110111010001110010011001010110000101101101000000000110110101100

001011010010110111000000000011000110110111101110101011101000000000000000000000

000000000000000000000000000000000000000000000000000000

Recall ……..
• mid 1950s: assembly languages developed
  § mnemonic names replaced numeric codes
  § relative addressing via names and labels
  – a separate program (assembler) translated from assembly code to machine code
• still machine specific, low-level

        .file   "hello.cpp"
gcc2_compiled.:
.global _Q_qtod
.section ".rodata"
        .align 8
.LLC0:  .asciz "Hello world!"
.section ".text"
        .align 4
        .global main
        .type   main,#function
        .proc   04
main:
        !#PROLOGUE# 0
        save %sp,-112,%sp
        !#PROLOGUE# 1
        sethi %hi(cout),%o1
        or %o1,%lo(cout),%o0
        sethi %hi(.LLC0),%o2
        or %o2,%lo(.LLC0),%o1
        call __ls__7ostreamPCc,0
        nop
        mov %o0,%l0
        mov %l0,%o0
        sethi %hi(endl__FR7ostream),%o2
        or %o2,%lo(endl__FR7ostream),%o1
        call __ls__7ostreamPFR7ostream_R7ostream,0
        nop
        mov 0,%i0
        b .LL230
        nop
.LL230: ret
        restore
.LLfe1: .size   main,.LLfe1-main
        .ident  "GCC: (GNU) 2.7.2"

Recall ……..
• late 1950s: high-level languages developed
  § allowed the user to program at a higher level of abstraction
  § however, bridging the gap to low-level hardware was more difficult
• a compiler translated code all at once into machine code (e.g., FORTRAN, C++)
• an interpreter simulated execution of the code line-by-line (e.g., BASIC, Scheme)

// File: hello.cpp
// Author: Dave Reed
//
// This program prints "Hello world!"
////////////////////////////////////////

#include <iostream>
using namespace std;

int main()
{
    cout << "Hello world!" << endl;
    return 0;
}

Software development methodologies……
• by the '70s, software costs rivaled hardware costs
  → new development methodologies emerged
• early '70s: top-down design
  – stepwise (iterative) refinement (Pascal)
• late '70s: data-oriented programming
  – concentrated on the use of ADTs (Modula-2, Ada, C/C++)
• early '80s: object-oriented programming
  – ADTs + inheritance + dynamic binding (Smalltalk, C++, Eiffel, Java)
• mid '90s: extreme programming, agile programming (???)

Architecture influences design…….
• virtually all computers follow the von Neumann architecture
• fetch-execute cycle: repeatedly
  • fetch instructions/data from memory
  • execute in CPU
  • write results back to memory
• imperative languages parallel this behavior
  – variables (memory cells)
  – assignments (changes to memory)
  – sequential execution & iteration (fetch/execute cycle)
  – since their features resemble the underlying implementation, they tend to be efficient
• declarative languages emphasize problem-solving approaches far removed from the underlying hardware
  e.g., Prolog (logic): specify facts & rules, interpreter performs logical inference
        LISP/Scheme (functional): specify dynamic transformations to symbols & lists
  – tend to be more flexible and expressive, but not as efficient

FORTRAN (Formula Translator)
• FORTRAN was the first* high-level language
  – developed by John Backus at IBM
  – designed for the IBM 704 computer; all control structures corresponded to 704 machine instructions
  – 704 compiler completed in 1957
  – despite some early problems, FORTRAN was immensely popular, adopted universally in the '50s & '60s
  – FORTRAN evolved based on experience and new programming features:
    • FORTRAN II (1958)
    • FORTRAN IV (1962)
    • FORTRAN 77 (1977)
    • FORTRAN 90 (1990)

C
C     FORTRAN program
C     Prints "Hello world" 10 times
C
      PROGRAM HELLO
      DO 10, I=1,10
        PRINT *,'Hello world'
   10 CONTINUE
      STOP
      END

LISP (List Processing)
• LISP is a functional language
  – developed by John McCarthy at MIT
  – designed for Artificial Intelligence research; needed to be symbolic, flexible, dynamic
  – LISP interpreter completed in 1959
  – LISP syntax is very simple but flexible, based on the λ-calculus of Church
  – all memory management is dynamic and automatic; simple but inefficient
  – LISP was long the dominant language in AI
  – dialects of LISP have evolved:
    • Scheme (1975)
    • Common LISP (1984)

;;; LISP program
;;; (hello N) will return a list containing
;;;   N copies of "Hello world"

(define (hello N)
  (if (zero? N)
      '()
      (cons "Hello world" (hello (- N 1)))))

> (hello 10)
("Hello world"
 "Hello world"
 "Hello world"
 "Hello world"
 "Hello world"
 "Hello world"
 "Hello world"
 "Hello world"
 "Hello world"
 "Hello world")
>

ALGOL (Algorithmic Language)
• ALGOL was an international effort to design a universal language
  – developed by a joint committee of ACM and GAMM (German equivalent)
  – influenced by FORTRAN, but more flexible & powerful, not machine specific
  – ALGOL introduced and formalized many common language features of today:
    • data types
    • compound statements
    • natural control structures
    • parameter passing modes
    • recursive routines
    • BNF for syntax (Backus & Naur)
  – ALGOL evolved (58, 60, 68), but was not widely adopted as a programming language
    • instead, it was accepted as a reference language

comment ALGOL 60 PROGRAM
        displays "Hello world" 10 times;
begin
    integer counter;
    for counter := 1 step 1 until 10 do
    begin
        printstring("Hello world");
    end
end

C → C++ → Java → JavaScript
• ALGOL influenced the development of virtually all modern languages
  § C (1971, Dennis Ritchie at Bell Labs)
    • designed for systems programming (used to implement UNIX)
    • provided high-level constructs and low-level machine access
  § C++ (1985, Bjarne Stroustrup at Bell Labs)
    • extended C to include objects
    • allowed for object-oriented programming, with most of the efficiency of C
  § Java (1993, Sun Microsystems)
    • based on C++, but simpler & more reliable
    • purely object-oriented, with better support for abstraction and networking
  § JavaScript (1995, Netscape)
    • Web scripting language

#include <stdio.h>

int main(void) {
    for (int i = 0; i < 10; i++) {
        printf("Hello World!\n");
    }
    return 0;
}

#include <iostream>
using namespace std;

int main() {
    for (int i = 0; i < 10; i++) {
        cout << "Hello World!" << endl;
    }
    return 0;
}

class HelloWorld {
    public static void main(String args[]) {
        for (int i = 0; i < 10; i++) {
            System.out.print("Hello World ");
        }
    }
}

<html>
<body>
<script language="JavaScript">
for (var i = 0; i < 10; i++) {
    document.write("Hello World<br>");
}
</script>
</body>
</html>

Other influential languages
• COBOL (1960, Dept of Defense/Grace Hopper)
  – designed for business applications; features for structuring data & managing files
• BASIC (1964, Kemeny & Kurtz – Dartmouth)
  – designed for beginners; unstructured but popular on microcomputers in the '70s
• Simula 67 (1967, Nygaard & Dahl – Norwegian Computing Center)
  – designed for simulations; extended ALGOL to support classes/objects
• Pascal (1971, Wirth – ETH Zürich)
  – designed as a teaching language but used extensively; emphasized structured programming
• Prolog (1972, Colmerauer, Roussel – Aix-Marseille, Kowalski – Edinburgh)
  – logic programming language; programs stated as a collection of facts & rules
• Ada (1983, Dept of Defense)
  – large & complex (but powerful) language, designed to be the official government contract language

There is no silver bullet
• remember: there is no best programming language
  – each language has its own strengths and weaknesses
• languages can only be judged within a particular domain or for a specific application
  business applications → COBOL
  artificial intelligence → LISP/Scheme or Prolog
  systems programming → C
  software engineering → C++ or Java or Smalltalk
  Web development → Java or JavaScript or VBScript or Perl