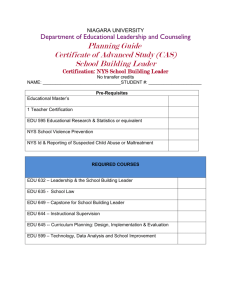

15-740/18-740 Computer Architecture Lecture 17: Asymmetric Multi-Core Prof. Onur Mutlu


Carnegie Mellon University, Fall 2011, 10/19/2011

Review Set 9
• Due today (October 19)
• Wilkes, “Slave Memories and Dynamic Storage Allocation,” IEEE Trans. on Electronic Computers, 1965.
• Jouppi, “Improving Direct-Mapped Cache Performance by the Addition of a Small Fully-Associative Cache and Prefetch Buffers,” ISCA 1990.
• Recommended:
  • Hennessy and Patterson, Appendix C.2 and C.3
  • Liptay, “Structural aspects of the System/360 Model 85 II: the cache,” IBM Systems Journal, 1968.
  • Qureshi et al., “A Case for MLP-Aware Cache Replacement,” ISCA 2006.

Readings for Today
• Accelerated Critical Sections and Data Marshaling
  • Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” IEEE Micro 2010. This is a shorter version of the ASPLOS 2009 paper; read the ASPLOS 2009 paper for details.
  • Suleman et al., “Data Marshaling for Multi-core Systems,” IEEE Micro 2011. This is a shorter version of the ISCA 2010 paper; read the ISCA 2010 paper for details.

Announcements
• Midterm I: next Monday (October 24)
• Exam review: likely this Friday during class time (October 21)
• Extra office hours: during the weekend; check with the TAs
• Milestone II: postponed. Stay tuned.

Last Lecture
• Dual-core execution
• Memory disambiguation

Today
• Research issues in out-of-order execution or latency tolerance
• Accelerated critical sections

Open Research Issues in OOO Execution (I)
• Performance with simplicity and energy-efficiency
• How to build scalable and energy-efficient instruction windows
• How to approximate the benefits of a large window
  • To tolerate very long memory latencies and to expose more memory-level parallelism
  • Problems:
    • How to scale (or avoid scaling) register files and store buffers
    • How to supply useful instructions into a large window in the presence of branches
• MLP benefits vs. ILP benefits
  • Can the compiler pack more misses (MLP) into a smaller window?
• How to approximate the benefits of OOO with in-order + enhancements

Open Research Issues in OOO Execution (II)
• OOO in the presence of multi-core
• More problems: memory system contention becomes a lot more significant with multi-core
  • OOO execution can overcome extra latencies due to contention
  • How to preserve the benefits (e.g., MLP) of OOO in a multi-core system?
• More opportunity: can we utilize multiple cores to perform more scalable OOO execution?
  • Improve single-thread performance using multiple cores
• Asymmetric multi-cores (ACMP): what should different cores look like in a multi-core system?
  • OOO is essential to execute serial code portions

Open Research Issues in OOO Execution (III)
• Out-of-order execution in the presence of multi-core
• Powerful execution engines are needed to execute:
  • Single-threaded applications
  • Serial sections of multithreaded applications (remember Amdahl’s law)
  • Code where single-thread performance matters (e.g., transactions, game logic)
  • Critical sections, to accelerate multithreaded applications
[Figure: three chip organizations: the “Tile-Large” approach (4 large cores), the “Niagara” approach (16 Niagara-like small cores), and the ACMP approach (1 large core + 12 Niagara-like small cores)]

Asymmetric vs. Symmetric Cores
Advantages of asymmetric:
+ Can provide better performance when thread parallelism is limited
+ Can be more energy efficient
+ Schedule computation to the core type that can best execute it
Disadvantages:
- Need to design more than one type of core. Always?
- Scheduling becomes more complicated
  - What computation should be scheduled on the large core?
  - Who should decide? HW vs. SW?
- Managing locality and load balancing can become difficult if threads move between cores (transparently to software)
- Cores have different demands from shared resources

A Case for Asymmetry
• Execution time of sequential kernels, critical sections, and limiter stages must be short
• It is difficult for the programmer to shorten these serial bottlenecks:
  • Insufficient domain-specific knowledge
  • Variation in hardware platforms
  • Limited resources
• Goal: a mechanism to shorten serial bottlenecks without requiring programmer effort
• Solution: ship serial code sections to a large, powerful core in an asymmetric multi-core processor

“Large” vs. “Small” Cores
Large core:
• Out-of-order
• Wide fetch, e.g., 4-wide
• Deeper pipeline
• Aggressive branch predictor (e.g., hybrid)
• Multiple functional units
• Trace cache
• Memory dependence speculation
Small core:
• In-order
• Narrow fetch, e.g., 2-wide
• Shallow pipeline
• Simple branch predictor (e.g., gshare)
• Few functional units
Large cores are power inefficient: e.g., 2x performance for 4x area (power).

Tile-Large Approach
[Figure: “Tile-Large” chip with 4 large cores]
• Tile a few large cores
• IBM Power 5, AMD Barcelona, Intel Core2Quad, Intel Nehalem
+ High performance on single thread, serial code sections (2 units)
- Low throughput on parallel program portions (8 units)

Tile-Small Approach
[Figure: “Tile-Small” chip with 16 small cores]
• Tile many small cores
• Sun Niagara, Intel Larrabee, Tilera TILE (tile ultra-small)
+ High throughput on the parallel part (16 units)
- Low performance on the serial part, single thread (1 unit)

Can we get the best of both worlds?
• Tile-Large
  + High performance on single thread, serial code sections (2 units)
  - Low throughput on parallel program portions (8 units)
• Tile-Small
  + High throughput on the parallel part (16 units)
  - Low performance on the serial part, single thread (1 unit); reduced single-thread performance compared to existing single-thread processors
• Idea: have both large and small cores on the same chip → performance asymmetry

Asymmetric Chip Multiprocessor (ACMP)
[Figure: “Tile-Large” (4 large cores), “Tile-Small” (16 small cores), and ACMP (1 large core + 12 small cores)]
• Provide one large core and many small cores
+ Accelerate the serial part using the large core (2 units)
+ Execute the parallel part on the small cores and the large core for high throughput (12 + 2 units)

Accelerating Serial Bottlenecks
[Figure: ACMP approach (1 large core + 12 small cores); the single thread runs on the large core]

Performance vs. Parallelism
Assumptions:
1. A small core takes an area budget of 1 and has performance of 1.
2. A large core takes an area budget of 4 and has performance of 2.

ACMP Performance vs. Parallelism
Area budget = 16 small cores
[Figure: Tile-Large, Tile-Small, and ACMP chip layouts]

                      Tile-Large   Tile-Small   ACMP
Large cores           4            0            1
Small cores           0            16           12
Serial performance    2            1            2
Parallel throughput   2x4 = 8      1x16 = 16    1x2 + 1x12 = 14

An Example: Accelerated Critical Sections
• Problem: synchronization and parallelization are difficult for programmers
  • Critical sections are a performance bottleneck
• Idea: HW/SW ships critical sections to a large, powerful core in an asymmetric multi-core
• Benefit:
  • Reduces serialization due to contended locks
  • Reduces the performance impact of hard-to-parallelize sections
  • Programmer does not need to (heavily) optimize parallel code → fewer bugs, improved productivity
• Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009, IEEE Micro Top Picks 2010.
• Suleman et al., “Data Marshaling for Multi-Core Architectures,” ISCA 2010, IEEE Micro Top Picks 2011.
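The ACMP performance-vs-parallelism table gives only the two endpoints: fully serial code (serial performance) and fully parallel code (parallel throughput). A minimal sketch can show the range in between. It assumes an Amdahl-style model in which a fraction f of the single-small-core execution time is perfectly parallel and runs at the chip's parallel throughput, while the rest runs on the fastest single core. The per-core numbers are the slide's assumptions (small core: area 1, performance 1; large core: area 4, performance 2). The names DESIGNS, serial_perf, parallel_throughput, and speedup are hypothetical.

```python
from fractions import Fraction

# Per-core assumptions from the "Performance vs. Parallelism" slide:
# a small core costs 1 unit of area and has performance 1,
# a large core costs 4 units of area and has performance 2.
SMALL_AREA, SMALL_PERF = 1, 1
LARGE_AREA, LARGE_PERF = 4, 2
AREA_BUDGET = 16

# Hypothetical encoding of the three equal-area designs on the slide.
DESIGNS = {
    "Tile-Large": {"large": 4, "small": 0},
    "Tile-Small": {"large": 0, "small": 16},
    "ACMP": {"large": 1, "small": 12},
}


def area(design):
    return design["large"] * LARGE_AREA + design["small"] * SMALL_AREA


def serial_perf(design):
    """Serial code runs on the fastest single core on the chip."""
    return LARGE_PERF if design["large"] > 0 else SMALL_PERF


def parallel_throughput(design):
    """Parallel code is assumed to use every core, large and small."""
    return design["large"] * LARGE_PERF + design["small"] * SMALL_PERF


def speedup(design, f):
    """Amdahl-style speedup over one small core when a fraction f of the
    single-small-core execution time is perfectly parallel."""
    f = Fraction(f)
    time = (1 - f) / serial_perf(design) + f / parallel_throughput(design)
    return 1 / time


if __name__ == "__main__":
    assert all(area(d) == AREA_BUDGET for d in DESIGNS.values())
    for f in ("0", "1/2", "9/10", "1"):
        cells = ", ".join(
            f"{name}={float(speedup(d, f)):.2f}" for name, d in DESIGNS.items()
        )
        print(f"f={f}: {cells}")
```

Under these assumptions, at f = 9/10 the sketch gives about 6.15 (Tile-Large), 6.40 (Tile-Small), and 8.75 (ACMP). The ACMP keeps Tile-Large's serial performance of 2 while giving up only 2 of Tile-Small's 16 units of parallel throughput.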
Contention for Critical Sections
[Figure: timelines (t1 to t7) of Threads 1 to 4 alternating between parallel work, critical sections, and idle time; a second timeline shows the same execution with critical sections executing 2x faster]
• Accelerating critical sections helps not only the thread executing the critical sections, but also the waiting threads

Impact of Critical Sections on Scalability
• Contention for critical sections increases with the number of threads and limits scalability
[Figure: MySQL (oltp-1), speedup vs. chip area (cores, 0 to 32), with the contended critical section below]

    LOCK_openAcquire()
    foreach (table locked by thread)
        table.lockrelease()
        table.filerelease()
        if (table.temporary)
            table.close()
    LOCK_openRelease()

Accelerated Critical Sections
[Figure: cores P1 to P4 and a Critical Section Request Buffer (CSRB) connected by an on-chip interconnect; P1 is the core executing critical sections]
Example critical section:

    EnterCS()
    PriorityQ.insert(…)
    LeaveCS()

1. P2 encounters a critical section (CSCALL)
2. P2 sends a CSCALL request to the CSRB
3. P1 executes the critical section
4. P1 sends a CSDONE signal

Accelerated Critical Sections (ACS)
Small core, conventional locking:

    A = compute()
    LOCK X
    result = CS(A)
    UNLOCK X
    print result

Small core, with ACS:

    A = compute()
    PUSH A
    CSCALL X, Target PC      (CSCALL request: send X, TPC, STACK_PTR, CORE_ID)
    …                        (request waits in the CSRB until the large core runs it)
    POP result               (after the CSDONE response arrives)
    print result

Large core:

    TPC: Acquire X
         POP A
         result = CS(A)
         PUSH result
         Release X
         CSRET X             (sends the CSDONE response)

Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009.

ACS Performance
• Chip area = 32 small cores (equal-area comparison)
  • SCMP = 32 small cores
  • ACMP = 1 large and 28 small cores
• Number of threads = best number of threads
[Figure: speedup over SCMP for pagemine, puzzle, qsort, sqlite, tsp, iplookup, oltp-1, oltp-2, specjbb, webcache, and hmean, grouped into coarse-grain-lock and fine-grain-lock workloads; each bar is split into the contribution of accelerating sequential kernels and of accelerating critical sections]

ACS Performance Tradeoffs
• Fewer threads vs. accelerated critical sections
  • Accelerating critical sections offsets the loss in throughput
  • As the number of cores (threads) on chip increases:
    • Fractional loss in parallel performance decreases
    • Increased contention for critical sections makes acceleration more beneficial
• Overhead of CSCALL/CSDONE vs. better lock locality
  • ACS avoids “ping-ponging” of locks among caches by keeping them at the large core
• More cache misses for private data vs. fewer misses for shared data
  • Cache misses decrease if shared data > private data

Cache Misses for Private Data (Puzzle benchmark)

    PriorityHeap.insert(NewSubProblems)

• Private data: NewSubProblems
• Shared data: the priority heap

ACS Comparison Points
[Figure: three equal-area chips built from Niagara-like cores: SCMP (16 small cores), ACMP (1 large core + 12 small cores), and ACS (1 large core + 12 small cores)]
• SCMP: all small cores; conventional locking
• ACMP: one large core (area-equal to 4 small cores); conventional locking
• ACS: ACMP with a CSRB; accelerates critical sections

Equal-Area Comparisons
[Figure: speedup over a small core vs. chip area (small cores, 0 to 32) for SCMP, ACMP, and ACS, with number of threads = number of cores; panels: (a) ep, (b) is, (c) pagemine, (d) puzzle, (e) qsort, (f) tsp, (g) sqlite, (h) iplookup, (i) oltp-1, (j) oltp-2, (k) specjbb, (l) webcache]

How Can We Do Better?
• Transfer of private data to the large core limits the performance of ACS
• Can we identify/predict which data will need to be transferred to the large core and ship it there while shipping the critical section?
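The “more cache misses for private data vs. fewer misses for shared data” tradeoff, and why private-data transfer limits ACS, can be illustrated with a deliberately simplified miss-counting model. This is a sketch under stated assumptions, not the ACS paper's methodology. Caches are assumed infinite, and lock traffic and the returned result are not counted. Every critical section is assumed to touch the same shared structure (shared_blocks blocks) plus shared_blocks' counterpart of fresh requester-private data (private_blocks blocks). Under conventional locking, shared data misses whenever the previous critical section ran on another core. Under ACS, shared data stays on the large core, but private data must be transferred every time. The function names are hypothetical.

```python
from typing import List, Optional

# Simplified model (assumption): infinite caches; lock traffic and the
# result sent back with CSDONE are not counted.


def conventional_misses(cs_cores: List[int], shared_blocks: int) -> int:
    """Conventional locking: each critical section runs on the core that
    requests it. The shared data must move to that core whenever the previous
    critical section ran on a different core ("ping-ponging"); the
    requester's private data is already in its own cache."""
    misses = 0
    last_core: Optional[int] = None
    for core in cs_cores:
        if core != last_core:
            misses += shared_blocks
        last_core = core
    return misses


def acs_misses(cs_cores: List[int], shared_blocks: int, private_blocks: int) -> int:
    """ACS: every critical section runs on the large core. Shared data stays
    in the large core's cache after one cold miss per block, but each
    requester's private data (e.g., NewSubProblems) must be transferred."""
    if not cs_cores:
        return 0
    return shared_blocks + private_blocks * len(cs_cores)


if __name__ == "__main__":
    trace = [0, 1, 2, 3] * 2  # 8 critical sections from 4 small cores, round-robin
    for shared, private in ((10, 2), (2, 10)):
        print(
            f"shared={shared}, private={private}: "
            f"conventional={conventional_misses(trace, shared)}, "
            f"ACS={acs_misses(trace, shared, private)}"
        )
```

For this contended trace the sketch counts 80 misses (conventional) vs. 26 (ACS) when shared data dominates, and 16 vs. 82 when private data dominates. That matches the rule of thumb that misses decrease under ACS when shared data exceeds private data. The private-data term is the cost the next part of the lecture targets.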
Suleman et al., “Data Marshaling for Multi-Core Architectures,” ISCA 2010, IEEE Micro Top Picks 2011.

Data Marshaling Summary
• Staged execution (SE): break a program into segments; run each segment on the “best suited” core
  → new performance improvement and power savings opportunities
  → accelerators, pipeline parallelism, task parallelism, customized cores, …
• Problem: SE performance is limited by inter-segment data transfers
  • A segment incurs a cache miss for data it needs from a previous segment
• Data marshaling: detect inter-segment data and send it to the next segment’s core before it is needed
  • Profiler: identify and mark “generator” instructions; insert “marshal” hints
  • Hardware: buffer “generated” data and “marshal” it to the next segment
• Achieves almost all of the benefit of ideally eliminating inter-segment cache misses on two SE models, with low hardware overhead

Staged Execution Model (I)
• Goal: speed up a program by dividing it up into pieces
• Idea:
  • Split program code into segments
  • Run each segment on the core best suited to run it
  • Each core is assigned a work-queue, storing segments to be run
• Benefits:
  • Accelerates segments/critical paths using specialized/heterogeneous cores
  • Exploits inter-segment parallelism
  • Improves locality of within-segment data
• Examples:
  • Accelerated critical sections [Suleman et al., ASPLOS 2009]
  • Producer-consumer pipeline parallelism
  • Task parallelism (Cilk, Intel TBB, Apple Grand Central Dispatch)
  • Special-purpose cores and functional units

Staged Execution Model (II)

    LOAD X
    STORE Y
    STORE Y
    LOAD Y
    …
    STORE Z
    LOAD Z
    …

Staged Execution Model (III)
• Split code into segments:
    Segment S0: LOAD X, STORE Y, STORE Y
    Segment S1: LOAD Y, …, STORE Z
    Segment S2: LOAD Z, …

Staged Execution Model (IV)
[Figure: Core 0, Core 1, and Core 2 each have a work-queue, holding instances of S0, S1, and S2, respectively]

Staged Execution Model: Segment Spawning
[Figure: S0 (LOAD X, STORE Y, STORE Y) runs on Core 0 and spawns S1 (LOAD Y, …, STORE Z) on Core 1, which spawns S2 (LOAD Z, …) on Core 2]
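The staged-execution model above (per-core work-queues, each segment spawning the next) can be sketched functionally. The sketch assumes that each segment is a Python function bound to one core and that each core owns a FIFO work-queue of segment instances. Finishing an instance enqueues its output on the next core's queue. The segment bodies s0, s1, s2, the round-based scheduler, and run_staged are hypothetical stand-ins, and no caches are modeled.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


# Hypothetical segment bodies standing in for S0, S1, S2. Each returns the
# value handed to the next segment (the inter-segment data, e.g., Y and Z).
def s0(x: int) -> int:  # LOAD X ... STORE Y
    return x + 1


def s1(y: int) -> int:  # LOAD Y ... STORE Z
    return y * 2


def s2(z: int) -> int:  # LOAD Z ...
    return z - 3


def run_staged(segments: List[Callable[[int], int]],
               inputs: List[int]) -> Tuple[List[int], int]:
    """Core i runs only segment i, taking instances from its own FIFO
    work-queue; finishing an instance spawns the next segment on the next
    core. Returns (outputs of the last segment, number of rounds)."""
    queues: List[Deque[int]] = [deque() for _ in segments]
    queues[0].extend(inputs)  # instances of S0 wait on Core 0
    results: List[int] = []
    rounds = 0
    while any(queues):
        rounds += 1
        # Visit later cores first so each instance advances one segment per
        # round; different segments then overlap on different cores.
        for core in reversed(range(len(segments))):
            if queues[core]:
                out = segments[core](queues[core].popleft())
                if core + 1 < len(segments):
                    queues[core + 1].append(out)  # spawn the next segment
                else:
                    results.append(out)
    return results, rounds


if __name__ == "__main__":
    print(run_staged([s0, s1, s2], [1, 2, 3]))
```

With three inputs and three segments, the sketch finishes in 5 rounds rather than 9 sequential steps. In steady state, the three cores work on different instances at once, which is the inter-segment parallelism listed among the benefits.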
Staged Execution Model: Two Examples
• Accelerated Critical Sections [Suleman et al., ASPLOS 2009]
  • Idea: ship critical sections to a large core in an asymmetric CMP
    • Segment 0: non-critical section
    • Segment 1: critical section
  • Benefit: faster execution of the critical section, reduced serialization, improved lock and shared data locality
• Producer-Consumer Pipeline Parallelism
  • Idea: split a loop iteration into multiple “pipeline stages” where each stage consumes data produced by the previous stage → each stage runs on a different core
    • Segment N: stage N
  • Benefit: stage-level parallelism, better locality → faster execution

Problem: Locality of Inter-segment Data
[Figure: S0 on Core 0 stores Y; S1 on Core 1 loads Y and takes a cache miss to transfer Y, then stores Z; S2 on Core 2 loads Z and takes a cache miss to transfer Z]

Problem: Locality of Inter-segment Data
• Accelerated Critical Sections [Suleman et al., ASPLOS 2009]
  • Idea: ship critical sections to a large core in an ACMP
  • Problem: a critical section incurs a cache miss when it touches data produced in the non-critical section (i.e., thread-private data)
• Producer-Consumer Pipeline Parallelism
  • Idea: split a loop iteration into multiple “pipeline stages”; each stage runs on a different core
  • Problem: a stage incurs a cache miss when it touches data produced by the previous stage
• Performance of staged execution is limited by inter-segment cache misses

What if We Eliminated All Inter-segment Misses?

Terminology
[Figure: S0 on Core 0 (LOAD X, STORE Y, STORE Y) transfers Y to S1 on Core 1 (LOAD Y, …, STORE Z), which transfers Z to S2 on Core 2 (LOAD Z, …)]
• Inter-segment data: a cache block written by one segment and consumed by the next segment
• Generator instruction: the last instruction in a segment to write to an inter-segment cache block
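The two terminology definitions can be turned into a small profiling pass. The sketch assumes a trace in which each segment instance is a list of (pc, op, block) memory operations at cache-block granularity, and consecutive instances form producer-consumer pairs. A block is treated as inter-segment data if the producing segment writes it and the next segment reads it before writing it. The generator is the PC of the last store to that block in the producing segment. The trace format, the PCs, and find_generators are hypothetical. The slides do not describe the actual DM profiler at this level of detail.

```python
from typing import Dict, List, Set, Tuple

# One memory operation at cache-block granularity: (pc, op, block),
# where op is "LOAD" or "STORE". The trace format is hypothetical.
MemOp = Tuple[int, str, str]


def find_generators(segments: List[List[MemOp]]) -> Tuple[Set[str], Set[int]]:
    """Return (inter-segment blocks, generator PCs) for a chain of segments
    in which each segment hands its data to the next one."""
    inter_blocks: Set[str] = set()
    generators: Set[int] = set()
    for producer, consumer in zip(segments, segments[1:]):
        # Last store to each block in the producing segment.
        last_writer: Dict[str, int] = {}
        for pc, op, block in producer:
            if op == "STORE":
                last_writer[block] = pc
        # Blocks the consumer reads before writing them itself.
        consumed: Set[str] = set()
        written: Set[str] = set()
        for _, op, block in consumer:
            if op == "LOAD" and block not in written:
                consumed.add(block)
            elif op == "STORE":
                written.add(block)
        for block in consumed & set(last_writer):
            inter_blocks.add(block)
            generators.add(last_writer[block])
    return inter_blocks, generators


if __name__ == "__main__":
    trace = [
        [(0x10, "LOAD", "X"), (0x14, "STORE", "Y"), (0x18, "STORE", "Y")],  # S0
        [(0x1C, "LOAD", "Y"), (0x20, "STORE", "Z")],                        # S1
        [(0x24, "LOAD", "Z")],                                              # S2
    ]
    blocks, gens = find_generators(trace)
    print(sorted(blocks), sorted(hex(pc) for pc in gens))
```

On the slide's S0/S1/S2 example, the sketch reports Y and Z as inter-segment data. It marks the second STORE Y (hypothetical PC 0x18) as the generator, because the generator is defined as the last write. X is not reported because S0 only reads it.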
Key Observation and Idea
• Observation: the set of generator instructions is stable over execution time and across input sets
• Idea:
  • Identify the generator instructions
  • Record cache blocks produced by generator instructions
  • Proactively send such cache blocks to the next segment’s core before initiating the next segment

Data Marshaling
• Compiler/Profiler:
  1. Identify generator instructions
  2. Insert marshal instructions
  → Binary containing generator prefixes & marshal instructions
• Hardware:
  1. Record generator-produced addresses
  2. Marshal recorded blocks to the next core

Data Marshaling for ACS
[Figure: small core 0 runs LOAD X, STORE Y, G: STORE Y, CSCALL; the generator store records Addr Y in the marshal buffer, and Data Y is pushed from its L2 cache to the large core’s L2 cache, so the critical section’s LOAD Y (…, G: STORE Z, CSRET) is a cache hit]

DM Support/Cost
• Profiler/Compiler: generators, marshal instructions
• ISA: generator prefix, marshal instructions
• Library/Hardware: bind next segment ID to a physical core
• Hardware:
  • Marshal buffer
    • Stores physical addresses of cache blocks to be marshaled
    • 16 entries are enough for almost all workloads → 96 bytes per core
  • Ability to execute generator prefixes and marshal instructions
  • Ability to push data to another cache

DM: Advantages, Disadvantages
• Advantages:
  • Timely data transfer: push data to the core before it is needed
  • Can marshal any arbitrary sequence of lines: identifies generators, not patterns
  • Low hardware cost: the profiler marks generators, so there is no need for hardware to find them
• Disadvantages:
  • Requires profiler and ISA support
  • Not always accurate (generator set is conservative): pollution at the remote core, wasted bandwidth on the interconnect
    • Not a large problem, as the number of inter-segment blocks is small

DM on Accelerated Critical Sections: Results
[Figure: speedup over ACS for DM and Ideal across the ACS workloads and their harmonic mean (hmean); annotated improvement: 8.7%]

Pipeline Parallelism
[Figure: S0 on Core 0 (LOAD X, STORE Y, G: STORE Y, MARSHAL C1) records Addr Y in its marshal buffer and pushes Data Y to Core 1’s L2 cache, so S1’s LOAD Y is a cache hit; S1 (…, G: STORE Z, MARSHAL C2) likewise marshals Z to S2 (0x5: LOAD Z, …)]

DM on Pipeline Parallelism: Results
[Figure: speedup over baseline for DM and Ideal on the pipeline-parallel workloads and their harmonic mean (hmean); annotated improvement: 16%]

Scaling Results
• DM performance improvement increases with:
  • More cores
  • Higher interconnect latency
  • Larger private L2 caches
• Why? Inter-segment data misses become a larger bottleneck:
  • More cores → more communication
  • Higher latency → longer stalls due to communication
  • Larger L2 cache → communication misses remain

Other Applications of Data Marshaling
• Can be applied to other staged execution models:
  • Task parallelism models (Cilk, Intel TBB, Apple Grand Central Dispatch)
  • Special-purpose remote functional units
  • Computation spreading [Chakraborty et al., ASPLOS’06]
  • Thread motion/migration [e.g., Rangan et al., ISCA’09]
• Can be an enabler for more aggressive SE models:
  • Lowers the cost of data migration, an important overhead in remote execution of code segments
  • Remote execution of finer-grained tasks can become more feasible → finer-grained parallelization in multi-cores
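The marshal-buffer mechanism from the DM slides can be made concrete with a minimal sketch of the S0 → S1 → S2 pipeline example. Each core is modeled as an unbounded private cache (a set of block names standing in for physical addresses). A store carrying the generator prefix records its block in a 16-entry marshal buffer. What happens on overflow is an assumption here: further addresses are dropped. A MARSHAL instruction pushes every recorded block into the next core's cache and clears the buffer. Coherence and timing are not modeled, and the Core class, its methods, and run_pipeline are hypothetical.

```python
from typing import List, Set, Tuple

MARSHAL_BUFFER_ENTRIES = 16  # sizing quoted on the "DM Support/Cost" slide


class Core:
    """Toy core: an unbounded private cache (a set of block names) plus a
    marshal buffer. Coherence and timing are not modeled."""

    def __init__(self) -> None:
        self.cache: Set[str] = set()
        self.marshal_buffer: List[str] = []
        self.misses = 0

    def load(self, block: str) -> None:
        if block not in self.cache:
            self.misses += 1  # e.g., an inter-segment cache miss
            self.cache.add(block)

    def store(self, block: str, generator: bool = False) -> None:
        self.cache.add(block)
        # A store with the generator prefix records its block address.
        if generator and block not in self.marshal_buffer:
            if len(self.marshal_buffer) < MARSHAL_BUFFER_ENTRIES:
                self.marshal_buffer.append(block)  # assumed: drop on overflow

    def marshal(self, target: "Core") -> None:
        """MARSHAL: push recorded blocks into the target core's cache."""
        target.cache.update(self.marshal_buffer)
        self.marshal_buffer.clear()


def run_pipeline(use_dm: bool) -> Tuple[int, int]:
    """S0 on core 0, S1 on core 1, S2 on core 2; returns (S1 misses, S2 misses)."""
    c0, c1, c2 = Core(), Core(), Core()
    c0.load("X")                   # S0: LOAD X
    c0.store("Y")                  #     STORE Y
    c0.store("Y", generator=True)  #     G: STORE Y
    if use_dm:
        c0.marshal(c1)             #     MARSHAL C1
    c1.load("Y")                   # S1: LOAD Y
    c1.store("Z", generator=True)  #     G: STORE Z
    if use_dm:
        c1.marshal(c2)             #     MARSHAL C2
    c2.load("Z")                   # S2: LOAD Z
    return c1.misses, c2.misses


if __name__ == "__main__":
    print("without DM:", run_pipeline(False))
    print("with DM:   ", run_pipeline(True))
```

In this toy model, S1 and S2 each take one inter-segment miss without DM, and both loads hit with DM. This mirrors the “Cache Hit!” in the slide's pipeline figure.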