Generalized Linear Models


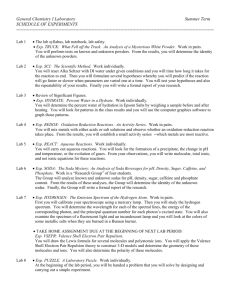

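Generalized Linear Models:

A subclass of nonlinear models with a "linear" component.

Data: $(Y_1, X_1^T), (Y_2, X_2^T), \ldots, (Y_n, X_n^T)$, where $Y_j$ is the response variable and the explanatory (or regression) variables $X_j^T = (X_{1j}, X_{2j}, \ldots, X_{pj})$ describe the conditions under which the response $Y_j$ was obtained.

Basic Features:

1. $Y_1, Y_2, \ldots, Y_n$ are independent.

2. $Y_j$ has a distribution in the exponential family of distributions, $j = 1, 2, \ldots, n$. The joint likelihood has the (canonical) form

$$L(\theta, \phi; Y) = \prod_{j=1}^{n} f(Y_j; \theta_j, \phi)$$

where

$$f(Y_j; \theta_j, \phi) = \exp\left\{ \frac{Y_j\theta_j - b(\theta_j)}{a(\phi)} + C(Y_j, \phi) \right\}$$

for some functions $a(\cdot)$, $b(\cdot)$, and $C(\cdot)$. Here, $\theta_j$ is called a canonical parameter.

3. (Systematic part of the model) There is a link function $h(\cdot)$ that links the conditional mean of $Y_j$ given $X_j = (X_{1j}, \ldots, X_{pj})^T$ to a linear function of unknown parameters, e.g.

$$h(E(Y_j \mid X_j)) = X_j^T\beta = \beta_1 X_{1j} + \cdots + \beta_p X_{pj}$$

Members of the exponential family of distributions are available in:

S-PLUS: glm() function
SAS: PROC GENMOD

Normal distribution: $Y \sim N(\mu, \sigma^2)$

$$f(Y; \theta, \phi) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{ -\frac{(Y - \mu)^2}{2\sigma^2} \right\} = \exp\left\{ \frac{Y\mu - \mu^2/2}{\sigma^2} - \frac{1}{2}\left[ \frac{Y^2}{\sigma^2} + \log(2\pi\sigma^2) \right] \right\}$$

where the last term, $-\frac{1}{2}\left[ Y^2/\sigma^2 + \log(2\pi\sigma^2) \right]$, is $C(Y, \phi)$. Here

$$\theta = \mu = E(Y), \qquad \phi = \sigma^2 = Var(Y), \qquad b(\theta) = \frac{\theta^2}{2}, \qquad a(\phi) = \phi$$

Binomial distribution: $Y \sim Bin(n, \pi)$

$$f(Y; \theta, \phi) = \binom{n}{Y} \pi^Y (1 - \pi)^{n - Y} = \binom{n}{Y} \left( \frac{\pi}{1 - \pi} \right)^Y (1 - \pi)^n = \exp\left\{ Y \log\left( \frac{\pi}{1 - \pi} \right) + n\log(1 - \pi) + \log\binom{n}{Y} \right\}$$

where $C(Y, \phi) = \log\binom{n}{Y}$. Here

$$\theta = \log\left( \frac{\pi}{1 - \pi} \right) \ \Rightarrow\ \pi = \frac{e^\theta}{1 + e^\theta}$$

and

$$b(\theta) = -n\log(1 - \pi) = n\log(1 + e^\theta)$$

There is no dispersion parameter, so we will take $\phi \equiv 1$ and $a(\phi) = 1$.

Poisson distribution: $Y \sim Poisson(\lambda)$

$$f(Y; \theta, \phi) = \frac{\lambda^Y e^{-\lambda}}{Y!} = \exp\{ Y\log(\lambda) - \lambda - \log(Y!) \}$$

where $C(Y, \phi) = -\log(Y!)$. Here

$$\theta = \log(\lambda) \ \Rightarrow\ \lambda = \exp(\theta), \qquad b(\theta) = \lambda = \exp(\theta)$$

and there is no additional dispersion parameter. We will take $\phi \equiv 1$ and $a(\phi) = 1$.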
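Then,

$$f(Y; \theta, \phi) = \exp\{ Y\theta - \exp(\theta) - \log(\Gamma(Y + 1)) \}$$

Two parameter gamma distribution: $Y \sim gamma(\alpha, \beta)$

$$f(Y; \theta, \phi) = \frac{Y^{\alpha - 1} \exp(-Y/\beta)}{\Gamma(\alpha)\beta^\alpha}$$

$$= \exp\{ -Y/\beta + (\alpha - 1)\log(Y) - \alpha\log(\beta) - \log(\Gamma(\alpha)) \}$$

$$= \exp\left\{ \frac{Y\left( \frac{-1}{\alpha\beta} \right) + \log\left( \frac{1}{\alpha\beta} \right)}{1/\alpha} + (\alpha - 1)\log(Y) + \alpha\log(\alpha) - \log(\Gamma(\alpha)) \right\}$$

where $C(Y, \phi) = (\alpha - 1)\log(Y) + \alpha\log(\alpha) - \log(\Gamma(\alpha))$ with $\alpha = 1/\phi$. Here,

$$\theta = \frac{-1}{\alpha\beta}, \qquad \phi = \frac{1}{\alpha}, \qquad b(\theta) = -\log(-\theta), \qquad a(\phi) = \phi$$

Inverse Gaussian distribution: $Y \sim IG(\mu, \sigma^2)$

$$f(Y; \theta, \phi) = \frac{1}{\sqrt{2\pi\sigma^2 Y^3}} \exp\left\{ -\frac{(Y - \mu)^2}{2\sigma^2\mu^2 Y} \right\}$$

for $Y > 0$, $\mu > 0$, $\sigma^2 > 0$; also $E(Y) = \mu$ and $Var(Y) = \sigma^2\mu^3$. In canonical form,

$$f(Y; \theta, \phi) = \exp\left\{ \frac{Y\left( \frac{-1}{2\mu^2} \right) + \frac{1}{\mu}}{\sigma^2} - \frac{1}{2}\left[ \frac{1}{\sigma^2 Y} + \log(2\pi\sigma^2 Y^3) \right] \right\}$$

where the last term is $C(Y, \phi)$. Here,

$$\theta = \frac{-1}{2\mu^2}, \qquad b(\theta) = -\sqrt{-2\theta} = -\frac{1}{\mu}, \qquad \phi = \sigma^2 \ \text{("dispersion parameter")}, \qquad a(\phi) = \phi = \sigma^2$$

Canonical form of the log-likelihood:

$$\ell(\theta, \phi; Y) = \log(f(Y; \theta, \phi)) = \frac{Y\theta - b(\theta)}{a(\phi)} + C(Y, \phi)$$

Partial derivatives:

(i) $\dfrac{\partial \ell(\theta, \phi; Y)}{\partial\theta} = \dfrac{Y - b'(\theta)}{a(\phi)}$

(ii) $\dfrac{\partial^2 \ell(\theta, \phi; Y)}{\partial\theta^2} = \dfrac{-b''(\theta)}{a(\phi)}$

Apply the following results

(iii) $E\left( \dfrac{\partial \ell(\theta, \phi; Y)}{\partial\theta} \right) = 0$

(iv) $E\left( \dfrac{\partial^2 \ell(\theta, \phi; Y)}{\partial\theta^2} \right) + E\left[ \left( \dfrac{\partial \ell(\theta, \phi; Y)}{\partial\theta} \right)^2 \right] = 0$

to obtain

$$0 = E\left( \frac{\partial \ell(\theta, \phi; Y)}{\partial\theta} \right) = \frac{E(Y) - b'(\theta)}{a(\phi)}$$

which implies

$$E(Y) = b'(\theta)$$

Furthermore, from (i), (ii) and (iv) we have

$$0 = E\left( \frac{\partial^2 \ell(\theta, \phi; Y)}{\partial\theta^2} \right) + E\left[ \left( \frac{\partial \ell(\theta, \phi; Y)}{\partial\theta} \right)^2 \right] = -\frac{b''(\theta)}{a(\phi)} + Var\left( \frac{Y - b'(\theta)}{a(\phi)} \right) = -\frac{b''(\theta)}{a(\phi)} + \frac{Var(Y)}{[a(\phi)]^2}$$

which implies

$$Var(Y) = a(\phi)\, b''(\theta)$$

where $a(\phi)$ is a function of the dispersion parameter and $b''(\theta)$ is called the variance function.

Normal distribution: $Y \sim N(\mu, \sigma^2)$

$$b(\theta) = \frac{\theta^2}{2}, \qquad b'(\theta) = \theta \ \Rightarrow\ E(Y) = \theta = \mu$$

$$b''(\theta) = 1, \quad a(\phi) = \phi = \sigma^2 \ \Rightarrow\ Var(Y) = b''(\theta)\,a(\phi) = (1)\phi = \sigma^2$$

The variance does not depend on the parameter that defines the mean.

Binomial distribution: $Y \sim Bin(n, \pi)$

$$\theta = \log\left( \frac{\pi}{1 - \pi} \right), \qquad b(\theta) = n\log(1 + e^\theta)$$

$$b'(\theta) = \frac{ne^\theta}{1 + e^\theta} \ \Rightarrow\ E(Y) = \frac{ne^\theta}{1 + e^\theta} = n\pi$$

$$b''(\theta) = \frac{ne^\theta}{[1 + e^\theta]^2}, \quad a(\phi) = 1 \ \Rightarrow\ Var(Y) = \frac{ne^\theta}{[1 + e^\theta]^2}(1) = n\left( \frac{e^\theta}{1 + e^\theta} \right)\left( \frac{1}{1 + e^\theta} \right) = n\pi(1 - \pi)$$

$Var(Y)$ is completely determined by the parameter that defines the mean.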
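The identities $E(Y) = b'(\theta)$ and $Var(Y) = a(\phi)b''(\theta)$ can be checked numerically. The sketch below differentiates $b(\theta)$ by central differences for the binomial and Poisson forms given above. The parameter values ($n = 20$, $\pi = 0.3$, $\lambda = 4$) and the helper names are illustrative assumptions, not part of the notes.

```python
import math


def first_derivative(f, t, h=1e-4):
    """Central-difference approximation to f'(t)."""
    return (f(t + h) - f(t - h)) / (2.0 * h)


def second_derivative(f, t, h=1e-4):
    """Central-difference approximation to f''(t)."""
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)


# Binomial: b(theta) = n log(1 + e^theta), a(phi) = 1.
n, pi = 20, 0.3  # illustrative values only
theta_bin = math.log(pi / (1.0 - pi))


def b_binomial(t):
    return n * math.log(1.0 + math.exp(t))


mean_bin = first_derivative(b_binomial, theta_bin)        # should be close to n*pi
var_bin = 1.0 * second_derivative(b_binomial, theta_bin)  # close to n*pi*(1-pi)

# Poisson: b(theta) = exp(theta), a(phi) = 1.
lam = 4.0  # illustrative value only
theta_pois = math.log(lam)
mean_pois = first_derivative(math.exp, theta_pois)        # should be close to lam
var_pois = 1.0 * second_derivative(math.exp, theta_pois)  # should be close to lam

print("binomial:", mean_bin, n * pi, var_bin, n * pi * (1 - pi))
print("Poisson: ", mean_pois, lam, var_pois, lam)
```

Each printed line pairs a numerical derivative with the closed-form moment it should approximate.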
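Poisson distribution: $Y \sim Poisson(\lambda)$

$$b(\theta) = \exp(\theta), \qquad b'(\theta) = \exp(\theta) \ \Rightarrow\ \lambda = E(Y) = \exp(\theta)$$

$$b''(\theta) = \exp(\theta), \quad a(\phi) = 1 \ \Rightarrow\ Var(Y) = \exp(\theta) = E(Y) = \lambda$$

Gamma distribution:

$$b(\theta) = -\log(-\theta), \qquad b'(\theta) = -\frac{1}{\theta} \ \Rightarrow\ E(Y) = -\frac{1}{\theta} = \alpha\beta$$

$$b''(\theta) = \frac{1}{\theta^2}, \quad a(\phi) = \phi \ \Rightarrow\ Var(Y) = a(\phi)\,b''(\theta) = \frac{\phi}{\theta^2} = \phi\,[E(Y)]^2$$

Canonical link function:

The canonical link function is the link function $h(\mu) = X^T\beta$ that corresponds to $\theta = X^T\beta$, where

$$\mu = E(Y) = \frac{\partial b(\theta)}{\partial\theta} = b'(\theta)$$

Normal (Gaussian) distribution:

$$\theta = X^T\beta, \qquad \mu = b'(\theta) = \theta$$

Then $E(Y) = \mu = \theta = X^T\beta$ and the canonical link function is the identity function

$$h(\mu) = \mu = X^T\beta$$

Binomial distribution:

$$\theta = X^T\beta, \qquad n\pi = E(Y) = b'(\theta) = \frac{ne^\theta}{1 + e^\theta} \ \Rightarrow\ \theta = \log\left( \frac{\pi}{1 - \pi} \right) = X^T\beta$$

Hence, the canonical (default) link is the logit link function

$$h(\pi) = \log\left( \frac{\pi}{1 - \pi} \right) = X^T\beta$$

Other available link functions:

Probit: $h(\pi) = \Phi^{-1}(\pi) = X^T\beta$, where

$$\pi = \Phi(X^T\beta) = \int_{-\infty}^{X^T\beta} \frac{1}{\sqrt{2\pi}}\, e^{-u^2/2}\, du$$

Complementary log-log link (cloglog): $h(\pi) = \log[-\log(1 - \pi)] = X^T\beta$. The inverse of this link function is

$$\pi = 1 - \exp[-\exp(X^T\beta)]$$

The glm( ) function:

    glm(model, family, data, weights, control)
    family = binomial
    family = binomial(link=logit)
    family = binomial(link=probit)
    family = binomial(link=cloglog)

Poisson regression (log-linear models): $Y \sim Poisson(\lambda)$

$$\theta = X^T\beta, \qquad \lambda = E(Y) = b'(\theta) = \exp(\theta)$$

and the canonical link function is

$$\theta = \log(\lambda) = X^T\beta$$

This is called the log link function.

Other available link functions:

identity link: $h(\lambda) = \lambda = X^T\beta$
square root link: $h(\lambda) = \sqrt{\lambda} = X^T\beta$

    glm(model, family, data, weights, control)
    family = poisson
    family = poisson(link=log)
    family = poisson(link=identity)
    family = poisson(link=sqrt)

Gamma distribution:

$$\theta = X^T\beta, \qquad E(Y) = \mu = -\frac{1}{\theta}$$

The canonical link function is

$$h(\mu) = -\frac{1}{\mu} = X^T\beta$$

This is called the "inverse" link.

    glm(model, family, data, weights, control)
    family = gamma
    family = gamma(link=inverse)
    family = gamma(link=identity)
    family = gamma(link=log)

Inverse Gaussian distribution:

$$\theta = X^T\beta, \qquad b(\theta) = -\sqrt{-2\theta}, \qquad \mu = E(Y) = b'(\theta) = \frac{1}{\sqrt{-2\theta}}$$

The canonical link is

$$\theta = h(\mu) = -\frac{1}{2\mu^2} = X^T\beta$$

This is the only built-in link function for the inverse Gaussian distribution.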
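    glm(model, family, data, weights, control)
    family = inverse.gaussian
    family = inverse.gaussian(link=1/mu^2)

Normal-theory Gauss-Markov model:

$Y \sim N(X\beta, \sigma^2 I)$, or $Y_1, Y_2, \ldots, Y_n$ are independent with $Y_j \sim N(X_j^T\beta, \sigma^2)$. Then

$$f(Y_j; \theta_j, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{ -\frac{(Y_j - X_j^T\beta)^2}{2\sigma^2} \right\} = \exp\left\{ \frac{Y_j(X_j^T\beta) - (X_j^T\beta)^2/2}{\sigma^2} - \frac{1}{2}\left[ \frac{Y_j^2}{\sigma^2} + \log(2\pi\sigma^2) \right] \right\}$$

where the last term is $C(Y_j, \sigma^2)$. Here,

$$\theta_j = X_j^T\beta = \mu_j = E(Y_j), \qquad b(\theta_j) = \frac{(X_j^T\beta)^2}{2}, \qquad \phi = \sigma^2 \ \text{and}\ a(\phi) = \phi$$

The link function is the identity function

$$h(\mu_j) = \mu_j = X_j^T\beta$$

The class of generalized linear models includes the class of linear models:

(i) Systematic part of the model (identity link function):

$$\mu_j = E(Y_j) = X_j^T\beta = \beta_1 X_{1j} + \cdots + \beta_p X_{pj}$$

(ii) Stochastic part of the model: $Y_j \sim N(\mu_j, \sigma^2)$ and $Y_1, Y_2, \ldots, Y_n$ are independent.

Example 13.1: Mortality of a certain species of beetle after 5 hours exposure to gaseous carbon disulfide (Bliss, 1935).

| Dose (mg/l) | Log(Dose) $X_j$ | Number killed $Y_j$ | Number of survivors $n_j - Y_j$ | Number exposed $n_j$ |
|---|---|---|---|---|
| 49.057 | 3.893 | 6 | 53 | 59 |
| 52.991 | 3.970 | 13 | 47 | 60 |
| 56.911 | 4.041 | 18 | 44 | 62 |
| 60.842 | 4.108 | 28 | 28 | 56 |
| 64.759 | 4.171 | 52 | 11 | 63 |
| 68.691 | 4.230 | 53 | 6 | 59 |
| 72.611 | 4.285 | 61 | 1 | 62 |
| 76.542 | 4.338 | 60 | 0 | 60 |

Logistic Regression:

$Y_1, Y_2, \ldots, Y_8$ are independent random counts with $Y_j \sim Bin(n_j, \pi_j)$ and

$$\log\left( \frac{\pi_j}{1 - \pi_j} \right) = \beta_0 + \beta_1 X_j$$

Comments:

* Here the logit link function is the canonical link function.
* Use of the binomial distribution follows from
  (i) each beetle exposed to log-dose $X_j$ responds independently;
  (ii) each beetle exposed to log-dose $X_j$ has probability $\pi_j$ of dying.

Maximum Likelihood Estimation:

Joint likelihood for $Y_1, Y_2, \ldots, Y_k$:

$$L(\beta; Y, X) = \prod_{j=1}^{k} f(Y_j; \theta_j, \phi) = \prod_{j=1}^{k} \exp\left\{ \frac{Y_j\theta_j - b(\theta_j)}{a(\phi)} + C(Y_j, \phi) \right\}$$

$$= \prod_{j=1}^{k} \exp\left\{ Y_j(\beta_0 + \beta_1 X_j) - n_j\log(1 + e^{\beta_0 + \beta_1 X_j}) + \log\binom{n_j}{Y_j} \right\}$$

since

$$\phi = 1, \quad a(\phi) = \phi = 1, \quad \theta_j = \log\left( \frac{\pi_j}{1 - \pi_j} \right) = \beta_0 + \beta_1 X_j, \quad b(\theta_j) = n_j\log(1 + e^{\beta_0 + \beta_1 X_j}), \quad C(Y_j, \phi) = \log\binom{n_j}{Y_j}$$

Log-likelihood function:

$$\ell(\beta) = \sum_{j=1}^{k} \left[ \frac{Y_j\theta_j - b(\theta_j)}{a(\phi)} + C(Y_j, \phi) \right] = \sum_{j=1}^{k} \left[ Y_j(\beta_0 + \beta_1 X_j) - n_j\log(1 + e^{\beta_0 + \beta_1 X_j}) + \log\binom{n_j}{Y_j} \right]$$

$$= \beta_0 \sum_{j=1}^{k} Y_j + \beta_1 \sum_{j=1}^{k} Y_j X_j - \sum_{j=1}^{k} n_j\log(1 + e^{\beta_0 + \beta_1 X_j}) + \sum_{j=1}^{k} \log\binom{n_j}{Y_j}$$

To maximize the log-likelihood, solve the system of equations obtained by setting the first partial derivatives equal to zero.

Estimating Equations (likelihood equations):

$$0 = \frac{\partial \ell(\hat\beta)}{\partial\hat\beta_0} = \sum_{j=1}^{8} Y_j - \sum_{j=1}^{8} n_j \frac{\exp(\hat\beta_0 + \hat\beta_1 X_j)}{1 + \exp(\hat\beta_0 + \hat\beta_1 X_j)} = \sum_{j=1}^{8} (Y_j - n_j\hat\pi_j)$$

$$0 = \frac{\partial \ell(\hat\beta)}{\partial\hat\beta_1} = \sum_{j=1}^{8} X_j Y_j - \sum_{j=1}^{8} n_j X_j \frac{\exp(\hat\beta_0 + \hat\beta_1 X_j)}{1 + \exp(\hat\beta_0 + \hat\beta_1 X_j)} = \sum_{j=1}^{8} X_j(Y_j - n_j\hat\pi_j)$$

Generally there is no closed form expression for the solution. If a solution exists in the interior of the parameter space ($0 < \pi_j < 1$ for all $j = 1, \ldots, k$), then it is unique.

The likelihood equations (or score function) can be expressed as

$$\begin{bmatrix} 0 \\ 0 \end{bmatrix} = X^T(Y - m) = Q \quad \text{(score function)}$$

where

$$X = \begin{bmatrix} 1 & X_1 \\ 1 & X_2 \\ \vdots & \vdots \\ 1 & X_k \end{bmatrix}, \qquad Y = \begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_k \end{bmatrix}, \qquad m = \begin{bmatrix} n_1\pi_1 \\ n_2\pi_2 \\ \vdots \\ n_k\pi_k \end{bmatrix}$$

and, from the inverse link function,

$$\pi_j = \frac{\exp(\beta_0 + \beta_1 X_j)}{1 + \exp(\beta_0 + \beta_1 X_j)}$$

We will use $\hat Q$, $\hat m$, and $\hat\pi_j$ to indicate when these quantities are evaluated at $\beta = \hat\beta$.

Second partial derivatives of the log-likelihood function:

$$H = -\begin{bmatrix} \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0^2} & \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} \\ \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} & \dfrac{\partial^2 \ell(\beta)}{\partial\beta_1^2} \end{bmatrix} = X^T V X$$

which is the negative of the Hessian matrix of the log-likelihood, where

$$V = \begin{bmatrix} n_1\pi_1(1 - \pi_1) & & \\ & \ddots & \\ & & n_k\pi_k(1 - \pi_k) \end{bmatrix} = Var(Y)$$

We will use $\hat H = X^T\hat V X$ and $\hat V$ to denote $H$ and $V$ evaluated at $\beta = \hat\beta$.

Fisher Information matrix:

$$I = \begin{bmatrix} -E\left( \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0^2} \right) & -E\left( \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} \right) \\ -E\left( \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} \right) & -E\left( \dfrac{\partial^2 \ell(\beta)}{\partial\beta_1^2} \right) \end{bmatrix} = E(X^T V X) = X^T V X = H$$

since the second partial derivatives do not depend on $Y = (Y_1, \ldots, Y_k)^T$.

Iterative procedures for solving the likelihood equations:

Start with some initial values

$$\hat\beta^{(0)} = \begin{bmatrix} \hat\beta_0^{(0)} \\ \hat\beta_1^{(0)} \end{bmatrix} = \begin{bmatrix} \log\left( \dfrac{P}{1 - P} \right) \\ 0 \end{bmatrix} \qquad \text{where} \qquad P = \frac{\sum_{j=1}^{k} Y_j}{\sum_{j=1}^{k} n_j}$$

Newton-Raphson algorithm:

$$\hat\beta^{(S+1)} = \hat\beta^{(S)} + \hat H^{-1}\hat Q$$

where $\hat H$ and $\hat Q$ are evaluated at $\hat\beta^{(S)}$.

Fisher scoring algorithm:

$$\hat\beta^{(S+1)} = \hat\beta^{(S)} + \hat I^{-1}\hat Q$$

For the logistic regression model with binomial counts, $\hat I = \hat H$.

Modification:

- Check if the log-likelihood is larger at $\hat\beta^{(S+1)}$ than at $\hat\beta^{(S)}$.
- If it is, go to the next iteration.
- If not, invoke a halving step:

$$\hat\beta^{(S+1)}_{new} = \frac{1}{2}\left( \hat\beta^{(S)} + \hat\beta^{(S+1)}_{old} \right)$$

Large sample inference: (see results 9.1-9.3)

From result 9.2,

$$\hat\beta \ \dot\sim\ N\left( \beta, (X^T V X)^{-1} \right)$$

when $n_1, n_2, \ldots, n_k$ are all large, where "large" means $n_j\pi_j > 5$ and $n_j(1 - \pi_j) > 5$.

Estimate the covariance matrix as

$$\widehat{Var}(\hat\beta) = (X^T \hat V X)^{-1}$$

where $\hat V$ is $V$ evaluated at $\hat\beta$.

For the Bliss beetle data:

$$\hat\beta = \begin{bmatrix} \hat\beta_0 \\ \hat\beta_1 \end{bmatrix} = \begin{bmatrix} -60.717 \\ 14.883 \end{bmatrix}$$

with

$$\widehat{Var}(\hat\beta) = \begin{bmatrix} S^2_{\hat\beta_0} & S_{\hat\beta_0, \hat\beta_1} \\ S_{\hat\beta_0, \hat\beta_1} & S^2_{\hat\beta_1} \end{bmatrix} = \begin{bmatrix} 26.84 & -6.55 \\ -6.55 & 1.60 \end{bmatrix}$$

Wald tests:

Test $H_0: \beta_0 = 0$ vs. $H_A: \beta_0 \neq 0$:

$$X^2 = \left[ \frac{\hat\beta_0 - 0}{S_{\hat\beta_0}} \right]^2 = \left[ \frac{-60.717}{5.18} \right]^2 = 137.36$$

p-value $= Pr\{ \chi^2_{(1)} > 137.36 \} < .0001$

Test $H_0: \beta_1 = 0$ vs. $H_A: \beta_1 \neq 0$:

$$X^2 = \left[ \frac{\hat\beta_1 - 0}{S_{\hat\beta_1}} \right]^2 = \left[ \frac{14.883}{1.265} \right]^2 = 138.49$$

p-value $= Pr\{ \chi^2_{(1)} > 138.49 \} < .0001$

Approximate $(1 - \alpha) \times 100\%$ confidence intervals:

$$\hat\beta_0 \pm Z_{\alpha/2}\, S_{\hat\beta_0}, \qquad \hat\beta_1 \pm Z_{\alpha/2}\, S_{\hat\beta_1}$$

where $Z_{\alpha/2}$ is from the standard normal distribution, e.g. $Z_{.025} = 1.96$.

Likelihood ratio (deviance) tests:

Model A: $\log\left( \dfrac{\pi_j}{1 - \pi_j} \right) = \beta_0$ and $Y_j \sim Bin(n_j, \pi_j)$

Model B: $\log\left( \dfrac{\pi_j}{1 - \pi_j} \right) = \beta_0 + \beta_1 X_j$ and $Y_j \sim Bin(n_j, \pi_j)$

Model C: $\log\left( \dfrac{\pi_j}{1 - \pi_j} \right) = \beta_0 + \alpha_j$ and $Y_j \sim Bin(n_j, \pi_j)$

Model C simply says that $0 < \pi_j < 1$, $j = 1, \ldots, k$.

Null deviance:

$$Deviance_{Null} = -2\left[ \text{log-likelihood}_{Model\ A} - \text{log-likelihood}_{Model\ C} \right] = 2\sum_{j=1}^{k} \left[ Y_j\log\left( \frac{P_j}{P} \right) + (n_j - Y_j)\log\left( \frac{1 - P_j}{1 - P} \right) \right]$$

with $(k - 1)$ d.f., where

$$P_j = \frac{Y_j}{n_j}, \quad j = 1, \ldots, k, \qquad P = \frac{\sum_{j=1}^{k} n_j P_j}{\sum_{j=1}^{k} n_j} = \frac{\sum_{j=1}^{k} Y_j}{\sum_{j=1}^{k} n_j}$$

are the m.l.e.'s of $\pi_j$ under models C and A, respectively.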
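The Newton-Raphson iteration with step halving described above can be sketched in plain Python for the two-parameter logistic model. This is a minimal illustration, not the S-PLUS or SAS implementation. The function names, tolerance, and iteration cap are assumptions, and $X_j$ is recomputed as the natural log of the tabulated dose.

```python
import math

# Bliss (1935) beetle data from Example 13.1.
dose = [49.057, 52.991, 56.911, 60.842, 64.759, 68.691, 72.611, 76.542]
killed = [6, 13, 18, 28, 52, 53, 61, 60]      # Y_j
exposed = [59, 60, 62, 56, 63, 59, 62, 60]    # n_j
log_dose = [math.log(d) for d in dose]        # X_j (natural log)


def log_likelihood(b0, b1, x, y, n):
    """Binomial log-likelihood, omitting the constant log C(n_j, Y_j) terms."""
    total = 0.0
    for xj, yj, nj in zip(x, y, n):
        eta = b0 + b1 * xj
        total += yj * eta - nj * math.log1p(math.exp(eta))
    return total


def score_and_information(b0, b1, x, y, n):
    """Return Q = X^T (Y - m) and the distinct entries of H = X^T V X."""
    q0 = q1 = h00 = h01 = h11 = 0.0
    for xj, yj, nj in zip(x, y, n):
        pj = 1.0 / (1.0 + math.exp(-(b0 + b1 * xj)))  # inverse logit link
        resid = yj - nj * pj
        w = nj * pj * (1.0 - pj)                      # diagonal entry of V
        q0 += resid
        q1 += xj * resid
        h00 += w
        h01 += xj * w
        h11 += xj * xj * w
    return (q0, q1), (h00, h01, h11)


def fit_logistic(x, y, n, tol=1e-10, max_iter=100):
    """Newton-Raphson with step halving; returns (b0, b1, covariance matrix)."""
    p = sum(y) / sum(n)
    b0, b1 = math.log(p / (1.0 - p)), 0.0             # starting values
    for _ in range(max_iter):
        (q0, q1), (h00, h01, h11) = score_and_information(b0, b1, x, y, n)
        det = h00 * h11 - h01 * h01
        d0 = (h11 * q0 - h01 * q1) / det              # first element of H^{-1} Q
        d1 = (h00 * q1 - h01 * q0) / det              # second element of H^{-1} Q
        step, current = 1.0, log_likelihood(b0, b1, x, y, n)
        # Halve the step while the log-likelihood would decrease.
        while (log_likelihood(b0 + step * d0, b1 + step * d1, x, y, n) < current
               and step > 1e-8):
            step *= 0.5
        b0, b1 = b0 + step * d0, b1 + step * d1
        if abs(step * d0) + abs(step * d1) < tol:
            break
    _, (h00, h01, h11) = score_and_information(b0, b1, x, y, n)
    det = h00 * h11 - h01 * h01
    cov = [[h11 / det, -h01 / det], [-h01 / det, h00 / det]]  # (X^T V X)^{-1}
    return b0, b1, cov


beta0_hat, beta1_hat, cov = fit_logistic(log_dose, killed, exposed)
wald_beta1 = beta1_hat ** 2 / cov[1][1]               # Wald statistic for beta_1
print(beta0_hat, beta1_hat, math.sqrt(cov[0][0]), math.sqrt(cov[1][1]), wald_beta1)
```

Because the model has only two parameters, the 2 x 2 matrix $H$ is inverted in closed form, which keeps the sketch free of external libraries.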
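LDOSE deviance:

$$Deviance_{LDOSE} = -2\left[ \text{log-likelihood}_{Model\ A} - \text{log-likelihood}_{Model\ B} \right] = 2\sum_{j=1}^{k} \left[ Y_j\log\left( \frac{\hat\pi_j}{P} \right) + (n_j - Y_j)\log\left( \frac{1 - \hat\pi_j}{1 - P} \right) \right] = 272.7803$$

with $(2 - 1) = 1$ d.f., where

$$\hat\pi_j = \frac{\exp(\hat\beta_0 + \hat\beta_1 X_j)}{1 + \exp(\hat\beta_0 + \hat\beta_1 X_j)}$$

is the m.l.e. of $\pi_j$ under model B and

$$P = \frac{\sum_{j=1}^{k} Y_j}{\sum_{j=1}^{k} n_j} = \frac{\exp(\hat\beta_0)}{1 + \exp(\hat\beta_0)}$$

with $\hat\beta_0$ in this last expression denoting the m.l.e. under model A.

Lack-of-fit test:

$H_0$: $\log\left( \dfrac{\pi_j}{1 - \pi_j} \right) = \beta_0 + \beta_1 X_j$ (model B)

$H_A$: $\log\left( \dfrac{\pi_j}{1 - \pi_j} \right) = \beta_0 + \alpha_j$ (model C)

$$G^2 = deviance_{lack\text{-}of\text{-}fit} = deviance_{NULL} - deviance_{LDOSE} = 2\sum_{j=1}^{k} \left[ Y_j\log\left( \frac{P_j}{\hat\pi_j} \right) + (n_j - Y_j)\log\left( \frac{1 - P_j}{1 - \hat\pi_j} \right) \right] = 11.42$$

on $[(k - 1) - 1] = k - 2$ d.f. ($= 8 - 2 = 6$ d.f.), with p-value $= 0.0762$.

Pearson chi-square test:

$$X^2 = \sum_{j=1}^{k} \frac{(Y_j - n_j\hat\pi_j)^2}{n_j\hat\pi_j} + \sum_{j=1}^{k} \frac{(n_j - Y_j - n_j(1 - \hat\pi_j))^2}{n_j(1 - \hat\pi_j)} = \sum_{j=1}^{k} n_j\frac{(P_j - \hat\pi_j)^2}{\hat\pi_j} + \sum_{j=1}^{k} n_j\frac{(1 - P_j - [1 - \hat\pi_j])^2}{1 - \hat\pi_j} = 10.223$$

with d.f. $= k - 2 = 6$ and p-value $= .1155$.

Estimates of expected counts under Model B:

| $j$ | $n_j\hat\pi_j$ | $n_j(1 - \hat\pi_j)$ |
|---|---|---|
| 1 | 3.43 | 55.57 |
| 2 | 9.78 | 50.22 |
| 3 | 22.66 | 39.34 |
| 4 | 33.83 | 22.17 |
| 5 | 50.05 | 12.95 |
| 6 | 53.27 | 5.73 |
| 7 | 59.22 | 2.78 |
| 8 | 58.74 | 1.26 |

Cochran's rule: All expected counts should be larger than one. At least 80% should be larger than 5.

Estimate mortality rates:

$$\hat\pi_j = \frac{\exp(\hat\beta_0 + \hat\beta_1 X_j)}{1 + \exp(\hat\beta_0 + \hat\beta_1 X_j)}$$

Use the delta method to compute an approximate standard error:

$$\frac{\partial\hat\pi_j}{\partial\hat\beta_0} = \frac{\exp(\hat\beta_0 + \hat\beta_1 X_j)}{[1 + \exp(\hat\beta_0 + \hat\beta_1 X_j)]^2} = \hat\pi_j(1 - \hat\pi_j)$$

$$\frac{\partial\hat\pi_j}{\partial\hat\beta_1} = \frac{X_j\exp(\hat\beta_0 + \hat\beta_1 X_j)}{[1 + \exp(\hat\beta_0 + \hat\beta_1 X_j)]^2} = X_j\hat\pi_j(1 - \hat\pi_j)$$

Define

$$G_j = \left[ \frac{\partial\hat\pi_j}{\partial\hat\beta_0} \quad \frac{\partial\hat\pi_j}{\partial\hat\beta_1} \right] = \left[ \hat\pi_j(1 - \hat\pi_j) \quad X_j\hat\pi_j(1 - \hat\pi_j) \right]$$

Then,

$$S^2_{\hat\pi_j} = G_j\,[\widehat{Var}(\hat\beta)]\,G_j^T$$

and an approximate standard error is

$$S_{\hat\pi_j} = \sqrt{G_j\,[\widehat{Var}(\hat\beta)]\,G_j^T}$$

An approximate $(1 - \alpha) \times 100\%$ confidence interval for $\pi_j$ is

$$\hat\pi_j \pm Z_{\alpha/2}\, S_{\hat\pi_j}$$

Estimate the log-dose that provides a certain mortality rate, say $\pi_0$:

$$\log\left( \frac{\pi_0}{1 - \pi_0} \right) = \beta_0 + \beta_1 X_0 \ \Rightarrow\ X_0 = \frac{\log\left( \frac{\pi_0}{1 - \pi_0} \right) - \beta_0}{\beta_1}$$

The m.l.e. for $X_0$ is

$$\hat X_0 = \frac{\log\left( \frac{\pi_0}{1 - \pi_0} \right) - \hat\beta_0}{\hat\beta_1}$$

Use the delta method to obtain an approximate standard error:

$$\frac{\partial\hat X_0}{\partial\hat\beta_0} = -\frac{1}{\hat\beta_1}, \qquad \frac{\partial\hat X_0}{\partial\hat\beta_1} = -\frac{\log\left( \frac{\pi_0}{1 - \pi_0} \right) - \hat\beta_0}{\hat\beta_1^2}$$

Define $\hat G = \left[ \dfrac{\partial\hat X_0}{\partial\hat\beta_0} \quad \dfrac{\partial\hat X_0}{\partial\hat\beta_1} \right]$ and compute

$$S^2_{\hat X_0} = \hat G\,[\widehat{Var}(\hat\beta)]\,\hat G^T$$

The standard error is

$$S_{\hat X_0} = \sqrt{\hat G\,[\widehat{Var}(\hat\beta)]\,\hat G^T}$$

and an approximate $(1 - \alpha) \times 100\%$ confidence interval for $X_0$ is

$$\hat X_0 \pm Z_{\alpha/2}\, S_{\hat X_0}$$

Suppose you want a 95% confidence interval for the dose that provides a mortality rate of $\pi_0$:

$$dose_0 = \exp(X_0)$$

The m.l.e. for the dose is

$$\widehat{dose}_0 = \exp(\hat X_0)$$

Apply the delta method:

$$\frac{\partial\exp(\hat X_0)}{\partial\hat X_0} = \exp(\hat X_0)$$

Then

$$S^2_{\widehat{dose}_0} = [\exp(\hat X_0)]^2\, \widehat{Var}(\hat X_0), \qquad S_{\widehat{dose}_0} = [\exp(\hat X_0)]\, S_{\hat X_0} = (\widehat{dose}_0)\, S_{\hat X_0}$$

and an approximate $(1 - \alpha) \times 100\%$ confidence interval for $dose_0$ is

$$\exp(\hat X_0) \pm Z_{\alpha/2}\, [\exp(\hat X_0)]\, S_{\hat X_0}$$

Fit a probit model to the Bliss beetle data:

$$\Phi^{-1}(\pi_j) = \beta_0 + \beta_1 X_j$$

where $X_j = \log(dose)$ and $Y_j \sim Bin(n_j, \pi_j)$, $j = 1, 2, \ldots, k$. Note that

$$\pi_j = \int_{-\infty}^{\beta_0 + \beta_1 X_j} \frac{1}{\sqrt{2\pi}}\, e^{-t^2/2}\, dt$$

Fit the complementary log-log model to the Bliss beetle data:

$$\log[-\log(1 - \pi_j)] = \beta_0 + \beta_1 X_j$$

where $X_j = \log(dose)$ and $Y_j \sim Bin(n_j, \pi_j)$, $j = 1, 2, \ldots, k$. Note that

$$\pi_j = 1 - \exp\left[ -e^{\beta_0 + \beta_1 X_j} \right]$$

Poisson Regression (Log-linear models)

Example 13.2:

$Y_{jk}$ = number of TA98 salmonella colonies on the $k$-th plate with the $j$-th dosage level of quinoline

$X_j = \log_{10}(\text{dose of quinoline } (\mu g))$

$$X_1 = 0, \quad X_2 = 1.0, \quad X_3 = 1.5, \quad X_4 = 2.0, \quad X_5 = 2.5$$

and $k = 1, 2, 3$ plates at each dosage level.

Independent Poisson counts:

$$Y_{jk} \sim Poisson(m_j), \qquad j = 1, \ldots, 5, \quad k = 1, \ldots, 3$$

where

$$Pr\{ Y_{jk} = y \} = \frac{m_j^y\, e^{-m_j}}{y!} \quad \text{for } y = 0, 1, 2, \ldots$$

$$E(Y_{jk}) = m_j, \qquad Var(Y_{jk}) = m_j$$

Log-link function:

$$\log(m_j) = \beta_0 + \beta_1 X_j$$

Joint likelihood function:

$$L(\beta) = \prod_{j=1}^{5} \prod_{k=1}^{3} \frac{m_j^{Y_{jk}}\, e^{-m_j}}{Y_{jk}!}$$

Log-likelihood function:

$$\ell(\beta) = \sum_{j=1}^{5} \sum_{k=1}^{3} \left[ Y_{jk}\log(m_j) - m_j - \log(Y_{jk}!) \right] = \sum_{j=1}^{5} \sum_{k=1}^{3} \left[ Y_{jk}[\beta_0 + \beta_1 X_j] - e^{\beta_0 + \beta_1 X_j} - \log(Y_{jk}!) \right]$$

$$= \beta_0 Y_{\cdot\cdot} + \beta_1 \sum_{j=1}^{5} X_j Y_{j\cdot} - 3\sum_{j=1}^{5} e^{\beta_0 + \beta_1 X_j} - \sum_{j=1}^{5} \sum_{k=1}^{3} \log(Y_{jk}!)$$

where $Y_{j\cdot} = \sum_{k} Y_{jk}$ and $Y_{\cdot\cdot} = \sum_{j}\sum_{k} Y_{jk}$.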
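As a quick check on the algebra, the double-sum and collapsed forms of the Poisson log-likelihood above can be evaluated side by side. The sketch below is illustrative only: the colony counts are hypothetical placeholders (the example's actual counts are not reproduced in these notes), and the function names and trial parameter values are assumptions.

```python
import math

# Log10 dose levels X_1, ..., X_5 from Example 13.2.
x_levels = [0.0, 1.0, 1.5, 2.0, 2.5]

# HYPOTHETICAL colony counts, three plates per dose level (not the real data).
counts = [[20, 18, 25], [22, 27, 24], [30, 26, 33], [41, 38, 45], [52, 60, 49]]


def loglik_double_sum(b0, b1, x, y):
    """Sum over j and k of Y_jk*log(m_j) - m_j - log(Y_jk!)."""
    total = 0.0
    for xj, plates in zip(x, y):
        log_m = b0 + b1 * xj                    # log link: log(m_j)
        for yjk in plates:
            total += yjk * log_m - math.exp(log_m) - math.lgamma(yjk + 1)
    return total


def loglik_collapsed(b0, b1, x, y):
    """Collapsed form using the totals Y_.. and Y_j. (equal plates per dose)."""
    plates_per_dose = len(y[0])
    dose_totals = [sum(plates) for plates in y]  # Y_j.
    grand_total = sum(dose_totals)               # Y_..
    log_factorials = sum(math.lgamma(yjk + 1) for plates in y for yjk in plates)
    return (b0 * grand_total
            + b1 * sum(xj * t for xj, t in zip(x, dose_totals))
            - plates_per_dose * sum(math.exp(b0 + b1 * xj) for xj in x)
            - log_factorials)


# Arbitrary trial values of (beta_0, beta_1); the two forms should agree.
value_a = loglik_double_sum(3.0, 0.3, x_levels, counts)
value_b = loglik_collapsed(3.0, 0.3, x_levels, counts)
print(value_a, value_b)
```

Here `math.lgamma(y + 1)` supplies $\log(y!)$ without forming the factorial.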
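Likelihood equations:

$$0 = \frac{\partial \ell(\beta)}{\partial\beta_0} = Y_{\cdot\cdot} - 3\sum_{j=1}^{5} e^{\beta_0 + \beta_1 X_j}$$

$$0 = \frac{\partial \ell(\beta)}{\partial\beta_1} = \sum_{j=1}^{5} X_j Y_{j\cdot} - 3\sum_{j=1}^{5} X_j e^{\beta_0 + \beta_1 X_j}$$

Use the Fisher scoring algorithm:

$$Y = \begin{bmatrix} Y_{11} \\ Y_{12} \\ \vdots \\ Y_{53} \end{bmatrix}, \qquad X = \begin{bmatrix} 1 & X_1 \\ 1 & X_1 \\ \vdots & \vdots \\ 1 & X_5 \end{bmatrix}, \qquad Q = \begin{bmatrix} \dfrac{\partial \ell(\beta)}{\partial\beta_0} \\ \dfrac{\partial \ell(\beta)}{\partial\beta_1} \end{bmatrix} = X^T(Y - m)$$

where

$$m = \begin{bmatrix} m_1 \\ m_1 \\ \vdots \\ m_5 \end{bmatrix} = \exp(X\beta)$$

Second partial derivatives of $\ell(\beta)$:

$$\frac{\partial^2 \ell(\beta)}{\partial\beta_0^2} = -3\sum_{j=1}^{5} e^{\beta_0 + \beta_1 X_j} = -\sum_{j}\sum_{k} m_j$$

$$\frac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} = -3\sum_{j=1}^{5} X_j e^{\beta_0 + \beta_1 X_j} = -\sum_{j}\sum_{k} X_j m_j$$

$$\frac{\partial^2 \ell(\beta)}{\partial\beta_1^2} = -3\sum_{j=1}^{5} X_j^2 e^{\beta_0 + \beta_1 X_j} = -\sum_{j}\sum_{k} X_j^2 m_j$$

Fisher information matrix:

$$H = -E\begin{bmatrix} \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0^2} & \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} \\ \dfrac{\partial^2 \ell(\beta)}{\partial\beta_0\,\partial\beta_1} & \dfrac{\partial^2 \ell(\beta)}{\partial\beta_1^2} \end{bmatrix} = \begin{bmatrix} \sum_j\sum_k m_j & \sum_j\sum_k X_j m_j \\ \sum_j\sum_k X_j m_j & \sum_j\sum_k X_j^2 m_j \end{bmatrix} = X^T\, diag(m)\, X$$

where

$$Var(Y) = diag(m) = \begin{bmatrix} m_1 & & & & & \\ & m_1 & & & & \\ & & m_1 & & & \\ & & & m_2 & & \\ & & & & \ddots & \\ & & & & & m_5 \end{bmatrix}$$

Iterations:

$$\hat\beta^{(S+1)} = \hat\beta^{(S)} + \hat H^{-1}\hat Q$$

Use halving steps as needed.

Starting values: Use the m.l.e.'s for the model with $\beta_1 = 0$, i.e., $Y_{jk} \sim Poisson(m)$ are i.i.d. with $m = e^{\beta_0}$. Then $\hat m^{(0)} = \bar Y_{\cdot\cdot}$, the mean of all 15 counts, and

$$\hat\beta_0^{(0)} = \log(\bar Y_{\cdot\cdot}), \qquad \hat\beta_1^{(0)} = 0$$

Large sample normal approximation for the distribution of $\hat\beta$:

$$\hat\beta \ \dot\sim\ N(\beta, H^{-1})$$

Estimate $H$ by substituting $\hat\beta$ for $\beta$ to obtain $\hat H$.

Estimate the mean number of colonies for a specific $\log_{10}(dose)$ of quinoline, say $X$. The m.l.e. is

$$\hat m = e^{\hat\beta_0 + \hat\beta_1 X}$$

Apply the delta method:

$$\frac{\partial\hat m}{\partial\hat\beta_0} = e^{\hat\beta_0 + \hat\beta_1 X} = \hat m, \qquad \frac{\partial\hat m}{\partial\hat\beta_1} = X e^{\hat\beta_0 + \hat\beta_1 X} = X\hat m$$

Define

$$\hat G = \left[ \frac{\partial\hat m}{\partial\hat\beta_0} \quad \frac{\partial\hat m}{\partial\hat\beta_1} \right] = [\hat m \quad X\hat m]$$

Then the estimated variance of $\hat m = e^{\hat\beta_0 + \hat\beta_1 X}$ is

$$S^2_{\hat m} = \hat G \hat H^{-1} \hat G^T = \hat G\,[X^T\, diag(\hat m)\, X]^{-1}\,\hat G^T$$

The standard error is $S_{\hat m} = \sqrt{S^2_{\hat m}}$ and an approximate $(1 - \alpha) \times 100\%$ confidence interval is

$$\hat m \pm Z_{\alpha/2}\, S_{\hat m}$$

Four kinds of residuals:

Response residuals:

$$Y_i - \hat\mu_i \qquad \left[ \text{for binomial proportions, } \frac{Y_i}{n_i} - \hat\pi_i \right]$$

Pearson residuals:

$$\frac{Y_i - \hat\mu_i}{\sqrt{\widehat{Var}(Y_i)}} = \frac{Y_i - n_i\hat\pi_i}{\sqrt{n_i\hat\pi_i(1 - \hat\pi_i)}} \quad \text{(binomial)}$$

$$\frac{Y_i - \hat\mu_i}{\sqrt{\widehat{Var}(Y_i)}} = \frac{Y_i - \hat\lambda_i}{\sqrt{\hat\lambda_i}} \quad \text{(Poisson)}$$

Working residuals:

$$(Y_i - \hat\mu_i)\frac{\partial\eta_i}{\partial\mu_i} = \frac{Y_i - n_i\hat\pi_i}{n_i\hat\pi_i(1 - \hat\pi_i)} \quad \text{(binomial)}$$

$$(Y_i - \hat\mu_i)\frac{\partial\eta_i}{\partial\mu_i} = \frac{Y_i - \hat\lambda_i}{\hat\lambda_i} \quad \text{(Poisson)}$$

For the binomial family with logit link,

$$\eta_i = \log\left( \frac{\pi_i}{1 - \pi_i} \right) = \log\left( \frac{n_i\pi_i}{n_i(1 - \pi_i)} \right) = X_i^T\beta$$

$$\mu_i = n_i\pi_i = n_i\frac{\exp(\eta_i)}{1 + \exp(\eta_i)} = n_i\frac{\exp(X_i^T\beta)}{1 + \exp(X_i^T\beta)}$$

$$\frac{\partial\mu_i}{\partial\eta_i} = n_i\frac{\exp(\eta_i)}{[1 + \exp(\eta_i)]^2} = n_i\pi_i(1 - \pi_i)$$

Deviance residual:

$$e_i = sign(Y_i - \hat\mu_i)\sqrt{|d_i|}$$

where $d_i$ is the contribution of the $i$-th case to the deviance.

Binomial family:

$$G^2 = 2\sum_{i=1}^{k} \left[ Y_i\log\left( \frac{Y_i}{n_i\hat\pi_i} \right) + (n_i - Y_i)\log\left( \frac{n_i - Y_i}{n_i(1 - \hat\pi_i)} \right) \right]$$

and

$$e_i = sign(Y_i - n_i\hat\pi_i)\sqrt{\left| 2\left[ Y_i\log\left( \frac{Y_i}{n_i\hat\pi_i} \right) + (n_i - Y_i)\log\left( \frac{n_i - Y_i}{n_i(1 - \hat\pi_i)} \right) \right] \right|}$$

Poisson family:

$$G^2 = (\text{deviance}) = 2\sum_{i=1}^{k} Y_i\log\left( \frac{Y_i}{\hat\lambda_i} \right)$$

where

$$\hat\lambda_i = \exp(\hat\beta_0 + \hat\beta_1 X_{1i} + \cdots), \qquad \log(\lambda_i) = \beta_0 + \beta_1 X_{1i} + \cdots$$

then

$$e_i = sign(Y_i - \hat\lambda_i)\sqrt{\left| 2Y_i\log\left( \frac{Y_i}{\hat\lambda_i} \right) \right|}$$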
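The Pearson and deviance residuals for the binomial family can be computed directly from the beetle counts and the tabulated expected counts under Model B. The sketch below takes $n_j\hat\pi_j$ from that table rather than refitting the model. The helper names are illustrative assumptions, and a term of the form $0 \cdot \log(0)$ is treated as zero.

```python
import math

# Beetle data (Example 13.1) and expected numbers killed under Model B,
# n_j * pi_hat_j, as tabulated in the notes.
killed = [6, 13, 18, 28, 52, 53, 61, 60]
exposed = [59, 60, 62, 56, 63, 59, 62, 60]
expected_killed = [3.43, 9.78, 22.66, 33.83, 50.05, 53.27, 59.22, 58.74]


def y_log_ratio(y, mu):
    """y * log(y / mu), using the convention 0 * log(0) = 0."""
    return 0.0 if y == 0 else y * math.log(y / mu)


def pearson_residual(y, n, mu):
    """(Y - n*pi_hat) / sqrt(n*pi_hat*(1 - pi_hat)), with mu = n*pi_hat."""
    pi_hat = mu / n
    return (y - mu) / math.sqrt(n * pi_hat * (1.0 - pi_hat))


def deviance_residual(y, n, mu):
    """sign(Y - mu) * sqrt(|d|), d = this case's contribution to G^2."""
    d = 2.0 * (y_log_ratio(y, mu) + y_log_ratio(n - y, n - mu))
    return math.copysign(math.sqrt(abs(d)), y - mu)


pearson = [pearson_residual(y, n, mu)
           for y, n, mu in zip(killed, exposed, expected_killed)]
deviance = [deviance_residual(y, n, mu)
            for y, n, mu in zip(killed, exposed, expected_killed)]

# Summing squared residuals recovers the two goodness-of-fit statistics.
pearson_chi_square = sum(r * r for r in pearson)
deviance_g2 = sum(r * r for r in deviance)
print(pearson_chi_square, deviance_g2)
```

The two sums of squares can be compared with the Pearson chi-square and lack-of-fit deviance statistics reported for the beetle data.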