Other discrete distributions

advertisement

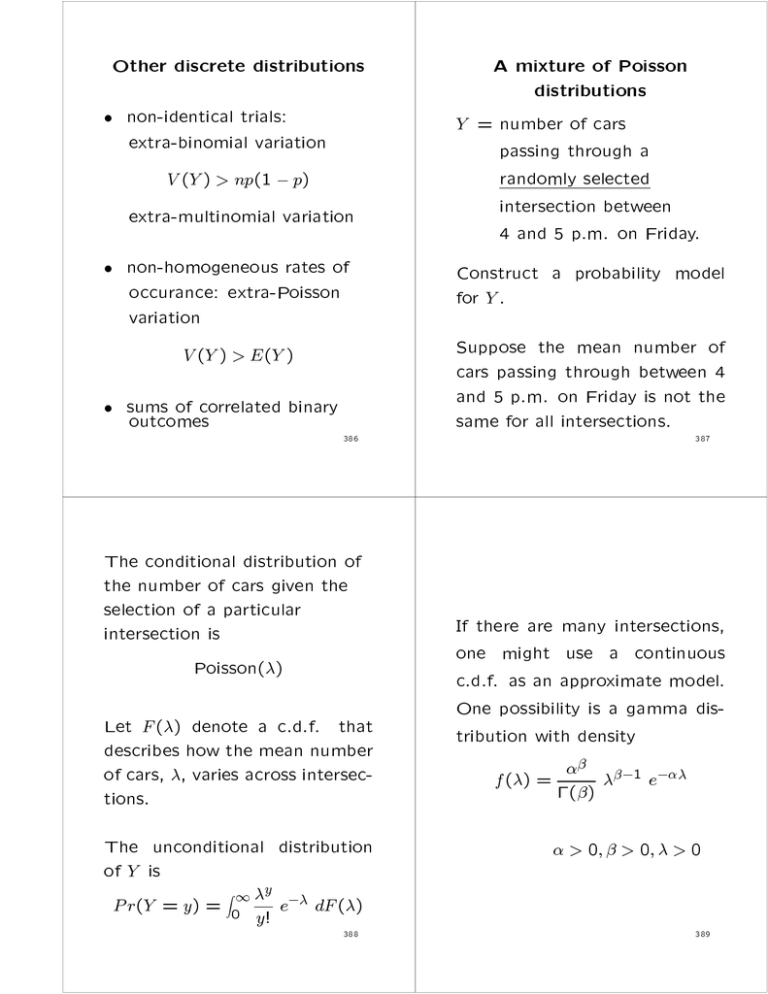

Other discrete distributions

non-identical trials:
    extra-binomial variation: $V(Y) > np(1-p)$
    extra-multinomial variation

non-homogeneous rates of occurrence:
    extra-Poisson variation: $V(Y) > E(Y)$

sums of correlated binary outcomes

A mixture of Poisson distributions

Y = number of cars passing through a randomly selected intersection between 4 and 5 p.m. on Friday.

Construct a probability model for Y.

Suppose the mean number of cars passing through between 4 and 5 p.m. on Friday is not the same for all intersections.

The conditional distribution of the number of cars, given the selection of a particular intersection, is Poisson($\lambda$).

Let $F(\lambda)$ denote a c.d.f. that describes how the mean number of cars, $\lambda$, varies across intersections.

The unconditional distribution of Y is

$$Pr(Y = y) = \int_0^\infty \frac{\lambda^y e^{-\lambda}}{y!} \, dF(\lambda)$$

If there are many intersections, one might use a continuous c.d.f. as an approximate model. One possibility is a gamma distribution with density

$$f(\lambda) = \frac{1}{\Gamma(\alpha)\beta^{\alpha}} \lambda^{\alpha-1} e^{-\lambda/\beta}, \qquad \lambda > 0; \ \alpha > 0; \ \beta > 0$$

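Then

$$Pr(Y = y) = \int_0^\infty \frac{\lambda^y e^{-\lambda}}{y!} \left( \frac{1}{\Gamma(\alpha)\beta^{\alpha}} \lambda^{\alpha-1} e^{-\lambda/\beta} \right) d\lambda = \frac{\Gamma(y+\alpha)}{\Gamma(\alpha)\, y!} \left( \frac{\beta}{\beta+1} \right)^{y} \left( \frac{1}{1+\beta} \right)^{\alpha}$$

Substituting $\pi = \frac{1}{\beta+1}$ and $\alpha = k$:

$$Pr(Y = y) = \binom{y+k-1}{k-1} \pi^{k} (1-\pi)^{y}, \qquad y = 0, 1, \ldots; \quad 0 < \pi < 1; \quad k > 0$$

This is the Negative Binomial (Pascal) Distribution. For integer k it is the probability that exactly y failures occur before the k-th success in a series of independent and identical trials with $\pi = Pr\{\text{success}\}$.

Pascal (1679): coefficients
Montmort (1714): basic formulation (study of hazards)
Greenwood & Yule (1920, JRSS): accident proneness
James Bernoulli (1713): paper on binomial distribution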
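The gamma mixture of Poisson distributions can be checked numerically. The sketch below is an illustration only: the function names, the midpoint-rule integration, and the values of alpha and beta are assumptions chosen for the example, not part of the original notes. It compares the closed-form negative binomial probability with a direct numerical evaluation of the mixture integral.

```python
import math

def nb_pmf(y, k, pi):
    """Negative binomial pmf: Gamma(y+k) / (Gamma(k) y!) * pi^k * (1-pi)^y."""
    log_p = (math.lgamma(y + k) - math.lgamma(k) - math.lgamma(y + 1)
             + k * math.log(pi) + y * math.log(1.0 - pi))
    return math.exp(log_p)

def gamma_poisson_pmf(y, alpha, beta, steps=100000, upper=200.0):
    """Midpoint-rule approximation of the integral of Poisson(y | lam) * gamma(lam) over lam."""
    h = upper / steps
    total = 0.0
    for i in range(steps):
        lam = (i + 0.5) * h
        log_pois = y * math.log(lam) - lam - math.lgamma(y + 1)
        log_gam = ((alpha - 1.0) * math.log(lam) - lam / beta
                   - math.lgamma(alpha) - alpha * math.log(beta))
        total += math.exp(log_pois + log_gam) * h
    return total

if __name__ == "__main__":
    alpha, beta = 2.5, 1.5            # illustrative shape and scale
    k, pi = alpha, 1.0 / (beta + 1.0) # substitution used in the derivation
    for y in (0, 1, 5):
        print(y, nb_pmf(y, k, pi), gamma_poisson_pmf(y, alpha, beta))
```

The two columns printed for each y should agree to several decimal places, which is a quick way to confirm the substitution $\pi = 1/(\beta+1)$, $k = \alpha$.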

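Moments:

$$E(Y) = k \left( \frac{1-\pi}{\pi} \right) = m$$

$$V(Y) = k \left( \frac{1-\pi}{\pi^2} \right) = m \left( \frac{m+k}{k} \right) > m$$

k > 0 need not be an integer, so the extra-variation factor $(m+k)/k$ is simply $1/\pi$.

Special cases:

k = 1 yields the geometric distribution, where

$$P(Y = y) = \pi (1-\pi)^{y}, \qquad y = 0, 1, \ldots$$

If $k \to \infty$ and $\pi \to 1$ in a way such that

$$k \left( \frac{1-\pi}{\pi} \right) \to \lambda < \infty$$

then the Neg.Bin(k, $\pi$) distribution converges to Poisson($\lambda$).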
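A short numerical check of the moments and the two special cases may help. This is a sketch under stated assumptions: the helper names and the parameter values (k = 0.6, pi = 0.2, lambda = 3) are illustrative and do not come from the notes.

```python
import math

def nb_pmf(y, k, pi):
    """Negative binomial pmf with real-valued k > 0."""
    log_p = (math.lgamma(y + k) - math.lgamma(k) - math.lgamma(y + 1)
             + k * math.log(pi) + y * math.log(1.0 - pi))
    return math.exp(log_p)

def nb_mean_var(k, pi, y_max=5000):
    """Mean and variance obtained by summing the pmf over 0..y_max-1."""
    probs = [nb_pmf(y, k, pi) for y in range(y_max)]
    mean = sum(y * p for y, p in enumerate(probs))
    var = sum((y - mean) ** 2 * p for y, p in enumerate(probs))
    return mean, var

def poisson_pmf(y, lam):
    return math.exp(y * math.log(lam) - lam - math.lgamma(y + 1))

if __name__ == "__main__":
    k, pi = 0.6, 0.2
    mean, var = nb_mean_var(k, pi)
    print(mean, k * (1 - pi) / pi)        # E(Y) = k(1-pi)/pi
    print(var, k * (1 - pi) / pi ** 2)    # V(Y) = k(1-pi)/pi^2 = m/pi
    # k = 1 gives the geometric distribution pi (1-pi)^y
    print(nb_pmf(3, 1.0, 0.3), 0.3 * 0.7 ** 3)
    # large k with k(1-pi)/pi = lam approaches Poisson(lam)
    lam, big_k = 3.0, 1.0e6
    print(nb_pmf(2, big_k, big_k / (big_k + lam)), poisson_pmf(2, lam))
```

Because the variance equals $m/\pi$, any fitted $\pi$ well below 1 signals substantial extra-Poisson variation.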

Parameter estimation: Maximum likelihood estimation

Assume cars are counted at n randomly selected intersections and the counts are $Y_1, Y_2, \ldots, Y_n$.

The log-likelihood function is

$$\ell(k, \pi; \text{data}) = \sum_{i=1}^{n} \log \binom{Y_i + k - 1}{k - 1} + nk \log(\pi) + \sum_{i=1}^{n} Y_i \log(1-\pi)$$

An iterative procedure must be used to find numerical values for the m.l.e.'s $\hat{k}$ and $\hat{\pi}$.

Then m.l.e.'s for "expected" counts are

$$n \binom{y + \hat{k} - 1}{\hat{k} - 1} \hat{\pi}^{\hat{k}} (1 - \hat{\pi})^{y}, \qquad y = 0, 1, \ldots$$

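Likelihood equations:

$$0 = \frac{\partial \ell}{\partial \pi} = \frac{nk}{\pi} - \sum_{i=1}^{n} \frac{Y_i}{1-\pi}$$

$$0 = \frac{\partial \ell}{\partial k} = \sum_{i=1}^{n} \frac{\partial \log[\Gamma(Y_i + k)]}{\partial k} - n \frac{\partial \log[\Gamma(k)]}{\partial k} + n \log(\pi)$$

$$= \sum_{i=1}^{n} \left[ \frac{1}{k} + \frac{1}{k+1} + \cdots + \frac{1}{k + Y_i - 1} \right] I_{[Y_i > 0]} + n \log(\pi)$$

The following results were used:

$$\frac{\partial \log[\Gamma(y+k)]}{\partial k} = \frac{\partial \log[\Gamma(y+k-1)]}{\partial k} + \frac{1}{y+k-1}$$

$$\binom{y+k-1}{k-1} = \frac{\Gamma(y+k)}{\Gamma(k)\,\Gamma(y+1)}$$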
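The log-likelihood and the likelihood equations translate directly into code. The following is a minimal sketch, not the program used in these notes: the function names are hypothetical, and the data are the smooth-surface cavity counts that appear in the SAS example elsewhere in this document (the number of children with 0, 1, ..., 13 cavities). The final lines evaluate the score at the maximum likelihood estimates reported in that example, where both components should be close to zero.

```python
import math

# children with 0, 1, ..., 13 smooth-surface cavities (from the SAS example)
counts = [63, 29, 12, 15, 8, 9, 5, 4, 6, 2, 3, 3, 2, 2]

def log_lik(k, pi, counts):
    """Negative binomial log-likelihood for grouped counts."""
    n = sum(counts)
    total_y = sum(y * c for y, c in enumerate(counts))
    ll = n * k * math.log(pi) + total_y * math.log(1.0 - pi)
    for y, c in enumerate(counts):
        # log of the binomial coefficient written with gamma functions
        ll += c * (math.lgamma(y + k) - math.lgamma(k) - math.lgamma(y + 1))
    return ll

def score(k, pi, counts):
    """First partial derivatives (d/d pi, d/d k) of the log-likelihood."""
    n = sum(counts)
    total_y = sum(y * c for y, c in enumerate(counts))
    d_pi = n * k / pi - total_y / (1.0 - pi)
    # 1/k + 1/(k+1) + ... + 1/(k+y-1); the sum is empty when y = 0
    d_k = n * math.log(pi) + sum(
        c * sum(1.0 / (k + j) for j in range(y)) for y, c in enumerate(counts))
    return d_pi, d_k

if __name__ == "__main__":
    print(log_lik(0.5883654, 0.1888224, counts))
    print(score(0.5883654, 0.1888224, counts))
```

Comparing `score` with a finite-difference derivative of `log_lik` is a cheap way to catch sign errors before running an iterative solver.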

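Second partial derivatives:

$$\frac{\partial^2 \ell}{\partial \pi^2} = -\frac{nk}{\pi^2} - \sum_{i=1}^{n} \frac{Y_i}{(1-\pi)^2}$$

$$\frac{\partial^2 \ell}{\partial \pi \, \partial k} = \frac{n}{\pi}$$

$$\frac{\partial^2 \ell}{\partial k^2} = -\sum_{i=1}^{n} \left[ \frac{1}{k^2} + \frac{1}{(k+1)^2} + \cdots + \frac{1}{(k + Y_i - 1)^2} \right] I_{[Y_i > 0]}$$

Modified Newton-Raphson algorithm:

$$\hat{\theta}^{(s+1)} = \hat{\theta}^{(s)} - \gamma \, [H^{(s)}]^{-1} G^{(s)}$$

that is,

$$\begin{bmatrix} \hat{\pi}^{(s+1)} \\ \hat{k}^{(s+1)} \end{bmatrix} = \begin{bmatrix} \hat{\pi}^{(s)} \\ \hat{k}^{(s)} \end{bmatrix} - \gamma \begin{bmatrix} \dfrac{\partial^2 \ell}{\partial \pi^2} & \dfrac{\partial^2 \ell}{\partial \pi \, \partial k} \\ \dfrac{\partial^2 \ell}{\partial \pi \, \partial k} & \dfrac{\partial^2 \ell}{\partial k^2} \end{bmatrix}^{-1}_{\theta = \hat{\theta}^{(s)}} \begin{bmatrix} \dfrac{\partial \ell}{\partial \pi} \\ \dfrac{\partial \ell}{\partial k} \end{bmatrix}_{\theta = \hat{\theta}^{(s)}}$$

Choose $\gamma = 1$ if

$$\ell(\hat{\theta}^{(s+1)}; \text{data}) \ge \ell(\hat{\theta}^{(s)}; \text{data}).$$

Otherwise, reduce $\gamma$ until

$$\ell(\hat{\theta}^{(s+1)}; \text{data}) \ge \ell(\hat{\theta}^{(s)}; \text{data}),$$

e.g. try $\gamma \in \{ \tfrac{1}{2}, \tfrac{1}{4}, \tfrac{1}{8}, \ldots, \tfrac{1}{2^r} \}$.

Initial Estimates: Method of moments.

Use $\bar{Y}$ to estimate

$$m = E(Y) = k \frac{(1-\pi)}{\pi}.$$

Use

$$S^2 = \frac{1}{n} \sum_{i=1}^{n} (Y_i - \bar{Y})^2$$

to estimate

$$V(Y) = k \left( \frac{1-\pi}{\pi^2} \right).$$

Solve the equations:

$$\bar{Y} = \tilde{k} \frac{(1 - \tilde{\pi})}{\tilde{\pi}}, \qquad S^2 = \tilde{k} \left( \frac{1 - \tilde{\pi}}{\tilde{\pi}^2} \right)$$

Method of moments estimators:

$$\tilde{\pi} = \frac{\bar{Y}}{S^2} \ \Rightarrow \ \hat{\pi}^{(0)}, \qquad \tilde{k} = \frac{\bar{Y}^2}{S^2 - \bar{Y}} \ \Rightarrow \ \hat{k}^{(0)}$$

Use these only if $S^2 > \bar{Y}$.

/* This program is stored as negbin.sas */

/* This program computes maximum
   likelihood estimates for Poisson
   and Negative Binomial distributions
   and tests the fit of those
   distributions.

   The data are entered as two columns
   with the first column (RESULT)
   corresponding to a number of
   occurrences of an event and the second
   column corresponding to the number of
   trials or individuals in that category.
   All categories preceding the category
   with the largest number of events must
   be included in the data file, even if
   the observed counts are zero in some
   of those categories. The program
   automatically groups categories to keep
   expected counts above a user-specified
   minimum. */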

DATA SET1;
/* NUMBER OF SMOOTH SURFACE CAVITIES IN
   12-YEAR-OLD CHILDREN TAKEN FROM GRANGER
   AND REID (1954) J. DENTAL. RES. 613-623.*/
INPUT RESULT X;
LABEL RESULT = NUMBER OF CAVITIES
      X = NUMBER OF CHILDREN;
CARDS;
0 63
1 29
2 12
3 15
4 8
5 9
6 5
7 4
8 6
9 2
10 3
11 3
12 2
13 2
RUN;

PROC PRINT DATA=SET1; run;

/* Begin the IML code */
PROC IML;

START FIT;

/* Identify the input data set */
USE SET1;

/* Put the counts into the vector Y
   It is assumed that the counts are
   in the second column of the data
   set */
READ ALL INTO X;
Y = X[ ,2];

/* Compute the number of categories */
K=NROW(Y);

/* Compute the sum of the counts */
N = SUM(Y);

/* Store category values in CC */
CC = X[ ,1];

/* YC is a vector of cumulative
   counts used to compute derivatives
   of the negative binomial
   log-likelihood */
YC = Y;
DO I = 1 TO K;
YC[I,1] = SUM(Y[I:K,1]);
END;

/* Compute sample mean and variance
   for the Poisson distribution */
MEAN = sum(CC#Y)/N;
VAR = (t(y)*(CC##2)-N*MEAN*MEAN)/N;
PRINT,,,"Sample Mean is" MEAN;
PRINT,,,"Sample Variance is" VAR;

/* Compute expected counts for the
   iid Poisson model */
EP = J(K,1,0);
EP[1,1] = N*EXP(-MEAN);
DO I = 2 TO K;
IM1 = I-1;
EP[I,1] = EP[IM1,1]*MEAN/IM1;
END;
EP[K,1] = EP[K,1] + N -SUM(EP);

/* Combine categories to make each
   expected count larger than MB */
EPT = EP;
RUN COMBINE;

/* Compute the Pearson statistic */
PEARSON = (YT-EPT)`*INV(DIAG(EPT))*(YT-EPT);

/* Compute likelihood ratio test */

gg = 0;

ncf = nrow(ept);

do i = 1 to ncf;

at = 0;

if(yt[i,1])>0. then

at=2*yt[i,1]*log(yt[i,1]/ept[i,1]);

gg = gg + at;

end;

/* Compute the Fisher Deviance */

FISHERD = N*VAR/MEAN;

DFF = N-1;

DFP = KK - 2;

PVALF = 1-PROBCHI(FISHERD,DFF);

PVALP = 1-PROBCHI(PEARSON,DFP);

PVALG = 1-PROBCHI(GG,DFP);


/* Print Results */
ZT=CT||YT||EPT;
PRINT,,,,,,,,,,,"Results for Fitting the Poisson Distribution";
PRINT,,,,,"          Observed  Expected";
PRINT "Result    Count     Count";
PRINT ZT;
PRINT,,,"    PEARSON Statistic =" PEARSON;
PRINT,"                   df =" DFP;
PRINT,"              p-value =" PVALP;
PRINT,,,"Likelihood ratio test =" GG;
PRINT "                   df =" DFP;
PRINT "              p-value =" PVALG;
PRINT,,,"      Fisher Deviance =" FISHERD;
PRINT,"                   df =" DFF;
PRINT,"              p-value =" PVALF;

/* Fit the negative binomial model
   First compute method of moments
   estimators for parameters */
PI = MEAN/VAR;
BETA = MEAN*MEAN/(VAR-MEAN);
PRINT,,,,,,," Results for Fitting the Negative Binomial Distribution";
print,,,"Method of moments estimators";
PRINT," pi = " PI;
PRINT,"Beta = " BETA;

/* Compute maximum likelihood
   estimates. The parameter
   estimates are kept in B */
B = J(2,1);
B[1,1] = PI;
B[2,1] = BETA;

/* Check if mean > variance, if so,
   select new starting values */
IF(MEAN > VAR) THEN DO;
B[1,1] = .95;
B[2,1] = 20;
END;

MAXIT=50;
HALVING=16;
CONVERGE=.000001;
print,,,"Begin iterations to compute maximum likelihood estimates";

/* Run the Newton-Raphson algorithm */
RUN NEWTON;
PI = B[1,1];
BETA = B[2,1];
print,,,"Maximum likelihood estimates";
print," pi = " PI;
print,"Beta = " BETA;
print,,,"Estimated covariance matrix for maximum likelihood estimates";
VARB = INV(H);
print,VARB;

/* Compute expected counts for
   the negative binomial model */
RUN PROB;
EP = N*PNB;

/* Combine categories to keep
   expected counts larger than MB */
EPT=EP;
RUN COMBINE;

/* Compute the Pearson statistic */
PEARSON = (YT-EPT)`*
          INV(DIAG(EPT))*(YT-EPT);
DFP = KK - 3;
PVALP = 1-PROBCHI(PEARSON,DFP);

/* Compute a likelihood ratio test */
GG = 0;
ncf = nrow(ept);
do i = 1 to ncf;
at = 0;
if(yt[i,1])>0. then
at=2*yt[i,1]*log(yt[i,1]/ept[i,1]);
GG = GG + at;
end;
PVALG = 1-probchi(GG, dfp);

/* Print Results */
ZT=CT||YT||EPT;
PRINT,,,,,,,,,,,"Results for Fitting the Negative Binomial Distribution";
PRINT,,,,,"          Observed  Expected" ;
PRINT "Result    Count     Count";
PRINT ZT;
PRINT,,,"    PEARSON Statistic =" PEARSON;
PRINT,"                   df =" DFP;
PRINT,"              p-value =" PVALP;
PRINT,,,"Likelihood ratio test =" GG;
PRINT "                   df =" DFP;
PRINT "              p-value =" PVALG;
FINISH;

* Modified Newton-Raphson algorithm   ;
*                                     ;
* User must supply initial values for ;
* parameters in B, and the FUN and    ;
* DERIV functions. FUN evaluates the  ;
* function to be maximized at the     ;
* current value of B. DERIV evaluates ;
* first and second partial derivatives;
* First partial derivatives are put   ;
* into Q. The negative of the matrix  ;
* of second partial derivatives is put;
* into H                              ;
*-------------------------------------;

START NEWTON;

CHECK=1;

DO ITER = 1 TO MAXIT

WHILE(INT(CHECK/CONVERGE));

RUN FUN;

FOLD=F;

BETA=B`;

STEP=ITER-1;

print,STEP BETA;

BOLD=B;

B2=BOLD;

RUN DERIV;

HI=INV(H);

B=BOLD+HI*Q;

RUN FUN;

DO HITER = 1 TO HALVING

WHILE(FOLD-F >= 0);

B=B2+2.*((.5)##HITER)*HI*Q;

RUN FUN;

END;

CHECK=((BOLD-B)`*(BOLD-B))/(B`*B);

END;

FINISH;


*EVALUATION OF THE LOG-LIKELIHOOD;

START FUN;

PI = B[1,1];

BETA = B[2,1];

/* Check if beta is getting too big */

IF(BETA > 40) THEN DO;

PRINT,,,,'The Newton-Raphson algorithm did not converge';

IF(MEAN > VAR) THEN DO;

PRINT,'Estimated variance is smaller';

PRINT,'than the estimated mean';

PRINT,'Consider a Poisson model';

END;

STOP;

END;

G1 = CC + (J(K,1)#BETA);

G1 = LOG(GAMMA(G1));

G2 = CC + J(K,1);

G2 = LOG(GAMMA(G2));

F=Y`*G1 - Y`*G2 - N*LOG(GAMMA(BETA))

+ N*BETA*LOG(PI)

+ (Y`*CC)*LOG(1-PI);

FINISH;

*EVALUATION OF NEGATIVE BINOMIAL ;
*PROBABILITIES                   ;

START PROB;

PI = B[1,1];

BETA = B[2,1];

PNB = J(K,1);

PNB[1,1] = PROBNEGB(PI,BETA,0);

DO I = 2 TO K;

IM1 = I-1;

IM2 = I - 2;

PNB[I,1] = PROBNEGB(PI,BETA,IM1)

- PROBNEGB(PI,BETA,IM2);

END;

PNB[K,1] = PNB[K,1] + 1 - SUM(PNB);

FINISH;

* ----DERIVATIVE EVALUATION--------;
*                                  ;
* First partial derivatives are    ;
* returned in Q and the negative   ;
* of the second partial derivatives;
* are returned in the matrix H.    ;
*----------------------------------;

START DERIV;

Q = J(2,1);

H = J(2,2);

PI = B[1,1];

BETA = B[2,1];

CB = CC + ((BETA-1)#J(K,1));

Q[2,1] = SUM(INV(DIAG(CB))*YC)

-(YC[1,1]/(BETA-1)) +(N*LOG(PI));

Q[1,1] = (N*BETA/PI) - (Y`*CC/(1-PI));

H[1,1] = (N*BETA/(PI*PI))

+ (Y`*CC/((1-PI)*(1-PI)));

H[1,2] = -N/PI;

H[2,1] = -N/PI;

CB = CB##2;

H[2,2] = SUM(INV(DIAG(CB))*YC)

- (YC[1,1]/((BETA-1)*(BETA-1)));

FINISH;

*MODULE FOR COMBINING CATEGORIES TO KEEP;
*ALL EXPECTED COUNTS ABOVE A SET LOWER  ;
*BOUND: MB                              ;
*                                       ;
* Start at the bottom of the            ;
* array and combine categories          ;
* until the expected count for          ;
* the combined category exceeds MB      ;

start combine;
mb=2;
cl = cc;
cu = cc;
yt=y;
ept=ep;
nc=nrow(cc);
ptr = nc;
I = J(nc, 1, .);
kk = 0;

do until(ptr = 1);

ptrm1 = ptr - 1;

if (ept[ptr] < mb) then do;

ept[ptrm1] = ept[ptrm1] + ept[ptr];

yt[ptrm1] = yt[ptrm1] + yt[ptr];

cu[ptrm1] = cu[ptr];

end;

else do;

kk = kk + 1;

I[kk] = ptr;

end;

ptr = ptrm1;

end;

if (ept[1] < mb) then do;

Ik = I[kk];

ept[Ik] = ept[Ik] + ept[1];

yt[Ik] = yt[Ik] + yt[1];

cl[Ik] = cl[1];

end;


else do;

kk = kk + 1;

I[kk] = 1;

end;

II = I[kk:1];
cl = cl[II];
cu = cu[II];
yt = yt[II];
ept = ept[II];

finish;

*-----------------------------------------;

/* Run the program */

RUN FIT;

Obs    RESULT     X
  1       0      63
  2       1      29
  3       2      12
  4       3      15
  5       4       8
  6       5       9
  7       6       5
  8       7       4
  9       8       6
 10       9       2
 11      10       3
 12      11       3
 13      12       2
 14      13       2

Sample Mean is 2.5276074
Sample Variance is 10.641876

Results for the Poisson Distribution

Observed    Expected
Count       Count
  63        13.015525
  29        32.898136
  12        41.576785
  15        35.029929
   8        22.135477
   9        11.189959
   5        4.7139704
  22        2.44022

    PEARSON Statistic = 391.16992
                   df = 6
              p-value = 0

Likelihood ratio test = 213.25714
                   df = 6
              p-value = 0

      Fisher Deviance = 686.27184
                   df = 162
              p-value = 0

Results for the Negative Binomial Distribution

Method of moments estimators
  pi = 0.2375152
Beta = 0.7873537

Begin iterations to compute maximum likelihood estimates

STEP    BETA
  0     0.2375152   0.7873537
  1     0.15943     0.4478721
  2     0.1774692   0.5403728
  3     0.1874445   0.5823439
  4     0.1887996   0.588264

Maximum likelihood estimates
  pi = 0.1888224
Beta = 0.5883654

Estimated covariance matrix for maximum likelihood estimates

VARB
0.0009272   0.0024031
0.0024031   0.0092301

Observed    Expected
Count       Count
  63        61.12833
  29        29.174646
  12        18.794984
  15        13.154134
   8        9.5722688
   9        7.1255573
   5        5.383545
   4        4.1102093
   6        3.1625555
   5        4.3521292
   5        2.6513795
   2        4.3902613

    PEARSON Statistic = 9.579599
                   df = 9
              p-value = 0.3855776

Likelihood ratio test = 9.27001
                   df = 9
              p-value = 0.4127326
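The negative binomial fit reported in the SAS output can be reproduced with a few dozen lines of code. The sketch below is one possible arrangement, not a translation of negbin.sas: the function names and the convergence tolerance are assumptions, and the 2x2 system is solved by hand rather than with a matrix library. It uses method of moments starting values, the exact first and second partial derivatives, and step halving whenever a full Newton step fails to increase the log-likelihood.

```python
import math

counts = [63, 29, 12, 15, 8, 9, 5, 4, 6, 2, 3, 3, 2, 2]  # cavities 0..13

def log_lik(pi, k, counts):
    if not (0.0 < pi < 1.0) or k <= 0.0:
        return -math.inf  # outside the parameter space: forces step halving
    n = sum(counts)
    total = sum(y * c for y, c in enumerate(counts))
    ll = n * k * math.log(pi) + total * math.log(1.0 - pi)
    return ll + sum(c * (math.lgamma(y + k) - math.lgamma(k) - math.lgamma(y + 1))
                    for y, c in enumerate(counts))

def derivatives(pi, k, counts):
    """Gradient (q) and negative Hessian entries (h11, h12, h22)."""
    n = sum(counts)
    total = sum(y * c for y, c in enumerate(counts))
    s1 = sum(c * sum(1.0 / (k + j) for j in range(y)) for y, c in enumerate(counts))
    s2 = sum(c * sum(1.0 / (k + j) ** 2 for j in range(y)) for y, c in enumerate(counts))
    q = (n * k / pi - total / (1.0 - pi), s1 + n * math.log(pi))
    return q, (n * k / pi ** 2 + total / (1.0 - pi) ** 2, -n / pi, s2)

def fit_negbin(counts, max_iter=50, halvings=16, tol=1e-14):
    n = sum(counts)
    mean = sum(y * c for y, c in enumerate(counts)) / n
    var = sum(c * y * y for y, c in enumerate(counts)) / n - mean ** 2
    # method of moments start; fall back to (.95, 20) when var <= mean
    pi, k = (mean / var, mean ** 2 / (var - mean)) if var > mean else (0.95, 20.0)
    for _ in range(max_iter):
        q, (h11, h12, h22) = derivatives(pi, k, counts)
        det = h11 * h22 - h12 * h12
        step = ((h22 * q[0] - h12 * q[1]) / det, (h11 * q[1] - h12 * q[0]) / det)
        old, gamma = log_lik(pi, k, counts), 1.0
        for _ in range(halvings + 1):
            new_pi, new_k = pi + gamma * step[0], k + gamma * step[1]
            if log_lik(new_pi, new_k, counts) >= old:
                break
            gamma /= 2.0
        change = ((new_pi - pi) ** 2 + (new_k - k) ** 2) / (new_pi ** 2 + new_k ** 2)
        pi, k = new_pi, new_k
        if change < tol:
            break
    _, (h11, h12, h22) = derivatives(pi, k, counts)
    det = h11 * h22 - h12 * h12
    cov = ((h22 / det, -h12 / det), (-h12 / det, h11 / det))  # inverse of negative Hessian
    return pi, k, cov

if __name__ == "__main__":
    pi_hat, k_hat, cov = fit_negbin(counts)
    print(pi_hat, k_hat)
    print(cov)
```

The first Newton step from the method of moments values should land near (0.159, 0.448), matching STEP 1 in the SAS iteration history, which is a useful intermediate check.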

# This set of Splus code is stored
# in the file negbin.ssc
#
# This code computes maximum likelihood
# estimates for Poisson and negative
# binomial distributions and tests the
# fit of these distributions.
#
# The code includes a function for a
# modified Newton-Raphson algorithm that
# is used to maximize the log-likelihoods.
# It requires specification of functions
# to evaluate the log-likelihood and
# evaluate first and second partial
# derivatives of the log-likelihood.

# First create a number of useful functions
#
# This function is used to combine
# categories so all expected counts exceed
# some constant mb. Here mb=2 by default if
# you do not use the fourth argument of the
# function. Start at the bottom of the array.
# It may incur some roundoff error in
# cumulating expected counts.

#---FUNCTION FOR COMBINING CATEGORIES-----
#---KEEP EXPECTED COUNTS FOR COMBINED----
#---CATEGORIES ABOVE A SET LOWER BOUND: mb
#
# Start at the bottom of the array and
# combine categories until the expected
# count for the combined category is
# at least mb.

# input:
# ======
# cc -- vector of levels of a
#       categorical variable.
# x  -- vector of counts.
# ep -- vector of expected counts.
# nc -- the original number of
#       categories (levels).
# mb -- the minimum expected count that
#       each combined category should
#       have.

# output:
# =======
# k -- the number of categories after
#      combining.
# cl (cu) -- the smallest (largest) value
#      of a combined category.
# xt -- the observed count for a combined
#      category.
# ept -- the expected count for a combined
#      category.

combine<-function(cc, x, ep, nc, mb)

{

ptr <- nc

I <- c()

k <- 0

cl<-cc

cu<-cc

while(ptr > 1) {

ptrm1 <- ptr - 1

if(ep[ptr] < mb) {

ep[ptrm1] <- ep[ptrm1] + ep[ptr]

x[ptrm1] <- x[ptrm1] + x[ptr]

cu[ptrm1]<-cu[ptr]

}

else {

k <- k + 1

I[k] <- ptr

}

ptr <- ptrm1

}

if(ep[1] < mb) {

Ik <- I[k]

ep[Ik] <- ep[Ik] + ep[1]

x[Ik] <- x[Ik] + x[1]

cl[Ik]<-cl[1]

}

else {

k <- k + 1

I[k] <- 1

}

II <- I[k:1]

list(k=k, cl = cl[II], cu=cu[II],

xt = x[II], ept = ep[II])

}


# Function for evaluating the

# likelihood function

fun <- function(b,x.dat,x.cat) {

if(b[2,1] > 40) {stop(paste("beta > 40"))}

else

{f <- crossprod(x.dat, lgamma(x.cat+b[2,1])

-lgamma(x.cat+1))

f <- f + sum(x.dat)*(b[2,1]* log(b[1,1])

- lgamma(b[2,1]))

f <- f + crossprod(x.dat,x.cat)*log(1-b[1,1])}

f }

# Function for evaluating negative
# binomial probabilities

prob <- function(b, x.dat, x.cat){
pp <- exp(lgamma(x.cat+b[2,1])
      -lgamma(x.cat+1)-lgamma(b[2,1])
      +(b[2,1]*log(b[1,1]))
      +x.cat*log(1-b[1,1]))
kt <- length(pp)
pp[kt] <- 1 - sum(pp) + pp[kt]
pp }

# Function for computing first
# partial derivatives and the
# negative of the matrix of
# second partial derivatives
# of the negative binomial log-likelihood

dfun <- function(b, x.dat, x.cat)
{
xcum <- sum(x.dat)-cumsum(x.dat)+x.dat
q <- matrix(c(1,1), 2, 1)
h <- matrix(c(1, 1, 1, 1), 2, 2)
q[1,1] <- sum(x.dat)*b[2,1]/b[1,1] -
          crossprod(x.dat, x.cat)/(1-b[1,1])
q[2,1] <- sum(xcum/(x.cat+(b[2,1]-1))) -
          (xcum[1]/(b[2,1]-1)) +
          (sum(x.dat)*log(b[1,1]))
h[1,1] <- sum(x.dat)*b[2,1]/(b[1,1]^2) +
          crossprod(x.dat, x.cat)/((1-b[1,1])^2)
h[1,2] <- -sum(x.dat)/b[1,1]
h[2,1] <- h[1,2]
h[2,2] <- sum(xcum/((x.cat+(b[2,1]-1))^2))
h[2,2] <- h[2,2]-(xcum[1]/((b[2,1]-1)^2))
list(q=q, h=h)
}

# Modified Newton-Raphson Algorithm

mnr <- function(b, x.dat, x.cat,

maxit = 50, halving = 16,

conv = .000001)

{

check <- 1

iter <- 1

while(check > conv && iter < maxit+1) {

fold <- fun(b, x.dat, x.cat)

bold <- b

aa <- dfun(b, x.dat, x.cat)

hi <- solve(aa$h)

b <- bold + hi%*%aa$q

fnew <- fun(b, x.dat, x.cat)

hiter <- 1

while(fold-fnew > 0 && hiter < halving+1) {

b <- bold + 2.*((.5)^hiter)*hi%*%aa$q

fnew <- fun(b, x.dat, x.cat)

hiter <- hiter + 1

}


cat("\n", "Iteration = ", iter, " pi =", b[1,1],

" beta = ", b[2,1])

iter <- iter + 1

check <- crossprod(bold-b,bold-b)/

crossprod(bold,bold)

}

aa <- dfun(b, x.dat, x.cat)

hi <- solve(aa$h)

list(b = b, hi = hi, grad = aa$q)

}

# The data in this example are
# the smooth surface cavity data
# considered in STAT 557.
#
# Enter a list of counts for each
# of the categories from zero
# through K, the maximum number of
# cavities seen in any one child.
# All categories preceding K must
# be included, even if the observed
# count is zero. There should be K+1
# entries in the list of counts.

x.dat <- c(63, 29, 12, 15, 8, 9, 5,
           4, 6, 2, 3, 3, 2, 2)

# Enter the category values

nc <- length(x.dat)
x.cat <- 0:(nc-1)

# Compute the total count, mean,
# and variance

n <- sum(x.dat)
m.x <- crossprod(x.dat, x.cat)/n
v.x <- (crossprod(x.dat, x.cat^2) - n*m.x^2)/n

# Compute expected counts for
# the Poisson model

m.p <- (n/m.x)*exp(-m.x)*
       cumprod(m.x*(c(1,1:(length(x.dat)-1))^(-1)))

# Combine categories to keep expected
# counts above a lower bound

mb<-2
xcc <- combine(x.cat, x.dat, m.p, nc, mb)

# Compute the Pearson chi-squared
# test and p-value

x2p <- sum((xcc$xt-xcc$ept)^2/xcc$ept)
dfp <- length(xcc$ept) - 2
pvalp <- 1 - pchisq(x2p, dfp)

# Compute the G^2 statistic

g2<-0
for(i in 1:length(xcc$ept)) {
at<-0
if(xcc$xt[i] > 0)
{at<-2*xcc$xt[i]*log(xcc$xt[i]/xcc$ept[i])}
g2 <- g2+at}
pvalg <- 1 - pchisq(g2, dfp)

# Compute the Fisher deviance test statistic

fisherd <- n*v.x/m.x
dff <- n -1
pvalf <- 1 - pchisq(fisherd, dff)

# Begin computation for fitting the

# negative binomial distribution

pi <- .95

beta <- 20

if(v.x > m.x) {pi <- m.x/v.x

beta <- m.x^2/(v.x-m.x) }

# Print results

kk <- length(xcc$ept)
new2 <- matrix(c(xcc$cl, xcc$cu, xcc$xt,
                 xcc$ept), kk, 4)
cat("\n", " Results for fitting the Poisson distribution", "\n")
cat("\n", "Category Bounds Count Expected", "\n")
print(new2)
cat("\n", "         Pearson test = ", x2p)
cat("\n", "   Degrees of freedom = ", dfp)
cat("\n", "              p-value = ", pvalp, "\n")
cat(" Likelihood ratio test =", g2, "\n")
cat("                    df =", dfp, "\n")
cat("               p-value =", pvalg, "\n")
cat("\n"," Fisher deviance test = ", fisherd)
cat("\n", "   Degrees of freedom = ", dff)
cat("\n", "              p-value = ", pvalf )

cat("\n", "Results for fitting the negative binomial distribution", "\n")
cat("\n", " Method of moment estimators ")
cat("\n", "   pi = ", pi)
cat("\n", " beta = ", beta, "\n")

# Compute maximum likelihood estimates

b <- matrix(c(pi, beta), nrow=2, ncol=1)
nbr <- mnr(b, x.dat, x.cat)

cat("\n", " Maximum likelihood estimators")
cat("\n", "   pi = ", nbr$b[1])
cat("\n", " beta = ", nbr$b[2], "\n")
cat("\n", " Covariance matrix =", nbr$hi )

# Compute expected counts for the
# negative binomial model

m.nb <- sum(x.dat)*prob(nbr$b, x.dat, x.cat)

# Combine categories in the right
# tail of the distribution

nc <- length(x.dat)
xcc <- combine(x.cat, x.dat, m.nb, nc, mb)

# Compute the Pearson chi-squared
# test and p-value

x2p <- sum(((xcc$xt-xcc$ept)^2)/xcc$ept)
dfp <- length(xcc$ept) - 3
pvalp <- 1 - pchisq(x2p, dfp)

# Compute the G^2 statistic

g2<-0
for(i in 1:length(xcc$ept)) {
at<-0
if(xcc$xt[i] > 0)
{at<-2*xcc$xt[i]*log(xcc$xt[i]/xcc$ept[i])}
g2 <- g2+at}
pvalg <- 1-pchisq(g2, dfp)

# print results

kk <- length(xcc$ept)

new3 <- matrix(c(xcc$cl, xcc$cu, xcc$xt,

xcc$ept), kk, 4)

cat("\n", " Results for fitting the Negative Binomial distribution", "\n")
cat("\n", "Category Bounds Count Expected", "\n")
print(new3)
cat("\n", "          Pearson test = ", x2p)
cat("\n", "    Degrees of freedom = ", dfp)
cat("\n", "               p-value = ", pvalp)
cat("\n", "Likelihood ratio test = ", g2)
cat("\n", "                    df = ", dfp)
cat("\n", "               p-value = " , pvalg)

Results for fitting the
Poisson distribution

Category Bounds   Count   Expected
      [,1] [,2]   [,3]    [,4]
[1,]    0    0     63     13.015525
[2,]    1    1     29     32.898136
[3,]    2    2     12     41.576785
[4,]    3    3     15     35.029929
[5,]    4    4      8     22.135477
[6,]    5    5      9     11.189959
[7,]    6    6      5      4.713970
[8,]    7   13     22      2.440142

         Pearson test = 391.18
   Degrees of freedom = 6
              p-value = 0
Likelihood ratio test = 213.26
                   df = 6
              p-value = 0

 Fisher deviance test = 686.27
   Degrees of freedom = 162
              p-value = 0

Results for fitting the
negative binomial distribution

Method of moment estimators
   pi = 0.237515
 beta = 0.787354

Iteration = 1   pi = 0.159430   beta = 0.447872
Iteration = 2   pi = 0.177469   beta = 0.540373
Iteration = 3   pi = 0.187444   beta = 0.582344
Iteration = 4   pi = 0.188799   beta = 0.588264
Iteration = 5   pi = 0.188822   beta = 0.588365

Maximum likelihood estimators
   pi = 0.188822
 beta = 0.588365

Covariance matrix
0.00092745   0.00240421
0.00240421   0.00923527

Results for fitting the
Negative Binomial distribution

Category Bounds   Count   Expected
       [,1] [,2]  [,3]    [,4]
 [1,]    0    0    63     61.128330
 [2,]    1    1    29     29.174646
 [3,]    2    2    12     18.794984
 [4,]    3    3    15     13.154134
 [5,]    4    4     8      9.572269
 [6,]    5    5     9      7.125557
 [7,]    6    6     5      5.383545
 [8,]    7    7     4      4.110209
 [9,]    8    8     6      3.162555
[10,]    9   10     5      4.352129
[11,]   11   12     5      2.651380
[12,]   13   13     2      4.390261

         Pearson test = 9.5796
   Degrees of freedom = 9
              p-value = 0.3856
Likelihood ratio test = 9.2700
                   df = 9
              p-value = 0.4127

Neyman Type A distribution:

Neyman (1939, Annals of Mathematical Statistics)

Example:

The number of egg masses deposited in a specific area by a specific insect species has a Poisson($\lambda$) distribution.

Given a number of egg masses, say j, the conditional distribution of the number of insect larvae in the area is Poisson($j\phi$).

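The marginal distribution for the number of larvae, Y, in the area is

$$Pr\{Y = y\} = \sum_{j=0}^{\infty} Pr\{Y = y \mid j \text{ egg masses}\} \, Pr\{j \text{ egg masses}\}$$

$$= \begin{cases} e^{-\lambda(1 - e^{-\phi})} & \text{for } y = 0 \\[6pt] \displaystyle\sum_{j=1}^{\infty} \frac{e^{-\lambda} \lambda^{j}}{j!} \cdot \frac{e^{-j\phi} (j\phi)^{y}}{y!} & \text{for } y = 1, 2, \ldots \end{cases}$$

Moments:

$$E(Y) = \lambda\phi$$

$$V(Y) = \lambda\phi(1 + \phi) = E(Y)(1 + \phi)$$

for $\lambda > 0$ and $\phi > 0$. Here $\phi$ is called the index of clumping.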
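The Neyman Type A probabilities are easy to evaluate by truncating the sum over the number of egg masses. The sketch below is illustrative only: the function name, the truncation point `j_max`, and the parameter values lambda = 2 and phi = 1.5 are assumptions, not values from the notes. It confirms numerically that the probabilities sum to one and reproduce a mean of lambda\*phi and a variance of lambda\*phi\*(1 + phi).

```python
import math

def neyman_a_pmf(y, lam, phi, j_max=100):
    """Poisson(lam) number of egg masses, Poisson(j*phi) larvae given j masses."""
    if y == 0:
        return math.exp(-lam * (1.0 - math.exp(-phi)))
    total = 0.0
    for j in range(1, j_max + 1):
        log_masses = -lam + j * math.log(lam) - math.lgamma(j + 1)
        log_larvae = -j * phi + y * math.log(j * phi) - math.lgamma(y + 1)
        total += math.exp(log_masses + log_larvae)
    return total

def summarize(lam, phi, y_max=200):
    """Total probability, mean, and variance from the truncated pmf."""
    probs = [neyman_a_pmf(y, lam, phi) for y in range(y_max)]
    total = sum(probs)
    mean = sum(y * p for y, p in enumerate(probs))
    var = sum((y - mean) ** 2 * p for y, p in enumerate(probs))
    return total, mean, var

if __name__ == "__main__":
    lam, phi = 2.0, 1.5
    total, mean, var = summarize(lam, phi)
    print(total)                       # close to 1
    print(mean, lam * phi)             # E(Y) = lam * phi
    print(var, lam * phi * (1 + phi))  # V(Y) = lam * phi * (1 + phi)
```

The ratio of variance to mean is 1 + phi, which is why phi acts as an index of clumping.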

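Limiting forms of the distribution:

1. $\lambda$ is large, $\phi$ is not too small:

$$\frac{Y - \lambda\phi}{\sqrt{\lambda\phi(1 + \phi)}} \ \dot\sim \ N(0, 1)$$

2. $\lambda$ is small:

$$Pr\{Y = y\} \doteq \begin{cases} (1 - \lambda) + \lambda e^{-\phi} & \text{for } y = 0 \\[6pt] \lambda \, \dfrac{e^{-\phi} \phi^{y}}{y!} & y = 1, 2, \ldots \end{cases}$$

so there is a large probability that y = 0.

3. $\phi$ is small:

$$Pr\{Y = y\} \doteq \frac{(\lambda\phi)^{y} e^{-\lambda\phi}}{y!}$$

which is nearly Poisson($\lambda\phi$).

Logarithmic Series Distribution

$$Pr\{Y = y\} = \frac{\alpha\theta^{y}}{y}, \qquad y = 1, 2, \ldots$$

for $0 < \theta < 1$ and $\alpha = \dfrac{-1}{\log(1 - \theta)}$.

Moments:

$$E(Y) = \frac{\alpha\theta}{1 - \theta}$$

$$V(Y) = \frac{\alpha\theta(1 - \alpha\theta)}{(1 - \theta)^2} = E(Y) \left[ \frac{1}{1 - \theta} - E(Y) \right]$$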
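The logarithmic series formulas can be verified by direct summation. This is a sketch with hypothetical function names and an arbitrary value theta = 0.7; it only checks that the probabilities sum to one and that the mean and variance expressions agree with the series.

```python
import math

def log_series_pmf(y, theta):
    """Pr{Y = y} = alpha * theta^y / y with alpha = -1 / log(1 - theta)."""
    alpha = -1.0 / math.log(1.0 - theta)
    return alpha * math.exp(y * math.log(theta)) / y

def log_series_summary(theta, y_max=2000):
    """Total probability, mean, and variance from a truncated sum over y = 1..y_max."""
    probs = [(y, log_series_pmf(y, theta)) for y in range(1, y_max + 1)]
    total = sum(p for _, p in probs)
    mean = sum(y * p for y, p in probs)
    var = sum((y - mean) ** 2 * p for y, p in probs)
    return total, mean, var

if __name__ == "__main__":
    theta = 0.7
    alpha = -1.0 / math.log(1.0 - theta)
    total, mean, var = log_series_summary(theta)
    expected_mean = alpha * theta / (1.0 - theta)
    print(total)
    print(mean, expected_mean)
    print(var, expected_mean * (1.0 / (1.0 - theta) - expected_mean))
```

Values of theta close to 1 produce the long right tail mentioned in the motivation, so a larger `y_max` would be needed there.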

Motivation:

This distribution has a relatively "long" right tail.

R. A. Fisher (1943): number of butterfly and moth species caught in light traps

Chatfield (1966), JRSS A: numbers of items bought by consumers

Suppose the number of species represented by a single captured individual is $n_1$, and the number of species represented by k captured individuals is approximately

$$n_1 \left( \frac{\theta^{k-1}}{k} \right), \qquad k = 1, 2, \ldots$$

Then the total number of species is

$$S = \sum_{k=1}^{\infty} n_1 \left( \frac{\theta^{k-1}}{k} \right) = -\frac{n_1}{\theta} \log(1 - \theta) = \frac{n_1}{\alpha\theta}$$

and

$$\pi_k = \frac{n_1 \theta^{k-1}}{k S} = \frac{\alpha\theta^{k}}{k}$$

is the proportion of species for which k individuals will be captured.

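Extra-Binomial Variation:

Beta-Binomial Model:

Consider a sequence of n independent binary trials, where $p = Pr\{\text{success}\}$ randomly varies according to a Beta distribution with density

$$f(p) = \frac{\Gamma(\theta)}{\Gamma(\theta\pi)\,\Gamma(\theta(1-\pi))} \, p^{\theta\pi - 1} (1 - p)^{\theta(1-\pi) - 1}$$

for $\theta > 0$, $0 < \pi < 1$, and $0 < p < 1$.

Then the marginal probability of observing Y = y successes in n trials is

$$Pr\{Y = y\} = \binom{n}{y} \int_0^1 p^{y} (1 - p)^{n-y} f(p) \, dp = \frac{\Gamma(n+1)\,\Gamma(\theta)\,\Gamma(y + \theta\pi)\,\Gamma(n - y + \theta(1-\pi))}{\Gamma(y+1)\,\Gamma(n-y+1)\,\Gamma(n+\theta)\,\Gamma(\theta\pi)\,\Gamma(\theta(1-\pi))}$$

for $y = 0, 1, \ldots, n$ and $\theta > 0$ and $0 < \pi < 1$.

Moments:

$$E(Y) = n\pi$$

$$V(Y) = n\pi(1 - \pi) \left[ \frac{\theta + n}{\theta + 1} \right]$$

Dirichlet-Multinomial distribution

Suppose the conditional distribution of

$$\mathbf{Y} = \begin{bmatrix} Y_1 \\ \vdots \\ Y_I \end{bmatrix} \quad \text{given} \quad \mathbf{p} = \begin{bmatrix} p_1 \\ \vdots \\ p_I \end{bmatrix}$$

is Mult(n, $\mathbf{p}$), where

$$n = \sum_{i=1}^{I} y_i, \qquad 1 = \sum_{i=1}^{I} p_i, \quad \text{for } 0 < p_i < 1.$$

Suppose $\mathbf{p}$ varies with a Dirichlet distribution with density

$$\frac{\Gamma(\theta)}{\prod_{i=1}^{I} \Gamma(\theta\pi_i)} \prod_{i=1}^{I} p_i^{\theta\pi_i - 1},$$

where $\theta > 0$, $\pi_i > 0$, $1 = \sum_{i=1}^{I} \pi_i$, $p_i > 0$, $1 = \sum_{i=1}^{I} p_i$.

Then the unconditional distribution of $\mathbf{Y}$ is

$$Pr\left\{ \mathbf{Y} = \begin{bmatrix} y_1 \\ \vdots \\ y_I \end{bmatrix} \right\} = \frac{\Gamma(n+1)\,\Gamma(\theta)}{\Gamma(n+\theta)} \prod_{i=1}^{I} \frac{\Gamma(y_i + \theta\pi_i)}{\Gamma(\theta\pi_i)\,\Gamma(y_i + 1)}$$

where $\theta > 0$, $\pi_i > 0$, and $1 = \sum_{i=1}^{I} \pi_i$.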
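The Dirichlet-multinomial probability function is most safely evaluated on the log scale. The sketch below is an illustration under assumptions: the function names, the parameter labels `pi` (mean vector) and `theta` (precision), and the numerical values are chosen for the example. It enumerates all outcomes for a small n to confirm that the probabilities sum to one, and it checks that the first component has mean n\*pi_1 and variance n\*pi_1\*(1 - pi_1)\*(n + theta)/(1 + theta), which is the beta-binomial variance when I = 2.

```python
import math
from itertools import product

def dirmult_log_pmf(y, pi, theta):
    """Log of Gamma(n+1)Gamma(theta)/Gamma(n+theta) * prod Gamma(y_i+theta pi_i)/(Gamma(theta pi_i) y_i!)."""
    n = sum(y)
    out = math.lgamma(n + 1) + math.lgamma(theta) - math.lgamma(n + theta)
    for yi, p in zip(y, pi):
        out += math.lgamma(yi + theta * p) - math.lgamma(theta * p) - math.lgamma(yi + 1)
    return out

def first_component_summary(n, pi, theta):
    """Enumerate all count vectors with total n; return total prob, mean and variance of Y_1."""
    total = mean = second = 0.0
    for head in product(range(n + 1), repeat=len(pi) - 1):
        if sum(head) > n:
            continue
        y = head + (n - sum(head),)
        p = math.exp(dirmult_log_pmf(y, pi, theta))
        total += p
        mean += y[0] * p
        second += y[0] ** 2 * p
    return total, mean, second - mean ** 2

if __name__ == "__main__":
    n, pi, theta = 6, (0.2, 0.3, 0.5), 4.0
    total, mean, var = first_component_summary(n, pi, theta)
    print(total)
    print(mean, n * pi[0])
    print(var, n * pi[0] * (1 - pi[0]) * (n + theta) / (1 + theta))
```

As theta grows large the variance inflation factor (n + theta)/(1 + theta) approaches 1 and the model approaches the ordinary multinomial.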

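Moments:

$$E(\mathbf{Y}) = n\boldsymbol{\pi}$$

$$V(\mathbf{Y}) = \left( \frac{n + \theta}{1 + \theta} \right) n \left( \mathrm{diag}(\boldsymbol{\pi}) - \boldsymbol{\pi}\boldsymbol{\pi}' \right)$$

Since $\theta > 0$, the factor $(n + \theta)/(1 + \theta)$ is between 1 and n; it determines the amount of extra-multinomial variation.

Another extra-multinomial variation model:

Let

$$\mathbf{X} \sim \text{Mult}(1, \boldsymbol{\pi}), \quad \text{where } \boldsymbol{\pi} = \begin{bmatrix} \pi_1 \\ \vdots \\ \pi_I \end{bmatrix}.$$

Let

$$\mathbf{X}_j = \begin{cases} \mathbf{X} & \text{with probability } \rho \\[4pt] \text{an independent Mult}(1, \boldsymbol{\pi}) \text{ observation} & \text{with probability } 1 - \rho \end{cases}$$

Let

$$\mathbf{Y} = \sum_{j=1}^{n} \mathbf{X}_j$$

Then

$$E(\mathbf{Y}) = n\boldsymbol{\pi}$$

$$V(\mathbf{Y}) = [1 + \rho^2 (n - 1)] \, n \left( \mathrm{diag}(\boldsymbol{\pi}) - \boldsymbol{\pi}\boldsymbol{\pi}' \right)$$

Note that $\mathbf{Y} \sim$ Mult(n, $\boldsymbol{\pi}$) when $\rho = 0$.

$\rho$ could be considered as an "index of clumping".

Modified Power Series Distribution:

$$Pr\{Y = y\} = \frac{a(y)[g(\theta)]^{y}}{f(\theta)} \qquad \text{for } y = 0, 1, 2, \ldots$$

$|g(\theta)| < R$, where R is the radius of convergence of the series

$$f(\theta) = \sum_{y=0}^{\infty} a(y)[g(\theta)]^{y}$$

$g(\theta)$ is positive, bounded, differentiable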

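Gupta, R. C. (1974) Modified power series distributions and some of its applications, Sankhya, B35, 288-298.

Gupta, R. C. (1977) Minimum variance unbiased estimation in modified power series distribution and some of its applications, Communications in Statistics: Theory and Methods, A10, 977-991.

Greenwood, P.E. and Nikulin, M. S. (1996)

Poisson distribution:

$$a(y) = \frac{1}{y!}; \quad g(\theta) = \theta; \quad f(\theta) = e^{\theta} = \sum_{y=0}^{\infty} \frac{\theta^{y}}{y!}; \quad 0 < \theta < \infty.$$

Binomial distribution:

$$a(y) = \binom{n}{y}; \quad g(\theta) = \frac{\theta}{1 - \theta}; \quad f(\theta) = \frac{1}{(1 - \theta)^{n}}; \quad 0 < \theta < 1.$$

Negative binomial:

$$a(y) = \frac{\Gamma(k + y)}{\Gamma(y + 1)\,\Gamma(k)}; \quad g(\theta) = \theta; \quad f(\theta) = (1 - \theta)^{-k}; \quad 0 < \theta < 1.$$

Geometric distribution:

$$a(y) = 1; \quad g(\theta) = \theta; \quad f(\theta) = \frac{1}{1 - \theta}; \quad 0 < \theta < 1.$$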
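One way to see how the special cases fit the modified power series form is to code the generic probability function once and plug in each triple a(y), g(theta), f(theta). The sketch below is a hypothetical illustration: the function and family names are assumptions, and the symbol g for the series argument follows the reconstruction used in this section. Note that in the negative binomial case theta plays the role of the failure probability.

```python
import math

def mpsd_pmf(y, a, g, f, theta):
    """Generic modified power series probability a(y) * g(theta)^y / f(theta)."""
    return a(y) * g(theta) ** y / f(theta)

def poisson_family():
    return (lambda y: 1.0 / math.factorial(y),
            lambda t: t,
            lambda t: math.exp(t))

def binomial_family(n):
    return (lambda y: math.comb(n, y),
            lambda t: t / (1.0 - t),
            lambda t: (1.0 - t) ** (-n))

def negbin_family(k):
    return (lambda y: math.exp(math.lgamma(k + y) - math.lgamma(y + 1) - math.lgamma(k)),
            lambda t: t,
            lambda t: (1.0 - t) ** (-k))

if __name__ == "__main__":
    # Poisson with mean 2: compare with exp(-2) 2^3 / 3!
    print(mpsd_pmf(3, *poisson_family(), 2.0), math.exp(-2.0) * 8.0 / 6.0)
    # Binomial(5, 0.3): compare with C(5,2) 0.3^2 0.7^3
    print(mpsd_pmf(2, *binomial_family(5), 0.3), 10 * 0.3 ** 2 * 0.7 ** 3)
    # Negative binomial with k = 0.6 and failure probability 0.8 sums to one
    print(sum(mpsd_pmf(y, *negbin_family(0.6), 0.8) for y in range(400)))
```

Writing the families as (a, g, f) triples keeps the generic function unchanged when a new member is added.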

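Generalized negative binomial distribution:

$$a(y) = \frac{n\,\Gamma(n + \beta y)}{\Gamma(y + 1)\,\Gamma(n + \beta y - y + 1)}; \quad g(\theta) = \theta(1 - \theta)^{\beta - 1}; \quad f(\theta) = (1 - \theta)^{-n};$$

$$0 < \theta < 1; \quad |\theta\beta| < 1$$

Left truncated generalized logarithmic series distribution:

$$a(y) = \frac{\Gamma(\beta y)}{\Gamma(y + 1)\,\Gamma(\beta y - y + 1)} \quad \text{for } y = r, r + 1, \ldots$$

$$g(\theta) = \theta(1 - \theta)^{\beta - 1}$$

$$f(\theta) = -\log(1 - \theta) - \sum_{k=1}^{r-1} \frac{1}{\beta k} \binom{\beta k}{k} \left[ \theta(1 - \theta)^{\beta - 1} \right]^{k}$$

$0 < \theta < 1$ and $|\theta\beta| < 1$; and $r \ge 1$.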