Stat 501 Homework 1 Solutions Spring 2005


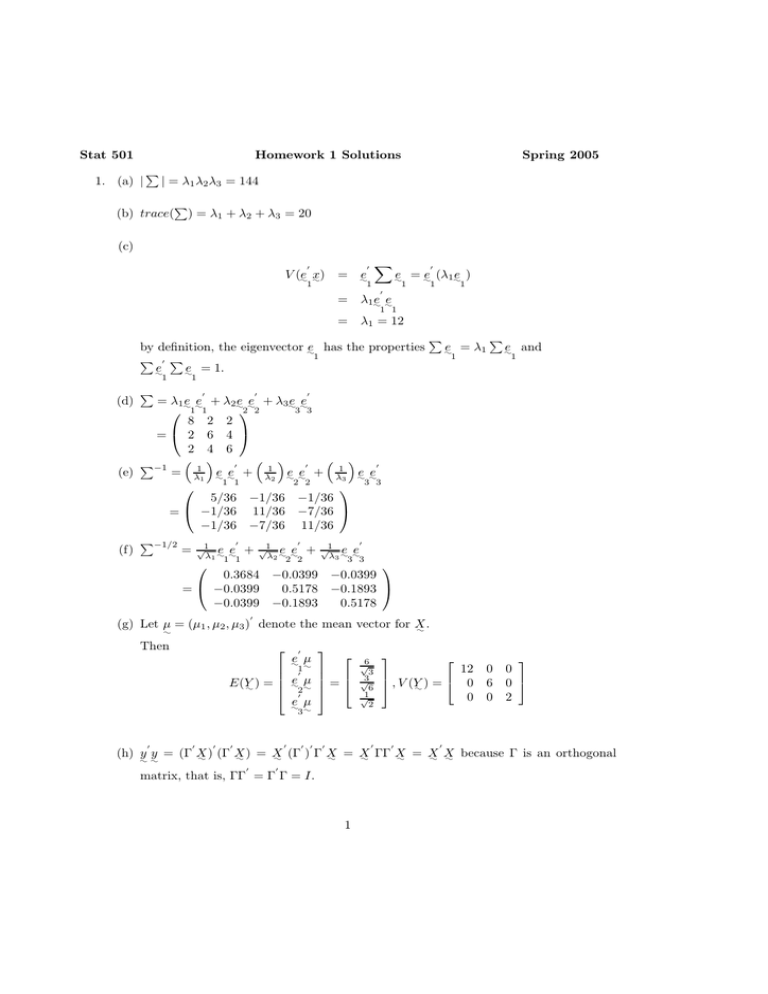

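1. (a) $|\Sigma| = \lambda_1 \lambda_2 \lambda_3 = 144$

(b) $\operatorname{trace}(\Sigma) = \lambda_1 + \lambda_2 + \lambda_3 = 20$

(c) $V(\mathbf{e}_1'\mathbf{X}) = \mathbf{e}_1'\Sigma\mathbf{e}_1 = \mathbf{e}_1'(\lambda_1\mathbf{e}_1) = \lambda_1\mathbf{e}_1'\mathbf{e}_1 = \lambda_1 = 12$, because, by definition, the eigenvector $\mathbf{e}_1$ has the properties $\Sigma\mathbf{e}_1 = \lambda_1\mathbf{e}_1$ and $\mathbf{e}_1'\mathbf{e}_1 = 1$.

(d)
$$\Sigma = \lambda_1\mathbf{e}_1\mathbf{e}_1' + \lambda_2\mathbf{e}_2\mathbf{e}_2' + \lambda_3\mathbf{e}_3\mathbf{e}_3' = \begin{pmatrix} 8 & 2 & 2 \\ 2 & 6 & 4 \\ 2 & 4 & 6 \end{pmatrix}$$

(e)
$$\Sigma^{-1} = \frac{1}{\lambda_1}\mathbf{e}_1\mathbf{e}_1' + \frac{1}{\lambda_2}\mathbf{e}_2\mathbf{e}_2' + \frac{1}{\lambda_3}\mathbf{e}_3\mathbf{e}_3' = \begin{pmatrix} 5/36 & -1/36 & -1/36 \\ -1/36 & 11/36 & -7/36 \\ -1/36 & -7/36 & 11/36 \end{pmatrix}$$

(f)
$$\Sigma^{-1/2} = \frac{1}{\sqrt{\lambda_1}}\mathbf{e}_1\mathbf{e}_1' + \frac{1}{\sqrt{\lambda_2}}\mathbf{e}_2\mathbf{e}_2' + \frac{1}{\sqrt{\lambda_3}}\mathbf{e}_3\mathbf{e}_3' = \begin{pmatrix} 0.3684 & -0.0399 & -0.0399 \\ -0.0399 & 0.5178 & -0.1893 \\ -0.0399 & -0.1893 & 0.5178 \end{pmatrix}$$

(g) Let $\boldsymbol{\mu} = (\mu_1, \mu_2, \mu_3)'$ denote the mean vector for $\mathbf{X}$. Then
$$E(\mathbf{Y}) = \begin{pmatrix} \mathbf{e}_1'\boldsymbol{\mu} \\ \mathbf{e}_2'\boldsymbol{\mu} \\ \mathbf{e}_3'\boldsymbol{\mu} \end{pmatrix} = \begin{pmatrix} 6/\sqrt{3} \\ 3/\sqrt{6} \\ 1/\sqrt{2} \end{pmatrix}, \qquad V(\mathbf{Y}) = \begin{pmatrix} 12 & 0 & 0 \\ 0 & 6 & 0 \\ 0 & 0 & 2 \end{pmatrix}$$

(h) $\mathbf{Y}'\mathbf{Y} = (\Gamma'\mathbf{X})'(\Gamma'\mathbf{X}) = \mathbf{X}'(\Gamma')'\Gamma'\mathbf{X} = \mathbf{X}'\Gamma\Gamma'\mathbf{X} = \mathbf{X}'\mathbf{X}$ because $\Gamma$ is an orthogonal matrix, that is, $\Gamma\Gamma' = \Gamma'\Gamma = I$.

(i) i. Yes, use result 2A.9.(e) on page 97 of the text and $|I| = 1$. Then
$$|\Sigma_Y| = |\Gamma'\Sigma_X\Gamma| = |\Gamma'||\Sigma_X||\Gamma| = |\Gamma'||\Gamma||\Sigma_X| = |\Gamma'\Gamma||\Sigma_X| = |I||\Sigma_X|.$$
This makes intuitive sense because $|\Sigma_Y|$ and $|\Sigma_X|$ are proportional to the square of the volume of the same ellipse. Rotations do not change volume.

ii. Yes, use result 2A.12.(c) on page 98 of the text. Then
$$\operatorname{trace}(\Sigma_Y) = \operatorname{trace}(\Gamma'\Sigma_X\Gamma) = \operatorname{trace}(\Gamma\Gamma'\Sigma_X) = \operatorname{trace}(I\Sigma_X) = \operatorname{trace}(\Sigma_X).$$

iii. No, $\Sigma_Y$ is a diagonal matrix for any $\Sigma_X$, regardless of whether or not $\Sigma_X$ is a diagonal matrix.

iv. Yes, note that $\Sigma_X = \Gamma\Sigma_Y\Gamma'$ is the spectral decomposition of $\Sigma_X$. Then $\Sigma_X^{-1} = \Gamma\Sigma_Y^{-1}\Gamma'$ and
$$\Gamma'\Sigma_X^{-1}\Gamma = \Gamma'(\Gamma\Sigma_Y^{-1}\Gamma')\Gamma = (\Gamma'\Gamma)\Sigma_Y^{-1}(\Gamma'\Gamma) = I\,\Sigma_Y^{-1}I = \Sigma_Y^{-1}.$$
Consequently,
$$\begin{aligned}
(\mathbf{Y} - \boldsymbol{\mu}_Y)'\Sigma_Y^{-1}(\mathbf{Y} - \boldsymbol{\mu}_Y)
&= (\Gamma'\mathbf{X} - \Gamma'\boldsymbol{\mu}_X)'\,\Gamma'\Sigma_X^{-1}\Gamma\,(\Gamma'\mathbf{X} - \Gamma'\boldsymbol{\mu}_X) \\
&= [\Gamma'(\mathbf{X} - \boldsymbol{\mu}_X)]'\,\Gamma'\Sigma_X^{-1}\Gamma\,[\Gamma'(\mathbf{X} - \boldsymbol{\mu}_X)] \\
&= (\mathbf{X} - \boldsymbol{\mu}_X)'(\Gamma')'\Gamma'\Sigma_X^{-1}\Gamma\Gamma'(\mathbf{X} - \boldsymbol{\mu}_X) \\
&= (\mathbf{X} - \boldsymbol{\mu}_X)'(\Gamma\Gamma')\Sigma_X^{-1}(\Gamma\Gamma')(\mathbf{X} - \boldsymbol{\mu}_X) \\
&= (\mathbf{X} - \boldsymbol{\mu}_X)'\Sigma_X^{-1}(\mathbf{X} - \boldsymbol{\mu}_X)
\end{aligned}$$

v.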
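Yes,
$$\begin{aligned}
(\mathbf{Y} - \boldsymbol{\mu}_Y)'(\mathbf{Y} - \boldsymbol{\mu}_Y)
&= (\Gamma'\mathbf{X} - \Gamma'\boldsymbol{\mu}_X)'(\Gamma'\mathbf{X} - \Gamma'\boldsymbol{\mu}_X) \\
&= [\Gamma'(\mathbf{X} - \boldsymbol{\mu}_X)]'[\Gamma'(\mathbf{X} - \boldsymbol{\mu}_X)] \\
&= (\mathbf{X} - \boldsymbol{\mu}_X)'(\Gamma')'\Gamma'(\mathbf{X} - \boldsymbol{\mu}_X) \\
&= (\mathbf{X} - \boldsymbol{\mu}_X)'(\mathbf{X} - \boldsymbol{\mu}_X)
\end{aligned}$$
because $\Gamma\Gamma' = I$. Hence, both Euclidean distance and Mahalanobis distance are invariant to rotations.

2. (a)
$$f(x_1, x_2) = \frac{1}{2\pi|\Sigma|^{1/2}} \exp\left\{-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})'\Sigma^{-1}(\mathbf{x} - \boldsymbol{\mu})\right\}, \qquad |\Sigma| = 36, \quad \Sigma^{-1} = \frac{1}{36}\begin{pmatrix} 9 & 3 \\ 3 & 5 \end{pmatrix}$$
$$\begin{aligned}
f(x_1, x_2) &= \frac{1}{2\pi\sqrt{36}} \exp\left\{-\frac{1}{2}\left(\mathbf{x} - \begin{pmatrix} 2 \\ 1 \end{pmatrix}\right)'\frac{1}{36}\begin{pmatrix} 9 & 3 \\ 3 & 5 \end{pmatrix}\left(\mathbf{x} - \begin{pmatrix} 2 \\ 1 \end{pmatrix}\right)\right\} \\
&= \frac{1}{12\pi} \exp\left\{-\frac{5}{8}\left[\left(\frac{x_1 - 2}{\sqrt{5}}\right)^2 + \frac{2}{\sqrt{5}}\left(\frac{x_1 - 2}{\sqrt{5}}\right)\left(\frac{x_2 - 1}{3}\right) + \left(\frac{x_2 - 1}{3}\right)^2\right]\right\}
\end{aligned}$$

(b) $\lambda_1 = 7 + \sqrt{13} = 10.6056$, $\lambda_2 = 7 - \sqrt{13} = 3.3944$,
$$\mathbf{e}_1 = \begin{pmatrix} 0.4719 \\ -0.8817 \end{pmatrix}, \qquad \mathbf{e}_2 = \begin{pmatrix} 0.8817 \\ 0.4719 \end{pmatrix}$$

(c) $|\Sigma| = 36$, $\operatorname{trace}(\Sigma) = 14$

(d) The ellipse given by
$$\frac{1}{36}\left[9(x_1 - 2)^2 + 6(x_1 - 2)(x_2 - 1) + 5(x_2 - 1)^2\right] = \chi^2_{(2),.50} = 1.386$$

(e) $\sqrt{\chi^2_{(2),.50}\,\lambda_1} = 3.83$, $\sqrt{\chi^2_{(2),.50}\,\lambda_2} = 2.17$

(f) area $= \pi\chi^2_{(2),.50}|\Sigma|^{1/2} = (\pi)(1.39)(6) = 26.20$

(g) area $= \pi\chi^2_{(2),.05}|\Sigma|^{1/2} = (\pi)(5.99)(6) = 112.91$

3.
$$\boldsymbol{\mu} = \begin{pmatrix} 1 \\ 4 \end{pmatrix}, \qquad \Sigma = \begin{pmatrix} 5/36 & 1/12 \\ 1/12 & 1/4 \end{pmatrix}$$

4. (a) (i) and (v) display independent pairs of random variables; you simply have to check whether the covariance is zero in each case.

(b)
$$\begin{pmatrix} X_1 \\ X_2 \end{pmatrix} \sim N\left(\begin{pmatrix} -3 \\ 1 \end{pmatrix}, \begin{pmatrix} 4 & 0 \\ 0 & 5 \end{pmatrix}\right)$$

(c) A bivariate normal distribution with mean vector
$$\begin{pmatrix} -3 \\ 1 \end{pmatrix} + \begin{pmatrix} -1 \\ 0 \end{pmatrix}[2]^{-1}(x_3 + 1) = \begin{pmatrix} -\frac{x_3}{2} - \frac{7}{2} \\ 1 \end{pmatrix}$$
and covariance matrix
$$\begin{pmatrix} 4 & 0 \\ 0 & 5 \end{pmatrix} - \begin{pmatrix} -1 \\ 0 \end{pmatrix}[2]^{-1}\begin{pmatrix} -1 & 0 \end{pmatrix} = \begin{pmatrix} 7/2 & 0 \\ 0 & 5 \end{pmatrix}$$

(d) $\rho_{12} = 0$ and $\rho_{12\cdot 3} = 0$

(e) Since $X_2$ is independent of $(X_1, X_3)'$, $X_2 \mid x_1, x_3 \sim N(1, 5)$.

5. (a) $Z_1 \sim N(-26, 77)$

(b)
$$\begin{pmatrix} Z_1 \\ Z_2 \end{pmatrix} = \begin{pmatrix} 3 & -5 & 0 & 1 \\ 2 & 0 & -1 & 3 \end{pmatrix}\mathbf{X} \sim N\left(\begin{pmatrix} -26 \\ -5 \end{pmatrix}, \begin{pmatrix} 77 & 59 \\ 59 & 89 \end{pmatrix}\right)$$

(c) A bivariate normal distribution with mean vector
$$\begin{pmatrix} -4 \\ 1 \end{pmatrix} + \begin{pmatrix} 2 \\ -2 \end{pmatrix}\frac{1}{9}(1 - 0) = \begin{pmatrix} -4 \\ 1 \end{pmatrix} + \begin{pmatrix} 2/9 \\ -2/9 \end{pmatrix} = \begin{pmatrix} -34/9 \\ 7/9 \end{pmatrix}$$
and covariance matrix
$$\begin{pmatrix} 4 & 2 \\ 2 & 4 \end{pmatrix} - \begin{pmatrix} 2 \\ -2 \end{pmatrix}\frac{1}{9}\begin{pmatrix} 2 & -2 \end{pmatrix} = \begin{pmatrix} 4 & 2 \\ 2 & 4 \end{pmatrix} - \begin{pmatrix} 4/9 & -4/9 \\ -4/9 & 4/9 \end{pmatrix} = \begin{pmatrix} 32/9 & 22/9 \\ 22/9 & 32/9 \end{pmatrix}$$

(d)
$$\rho_{14\cdot 23} = \frac{5/2}{\sqrt{7/2 \times 7/2}} = \frac{5}{7} = 0.714$$

6. (a)
$$\operatorname{cov}(\mathbf{X} - \mathbf{Y}, \mathbf{X} + \mathbf{Y}) = \operatorname{cov}(\mathbf{X}, \mathbf{X}) + \operatorname{cov}(\mathbf{X}, \mathbf{Y}) - \operatorname{cov}(\mathbf{Y}, \mathbf{X}) - \operatorname{cov}(\mathbf{Y}, \mathbf{Y}) = \Sigma_1 - \Sigma_2 = \begin{pmatrix} 4 & -4 \\ -4 & 0 \end{pmatrix}$$

(b) No, because $\operatorname{cov}(\mathbf{X} - \mathbf{Y}, \mathbf{X} + \mathbf{Y})$ is not a matrix of zeros.

(c) Start with the fact that $\mathbf{X}$ and $\mathbf{Y}$ are independent.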
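Then, from result 4.5(c) on page 160 of the text,
$$\begin{pmatrix} \mathbf{X} \\ \mathbf{Y} \end{pmatrix} \sim N\left(\begin{pmatrix} 2 \\ 6 \\ 3 \\ 4 \end{pmatrix}, \begin{pmatrix} 8 & -2 & 0 & 0 \\ -2 & 4 & 0 & 0 \\ 0 & 0 & 4 & 2 \\ 0 & 0 & 2 & 4 \end{pmatrix}\right),$$
and then, from result 4.3 on page 157 of the text,
$$\begin{pmatrix} \mathbf{X} - \mathbf{Y} \\ \mathbf{X} + \mathbf{Y} \end{pmatrix} = \begin{pmatrix} I & -I \\ I & I \end{pmatrix}\begin{pmatrix} \mathbf{X} \\ \mathbf{Y} \end{pmatrix} \sim N\left(\begin{pmatrix} -1 \\ 2 \\ 5 \\ 10 \end{pmatrix}, \begin{pmatrix} 12 & 0 & 4 & -4 \\ 0 & 8 & -4 & 0 \\ 4 & -4 & 12 & 0 \\ -4 & 0 & 0 & 8 \end{pmatrix}\right)$$

7. (a) $d(\mathbf{p}, \mathbf{q}) = d(\mathbf{q}, \mathbf{p})$ for any $A$. Also, $d(\mathbf{p}, \mathbf{q}) = [(\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q})]^{1/2} > 0$ for any $(\mathbf{p} - \mathbf{q}) \neq \mathbf{0}$ if $A$ is positive definite, and it is always true that $d(\mathbf{p}, \mathbf{p}) = 0$. Now we only have to consider property (iv). It is easy to show that (iv) is true when $A$ is both positive definite and symmetric. Then the spectral decomposition $A = \Gamma\Lambda\Gamma'$ exists, where $\Lambda$ is a diagonal matrix containing the eigenvalues of $A$ and the columns of $\Gamma$ are the corresponding eigenvectors. Then
$$A^{1/2} = \Gamma\begin{pmatrix} \lambda_1^{1/2} & & \\ & \ddots & \\ & & \lambda_p^{1/2} \end{pmatrix}\Gamma'$$
is also symmetric and positive definite, and $A^{1/2}A^{1/2} = A$. Define $\mathbf{d} = A^{1/2}(\mathbf{r} - \mathbf{q})$ and $\mathbf{a}' = (\mathbf{p} - \mathbf{r})'A^{1/2}$. Use the Cauchy-Schwarz inequality (page 80 in the text) to show that
$$[(\mathbf{p} - \mathbf{r})'A(\mathbf{r} - \mathbf{q})]^2 = (\mathbf{a}'\mathbf{d})^2 \le (\mathbf{a}'\mathbf{a})(\mathbf{d}'\mathbf{d}) = [d(\mathbf{p}, \mathbf{r})]^2[d(\mathbf{r}, \mathbf{q})]^2.$$
Then,
$$\begin{aligned}
[d(\mathbf{p}, \mathbf{q})]^2 &= (\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q}) \\
&= (\mathbf{p} - \mathbf{r} + \mathbf{r} - \mathbf{q})'A(\mathbf{p} - \mathbf{r} + \mathbf{r} - \mathbf{q}) \\
&= (\mathbf{p} - \mathbf{r})'A(\mathbf{p} - \mathbf{r}) + (\mathbf{r} - \mathbf{q})'A(\mathbf{p} - \mathbf{r}) + (\mathbf{p} - \mathbf{r})'A(\mathbf{r} - \mathbf{q}) + (\mathbf{r} - \mathbf{q})'A(\mathbf{r} - \mathbf{q}) \\
&= [d(\mathbf{p}, \mathbf{r})]^2 + [d(\mathbf{r}, \mathbf{q})]^2 + 2(\mathbf{p} - \mathbf{r})'A(\mathbf{r} - \mathbf{q}) \\
&\le [d(\mathbf{p}, \mathbf{r})]^2 + [d(\mathbf{r}, \mathbf{q})]^2 + 2\,d(\mathbf{p}, \mathbf{r})\,d(\mathbf{r}, \mathbf{q}) \\
&= [d(\mathbf{p}, \mathbf{r}) + d(\mathbf{r}, \mathbf{q})]^2
\end{aligned}$$
Hence, (iv) is established for any positive definite symmetric matrix $A$.

(b) To show that (iv) holds for any positive definite matrix $A$, note that $[d(\mathbf{p}, \mathbf{q})]^2 = (\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q})$ is a scalar, so it is equal to its transpose.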
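Hence,
$$(\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q}) = [(\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q})]' = (\mathbf{p} - \mathbf{q})'A'(\mathbf{p} - \mathbf{q}).$$
Then,
$$\begin{aligned}
[d(\mathbf{p}, \mathbf{q})]^2 &= \frac{1}{2}\left[(\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q}) + (\mathbf{p} - \mathbf{q})'A'(\mathbf{p} - \mathbf{q})\right] \\
&= (\mathbf{p} - \mathbf{q})'\left[\frac{1}{2}(A + A')\right](\mathbf{p} - \mathbf{q})
\end{aligned}$$
satisfies (iv) by the previous argument because $\frac{1}{2}(A + A')$ is symmetric and positive definite. Consequently, $A$ does not have to be symmetric, but it must be positive definite for $(\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q})$ to satisfy properties (i)-(iv) of a distance measure. If $A$ were not positive definite, some $\mathbf{p} - \mathbf{q} \neq \mathbf{0}$ would exist for which $(\mathbf{p} - \mathbf{q})'A(\mathbf{p} - \mathbf{q}) \le 0$, and this would violate condition (ii).

(c) Simply apply the triangle inequality to each component of $(\mathbf{p} - \mathbf{q})$, i.e.,
$$\begin{aligned}
d(\mathbf{p}, \mathbf{q}) &= \max(|p_1 - q_1|, |p_2 - q_2|) \\
&= \max(|p_1 - r_1 + r_1 - q_1|, |p_2 - r_2 + r_2 - q_2|) \\
&\le \max(|p_1 - r_1| + |r_1 - q_1|, |p_2 - r_2| + |r_2 - q_2|) \\
&\le \max\big(|p_1 - r_1| + \max(|r_1 - q_1|, |r_2 - q_2|),\; |p_2 - r_2| + \max(|r_1 - q_1|, |r_2 - q_2|)\big) \\
&\le \max(|p_1 - r_1|, |p_2 - r_2|) + \max(|r_1 - q_1|, |r_2 - q_2|) \\
&= d(\mathbf{p}, \mathbf{r}) + d(\mathbf{r}, \mathbf{q})
\end{aligned}$$

8. (a) i.
$$\begin{pmatrix} I_{q\times q} & B \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}\begin{pmatrix} I_{q\times q} & -B \\ 0_{r\times q} & I_{r\times r} \end{pmatrix} = \begin{pmatrix} I_{q\times q} & -I_{q\times q}B_{q\times r} + B_{q\times r}I_{r\times r} \\ 0_{r\times q}I_{q\times q} + I_{r\times r}0_{r\times q} & I_{r\times r} \end{pmatrix} = \begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix} = I_{(q+r)\times(q+r)}$$

ii.
$$\begin{pmatrix} I_{r\times r} & 0_{r\times q} \\ B & I_{q\times q} \end{pmatrix}\begin{pmatrix} I_{r\times r} & 0_{r\times q} \\ -B & I_{q\times q} \end{pmatrix} = \begin{pmatrix} I_{r\times r}I_{r\times r} - 0_{r\times q}B_{q\times r} & I_{r\times r}0_{r\times q} + 0_{r\times q}I_{q\times q} \\ B_{q\times r}I_{r\times r} - I_{q\times q}B_{q\times r} & B_{q\times r}0_{r\times q} + I_{q\times q}I_{q\times q} \end{pmatrix} = \begin{pmatrix} I_{r\times r} & 0_{r\times q} \\ 0_{q\times r} & I_{q\times q} \end{pmatrix} = I_{(r+q)\times(r+q)}$$

(b) The determinant of a block triangular matrix can be obtained by simply multiplying the determinants of the diagonal blocks. Consequently,
$$\begin{vmatrix} I_{q\times q} & B \\ 0 & I_{r\times r} \end{vmatrix} = |I_{q\times q}||I_{r\times r}| = 1 \qquad \text{and} \qquad \begin{vmatrix} I_{r\times r} & 0 \\ B & I_{q\times q} \end{vmatrix} = |I_{r\times r}||I_{q\times q}| = 1.$$
To formally prove the latter case, start with the matrix with $q = 1$ and expand across the first row to compute the determinant. The determinant of each minor in the expansion is multiplied by zero, except for the determinant of the $r \times r$ identity matrix, which is multiplied by one. Consequently, the determinant is one.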
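Using an inductive proof, assume the result is true for any $q$ and use a similar expansion across the first row to show that it is also true when the upper left block has dimension $q + 1$.

(c)
$$\begin{aligned}
&\begin{pmatrix} I_{q\times q} & -A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix} \\
&\quad= \begin{pmatrix} I_{q\times q}A_{11} - A_{12}A_{22}^{-1}A_{21} & I_{q\times q}A_{12} - A_{12}A_{22}^{-1}A_{22} \\ 0_{r\times q}A_{11} + I_{r\times r}A_{21} & 0_{r\times q}A_{12} + I_{r\times r}A_{22} \end{pmatrix}\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix} \\
&\quad= \begin{pmatrix} A_{11} - A_{12}A_{22}^{-1}A_{21} & 0 \\ A_{21} & A_{22} \end{pmatrix}\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix} \\
&\quad= \begin{pmatrix} A_{11} - A_{12}A_{22}^{-1}A_{21} & 0_{q\times r} \\ 0_{r\times q} & A_{22} \end{pmatrix}
\end{aligned}$$

(d) From 8(c),
$$\begin{pmatrix} A_{11} - A_{12}A_{22}^{-1}A_{21} & 0_{q\times r} \\ 0_{r\times q} & A_{22} \end{pmatrix} = \begin{pmatrix} I_{q\times q} & -A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix}.$$
The determinant of the left-hand side is $|A_{22}||A_{11} - A_{12}A_{22}^{-1}A_{21}|$ for $|A_{22}| \neq 0$. The determinant of the right-hand side is $1 \times |A| \times 1 = |A|$ from 8(b). Hence, $|A| = |A_{22}||A_{11} - A_{12}A_{22}^{-1}A_{21}|$ for $|A_{22}| \neq 0$.

(e) Take the inverse on both sides of 8(c). Then
$$\left[\begin{pmatrix} I_{q\times q} & -A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix}\right]^{-1} = \begin{pmatrix} A_{11} - A_{12}A_{22}^{-1}A_{21} & 0_{q\times r} \\ 0_{r\times q} & A_{22} \end{pmatrix}^{-1},$$
that is,
$$\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix}^{-1}\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}^{-1}\begin{pmatrix} I_{q\times q} & -A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}^{-1} = \begin{pmatrix} A_{11} - A_{12}A_{22}^{-1}A_{21} & 0_{q\times r} \\ 0_{r\times q} & A_{22} \end{pmatrix}^{-1}.$$
Then we easily get
$$A^{-1} = \begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix}\begin{pmatrix} A_{11} - A_{12}A_{22}^{-1}A_{21} & 0_{q\times r} \\ 0_{r\times q} & A_{22} \end{pmatrix}^{-1}\begin{pmatrix} I_{q\times q} & -A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix},$$
because, from the result of 8(a), we know that
$$\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix}^{-1} = \begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ A_{22}^{-1}A_{21} & I_{r\times r} \end{pmatrix} \qquad \text{and} \qquad \begin{pmatrix} I_{q\times q} & -A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}^{-1} = \begin{pmatrix} I_{q\times q} & A_{12}A_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}.$$

9. Define
$$\mathbf{X} = \begin{pmatrix} \mathbf{X}_1 \\ \mathbf{X}_2 \end{pmatrix}, \qquad \boldsymbol{\mu} = \begin{pmatrix} \boldsymbol{\mu}_1 \\ \boldsymbol{\mu}_2 \end{pmatrix}, \qquad \Sigma = \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{pmatrix},$$
where $\mathbf{X}_1$ is $q \times 1$, $\mathbf{X}_2$ is $r \times 1$, and $p = q + r$. Using 8(d) to factor $|\Sigma|$,
$$\begin{aligned}
f_{\mathbf{X}}(\mathbf{x}) &= \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}} \exp\left\{-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})'\Sigma^{-1}(\mathbf{x} - \boldsymbol{\mu})\right\} \\
&= \frac{1}{(2\pi)^{q/2}(2\pi)^{r/2}|\Sigma_{22}|^{1/2}|\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}|^{1/2}} \exp\left\{-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})'\Sigma^{-1}(\mathbf{x} - \boldsymbol{\mu})\right\}
\end{aligned}$$

Expand the quadratic form using 8(e):
$$\begin{aligned}
(\mathbf{x} - \boldsymbol{\mu})'\Sigma^{-1}(\mathbf{x} - \boldsymbol{\mu})
&= \begin{pmatrix} \mathbf{x}_1 - \boldsymbol{\mu}_1 \\ \mathbf{x}_2 - \boldsymbol{\mu}_2 \end{pmatrix}'\begin{pmatrix} I_{q\times q} & 0_{q\times r} \\ -\Sigma_{22}^{-1}\Sigma_{21} & I_{r\times r} \end{pmatrix}\begin{pmatrix} (\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21})^{-1} & 0_{q\times r} \\ 0_{r\times q} & \Sigma_{22}^{-1} \end{pmatrix}\begin{pmatrix} I_{q\times q} & -\Sigma_{12}\Sigma_{22}^{-1} \\ 0_{r\times q} & I_{r\times r} \end{pmatrix}\begin{pmatrix} \mathbf{x}_1 - \boldsymbol{\mu}_1 \\ \mathbf{x}_2 - \boldsymbol{\mu}_2 \end{pmatrix} \\
&= \begin{pmatrix} (\mathbf{x}_1 - \boldsymbol{\mu}_1) - \Sigma_{12}\Sigma_{22}^{-1}(\mathbf{x}_2 - \boldsymbol{\mu}_2) \\ \mathbf{x}_2 - \boldsymbol{\mu}_2 \end{pmatrix}'\begin{pmatrix} (\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21})^{-1} & 0_{q\times r} \\ 0_{r\times q} & \Sigma_{22}^{-1} \end{pmatrix}\begin{pmatrix} (\mathbf{x}_1 - \boldsymbol{\mu}_1) - \Sigma_{12}\Sigma_{22}^{-1}(\mathbf{x}_2 - \boldsymbol{\mu}_2) \\ \mathbf{x}_2 - \boldsymbol{\mu}_2 \end{pmatrix} \\
&= [(\mathbf{x}_1 - \boldsymbol{\mu}_1) - \Sigma_{12}\Sigma_{22}^{-1}(\mathbf{x}_2 - \boldsymbol{\mu}_2)]'(\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21})^{-1}[(\mathbf{x}_1 - \boldsymbol{\mu}_1) - \Sigma_{12}\Sigma_{22}^{-1}(\mathbf{x}_2 - \boldsymbol{\mu}_2)] + (\mathbf{x}_2 - \boldsymbol{\mu}_2)'\Sigma_{22}^{-1}(\mathbf{x}_2 - \boldsymbol{\mu}_2) \\
&= B_1 + B_2
\end{aligned}$$

Then
$$\begin{aligned}
f_{\mathbf{X}}(\mathbf{x}) &= \frac{1}{(2\pi)^{q/2}(2\pi)^{r/2}|\Sigma_{22}|^{1/2}|\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}|^{1/2}} \exp\left\{-\frac{1}{2}(B_1 + B_2)\right\} \\
&= \frac{1}{(2\pi)^{q/2}|\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}|^{1/2}} \exp\left\{-\frac{1}{2}B_1\right\} \times \frac{1}{(2\pi)^{r/2}|\Sigma_{22}|^{1/2}} \exp\left\{-\frac{1}{2}B_2\right\} \\
&= f_{\mathbf{X}_1 | \mathbf{x}_2}(\mathbf{x}_1)\, f_{\mathbf{X}_2}(\mathbf{x}_2)
\end{aligned}$$

If $\mathbf{X}_1$ and $\mathbf{X}_2$ are uncorrelated, then $\Sigma_{12} = 0$, so $B_1$ reduces to $(\mathbf{x}_1 - \boldsymbol{\mu}_1)'\Sigma_{11}^{-1}(\mathbf{x}_1 - \boldsymbol{\mu}_1)$ and $f_{\mathbf{X}_1 | \mathbf{X}_2 = \mathbf{x}_2}(\mathbf{x}_1) = f_{\mathbf{X}_1}(\mathbf{x}_1)$. Therefore, $f_{\mathbf{X}}(\mathbf{x}) = f_{\mathbf{X}_1}(\mathbf{x}_1) f_{\mathbf{X}_2}(\mathbf{x}_2)$, which implies that $\mathbf{X}_1$ and $\mathbf{X}_2$ are independent.

The review of properties for partitioned matrices in problem 8 was done to give you the tools to factor the density for a multivariate normal distribution as a product of a marginal density and a conditional density. You may find some of these matrix properties to be useful in future work.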
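
As a quick numerical cross-check of the partitioned-matrix results in 8(d) and 8(e), the sketch below applies them to the 3 × 3 covariance matrix from Problem 1, split with q = 1 and r = 2. The choice of partition and all variable names are illustrative assumptions, not part of the original solutions. Exact rational arithmetic is used so the output can be compared directly with the answers to 1(a) and 1(e).

```python
from fractions import Fraction as F

# Covariance matrix from Problem 1, partitioned with q = 1 and r = 2
# (this partition is an illustrative assumption).
A11 = F(8)                              # 1 x 1 upper-left block
A12 = [F(2), F(2)]                      # 1 x 2 block; A21 is its transpose
A22 = [[F(6), F(4)], [F(4), F(6)]]      # 2 x 2 lower-right block

# Inverse of the 2 x 2 block A22 via the adjugate formula.
det_A22 = A22[0][0] * A22[1][1] - A22[0][1] * A22[1][0]
A22_inv = [[A22[1][1] / det_A22, -A22[0][1] / det_A22],
           [-A22[1][0] / det_A22, A22[0][0] / det_A22]]

# Schur complement A11 - A12 A22^{-1} A21 (a scalar because q = 1).
A22_inv_A21 = [sum(A22_inv[i][j] * A12[j] for j in range(2)) for i in range(2)]
schur = A11 - sum(A12[i] * A22_inv_A21[i] for i in range(2))

# 8(d): |A| = |A22| |A11 - A12 A22^{-1} A21|
det_A = det_A22 * schur

# 8(e): the upper-left block of A^{-1} is the inverse of the Schur complement.
top_left_of_inverse = 1 / schur

print(det_A, top_left_of_inverse)       # prints: 144 5/36
```

The printed values, 144 and 5/36, agree with the determinant in 1(a) and the upper-left entry of the inverse covariance matrix in 1(e).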