Journal of Inequalities in Pure and

Applied Mathematics

http://jipam.vu.edu.au/

Volume 7, Issue 5, Article 187, 2006

A NOTE ON THE MARTINGALE INEQUALITY

YU MIAO

C OLLEGE OF M ATHEMATICS AND I NFORMATION S CIENCE

H ENAN N ORMAL U NIVERSITY

453007 H ENAN , C HINA .

yumiao728@yahoo.com.cn

Received 26 April, 2006; accepted 29 September, 2006

Communicated by F. Qi

A BSTRACT. In this paper, we will establish a martingale inequality, which extends the classic

Hoeffding inequality in some sense. In addition, our inequality improves the results of Lee and

Su [7] (2002) in some cases.

Key words and phrases: Bounded Martingale; Deviation bound; Hoeffding inequality; Martingale inequality.

2000 Mathematics Subject Classification. 60G42, 60E15.

1. I NTRODUCTION

Given a probability space (Ω, F, P) and a filtration F0 = {φ, Ω} ⊂ F1 ⊂ · · · ⊂ Fn = F, an

integrable random variable X ∈ L1 (Ω, F, P) can be written as

n

n

X

X

X − EX =

E(X|Fk ) − E(X|Fk−1 ) :=

dk ,

k=1

k=1

where dk is a martingale difference. An early inequality result is the following. If for any k,

there exist constants ak and bk , such that P(dk ∈ [ak , bk ]) = 1, then for any t > 0, we have the

following classic Hoeffding inequality (cf. [5])

2t2

.

P(|X − EX| ≥ t) ≤ 2 exp − Pn

2

k=1 (bk − ak )

De la Peña [2, 3] discussed a general class of exponential inequalities for bounded martingale

difference and ratios by the decoupling theory. Andreas [9] gave exponential deviation inequalities for one-sided bounded martingale difference sequences. In the case of the length of longest

increasing subsequences and the independence number of sparse random graphs, Lee and Su

[7] have utilised the symmetry argument in the martingale inequality.

ISSN (electronic): 1443-5756

c 2006 Victoria University. All rights reserved.

I wish to thank Y.Q. Chen and K. Zheng of Henan Normal University for many suggestions and helpful discussions during our writing of

this paper. I am also grateful to the conscientious anonymous referee for his very serious and valuable report. His suggestions and comments

have largely contributed to Section 3.

123-06

2

Y U M IAO

For these phenomena of measure concentration, the usual procedure in analysis is via martingale methods, information-theoretic methods and Talagrand’s induction method (see [6, 8,

10]). In most applications, X is a function of n independent (possibly vector valued) random variables ξ1 , ξ2 , . . . , ξn and the filtration is Fk = σ(ξ1 , ξ2 , . . . , ξn ). In this case we let

0

0

0

{ξ1 , ξ2 , . . . , ξn } be an independent copy of {ξ1 , ξ2 , . . . , ξn } and define

0

0

0

0

0

∆k = X(ξ1 , ξ2 , . . . , ξk−1 , ξk , ξk+1 , . . . , ξn ) − X(ξ1 , ξ2 , . . . , ξk−1 , ξk , ξk+1 , . . . , ξn ).

Let dk = E(∆k |Fk ). By definition, ∆k is the change in the value of X resulting from a

change only in one coordinate. So, if there exists a constant ck , such that |∆k | ≤ ck a.s.,

then |dk | ≤ ck a.s. and we can apply the Hoeffding inequality to obtain a tail bound for X.

However, in many cases, ck grows too rapidly and so the Hoeffding inequality does not provide

any reasonable tail bound. For improving the Hoeffding inequality, Lee and Su [7] obtained the

following reasonable tail bound for X.

Theorem 1.1 (See Theorem 1 in Lee and Su [7]). Assume that there exists a positive and finite

constant c such that for all k ≤ n, |∆k | ≤ c a.s. and there exist 0 < pk < 1 such that for each

k ≤ n, P(0 < |∆k | ≤ c|Fk−1 ) ≤ pk a.s. Then, for every t > 0,

t2

(1.1)

P(|X − EX| ≥ t) ≤ 2 exp − 2 Pn

.

2c

k=1 pk + 2ct/3

In this paper, we will demonstrate that if c Pnt pk is larger, especially if c Pnt pk ≥ 2.83e2.83 ,

k=1

k=1

we can obtain a more precise inequality than (1.1). In Section 2, we will give the main results

and show our inequalities are more precise than (1.1) in some cases. In Section 3, we apply our

results to the longest increasing subsequence.

2. M AIN R ESULTS

In this section, we will continue to use the notions of Section 1.

Theorem 2.1. Let X be an integrable random variable defined on a probability space (Ω, F, P)

which is in fact a function of n independent random variables ξ1 , ξ2 , . . . , ξn . We define Fk , ∆k ,

dk as in Section 1. Assume that there exist positive and finite constants ck such that for all

k ≤ n,

|∆k | ≤ ck

(2.1)

a.s.

and there exist 0 < pk < 1 such that for each k ≤ n,

P(0 < |∆k | ≤ ck |Fk−1 ) ≤ pk

(2.2)

a.s.

Then, for every t > 0,

(2.3)

t2

P(|X − EX| ≥ t) ≤ 2 exp − Pn

,

2 k=1 esck c2k pk

where s satisfies the equation s =

that s ≥ b, we will obtain

(2.4)

Pn

k=1

t

.

esck c2k pk

In addition, if there exists a constant b, such

P(|X − EX| ≥ t) ≤ 2e−bt/2 .

Proof. In fact, we only prove the form P(X − EX ≥ t), and the other form P(X − EX ≤ −t)

is similar. By Jensen’s inequality, for any s > 0, we have

E(esdk |Fk−1 ) = E(esE(∆k |Fk ) |Fk−1 ) ≤ E(es∆k |Fk−1 ),

J. Inequal. Pure and Appl. Math., 7(5) Art. 187, 2006

a.e.

http://jipam.vu.edu.au/

M ARTINGALE I NEQUALITY

3

From (2.1), (2.2) and the following elementary inequality,

∀x ∈ R,

ex ≤ 1 + x +

|x|2 |x|

e ,

2

we can obtain

s∆k

E(e

|s∆k |2 |s∆k |

|Fk−1 ) ≤ E 1 + s∆k +

e

|Fk−1

2

s2

≤ 1 + esck E(∆2k |Fk−1 )

2

s2 sck 2

≤ 1 + e ck pk

2 2

s sck 2

≤ exp

e ck pk

a.e.

2

It is easy to check that

X − EX =

n

X

dk .

k=1

Thus, by Markov’s inequality, for any s > 0,

P(X − EX ≥ t) ≤ e−st Ees(X−EX)

Pn

≤ e−st Ees k=1 dk

h Pn−1

i

≤ e−st E es k=1 dk E esdn |Fn−1

Pn−1

s2 scn 2

≤ exp −st + e cn pn Ees k=1 dk

2

≤ ···

(

)

n

s2 X sck 2

≤ exp −st +

e ck pk .

2 k=1

If we could take

t

,

sck c2 p

k k

k=1 e

P

(2.3) can be shown. In fact, putting fn (s) = nk=1 esck sc2k pk , it is easy to see that for any n,

fn (s) is a continuous function in s, and is nondecreasing on [0, ∞) with fn (0) = 0. Thus, for

any t > 0, there exists only one solution that satisfies equation s = Pn etsck c2 pk . The remainder

k=1

k

of the proof is straightforward.

(2.5)

s = Pn

Remark 2.2. It is easy to see that the solution of the equation s = Pn etsck c2 pk could not be

k=1

k

given concretely. However, we can use the formula (2.4), by obtaining a low bound of s in

many cases.

Corollary 2.3. Under the conditions of Theorem 1.1, we assume that for all 1 ≤ k ≤ n, ck = c.

Then, for every t > 0,

t2

(2.6)

P(|X − EX| ≥ t) ≤ 2 exp − sc 2 Pn

,

2e c

k=1 pk

J. Inequal. Pure and Appl. Math., 7(5) Art. 187, 2006

http://jipam.vu.edu.au/

4

Y U M IAO

where s satisfies the equation s =

that s ≥ b, we obtain

esc c2

t

P

n

k=1

pk

. In addition, if there exists a constant b, such

P(|X − EX| ≥ t) ≤ 2e−bt/2 .

(2.7)

Next, we will show that, in some cases, the condition s ≥ b in Corollary 2.3 could be obtained

and our results are better than inequality (1.1).

Proposition 2.4. Under the conditions of Corollary 2.3,

(R1 ): Assuming that for any given t > 0,

(2.8)

c

t

Pn

k=1 pk

≥ 2.83e2.83 ,

then we have the following inequality

P(|X − EX| ≥ t) ≤ 2e−2.83t/(2c) ,

(2.9)

and in this case, our bound e−2.83t/(2c) is better than (1.1).

(R2 ): Conversely, if for any given t > 0,

(2.10)

c

t

Pn

k=1

pk

≤ 2.82e2.82 ,

then (1.1) is better than our result.

Proof. By s =

esc c2

t

P

n

k=1

pk

and

c

Pnt

k=1

pk

≥ 2.83e2.83 , it is easy to see that

scesc ≥ 2.83e2.83

and

sc ≥ 2.83.

From Corollary 2.3, (2.9) can be obtained.

Next we will show that our bound e−2.83t/(2c) is better than (1.1). For c Pnt pk ≥ 3e3 , we

k=1

know

t

t

Pn

(2.11)

(1/c − s/3) < s,

s = sc 2 Pn

;

c k=1 pk

e c

k=1 pk

t

ts

t

⇔ 2 Pn

< Pn

+ s,

sesc = 2 Pn

;

c

3c k=1 pk

c

k=1 pk

k=1 pk

ts

t

⇔ sesc < Pn

+ s,

sesc = 2 Pn

;

3c k=1 pk

c

k=1 pk

t

t

⇔ esc < Pn

+ 1,

sesc = 2 Pn

;

3c k=1 pk

c

k=1 pk

n

X

t

⇔ c(esc − 1)

pk < t/3,

sesc = 2 Pn

;

c

p

k

k=1

k=1

⇔ 2c2 esc

n

X

k=1

pk < 2c2

n

X

pk + 2ct/3,

k=1

sesc =

c2

t

Pn

k=1

Thus, by comparing (2.6) and (1.1), the proof of (R1 ) is given under the condition

3e3 . To proving remainders, by (2.11), we only show the following relations

c Pnt pk (1/c − s/3) ≥ s, if 2.83e2.83 ≤ c Pnt pk < 3e3 ;

k=1

k=1

(2.12)

Pnt

(1/c − s/3) ≤ s, if c Pnt pk < 2.82e2.82 .

c

pk

k=1

J. Inequal. Pure and Appl. Math., 7(5) Art. 187, 2006

c

pk

.

Pnt

k=1

pk

≥

k=1

http://jipam.vu.edu.au/

M ARTINGALE I NEQUALITY

5

5

0

−5

−10

−15

−20

−1

−0.5

0

0.5

1

1.5

2

2.5

3

3.5

4

Figure 1

Since s =

(2.13)

esc c2

t

P

n

k=1

pk

, (2.12) is equivalent to the following relations

cesc (1/c − s/3) ≥ 1, if 2.83e2.83 ≤

cesc (1/c − s/3) ≤ 1, if

c

Pnt

k=1

pk

c

Pnt

k=1

pk

< 3e3 ;

< 2.82e2.82 .

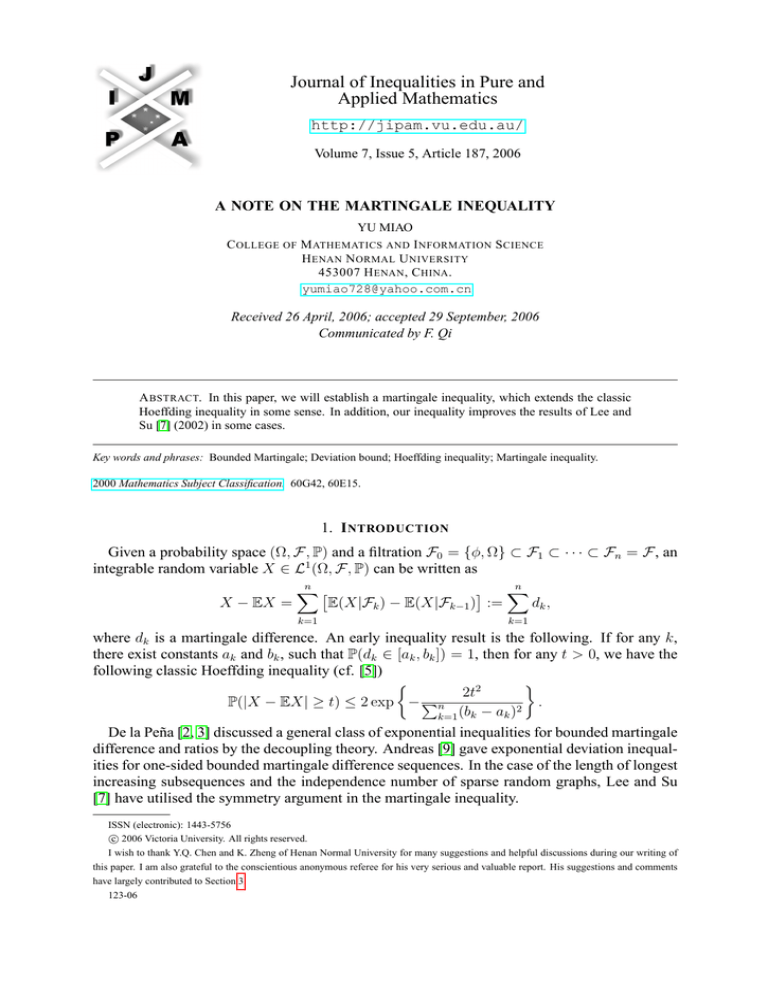

Letting f (s) = cesc (1/c − s/3) − 1 and sc = x, we have f (x) = ex (1 − x/3) − 1. It is not

difficult to check that f (x) is an increasing function in [0, 2.82] and a decreasing function in

[2.83, ∞) (or see Figure 1). And f (0) = 0, f (x0 ) = 0, where x0 ∈ [2.82, 2.83]. The rest is

obvious.

Remark 2.5. In the above proposition, though the bounds 2.82e2.82 and 2.83e2.83 are coarser,

we can easily determine which inequalities are a little sharper by using these bounds.

Remark 2.6. The above results show that for given n (resp. t), our inequality is more precise

in the case of sufficiently large t (resp. small n). However, in many cases, we need computer

power to use our inequality. For example, assuming c Pnt pk = B, where B is given, then we

k=1

often need to control the solution of the equation xex = B.

3. T HE L ONGEST I NCREASING S UBSEQUENCE

In this section, we discuss the longest increasing subsequence as in Lee and Su [7] (2002) and

show our results are little sharper. Consider the symmetric group Sn of permutations π on the

number 1, 2, . . . , n, equipped with the uniform probability measure. Given a permutation π =

(π(1), π(2), . . . , π(n)), an increasing subsequence i1 , i2 , . . . , ik is a subsequence of 1, 2, . . . , n

such that

i1 < i2 < · · · < ik , π(i1 ) < π(i2 ) < · · · < π(ik ).

We write Ln (π) for the length of longest increasing subsequences of π.

Let Ui = (Xi , Yi ), i = 1, 2, . . . , n, be a sequence of i.i.d. uniform sample on the unit square

[0, 1]2 . Ui1 , Ui2 , . . . , Uik is called a monotone increasing chain of height k if

Xij < Xij +1 , Yij < Yij +1 for j = 1, 2, . . . , k − 1.

Define Ln (U ) to be the maximum height of the chains in the sample U1 , U2 , . . . , Un .

By Hammersley [4] (1972) and Aldous and Diaconis [1] (1999), the following facts are

known:

J. Inequal. Pure and Appl. Math., 7(5) Art. 187, 2006

http://jipam.vu.edu.au/

6

Y U M IAO

(F1 ): Ln (π) has the same distribution as Ln (U ).

n (π)

(F2 ): L√

→ 2, in probability and in mean.

n

0

0

0

Let {U1 , U2 , . . . , Un } be an independent copy of {U1 , U2 , . . . , Un }. It is easy to see that,

letting

0

0

0

0

0

∆k = Ln (U1 , . . . , Uk−1 , Uk , Uk+1 , . . . , Un ) − Ln (U1 , . . . , Uk−1 , Uk , Uk+1 , . . . , Un )

∆k takes values only +1, 0, −1. Moreover, since E(∆k |Fk−1 ), where Fk−1 = σ(U1 , U2 , . . . , Uk−1 ),

we have

P(∆k = +1|Fk−1 ) = P(∆k = −1|Fk−1 ).

Letting pk = 2ELn−k+1 (Uk , Uk+1 , . . . , Un )/(n − k + 1), from Lee and Su [7] (2002), there is

the following fact:

(F3 ): P(∆k = +1|Fk−1 ) ≤ pk /2.

For the longest increasing subsequence, we have the following result.

Theorem 3.1. There exists a constant δ < 1/2, such that for all sufficiently large n and any

r > 0,

δrn log n

.

(3.1)

P(|Ln (U ) − ELn (U )| > rn) ≤ 2 exp −

2

Proof. For any r > 0 and sufficiently large n, s in Corollary 2.3 needs to satisfy the equation

s = es Prn

. Since

n

k=1 pk

1

√ ELn (U ) → 2 as n → ∞,

n

we have

n

n

X

1 X ELk (U )

−1/2

√

→ 4 as n → ∞, i.e., n

pk → 4.

k

n k=1

k=1

√

By the equation s = es Prn

, we know that for sufficiently large n, ses = O( n). Thus there

n

p

k

k=1

exists a constant δ < 1/2, such that ses > eδ log n δ log n, i.e., s ≥ δ log n. By Corollary 2.3, we

have the result.

Remark 3.2. By Proposition 2.4, we know our results are sharper than the ones in Lee and Su

[7] to a certainty. Lee and Su [7] gave the following result by an application of inequality (1.1).

Theorem LS. Given any ε > 0, for all sufficiently large n and any t > 0,

t2

√

(3.2)

P (|Ln (π) − ELn (π)| ≥ t) ≤ 2 −

.

(16 + ε) n + 2t/3

Here if taking t = rn, then P (|Ln (π) − ELn (π)| ≥ rn) ≤ O(e−n ), which is coarser than

(3.1)

R EFERENCES

[1] D.J. ALDOUS AND P. DIACONIS, Longest increasing subsequences: From patience sorting to the

Baik-Deift-Johansson theorem, Bull. Amer. Math. Soc., 36 (1999), 413–432.

[2] V.H. DE LA PEÑA, A bound on the moment generating function of a sum of dependent variables

with an application to simple random sampling without replacement, Ann. Inst. H. Poincaré Probab.

Staticst., 30 (1994), 197–211.

J. Inequal. Pure and Appl. Math., 7(5) Art. 187, 2006

http://jipam.vu.edu.au/

M ARTINGALE I NEQUALITY

7

[3] V.H. DE LA PEÑA, A general class of exponential inequalities for martingales and ratios, Ann.

Probab., 27 (1999), 537–564.

[4] J.M. HAMMERSLEY, A few seedlings of research, Proceedings of the Sixth Berkeley Symposium

on Mathematical and Statistical Probability, University of California Press, Berkeley, CA, (1972),

345–394.

[5] W. HOEFFDING, Probability inequalities for sums of bounded random variables, J. Amer. Statist.

Assoc., 58 (1963), 12–20.

[6] M. LEDOUX, Isoperimetry and gaussian analysis, Lectures on Probability Theory and Statistics,

Ecole d’Et’e de Probabilités de St-Flour XXIV-1994, P. Bernard (Editor), LNM 1648, SpringerVerlag, Berlin, 1996, 165–294.

[7] S. LEE AND Z.G. SU, The symmetry in the martingale inequality, Stat. Probab. Letters, 56 (2002),

83–91.

[8] P. MASSART, About the constants in Talagrand’s concentration inequalities for empirical processes, Ann. Probab., 28(2) (2000), 863–884.

[9] A. MAURER, A bound on the deviation probability for sums of non-negative random variables,

J. Inequal. Pure. Appl. Math., 4(1) (2003), Art. 15. [ONLINE: http://jipam.vu.edu.au/

article.php?sid=251].

[10] M. TALAGRAND, Concentration of measure and isoperimetric inequalities in product spaces,

Publ. Math. del’IHES, 81 (1995), 73–205.

J. Inequal. Pure and Appl. Math., 7(5) Art. 187, 2006

http://jipam.vu.edu.au/