A GOAL-ORIENTED DESIGN EVALUATION FRAMEWORK

FOR DECISION MAKING UNDER UNCERTAINTY

by

JUN BEOM KIM

Bachelor of Science, Aerospace Engineering

Seoul National University, Seoul, Korea, 1992

Master of Science, Mechanical Engineering

Massachusetts Institute of Technology, Cambridge, MA, 1995

Submitted to the Department of Mechanical Engineering

in Partial Fulfillment of the Requirements for the Degree of

DOCTOR OF PHILOSOPHY IN MECHANICAL ENGINEERING

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 1999

© 1999 Massachusetts Institute of Technology

All Rights Reserved

Signature of Author............................................

Department of Mechanical Engineering

May 9, 1999

Certified by........................................

David R. Wallace

Esther and Harold E. Edgerton Associate Professor of Mechanical Engineering

Thesis Supervisor

Accepted by......................................................

Ain A. Sonin

Chairman, Department Committee on Graduate Students

To my father for his perseverance

and

To my mother for her dedication

A GOAL-ORIENTED DESIGN EVALUATION FRAMEWORK

FOR DECISION MAKING UNDER UNCERTAINTY

by

JUN BEOM KIM

Submitted to the Department of Mechanical Engineering on May 9, 1999

in Partial Fulfillment of the Requirements for the Degree of

Doctor of Philosophy in Mechanical Engineering

ABSTRACT

This thesis aims to provide a supportive mathematical decision framework for use during

the process of designing products. This thesis is motivated by: (a) the lack of descriptive

design decision aid tools used in design practice and (b) the necessity to emphasize the

role of time-variability and uncertainty in a decision science context.

The research provides a set of prescriptive and intuitive decision aid tools that will help

designers in evaluating uncertain, evolving designs in the design process. When

integrated as part of a design process, the decision framework can guide designers into

the right development direction in the fluid design process.

A probabilistic acceptability-based decision analysis model is built upon characterizing

design as a specification satisfaction process. The decision model is mathematically

defined and extended into a comprehensive goal-oriented design evaluation framework.

The goal-oriented evaluation framework provides a set of tools that constitute a coherent

value-based decision analysis system under uncertainty. Its four components are: (a) the

construction of multi-dimensional acceptability preference functions capturing

uncertainties in a designer's subjective judgment; (b) a new goal setting method, based

upon a discrete Markov chain, to help designers quantify product targets early in the

design process; (c) an uncertainty metric, based upon mean semi-variance analysis,

enabling designers to cope with uncertainties of single events by providing measures of

expectation, risk, and opportunity; and (d) a data aggregation model for merging

different and uncertain expert opinions about a design's engineering performance.

These four components are intended to form a comprehensive and practical decision

framework based upon the notion of goal, enabling product designers to measure

uncertain designs in a systematic manner to make better decisions throughout the product

design process.

Doctoral Committee : Professor David R. Wallace (Chairman)

Professor Seth Lloyd

Professor Kevin N. Otto

ACKNOWLEDGEMENTS

This is the culmination of my four years' research at the MIT Computer-aided Design

Laboratory in the field of design and decision theory.

My appreciation goes to my advisor Professor David R. Wallace. Countless times, he

patiently listened to my thoughts and provided me with much critical and constructive

feedback throughout the course of the research. I especially thank him for the trust and

encouragement that he has shown me in all of our meetings.

I would also like to thank my committee members: Professor Kevin Otto for his

feedback on a few issues and Professor Seth Lloyd for the discussions in shaping two of

the topics presented in this thesis. I also thank Dr. Abi Sarkar in the Mathematics

Department for his input in shaping Chapter 6 of this thesis.

I would like to mention all of my friends at CADLAB and in the MIT Korean community.

Some of the old members at CADLAB: Paul, Nicola, Krish, Ashley, and Chun Ho.

Current members at CADLAB: Nick, Ben, Shaun M., Shaun A., Manuel, Jaehyun, Jeff,

Pricilla, Chris, and Bill: don't stay here too long. You deserve a window.

The Korean community at MIT has been the main source of relaxation and refreshment

throughout the long days at MIT. To name a few: Shinsuk, DongSik, HunWook,

DongHyun, Taejung, JuneHee, SungHwan, KunDong, JaeHyun, SangJun, Steve,

SokWoo. Without you guys, my life in the States would have been so boring.

Finally, I would like to express my deepest gratitude to my parents. Their unconditional

love and support throughout my life have shaped me and my thoughts. My brother is the

MAN. For all the support and encouragement that he has shown me while I have been

away, I cannot thank him enough. We have been the best of friends and will be as we go

along through the rest of our lives. I am so blessed to be a part of such a family.

One is one's own refuge, who else could it be?

- Buddha


TABLE OF CONTENTS

ABSTR A CT ..........................................................................................................................................................

5

TABLE O F C O N TEN TS ....................................................................................................................................

9

LIST O F FIG U RES ...........................................................................................................................................

13

LIST O F TA BLES .............................................................................................................................................

15

1.IN TR OD U C TION ...............................................................................................................................--.....--.

17

...............

M OTIVATION...............................................................................................................

..................................................................................................

AND

DELIVERABLE

THESIS G OAL

1.1

1.2

Goal-orientedpreferencefunction .....................................................................................

1.2.1

Go al settin g ..................................................................................................................................

1.2 .1.1

Preference function construction..............................................................................................

1.2.1.2

Decisions underdesign evolution and changing uncertainty...........................................

1.2.2

Uncertainty M easure....................................................................................................................21

1.2.2.1

M ultiple estimate aggregation................................................................................................

1.2.2.2

THESIS O RGANIZATION ....................................................................................................................

1.3

2. BA CK GR O U N D ............................................................................................................................................

2.1

2.2

Design D ecision Making....................................................................................................

D ecision M aking as an Integral Part of D esign Process .................................................

RELATED W ORK ................................................................................................................................

Qualitative D ecision Support Tools ..................................................................................

2.4.1

P u gh ch art.....................................................................................................................................

2 .4 .1.1

House of Quality ..........................................................................................................................

2.4.1.2

Q uantitativeD ecision Support Tools ................................................................................

2.4.2

Figure of M erit.............................................................................................................................

2.4.2.1

M ethod of Imprecision ............................................................................................................

2.4.2.2

2.4.2.3

2.4.2.4

2.4.3

2.4.4

Utility Theory...............................................................................................................................

Acceptability Model ....................................................................................................................

Other Decision Aid Tools....................................................................................................

D iscussion...............................................................................................................................

SUMM ARY .........................................................................................................................................

3. ACCEPTABILITY-BASED EVALUATION MODEL ............................................................................

3.2

3.2.1

25

26

27

2.3.2

3.1

22

22

D esign.....................................................................................................................................

D ecision Analysis ...................................................................................................................

DECISION A NALYSIS IN DESIGN.......................................................................................................

2.5

21

21

21

25

26

2.3.1

2.4

20

.........................---.

O VERVIEW ........................................................................................................

D ESIGN, DECISION M AKING, AND DESIGN PROCESS...................................................................

2.2.1

2.2.2

2.3

--- 17

19

28

28

29

31

32

32

32

34

34

35

36

39

42

43

44

45

O VERVIEW ........................................................................................................................................

A GOAL-ORIENTED APPROACH.........................................................................................................

45

45

Goalprogramming .................................................................................................................

46

Massachusetts Institute of Technology - Computer-aidedDesign Laboratory

10

Goal-baseddesign evaluationframework..........................................................................

3.2.2

A SPIRATION AND REJECTION LEVELS...........................................................................................

3.3

D efinition and Formulation ...............................................................................................

Absolute Scale of Aspiration and Rejection Levels............................................................

3.3.1

3.3.2

3.4

O NE D IM ENSIONAL A CCEPTABILITY FUNCTION..............................................................................

Formulation............................................................................................................................

Operation : Lottery Method...............................................................................................

3.4.1

3.4.2

M ULTI DIMENSIONAL A CCEPTABILITY FUNCTION .......................................................................

3.5

Mutual PreferentialIndependence.....................................................................................

Two-dim ensionalAcceptability Function............................................................................

3.5.1

3.5.2

3.5.2.1

3 .5.2.2

47

49

51

51

52

53

53

55

Formulation..................................................................................................................................55

So lutio n ........................................................................................................................................

56

58

D iscussion...............................................................................................................................

58

SUMM ARY .........................................................................................................................................

59

3.5.4

4. GOAL SETTING USING DYNAMIC PROBABILISTIC MODEL......................................................

O VERVIEW ........................................................................................................................................

4.1

47

N -dimensional Acceptability Function..............................................................................

3.5.3

3.6

46

61

61

4.1.1

Goal-basedM ethod................................................................................................................

62

4.1.2

Problem Statement .................................................................................................................

62

4.1.3

Approach ................................................................................................................................

A DISCRETE M ARKOV CHAIN : A STOCHASTIC PROCESS...............................................................

4.2.1

M arkov Properties.................................................................................................................

Transition Probabilitiesand M atrix...................................................................................

4.2.2

D YNAM IC PROBABILISTIC GOAL SETTING M ODEL .......................................................................

4.3

4.3.1

OperationalProcedure......................................................................................................

4.2

4.3.2

4.3.3

Identification of Candidate States ......................................................................................

Assessm ent of Individual States..........................................................................................

4.3.3.1

4.3.3.2

4.3.3.3

Local Probabilities .......................................................................................................................

M odeling Assumption on the Local Probabilities ..................................................................

Interpretation of Subjective Probabilities ...............................................................................

M arkov Chain Construction...............................................................................................

4.3.4

4.3.4.1

4.3.4.2

4.3.5

4.4.2

4.6

67

67

67

68

71

72

Decision Chain.............................................................................................................................72

Transition M atrix Construction................................................................................................

74

Limiting Probabilitiesand their Interpretation.................................................................

75

O peratio n ......................................................................................................................................

4 .3 .5 .1

4.3.5.2

Existence of Limiting Probabilities.........................................................................................

Interpretation of Limiting Probabilities .................................................................................

4.3.5.3

4.4

POST-A NALYSIS................................................................................................................................

4.4.1

Convergence Rate ..................................................................................................................

4.5

63

65

65

65

66

66

Statistical Test ........................................................................................................................

A PPLICATION EXAMPLE ...................................................................................................................

75

77

79

80

80

82

84

4.5.1

4.5.2

4 .5.2 .1

Purpose...................................................................................................................................

Procedure...............................................................................................................................

S e t-u p ............................................................................................................................................

84

85

85

4 .5 .2 .2

4.5.3

P articip an ts ...................................................................................................................................

G oal-setting Software ........................................................................................................

85

86

4.5.4

Result ......................................................................................................................................

87

4 .5 .4 .1

P ercep tio n .....................................................................................................................................

4.5.4.2

M easurement Outcome................................................................................................................88

SUMM ARY .........................................................................................................................................

5. DECISION MAKING FOR SINGLE EVENTS........................................................................................

5.1

5.2

O VERVIEW ........................................................................................................................................

EXPECTATION-BASED DECISIONS ..................................................................................................

5.2.1

5.2.2

Framework .............................................................................................................................

Allais paradox........................................................................................................................

Massachusetts Institute of Technology - Computer-aidedDesign Laboratory

87

88

91

91

92

92

92

11

Th e E xperim ent............................................................................................................................

5 .2 .2 .1

Alternative analysis......................................................................................................................94

5.2.2.2

Its Implication to Design Decision M aking .............................................................................

5.2.2.3

U NCERTAINTY CLASSIFICATION ...................................................................................................

5.3

Type I Uncertainty : Repeatable Event ..............................................................................

Type II Uncertainty : Single Event .....................................................................................

D iscussion...............................................................................................................................

5.3.1

5.3.2

5.3.3

DECISION MAKING UNDER TYPE II UNCERTAINTY........................................................................

5.4

92

95

98

98

98

99

99

100

101

102

5.4.1

5.4.2

5.4.3

M ean-varianceanalysis in finance theory ..........................................................................

Quantificationof D ispersion...............................................................................................

Opportunity and Risk ...........................................................................................................

5.4.3.1

5 .4 .3 .2

5.4.3.3

5.4.4

Bi-directional measurement.......................................................................................................102

D efin itio ns..................................................................................................................................10

Quantification.............................................................................................................................103

Interpretationof Opportunity/Risk in evolving design process..........................................

104

5.4.5

Discussion: Risk attitude......................................................................................................

106

5.5

EXAM PLE : ALLAIS PARADOX REVISITED.......................................................................................

107

Pre-Analysis .........................................................................................................................

First Gam e: L, Ivs. L1 .......................................................................................................

Second Game : L2 , vs. L2 ....................................................................................................

107

5.5.1

5.5.2

5.5.3

SUMM ARY .......................................................................................................................................

5.6

3

108

109

109

6. DATA A G GR EGATIO N M ODEL............................................................................................................111

OVERVIEW ......................................................................................................................................

6. 1

Problemfrom Information-Context.....................................................................................

6.1.1

RELATED RESEARCH ......................................................................................................................

6.2

Point Aggregate Model ........................................................................................................

ProbabilityD istributionAggregate M odel .........................................................................

6.2.1

6.2.2

PROBABILITY M ERGING MECHANISM ............................................................................................

6.3

I11

112

113

113

114

115

115

Approach: Probabilitym ixture............................................................................................

Analysis of difference among the Estimates........................................................................115

118

M erging with variance-difference.......................................................................................

6.3.1

6.3.2

6.3.3

6.3.3.1

6.3.3.2

Prerequisite: N-sample average................................................................................................

M odeling Assumption ...............................................................................................................

119

119

6.3.3.3

Pseudo-Sampling Size ...............................................................................................................

120

6.3.3.4

6.3.3.5

121

Confidence quantified in terms of Pseudo sample size............................................................

Pseudo Sampling Size in Probability M ixture M odel..............................................................121

6.3.4

6.3.4.1

6.3.5

6.3.6

M echanism for merging estimates with different means ....................................................

M odeling Assumption ...............................................................................................................

Quantificationof Variability among estimates...................................................................

A Complete Aggregate Model..............................................................................................125

EXAMPLE ........................................................................................................................................

SUMM ARY .......................................................................................................................................

6.4

6.5

122

122

123

125

126

7. APPLICA TIO N EX AM PLES....................................................................................................................127

7.1

7.2

OVERVIEW ......................................................................................................................................

A CCEPTABILITY AS AN INTEGRAL DECISION TOOL IN DOME........................................................

127

128

D OME system and Acceptability component......................................................................

D OME Components.............................................................................................................128

D ecision Support Component ..............................................................................................

128

7.2.1

7.2.2

7.2.3

7.2.3.1

7.2.3.2

7.2.3.3

7.2.4

An integratedModel.............................................................................................................

A CCEPTABILITY A S AN ONLINE COMMERCE DECISION GUIDE ....................................................

7.3

7.3.1

7.3.1.1

129

Acceptability Setting M odule....................................................................................................129

Criterion M odule........................................................................................................................130

Aggregator Module....................................................................................................................130

Background...........................................................................................................................

Shopping Decision Attribute Classification .............................................................................

Massachusetts Institute of Technology - Computer-aidedDesign Laboratory

131

133

134

134

12

7 .3.1.2

7.3.1.3

7.3.1.4

C urrent Tools ................................................................

7.3.2

..................................................

7.3.2.1

Constraint Satisfaction Problem.................................................................137

7.3.2.2

Utility Theory..........................................................---..........-.

7.3.2.3

Comparison of Decision Tools............................................................139

7.3.3

7.4

. -----------..............................

...... ...--- ..

O peration ............................................................-. --...----------------........................

Online M arket Trend ........................................................----....

- .......... -----... . .........................

D ecision Environm ent ....................................................-

System Realization........................................................................

. ----.. ------------............................

-----------.....................

135

136

136

137

138

140

140

. - - - --..................................

...... .. .

A rchitecture..............................................................-7.3.3.1

142

--.......................

-----..

--..............

Im plem entation R esult......................................................

7.3.3.2

143

..........................................................---..---.--.--...................

SUMMARY

8. CONCLUSIONS ...............................................-----.......--.-------------------...............................................

8.1

8.2

8.2.1

8.2.2

8.2.3

-----. ----------------------...........................

SUMM ARY OF THESIS ...........................................................CONTRIBUTIONS .............................................................................................................................

145

147

MathematicalBasisfor Acceptability M odel......................................................................

A Formal Goal Setting M odel..............................................................................................

Classificationof Uncertaintiesin Design Process.............................................................

147

148

148

RECOMMENDATIONS FOR FUTURE WORK ..............................................................

8.3

8.3.1

8.3.2

8.3.3

145

Dynamic Decision Model in Design Iteration Context.......................................................

Multidimensional Goal Setting Model ............................................................

Extension of Goal Setting Model as an Estimation Tool....................................................

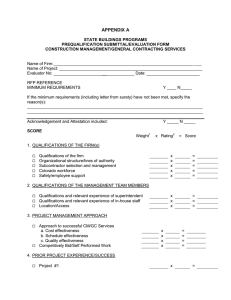

APPENDIX A ..............................-..-.--..-..--.-------.----------------------------------------...............................................

149

149

149

150

151

APPENDIX B...............................-....---.------.-----------------------------------------------...............................................153

BIBLIOGRAPHY ...................................--------...----------------------------------------------...............................................

Massachusetts Institute of Technology - Computer-aidedDesign Laboratory

157

13

LIST OF FIGURES

Figure 1.1 Design iteration for a two-attribute decision problem over time ..... 18
Figure 1.2 Research elements in the proposed goal-oriented design evaluation framework ..... 20
Figure 2.1 Three main components of design ..... 26
Figure 2.2 Design Divergence and convergence ..... 30
Figure 2.3 An Evaluation matrix in Pugh chart ..... 32
Figure 2.4 Main component of the House of Quality ..... 33
Figure 2.5 A Lottery Method for utility function construction ..... 36
Figure 2.6 A conditional lottery for utility independence ..... 37
Figure 2.7 Recycling acceptability function ..... 40
Figure 2.8 Recycling cost acceptability with uncertainty ..... 40
Figure 3.1 A utility function for an attribute X ..... 48
Figure 3.2 Acceptability function for attribute X in a two attribute problem (x, y) ..... 49
Figure 3.3 Conceptual comparison of locally defined preference functions and acceptability functions ..... 50
Figure 3.4 A reference lottery for evaluating intermediate values for a single design attribute ..... 53
Figure 4.1 Saaty's ratio measurement in AHP ..... 64
Figure 4.2 Break-down of the suggested dynamic probability model ..... 66
Figure 4.3 Qualitative relationship between knowledge and uncertainty ..... 69
Figure 4.4 Elicitation of local probabilities ..... 70
Figure 4.5 Chains in the dynamic probability model. (T, s_i) represents an assessment of T against s_i ..... 73
Figure 4.6 Quantification of information contents by second largest eigenvalue ..... 81
Figure 4.7 A typical distribution of the second largest absolute value of eigenvalues of n by n matrices under null hypothesis ..... 83
Figure 4.8 A null distribution of second largest eigenvalue of 7 x 7 stochastic matrices (2,000 simulations) ..... 83
Figure 4.9 First Screen: Identification of state space ..... 86
Figure 4.10 Second Screen: Elicitation of local probabilities ..... 87
Figure 4.11 Expectation measurement result for time reduction (A) and quality improvement (B) ..... 88
Figure 5.1 First Game: two lotteries for Allais paradox ..... 93
Figure 5.2 Second Game: two lotteries for Allais paradox ..... 93
Figure 5.3 Hypothetical weighting function for probabilities ..... 95
Figure 5.4 Portfolio construction with two investments ..... 100
Figure 5.5 Comparison of different statistics ..... 102
Figure 5.6 Visualization for using the suggested metrics ..... 104
Figure 5.7 (g,') plot of two designs A and B ..... 105
Figure 5.8 Construction of a preference function and uncertainty estimate ..... 106
Figure 5.9 Deduced utility function for Allais paradox ..... 108
Figure 6.1 Comparing different statistics proposed for differentiating distributions ..... 116
Figure 6.2 Classification of difference among estimates ..... 117
Figure 6.3 Concept on the underlying distribution and pseudo sampling size ..... 120
Figure 6.4 Main stream data and a set of outlier ..... 122
Figure 6.5 Merging probability distributions using the proposed model ..... 126
Figure 7.1 DOME components ..... 129
Figure 7.2 Acceptability Setting Module ..... 129
Figure 7.3 GUI of a criterion module ..... 130
Figure 7.4 GUI of an aggregator module ..... 131
Figure 7.5 Acceptability related components in DOME model ..... 132
Figure 7.6 Movable Glass System as a subsystem of car door system ..... 132
Figure 7.7 Figure (A) shows part of a design model in DOME environment, while Figure (B) shows an aggregator module for overall evaluation ..... 133
Figure 7.8 Online shopping search operation ..... 135
Figure 7.9 Preference presentation using CSP ..... 137
Figure 7.10 User Interface for T@T project using multi attribute utility theory ..... 139
Figure 7.11 System architecture of a simple online shopping engine using acceptability model as the decision guide ..... 141
Figure 7.12 Acceptability setting interface. The left window shows the list of the decision attributes. The right panel allows the user to set and modify the acceptability function for an individual attribute ..... 142
Figure 7.13 Figure (A) is the main result panel where (B) is a detail analysis panel for a specific product shown in the main panel ..... 143


LIST OF TABLES

Table 2.1 Correlation between decision components and design activities ..... 29
Table 2.2 Method of Imprecision General Axioms ..... 35
Table 2.3 Comparison of different decision frameworks ..... 44
Table 4.1 Threshold point for matrices (based upon 10,000 simulations) ..... 84
Table 6.1 Comparison of differences among distributions ..... 117


1. INTRODUCTION

1.1 MOTIVATION

Product design is becoming increasingly complex as the number of requirements that

must be met, both from within the company and from outside, increases. Customer

requirements need to be accurately identified and fulfilled. While underachievement in

some of the identified consumer needs will result in a launch of an unsuccessful product,

over-achievement at the cost of delayed product introduction is equally undesirable.

Additionally, designers must proactively consider internal needs such as manufacturing

or assembly engineers' needs to achieve a rapid time to market and desired quality levels.

Many Design for X (DFX) paradigms such as Design for Assembly reflect this trend.

From a pure decision-making viewpoint, modern design practice requires designers to be

well-trained multi-attribute decision-makers. Throughout design phases, designers have

to identify important attributes, generate design candidates, and evaluate them precisely.

This generation/evaluation activity will be repeated until a promising design candidate is

identified.

Design, from a process viewpoint, is iterative in nature, encompassing two main

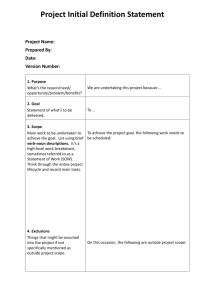

components - design synthesis and design evaluation (see Figure 1.1 ). A design activity

usually starts with a set of preliminary design specifications or goals. Then, the design

task is to generate a set of solutions that may satisfy the initial specifications.

Figure 1.1 Design iteration for a two-attribute decision problem over time

Those generated alternatives are evaluated against the initial specifications and promising

designs will be chosen for further development. Throughout the process, either design

candidates or specifications may be modified or introduced. This generation/evaluation

pair will be repeated a number of times until a suitable solution is achieved. The design

activity is an iteration of controlled divergence (design synthesis) and convergence

(design evaluation), which will be terminated upon achievement of a satisfactory

outcome.

This thesis aims to provide a supportive decision framework for use during the process of

designing products. Special emphasis will be given to ensure that the decision framework

is prescriptive: normative as well as descriptive. From a normative viewpoint, the
framework should be sophisticated and mathematically rigorous enough to reflect the
designers' rationality in decision-making. On the other hand, from the descriptive
viewpoint, the framework should be based upon real design practice: how designers
work, perceive decision problems, and make intuitive decisions in the design process.

To be normative, the decision model must embrace a formal decision analysis
paradigm such as utility theory. For the model to be descriptive, designers' practice
must be observed and their processes should be fully reflected in the decision framework.

By incorporating these characteristics, it is hoped that the designers will embrace the

suggested framework in real-world design applications.

Specifically, this research effort will construct a comprehensive and prescriptive
engineering decision framework that:

+ From a decision-analysis perspective, will emphasize the role of evolving uncertainties over time.

+ From an engineering view, will serve as an intuitive and practical decision guide for engineers or designers.

+ From a process perspective, will support all design phases, from concept selection to numerical optimization.

1.2 THESIS GOAL AND DELIVERABLE

The outcome of this research should be an integrated design evaluation framework for

use in the context of an iterative design activity. The iterative design process, as

illustrated in Figure 1.1, introduces two main components that should be addressed:
tradeoff between competing design attributes, and evolving uncertainty about design
properties.

The work will build on an acceptability model characterizing design activity as a

specification-satisfaction process (Wallace, Jakiela et al. 1995). Acceptability is quite

descriptive in its construction and one of the underlying goals throughout this research is

to develop a more solid mathematical basis for the acceptability model. In addition, the

effort will expand the acceptability-based decision model to form a comprehensive, goal-oriented decision framework for designers to use during the design process.

The first part of the thesis will concentrate on the construction of a goal-oriented decision

metric and a goal setting method for the acceptability-based decision-making model. The

second part of the thesis will address metrics to support decisions subject to evolving
uncertainty. A data aggregation model will also be covered in this second part of the
thesis. These main elements are shown in Figure 1.2.

[Figure labels: goal-oriented preference function a(x, y); DM's preference structure; performance estimate f(x, y); E[a(X, Y)]; design evolution]

Figure 1.2 Research elements in the proposed goal-oriented design evaluation framework

1.2.1 Goal-oriented preference function

At the onset of a design process, designers or managers set target values (goals) for the

performance of a product under development, corresponding to customer needs, thereby

defining specifications. Assuming that these specifications are correct, achieving

these goals will yield a successful design. In this part of the research,

design decision making is characterized in terms of goal setting and the subsequent

construction of specifications (acceptability functions).


1.2.1.1 Goal setting

Although a significant body of literature emphasizes the benefit of using goal-based

approaches, little work has yet been done on formal goal-setting methods, that is, how to

quantify the goal level under uncertainty. This research will suggest a prescriptive way of

setting goals at the start of design activity. Since the goals are usually set with soft

information at the start of design activity, subjective probabilities will be used as basic

components for the constructed methodology.

1.2.1.2 Preference function construction

One-dimensional preference functions will then be constructed around the notion of the

"goal" and be properly extended to multi-dimensional cases. For this purpose, an
operating assumption based upon preferential independence will be used. The
proposed value analysis system is intended to achieve both the desired operational
efficiency and mathematical sophistication for multiple attribute design decision
problems.

1.2.2 Decisions under design evolution and changing uncertainty

1.2.2.1 Uncertainty Measure

The "value of information" is a well-established concept in decision science. It quantifies

the maximum amount a decision-maker should be willing to pay for a perfect piece of information that

will completely resolve the associated uncertainty in the decision situation. Although it

provides answers to what-if questions, the resolution of all relevant uncertainties during

the design process is hypothetical and it does not provide a guide to the pending decision.
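As a rough illustration of the value-of-information idea described above, the following sketch computes the expected value of perfect information for a small discrete decision problem. The payoff table, the state probabilities, and the function name are hypothetical and chosen only for illustration; they do not come from the thesis.

```python
def expected_value_of_perfect_information(payoffs, probs):
    """payoffs[a][s] is the payoff of action a in state s; probs[s] is P(state s)."""
    # Best expected payoff when one action must be chosen before the state is known.
    best_without_info = max(
        sum(p * v for p, v in zip(probs, row)) for row in payoffs
    )
    # Expected payoff when the state is revealed first and the best action is picked per state.
    best_with_info = sum(
        p * max(row[s] for row in payoffs) for s, p in enumerate(probs)
    )
    return best_with_info - best_without_info


# Hypothetical example: two candidate designs (rows), two equally likely states (columns).
payoffs = [[10.0, 2.0],
           [6.0, 6.0]]
probs = [0.5, 0.5]
evpi = expected_value_of_perfect_information(payoffs, probs)
print(evpi)
```

The result is an upper bound on what resolving the uncertainty could be worth. As the text notes, it does not by itself indicate which design to pursue while the uncertainty remains.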

This research will address how to interpret and reflect the evolving uncertainties for the

decision. In order to achieve this, the proposed framework will first try to categorize

different kinds of uncertainties encountered in design process. Based upon the

classification, an additional metric to supplement the metric of expectation will be
suggested for cases where uncertainty is driven by lack of knowledge. The metric will
facilitate a more meaningful comparison among uncertain designs. In some cases, the
suggested metrics might help designers to identify a design that is initially unattractive
but potentially better once the associated uncertainties are fully resolved.

1.2.2.2 Multiple estimate aggregation

Design activities usually involve multiple designers. It is likely that designers will have

different judgments on how the intermediate design is likely to perform. Since each

estimate may possess different information, it is important that the different views are

properly used in the decision-making process. This component of the thesis will suggest a

systematic way of aggregating multiple estimates by different designers into a single

reference which, in turn, can be used for a more informed decision in an evolving design

process.

1.3 THESIS ORGANIZATION

Chapter 2 will provide background on design process and related work in the area of

design decision-making. A general discussion on design will be followed by the role of

decision-making tools in design process, after which subsections will discuss existing

decision-making tools and design assessment methods. At the end of the chapter it will

provide a qualitative comparison of different design evaluation tools. Based upon the

comparison, this chapter concludes that currently available tools fail to adequately

achieve the balance between operational simplicity and modeling sophistication. Further,

even the most sophisticated value analysis tools, such as utility theory, only minimally address

the time variability and uncertainty part of a decision problem.

Chapter 3 begins the main body of the thesis. Key concepts in the acceptability model,
aspiration and rejection levels, will be reviewed and given mathematical definitions.
This chapter then shows how these concepts are used to build a single attribute
acceptability function. With the introduction of an operational assumption of
acceptability independence, the one-dimensional model will be expanded to a two-dimensional
case, and finally to a general n-dimensional case, using mathematical induction.


Advantages and limitations of the acceptability model will be discussed in the last part of

the chapter.
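To make the aspiration and rejection levels concrete, here is a minimal sketch of a single-attribute acceptability function and its extension to several attributes. Two points are assumptions made only for illustration: the linear shape between the rejection and aspiration levels, and the product form used to combine attributes. Chapter 3 of the thesis gives the actual definitions. All names and numbers are hypothetical.

```python
def acceptability(value, rejection, aspiration):
    """Single-attribute acceptability: 0 at the rejection level, 1 at the aspiration level.

    Assumed to be linear in between. Works for both "larger is better"
    (rejection < aspiration) and "smaller is better" (rejection > aspiration).
    """
    t = (value - rejection) / (aspiration - rejection)
    return min(1.0, max(0.0, t))


def overall_acceptability(design, levels):
    """Combine single-attribute acceptabilities as a product (an assumed form)."""
    result = 1.0
    for name, value in design.items():
        rejection, aspiration = levels[name]
        result *= acceptability(value, rejection, aspiration)
    return result


# Hypothetical attributes: cost and mass, both "smaller is better".
levels = {"cost": (100.0, 50.0), "mass": (4.0, 2.0)}
design = {"cost": 75.0, "mass": 2.5}
score = overall_acceptability(design, levels)
print(score)
```

With a product form, a design that reaches the rejection level on any one attribute gets an overall acceptability of zero, whatever its other attributes are.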

Chapter 4 presents a formal goal setting method using a dynamic probabilistic model. This
method is applicable when an expert tries to set a target value without all relevant
information. The goal will be used in defining acceptability functions. The task of goal
setting is, in a mathematical sense, defined as parameter estimation under uncertainty.
The operating philosophy behind the suggested model is to let designers explore their
knowledge base without worrying about maintaining consistency. The model uses a
discrete Markov chain approach. This chapter gives a detailed description ranging from
elicitation of transition probabilities and construction of the transition matrix to the
use of the limiting probabilities. A post analysis at the end of the chapter can
be used to draw useful information about the states of decision-makers when they use the
suggested model.
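The limiting probabilities of a discrete Markov chain can be approximated by repeatedly applying the transition matrix to an initial distribution. The sketch below assumes a small row-stochastic matrix whose states stand for hypothetical candidate goal levels. The matrix values and the function name are illustrative only and are not taken from the thesis.

```python
def limiting_probabilities(transition, tol=1e-12, max_iter=10_000):
    """Approximate the limiting distribution of a row-stochastic transition matrix."""
    n = len(transition)
    dist = [1.0 / n] * n  # start from a uniform distribution over the states
    for _ in range(max_iter):
        # One step of the chain: new_dist[j] = sum_i dist[i] * P[i][j]
        new_dist = [
            sum(dist[i] * transition[i][j] for i in range(n)) for j in range(n)
        ]
        if max(abs(a - b) for a, b in zip(new_dist, dist)) < tol:
            return new_dist
        dist = new_dist
    raise RuntimeError("no convergence; the chain may be periodic or reducible")


# Hypothetical elicited transition probabilities among three candidate goal levels.
transition = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]
pi = limiting_probabilities(transition)
print(pi)
```

The iteration only converges when the chain has a unique limiting distribution, which is why the function raises an error rather than returning an unconverged vector.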

Chapter 5 discusses decision making under uncertainties of single events. As a first step,
this chapter attempts to classify uncertainties in the product development process. Based
upon the different kinds of uncertainties encountered in design decision problems, this
chapter suggests that designers need a better understanding of their uncertainty structures
to make better informed decisions. Although the discussion throughout the chapter is
qualitative, a metric is suggested in order to supplement the popular expectation
measure.

While the preceding chapters address the preference side of decision making, Chapter

6 addresses a very practical issue in decision-making situations: the uncertainty side. This

chapter will develop a mechanism for merging multiple estimates by different experts. A

probability mixture scheme based upon an opinion pool concept will be used.
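As a simple illustration of the opinion pool concept, the sketch below forms a weighted mixture of discrete probability estimates from several experts (the textbook linear opinion pool). The weights, the estimates, and the function name are hypothetical. The merging scheme developed in Chapter 6 of the thesis may differ in how the weights are obtained.

```python
def linear_opinion_pool(estimates, weights):
    """Mix discrete probability distributions defined over the same outcomes."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    n = len(estimates[0])
    if any(len(est) != n for est in estimates):
        raise ValueError("all estimates must cover the same outcomes")
    # Pooled probability of each outcome is the weighted average of the experts' values.
    return [sum(w * est[k] for w, est in zip(weights, estimates)) for k in range(n)]


# Two hypothetical experts estimating the same three performance outcomes.
expert_a = [0.2, 0.5, 0.3]
expert_b = [0.6, 0.3, 0.1]
pooled = linear_opinion_pool([expert_a, expert_b], weights=[0.5, 0.5])
print(pooled)
```

Because the weights sum to one and each input is a probability distribution, the pooled result is again a probability distribution.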

Chapter 7 describes two software implementation examples using the acceptability model as
an integrated decision guide. The acceptability decision model is currently used as a
decision guide in the MIT CADlab DOME software. Its implementation as part of a
systems model will be briefly discussed. As a second implementation example, the
acceptability model will be used as an online shopping guide. This application is
motivated by a characteristic of the acceptability model: measurement accuracy at a
rather modest operational effort. These application examples will also discuss how the
acceptability model's characteristics will benefit both the buyers and the vendors at the
same time.


2. BACKGROUND

2.1 OVERVIEW

This chapter reviews previous work relevant to this thesis. The first part of the chapter
will provide a brief description of design and the design process in general. After briefly
introducing decision analysis, this chapter merges these two topics, decision-making
and design, emphasizing the role of decision analysis as an integral part of a
design process. The second part of the chapter introduces related work on design
decision-making tools, both qualitative and quantitative. Qualitative tools, which often
cover broader issues than decision analysis alone, are often used in the early design phase to

provide an environment for a design group to discuss and reach a consensus on major

issues. The quantitative tools provide a more rigorous framework for designers to carry

out sophisticated tradeoff studies among competing uncertain designs. The last part of

this chapter will provide a qualitative framework to compare the methods surveyed. The

chapter closes with a discussion of desirable properties that a design decision framework

should possess for practical applications.


2.2 DESIGN, DECISION MAKING, AND DESIGN PROCESS

2.2.1 Design

Design facilitates the creation of new products and processes in order to meet the

customer's needs and aspirations. Virtually every field of engineering involves and

depends on the design or synthesis process to create a necessary entity (Suh 1990).

Therefore, design is such a diverse and complex field that it is challenging to provide a
comprehensive review of every aspect of design. From a psychological view, design is a
creative activity that calls for sound understanding in many disciplines. From a systems
view, design is an optimization of stated objectives with partly conflicting constraints.
From an organizational view, design is an essential part of the product life-cycle (Pahl and
Beitz 1996).

Although there are variants describing product design, the three main design components are
(Suh 1990):

+ problem definition

+ creative (synthetic) process

+ analytic process

The main task in the problem definition stage is to understand the problem in engineering
terms: the designers and managers must determine the product specifications based upon
the customer's needs as well as resources allocated for the design task. The subsequent
creative process is then to devise an embodiment of the physical entity that will satisfy
the specifications envisioned in the problem definition stage. This creative process can be
decomposed into conceptual, embodiment and/or detail design and is the least understood
part among the three, requiring the creative power of designers. The analytic process is
associated with the measurement process, where designers evaluate the candidate solutions.
At this stage designers determine whether the devised solution(s) are good or not,
comparing the devised designs against the initial specifications.

[Figure labels: Problem Definition; Creative Process; Analytic Process]

Figure 2.1 Three main components of design

2.2.2 Decision Analysis

Every decision analysis framework has a formalization process for the problem,

incorporating both objective and soft/subjective information. This explicit structuring

process allows the decision-maker to process assumptions, objectives, values,

possibilities, and uncertainties in a well summarized and consistent manner. The main

benefit of using decision analysis comes from this formalization process (Drake and

Keeney 1992; Keeney and Raiffa 1993), requiring the decision-maker to investigate the

problem in a thorough way to gain an understanding of the anatomy of the problem.
Decision analysis is useful if it provides a decision maker with increased confidence and
satisfaction in resolving important decision problems while requiring a level of effort that
is not excessive for the problem being considered (Drake and Keeney 1992).

A typical decision framework structures a decision problem into the following three main
constituents (Keeney and Raiffa 1993):

+ A decision maker is given a set of alternatives, $X = \{x_1, x_2, \ldots, x_n\}$, where each $x_i$ represents one alternative.

+ The decision maker has a set of criteria, $C = \{c_1, c_2, \ldots, c_m\}$.

+ Then, which one is the best solution among the alternatives based upon the set of criteria?

Decision analysis helps the decision-makers in the following three areas:

+ Preference Elicitation: to identify the set of criteria and the quantification of each criterion.

+ Performance Assessment: to assess the multi-dimensional performance of each alternative.

+ Decision Metric: decision rule for choosing the "best" solution.

In most applications, these tasks become complicated, mainly due to the uncertainties in

the preference construction and performance assessment. A preference function is

obtained by transforming a decision-maker's implicit and subjective judgment into an

explicit value system. In many practical cases, the decision-makers are not sure about

their own judgment, complicating the elicitation process. However, sophisticated decision

tools should capture and quantify this uncertainty. On the side of performance

assessment, lack of hard information makes the quantification task very challenging. As a

result, the performance is usually expressed with degrees of uncertainty. Based upon the

constructed decision-maker's preference and performance estimate for each alternative,

the final decision metric suggests a course of action under both kinds of uncertainties.
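The three areas above can be sketched together in a few lines: a preference function (preference elicitation), a set of uncertain performance estimates per alternative (performance assessment), and a rule that picks the alternative with the highest expected preference (decision metric). The preference function, the scenario probabilities, and all names below are hypothetical illustrations, not the framework proposed in this thesis.

```python
def expected_preference(outcomes, preference):
    """outcomes is a list of (probability, performance) pairs for one alternative."""
    return sum(prob * preference(perf) for prob, perf in outcomes)


def choose_best(alternatives, preference):
    """Return the alternative with the highest expected preference, plus all scores."""
    scores = {
        name: expected_preference(outcomes, preference)
        for name, outcomes in alternatives.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


def preference(perf):
    # Hypothetical preference: equal weight on quality and on low cost, both scaled to [0, 1].
    return 0.5 * perf["quality"] + 0.5 * (1.0 - perf["cost"])


# Hypothetical alternatives: x1 has uncertain quality, x2 is fully known.
alternatives = {
    "x1": [(0.5, {"quality": 0.8, "cost": 0.4}),
           (0.5, {"quality": 0.4, "cost": 0.4})],
    "x2": [(1.0, {"quality": 0.5, "cost": 0.5})],
}
best, scores = choose_best(alternatives, preference)
print(best, scores)
```

Expectation is only one possible decision metric. It is used here because it is the most common baseline.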

2.3 DECISION ANALYSIS IN DESIGN

2.3.1 Design Decision Making

Among the three design activities, decision analysis would be most applicable to the

problem definition and analytic process. The activity of specification generation, the

main activity at the problem definition stage, overlaps with the identification of the

criterion set in the decision-making framework. The House of Quality (HOQ), from

Quality Function Deployment (Hauser and Clausing 1988), is an extensive design tool

applicable to the problem definition process. The HOQ provides a framework where

designers can translate the customer attributes into engineering attributes. In addition to

its basic functionality, it allows engineers to set target values for the engineering

attributes using competitive analysis. In general, the preference elicitation component of
decision analysis can help designers to better understand and quantify the design task. In
the case of product development, the preference elicitation process can help designers to
accurately formulate the customer's needs.

The analytic process is another apparent design activity that can be helped by use of

decision analysis. In the analytic process, the designers will be given a set of design

candidates that will be evaluated against the set of criteria elicited at the problem

definition stage. For this purpose, the multi-dimensional performance of each design

candidate has to be assessed, often under uncertainty. The formal way of assessing the

performance of alternatives in decision analysis will ask the designers to more accurately

measure each alternative. The performance thus obtained, with the preferences elicited

earlier in the problem definition process, will be inputs to the metric system. The metric

system will suggest to the designer which design alternative to pursue for the subsequent

development stage.

These are two main visible areas where the use of decision analysis can improve the

design activities. Table 2.1 shows the correlation between design areas and decision

analysis components.

Table 2.1 Correlation between decision components and design activities

Design activity elements (columns): Problem Definition, Creative Process, Analytic Process

Decision analysis components (rows): Preference Elicitation, Performance Assessment, Decision Metric

2.3.2 Decision Making as an Integral Part of Design Process

In most practical cases, initial design efforts will not yield a design solution meeting the

initial specifications. In this case, the designer might change the initial specifications to

accommodate the realized designs and terminate the design activity. More commonly,

designers will devise new design candidates to meet the initial specifications. In most


cases, the succeeding iteration will be a mixture of these two strategies. The designer will

try to devise new designs based upon a newly modified set of specifications. Design

assessment between iterations will provide feedback to the designers, suggesting a

guideline for the succeeding design activity. With this feedback, the design activity will be

iterated multiple times, resulting in a design process. The design process will continue

until a promising solution is identified which satisfies the initial or modified

specifications.

In addition to its role in the design activity, the scope of decision analysis becomes

broader in the design process. In the design iteration context, decision analysis tools can

be used to provide designers with critical feedback necessary for succeeding iterations.

The analysis might even suggest an appropriate direction for the following iteration. For

instance, the decision analysis result can help designers identify weakly performing

attributes of the most promising candidate. In the next iteration the main effort can be
directed to improving the weakly performing attributes of the design. Extending the above
argument, continuous feedback from the analysis, including measurement of the design
candidates, would help designers to continuously monitor the strengths and weaknesses of
each design. Ideally, design evaluations can be placed in many parts of the design process
to provide feedback to the designer. From the decision-making standpoint, the design process
is often viewed as a controlled iteration of divergence and convergence (Pugh 1991).

[Figure labels: Design Generation; degree of uncertainty; time]

Figure 2.2 Design Divergence and convergence

Divergence corresponds to the ideation/creation part of the design, and convergence to
the evaluation/selection part. The overall role of a decision analysis tool as an integrated
part of the design process is abstracted and visualized in Figure 2.2. This is a modified and
expanded interpretation of the controlled convergence diagram by Pugh (Pugh 1991). The
horizontal axis represents time in the design process, while the vertical axis is a hypothetical
multi-dimensional design attribute space. For instance, this design space might be a
cost/performance pair. At the initiation of a design process, a target point A in the
multi-dimensional design space will be set in the problem definition stage. A set of design
candidates will then be generated through the creative part of design. This part is
modeled as each divergent part of the cone in Figure 2.2. The width of the cone
represents the uncertainties associated with the solutions. The ideation process may be
viewed as an exploration of uncertain design domains that may yield potentially
satisfactory designs. In the convergence part of design activity, the level of uncertainties
associated with design candidates will decrease through analysis and measurement.

The dots in the figure represent a trace of how the designer's aspiration level or

specification might undergo a change based upon the feedback from the

analysis/evaluation at the convergent part of the diagram. Given the newly set targets, a

new round of divergence is carried out. The critical role of analysis/evaluation is clear. It

guides the overall direction of the design process. This guidance can also direct the effort

and resource allocation in each succeeding design iteration.

2.4 RELATED WORK

So far, the role of decision analysis in both design and design process has been discussed.

This part of the chapter discusses current design decision-making tools. These existing

tools are classified as qualitative and quantitative. Well-known qualitative tools include

selection charts and some aspects of Quality Function Deployment. Among the

well-known quantitative tools are Figure of Merit, Fuzzy Decision-Making, and Utility

Theory. These quantitative tools provide a more sophisticated and complete value

analysis but are more expensive in terms of operational effort. The Acceptability model,
the basis of the goal-oriented model of this thesis, is intended to reduce this operational
effort.

2.4.1 Qualitative Decision Support Tools

2.4.1.1 Pugh chart

The Pugh chart is a selection matrix method often used in the early design phase. It provides a
framework for a group of designers to discuss, compare, and evaluate uncertain design
candidates (Pugh 1991). Figure 2.3 shows an exemplary evaluation matrix used in Pugh's
total design method. In the chart shown, there are four concepts, from concepts 1 to 4,
under consideration and five criteria from A to E. One of the design candidates serves as
a datum point against which other concepts are compared. In the assessment, three
symbols, +, -, and S, are used to specify "better than", "worse than", and "same as" the datum,
respectively. The design with the highest aggregated sum will be chosen as the best
candidate among the candidates. The Pugh chart is qualitative and does not allow an
in-depth analysis. However, it is intuitive enough for many designers to use in the concept
selection process.

Criterion   Concept 1   Concept 2   Concept 3   Concept 4   Concept 5
A               +           -         DATUM         -           +
B               -           +         DATUM         -           +
C               +           +         DATUM         +           S
D               +           S         DATUM         +           S
E               S           S         DATUM         -           -
Sum             2           1           0          -1           1

Figure 2.3 An Evaluation matrix in Pugh chart.
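The scoring rule described above can be sketched in a few lines of Python. This is only an illustration: the ratings below are hypothetical and are not taken from Figure 2.3, and the names `SCORE` and `pugh_totals` are assumptions introduced for this example. The mapping of +, -, and S to +1, -1, and 0 is one common way of forming the aggregated sum.

```python
# Pugh chart scoring sketch. All ratings are hypothetical illustrations.
SCORE = {"+": 1, "-": -1, "S": 0}  # better than, worse than, same as the datum


def pugh_totals(ratings):
    """Return the net score of each concept summed over all criteria."""
    return {
        concept: sum(SCORE[mark] for mark in marks.values())
        for concept, marks in ratings.items()
    }


ratings = {
    "Concept 1": {"A": "+", "B": "-", "C": "+", "D": "+", "E": "S"},
    "Concept 2": {"A": "-", "B": "+", "C": "+", "D": "S", "E": "S"},
    "Datum":     {"A": "S", "B": "S", "C": "S", "D": "S", "E": "S"},
    "Concept 4": {"A": "-", "B": "-", "C": "S", "D": "+", "E": "-"},
}

totals = pugh_totals(ratings)
best = max(totals, key=totals.get)  # concept with the highest net score
print(totals, best)
```

The datum always scores zero by construction, so a positive total means a concept is judged better than the datum on balance. The sum treats every criterion as equally important, which is part of why the method is regarded as qualitative.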

2.4.1.2 House of Quality

The basic aim of Quality Function Deployment (QFD) is to translate product
requirements stated in the customer's own words into viable product specifications that


can be designed and manufactured. The American Supplier Institute defines QFD as follows
(ASI 1987):

"A system for translating customer requirements into appropriate company requirements
at every stage, from research through production design and development, to
manufacture, distribution, installation and marketing, sales and service."

The House of Quality (HOQ) is a major tool in QFD for performing the necessary operations.
HOQ is a very versatile tool applicable to a broad range of design problems; this section
discusses its decision-making aspect. Figure 2.4 shows the major components of a

HOQ. The main body of the house serves as a map between the customer attributes and

the corresponding engineering attributes. The column next to the customer attributes

allows the designers to specify the relative importance among the customer attributes.

Then the designers, based upon the translated engineering attributes and competitive

analysis, can determine a set of targets for evaluation. There are many variant versions of

HOQ adding more functionality (Ramaswamy and Ulrich 1993; Franceschini and

Rossetto 1995).
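As a rough illustration of how the relationship matrix and the importance column can be combined, the sketch below computes a technical importance score for each engineering attribute as the importance-weighted sum of its relationship strengths. This weighted-sum rule and the 9/3/1 strength scale are common QFD conventions assumed here, not something specified in this section, and all attribute names and numbers are hypothetical.

```python
# House of Quality sketch: technical importance from customer importance
# and a relationship matrix. All names and values are hypothetical.

customer_importance = {"easy to open": 5, "stays closed": 3, "quiet": 1}

# relationship[customer attribute][engineering attribute] = strength (9/3/1)
relationship = {
    "easy to open": {"opening force": 9, "seal stiffness": 3},
    "stays closed": {"seal stiffness": 9, "latch strength": 9},
    "quiet":        {"seal stiffness": 1},
}


def technical_importance(importance, relationship):
    """Sum importance * strength over customer attributes, per engineering attribute."""
    scores = {}
    for attribute, weight in importance.items():
        for requirement, strength in relationship.get(attribute, {}).items():
            scores[requirement] = scores.get(requirement, 0) + weight * strength
    return scores


scores = technical_importance(customer_importance, relationship)
ranking = sorted(scores, key=scores.get, reverse=True)  # most important first
print(scores, ranking)
```

The resulting ranking suggests which engineering attributes deserve targets first. It does not set the target values themselves, which still rely on the competitive analysis.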

[Figure 2.4 diagram labels: Correlation Matrix; Product Engineering/Design Requirements; Customer Attributes; Requirements degree of importance; Relationship Matrix; Competitive Benchmarking Assessment; Technical importance ranking]

Figure 2.4 Main components of the House of Quality


2.4.2 Quantitative Decision Support Tools

2.4.2.1 Figure of Merit

The Figure of Merit (FOM), a commonly used design evaluation model, determines the

value of a certain design as a weighted average of individual attribute levels. A weighting

factor is often thought of as relative importance. A typical FOM for a design X_j expresses
the overall value as

\[ \mathrm{FOM}(X_j) = \sum_{i=1}^{N} \frac{w_i}{N} \, v_i(x_{j,i}), \qquad X_j = (x_{j,1}, x_{j,2}, \ldots, x_{j,N}) \tag{2.1} \]

where
X_j : the j-th design candidate
N : total number of attributes
w_i : weighting factor assigned to the i-th attribute
v_i : performance indicator function of the i-th criterion

This method seems very popular since it is quite intuitive and easy to use. In fact,

variations of FOM seem to be in use in many decision situations.
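A minimal sketch of a Figure of Merit calculation follows, assuming Equation 2.1 is read as the sum of w_i times v_i(x_j,i) divided by N. The attributes, weights, and the clipped linear indicator functions are hypothetical choices made only for this illustration; the thesis does not prescribe a particular form for v_i.

```python
# Figure of Merit sketch. Attributes, weights, and indicator functions are
# hypothetical; only the weighted-average structure follows Equation 2.1.


def linear_indicator(worst, best):
    """Return v(x) that maps worst -> 0 and best -> 1, clipped to [0, 1]."""
    def v(x):
        t = (x - worst) / (best - worst)
        return max(0.0, min(1.0, t))
    return v


def figure_of_merit(weights, indicators, design):
    """FOM(X_j) = sum_i (w_i / N) * v_i(x_{j,i}) for one design candidate."""
    n = len(design)
    return sum(w * v(x) for w, v, x in zip(weights, indicators, design)) / n


weights = [3.0, 2.0]                      # relative importance: mass, cost
indicators = [
    linear_indicator(worst=10.0, best=0.0),   # mass in kg, lighter is better
    linear_indicator(worst=100.0, best=0.0),  # cost in dollars, cheaper is better
]

design_a = [2.0, 50.0]   # (mass, cost)
design_b = [5.0, 20.0]

fom_a = figure_of_merit(weights, indicators, design_a)
fom_b = figure_of_merit(weights, indicators, design_b)
print(fom_a, fom_b)
```

Because N is the same for every candidate, dividing by it does not change the ranking of designs. Note that each indicator must be evaluated separately for every candidate.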

Operational simplicity and intuitiveness are its advantages. However, it also has
limitations.
First, unless the functions v_i are defined in closed form, the designer has to
evaluate the i-th performance indicator function for every design candidate. As the number
of designs subject to evaluation grows, the designer may have to evaluate the
performance indicators exhaustively.

Second, the FOM method addresses uncertainty in a simplified way. It
represents both preference and performance with one function, the
performance indicator function. In practical cases, x_{j,i}, the i-th attribute level of the j-th
design candidate, may be uncertain. In the FOM method, the performance indicator function v_i


will be used to express both the preference and uncertain performance for a specific

design. As the problem becomes complex and gets bigger, this approach will not yield

accurate and consistent measurement results.

2.4.2.2 Method of Imprecision

Otto conducted extensive research on different design metric systems in decision
frameworks (Otto 1992). He argues that there are different kinds of uncertainty
encountered in design: possibility, probability, and imprecision. Imprecision in the
method refers to a designer's uncertainty in choosing among the alternatives (Otto and
Antonsson 1991). The method itself is very formal. A preference is defined as a map
from a space X_k to [0,1] ⊂ R,

\[ \mu_k : X_k \rightarrow [0,1] \tag{2.2} \]

that preserves the designer's preferential order over X_k, where k = 1, 2, ..., n and n is the
total number of attributes. The subsequent step is to combine the individual preferences μ_k
into an aggregate preference. The first step taken in constructing this aggregate preference was
to define a set of characteristics that describe and emulate the designer's behavior in design
decision-making. This set of characteristics is then transformed into a set of axioms.

Table 2.2 shows the set of axioms proposed for the design decision metric.

Table 2.2 Method of Imprecision General Axioms

Boundary condition   P(0, ..., 0) = 0,  P(1, ..., 1) = 1
Monotonicity         P(μ_1, ..., μ_k, ..., μ_N) ≤ P(μ_1, ..., μ_k', ..., μ_N) iff μ_k ≤ μ_k'
Continuity           P(μ_1, ..., μ_k, ..., μ_N) = lim_{μ_k' → μ_k} P(μ_1, ..., μ_k', ..., μ_N)
Annihilation         P(μ_1, ..., 0, ..., μ_N) = 0
Idempotency          P(μ, ..., μ) = μ

Among the many possible aggregate models investigated, Zadeh's extension principle is used to
aggregate the individual preferences (Wood, Otto et al. 1992). Employing the fuzzy
framework, the initial maps μ_k can be regarded as fuzzy membership functions. The
concept of "fuzzy" is based upon the concept of possibility, not probability, and there is some

debate on using this in decision science (French 1984).
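To make the role of the axioms in Table 2.2 concrete, the sketch below tests candidate aggregation functions against the boundary, idempotency, annihilation, and monotonicity axioms on a coarse grid of preference values. It is an illustration under stated assumptions: the candidate functions (minimum, geometric mean, arithmetic mean) are chosen here for demonstration and are not claimed to be the aggregation used in the cited work. Only the forward direction of monotonicity is tested (raising one preference must not lower the aggregate), and continuity is not checked numerically.

```python
import itertools
import math


def agg_min(mu):
    """Candidate aggregate: the lowest individual preference."""
    return min(mu)


def agg_geometric(mu):
    """Candidate aggregate: geometric mean of the individual preferences."""
    return math.prod(mu) ** (1.0 / len(mu))


def satisfies_axioms(P, n=3, grid=(0.0, 0.25, 0.5, 0.75, 1.0), tol=1e-12):
    """Grid check of boundary, idempotency, annihilation, and monotonicity."""
    # Boundary conditions: P(0,...,0) = 0 and P(1,...,1) = 1.
    if abs(P((0.0,) * n)) > tol or abs(P((1.0,) * n) - 1.0) > tol:
        return False
    # Idempotency: P(m,...,m) = m.
    if any(abs(P((m,) * n) - m) > tol for m in grid):
        return False
    for mu in itertools.product(grid, repeat=n):
        for k in range(n):
            # Annihilation: a zero preference on any attribute gives zero overall.
            if abs(P(mu[:k] + (0.0,) + mu[k + 1:])) > tol:
                return False
            # Monotonicity (forward direction only).
            for higher in grid:
                if higher >= mu[k]:
                    raised = mu[:k] + (higher,) + mu[k + 1:]
                    if P(raised) < P(mu) - tol:
                        return False
    return True


print(satisfies_axioms(agg_min), satisfies_axioms(agg_geometric))
```

An arithmetic mean fails this check because of annihilation: a design that is completely unacceptable on one attribute would still receive a positive overall preference.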

2.4.2.3 Utility Theory

Utility theory is the most sophisticated analytical decision value analysis method,
originally developed for economic decision making. Since its formal inception by von
Neumann, it has been extensively developed by many researchers and has been applied to
many disciplines including design (Tribus 1969; Howard and Matheson 1984; Thurston
1991).

Utility theory is built upon a set of axioms that describe the behavior of a rational
decision-maker. Among the axioms are transitivity of preference and substitution. A
detailed discussion of the construction of utility theory is found in the classic book on
utility theory, Decisions with Multiple Objectives (Keeney and Raiffa 1993). Since the
acceptability-based framework is to a large extent associated with utility theory, this
section discusses the basic formulation of utility theory and its relation to design
decision making.

A single-attribute utility function quantifies the utility of an attribute level on a local scale
of [0.0, 1.0]. The lottery method is used to construct a single-attribute utility
function. In order to quantify the utility of $500 on a basis of ($0, $1,000), a decision-maker
participates in a hypothetical lottery, as shown in Figure 2.5.

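[Figure 2.5 diagram: a sure amount of $500 compared with a lottery that pays $1,000 with probability p and $0 otherwise]

Figure 2.5 A Lottery Method for utility function construction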


The minimum probability, p, that leaves the decision-maker indifferent between the
sure amount of $500 and the lottery of ($0, $1,000) is defined as the utility of $500.
A continuous utility function for a certain range is constructed by interpolating a number of

data points available in the range.
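The construction just described can be sketched as follows. The elicited indifference probabilities are hypothetical, and piecewise-linear interpolation is only one possible interpolation scheme, assumed here for simplicity. The names `make_utility` and `lottery_utility` are introduced for this example.

```python
from bisect import bisect_right


def make_utility(points):
    """Build u(x) by piecewise-linear interpolation of (amount, utility) pairs."""
    pts = sorted(points)
    xs = [x for x, _ in pts]
    us = [u for _, u in pts]

    def u(x):
        if not xs[0] <= x <= xs[-1]:
            raise ValueError("amount outside the assessed range")
        i = min(bisect_right(xs, x), len(xs) - 1)  # index of the right-hand knot
        x0, x1 = xs[i - 1], xs[i]
        t = (x - x0) / (x1 - x0)
        return us[i - 1] + t * (us[i] - us[i - 1])

    return u


def lottery_utility(p, u, low=0.0, high=1000.0):
    """Expected utility of a lottery paying `high` with probability p, else `low`."""
    return p * u(high) + (1.0 - p) * u(low)


# Hypothetical elicitation: each utility is the indifference probability p
# stated by the decision-maker for that sure amount on the basis ($0, $1,000).
elicited = [(0.0, 0.0), (250.0, 0.45), (500.0, 0.70), (1000.0, 1.0)]
u = make_utility(elicited)

print(u(500.0), u(750.0), lottery_utility(0.70, u))
```

With the end points fixed at u($0) = 0 and u($1,000) = 1, the expected utility of the lottery equals p, which is why the indifference probability can be read directly as the utility of the sure amount. The concave shape of these hypothetical points corresponds to a risk-averse decision-maker.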

Constructing a multi-attribute utility function is challenging compared with constructing a
one-dimensional function. Theoretically, an n-dimensional surface can be constructed by
interpolating and extrapolating a number of data points on the surface. However, this
direct assessment approach becomes impractical as the number of attributes, and hence the
surface dimensionality, increases.

Utility independence is an assumption often invoked regarding the decision-maker's
preference structure in utility theory. Its role in multi-attribute utility theory is very
similar to that of probabilistic independence in multivariate probability theory. Attribute