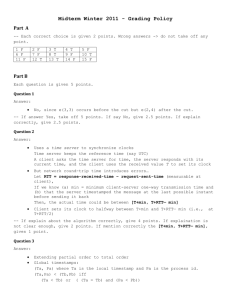

Inferring Queue State by Measuring Delay in a WiFi Network

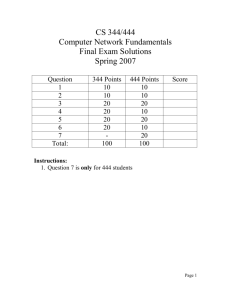


David Malone, Douglas J Leith, Ian Dangerfield

11 May 2009

WiFi, Delay, Queue Length and Congestion Control

• RTT a proxy for queue length.
• Not too crazy in wired networks.
• For WiFi?

[Figure: WiFi transmission timeline. Counting down (20us); someone else transmits, stop counting; transmissions (~500us), data and then ACK; collision followed by timeout.]

• With fixed traffic, what is the impact of random service?
• What is the impact of variable traffic (not even sharing a buffer)?
• What will Vegas do in practice?
• Want to understand these for future design work.

Sample Previous Work

• V. Jacobson. pathchar: a tool to infer characteristics of internet paths. MSRI, April 1997.
• N. Sundaram, W. S. Conner, and A. Rangarajan. Estimation of bandwidth in bridged home networks. WiNMee, 2007.
• M. Franceschinis, M. Mellia, M. Meo, and M. Munafo. Measuring TCP over WiFi: A real case. WiNMee, April 2005.
• G. McCullagh. Exploring delay-based TCP congestion control. Masters Thesis, 2008.

Fixed Traffic: How bad is the problem?

[Figure: observed drain time (us) against queue length (packets).]

What do the stats look like?

[Figure: drain time distribution, mean drain time (us) and number of observations against packets in queue.]

Note: the variance is getting bigger.

How does it grow?

[Figure: drain time standard deviation (us) and number of observations against queue length (packets). Curves: measured estimate, V sqrt(n), number of observations.]

For fixed traffic, service time looks uncorrelated.

Queue Length Prediction

• Suppose traffic is fixed.
• We have collected all statistics.
• Given an RTT, can we guess how full the queue is?
• Easier: more or less than half full?
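The half-full question above can be explored with a small simulation. The sketch below is not the authors' measurement setup: it assumes independent, exponentially distributed per-packet service times with a 5000us mean, a 20-packet buffer, and queue lengths drawn uniformly, all of which are illustrative choices. The function names are hypothetical. It checks how the drain time standard deviation scales with queue length, and how often a single delay threshold gets "more than half full" wrong.

```python
import random
import statistics

MEAN_SERVICE_US = 5000.0  # assumed mean per-packet service time (illustrative)


def drain_time_us(queue_len, rng):
    """Drain time as a sum of independent per-packet service times."""
    return sum(rng.expovariate(1.0 / MEAN_SERVICE_US) for _ in range(queue_len))


def drain_time_std(queue_len, samples=2000, seed=1):
    """Empirical standard deviation of drain time for one queue length."""
    rng = random.Random(seed)
    times = [drain_time_us(queue_len, rng) for _ in range(samples)]
    return statistics.pstdev(times)


def fraction_wrong(threshold_us, max_queue=20, samples=20000, seed=2):
    """Fraction of samples where the 'more than half full' guess is wrong.

    Queue lengths are drawn uniformly from 1..max_queue (an assumption).
    """
    rng = random.Random(seed)
    wrong = 0
    for _ in range(samples):
        n = rng.randint(1, max_queue)
        guess_over_half = drain_time_us(n, rng) > threshold_us
        wrong += guess_over_half != (n > max_queue / 2)
    return wrong / samples


if __name__ == "__main__":
    # With uncorrelated service times the ratio should be close to sqrt(16/4) = 2.
    print(drain_time_std(16) / drain_time_std(4))
    print(fraction_wrong(threshold_us=10.5 * MEAN_SERVICE_US))
```

Under these assumptions the standard deviation grows roughly like the square root of the queue length, and a threshold placed near the half-full mean drain time still misclassifies a noticeable fraction of samples.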
Results of thresholding

[Figure: fraction of packets against threshold (us). Curves: delay threshold wrong; delay threshold wrong by 50% or more.]

Using History

• Mistake 10% of time, not good for congestion control.
• Only using one sample; what happens if we use history?
• Obvious thing to do: filter.

Filters

7/8 Filter: $\mathrm{srtt} \leftarrow \tfrac{7}{8}\,\mathrm{srtt} + \tfrac{1}{8}\,\mathrm{rtt}$.

Exponential Time: $\mathrm{srtt} \leftarrow e^{-\Delta T/T_c}\,\mathrm{srtt} + (1 - e^{-\Delta T/T_c})\,\mathrm{rtt}$.

Windowed Mean: $\mathrm{srtt} \leftarrow \operatorname{mean}_{\text{last RTT}} \mathrm{rtt}$.

Windowed Min: $\mathrm{srtt} \leftarrow \min_{\text{last RTT}} \mathrm{rtt}$.

How much better do we do?

[Figure: fraction of packets against threshold (us). Curves for Exp Time, Window Mean, 7/8 Filter, Window Min and Raw: threshold wrong, and threshold wrong by >= 50%.]

Variable Network Conditions

• Other traffic can change service rate.
• Need not even share same buffer.
• Nonlinear because of collisions.
• What happens when we add/remove competing stations?

Removing stations (4 → 1)

[Figure: two panels against time (s): drain time (us) and queue length (packets).]

Adding stations (4 → 8, ACK Prio)

[Figure: two panels against time (s): drain time (us) and queue length (packets).]

Even base RTT changes.

Vegas in Practice

TargetCwnd = cwnd × baseRTT/minRTT

Make decision based on TargetCwnd − cwnd.

• Will Vegas make right decisions based on current RTT?
• Will Vegas get correct base RTT?
• Vary delay with dummynet.
• Vary BW by adding competing stations.
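The Vegas decision rule above can be sketched as a single window update. This is a minimal illustration, not the Linux Vegas code: the function name `vegas_step`, the one-packet step size, and the thresholds `alpha=2` and `beta=4` (in packets) are assumptions made for the example. It works with cwnd − TargetCwnd, the negative of the difference on the slide, because that quantity reads directly as an estimate of packets queued.

```python
def vegas_step(cwnd, base_rtt_us, min_rtt_us, alpha=2.0, beta=4.0):
    """Return the next cwnd (packets) for one Vegas-style update.

    alpha and beta are assumed thresholds on the estimated backlog.
    """
    # Window that would just fill the path with no queueing.
    target_cwnd = cwnd * base_rtt_us / min_rtt_us
    # Estimated number of this flow's packets sitting in queues.
    backlog = cwnd - target_cwnd
    if backlog < alpha:
        return cwnd + 1  # too little queued: probe for more bandwidth
    if backlog > beta:
        return cwnd - 1  # too much queued: back off
    return cwnd          # within the target band: hold


if __name__ == "__main__":
    print(vegas_step(20, 5000, 5000))    # no queueing delay observed
    print(vegas_step(20, 17000, 20000))  # backlog between alpha and beta
    print(vegas_step(20, 5000, 10000))   # RTT doubled relative to base
```

Both inputs are measured RTTs, so an error in either baseRTT or the current RTT sample changes the estimated backlog and can flip the decision, which is the concern raised in the bullets above.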
Vegas with 5ms RTT

[Figure: two panels against time (s): throughput (pps) and cwnd (packets).]

Lower bound like 1 − α/cwnd.

Vegas with 200ms RTT

[Figure: two panels against time (s): throughput (pps) and cwnd (packets).]

Sees loss and goes into Reno mode.

Conclusion

• With fixed traffic, delay is quite variable.
• Variability grows with buffer occupancy like $\sqrt{n}$.
• Obvious filters make things worse.
• Need to deal with change in traffic conditions.
• Linux Vegas does OK.
• Switch to Reno helps.
• Vegas insensitive at smaller buffer sizes.
• Variability at larger buffer sizes still a problem.
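For readers who want to experiment with the filters named in the talk (7/8, exponential time, windowed mean, windowed min), the following is a minimal sketch. The function names are hypothetical, samples are assumed to be `(time, rtt)` pairs in the same time unit, and "last RTT" is interpreted here as the samples seen within one most-recent-RTT of the current sample; the talk does not spell out that detail.

```python
import math
import statistics


def seven_eighths(samples):
    """srtt <- 7/8 srtt + 1/8 rtt, seeded with the first sample."""
    out, srtt = [], None
    for _, rtt in samples:
        srtt = rtt if srtt is None else 0.875 * srtt + 0.125 * rtt
        out.append(srtt)
    return out


def exponential_time(samples, tc):
    """srtt <- exp(-dT/Tc) srtt + (1 - exp(-dT/Tc)) rtt, dT = time since last sample."""
    out, srtt, last_t = [], None, None
    for t, rtt in samples:
        if srtt is None:
            srtt = rtt
        else:
            w = math.exp(-(t - last_t) / tc)
            srtt = w * srtt + (1.0 - w) * rtt
        last_t = t
        out.append(srtt)
    return out


def windowed(samples, reduce_fn):
    """Apply reduce_fn (e.g. min or statistics.fmean) over the last RTT of samples."""
    out = []
    for i, (t, rtt) in enumerate(samples):
        # Assumed window: samples no older than the most recent RTT.
        window = [r for (s, r) in samples[: i + 1] if s >= t - rtt]
        out.append(reduce_fn(window))
    return out


if __name__ == "__main__":
    trace = [(0.0, 8.0), (1.0, 16.0)]
    print(seven_eighths(trace))
    print(exponential_time(trace, tc=1.0))
    print(windowed(trace, statistics.fmean))
    print(windowed(trace, min))
```

Feeding each filter's output into a thresholding test instead of the raw RTT is one way to compare them against the unfiltered case.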