CSE596 Problem Set 6 Answer Key Fall 2015

(1) Prove that the following decision problem Crash is undecidable, by reduction from the Acceptance Problem or Halting Problem:

Instance: A Turing machine M' with a single one-way-infinite tape, as in the text;

Question: Is there an input x such that M'(x) ‘crashes’ by attempting to move its head off the left end of the tape?

Also answer whether the language of this decision problem is computably enumerable or not and justify your answer. (12 + 3 + 3 = 18 pts.)

Answer: We reduce the Acceptance Problem to the “Crash” problem. Given an instance (M, x) of the former, let us first convert M to M' (which never crashes) as above, to eliminate any question of crashing elsewhere in the program. Since the construction in part (a) is effective, this can be done by a computable code-transforming function. Now build a TM M'' (with one-way-infinite tape) that on any input y behaves like so: Erase y, write the hard-wired x, and simulate M'(x). If and when it accepts, move to the left end and execute a final “suicide arc” (q_sui, ∧/∧, L, q_sui). This bit of code editing is also computable given M and x. If M accepts x, then M''(y) crashes for every input y; if not, M''(y) never reaches the suicide arc and so crashes for no y. Hence the resulting computable function f reduces AP_TM not only to “Crash” but also to a problem “Must-Crash” that would be defined by asking whether M(x) crashes for all inputs x.

The language of the “Crash” problem is c.e. Write a Java program P that given any instance M'' first converts it to M' just like in (a). Then P “dovetails” the countably-many computations M'(λ), M'(0), M'(1), M'(00), . . . . We want P to accept M'' if and only if at least one of those computations would crash. The conversion to M' makes this easy for P to detect by the key observation that after the initial shift-over routine, M' henceforth sees ∧ when and only when M'' would have crashed. Thus P accepts if and when one of its dovetails sees ∧ (except for the initial shift-over phase). Then L(P) = { M'' : (∃y ∈ Σ*) M''(y) crashes }, which is the language of the “Crash” problem.
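A minimal Python sketch of the dovetailing idea, under stated assumptions. It is not the program P itself: the hypothetical simulator `run_steps` detects a crash directly as a left move from cell 0, rather than converting M'' to M' and watching for ∧. The transition-table format, the blank symbol `_`, the optional `max_stage` budget, and all function names are illustrative choices, not anything fixed by the text.

```python
from itertools import count, islice, product

BLANK = "_"  # assumed blank symbol; the text does not fix one


def run_steps(delta, start, x, max_steps):
    """Simulate a one-way-infinite-tape TM on x for at most max_steps steps.

    delta maps (state, symbol) -> (new_state, written_symbol, "L" or "R").
    Returns "crash" on a left move from cell 0, "halt" when no transition
    applies, and None if the machine is still running after max_steps.
    """
    tape = list(x) or [BLANK]
    state, head = start, 0
    for _ in range(max_steps):
        key = (state, tape[head])
        if key not in delta:
            return "halt"
        state, symbol, move = delta[key]
        tape[head] = symbol
        if move == "L":
            if head == 0:
                return "crash"
            head -= 1
        else:
            head += 1
            if head == len(tape):
                tape.append(BLANK)
    return None


def strings(alphabet="01"):
    """Enumerate λ, 0, 1, 00, 01, ... by length, then lexicographically."""
    for n in count(0):
        for tup in product(alphabet, repeat=n):
            yield "".join(tup)


def can_crash(delta, start, max_stage=None):
    """Dovetail: stage s runs the first s inputs for s steps each.

    Returns an input that crashes if one is found. Without max_stage this
    loops forever on machines that never crash, as a semi-decision must.
    """
    for s in count(1):
        if max_stage is not None and s > max_stage:
            return None  # budget exhausted; this proves nothing
        for x in islice(strings(), s):
            if run_steps(delta, start, x, s) == "crash":
                return x


# Hypothetical example machines: the first crashes exactly on inputs that
# start with 1; the second only ever moves right and so never crashes.
crasher = {("q", "0"): ("q", "0", "R"),
           ("q", "1"): ("q", "1", "L"),
           ("q", BLANK): ("q", BLANK, "R")}
safe = {("q", c): ("q", c, "R") for c in "01" + BLANK}
```

Each stage restarts its simulations from scratch, which is wasteful but keeps the sketch short; every pair (input, step bound) is still reached eventually, which is all that dovetailing needs.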

[The general significance is that for any program behavior X that is detectable, the languages of the “Does-X” and “Can-Do-X” versions of the problem are c.e. The “Does-X” version has instance ⟨M, x⟩, while “Can-Do-X” has instance “just M” and asks, does there exist an input x such that M(x) does . . . The language of “Must-Do-X,” however, is in general not c.e. It is a reasonable study exercise to go over your notes of the proof in lecture that TOT and ALL_TM are neither c.e. nor co-c.e. Substitute other behaviors X in place of “halting” and “accepting,” respectively, and then almost the same proofs will show that the resulting “Must-Do-X” problem’s language is neither c.e. nor co-c.e.]

(2) Text, “Homework 3.28” on page 71 or 72. (9+9 = 18 pts.)

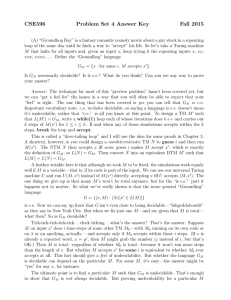

Answer: L1 is r.e. We can imagine an NTM N that given any e tries to “guess” 20 different strings x_1, . . . , x_20, and for each i, 1 ≤ i ≤ 20, simulates M_e(x_i) in series (or in parallel). If and when all 20 simulations end with M_e’s acceptance, N accepts e. Thus L(N) = L1, and since all NTMs can be converted to equivalent DTMs, L1 is r.e. (Or if you don’t like nondeterminism, you can program a dovetail loop of the kind shown in class that interleaves simulations of the computations M_e(λ), M_e(0), M_e(1), M_e(00), . . . , and keeps an integer counter j. Every time some simulation finds an accepting ID of M_e, j is incremented, and if and when j == 20, M accepts e. Then M is a DTM and L(M) = L1.)
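The counting dovetail can be sketched in Python as follows. This assumes, as the answer suggests but the problem image would have to confirm, that L1 is the set of e for which M_e accepts at least 20 strings. Machines are modeled as Python generator functions that yield once per step and return True to accept; that modeling, the `max_stage` budget, and every name here are hypothetical. Instead of incrementing j, the sketch recounts at each stage how many of the first s inputs are accepted within s steps, which reaches 20 exactly when the incrementing version would.

```python
from itertools import count, islice, product


def strings(alphabet="01"):
    """Enumerate λ, 0, 1, 00, 01, ... by length, then lexicographically."""
    for n in count(0):
        for tup in product(alphabet, repeat=n):
            yield "".join(tup)


def accepts_within(machine, x, n):
    """True iff machine(x) finishes with an accept within n resumptions."""
    run = machine(x)
    for _ in range(n):
        try:
            next(run)
        except StopIteration as stop:
            return stop.value is True
    return False


def accepts_at_least(machine, k=20, max_stage=None):
    """Semi-decide whether the machine accepts at least k distinct strings."""
    for s in count(1):
        if max_stage is not None and s > max_stage:
            return None  # budget exhausted; this proves nothing
        # j counts the distinct inputs found accepting so far.
        j = sum(accepts_within(machine, x, s) for x in islice(strings(), s))
        if j >= k:
            return True


def even_length(x):
    """Example machine: accepts exactly the strings of even length."""
    for _ in x:
        yield
    return len(x) % 2 == 0


def only_lambda(x):
    """Example machine: accepts only the empty string."""
    yield
    return x == ""
```

On `only_lambda` the unbounded loop would run forever, which is the expected behavior of a semi-decision procedure on a non-member.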

L2 is not r.e. To show D ≤_m L2, make a reduction h such that for all e, h(e) is the code of a TM M''' that varies the M'' in the second half of the answer to problem (3) by immediately accepting y if y is one of the first 20 strings (rather than only if y = λ). Then for all e,

e ∈ D ⟹ M_e(e)↑ ⟹ (∀n)[M_e(e) doesn’t halt within n steps]
      ⟹ M''' rejects all y > 20 ⟹ h(e) ∈ L2;

e ∉ D ⟹ M_e(e)↓ ⟹ (∃n)[M_e(e) does halt within n steps]
      ⟹ M''' accepts all strings of length at least that n
      ⟹ |L(M''')| > 20 ⟹ h(e) ∉ L2.

The reduction function h itself is a simple code insertion of the call to M_e(e) and is just as easily computable as all the above reductions. Thus D ≤_m L2 via h, and since D is not c.e., L2 is not c.e. either.

(3) Show that the language L1 = { e : L(M_e) = { λ } } is neither c.e. nor co-c.e. (36 pts. total, for 72 on the set)

Answer: It suffices to show K ≤_m L1 and D ≤_m L1. The notation for the reduction is snappier if you use the “halts” rather than “accepts” definitions of K and D (which I signal implicitly by dropping the “TM” subscripts here), namely K = { e : M_e(e)↓ } and D = { e : M_e(e)↑ }. But it really doesn’t matter if you use the original “K_TM” and “D_TM”; I’m mainly varying because the down-arrow for “halts” looks nicer than writing the word “accepts” in LaTeX math-mode. To define mappings f and g for the two respective reductions, let any instance e of K-or-D be given. Then:

Take f(e) to be (the code of) a TM M' that on any input y first simulates M_e(e). If and when that halts, M' then accepts y if-and-only-if y = λ. This code translation f is clearly computable: only the last instruction of M' about “. . . iff y = λ” is different from reductions given already in lecture (plagiarize!). For correctness, observe that for all e:

e ∈ K ⟹ M_e(e)↓ ⟹ L(M') = { λ } ⟹ f(e) ∈ L1;

e ∉ K ⟹ M_e(e)↑ ⟹ L(M') = ∅ ⟹ f(e) ∉ L1.
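To make the shape of f concrete, here is a small Python sketch. It is an analogy, not the actual code translation on Turing machine descriptions: machines are modeled as generator functions that yield once per step and return True to accept, and the reduction is a closure that builds M' from M_e and e. The names `f`, `m_prime`, `accepts_within`, and the two example machines are all hypothetical.

```python
def accepts_within(machine, x, n):
    """True iff machine(x) finishes with an accept within n resumptions."""
    run = machine(x)
    for _ in range(n):
        try:
            next(run)
        except StopIteration as stop:
            return stop.value is True
    return False


def f(machine_e, e):
    """Build M': on input y, first run M_e(e); if it halts, accept iff y is λ."""
    def m_prime(y):
        yield from machine_e(e)  # never returns if M_e(e) runs forever
        return y == ""           # reached only when M_e(e) has halted
    return m_prime


def halts_quickly(x):
    """Example M_e that halts (by rejecting) after one step."""
    yield
    return False


def loops(x):
    """Example M_e that never halts."""
    while True:
        yield
```

With `halts_quickly` standing in for an M_e that halts on e, the built machine accepts λ and nothing else; with `loops` it accepts nothing, matching the two cases of the correctness argument. A bounded run can only illustrate the second case, of course, not prove it.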

Now take g(e) to be (the code of) a TM M'' that on any input y immediately accepts if y = λ. If not, then M''(y) computes n = |y| and simulates M_e(e) for n steps of M_e. If the simulation halts within n steps, M'' accepts y; if not, then M'' rejects y. This g is a variation on the reduction from D to ALL_TM shown in class, and it is likewise computable. For correctness,

e ∈ D ⟹ M_e(e)↑ ⟹ (∀n)[M_e(e) doesn’t halt within n steps]
      ⟹ M'' rejects all y ≠ λ ⟹ L(M'') = { λ } ⟹ g(e) ∈ L1;

e ∉ D ⟹ M_e(e)↓ ⟹ (∃n)[M_e(e) does halt within n steps]
      ⟹ M'' accepts all strings of length at least that n ⟹ L(M'') ≠ { λ } ⟹ g(e) ∉ L1.
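The step-bounded simulation inside g can be sketched in Python. As an assumption of this sketch, M_e is modeled as a generator function that yields once per step, and "halts within n steps" means the generator finishes after at most n yields. Since M'' always halts, it is modeled as an ordinary function returning True (accept) or False (reject). All names are hypothetical.

```python
def halts_within(machine, x, n):
    """True iff machine(x) finishes after at most n yielded steps."""
    run = machine(x)
    for _ in range(n + 1):
        try:
            next(run)
        except StopIteration:
            return True
    return False


def g(machine_e, e):
    """Build M'': accept λ outright; otherwise clock M_e(e) for |y| steps."""
    def m_double_prime(y):
        if y == "":
            return True
        return halts_within(machine_e, e, len(y))
    return m_double_prime


def halts_after_three(x):
    """Example M_e that halts after exactly three steps."""
    for _ in range(3):
        yield
    return True


def loops(x):
    """Example M_e that never halts."""
    while True:
        yield
```

With `halts_after_three`, the built machine accepts λ and every string of length at least 3, so its language is not { λ }; with `loops` it accepts λ only. The point of clocking by |y| is that M'' is total in both cases, which is what lets this reduction start from D rather than K.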

That’s all you need to construct and verify.

(4EC) For 12 points extra credit, let R(x, y, z) be a three-place decidable predicate and let the language A be defined by: for all x, x ∈ A ⟺ (∀y)(∃z)R(x, y, z). Show that A ≤_m PT.

Answer: We need to define f(x) = e(M) such that M is total iff x ∈ A. The key is to realize that this setup makes x embedded in the code of M (as technically has been happening to “x” with all our reductions from AP_TM), y the input to M, and z the object of a searching loop within M. Define M on input y to behave as follows:

    input y;
    for (string z = lambda, 0, 1, 00, 01, ...) {   // try every string z in canonical order
        if (R(x,y,z)) { halt; }                    // x is hard-wired into the code of M
    }

Then M(y)↓ ⟺ (∃z)R(x, y, z), so M is total iff this right-hand side holds for all y (and the given x), which by the definition of A is iff x ∈ A. So A ≤_m PT via f.
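Here is a Python sketch of the same search loop, with x hard-wired by a closure. It assumes machines are modeled as generator functions that yield once per step, and the sample predicate `R_prefix` is a made-up decidable predicate chosen only so that the two cases are easy to see; `make_M`, `halts_within`, and `strings` are likewise hypothetical names.

```python
from itertools import count, product


def strings(alphabet="01"):
    """Enumerate λ, 0, 1, 00, 01, ... by length, then lexicographically."""
    for n in count(0):
        for tup in product(alphabet, repeat=n):
            yield "".join(tup)


def make_M(R, x):
    """Build M for a fixed x: on input y, search for a z with R(x, y, z)."""
    def M(y):
        for z in strings():
            if R(x, y, z):
                return z  # halt as soon as a witness z is found
            yield         # one more unsuccessful step of the search
    return M


def halts_within(machine, y, n):
    """True iff machine(y) finishes after at most n yielded steps."""
    run = machine(y)
    for _ in range(n + 1):
        try:
            next(run)
        except StopIteration:
            return True
    return False


def R_prefix(x, y, z):
    """Sample decidable predicate: z equals y, and y starts with x."""
    return z == y and y.startswith(x)
```

For this sample R, the machine built for x = λ finds z = y on every input and so is total, while the machine built for x = 0 searches forever on y = 1. So with this R, λ ∈ A and 0 ∉ A, and totality of M tracks membership of x in A as the argument requires.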

Bonus Answers

Note also that PT has a definition of this “∀∃” logical form: e is the code of a total TM ⟺ (∀y)(∃t)T(e, y, t). Define Π2 to be the class of all languages having definitions of this logical form. Then what we’ve shown is:

• PT ∈ Π2;

• For every A ∈ Π2, A ≤_m PT.

Namely, we have shown that PT is complete for Π2 under computable many-one reductions. The ALL_TM language is similarly complete for Π2.

The complements A' of languages A ∈ Π2 have definitions of the form x ∈ A' ⟺ (∃y)(∀z)R'(x, y, z) where R' is a decidable predicate; in particular, R' is the negation of R from before. Those languages form the class Σ2.

It turns out that Σ2 ≠ Π2 by a diagonalization proof similar to how we showed RE ≠ co-RE. The similarity is reinforced by the theorem that says a language L is c.e. iff there is a decidable predicate R(x, y) such that for all x, x ∈ L ⟺ (∃y)R(x, y). This makes RE also called Σ1 because an ∃ quantifier is like the Boolean version of a sum ∑, and ∀ is a kind of product ∏. The text defines these classes and proves the separation via Turing reductions in section 3.9, which I’ve skipped, but it can all be done without them, and defining a diagonal language D2 ∈ Π2 \ Σ2 is left as a challenge.

The upshot is that PT, being Π2-complete, does not belong to Σ2. The language L1, however, does belong to Σ2: for all e,

e ∈ L1 ⟺ (∃c)(∀x)(∀d) [T(e, λ, c) ∧ (x ≠ λ ⟹ ¬T(e, x, d))].

Here we are re-interpreting the T-predicate to say that M_e accepts λ within c steps rather than halts. Actually, what Stephen Kleene really did with his T-predicate is make c a whole computation (as a valid sequence of IDs) and define a separate function U(c) = 1 to say c accepts, U(c) = 0 for rejects. The extra U is cumbersome, so we’ll eventually settle on making T(e, x, c) mean that c is an accepting computation of M_e on input x. No matter: either way, L1 is in Σ2, and you can in fact put it in Σ2 ∩ Π2. This is enough finally to explain why trying to show PT ≤_m L1 cannot work: anything that many-one reduces to a language in Σ2 is itself in Σ2, so PT doesn’t reduce to L1.