Large-scale Incremental Processing Using Distributed Transactions and Notifications

advertisement

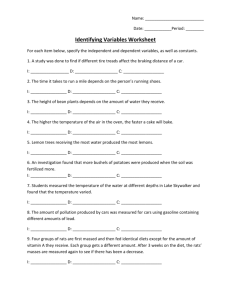

Written by Daniel Peng and Frank Dabek
Presented by Michael Over

Abstract
- Task: updating an index of the web as documents are crawled.
- This requires continuously transforming a large repository of existing documents as new documents arrive.
- It is one example of a class of data processing tasks that transform a large repository of data via small, independent mutations.
- These tasks fall into a gap between the capabilities of existing infrastructure:
  - Databases do not meet the storage or throughput requirements of these tasks.
  - MapReduce must create large batches to be efficient.
- Percolator is a system for incrementally processing updates to a large data set.
- It was deployed to create the Google web search index.
- It now processes the same number of documents per day while reducing the average age of documents in Google search results by 50%.

Outline
- Introduction
- Design
- Bigtable
- Transactions
- Timestamps
- Notifications
- Evaluation
- Related Work
- Conclusion and Future Work

Task
- Task: build an index of the web that can be used to answer search queries.
- Approach: crawl every page on the web and process the pages while maintaining a set of invariants on the index, for example:
  - Same content: if the same content is crawled under multiple URLs, only the URL with the highest PageRank appears in the index.
  - Link inversion: the anchor text of each outgoing link is attached to the page the link points to.
- This could be done using a series of MapReduce operations.

Challenge
- Challenge: update the index after recrawling some small portion of the web.
- Could we run MapReduce over just the recrawled pages? No: there are links between the new pages and the rest of the web.
- Could we run MapReduce over the entire repository? Yes; this is how Google's web search index was produced prior to this work. What are some effects of this?
- What about a DBMS?
- What about distributed storage systems like Bigtable?
- A DBMS cannot handle the sheer volume of data.
- Bigtable is scalable but does not provide tools to maintain data invariants in the face of concurrent updates.
- Ideally, the data processing system for maintaining the web search index would be optimized for incremental processing and able to maintain invariants.

Percolator
- Provides the user with random access to a multi-petabyte repository.
- Processes documents individually, using many concurrent threads.
- Provides ACID-compliant transactions.
- Observers: invoked when a user-specified column changes.
- Designed specifically for incremental processing.
- Google uses Percolator to prepare web pages for inclusion in the live web search index.
- Documents can now be processed as they are crawled, reducing the average document processing latency by a factor of 100 and the average age of a document appearing in a search result by nearly 50%.

Design
- Two main abstractions for performing incremental processing at large scale:
  - ACID-compliant transactions over a random-access repository.
  - Observers: a way to organize an incremental computation.
- A Percolator system consists of three binaries that run on every machine in the cluster:
  - a Percolator worker;
  - a Bigtable tablet server;
  - a GFS chunkserver.

Bigtable Overview
- Percolator is built on top of the Bigtable distributed storage system.
- Bigtable presents a multi-dimensional sorted map whose keys are (row, column, timestamp) tuples.
- It provides lookup and update operations on each row, and Bigtable row transactions enable atomic read-modify-write operations on individual rows.
- It runs reliably on a large number of unreliable machines, handling petabytes of data.
- A running Bigtable consists of a collection of tablet servers, each responsible for serving several tablets (contiguous regions of the key space).
- Percolator maintains the gist of Bigtable's interface; Percolator's API closely resembles Bigtable's.
- Challenge: provide the additional features of multirow transactions and the observer framework on top of it.

Transactions
- Percolator provides cross-row, cross-table transactions with ACID snapshot-isolation semantics.
- It stores multiple versions of each data item using Bigtable's timestamp dimension, which is what makes snapshot isolation possible.
- Snapshot isolation protects against write-write conflicts: if two concurrent transactions write to the same cell, at most one of them commits.
- Because Percolator is a client library on top of Bigtable rather than controlling access to storage itself, it must explicitly maintain its own locks, which it keeps in special columns of the same Bigtable.
- Example of a transaction involving bank accounts, in which Bob transfers $4 to Joe with start timestamp 7 and commit timestamp 8:

| Key | Timestamp | Bal:Data | Bal:Lock | Bal:Write |
|-----|-----------|----------|----------|-----------|
| Bob | 8 | | | data @ 7 |
| Bob | 7 | $6 | I am Primary | |
| Bob | 6 | | | data @ 5 |
| Bob | 5 | $10 | | |
| Joe | 8 | | | data @ 7 |
| Joe | 7 | $6 | Primary @ Bob.bal | |
| Joe | 6 | | | data @ 5 |
| Joe | 5 | $2 | | |

- The table overlays the steps of the protocol. The prewrite places the new balances and the locks at timestamp 7; the lock on Bob.bal is the primary, and the lock on Joe.bal points to it. The commit adds write records at timestamp 8 that point to the data written at 7 (in the protocol itself, committing also erases the locks). A reader finds a cell's latest committed value by following its newest write record at or below the reader's start timestamp.

Timestamps
- A server called the timestamp oracle hands out timestamps in strictly increasing order.
- Every transaction requires contacting the timestamp oracle twice (once for its start timestamp and once for its commit timestamp), so this server must scale well.
- For failure recovery, the timestamp oracle needs to write the highest allocated timestamp to disk before responding to a request. For efficiency, it batches writes and "pre-allocates" a whole block of timestamps.
- How many timestamps do you think Google's timestamp oracle serves per second from one machine?
- Answer: 2,000,000 (2 million) per second.

Notifications
- Transactions let the user mutate the table while maintaining invariants, but users also need a way to trigger and run the transactions.
- In Percolator, the user writes "observers" to be triggered by changes to the table; Percolator invokes an observer's function after data is written to one of the columns that observer registered.
- Percolator applications are structured as a series of observers.
- Notifications are similar to database triggers or events in active databases, but unlike database triggers they cannot be used to maintain data invariants.
- Percolator needs to efficiently find dirty cells whose observers need to be run. To do so, it maintains a special "notify" Bigtable column containing an entry for each dirty cell.

Evaluation
- Percolator lies somewhere in the performance space between MapReduce and DBMSs.
- Converting from MapReduce: Percolator was built to create Google's large "base" index, a task previously done by MapReduce.
- With MapReduce, each day several billion documents were crawled and fed, along with the repository of existing documents, through a series of 100 MapReduces, resulting in an index that answered user queries.
- Using MapReduce, each document spent 2-3 days being indexed before it could be returned as a search result.
- Percolator crawls the same number of documents, but each document is sent through Percolator as it is crawled.
- The immediate advantage is a reduction in latency: the median document moves through Percolator over 100x faster than with MapReduce.
- Percolator freed Google from needing to process the entire repository each time documents were indexed, so the repository could grow; it is now 3x its previous size.
- Percolator is easier to operate because there are fewer moving parts: just tablet servers, Percolator workers, and chunkservers.
- Question: how do you think Percolator performs in comparison to MapReduce if:
  - 1% of the repository needs to be updated per hour?
  - 30% of the repository needs to be updated per hour?
  - 60% of the repository needs to be updated per hour?
  - 90% of the repository needs to be updated per hour?
- Comparing Percolator with "raw" Bigtable: Percolator introduces overhead relative to Bigtable, including a factor-of-four overhead on writes due to four round trips:
  1. Percolator -> timestamp server -> Percolator (start timestamp)
  2. Percolator -> tentative write -> Percolator
  3. Percolator -> timestamp server -> Percolator (commit timestamp)
  4. Percolator -> commit -> Percolator

Related Work
- Batch processing systems like MapReduce are well suited for efficiently transforming or analyzing an entire repository.
- DBMSs satisfy many of the requirements of an incremental system but do not scale like Percolator.
- Bigtable is a scalable, distributed, and fault-tolerant storage system, but is not designed to be a data transformation system.
- CloudTPS builds an ACID-compliant datastore on top of distributed storage but is intended to be a backend for a website, with a stronger focus on latency and partition tolerance than Percolator.

Conclusion and Future Work
- Percolator has been deployed to produce Google's web search index since April 2010.
- Its goal was to reduce the latency of indexing a single document with an acceptable increase in resource usage.
- Scaling the architecture costs a very significant 30-fold overhead compared to traditional database architectures.
- How much of this is fundamental to distributed storage systems, and how much could be optimized away?
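The Task and Challenge slides argue that the index invariants can be maintained by batch passes over the whole repository, but not by processing only the recrawled pages. The Python sketch below illustrates both points on a toy scale. It is not Google's pipeline: the record format and the `build_index` function are hypothetical, and each loop stands in for what would be a MapReduce over the entire repository.

```python
from collections import defaultdict

# Hypothetical record format (not from the paper):
# (url, content, pagerank, [(target_url, anchor_text), ...])
Page = tuple[str, str, float, list[tuple[str, str]]]


def build_index(pages: list[Page]) -> dict[str, list[str]]:
    """Recompute the whole toy index from scratch, as a batch pipeline would."""
    # Invariant 1 (same content): keep the highest-PageRank URL for each content.
    best: dict[str, tuple[str, float]] = {}
    for url, content, rank, _ in pages:
        if content not in best or rank > best[content][1]:
            best[content] = (url, rank)
    canonical_of = {url: best[content][0] for url, content, _, _ in pages}

    # Invariant 2 (link inversion): attach each link's anchor text to its
    # target, redirecting links that point at a duplicate to the canonical URL.
    anchors: dict[str, list[str]] = defaultdict(list)
    for url, _, _, links in pages:
        if canonical_of[url] != url:
            continue  # skip duplicates so their anchors are not counted twice
        for target, text in links:
            if target in canonical_of:  # ignore links to pages never crawled
                anchors[canonical_of[target]].append(text)

    return {url: sorted(anchors[url]) for url in sorted(set(canonical_of.values()))}
```

Adding a single recrawled page such as `("c.com", "new", 0.3, [("b.com", "sea")])` changes the entry for `b.com` even though `b.com` itself was not recrawled, which is why running MapReduce over just the recrawled pages is not enough.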
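To make the data model from the Bigtable Overview slide concrete, here is a toy, single-process stand-in for a sorted map keyed by (row, column, timestamp). The class and method names are hypothetical and are not Bigtable's API. Storing negated timestamps is one way to keep the newest version of each cell first in sort order, so that a read "as of" a given timestamp, which Percolator's snapshot reads depend on, becomes a single binary search.

```python
import bisect


class VersionedTable:
    """Toy sorted map keyed by (row, column, timestamp); newest version first."""

    def __init__(self):
        self._keys: list = []   # sorted (row, column, -timestamp) tuples
        self._values: dict = {}

    def write(self, row: str, column: str, ts: int, value) -> None:
        key = (row, column, -ts)  # negate so newer versions sort earlier
        if key not in self._values:
            bisect.insort(self._keys, key)
        self._values[key] = value

    def read(self, row: str, column: str, max_ts: int):
        """Return (ts, value) for the newest version with ts <= max_ts, or None."""
        i = bisect.bisect_left(self._keys, (row, column, -max_ts))
        if i < len(self._keys) and self._keys[i][:2] == (row, column):
            key = self._keys[i]
            return -key[2], self._values[key]
        return None
```

For example, after writing Bob's balance as 10 at timestamp 5 and 6 at timestamp 7, `read("Bob", "bal:data", 6)` returns the older version `(5, 10)`, while a read at timestamp 9 returns `(7, 6)`.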
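The bank-account example on the Transactions slides follows a two-phase protocol: prewrite data and locks at the start timestamp (one lock is the primary), then commit by adding write records at the commit timestamp, primary first. The sketch below is a single-threaded, in-memory approximation of that protocol, assuming a dict in place of Bigtable and omitting Bigtable row transactions, backoff, and lock cleanup after client failures. All class and method names are hypothetical. Running it reproduces the table's timestamps: the initial balances are written at 5 and committed at 6, and the transfer is prewritten at 7 and committed at 8.

```python
import itertools

INF = float("inf")


class Oracle:
    """Strictly increasing timestamps (in memory only in this sketch)."""

    def __init__(self, start: int = 1):
        self._counter = itertools.count(start)

    def get_timestamp(self) -> int:
        return next(self._counter)


class Store:
    """(row, col, kind) -> {timestamp: value}; kind is "data", "lock" or "write"."""

    def __init__(self):
        self.cells: dict = {}

    def put(self, row, col, kind, ts, value):
        self.cells.setdefault((row, col, kind), {})[ts] = value

    def erase(self, row, col, kind, ts):
        self.cells.get((row, col, kind), {}).pop(ts, None)

    def latest(self, row, col, kind, lo, hi):
        """Newest (ts, value) with lo <= ts <= hi, or None."""
        versions = self.cells.get((row, col, kind), {})
        hits = [ts for ts in versions if lo <= ts <= hi]
        return (max(hits), versions[max(hits)]) if hits else None


class Transaction:
    def __init__(self, store: Store, oracle: Oracle):
        self.store, self.oracle = store, oracle
        self.start_ts = oracle.get_timestamp()  # first oracle round trip
        self.writes = []  # buffered (row, col, value); writes[0] is the primary

    def set(self, row, col, value):
        self.writes.append((row, col, value))

    def get(self, row, col):
        # A lock at or below start_ts may belong to a commit in flight. The paper
        # backs off and may clean it up; this sketch simply raises.
        if self.store.latest(row, col, "lock", 0, self.start_ts):
            raise RuntimeError(f"pending lock on {row}.{col}")
        write = self.store.latest(row, col, "write", 0, self.start_ts)
        if write is None:
            return None
        data_ts = write[1]  # a write record points at the data's start timestamp
        return self.store.latest(row, col, "data", data_ts, data_ts)[1]

    def _prewrite(self, row, col, value, primary) -> bool:
        s = self.store
        # Conflict: a write committed after our snapshot, or a lock at any timestamp.
        if s.latest(row, col, "write", self.start_ts, INF) or s.latest(row, col, "lock", 0, INF):
            return False
        s.put(row, col, "data", self.start_ts, value)
        s.put(row, col, "lock", self.start_ts, primary)  # each lock names the primary
        return True

    def commit(self) -> bool:
        if not self.writes:
            return True
        primary = self.writes[0][:2]
        done = []
        for row, col, value in self.writes:  # phase 1: prewrite, primary first
            if not self._prewrite(row, col, value, primary):
                for r, c in done:  # simplification: undo now instead of lazy cleanup
                    self.store.erase(r, c, "lock", self.start_ts)
                    self.store.erase(r, c, "data", self.start_ts)
                return False
            done.append((row, col))
        commit_ts = self.oracle.get_timestamp()  # second oracle round trip
        for row, col, _ in self.writes:  # phase 2: the primary's record is the commit point
            self.store.put(row, col, "write", commit_ts, self.start_ts)
            self.store.erase(row, col, "lock", self.start_ts)
        return True


if __name__ == "__main__":
    store, oracle = Store(), Oracle(start=5)
    setup = Transaction(store, oracle)  # start_ts 5
    setup.set("Bob", "bal", 10)
    setup.set("Joe", "bal", 2)
    setup.commit()  # commit_ts 6: write records "data @ 5"
    t = Transaction(store, oracle)  # start_ts 7
    t.set("Bob", "bal", t.get("Bob", "bal") - 4)
    t.set("Joe", "bal", t.get("Joe", "bal") + 4)
    print(t.commit(), Transaction(store, oracle).get("Joe", "bal"))  # True 6
```

Because `_prewrite` refuses to proceed when it sees a write record at or after the transaction's start timestamp, two concurrent transactions that write the same balance cannot both commit, which is the write-write protection snapshot isolation provides. A transaction that started before a conflicting commit still reads the values in its own snapshot.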
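The pre-allocation trick from the Timestamps slide can be sketched as follows: the oracle persists only the upper end of each block before handing out any timestamp from it, serves the rest of the block from memory, and after a crash restarts at the persisted high-water mark. In this sketch the `storage` dict stands in for durable storage, and `block_size` is a hypothetical parameter; the slides do not give the block size Google uses.

```python
class TimestampOracle:
    """Sketch of a timestamp oracle that pre-allocates blocks of timestamps."""

    def __init__(self, storage: dict, block_size: int = 10_000):
        self.storage = storage          # stands in for durable storage
        self.block_size = block_size    # hypothetical; not given in the slides
        self.disk_writes = 0
        # On (re)start, resume at the persisted high-water mark: every timestamp
        # handed out before a crash is strictly below it.
        self.next_ts = storage.get("high_water", 0)
        self.limit = self.next_ts

    def get_timestamp(self) -> int:
        if self.next_ts >= self.limit:
            # Persist the end of the next block before serving anything from it.
            self.limit = self.next_ts + self.block_size
            self.storage["high_water"] = self.limit
            self.disk_writes += 1
        ts = self.next_ts
        self.next_ts += 1
        return ts
```

With a block size of 10,000, one durable write covers ten thousand requests, which helps explain how a single machine can serve timestamps at a high rate. The cost is that a restart skips the unused remainder of the current block, which is harmless because timestamps only need to increase strictly.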
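The Notifications slides describe observers that run when a registered column changes, with dirty cells located through a special notify column. The toy sketch below models that flow with a Python set standing in for the notify column. It is a simplification under stated assumptions: there are no transactions, a single worker processes dirty cells in sorted order, and the names `ObservedTable`, `register` and `run_worker` are hypothetical. The two chained observers illustrate an application structured as a series of observers.

```python
from typing import Callable


class ObservedTable:
    """Toy table whose writes to observed columns leave a "notify" entry behind."""

    def __init__(self):
        self.data: dict = {}        # (row, column) -> value
        self.notify: set = set()    # stands in for the special notify column
        self.observers: dict = {}   # column -> list of observer functions

    def register(self, column: str, fn: Callable) -> None:
        self.observers.setdefault(column, []).append(fn)

    def write(self, row: str, column: str, value) -> None:
        self.data[(row, column)] = value
        if column in self.observers:  # only observed columns become dirty
            self.notify.add((row, column))

    def run_worker(self, max_steps: int = 1000) -> int:
        """Repeatedly pick a dirty cell, clear its entry, and run its observers."""
        steps = 0
        while self.notify and steps < max_steps:
            cell = min(self.notify)  # deterministic order for this sketch
            self.notify.discard(cell)
            row, column = cell
            for fn in self.observers[column]:
                fn(self, row)  # an observer may write and dirty more cells
            steps += 1
        return steps


if __name__ == "__main__":
    t = ObservedTable()
    # Hypothetical two-stage pipeline: raw -> parsed -> indexed.
    t.register("raw", lambda tbl, r: tbl.write(r, "parsed", tbl.data[(r, "raw")].lower()))
    t.register("parsed", lambda tbl, r: tbl.write(r, "indexed", tbl.data[(r, "parsed")].split()))
    t.write("doc1", "raw", "Hello Percolator")
    print(t.run_worker(), t.data[("doc1", "indexed")])  # 2 ['hello', 'percolator']
```

Writing to an unobserved column such as `indexed` leaves no notify entry, so the chain stops there.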