Conjugate gradient — orthogonality and conjugacy relations Mike Peardon — Hilary Term 2016

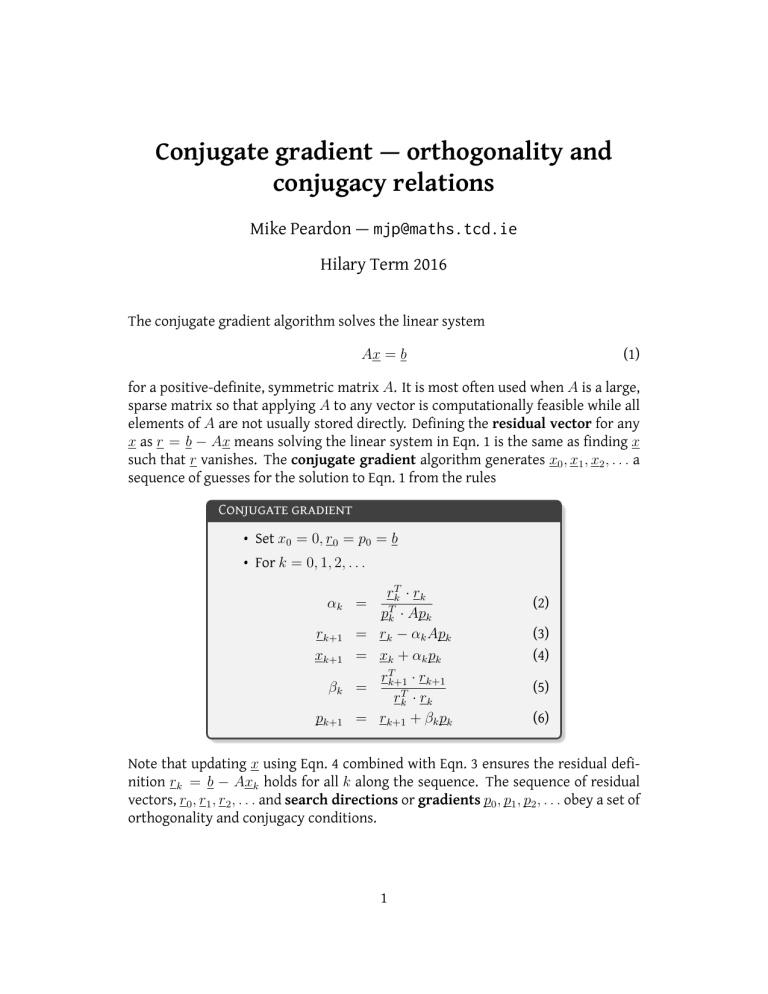

Mike Peardon (mjp@maths.tcd.ie)

The conjugate gradient algorithm solves the linear system

$$Ax = b \tag{1}$$

for a symmetric, positive-definite matrix $A$. It is most often used when $A$ is a large, sparse matrix, so that applying $A$ to any vector is computationally feasible even though the elements of $A$ are not usually stored directly. Defining the residual vector for any $x$ as $r = b - Ax$ means that solving the linear system in Eqn. 1 is the same as finding $x$ such that $r$ vanishes. The conjugate gradient algorithm generates a sequence of guesses $x_0, x_1, x_2, \dots$ for the solution to Eqn. 1 from the rules

Conjugate gradient

• Set $x_0 = 0$, $r_0 = p_0 = b$
• For $k = 0, 1, 2, \dots$

$$\alpha_k = \frac{r_k^T \cdot r_k}{p_k^T \cdot A p_k} \tag{2}$$

$$r_{k+1} = r_k - \alpha_k A p_k \tag{3}$$

$$x_{k+1} = x_k + \alpha_k p_k \tag{4}$$

$$\beta_k = \frac{r_{k+1}^T \cdot r_{k+1}}{r_k^T \cdot r_k} \tag{5}$$

$$p_{k+1} = r_{k+1} + \beta_k p_k \tag{6}$$

Note that updating $x$ using Eqn. 4 combined with Eqn. 3 ensures the residual definition $r_k = b - A x_k$ holds for all $k$ along the sequence. The sequence of residual vectors $r_0, r_1, r_2, \dots$ and search directions or gradients $p_0, p_1, p_2, \dots$ obey a set of orthogonality and conjugacy conditions.

Orthogonality and conjugacy conditions

$$r_i^T \cdot r_j = 0 \quad (i \neq j) \tag{7}$$

$$p_i^T \cdot r_j = 0 \quad (i < j) \tag{8}$$

$$p_i^T \cdot A p_j = 0 \quad (i \neq j) \tag{9}$$

For vectors in an $n$-dimensional space, Eqn. 7 can only hold for a set of at most $n$ non-zero vectors. This implies that the sequence must stop with a vanishing residual by the time it reaches $k = n$ at the latest, so that $r_k = 0$ for some $k \le n$. This means in turn that the corresponding $x_k$ solves the problem given by Eqn. 1.

How are these conditions maintained?

Seeing that the method solves the linear system of Eqn. 1 means making sure these conditions are obeyed until the sequence ends. The orthogonality and conjugacy conditions are maintained by the choices of the coefficients $\alpha$ and $\beta$ defined in Eqns. 2 and 5, and this can be demonstrated by induction. The three conditions are shown in turn in the following sections.
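The recursion in Eqns. 2 to 6 and the conditions in Eqns. 7 to 9 can be checked numerically on a small example. The following is a minimal sketch in plain Python, not a reference implementation: the helper names `dot`, `matvec`, `conjugate_gradient` and `max_violation`, and the 3×3 test matrix, are assumptions made for illustration and do not come from the notes. The matrix is stored densely only to keep the sketch dependency-free.

```python
def dot(u, v):
    """Euclidean dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def matvec(A, v):
    """Dense matrix-vector product (illustrative helper, not from the notes)."""
    return [dot(row, v) for row in A]


def conjugate_gradient(A, b, steps):
    """Run `steps` iterations of Eqns. 2-6, keeping every r_k and p_k."""
    x = [0.0] * len(b)          # x_0 = 0
    r = list(b)                 # r_0 = b
    p = list(b)                 # p_0 = b
    residuals, directions = [r], [p]
    for _ in range(steps):
        Ap = matvec(A, p)
        rr = dot(r, r)
        alpha = rr / dot(p, Ap)                                  # Eqn. 2
        r_new = [ri - alpha * api for ri, api in zip(r, Ap)]     # Eqn. 3
        x = [xi + alpha * pi for xi, pi in zip(x, p)]            # Eqn. 4
        beta = dot(r_new, r_new) / rr                            # Eqn. 5
        p = [ri + beta * pi for ri, pi in zip(r_new, p)]         # Eqn. 6
        r = r_new
        residuals.append(r)
        directions.append(p)
    return x, residuals, directions


def max_violation(A, residuals, directions):
    """Largest |dot product| among those that Eqns. 7-9 say should vanish."""
    worst = 0.0
    m = len(residuals)
    for i in range(m):
        for j in range(m):
            if i != j:
                worst = max(worst, abs(dot(residuals[i], residuals[j])))   # Eqn. 7
                worst = max(worst, abs(dot(directions[i],
                                           matvec(A, directions[j]))))     # Eqn. 9
            if i < j:
                worst = max(worst, abs(dot(directions[i], residuals[j])))  # Eqn. 8
    return worst


# Assumed test problem: a small symmetric, positive-definite matrix.
A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]

x, residuals, directions = conjugate_gradient(A, b, steps=3)
print("x =", x)
print("largest violation of Eqns. 7-9:", max_violation(A, residuals, directions))
```

For this example the exact solution is $x = (2/9, 1/9, 13/9)$, so with $n = 3$ the three steps should reproduce it up to round-off, and the reported violation should be at round-off level.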
For an induction proof, it is useful to establish first that the conditions hold between the vectors at the start of the sequence. Consider first

$$\begin{aligned} r_0^T \cdot r_1 &= r_0^T \cdot (r_0 - \alpha_0 A p_0) \\ &= r_0^T \cdot r_0 - \frac{r_0^T \cdot r_0}{p_0^T \cdot A p_0}\, r_0^T \cdot A p_0 \\ &= 0, \end{aligned} \tag{10}$$

where the first step of the recursions in Eqns. 2 and 3 is used, along with the initial choice $p_0 = r_0$. This choice also means that $p_0^T \cdot r_1 = 0$. Now

$$\begin{aligned} p_1^T \cdot A p_0 &= \frac{1}{\alpha_0} (r_0^T - r_1^T) \cdot (r_1 + \beta_0 p_0) \\ &= \frac{1}{\alpha_0} \left( \beta_0\, r_0^T \cdot p_0 - r_1^T \cdot r_1 \right) \\ &= \frac{1}{\alpha_0} \left( \frac{r_1^T \cdot r_1}{r_0^T \cdot r_0}\, r_0^T \cdot r_0 - r_1^T \cdot r_1 \right) \\ &= 0, \end{aligned} \tag{11}$$

using Eqns. 3, 5 and 6, the symmetry of $A$, and $p_0 = r_0$ again. This establishes that the orthogonality and conjugacy relations hold at least for $(i, j) = (0, 1)$. Notice that the initial choice for $p_0$ plays an important role.

The $r^T \cdot r$ orthogonality condition

Eqn. 7 holds thanks to the recursion for $r$ (Eqn. 3), which uses $\alpha$ to make each new residual orthogonal to the previous one. The orthogonality relation can be shown by induction. Assume Eqns. 7, 8 and 9 hold for all indices $i, j \le k$ for some value of $k$. Now consider entry $k + 1$ in the sequence for $r$. Using Eqn. 3 gives

$$r_i^T \cdot r_{k+1} = r_i^T \cdot r_k - \alpha_k\, r_i^T \cdot A p_k.$$

Take the case $i < k$ and $i \neq 0$ and use Eqn. 6 in the form $r_i = p_i - \beta_{i-1} p_{i-1}$ to see

$$r_i^T \cdot r_{k+1} = r_i^T \cdot r_k - \alpha_k \left( p_i^T \cdot A p_k - \beta_{i-1}\, p_{i-1}^T \cdot A p_k \right),$$

and all the dot-products on the right-hand side vanish thanks to the induction assumption. The special case $i = 0$ (with $k > 0$) gives

$$r_0^T \cdot r_{k+1} = r_0^T \cdot r_k - \alpha_k\, r_0^T \cdot A p_k,$$

but remember $r_0 = p_0$, so $r_0^T \cdot A p_k = p_0^T \cdot A p_k$ and again all these dot-products give zero. That leaves the case $i = k$, where

$$r_k^T \cdot r_{k+1} = r_k^T \cdot r_k - \alpha_k\, r_k^T \cdot A p_k.$$

Now set $\alpha_k$ to take the value required to enforce the orthogonality rule, which is

$$\alpha_k = \frac{r_k^T \cdot r_k}{r_k^T \cdot A p_k} = \frac{r_k^T \cdot r_k}{p_k^T \cdot A p_k},$$

where Eqn. 6 and the conjugacy condition $p_{k-1}^T \cdot A p_k = 0$ have been used to substitute $p$ for $r$ in the denominator. This is a useful algorithmic trick to ensure the denominator is non-zero when $p_k$ is non-zero, due to the positive-definite nature of $A$. Note this is the definition of $\alpha_k$ given in Eqn. 2. Since Eqns. 10 and 11 show that the orthogonality and conjugacy relations hold for $k = 1$, they must hold for all values of $k < n$ once the induction steps for the other two conditions, shown next, are also in place.

The $p^T \cdot Ap$ conjugacy condition

Eqn. 6 gives

$$p_i^T \cdot A p_{k+1} = p_i^T \cdot A r_{k+1} + \beta_k\, p_i^T \cdot A p_k.$$

The recursion for $r$ from Eqn. 3 can be used to see (provided $\alpha_i \neq 0$) that

$$A p_i = \frac{1}{\alpha_i} (r_i - r_{i+1}),$$

which, using the symmetry of $A$ to write $p_i^T \cdot A r_{k+1} = (A p_i)^T \cdot r_{k+1}$, gives

$$p_i^T \cdot A p_{k+1} = \frac{1}{\alpha_i} \left( r_i^T \cdot r_{k+1} - r_{i+1}^T \cdot r_{k+1} \right) + \beta_k\, p_i^T \cdot A p_k,$$

and all these dot-products are zero when $i < k$. What about when $i = k$? This case gives

$$p_k^T \cdot A p_{k+1} = -\frac{1}{\alpha_k}\, r_{k+1}^T \cdot r_{k+1} + \beta_k\, p_k^T \cdot A p_k.$$

Now finding the value for $\beta_k$ needed to explicitly set this term to zero yields

$$\beta_k = \frac{1}{\alpha_k} \frac{r_{k+1}^T \cdot r_{k+1}}{p_k^T \cdot A p_k} = \frac{p_k^T \cdot A p_k}{r_k^T \cdot r_k} \, \frac{r_{k+1}^T \cdot r_{k+1}}{p_k^T \cdot A p_k} = \frac{r_{k+1}^T \cdot r_{k+1}}{r_k^T \cdot r_k},$$

which is the expression given in Eqn. 5.

The $p^T \cdot r$ orthogonality condition

Eqn. 3 gives

$$p_i^T \cdot r_{k+1} = p_i^T \cdot r_k - \alpha_k\, p_i^T \cdot A p_k.$$

For $i < k$ both dot-products vanish by the induction assumption. Consider the case $i = k$, which gives

$$\begin{aligned} p_k^T \cdot r_{k+1} &= p_k^T \cdot r_k - \alpha_k\, p_k^T \cdot A p_k \\ &= p_k^T \cdot r_k - r_k^T \cdot r_k \\ &= r_k^T \cdot r_k + \beta_{k-1}\, p_{k-1}^T \cdot r_k - r_k^T \cdot r_k \\ &= 0. \end{aligned}$$

The second line uses the definition of $\alpha_k$ in Eqn. 2, the third uses Eqn. 6 to expand $p_k$, and the remaining term $p_{k-1}^T \cdot r_k$ vanishes by Eqn. 8.

Summary

The conjugate gradient algorithm solves a sparse, symmetric, positive-definite linear system by iterative generation of a sequence of vectors. The sequence stops after at most $n$ iterations in exact arithmetic. This result relies on a set of orthogonality and conjugacy conditions between entries in the sequence of residuals and search directions. In this note, these conditions were shown by induction. In practice, floating-point round-off error spoils these exact results, and in applications $n$ is sufficiently large to make generating all $n$ terms in the sequence impractical. Fortunately, the method shows exponential convergence and it is usually sufficient to stop the sequence early, when the norm of the residual vector falls below some reasonable tolerance.
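The early-stopping strategy described in the summary can be sketched as follows. This is an illustrative example under stated assumptions rather than a definitive implementation: the names `apply_A` and `cg_solve`, the parameters `tol` and `max_iter`, and the tridiagonal test matrix (4 on the diagonal, -1 beside it) are all hypothetical choices made here. The matrix is applied as a function and never stored, in the spirit of the sparse setting the notes describe.

```python
import math


def dot(u, v):
    """Euclidean dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def apply_A(v):
    """Matrix-free product with an assumed tridiagonal SPD matrix:
    4 on the diagonal and -1 on the two neighbouring diagonals."""
    n = len(v)
    out = []
    for i in range(n):
        s = 4.0 * v[i]
        if i > 0:
            s -= v[i - 1]
        if i < n - 1:
            s -= v[i + 1]
        out.append(s)
    return out


def cg_solve(apply_A, b, tol=1e-10, max_iter=None):
    """Conjugate gradient (Eqns. 2-6), stopped once |r_k| < tol.

    `tol` and `max_iter` are illustrative parameters; max_iter defaults to n,
    the exact-arithmetic bound on the length of the sequence.
    Returns the approximate solution and the number of iterations used.
    """
    n = len(b)
    max_iter = n if max_iter is None else max_iter
    x = [0.0] * n
    r = list(b)
    p = list(b)
    rr = dot(r, r)
    for k in range(max_iter):
        if math.sqrt(rr) < tol:            # residual small enough: stop early
            return x, k
        Ap = apply_A(p)
        alpha = rr / dot(p, Ap)                              # Eqn. 2
        x = [xi + alpha * pi for xi, pi in zip(x, p)]        # Eqn. 4
        r = [ri - alpha * api for ri, api in zip(r, Ap)]     # Eqn. 3
        rr_new = dot(r, r)
        beta = rr_new / rr                                   # Eqn. 5
        p = [ri + beta * pi for ri, pi in zip(r, p)]         # Eqn. 6
        rr = rr_new
    return x, max_iter


b = [1.0] * 100
x, iterations = cg_solve(apply_A, b)

# Recompute the residual from its definition r = b - Ax as an independent check.
true_residual = [bi - yi for bi, yi in zip(b, apply_A(x))]
print("iterations used:", iterations)
print("norm of b - Ax:", math.sqrt(dot(true_residual, true_residual)))
```

Because the assumed matrix is well conditioned, the loop should stop well before the 100 iterations that the exact-arithmetic bound allows. Recomputing $b - Ax$ at the end is a cheap guard against the recursively updated residual drifting away from the true one through round-off.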