Study Guide

advertisement

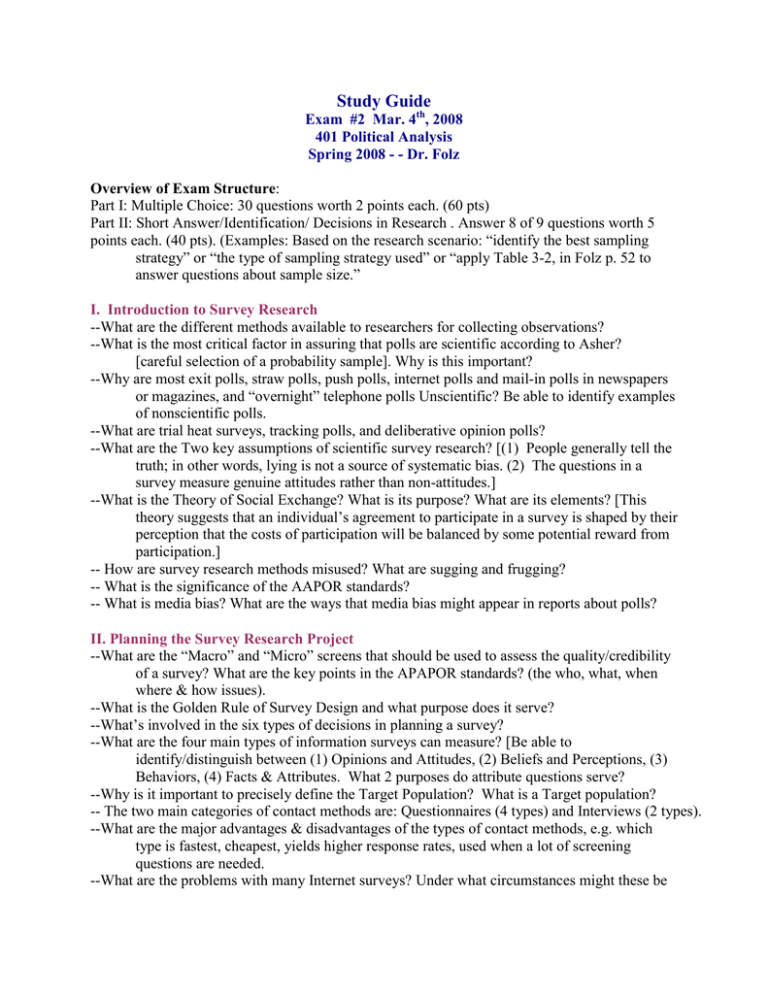

Study Guide: Exam #2, Mar. 4th, 2008
401 Political Analysis, Spring 2008, Dr. Folz

Overview of Exam Structure:
Part I: Multiple Choice: 30 questions worth 2 points each (60 pts).
Part II: Short Answer/Identification/Decisions in Research: answer 8 of 9 questions worth 5 points each (40 pts). (Examples, based on a research scenario: “identify the best sampling strategy,” “identify the type of sampling strategy used,” or “apply Table 3-2 in Folz, p. 52, to answer questions about sample size.”)

I. Introduction to Survey Research
--What are the different methods available to researchers for collecting observations?
--What is the most critical factor in assuring that polls are scientific, according to Asher? [Careful selection of a probability sample.] Why is this important?
--Why are most exit polls, straw polls, push polls, Internet polls, mail-in polls in newspapers or magazines, and “overnight” telephone polls unscientific? Be able to identify examples of nonscientific polls.
--What are trial heat surveys, tracking polls, and deliberative opinion polls?
--What are the two key assumptions of scientific survey research? [(1) People generally tell the truth; in other words, lying is not a source of systematic bias. (2) The questions in a survey measure genuine attitudes rather than non-attitudes.]
--What is the Theory of Social Exchange? What is its purpose? What are its elements? [This theory suggests that an individual’s agreement to participate in a survey is shaped by the perception that the costs of participation will be balanced by some potential reward from participating.]
--How are survey research methods misused? What are sugging and frugging?
--What is the significance of the AAPOR standards?
--What is media bias? In what ways might media bias appear in reports about polls?

II. Planning the Survey Research Project
--What are the “Macro” and “Micro” screens that should be used to assess the quality/credibility of a survey?
--What are the key points in the AAPOR standards (the who, what, when, where, and how issues)?
--What is the Golden Rule of Survey Design, and what purpose does it serve?
--What’s involved in the six types of decisions in planning a survey?
--What are the four main types of information surveys can measure? [Be able to identify and distinguish between (1) opinions and attitudes, (2) beliefs and perceptions, (3) behaviors, and (4) facts and attributes.] What two purposes do attribute questions serve?
--What is a target population? Why is it important to define the target population precisely?
--The two main categories of contact methods are questionnaires (4 types) and interviews (2 types).
--What are the major advantages and disadvantages of each type of contact method, e.g., which type is fastest, which is cheapest, which yields higher response rates, and which is used when many screening questions are needed?
--What are the problems with many Internet surveys? Under what circumstances might they be an appropriate contact strategy?
--What is a non-attitude? What problems do non-attitudes present for survey researchers? Be able to recognize examples.
--What are the three main strategies for dealing with non-attitudes? (1) Use screen or filter questions; (2) explain the issue in neutral, unbiased terms; (3) ask questions only about issues or matters that people in the population you want to contact know something about.

III. Sampling Methods
--Be able to determine whether a poll is accurate, based on the information provided about it.
--Why do researchers engage in sampling? What is sampling? [The science of selecting cases in a way that enables the researcher to make accurate inferences about a larger population.]
--What are the four main steps in the scientific sampling process?
  1. Decide who or what to sample (identify the target population).
  2. Decide the sample size. Who decides this?
  3. Determine whether an accurate list of the target population exists from which to compile a sampling frame.
  4. Select a probability sampling design, and then implement it.
--What is a probability sample?
--Be able to distinguish a probability sample from a non-probability sample. (Examples of non-probability samples include availability sampling, snowball sampling, quota sampling, or any poll that has “self-selected” respondents or selection bias.)
--Sampling terminology: know the meaning of target population, sampling frame, probability sample, variable, sample statistic, population parameter, and sampling error.
--What is a confidence level? (How confident we can be that the population parameter lies within a specified range, the confidence interval, around the sample statistic; usually 95%.)
--What is a confidence interval (margin of error)? (The range of values within which a population parameter is estimated to lie: the sample statistic plus or minus the margin of error (MoE) of the sample’s estimate.)
--What determines the exact confidence level and confidence interval for a probability sample? (Be able to use these concepts to judge the accuracy of a sample’s estimates.)
--Be able to explain why a large sample with a small margin of error can still yield an inaccurate estimate of a population parameter.
--What is a sampling design?
--Probability sampling designs: be able to recognize, distinguish, describe, and apply each type: (1) simple random sampling, (2) stratified random sampling, (3) systematic sampling, (4) cluster sampling, and (5) multi-stage sampling.
--How large does a probability sample need to be?
--What is the logic of probability theory? [Multiple samples of a given size will be distributed around the actual population parameter in a known way: their estimates will resemble a normal, or bell-shaped, curve. More sample estimates will cluster in the middle of the bell, and fewer samples will produce estimates (sample statistics) near the edges, or tails, of the curve. Probability sampling theory assumes the use of random selection of cases at some point in the selection process.]
--What is the central limit theorem?
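The sampling designs, confidence-interval concepts, and logic of probability theory in this section can be made concrete with a short simulation. What follows is a minimal Python sketch under stated assumptions, not a method from Folz or Asher: the 10,000-case `FRAME` (with its districts, blocks, and a 40% “yes” opinion) is invented for illustration, and every function name is hypothetical. The margin-of-error and sample-size functions use the standard textbook formulas for a proportion estimated from a simple random sample at a 95% confidence level (z = 1.96), with an optional finite population correction.

```python
import math
import random
from collections import defaultdict

Z_95 = 1.96  # z-score for a 95% confidence level

# Hypothetical population/sampling frame (not from the study guide): 10,000
# residents with a district (strata), a block of 100 (clusters), and a yes/no
# opinion held by exactly 40% of them (the population parameter).
FRAME = [{"id": i, "district": i % 4, "block": i // 100, "yes": i % 10 < 4}
         for i in range(10_000)]


def group_by(frame, key):
    groups = defaultdict(list)
    for case in frame:
        groups[case[key]].append(case)
    return groups


def simple_random_sample(frame, n, rng):
    # Every case has an equal chance of selection, unrelated to its traits.
    return rng.sample(frame, n)


def systematic_sample(frame, n, rng):
    # Random start within the first interval k, then every k-th case.
    k = len(frame) // n
    return frame[rng.randrange(k)::k][:n]


def stratified_sample(frame, n, key, rng):
    # Proportionate allocation: a simple random sample within each stratum.
    # round() means uneven strata can make the total differ slightly from n.
    sample = []
    for members in group_by(frame, key).values():
        sample.extend(rng.sample(members, round(n * len(members) / len(frame))))
    return sample


def cluster_sample(frame, n_clusters, key, rng):
    # Randomly choose whole clusters and keep every case in them.
    clusters = group_by(frame, key)
    return [c for k in rng.sample(sorted(clusters), n_clusters) for c in clusters[k]]


def multistage_sample(frame, n_clusters, per_cluster, key, rng):
    # Stage 1: random clusters; stage 2: simple random sample inside each one.
    clusters = group_by(frame, key)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [c for k in chosen for c in rng.sample(clusters[k], per_cluster)]


def margin_of_error(n, p=0.5, population=None, z=Z_95):
    # Half-width of the confidence interval for a proportion from a simple
    # random sample; p=0.5 is the most conservative (largest) case. The finite
    # population correction matters only when n is a large share of N.
    moe = z * math.sqrt(p * (1 - p) / n)
    if population is not None:
        moe *= math.sqrt((population - n) / (population - 1))
    return moe


def required_sample_size(moe, p=0.5, population=None, z=Z_95):
    # Inverse of margin_of_error: smallest n that achieves the target margin.
    n0 = z ** 2 * p * (1 - p) / moe ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


def coverage(frame, n, trials, rng):
    # Logic of probability theory: repeated random samples scatter around the
    # parameter in a bell shape, so about 95% land within the 95% margin.
    p = sum(c["yes"] for c in frame) / len(frame)
    moe = margin_of_error(n, p, population=len(frame))
    hits = 0
    for _ in range(trials):
        estimate = sum(c["yes"] for c in simple_random_sample(frame, n, rng)) / n
        hits += abs(estimate - p) <= moe
    return hits / trials
```

With these formulas, `required_sample_size(0.05)` returns 385 and `required_sample_size(0.05, population=1000)` returns 278; `margin_of_error(100)` is about 0.098 and `margin_of_error(400)` about 0.049, so quadrupling the sample halves the margin, while `margin_of_error(1000)` stays near 0.031 whether the population is one million or 300 million. These are generic textbook formulas and may differ slightly from the figures in Folz’s tables. They also capture only random sampling error: a biased frame or self-selected respondents can make even a large sample with a small margin inaccurate.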
--What is the nature of the relationship between sampling error and sample size? [Smaller sampling error (more precise estimates) requires larger samples. As long as the population is relatively large, the sampling fraction (the proportion of the population the sample represents) has little impact on precision, because it is the absolute size of the sample that determines precision.]
--What is meant by random selection? [Random selection is a process for choosing cases from the sampling frame that gives every member of the population an equal, or at least a known, chance of being selected, independent of any other event in the selection process. In random selection, none of the characteristics of the cases are related to the selection process. Random selection is what allows us to employ the logic of probability sampling theory.]
--Know how to use Table 3.1 in Folz.

IV. Survey Design and Composing Good Questions
--What is Folz’s maxim on survey design?
--What are the hallmarks of a well-designed survey?
--Know the five guidelines for designing a survey: (1) list and rank-order information objectives; (2) decide what types of information each objective needs to measure; (3) decide on question structure; (4) avoid the types of bias related to instrumentation and question content (be able to recognize problems with these in a question); (5) avoid priming effects of question order.
--What is a question battery?
--What is the purpose of a pre-test?
--Why should survey researchers lead with their best, most interesting question?
--Why should survey researchers usually avoid using open-ended questions?
--What do closed-ended question formats with ordered choices provide that statements do not?
--What is “instrumentation” bias? (Example: acquiescence response set bias; be able to recognize it.)
--Types of “question content” bias: be able to recognize social desirability bias, a double-barreled question, a leading or loaded question, and an unbalanced response set.
--Why should survey researchers usually avoid naming public officials or using loaded terms or concepts in questions?
--What is a dichotomous response set?
--Be able to identify the level of measurement of a survey question.
--What invites “pencil-whipping” in self-administered surveys?
--What is a screen or branching question? Why are they used?
--Why is it important to incorporate an explicit time frame in a question (when appropriate)?
--What are “mush words”? Be able to recognize examples of this problem.
--What are question batteries? What should begin each one?
--What is a “false assumption” in a question, and how can it result in an invalid measure?
--What does it mean to have a response set that is mutually exclusive and exhaustive?
--Why is it important to avoid priming effects in question placement?
--Why is it important for a survey researcher to have a “critical attitude” toward any proposed survey question?
--Can you recognize and identify defects in proposed survey questions?
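A response set is mutually exclusive when no answer fits more than one category, and exhaustive when every possible answer fits some category. For numeric brackets such as age or income, both properties can be checked mechanically. A minimal Python sketch, assuming integer brackets with inclusive bounds; the function name and the example age brackets are hypothetical and not taken from Folz:

```python
def check_response_set(categories, low, high):
    """Return (overlaps, gaps) for integer answer brackets over [low, high].

    categories: list of (label, min, max) with inclusive bounds; max=None marks
    an open-ended top bracket such as "65 or older".
    overlaps: values covered by more than one bracket (not mutually exclusive).
    gaps: values covered by no bracket (not exhaustive).
    """
    counts = {value: 0 for value in range(low, high + 1)}
    for _label, lo, hi in categories:
        top = high if hi is None else min(hi, high)
        for value in range(max(lo, low), top + 1):
            counts[value] += 1
    overlaps = [v for v, c in counts.items() if c > 1]
    gaps = [v for v, c in counts.items() if c == 0]
    return overlaps, gaps


# A flawed age question: 25 fits two brackets, and ages 36-39 fit none.
flawed = [("18-25", 18, 25), ("25-35", 25, 35), ("40-64", 40, 64), ("65 or older", 65, None)]
print(check_response_set(flawed, 18, 99))  # ([25], [36, 37, 38, 39])

fixed = [("18-24", 18, 24), ("25-34", 25, 34), ("35-49", 35, 49),
         ("50-64", 50, 64), ("65 or older", 65, None)]
print(check_response_set(fixed, 18, 99))  # ([], [])
```

The same principle applies to any closed-ended question: each respondent should find exactly one category that fits.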