ECE 568 Introduction to Parallel Processing, Spring 2009

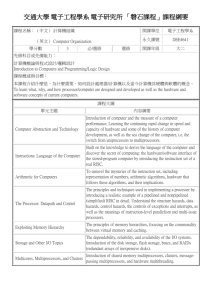

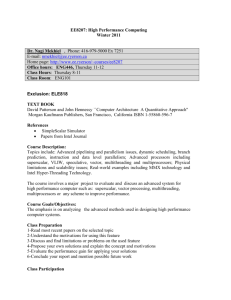

Department of Electrical and Computer Engineering

Course Objective

This course is intended to introduce graduate students to modern computer architecture design, with an emphasis on speedup and parallel processing techniques. The course is a comprehensive study of parallel processing techniques and their applications, from basic concepts to state-of-the-art parallel computer systems.

The topics are covered as follows. First, the need for parallel processing and the limitations of uniprocessors are introduced. Next, we give a substantial overview of the basic concepts of parallel processing and their impact on computer architecture. This includes major parallel processing paradigms such as pipelining, superscalar, superpipeline, vector processing, multithreading, multi-core, multiprocessing, multicomputing, and massively parallel processing. We then address the architectural support for parallel processing, such as:

(1) parallel memory organization and design
(2) cache design
(3) cache coherence strategies
(4) shared-memory vs. distributed-memory systems
(5) symmetric multiprocessors (SMPs), distributed-shared memory (DSM) multiprocessors, multicomputers, and distributed systems
(6) processor design (RISC, superscalar, superpipeline, multithreading, multi-core processors, and speculative computing designs)
(7) communication subsystem
(8) computer networks, routing algorithms and protocols, flow control, reliable communication
(9) emerging technologies (such as optical computing, optical interconnection networks, optical memories)
(10) parallel algorithm design, parallel programming, and software requirements
(11) case studies of several commercial parallel computers from the TOP500 list of supercomputers (www.top500.org)

This semester, we will be using the University of Arizona supercomputers for our programming needs.
We will be designing parallel programming applications using MPI and possibly OpenMP on the two machines (ACE and Marin) provided by the University Computing Center. I will provide further details on how to create accounts on these supercomputers. More information about these computers can be found at www.hpc.arizona.edu, under computing systems.

• Class time and place: Tuesday and Thursday, 12:30 - 1:45, in Harvill 415
• Instructor: Dr. Ahmed Louri
• Office Hours: Tuesday and Thursday from 3:30 to 4:30 pm. If you cannot make the office hours, you can schedule an appointment.
• Means of Contact: I can be reached by phone during office hours at (520) 621-2318, or by email at louri@ece.arizona.edu.

Relevant Textbooks

There is no single required textbook for this class. We will be using several books, since no one book covers all the topics we will be addressing. I strongly encourage you to get hold of some of these books, since they are the leading books in the field.

• The first book is “Advanced Computer Architecture: Parallelism, Scalability, Programmability”, by Kai Hwang, McGraw Hill, 1993. This book is a bit old but covers many of the fundamentals of the field.
• The second book is “Parallel Computer Architecture: A Hardware/Software Approach”, by David Culler and J. P. Singh, Morgan Kaufmann, 1999.
• The third book is “Scalable Parallel Computing: Technology, Architecture, Programming”, by Kai Hwang and Zhiwei Xu, McGraw Hill, 1998.
• The fourth book is “Principles and Practices of Interconnection Networks”, by William J. Dally and Brian Towles, Morgan Kaufmann, 2003.
• The fifth book is “Introduction to Parallel Computing”, second edition, by Ananth Grama, Gupta, Karypis, and Kumar, Pearson Addison Wesley, 2003.
• Additionally, there are several other books on the subject that can be considered optional. Come see me for more details.
• There will also be a lot of handout material from recent technical meetings and journals related to the field. These will be made available throughout the semester.
• Prerequisites: Knowledge of computer organization at the undergraduate level is sufficient.

Grading Policy: Grading will be based on two exams, a term paper, homework, and class participation. The grade breakdown is as follows: the exams account for 50% of the total grade, the term paper for 20%, homework for 25%, and active class participation for 5%.

Tentative Course Outline (the topics may not be covered in the order provided below)

1. Introduction to Modern Computer Architectures
   (a) Structure and Organization of a Typical Uniprocessor Architecture
   (b) Major Limitations of Uniprocessor Systems
   (c) Need for Parallel Processing
   (d) Parallel Computer Structures
   (e) Parallel Classification Schemes
   (f) Performance Metrics for Parallel Computers
2. Memory Subsystem
   (a) Memory Hierarchy for Parallel Systems
   (b) Virtual Memory Organization
   (c) Cache Memories for Parallel Processing
   (d) Cache Coherence Schemes (snooping, directory-based, and hybrids)
   (e) Shared-memory Organization
   (f) Shared-memory vs. Private-memory for Parallel Processing
3. Processor Design
   (a) Pipelining and Superpipelining
   (b) Superpipeline Superscalar Design
   (c) Multithreading
   (d) Multi-core Design
4. Communication Subsystem
   (a) Basic Communication Performance Criteria
   (b) Bus-based Communications
   (c) Static Network Topologies and Routing
   (d) Dynamic Network Topologies and Routing
   (e) Reconfigurable Networks
   (f) Multistage Interconnection Networks
   (g) Design Trade-offs in Communication Networks
   (h) Networks-on-Chip
   (i) Optical Interconnects
5. Multiprocessors: Bus-based SMPs
   (a) Snoop-based Multiprocessor Design
   (b) Snoopy Cache Coherence Design
   (c) Interconnects for SMPs
   (d) Case Study
6. Multiprocessors: Directory-based Multiprocessors
   (a) Principles of MIMD Processing Using Distributed-shared Memory
   (b) Interconnects for DSMs
   (c) Scalability Issues
   (d) Case Study
7. Multicomputers: Distributed-memory Systems
   (a) Principles and Design Issues
   (b) Clusters of Workstations (COWs), Clusters of PCs
8. Parallel Program Design and Parallel Programming
   (a) Parallel Program Design Principles
   (b) Parallel Programming Languages: MPI and OpenMP
9. Emerging Technologies
   (a) The Role of Optics for Parallel Computing
   (b) The Role of Wireless Communication for Parallel Computing
10. Future Directions in Computer Architecture
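
As a rough illustration of the kind of performance metric listed under item 1(f) of the outline, the sketch below computes speedup and efficiency under Amdahl's law, a standard model for how the serial part of a program limits the benefit of adding processors. It is not taken from the course materials: the function names `amdahl_speedup` and `efficiency` and the 90% parallel fraction are assumptions chosen only for illustration.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Speedup predicted by Amdahl's law for a fixed-size problem.

    parallel_fraction is the share of the single-processor run time that
    can be parallelized (between 0 and 1); the rest runs serially.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # Serial time is unchanged; parallel time is divided among processors.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


def efficiency(parallel_fraction: float, processors: int) -> float:
    """Speedup per processor (1.0 would mean perfect scaling)."""
    return amdahl_speedup(parallel_fraction, processors) / processors


if __name__ == "__main__":
    # Illustrative assumption: 90% of the work can be parallelized.
    for p in (1, 2, 4, 8, 16, 64):
        s = amdahl_speedup(0.9, p)
        print(f"{p:3d} processors: speedup {s:5.2f}, efficiency {s / p:4.2f}")
```

Under this model the speedup can never exceed 1 / (1 - parallel_fraction), no matter how many processors are used, which is one way to see why both the serial part of a program and the architecture matter.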