Announcements CS 479, section 1: Natural Language Processing 9/6/2012


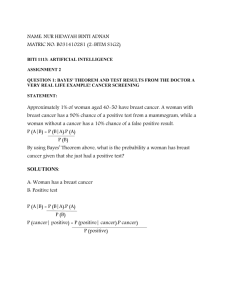

This work is licensed under a Creative Commons Attribution-Share Alike 3.0 Unported License.

Lecture #4: Review of Probability Theory, continued

Announcements
- Feedback on Reading Report #2
- HW #0, Part 1
  - Help session: Thursday, 4pm, CS "GigaPix" room
  - Early day: Friday
  - Due: next Monday
- Today only: TA office hours held in cubicle downstairs

Objectives
- Build a sound framework for representing uncertainty
- Understand the fundamentals of probability theory
- Prepare to use Bayesian networks / directed graphical models extensively!

Back to our Example
- Change the distribution

Before and After
- Before:
- After:

Conditional Independence
- Events $A$ and $B$ are conditionally independent of one another given event $C$ iff $P(A \cap B \mid C) = P(A \mid C)\,P(B \mid C)$
- i.e., knowing $B$ does not affect the probability of $A$ in the presence of knowledge of $C$
- This is equivalent to $P(A \mid C) = P(A \mid B \cap C)$

Another Example

Conditionally Independent, Given C?
- Are A and B conditionally independent, given C?

Bayes' Theorem
- $P(B \mid A) = \dfrac{P(A \mid B)\,P(B)}{P(A)}$
- Bayes, Thomas (1763). "An essay towards solving a problem in the doctrine of chances." Philosophical Transactions of the Royal Society of London 53:370-418.

The Denominator

Computing P(A) from Partition

Bayes' Theorem (2)
- Marginalization!

Example
- Test T for some rare phenomenon G (1 in 100,000)
- In the presence of G, T will have a positive indication 95% of the time.
- In the absence of G, T will have a positive indication 0.005% of the time.
- Suppose T gives a positive indication. What is the probability that G is actually present?
- Take out pencil and paper. You solve!

What's Next?
- Remainder of Probability Theory
  - Random Variables
- Important Ideas from Information Theory
- Bayesian Networks
- Joint (Generative) Models
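To check a pencil-and-paper answer to the test-T example above, here is a minimal Python sketch. It applies Bayes' theorem with the denominator P(T) expanded over the two-event partition {G, not G}. The function name `posterior` and the variable names are illustrative choices, not from the slides. Reading "0.005% of time" as a probability of 0.00005 is an assumption about the intended units.

```python
def posterior(prior, p_pos_given_present, p_pos_given_absent):
    """Return P(G | T) using Bayes' theorem.

    The denominator P(T) is computed by marginalizing over the
    partition {G, not G}:
        P(T) = P(T | G) P(G) + P(T | not G) P(not G)
    """
    p_pos = (p_pos_given_present * prior
             + p_pos_given_absent * (1.0 - prior))
    return p_pos_given_present * prior / p_pos


# Numbers from the example (names are hypothetical, for illustration only).
p_g = 1 / 100_000              # G occurs 1 time in 100,000
p_t_given_g = 0.95             # positive indication 95% of the time when G is present
p_t_given_not_g = 0.005 / 100  # 0.005% read as a probability (assumption)

p_g_given_t = posterior(p_g, p_t_given_g, p_t_given_not_g)
print(f"P(G | T) = {p_g_given_t:.4f}")
```

Under these assumptions the posterior comes out at roughly 0.16. Even after a positive indication, G is still unlikely, because the prior P(G) is so small relative to the false-positive rate.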