Impact Evaluations


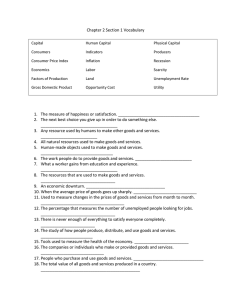

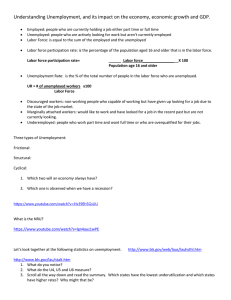

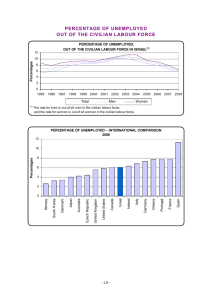

Impact Evaluations and Social Innovation in Europe
Bratislava, 15 December 2011

Joost de Laat (PhD)
Human Development Economics, Europe and Central Asia, The World Bank
Comments: jdelaat@worldbank.org

PROGRESS: 15 December 2011 deadline

Inputs
• Finance: state budget; European Social Fund
• Human resources: Ministry of Labor; public employment offices; private/public training providers

Activities
• Project preparation activities (4 months):
  • Identify 2000 long-term (at least 2 years) unemployed people interested in the training program
  • Identify 10 private/public training providers
  • Design a monitoring database
• Project implementation activities (1 year):
  • Offer training vouchers to 2000 unemployed
  • Training institutes provide 3-month skills trainings to participating unemployed
  • Enter data on training participation and job placement into the database

Outputs
• Project preparation outputs:
  • 2000 long-term unemployed identified
  • Contracts signed with 10 private/public training providers
  • Monitoring database in place
• Project implementation outputs:
  • 2000 vouchers allocated
  • Est. 1400 long-term unemployed accept a voucher and enlist in training
  • Est. 1000 long-term unemployed complete training
  • Est. 600 long-term unemployed find jobs following training
  • Database on participants

Impacts on outcomes
• Improved labor market skills of current long-term unemployed
• Improved employment rates of current long-term unemployed
• Improved wage rates of current long-term unemployed
• Reduced poverty of current long-term unemployed
• Greater education enrolment among children of current long-term unemployed
• Improved health outcomes among children of current long-term unemployed

Outline: Impact Evaluations
• What?
• Who?
• How?
• Ethics?
• Why?

What?
• Isolates causal impact on beneficiary outcomes
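A minimal Python sketch of what "isolating causal impact" can look like under a randomized phase-in (who goes first?). It assumes, hypothetically, that the 2000 long-term unemployed identified in the results chain are split by lottery into a group offered vouchers first and a group offered them later, and that impact is estimated as the simple difference in mean outcomes between the two groups before the second group is phased in. The function names, the seed and the outcome lists are illustrative assumptions rather than part of the original program design, and the outcome values are made up.

```python
import random
from statistics import mean


def randomize_phase_in(eligible_ids, seed):
    """Hypothetical lottery: shuffle the eligible IDs and split them into two
    groups of (nearly) equal size. 'phase_1' is offered the program first;
    'phase_2' is offered it later and serves as the comparison group until then."""
    rng = random.Random(seed)  # fixed seed makes the draw reproducible and auditable
    shuffled = list(eligible_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"phase_1": shuffled[:half], "phase_2": shuffled[half:]}


def difference_in_means(treatment_outcomes, comparison_outcomes):
    """Impact estimate: mean outcome of the group offered the program first
    minus mean outcome of the group not yet offered it."""
    if not treatment_outcomes or not comparison_outcomes:
        raise ValueError("both groups need at least one observation")
    return mean(treatment_outcomes) - mean(comparison_outcomes)


# Hypothetical pool: the 2000 identified long-term unemployed, labeled 0..1999.
groups = randomize_phase_in(range(2000), seed=2011)
print(len(groups["phase_1"]), len(groups["phase_2"]))  # 1000 1000

# Made-up follow-up outcomes for a handful of people (1 = employed, 0 = not).
employed_phase_1 = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1]
employed_phase_2 = [0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
print(difference_in_means(employed_phase_1, employed_phase_2))
```

Because only an estimated 1400 of the 2000 voucher recipients are expected to enlist, comparing everyone offered a voucher with everyone not yet offered estimates the effect of the offer (the intent-to-treat effect) rather than the effect of completing training. A real evaluation would also check that the groups are balanced at baseline and report standard errors.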
• Globally, hundreds of randomized impact evaluations, e.g.:
  • Canadian self-sufficiency welfare program
  • Danish employment programs
  • Turkish employment program
  • Indian remedial education program
  • Kenyan school deworming program
  • Mexican conditional cash transfer program (PROGRESA)
  • United States youth development programs
• Different from, e.g., an evaluation measuring whether social assistance is reaching the poorest households

Who?
• Often coalitions of:
  • Governments
  • International organizations
  • Academics
  • NGOs
  • Private sector companies
• Examples:
  • Poverty Action Lab (academic)
  • Mathematica Policy Research (private)
  • Development Innovation Ventures (USAID)
  • International Initiative for Impact Evaluation (3ie)
  • WB Development IMpact Evaluation (DIME)

Ex 1: Development Innovation Ventures (USAID)

Ex 2: International Initiative for Impact Evaluation (3ie)

Ex 3: MIT’s Poverty Action Lab (J-PAL)

Ex 4: WB Development IMpact Evaluation (DIME)
• Make training materials publicly available, in partnership with other WB groups (e.g. Spanish Impact Evaluation Fund)
• Organize trainings on impact evaluations in partnership with others (e.g. Spanish Impact Evaluation Fund):

| Location | Date | Countries attending | Participants | Project teams |
|---|---|---|---|---|
| Cairo, Egypt | January 13-17, 2008 | 12 | 164 | 17 |
| Managua, Nicaragua | March 3-7, 2008 | 11 | 104 | 15 |
| Madrid, Spain | June 23-27, 2008 | 1 | 184 | 9 |
| Manila, Philippines | December 1-5, 2008 | 6 | 137 | 16 |
| Lima, Peru | January 26-30, 2009 | 9 | 184 | 18 |
| Amman, Jordan | March 8-12, 2009 | 9 | 206 | 17 |
| Beijing, China | July 20-24, 2009 | 1 | 212 | 12 |
| Sarajevo, Bosnia | September 21-25, 2009 | 17 | 115 | 12 |
| Cape Town, South Africa | December 7-11, 2009 | 14 | 106 | 12 |
| Kathmandu, Nepal | February 22-26, 2010 | 6 | 118 | 15 |
| Total | | 86 | 1,530 | 143 |

• Help coordinate the impact evaluation portfolio (Impact Evaluation Clusters):
  • Conditional Cash Transfers
  • Early Childhood Development
  • Education Service Delivery
  • HIV/AIDS Treatment and Prevention
  • Local Development
  • Malaria Control
  • Pay-for-Performance in Health
  • Rural Roads
  • Rural Electrification
  • Urban Upgrading
  • ALMP (active labor market programs) and Youth Employment

How?
Basic elements: how to carry one out?
• Comparison group that is identical at the start of the program
• Prospective: the evaluation needs to be built into the program design from the start
• Randomized evaluations are generally the most rigorous
• Example: randomize the phase-in (who goes first?)
• Qualitative information helps program design and understanding of the 'why'

Ethics?
Implementation considerations
• Most programs cannot reach everyone: randomization gives each potential beneficiary a fair chance of receiving the program (early)
• Review by ethical review boards
Broader considerations
• Not spending resources effectively has important welfare implications
• Is the program very beneficial? If we already know the answer, there is no need for an impact evaluation

Why?
EU2020 targets (selected)
• 75% of 20-64 year-olds to be employed
• Reducing school drop-out rates below 10%
• At least 20 million fewer people in or at risk of poverty and social exclusion

Policy options are many
• Different ALMPs, trainings, pension rules, incentives for men to take on more home care, etc.
• For each policy option, also different intensities, ways of delivery, etc.

Selective use of impact evaluations
• Help provide answers on program effectiveness and design in the EU2020 areas facing some of the greatest and most difficult social challenges

But impact evaluations can also:
• Build public support for proven programs
• Encourage program designers (governments, NGOs, etc.) to focus more on program results
• Provide an incentive for academia to focus its energies on the most pressing social issues, like Roma inclusion!
+ Help encourage social innovation

Thank you for your attention!