IntroSurveyResearch - D-Lab

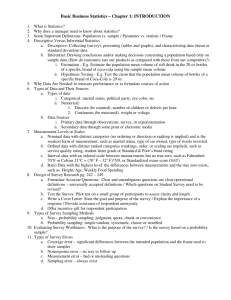

Survey Research Methodology
D-Lab Workshop
Leora Lawton
February 9, 2015

Outline
• Purposes of survey research
• A brief foray into sampling
• Considerations in design
• Phone, Mail, Internet, Face-to-Face
• Incentives

Surveys Defined
• A technique for gathering data and recording them with numeric codes for the purpose of statistical analysis.
• Surveys are quantitative but can have qualitative components.
• Surveys typically involve interviewing individuals by mail, internet, face-to-face, or telephone, but may also involve recording existing data into a quantitative database.
• Surveys require identification of the research goal, sampling design, questionnaire design, analysis plan, and reporting.
• Survey research rests on a long history of scientific research on optimizing data quality by minimizing sources of statistical error. Surveys are part art, but they are definitely science.
• Just because you’ve taken surveys does not mean you know how to design or conduct them.

The Process
1. Identify the research objective and verify that it is best served by a quantitative approach.
2. Create an outline or conceptual model of your objective.
3. Select the design most suited to your objective. Keep in mind that preliminary qualitative work may be required to identify survey items (questions).
4. Identify the population best suited to providing the information and locate a list of potential respondents (the sampling frame).
5. Identify the means best suited to reaching this population.
6. Design the instrument (questionnaire) using the conceptual model as your outline.
7. Review, revise, test (repeat).
8. Collect the data.
9. Prepare the data set for analysis.
10. Analyze and report.

Defining the Objective
• Before you know what to write, you must first figure out what you want to know. Possible aims:
– Quantify, Describe, Correlate, Categorize, Explain, Causality, Determine, Predict, Classify, Track & Trend Changes
Example
• Problem: There is a need to roll out a new public health program.
• Research Objective: How can we design a program that will meet the public health goal and also resonate with the culture of the population we are targeting?

Identify the Relationship: I
• The conceptual model and framework are developed in your dissertation proposal.
• A conceptual model consists of the relationship between what you seek to explain (the dependent variable or variables) and the explanatory factors (the independent variables). The relationship is explained by theory.
• Example: An education program results in more students continuing to grad school.
[Diagram labels: Support, Value; Meaningful Experience; Enjoyment; Enrolling in Grad School]

Identify the Relationship: II
• Next, you begin to identify the detailed factors that will be used in your survey as real questions that people can answer meaningfully, with validity and reliability.
• Example: To build trust, the program uses locally hired and trained staff, which provides familiarity and also adds resources to the environment. The service will be located in the local health clinics people already use. This will lead to more use of the service.
[Diagram labels: Mentoring; Stipends, scholarships; Peer Relationships; Intention, Applications, Enrollment; Classes, Conferences]

From Objective to Design
• Once the objective is clearly identified, you can begin thinking about:
– Sample
• Who can provide you with this data?
• Who is involved in the process or phenomenon?
• Do you need a control or comparison group?
– Design
• Experimental
• Cross-sectional
• Panel
• Longitudinal

From Objectives to Design
• Experimental: refer to Shadish, Cook, and Campbell if using an experimental design.
– Comparing groups (control vs. test)
• Randomly assigned
• Not randomly assigned
– Self-controls: before/after designs or panels (same respondents)
– Historical: comparing results from one sample with a later one (different respondents)

From Objectives to Design
• Descriptive
– Cross-sectional (snapshot)
– Cohorts: the same people over time, or the same sample frame over time (longitudinal, in my book)
– Case control: matching

Sample and Sampling
• Who do you need data from?
• Will they be able to provide meaningful data?
• How can you reach them?
Perhaps the biggest challenge in data collection is identifying a target population that you can, in fact, reach.

Sample
• Universe: the target population
• Sampling frame: the list of people in your universe
• Sample: the selection of potential respondents to be contacted
• Respondents: the units who actually provided data
– Non-respondents: the ones who didn’t
– Item non-response: respondents who didn’t answer specific questions

Sampling
• Once the sample population has been identified, you need:
– Sample size
• See the Sample Size Calculator in the Resources section of the class website.
– Sample frame creation and selection
• Coverage
• Determination of eligible sample frame elements
• Sources for acquiring samples

Sampling
• Sampling strategies
– Probability samples: each element has a known, nonzero (> 0) probability of being selected
• Simple random sampling
• Cluster sampling
• Stratified sampling
• Multistage sampling
– Non-probability samples
• Snowball sampling
• Respondent Driven Sampling (RDS)

Data Collection Methods and Survey Instruments
• Methods and their instruments:
– Face to face: paper, CAPI, handheld
– Telephone: paper, CATI, IVR
– Internet: email, web-based
– Mail: paper, scan-form
– Fax: paper
• Also:
– Mixed mode, e.g., fax, web, mail
– Multimode, e.g., phone-mail-phone

Data Collection Method and Survey Instrument
• Each method has advantages and disadvantages in terms of:
– Ability to reach the correct target
– Budget
– Time
– Error (non-response, sampling error)
– Appropriateness for the data needed

Data Collection Method and Survey Instrument
• Face to face:
– Less commonly used
– Often residential, door to door
• Mall intercepts
• Executive one-on-one in-depth interviews (IDIs)
– Very time-consuming and expensive
– Well-trained interviewers, often matched for ethnicity, race, and/or language
– Residential sampling often uses census blocks
– Paper, CAPI, handheld, or multi-mode

Data Collection Method and Survey Instrument
• Telephone:
– RDD (random-digit dialing) is now challenged by lifestyle and technology changes
– Both business and residential
– Moderate time, but fairly expensive
– Trained interviewers, who may be matched for ethnicity, race, and/or language
– RDD, listed sample, or specialized lists
– CATI, paper

Data Collection Method and Survey Instrument
• Internet:
– Replacing much telephone and mail research, but losing representativeness. Ease of fielding introduces design and measurement error.
– Both business and residential
– Quick data collection, often at very low cost
– No interviewer effects, but there could be browser effects
– Specialized lists or ‘pop-ups’
– Web-based ASP, software, embedded forms

Data Collection Method and Survey Instrument
• Mail:
– Some predict a comeback.
– Both business and residential
– SLOW data collection, moderate cost
– No interviewer effects; no control over the respondent
– Specialized lists, census-based lists
– Paper, Scantron or similar forms
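The sampling slides point to a sample size calculator and list stratified sampling among the probability designs. As a rough sketch of what such a calculator does, the Python below assumes the common normal-approximation formula for estimating a proportion (95% confidence, z = 1.96, worst-case p = 0.5) with a finite population correction; the slides do not say which formula the class calculator uses. It then inflates the completes by an assumed response rate and splits the sample across strata in proportion to stratum size, drawing a simple random sample within each stratum. All function names and the example frame are hypothetical, and proportional allocation is only one of several allocation rules.

```python
import math
import random


def sample_size_for_proportion(margin_of_error, population_size=None, p=0.5, z=1.96):
    """Completed interviews needed to estimate a proportion within +/- margin_of_error.

    Assumed formula: n0 = z^2 * p * (1 - p) / e^2, followed by the finite
    population correction n = n0 / (1 + (n0 - 1) / N) when N is known.
    """
    n = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population_size is not None:
        n = n / (1 + (n - 1) / population_size)
    return math.ceil(n)


def contacts_needed(completes, expected_response_rate):
    """Inflate the target number of completes by an assumed response rate."""
    return math.ceil(completes / expected_response_rate)


def proportional_allocation(strata_sizes, n):
    """Split n across strata in proportion to stratum size (largest-remainder rounding)."""
    total = sum(strata_sizes.values())
    exact = {h: n * size / total for h, size in strata_sizes.items()}
    alloc = {h: math.floor(x) for h, x in exact.items()}
    leftover = n - sum(alloc.values())
    # Hand the remaining units to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda h: exact[h] - alloc[h], reverse=True)
    for h in by_remainder[:leftover]:
        alloc[h] += 1
    return alloc


def stratified_sample(frame, stratum_of, alloc, seed=None):
    """Simple random sample without replacement within each stratum of the frame."""
    rng = random.Random(seed)
    by_stratum = {}
    for unit in frame:
        by_stratum.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for h, k in alloc.items():
        sample.extend(rng.sample(by_stratum[h], k))
    return sample


if __name__ == "__main__":
    # Hypothetical frame: 1,000 members in three strata of sizes 500 / 300 / 200.
    frame = [(i, "A" if i < 500 else "B" if i < 800 else "C") for i in range(1000)]
    completes = sample_size_for_proportion(0.05, population_size=1000)  # 278
    to_contact = contacts_needed(completes, 0.5)  # 556, assuming half respond
    alloc = proportional_allocation({"A": 500, "B": 300, "C": 200}, to_contact)
    print(alloc)  # {'A': 278, 'B': 167, 'C': 111}
    print(len(stratified_sample(frame, lambda u: u[1], alloc, seed=1)))  # 556
```

Without a population size, the same formula gives 385 completes for a ±5-point margin, the familiar figure for large populations; the finite population correction matters only when the sample is a sizable share of the frame.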
Special Populations
• Children
– 13–17:
• Similar to adults, but make the language more conversational and perky. You can use icons for survey rating scales.
– 10–13:
• Short, with pictures. Some abstract thinking is possible, and they have already started taking assessments and similar tests, so they are more familiar with the format. It can look like a survey.
– 6–9:
• Short, with pictures. Children are VERY literal. Limited abstract thinking, variable reading skills. Make it a game.
• If web-based or CASI, add voice-overs.
– 5 years or under:
• Do ethnographic work only.

Response Rate (brief intro)
Your goal in research design is to reduce error as much as possible and raise the response rate as much as possible.
• Response rate (RR) = # responded / (# potential respondents approached – # ineligible)
• Non-response = 1 – RR
• Examples of non-response:
– Item non-response
– Terminations
– Refusals
– Language
– Callbacks

Writing a Questionnaire
• The Groves et al. chapter focuses on the principles of asking questions, that is, the cognitive and psychological processes involved in respondents’ reading, interpreting, and answering questions (also known as ‘items’).
• The Dillman chapter (2) covers specific rules for the items.
• Also, see the Questionnaire Guidelines document (.pdf) on the website for an overview of the process and the most common guidelines.

Writing a Questionnaire
Your objectives are the ‘map’ to the actual questions.
– Which factors in membership organizations identify oligarchy?
– From the literature review:
• Communication to members
• Input from members
• Following by-laws
• Leadership cycles out of office
• Stability of executive office infrastructure
• Crisis

Writing a Questionnaire
• Further break down the concepts until you can identify specific issues around which questions can be written.
– Mentoring
• Did you visit the GSI consultant in D-Lab? (yes, no)
• How often?
• Did you work with any faculty members?
• Describe that experience. (open-end)
– Financial support
• How important was the stipend for you?
• How would you have paid for tuition if you didn’t have this program?
– Classes
• Which courses did you take?
• Overall, how much do you think the coursework contributed to your ability to do research?
– Conferences
• Did you attend an annual meeting?
• Did you present a paper?
• What else did you do at the conference?
– Peer relationships
• Did you make friends in this program?

Cognition
• Read/hear > Interpret > Identify answer > Provide answer
• At any stage, respondent burden can overwhelm the process.
• At any stage, researcher error can put up a stumbling block or hurdle.
• At any stage, the survey instrument, whether interviewer-administered or self-administered, can block the process.

Respondent Burden: Don’t make them work
• Read/hear: the question is too complex, wordy, or sloppy.
• Interpret: grammatically incorrect, outside the flow of thought, backwards logic, faulty logic, unfamiliar terms.
• Identify answer: the information the item asks for is found, remembered, or calculated. Or not.
• Provide answer: correctly or incorrectly; socially desirable answers; satisficing.

Critical Feature
• Once the data are collected, you must be able to answer the questions specified in your objectives. You won’t be able to unless you work through the objectives clearly and map the questions to them.
• If you don’t know what you are going to do with the data once they are collected, you may not have the right question, you haven’t been following your objectives, or you have none identified:
– The question is unnecessary.
– The question is off-target.

Critical Feature
• Examples:
– 1. Question:
• How satisfied are you with the ease of reaching a representative by telephone?
• What’s the objective?
• Possible meanings:
– Time to answer the phone (number of rings)
– Number of ‘buttons’ to push before reaching the correct rep
– Amount of time on hold
– Number of reps before reaching the correct one
– 2. Objective:
• Understand interest in viewing edited reruns
• Determine likelihood of viewing on another cable network
– Question: How likely are you to watch an edited program on another network?
– Will this question work? Why or why not?

Getting on first base
• First impressions are all you have before they toss the mailing, hit the delete button, or hang up.
• Think carefully about how to reach your target respondent. Especially for organizational studies (or businesses), you may need to try a handful of more qualitative approaches to see what works best.

Telephone Introduction: Principles
• Phone call
– Gatekeepers and screeners: probe for the correct respondent.
• Busy > call back (6+ times)
• No answer > call back (6+ times)
– Alternate time of day and day of week (this can be programmed into CATI).
• Soft refusal > ask to reschedule or call at another time. Employ a different interviewer who has a good track record of converting refusals.
• Hard refusal > apologize for the intrusion. Ask for another time or another person. Code as a refusal.
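The callback rules above, combined with the response-rate formula from the Response Rate slide, can be sketched as a small case-management routine. This is a hypothetical illustration, not any CATI system's API: the six calling windows are made up, "call back (6+ times)" is read as up to six callbacks after the first call, and every case passed to `response_rate` is treated as approached, so non-contacts and refusals count against the rate.

```python
from collections import Counter

# Assumption: "call back (6+ times)" is read as up to six callbacks after the first call.
MAX_CALLBACKS = 6

# Hypothetical calling windows; rotating through them varies the time of day
# and the day of week across repeat attempts.
CALL_WINDOWS = [
    "weekday morning", "weekday afternoon", "weekday evening",
    "weekend morning", "weekend afternoon", "weekend evening",
]


def next_window(attempts_so_far):
    """Attempt k (1-based) used window k-1, so the next attempt uses window k."""
    return CALL_WINDOWS[attempts_so_far % len(CALL_WINDOWS)]


def next_action(outcome, attempts_so_far, max_callbacks=MAX_CALLBACKS):
    """Map the latest call outcome to ("call back" | "reschedule" | "final", detail)."""
    if outcome in ("busy", "no answer"):
        callbacks_made = attempts_so_far - 1
        if callbacks_made < max_callbacks:
            return ("call back", next_window(attempts_so_far))
        return ("final", "non-contact")
    if outcome == "soft refusal":
        # Refusal conversion: try again later with a different interviewer.
        return ("reschedule", "assign an interviewer with a good refusal-conversion record")
    if outcome == "hard refusal":
        return ("final", "refusal")
    if outcome in ("complete", "ineligible"):
        return ("final", outcome)
    raise ValueError(f"unknown outcome: {outcome!r}")


def response_rate(final_dispositions):
    """RR = # responded / (# approached - # ineligible), as on the Response Rate slide."""
    counts = Counter(final_dispositions)
    eligible = len(final_dispositions) - counts["ineligible"]
    return counts["complete"] / eligible


def nonresponse_rate(final_dispositions):
    """Non-response = 1 - RR."""
    return 1 - response_rate(final_dispositions)
```

For example, with hypothetical final dispositions of 40 completes, 20 refusals, 30 non-contacts, and 10 ineligibles, RR = 40 / (100 – 10), or about 0.44.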
Telephone Introduction: Principles
• In our introductions, we:
– introduce ourselves: the interviewer's name and Indiana University Center for Survey Research;
– briefly describe the survey topic (e.g., barriers to health insurance);
– describe the geographic area we are interviewing (e.g., people in Indiana) or the target sample (e.g., aerospace engineers);
– describe how we obtained the contact information (e.g., the telephone number was randomly generated; we received your name from a professional organization);
– identify the sponsor (e.g., National Endowment for the Humanities);
– describe the purpose(s) of the research (e.g., satisfaction with services provided by a local agency);
– give a "good-faith" estimate of the time required to complete the interview (e.g., this survey will take about 10 minutes to complete);
– promise anonymity and confidentiality (when appropriate);
– mention to the respondent that participation is voluntary;
– mention to the respondent that item non-response is acceptable;
– ask permission to begin.

Telephone Intro: Example
• "Hello, I'm [fill NAME] from the Center for Survey Research at Indiana University. We're surveying Indianapolis area residents to ask their opinions about some health issues. This study is sponsored by the National Institutes of Health and its results will be used to research the effect of community ties on attitudes towards medical practices.
• The survey takes about 40 minutes to complete. Your participation is anonymous and voluntary, and all your answers will be kept completely confidential. Your telephone number was randomly generated by a computer. If there are any questions that you don't feel you can answer, please let me know and we'll move to the next one. So, if I have your permission, I'll continue."
• At the end, we offer the respondent information on how to contact the principal investigator. For example:
• "John Kennedy is the Principal Investigator for this study. Would you like Dr. Kennedy's address or telephone number in case you want to contact him about the study at any time?"

Mail Intro: Principles
• Dillman’s Tailored Design Method
– Advance letter, on letterhead, from the sponsor (where applicable)
– Packet containing a cover letter, the instrument, a postage-paid return envelope, and a $2 incentive
– Postcard reminder 2 weeks later
– Second packet 4 weeks later
– Follow-up phone call if possible

Email Intro: Principles
• Use principles of social psychology:
– Politely greet
– Ask for help
– Appeal to altruism
– Anchor to self-interest
– Define the help needed
– Tell them what to do
– “K.I.S.S.”
– “From”: an identifiable source that is personally known (not a personal acquaintance, but one with whom a relationship exists)
– “Subject”: avoid the look of spam.
– Follow up with reminder emails. Most responses occur within 72 hours. Follow-ups can go out 4–7 days later. Too many follow-ups are perceived as spam.

Email Intro: Examples
• Email invitation
– From: An organization you know
– Subject: Take a survey about your <interest>
– Message: Dear <name if possible>,
We are conducting a survey to evaluate our recent annual meeting in Montreal. Please take a couple of moments to share your experience and opinions with us. The survey will take about 4 minutes and will greatly benefit future planning in ORG, so conferences will be even more rewarding and enjoyable for you. Just click on the link below to get started. (If no button appears, copy and paste the URL into your browser.)
Sincerely,
John Doe, Survey Director
If you have any questions, contact me directly at 800 555-1212, or email jdoe@org.org.
• Pop-up
– Hi! We are conducting a survey to evaluate our website. You can help by providing your opinions. Doing so will make this website better for you. The survey is short: just 3 or 4 minutes. Please click on the link below and get started!
– (Top of survey) Thanks for taking our brief survey! We greatly appreciate all of your feedback.
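The mail and email contact timelines can be laid out as calendar dates. This is a minimal sketch under stated assumptions: the Dillman offsets are measured from the day the first packet is mailed (the slide does not say), the advance letter and optional phone call are left unscheduled because no timing is given, reminder emails go out at a fixed interval within the 4-7 day range, and two reminders is a hypothetical cap chosen to avoid looking like spam. Function names are illustrative only.

```python
from datetime import date, timedelta


def mail_schedule(packet_date):
    """Dillman-style mail contacts from the slide.

    Assumption: both offsets are measured from the day the first packet is
    mailed. The advance letter goes out before that date (the slide gives no
    lead time), and a follow-up phone call is added when possible.
    """
    return [
        (packet_date, "packet: cover letter, questionnaire, postage-paid return envelope, $2"),
        (packet_date + timedelta(weeks=2), "postcard reminder"),
        (packet_date + timedelta(weeks=4), "second packet"),
    ]


def email_schedule(invite_date, gap_days=5, max_reminders=2):
    """Invitation plus reminders every gap_days days (the slide suggests 4-7).

    max_reminders is a hypothetical cap; the slide only warns that too many
    follow-ups are perceived as spam.
    """
    if not 4 <= gap_days <= 7:
        raise ValueError("reminders should go out 4-7 days apart")
    contacts = [(invite_date, "invitation")]
    for i in range(1, max_reminders + 1):
        contacts.append((invite_date + timedelta(days=gap_days * i), f"reminder {i}"))
    return contacts


if __name__ == "__main__":
    for when, what in mail_schedule(date(2015, 2, 9)):
        print(when.isoformat(), what)
    for when, what in email_schedule(date(2015, 2, 9)):
        print(when.isoformat(), what)
```

With a first packet mailed on February 9, 2015, this places the postcard on February 23 and the second packet on March 9. In practice, each reminder goes only to people who have not yet responded.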
Incentives
• Few topics are covered in more depth than the issue of incentives.
– How much, for which audiences, is necessary to maximize participation?
– Do incentives cause bias?
– What form? Cash? Coupons? Gifts?
– When cash or gifts are not acceptable, then what?

Incentives
• Mail surveys
– $2 works better than $1, which works better than nothing. $5 does not help much more.
– Some suggest higher incentives on the second try, especially for hard-to-reach populations.
• Phone surveys
– Often not needed, though long surveys should offer one.
• Email surveys
– Experiments with prizes, drawings, cash, coupons, gift cards. Rewarding panel members is especially critical.
• Special audiences: consider summaries of results or charity contributions.
– Government
– Business
– Physicians, IT, other oversampled professions: more cash ($75+)

Examples of papers from AAPOR 2006
• Increasing Response Rates with Incentives
– Non-Monetary Incentive Strategies in Online Panels
– Incentive Check Content Experimentation
– Effect of Incentives on Mail Survey Response: A Cash and Contingent Valuation Experiment
• Experimenting with Incentives
– Are One-Time Increases in Respondent Fee Payments Cost-Effective on a Longitudinal Survey? An Analysis of the Effect of Respondent Fee Experiments on Long-Term Participation in the NLSY97
– Personal Contact and Performance-Based Incentives
– Effect of Progressive Incentives on Response Rates
– Lottery Incentives with a College-Aged Population
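Incentive experiments like those listed above typically compare response rates between randomly assigned incentive arms (for example, $1 vs. $2 in a mail survey). One standard way to check whether such a difference is larger than chance is a pooled two-proportion z-test; the sketch below uses only the Python standard library. The function name is hypothetical, the test assumes random assignment and reasonably large arms (normal approximation), and each arm's rate should be computed the same way, for example with the response-rate formula from the Response Rate slide.

```python
import math


def two_proportion_z_test(responded_a, n_a, responded_b, n_b):
    """Compare the response rates of two incentive arms with a pooled two-proportion z-test.

    Returns (difference in rates, z statistic, two-sided p-value) using the
    normal approximation.
    """
    rate_a = responded_a / n_a
    rate_b = responded_b / n_b
    pooled = (responded_a + responded_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation: every case responded, or none did")
    z = (rate_a - rate_b) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a - rate_b, z, p_value
```

A small p-value suggests the arms really differ; whether the extra response justifies the extra cost, and whether it changes who responds (possible bias), are separate questions the incentive literature also addresses.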