Lec6-ifetch

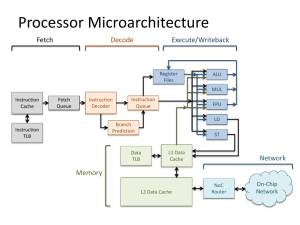

ECE 4100/6100 Advanced Computer Architecture
Lecture 6: Instruction Fetch
Prof. Hsien-Hsin Sean Lee
School of Electrical and Computer Engineering
Georgia Institute of Technology

Instruction Supply Issues
[Figure: Instruction Fetch Unit, Instruction buffer, Execution Core]
• Fetch throughput defines max performance that can be achieved in later stages
• Superscalar processors need to supply more than 1 instruction per cycle
• Instruction Supply limited by
– Misalignment of multiple instructions in a fetch group
– Change of Flow (interrupting instruction supply)
– Memory latency and bandwidth

Aligned Instruction Fetching (4 instructions)
[Figure: PC=..xx000000; a row decoder selects one of four rows (00, 01, 10, 11) of one 64B Icache line holding A0 to A15; inst 1 to inst 4 are read in cycle n]
Can pull out one row at a time
Assume one fetch group = 16B

Misaligned Fetch
[Figure: PC=..xx001000; the fetch group starts mid-row inside one 64B Icache line; a rotating network delivers inst 1 to inst 4 in cycle n]
IBM RS/6000

Split Cache Line Access
[Figure: PC=..xx111000; the fetch group spans the end of cache line A (A0 to A15) and the start of cache line B (B0 to B7)]
Be broken down to 2 physical accesses: cache line A in cycle n, cache line B in cycle n+1

Split Cache Line Access Miss
[Figure: PC=..xx111000; the fetch group spans the end of cache line A (A0 to A15) and the start of cache line C (C0 to C7)]
Cache line B misses
Cache line A in cycle n, cache line C in cycle n+X

High Bandwidth Instruction Fetching
• Wider issue → More instruction feed
• Major challenge: to fetch more than one non-contiguous basic block per cycle
• Enabling technique?
– Predication
– Branch alignment based on profiling
– Other hardware solutions (branch prediction is a given)
[Figure: control-flow graph of basic blocks BB1 to BB7]

Predication Example
Source code
    if (a[i+1] > a[i])
        a[i+1] = 0
    else
        a[i] = 0
Typical assembly
        lw   r2, [r1+4]
        lw   r3, [r1]
        blt  r3, r2, L1
        sw   r0, [r1]
        j    L2
    L1: sw   r0, [r1+4]
    L2:
Assembly w/ predication
          lw   r2, [r1+4]
          lw   r3, [r1]
          sgt  pr4, r2, r3
    (p4)  sw   r0, [r1+4]
    (!p4) sw   r0, [r1]
• Convert control dependency into data dependency
• Enlarge basic block size
– More room for scheduling
– No fetch disruption

Collapse Buffer [ISCA 95]
• To fetch multiple (often non-contiguous) instructions
• Use interleaved BTB to enable multiple branch predictions
• Align instructions in the predicted sequential order
• Use banked I-cache for multiple line access

Collapsing Buffer
[Figure: Fetch PC feeds an Interleaved BTB, Cache Bank 1, and Cache Bank 2; their outputs pass through an Interchange Switch and then a Collapsing Circuit]

Collapsing Buffer Mechanism
[Figure: the Interleaved BTB supplies line addresses A and E plus Valid Instruction Bits; Bank Routing reads lines (A B C D) and (E F G H); the Interchange Switch orders them as A B C D E F G H; the Collapsing Circuit outputs A B C E G]

High Bandwidth Instruction Fetching
• To fetch more, we need to cross multiple basic blocks (and/or multiple cache lines)
• Multiple branch predictions
[Figure: control-flow graph of basic blocks BB1 to BB7]

Multiple Branch Predictor [YehMarrPatt ICS’93]
• Pattern History Table (PHT) design to support MBP
• Based on global history only
[Figure: Branch History Register (BHR) bits bk … b1 index the Pattern History Table (PHT), which yields the primary prediction p1, the secondary prediction p2, and the tertiary prediction; p1 and p2 are fed back as the update]

Multiple Branch Prediction
[Figure: tree of basic blocks BB1 to BB7; the fetch address (br0, primary prediction) comes from a BTB entry and leads to BB1; br1 (2nd prediction) and br2 (3rd prediction) select among the remaining blocks along taken (T) and not-taken (F) edges]
• Fetch address could be retrieved from BTB
• Predicted path: BB1 BB2 BB5
• How to fetch BB2 and BB5? BTB?
– Can’t.
  Branch PCs of br1 and br2 not available when MBP made
– Use a BAC design

Branch Address Cache
[Figure: BAC entry layout, indexed by Fetch Addr (from BTB): Tag (23 bits); V (1 bit) and br (2 bits) fields; Taken Target Address and Not-Taken Target Address (30 bits each), each with its own V and br; T-T Address, T-N Address, N-T Address, and N-N Address (30 bits each); 212 bits per fetch address entry]
• Use a Branch Address Cache (BAC): Keep 6 possible fetch addresses for 2 more predictions
• br: 2 bits for branch type (cond, uncond, return)
• V: single valid bit (to indicate if hits a branch in the sequence)
• To make one more level prediction
– Need to cache another 8 more addresses (i.e. total=14 addresses)
– 464 bits per entry = (23+3)*1 + (30+3) * (2+4) + 30*8

Caching Non-Consecutive Basic Blocks
• High Fetch Bandwidth + Low Latency
[Figure: Fetch in Conventional Instruction Cache, with blocks placed as BB3 BB1 BB5 BB2 BB4, versus Fetch in Linear Memory Location, with blocks placed as BB1 BB2 BB3 BB4 BB5]

Trace Cache
• Cache dynamic non-contiguous instructions (traces)
• Cross multiple basic blocks
• Need to predict multiple branches (MBP)
[Figure: fetching the same dynamic instruction sequence takes 5 cycles with I$ Fetch, 3 cycles with Collapsing Buffer Fetch, and 1 cycle with T$ Fetch]

Trace Cache [Rotenberg Bennett Smith MICRO‘96]
[Figure: the Fetch Addr and the MBP access the trace cache; each line holds Tag, Br flag, Br mask, Fall-thru Address, and Taken Address; on a T.C. hit, N instructions are supplied; for a T.C. miss, the line fill buffer collects BB1, BB2, BB3, ending at Branch 1, Branch 2, Branch 3 (M branches)]
Br flag example 10: 1st Br taken, 2nd Br not taken
Br mask example 11, 1: 11 means 3 branches; 1 means the trace ends w/ a branch
• Cache at most (in original paper)
– M branches OR (M = 3 in all follow-up TC studies due to MBP)
– N instructions (N = 16 in all follow-up TC studies)
• Fall-thru address if last branch is predicted not taken

Trace Hit Logic
[Figure: fetch address A is compared with the Tag (Match 1st Block); the Multi-BPred outputs (T, N) are compared with the BF field under the Mask (Match Remaining Block(s)); the two matches are ANDed to give Trace hit; the Next Fetch Address is chosen between Fall-thru (X) and Target (Y). Example entry: Tag A, BF 10, Mask 11,1, Fall-thru X, Target Y]

Trace Cache Example
BB Traversal Path: ABDABDACDABDACDABDAC
[Figure: control-flow graph with blocks A (5 insts), B (6 insts), C (12 insts), D (4 insts), and Exit]
Cond 1: 3 branches
Cond 2: Fill a trace cache line (16 instructions)
Cond 3: Exit
Trace Cache (5 lines)
    A1 A2 A3 A4 A5 B1 B2 B3 B4 B5 B6 D1 D2 D3 D4
    A1 A2 A3 A4 A5 C1 C2 C3 C4 C5 C6 C7 C8 C9 C10 C11
    C12 D1 D2 D3 D4 A1 A2 A3 A4 A5 B1 B2 B3 B4 B5 B6
    D1 D2 D3 D4 A1 A2 A3 A4 A5
    C1 C2 C3 C4 C5 C6 C7 C8 C9 C10 C11 C12 D1 D2 D3 D4
Trace Cache is Full
How many hits?
What is the utilization?

Redundancy
• Duplication
– Note that instructions only appear once in I-Cache
– Same instruction appears many times in TC
• Fragmentation
– If 3 BBs < 16 instructions
– If multiple-target branch (e.g. return, indirect jump or trap) is encountered, stop “trace construction”.
– Empty slots → wasted resources
• Example
– A single BB is broken up to (ABC), (BCD), (CDA), (DAB)
– Duplicating each instruction 3 times
[Figure: loop of blocks A (6 insts), B (4 insts), C (6 insts), D (3 insts); the Trace Cache holds (ABC) = 16 inst, (BCD) = 13 inst, (CDA) = 15 inst, (DAB) = 13 inst]

Indexability
• TC saved traces (EAC) and (BCD)
• Path: (EAC) to (D)
– Cannot index interior block (D)
• Can cause duplication
• Need partial matching
– (BCD) is cached, if (BC) is needed
[Figure: control-flow graph of blocks A, B, C, D, E, G; the Trace Cache holds (E A C) and (B C D)]

Pentium 4 (NetBurst) Trace Cache
[Figure: front end made of Front-end BTB, iTLB and Prefetcher, L2 Cache, Decoder, and Trace $ with its own Trace $ BTB; the Trace $ supplies Decoded Instructions to Rename, execute, etc.]
No I$ !!
Trace-based prediction (predict next-trace, not next-PC)
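
Worked example: fetch alignment cases
The aligned, misaligned, and split-line fetches from the early slides differ only in where the PC falls inside a cache line. The sketch below classifies a PC under the slide parameters (64B line, 16B fetch group). The function name `classify_fetch` and the constant names are illustrative and not from the lecture.

```python
LINE_BYTES = 64    # one I-cache line, as on the slides
GROUP_BYTES = 16   # one fetch group, as assumed on the slides


def classify_fetch(pc: int) -> str:
    """Classify a fetch starting at byte address `pc`.

    Returns "aligned" when the group is exactly one row of the line,
    "misaligned" when it straddles rows but stays inside one line,
    and "split" when it spills into the next cache line.
    """
    offset = pc % LINE_BYTES
    if offset + GROUP_BYTES > LINE_BYTES:
        return "split"        # needs two physical accesses
    if offset % GROUP_BYTES == 0:
        return "aligned"      # one row can be pulled out directly
    return "misaligned"       # one line, but the output must be rotated


if __name__ == "__main__":
    # Low-order PC bits taken from the three slide examples.
    for pc in (0b000000, 0b001000, 0b111000):
        print(f"{pc:06b} {classify_fetch(pc)}")
```

Only the split case costs an extra cache access; the misaligned case is handled in the same cycle by the rotating network.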
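
Worked example: Branch Address Cache entry size
The 212-bit and 464-bit entry sizes quoted on the Branch Address Cache slide follow one pattern: the tag and every non-final target address carry a V bit and a 2-bit br field, while the final-level addresses carry none. The sketch below reproduces both numbers. Extending the pattern to other depths is an assumption made here, and the function names are illustrative.

```python
TAG_BITS = 23    # tag width from the slide
ADDR_BITS = 30   # target address width from the slide
FLAG_BITS = 3    # V (1 bit) + br (2 bits)


def bac_address_count(levels: int) -> int:
    """Number of target addresses stored for `levels` extra predictions."""
    return sum(2 ** k for k in range(1, levels + 1))


def bac_entry_bits(levels: int) -> int:
    """Bits per BAC entry for `levels` predictions beyond the primary one.

    Assumption: addresses at every level except the last keep their own
    V/br flags, matching the slide's 464-bit formula.
    """
    inner_addresses = sum(2 ** k for k in range(1, levels))
    last_addresses = 2 ** levels
    return ((TAG_BITS + FLAG_BITS)
            + (ADDR_BITS + FLAG_BITS) * inner_addresses
            + ADDR_BITS * last_addresses)


if __name__ == "__main__":
    for levels in (2, 3):
        print(levels, bac_address_count(levels), bac_entry_bits(levels))
```

The entry size roughly doubles with each extra prediction level, which is one reason deeper prediction through a BAC gets expensive quickly.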
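
Worked example: trace cache redundancy
The duplication and fragmentation figures in the Redundancy example can be checked with a few lines of arithmetic. The sketch below uses the block sizes and the four traces from that slide, and assumes a 16-instruction trace line (N = 16, as stated on the trace cache slide). All names are illustrative.

```python
from collections import Counter

BLOCK_SIZES = {"A": 6, "B": 4, "C": 6, "D": 3}   # instructions per block
TRACES = ["ABC", "BCD", "CDA", "DAB"]            # traces held in the TC
LINE_SLOTS = 16                                  # assumed N = 16


def trace_lengths(traces, sizes):
    """Instruction count of each trace."""
    return {t: sum(sizes[b] for b in t) for t in traces}


def copies_per_block(traces):
    """How many traces contain each basic block (duplication factor)."""
    return Counter(block for t in traces for block in t)


def empty_slots(traces, sizes, line_slots=LINE_SLOTS):
    """Unused instruction slots across all trace lines (fragmentation)."""
    return sum(line_slots - n for n in trace_lengths(traces, sizes).values())


if __name__ == "__main__":
    print(trace_lengths(TRACES, BLOCK_SIZES))
    print(dict(copies_per_block(TRACES)))
    print(empty_slots(TRACES, BLOCK_SIZES))
```

Under these assumptions the four lines store 57 instructions for a loop body of 19 unique instructions, and 7 of the 64 slots stay empty.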