Choosing a sample size


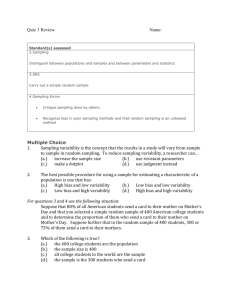

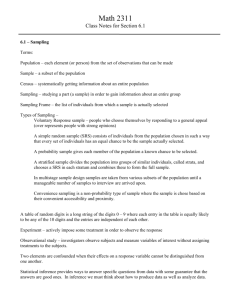

Know the symbols and their meanings. We can always easily calculate (know) a statistic. Often we don’t know (and will never know) the value of the parameter(s); we would have to take a census... Is that even possible?

Pew Research Center surveyed 1,650 adult internet users to estimate the proportion of American internet users who are between 18 and 32 years old. Common wisdom holds that this group of young adults is among the heavier users of the internet. The survey found that 30% of the respondents in the sample were between ages 18 and 32. Identify the population, sample, parameter, and statistic (‘estimator’). This example uses proportions; we have ‘means’ too...

Drawing conclusions about a population on the basis of observing only a small subset of that population (i.e., a sample) always involves some uncertainty; more on this a little later... Does a given sample represent a particular population accurately? Is the sample systematically ‘off’? By a lot? By a little?

Recall, bias is being systematically ‘off’: a scale that reads 5 pounds heavy, a clock that runs 10 minutes fast, etc. The textbook explains two different types of bias: measurement bias and sampling bias. You don’t need to know whether a particular bias is measurement or sampling; you just need to know the concept of bias.

Let’s discuss… Voluntary Response Sampling:
• People who choose themselves by responding to a general appeal
• Biased because people with strong opinions, especially negative opinions, are most likely to respond
• Often very misleading
• With a partner, come up with one real-life example of voluntary response sampling with which you are familiar; 30 seconds, then share out

All of the examples we discussed make our sample statistic systematically ‘off’; in other words, biased. Worthless data.

Convenience Sampling: choosing individuals who are easiest to reach. With a partner, think of a real-life example of convenience sampling. 30 seconds, then share out.
Situational examples: bias; worthless data. Convenience sampling and voluntary response sampling are both biased sampling methods.

How do we minimize bias when taking samples? (We can’t eliminate it; we can only minimize it as much as possible.) Let impersonal chance (randomness) do the choosing; more on this... Remember, in voluntary response sampling people chose to respond; in convenience sampling, the interviewer made the choice. In both situations, personal choice creates bias.

Simple Random Sample (SRS): a type of probability (random) sample; this type of sampling is done without replacement.

SRS: chance selects the sample. The use of chance to select the sample is the essential principle of statistical sampling. There are several different types of random sampling; all of them involve chance selecting the sample. Choosing samples by chance gives all individuals an equal chance to be chosen.

We will focus on Simple Random Sampling (SRS). An SRS of size n ensures that every set of n individuals has an equal chance to be the sample actually selected.

Easiest ways to use chance (take a SRS):
• Names in a hat
• Random digits generator in a calculator or Minitab
• Random digits table

Random digits table: a table of random digits, a long string of the digits 0, 1, ..., 9 in which:
• Each entry in the table is equally likely to be any of 0–9
• Entries are independent. Knowing the digits in one part of the table gives no information about another part of the table
• The table is arranged in rows and columns; read it either way (but usually by rows); the groups and rows have no meaning; they just make the table easier to read
So 0–9 are equally likely, 00–99 are equally likely, 000–999 are equally likely, etc.

Joan’s small accounting firm serves 30 business clients. Joan wants to interview a sample of 5 clients to find ways to improve client satisfaction. To avoid bias, she chooses a SRS of size 5.
Enter the table at a random row. Notice her clients are numbered (labeled) with 2-digit numbers (if this isn’t already done, you must label your list), so we read the table 2 digits at a time:
• Ignore 00 and all 2-digit numbers above 30 (our clients are labeled 01 to 30)
• Ignore duplicates
• Continue until we have 5 distinct 2-digit labels, then identify which clients those are

1. Label: assign a numerical label to every individual.
2. Random digits table (or Minitab, or names in a hat): select labels at random.
3. Stopping rule: indicate when you should stop sampling.
4. Identify sample: use the labels to identify the subjects (individuals) selected to be in the sample.

If using a random digits table (RDT), be certain all labels have the same number of digits (this ensures every individual has the same chance to be chosen). Use the shortest possible label, i.e., 1 digit for populations of up to 10 members (labels 0 to 9), 2 digits for populations of 11–100 members (labels 00 to 99), etc. This is just good standard practice...

Label students; what labels should we use? Label candy; what labels should we use?
SRS of 2 students using the random digits table; enter the table on line ___.
SRS of 2 students using my Minitab (will my Minitab be different from your Minitab?)
Should we allow duplicates? When should we, and when should we not?

To take a SRS, we need a list of our population. Is this always possible? Could we get a list of everyone in the United States? In Santa Clarita? All COC students?

Randomization is great for avoiding voluntary response bias and convenience bias, but it doesn’t help at all with other possible biases, such as question wording, non-response, undercoverage, etc. Let’s discuss other sources of potential bias that could make our statistic (our data) worthless.

Most samples suffer from some degree of undercoverage (another type of bias). What is bias again?? .... ...
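The four steps above (label, select with a random digits table, stopping rule, identify the sample) can be sketched in Python for Joan’s 30 clients. This is a minimal sketch, assuming the clients are labeled 01 to 30; the digit string is a made-up stand-in for one row of a random digits table (not a row from the textbook), and `srs_from_digits` is a hypothetical helper name. Python’s `random.sample` plays the role of the calculator or Minitab random digits generator.

```python
import random


def srs_from_digits(digits: str, population_size: int, sample_size: int,
                    width: int = 2) -> list:
    """Read a string of random digits `width` digits at a time, keeping labels
    01..population_size, skipping 00, out-of-range labels, and duplicates,
    and stopping once `sample_size` distinct labels are chosen.
    Returns fewer labels if the digits run out first."""
    chosen = []
    for start in range(0, len(digits) - width + 1, width):
        label = int(digits[start:start + width])
        if 1 <= label <= population_size and label not in chosen:
            chosen.append(label)
            if len(chosen) == sample_size:  # stopping rule reached
                break
    return chosen


# Hypothetical row of random digits (made up for this example).
row = "4519078319300064221158"
print(srs_from_digits(row, population_size=30, sample_size=5))
# -> [19, 7, 30, 22, 11]  (45, 83, 00, 64 are out of range; the second 19 is a duplicate)

# The random-number-generator route: a SRS of 5 labels from 1..30, drawn
# without replacement (different on every run unless you set a seed).
print(sorted(random.sample(range(1, 31), 5)))
```

Reading the digits in pairs and skipping anything outside 01 to 30 is exactly why every client ends up with the same chance of selection: each valid label is equally likely to appear next.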
Bias is systematically favoring a particular outcome.

Undercoverage occurs when some group(s) in the population are left out of the process of choosing the sample, partly or entirely. Talk in your groups and come up with an example of undercoverage.

Another source of bias in many (most) sample surveys is non-response, when a selected individual cannot be contacted or refuses to cooperate. It is a big problem and happens very often, even with aggressive follow-up. It is almost impossible to eliminate non-response; we can only try to minimize it as much as possible. Note: most media polls don’t tell us their rate of non-response.

Response bias occurs when respondents are untruthful, especially when asked about illegal or unpopular beliefs or behaviors. Can you come up with some situations where people might be less than truthful?

Who won the “First Lady Debate?”
http://www.youtube.com/watch?v=EohGmGQUhA
http://perezhilton.com/tv/JIMMY_KIMMEL_LIVE_Who_Won_The_Presidential_Debate_BEFORE_It_Even_Happened/?id=79b7cb451ec00#.Vb-lN_NViko
http://www.mrctv.org/videos/kimmel-publicweighs-first-lady-debate

Should we ban disposable diapers? A survey paid for by makers of disposable diapers found that 84% of the sample opposed banning disposable diapers. Here’s the actual question: “It is estimated that disposable diapers account for less than 2% of the trash in today’s landfills. In contrast, beverage containers, third-class mail and yard wastes are estimated to account for about 21% of the trash in landfills. Given this, in your opinion, would it be fair to ban disposable diapers?”

Remember our survey... Possible activity: sampling distribution on football lovers...

Even if we use SRS/probability sampling, and we are very careful to reduce bias as much as possible, the statistics we get from a given sample will likely be different from the statistics we get from another sample.
Statistics vary from sample to sample. We can improve our results (our sample statistic can get closer to what our population parameter actually is; our variability decreases) by increasing our random sample size. Remember: samples vary; parameters are fixed.

Statistics (like sample proportions) vary from sample to sample:
• A larger n (sample size) decreases the standard deviation (variability)
• A smaller n (sample size) increases the standard deviation (variability)
But remember, no matter how large our sample is, a large sample size does not ‘fix’ underlying issues like bad wording, undercoverage, convenience sampling, etc.

Questions to ask about a sample survey:
• Who carried out the survey?
• How was the sample selected? Was the sample representative of the population?
• How large was the sample?
• What was the response rate?
• How were the subjects contacted?
• When was the survey conducted?
• What was the exact question asked?

The true value of the population parameter (p or μ) is like a bull’s eye on a target. A sample statistic (x̄, p̂, etc.) is like an arrow fired at the target; sometimes it hits the bull’s eye and sometimes it misses. Keep in mind… we (very) often don’t know how close to the bull’s eye we are…

When we take many samples from a population (a sampling distribution), bias and variability can look like the following:
- Bias: aim, accuracy
- Variability: precision, standard deviation
AND most of the time, we don’t even know where the target is! ... We are trying to estimate where the target is... estimation method...
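To see “statistics vary from sample to sample” and “a larger n decreases variability” in action, here is a small simulation sketch. It assumes a hypothetical population proportion of 0.3 (not from the source) and a population so large that drawing each person behaves like an independent trial with success chance p, which is approximately how a SRS from a very large population behaves. `simulate_phats` is an illustrative helper name.

```python
import random
import statistics


def simulate_phats(p: float, n: int, reps: int = 1000, seed: int = 1) -> list:
    """Return `reps` sample proportions, each from a random sample of size n
    drawn from a very large population in which a fraction p are successes."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [sum(rng.random() < p for _ in range(n)) / n for _ in range(reps)]


P_TRUE = 0.3  # hypothetical population parameter; in practice it is unknown
for n in (100, 1000):
    phats = simulate_phats(P_TRUE, n)
    print(f"n = {n:4d}: center (mean of p-hats) = {statistics.mean(phats):.3f}, "
          f"spread (SD of p-hats) = {statistics.stdev(phats):.4f}")
```

The center stays near 0.3 for both sample sizes (no bias), while the spread for n = 1000 comes out at roughly a third of the spread for n = 100 (about 0.015 versus 0.046): better precision, same aim.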
We want low bias and low variability (good aim and good precision). Properly chosen statistics computed from random samples of sufficient size will (hopefully) have low bias and low variability (good aim and precision) and hit the bull’s eye on the target (even though we don’t know where the target is). We can’t eliminate bias and variability (bad aim and precision); we can only do all that we can to reduce them.

How good are our estimators? The sampling distribution of a statistic is the distribution of values taken by the statistic in all possible samples of the same size from the same population.

The population we will consider is the scores of 10 students on an exam, as follows: The parameter of interest is the mean score in this population. The sample is a SRS drawn from the population. Use the RDT (enter at a random line) to draw a SRS of size n = 3 from this population. Calculate the mean (x̄) of the sample scores. This statistic is an estimate of the population parameter, μ. Repeat this process 5 times. Plot your 5 x̄s on the board. We are constructing a sampling distribution. What is the approximate value of the center of our sampling distribution? What is the shape? Based on this sampling distribution, what do you think our true population parameter, μ, is? Now let’s calculate our true population parameter, μ.

Also, remember the Reese’s Pieces simulation...

If we use good, sound statistical practices, we can get pretty good estimates of our population parameters based on samples. Our estimates get better and better as n increases... not our population size, but our sample size, n. In other words, the precision (variability; how much it fluctuates) of an estimator (a sample statistic, like a sample proportion or a sample mean) does not depend on the size of the population; it depends only on the sample size. So, an estimator based on a sample of size 10 is just as precise taken from a population of 1,000 people as it is taken from a population of a million. Let’s explore this idea more…

Consider 1,000 SRSs of n = 100 for the proportion of U.S. adults who watched Survivor Guatemala. Discuss in your groups some observations you have about this distribution; share out.

[Figure: sampling distributions for n = 100 and n = 1,000, 1,000 SRSs each]

What do you notice? Given that randomization is used properly in sampling, the larger the SRS size (n), the smaller the spread (the more tightly clustered; the more precise) of the sampling distribution:
• Center doesn’t change significantly (good estimator; unbiased estimator)
• Shape doesn’t change significantly
• Spread (range, standard deviation) does change significantly; it gets smaller as n increases

A SRS of 2,500 from the U.S. population of 300 million will have essentially the same variability (precision) as a SRS of the same size, 2,500, from San Francisco’s population of 750,000. Both are just as precise (given that the population is well mixed) and equally trustworthy; there is essentially no difference in the standard deviation, i.e., how ‘spread out’ the distribution is. It’s not about population size; it’s about sample size (n). As long as the population is large relative to the sample size, the precision has nothing to do with the size of the population, only with the size of the sample.

Increased sample size improves precision (reduces variability): surveys based on larger sample sizes have a smaller standard error (SE) and therefore better precision (less variability).

Standard deviation (or standard error) = $\sqrt{\frac{p(1-p)}{n}}$

Let’s try the formula: say that 20,000 students attend COC, and 20% of them say their favorite ice cream is vanilla. If we were to take a SRS of 100 students and ask them if vanilla is their favorite flavor of ice cream, our standard deviation would be...
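The standard deviation formula above can be checked numerically. This sketch reproduces the COC vanilla example (p = 0.2) for n = 100, 1,000, and 2,000. It also backs up the claim that population size barely matters: the optional `population_size` argument applies the finite population correction $\sqrt{(N-n)/(N-1)}$, a standard adjustment that this section does not itself use, and p = 0.5 is a hypothetical value for the San Francisco versus U.S. comparison. `sd_phat` is an illustrative name.

```python
from math import sqrt
from typing import Optional


def sd_phat(p: float, n: int, population_size: Optional[int] = None) -> float:
    """Standard deviation (standard error) of the sample proportion for a SRS
    of size n: sqrt(p(1 - p) / n). If population_size N is given, multiply by
    the finite population correction sqrt((N - n) / (N - 1))."""
    sd = sqrt(p * (1 - p) / n)
    if population_size is not None:
        sd *= sqrt((population_size - n) / (population_size - 1))
    return sd


# COC vanilla example: p = 0.2, formula without the correction.
for n in (100, 1000, 2000):
    print(f"n = {n}: SD = {sd_phat(0.2, n):.4f}")
# n = 100: SD = 0.0400, n = 1000: SD = 0.0126, n = 2000: SD = 0.0089

# Same n = 2,500 from very different population sizes (p = 0.5 is hypothetical).
print(f"San Francisco (N = 750,000): {sd_phat(0.5, 2500, 750_000):.5f}")          # 0.00998
print(f"United States (N = 300,000,000): {sd_phat(0.5, 2500, 300_000_000):.5f}")  # 0.01000
```

The two populations differ in size by a factor of 400, yet the standard deviations differ only in the fifth decimal place; changing n, by contrast, changes the standard deviation substantially.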
Standard deviation (or standard error) = $\sqrt{\frac{p(1-p)}{n}}$

Now let’s say that 20,000 students attend COC, and 20% of them say their favorite ice cream is vanilla. If we were to take a SRS of 1,000 students and ask them if vanilla is their favorite flavor of ice cream, our standard deviation would be...

Now let’s say that 20,000 students attend COC, and 20% of them say their favorite ice cream is vanilla. If we were to take a SRS of 2,000 students and ask them if vanilla is their favorite flavor of ice cream, our standard deviation would be...

Increased sample size means we have more information about our population, so our variability (standard deviation) is going to be smaller. Trade-offs… cost increases, it is time-consuming, etc.

Life was good and easy with the Normal distribution: we could easily calculate probabilities, and it was a good working model. If we could use the Normal distribution with sampling distributions for proportions, life would be great. Guess what? We can. Meet the Central Limit Theorem (CLT). It has many versions (one for proportions, one for means, etc.); let’s discuss proportions for now.

To use the CLT with proportions, three conditions must be met:
• Random: samples are collected randomly from the population
• Large sample: np ≥ 10 and n(1 – p) ≥ 10; the expected numbers of successes and failures are both at least 10
• Big population: the population is at least 10 times the sample size

20,000 students attend COC, and we know that 20% of them say their favorite ice cream is vanilla. We want to find out... IF we asked a SRS of 100 students if vanilla is their favorite ice cream, what’s the probability that half or more of them would say ‘yes’? Let’s check conditions to see if we can use the CLT to calculate that probability. Random?
Large sample: np ≥ 10 and n(1 – p) ≥ 10? Big population: is the population at least 10 times the sample size?

20,000 students attend COC, and we know that 20% of them say their favorite ice cream is vanilla. We want to find out... IF we asked a SRS of 15 students if vanilla is their favorite ice cream, what’s the probability that half or more of them would say ‘yes’? Let’s check conditions to see if we can use the CLT to calculate that probability. Random? Large sample: np ≥ 10 and n(1 – p) ≥ 10? Big population: is the population at least 10 times the sample size?

20,000 students attend COC, and we know that 20% of them say their favorite ice cream is vanilla. We want to find out... IF we asked a SRS of 5,000 students if vanilla is their favorite ice cream, what’s the probability that half or more of them would say ‘yes’? Let’s check conditions to see if we can use the CLT to calculate that probability. Random? Large sample: np ≥ 10 and n(1 – p) ≥ 10? Big population: is the population at least 10 times the sample size?

It’s important to check conditions before we calculate probabilities, to make sure that what we calculate is accurate, valuable, meaningful information (not nonsense):
• Random
• Large sample: np ≥ 10 and n(1 – p) ≥ 10
• Big population: the population is at least 10 times the sample size

So far, the examples have described situations in which we know the value of the population parameter, p. This is very unrealistic. The whole point of carrying out a survey (most of the time) is that we don’t know the value of p, but we want to estimate it. Think about the elections... Parties are taking a lot of sample surveys (polls) to see who is ‘leading’.

A poll took a random sample of 2,928 adults in the US and asked them if they believed that reducing the spread of AIDS and other infectious diseases was an important policy goal for the US government. 1,551 responded ‘yes’ (53%).

Spiral back for a moment... Random? Large sample? Big population?
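The three condition checks for the COC vanilla questions (n = 100, 15, and 5,000 from a population of 20,000 with p = 0.2) can be written out as a short sketch. The function names are illustrative, and the “random” condition is passed in as a flag because it depends on how the data were collected, which code cannot check. When all three conditions hold, the Normal model from the CLT gives P(p̂ ≥ 0.5).

```python
from math import sqrt
from statistics import NormalDist


def clt_conditions(p: float, n: int, population_size: int,
                   random_sample: bool = True) -> dict:
    """Check the three conditions for using the CLT with a sample proportion."""
    return {
        "random": random_sample,
        "large sample": n * p >= 10 and n * (1 - p) >= 10,
        "big population": population_size >= 10 * n,
    }


def prob_phat_at_least(cutoff: float, p: float, n: int) -> float:
    """P(p-hat >= cutoff) under the Normal model with mean p and SD sqrt(p(1-p)/n)."""
    sd = sqrt(p * (1 - p) / n)
    z = (cutoff - p) / sd
    return NormalDist().cdf(-z)  # upper-tail area, using the symmetry of the Normal curve


for n in (100, 15, 5000):
    checks = clt_conditions(p=0.2, n=n, population_size=20_000)
    failed = [name for name, ok in checks.items() if not ok]
    if failed:
        print(f"n = {n}: cannot use the CLT; failed {failed}")
    else:
        print(f"n = {n}: conditions met; P(p-hat >= 0.5) = "
              f"{prob_phat_at_least(0.5, 0.2, n):.1e}")
```

For n = 100, z = (0.5 – 0.2)/0.04 = 7.5, so the probability is essentially zero. For n = 15 the large-sample check fails (np = 3), and for n = 5,000 the big-population check fails (20,000 is less than 10 × 5,000 = 50,000).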
(So, if we wanted to find a probability using the CLT, we could...) These are the exact conditions we must check to create a confidence interval as well. More on confidence intervals in a few...

The above percentage just tells us about OUR sample of those specific 2,928 people. What about another sample? Would we get a different percentage of yes’s? What about the percentage of all adults in the US who believe this? How much larger or smaller than 53% might it be? Do we think a majority (more than 50%) of Americans share this belief?

We don’t know p, the population parameter; we do know p̂ for this sample: it’s 53%. We also know:
• Our estimator p̂ is unbiased (remember sampling distributions?), so 53% is not systematically off, although it may not be exactly equal to p; it may be a little low or a little high.
• The standard error (typical amount of variability) is $SE = \sqrt{\frac{\hat{p}(1-\hat{p})}{n}} = \sqrt{\frac{0.53(1-0.53)}{2928}} \approx 0.0092$, about 0.9%.
• Because we have a ‘large sample,’ the sampling distribution of p̂ is approximately Normal and centered at the true population parameter p.

Our best estimate of the true, unknown population parameter is 0.53; the sampling distribution is approximately Normal with standard error (SD; the amount of variability in the sample statistic) ≈ 0.009; so ...
• About 68% of all possible sample proportions p̂ fall within 1 standard error of the unknown population parameter p
• About 95% fall within 2 standard errors of p
• About 99.7% fall within 3 standard errors of p

So we can be highly confident, 99.7% confident, that the true, unknown population proportion p is between 0.53 – (3)(0.009) = 0.503 and 0.53 + (3)(0.009) = 0.557. This is a confidence interval: we are 99.7% confident that the interval from about 50.3% to 55.7% captures the true, unknown population proportion of Americans who believe that reducing the spread of AIDS and other infectious diseases is an important policy goal for the US government.

What if we wanted to construct a confidence interval in which we are 95% confident?

What proportion of us have at least one tattoo? So our sample statistic, our p̂ = ____. If we were to ask another group of COC students, we would get another (likely different) p̂. 445 Math 075 students were asked this last Spring; 133/445 = 0.299 = 29.9% had at least one tattoo. Remember, a larger n generally means less variation, but the sampling distribution is still centered at the same value (unbiased estimator).

We want to be able to say with a high level of certainty what proportion of all COC students have at least one tattoo, but we don’t know the true, unknown population parameter p. We do know p̂ (sample statistic); actually, we have 2 sample statistics: our class and the Math 075 data. Our estimators are unbiased (what does that mean?). Let’s check conditions for each of our samples: Random selection; Large sample; Big population. Can we use either sample statistic (either p̂)?
If so, which should we use? Calculate our standard deviation (our standard error).

Our sampling distribution is ≈ Normal (because our conditions are met): about 68% of p̂s fall within 1 SD of p, 95% within 2 SDs, and 99.7% within 3 SDs, so we build our intervals around our p̂.

Let’s create a 95% confidence interval... We are 95% confident that the interval from _____ to _____ captures the true, unknown population parameter, p, the proportion of all COC students that have at least one tattoo. This is a confidence interval with a 95% confidence level.

Let’s create a 99.7% confidence interval... We are 99.7% confident that the interval from _____ to _____ captures the true, unknown population parameter, p, the proportion of all COC students that have at least one tattoo. This is a confidence interval with a 99.7% confidence level.

Let’s create a 68% confidence interval... We are 68% confident that the interval from _____ to _____ captures the true, unknown population parameter, p, the proportion of all COC students that have at least one tattoo. This is a confidence interval with a 68% confidence level.

What did you notice about the lengths of our confidence intervals as we changed from 68% confident to 95% confident to 99.7% confident? More on this a little later...

Statistical inference provides methods for drawing conclusions about a population based on sample data. Methods used for statistical inference assume that the data were produced by a properly randomized design. Confidence intervals are one type of inference, and are based on sampling distributions of statistics.
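The Empirical Rule intervals above (p̂ ± 1, 2, or 3 standard errors) can be computed with a short sketch. It uses the AIDS-policy poll (1,551 of 2,928) and the Math 075 tattoo data (133 of 445) from this section; your own class’s counts would be plugged in the same way. Because it uses the unrounded p̂ and standard error, the last digit can differ slightly from the hand calculation that used 0.53 and 0.009. `empirical_rule_ci` is an illustrative name.

```python
from math import sqrt


def empirical_rule_ci(successes: int, n: int, k: int) -> tuple:
    """Return (lower, upper) for p-hat ± k standard errors.
    k = 1, 2, 3 gives roughly 68%, 95%, 99.7% confidence (Empirical Rule)."""
    phat = successes / n
    se = sqrt(phat * (1 - phat) / n)  # standard error of p-hat
    return phat - k * se, phat + k * se


samples = [("AIDS policy poll", 1551, 2928), ("Math 075 tattoos", 133, 445)]
levels = [(1, "68%"), (2, "95%"), (3, "99.7%")]
for label, successes, n in samples:
    for k, level in levels:
        lower, upper = empirical_rule_ci(successes, n, k)
        print(f"{label}, {level}: {lower:.1%} to {upper:.1%}")
```

For each sample the printed intervals get wider as the confidence level goes from 68% to 95% to 99.7%, since the multiplier k grows while p̂ and the standard error stay the same.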
The other type of inference we will learn and practice is hypothesis testing (more on this later).

Estimator ± margin of error (MOE)
• The estimator we just used was our sample proportion, p̂
• The margin of error we just used was a multiple (1, 2, or 3) of our standard error (our standard deviation)
• The margin of error tells us the amount by which our estimate is most likely ‘off’
• The margin of error accounts only for sampling variability (NOT any of the biases we discussed: voluntary response, non-response, etc.)

How confident are you that the mean temperature in Santa Clarita in degrees Fahrenheit is between -50 and 150? How confident are you that it is between 70 and 70.001?

In general, a large interval goes with a high confidence level; a small interval goes with a lower confidence level.
[Figure: intervals at the 99% (or 99.7%), 95%, and 90% confidence levels]

Typically we want both a reasonably high confidence level AND a reasonably small interval, but there are trade-offs; more on this in a little bit.

Will we ever know for sure if we captured the true, unknown population parameter p? No. The actual p is unknown.

Interpretation of a confidence interval: “I am ___% confident that the interval from _____ to _____ captures the true, unknown population proportion of (context).”

The lower the confidence level (say 10% confident), the shorter the confidence interval (I am 10% confident that the mean temperature in Santa Clarita is between 70.01 degrees and 70.02 degrees). The higher the confidence level (say 99% confident), the wider the confidence interval (I am 99% confident that the mean temperature in Santa Clarita is between 40 degrees and 100 degrees). What else affects the length of the confidence interval?
The larger the n (sample size), the shorter the confidence interval (smaller MOE); the smaller the n, the longer the confidence interval (larger MOE). Let’s look at how sample size (n) affects the length of the confidence interval, specifically the margin of error. Remember: estimate ± margin of error, where margin of error = $z^*\sqrt{\frac{p(1-p)}{n}}$; because n is in the denominator, a larger n gives a smaller margin of error. Let’s put some numbers in as a simple example...

So, if you want (need) a high confidence level AND a small(er) interval (margin of error), it is possible if you are willing to increase n. This can be expensive and time-consuming, and sometimes it is not realistic (why?). In reality, you may need to compromise on the confidence level (a lower confidence level) and/or your n (a smaller n).

Alcohol abuse is considered by some as the #1 problem on college campuses. How common is it? A recent SRS of 10,904 US college students collected information on drinking behavior and alcohol-related problems. The researchers defined “frequent binge drinking” as having 5 or more drinks in a row 3 or more times in the past 2 weeks. According to this definition, 2,486 students were classified as frequent binge drinkers. Based on these data, what can we say about the proportion of all US college students who have engaged in frequent binge drinking? Let’s create a confidence interval so we can approximate the true population proportion of all US college students who engaged in frequent binge drinking. How confident do we want to be (i.e., what confidence level do we want to use)? We must check conditions before we calculate a confidence interval... Random? Large sample? Big population?
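Minitab does this calculation for us, but a normal-approximation version (estimate ± z*·SE) is short enough to sketch. Assumptions: z* comes from the standard Normal distribution rather than the rounded values (such as 2) used elsewhere in this section, and the function names are illustrative. Minitab’s 1 Proportion command may use a different (exact) method by default, so its last digit can differ. The sketch computes a 99% interval for the binge-drinking data and shows how the margin of error responds to the confidence level and to n.

```python
from math import sqrt
from statistics import NormalDist


def z_star(confidence: float) -> float:
    """Critical value z*: the middle `confidence` of the standard Normal lies within ±z*."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)


def margin_of_error(phat: float, n: int, confidence: float) -> float:
    """z* times the standard error of p-hat."""
    return z_star(confidence) * sqrt(phat * (1 - phat) / n)


def proportion_ci(successes: int, n: int, confidence: float) -> tuple:
    """Normal-approximation confidence interval: p-hat ± margin of error."""
    phat = successes / n
    moe = margin_of_error(phat, n, confidence)
    return phat - moe, phat + moe


# Binge-drinking data: 2,486 of 10,904 students, 99% confidence.
lower, upper = proportion_ci(2486, 10904, 0.99)
print(f"99% CI: {lower:.1%} to {upper:.1%}")  # 99% CI: 21.8% to 23.8%

# Higher confidence level -> larger z* -> wider interval.
for conf in (0.90, 0.95, 0.99):
    print(f"{conf:.0%} confidence: z* = {z_star(conf):.3f}")

# Larger n -> smaller margin of error (p-hat = 0.5, 95% confidence).
for n in (100, 400, 1600):
    print(f"n = {n}: MOE = {margin_of_error(0.5, n, 0.95):.3f}")
```

Each time n is multiplied by 4, the margin of error is cut in half, because n sits under a square root; that is why shrinking the margin of error gets expensive quickly.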
Perform the Minitab calculations:
• 1 sample, proportion
• Options: confidence level 99
• Data: summarized data; enter the number of events and the number of trials
• “+”: data labels

Always conclude with an interpretation, in context: I am 99% confident that the interval from 21.8% to 23.8% contains the true, unknown population parameter, p, the actual proportion of all US college students who have engaged in frequent binge drinking.

Let’s use our Math 075 statistic: 133/445 students had at least one tattoo (29.9%). We already checked our conditions. Previously we created confidence intervals at 68%, 95%, and 99.7% confidence levels (applying the Empirical Rule as an introduction to confidence intervals). Now let’s use Minitab to construct a 90% confidence interval and an 80% confidence interval. Be sure to practice interpreting these confidence intervals as well.

Often researchers choose the margin of error and confidence level they want ahead of time (before the survey), so they need a particular n to achieve the MOE and CL they want:

$MOE = z^*\sqrt{\frac{p(1-p)}{n}}$

Often researchers choose the MOE. A common CL is 95%, so z* ≈ 2. Writing m for the chosen MOE and using p = 0.5:

$m = 2\sqrt{\frac{0.5(1-0.5)}{n}}$

Solving for n gives $n = \left(\frac{2}{m}\right)^2 (0.5)(1-0.5) = \frac{1}{m^2}$ (see also the formula in the textbook).

A company has received complaints about its customer service. They intend to hire a consultant to carry out a survey of customers. Before contacting the consultant, the company president wants some idea of the sample size that she will be required to pay for. One critical question is the degree of satisfaction with the company’s customer service. The president wants to estimate the proportion p of customers who are satisfied. She decides that she wants the estimate to be within 3% (0.03) at a 95% confidence level. She has no idea of the true proportion p of satisfied customers, so use p = 0.5 (this value makes p(1 – p) as large as possible, which gives the largest, safest n).
The sample size required is given by

$MOE = z^*\sqrt{\frac{p(1-p)}{n}}$, so $0.03 = 2\sqrt{\frac{0.5(1-0.5)}{n}}$ and $n = \left(\frac{2}{0.03}\right)^2 (0.5)(1-0.5) = 1111.11$

So if the president wants to estimate the proportion, p, of customers who are satisfied, at the 95% confidence level, with a margin of error of 3%, she would need a sample size (an n) of at least 1,112 (we always round up).
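The sample-size calculation can be sketched as a small function. It solves $m = z^*\sqrt{p(1-p)/n}$ for n and rounds up, since rounding down would give a margin of error slightly larger than the target. The defaults (p = 0.5, z* = 2) follow this section; the z* = 1.96 and p = 0.2 calls are hypothetical variations for comparison, and `required_sample_size` is an illustrative name.

```python
from math import ceil


def required_sample_size(moe: float, p: float = 0.5, z: float = 2.0) -> int:
    """Smallest whole n with z * sqrt(p(1 - p) / n) <= moe,
    i.e. n >= (z / moe)**2 * p * (1 - p), rounded up."""
    return ceil((z / moe) ** 2 * p * (1 - p))


print(required_sample_size(0.03))          # 1112  (the company president's survey)
print(required_sample_size(0.03, z=1.96))  # 1068  (using z* = 1.96 instead of 2)
print(required_sample_size(0.03, p=0.2))   # 712   (if we believed p were near 0.2)
```

Any p other than 0.5 makes p(1 – p) smaller and therefore lowers the required n, so p = 0.5 is the safe choice when nothing is known about p.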