
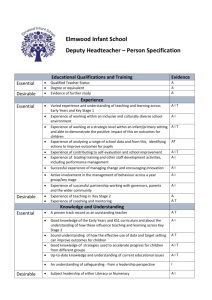


Lp Row Sampling by Lewis Weights
Richard Peng (M.I.T.)
Joint with Michael Cohen (M.I.T.)

OUTLINE
• Row Sampling
• Lewis Weights
• Computation
• Proof of Concentration

DATA
• $A$: $n$-by-$d$ matrix with $\mathrm{nnz}(A)$ non-zeros
• Columns: components
• Rows: features

Computational tasks:
• Identify patterns
• Interpret new data

Officemate's 'matrix tourism'

SUBSAMPLING MATRICES
What applications need: reduce both rows and columns.

Fundamental problem: row reduction
• #features >> #components
• #rows ($n$) >> #columns ($d$)

$A \to A' = SA$

Approaches:
• Subspace embedding: $S$ that works for most $A$
• Adaptive: build $S$ based on $A$

Run more expensive routines on $A'$.

LINEAR MODEL
$Ax = x_1 A_{:,1} + x_2 A_{:,2} + x_3 A_{:,3}$
• Can add/scale data points
• $x$: coefficients; combination: $Ax$

Interpret new data point $b$:

DISTANCE MINIMIZATION
$\min_x \|Ax - b\|_p$
• $p = 2$: Euclidean norm, least squares
• $p = 1$: absolute deviations, robust regression

Simplified view:
• $Ax - b = [A, b]\,[x; -1]$
• $\min \|Ax\|_p$ with one entry of $x$ fixed

ROW SAMPLING
Pick some (rescaled) rows of $A$ to form $A'$ so that $\|Ax\|_p \approx_{1+\varepsilon} \|A'x\|_p$ for all $x$.

$A' = SA$, where $S$:
• is $\tilde{O}(d) \times n$
• has one non-zero per row

Feature selection

Error notation $\approx$: $a \approx_k b$ if there exist $k_{\min}, k_{\max}$ s.t. $k_{\max}/k_{\min} \le k$ and $k_{\min}\, a \le b \le k_{\max}\, a$.

ON GRAPHS
$A$: edge-vertex incidence matrix
$x$: labels on vertices
Row for edge $uv$: $|a_i^T x|^p = |x_u - x_v|^p$
• $p = 1$: (fractional) cuts. [Benczur-Karger '96]: cut sparsifiers
• $p = 2$: energy of voltages. [Spielman-Teng '04]: spectral sparsification

$A'$ with $O(d \log d)$ rows in both cases.

[Naor '11][Matousek '97]: on graphs, L2 / spectral sparsifiers after normalization work for all $1 \le p \le 2$.

PREVIOUS POLY-TIME ALGORITHMS
Assuming $\varepsilon$ = constant.

| $p$ | # rows | By |
|---|---|---|
| $2$ | $d \log d$ | Matrix concentration bounds ([Rudelson-Vershynin '07], [Tropp '12]) |
| $2$ | $d$ | [Batson-Spielman-Srivastava '09] |
| $1$ | $d^{2.5}$ | [Dasgupta-Drineas-Harb-Kumar-Mahoney '09] |
| $1 < p < 2$ | $d^{p/2+2}$ | [Dasgupta-Drineas-Harb-Kumar-Mahoney '09] |
| $2 < p$ | $d^{p+1}$ | [Dasgupta-Drineas-Harb-Kumar-Mahoney '09] |

[DMMW '11][CDMMMW '12][CW '12][MM '12][NN '12][LMP '12]: input-sparsity time, $O(\mathrm{nnz}(A) + \mathrm{poly}(d))$.

GENERAL MATRICES
$A = [1/2;\ 1/2;\ 1/2;\ 1/2]$, $A' = [1]$

1-dimensional: the only 'interesting' vector is $x = [1]$.
• L2 distance: $\|Ax\|_2 / \|A'x\|_2 = 1/1 = 1$
• L1 distance: $\|Ax\|_1 / \|A'x\|_1 = 2/1 = 2$

Difference = distortion between L2 and L1: $n^{1/2}$.

OUR RESULTS

| $p$ | Previous | Our | Uses |
|---|---|---|---|
| $1$ | $d^{2.5}$ | $d \log d$ | [Talagrand '90] |
| $1 < p < 2$ | $d^{p/2+2}$ | $d \log d\,(\log\log d)^2$ | [Talagrand '95] |
| $2 < p$ | $d^{p+1}$ | $d^{p/2} \log d$ | [Bourgain-Milman-Lindenstrauss '89] |

• Runtime: input-sparsity time, $O(\mathrm{nnz}(A) + \mathrm{poly}(d))$
• When $p < 4$, overhead is $O(d^{\omega})$
• For $p = 1$, elementary proof that gets most details right

Will focus on $p = 1$ for this talk.

SUMMARY
• Goal: sample rows of matrices to preserve $\|Ax\|_p$.
• Graphs: preserving p-norm preserves all $q < p$.
• Different notion for general matrices.

OUTLINE
• Row Sampling
• Lewis Weights
• Computation
• Proof of Concentration

IMPORTANCE SAMPLING
General scheme: probability $p_i$ for each row
• Keep with probability $p_i$
• If picked, rescale to keep expectation

Before: $|a_i^T x|^p$. Sample, rescale by $s_i$.
After: $|a_i'^T x|^p = |s_i a_i^T x|^p$ w.p. $p_i$, and $0$ w.p. $1 - p_i$.

Only one non-zero row: need to keep.

Need: $E[|a_i'^T x|^p] = |a_i^T x|^p$, i.e. $p_i (s_i)^p = 1$, so $s_i \leftarrow p_i^{-1/p}$.

$E[\|A'x\|_p^p] = \|Ax\|_p^p$; need concentration.

ISSUES WITH SAMPLING BY NORM
Norm sampling: $p_i = \|a_i\|_2^2$
• Column with one entry. Need: $\|A[1; 0; \ldots; 0]\|_p \ne 0$
• Bridge in graph. Need: connectivity

MATRIX-CHERNOFF BOUNDS
$\tau$: L2 statistical leverage scores
$\tau_i = a_i^T (A^T A)^{-1} a_i$, where $a_i$ is row $i$ of $A$

Sampling rows w.p. $p_i = \tau_i \log d$ gives $\|Ax\|_2 \approx \|A'x\|_2$ $\forall x$ w.h.p.

On graphs: weight of edge × effective resistance.

[Foster '49]: $\sum_i \tau_i = \mathrm{rank} \le d$, so $O(d \log d)$ rows.

[CW '12][NN '13][LMP '13][CLMMPS '15]: can estimate L2 leverage scores in $O(\mathrm{nnz}(A) + d^{\omega + a})$ time.

MATRIX-CHERNOFF BOUNDS
$w^*$: L1 Lewis weights
$w_i^{*2} = a_i^T (A^T W^{*-1} A)^{-1} a_i$ (recursive definition)

Sampling rows w.p. $p_i = w_i \log d$ gives $\|Ax\|_1 \approx \|A'x\|_1$ $\forall x$ w.h.p.

Equivalent: $w_i^* = w_i^{*-1} a_i^T (A^T W^{*-1} A)^{-1} a_i$, the leverage score of row $i$ of $W^{*-1/2} A$.

$\sum_i w_i^* = d$ by Foster's theorem.

Will show:
• Can get $w \approx w^*$ using calls to L2 leverage score estimators, in $O(\mathrm{nnz}(A) + d^{\omega + a})$ time
• Existence and uniqueness

WHAT IS LEVERAGE SCORE
Length of $a_i$ after 'whitening transform'.

Approximations are basis independent:
$\max_x \|Ax\|_p / \|A'x\|_p = \max_x \|AUx\|_p / \|A'Ux\|_p$

Can reorganize the columns of $A$: transform so $A^T A = I$ (isotropic position).

Interpretation of matrix-Chernoff: when $A$ is in isotropic position, norm sampling works.

WHITENING FOR L1?
$A = [1, 0;\ 0, (1-\varepsilon^2)^{1/2};\ 0, \varepsilon/k;\ 0, \varepsilon/k;\ \ldots;\ 0, \varepsilon/k]$

Split $\varepsilon$ of $a_i$ into $k^2$ copies of $(\varepsilon/k)\, a_i$.

Most of $\|A[0; 1]\|_1$ comes from small rows: $k^2 (\varepsilon/k) = k\varepsilon$, big when $k > \varepsilon^{-1}$.
• Total sampling probability $< \log n \cdot \varepsilon$
• Problematic when $k > \varepsilon^{-1} > \log n$

(This can also happen in a non-orthogonal manner.)

WHAT WORKS FOR ANY P
If
• $n > f(d)$,
• $A$ is isotropic, $A^T A = I$,
• all row norms $\|a_i\|_2^2 < 2d/n$,

then sampling with $p_i = 1/2$ gives $\|Ax\|_p \approx \|A'x\|_p$ $\forall x$.

| $p$ | $f(d)$ | Citation |
|---|---|---|
| $1$ | $d \log d$ | [Talagrand '90] |
| $1 < p < 2$ | $d \log d\,(\log\log d)^2$ | [Talagrand '95] |
| $2 < p$ | $d^{p/2} \log d$ | [Bourgain-Milman-Lindenstrauss '89] |

Symmetrization ([Rudelson-Vershynin '07]): uniformly sampling down to $f(d)$ rows gives $\|Ax\|_p \approx \|A'x\|_p$ $\forall x$.

SPLITTING BASED VIEW
Matrix concentration bound: uniform sampling works when all L2 leverage scores are the same.

"Generalized whitening transformation": split $a_i$ into $w_i$ (fractional) "copies" s.t. all rows have L2 leverage score $d/n$.

Split $a_i$ into $w_i$ copies. To preserve $|a_i^T x|^p$, each copy is $w_i^{-1/p} a_i$.

L2: $w_i$ copies of $w_i^{-1/2} a_i$
• Quadratic form: $w_i (w_i^{-1/2} a_i)(w_i^{-1/2} a_i)^T = a_i a_i^T$
• $w_i \leftarrow (n/d)\, \tau_i$ suffices

SPLITTING FOR L1
Preserve L1: $w_i$ copies of $w_i^{-1} a_i$.

Example: $A = [1, 0;\ 0, 2]$ with $w_2 = 4$ becomes $[1, 0;\ 0, 1/2;\ 0, 1/2;\ 0, 1/2;\ 0, 1/2]$.
New quadratic form: $[1, 0;\ 0, 1]$.

Measuring leverage scores w.r.t. a different matrix!

Row $a_i$ → $w_i$ copies of $(w_i^{-1} a_i)(w_i^{-1} a_i)^T$.

Lewis quadratic form: $\sum_i w_i (w_i^{-1} a_i)(w_i^{-1} a_i)^T = A^T W^{-1} A$

CHOICE OF N
Lewis quadratic form: $A^T W^{-1} A$

All L2 leverage scores the same:
$d/n = w_i^{-2} a_i^T (A^T W^{-1} A)^{-1} a_i$
or: $w_i = (n/d)\, w_i^{-1} a_i^T (A^T W^{-1} A)^{-1} a_i$

Sanity check: $\sum_i w_i = (n/d) \sum_i w_i^{-1} a_i^T (A^T W^{-1} A)^{-1} a_i = n$

$n'$ rows instead: $w' \leftarrow w\,(n'/n)$ works. Check:
• $A^T W'^{-1} A = (n/n')\, A^T W^{-1} A$
• $a_i^T (A^T W'^{-1} A)^{-1} a_i = (n'/n)\, a_i^T (A^T W^{-1} A)^{-1} a_i$
• $w_i'^{-2} a_i^T (A^T W'^{-1} A)^{-1} a_i = ((n'/n)\, w_i)^{-2} (n'/n)\, a_i^T (A^T W^{-1} A)^{-1} a_i = (n/n')\, w_i^{-2} a_i^T (A^T W^{-1} A)^{-1} a_i = d/n'$

CHOICE OF N
$w_i$: weights to split into $n$ rows.
$n'$ rows instead: $w' \leftarrow w\,(n'/n)$
# samples of row $i$: $(d\, f(d)/n)\, w$

Lp Lewis weights: the $w$ that gives $n = d$ rows
• 'Fractional' copies, $w_i^* < 1$
• Recursive definition
• $f(d)/d$: sampling overhead, akin to the $O(\log d)$ from L2 matrix Chernoff bounds

LEWIS WEIGHTS
Lp Lewis weights: $w^*$ s.t.
$w_i^{*2/p} = a_i^T (A^T W^{*\,1-2/p} A)^{-1} a_i$

Recursive definition; existence and computation are shown next.

$w^*$ = L2 leverage scores of $W^{*\,1/2-1/p} A$
• Sum: $d$
• $p = 2$: $1/2 - 1/p = 0$, same as L2 leverage scores of $A$

2-approximate Lp Lewis weights: $w$ s.t. $w_i^{2/p} \approx_2 a_i^T (A^T W^{1-2/p} A)^{-1} a_i$

L1: $w_i \approx_2 (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2}$

INVOKING EXISTING RESULTS
Symmetrization ([Rudelson-Vershynin '07]): importance sampling using 2-approximate Lp Lewis weights gives $A'$ s.t. $\|Ax\|_p \approx \|A'x\|_p$ $\forall x$.

| $p$ | # rows | Citation |
|---|---|---|
| $1$ | $d \log d$ | [Talagrand '90] |
| $1 < p < 2$ | $d \log d\,(\log\log d)^2$ | [Talagrand '95] |
| $2 < p$ | $d^{p/2} \log d$ | [Bourgain-Milman-Lindenstrauss '89] |

SUMMARY
• Goal: sample rows of matrices to preserve $\|Ax\|_p$.
• Graphs: preserving p-norm preserves all $q < p$.
• Different notion for general matrices.
• Sampling method: importance sampling.
• Leverage scores → (p-norm) Lewis weights.

OUTLINE
• Row Sampling
• Lewis Weights
• Computation
• Proof of Concentration

FINDING L1 LEWIS WEIGHTS
Need: $w_i \approx_2 (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2}$

Algorithm: pretend $w$ is the right answer, and iterate
$w_i' \leftarrow (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2}$

Resembles iteratively reweighted least squares.

Each iteration: compute leverage scores w.r.t. $w$, in $O(\mathrm{nnz}(A) + d^{\omega + a})$ time.

We show: if $0 < p < 4$, the distance between $w$ and $w'$ rapidly decreases.

CONVERGENCE PROOF OUTLINE
$w_i' \leftarrow (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2}$
$w_i'' \leftarrow (a_i^T (A^T W'^{-1} A)^{-1} a_i)^{1/2}$
Both have the form $(a_i^T P a_i)^{1/2}$ for some matrix $P$.

Goal: show the distance between $w'$ and $w''$ is less than the distance between $w$ and $w'$.

Spectral similarity of matrices: $A \approx_k B$ if $x^T A x \approx_k x^T B x$ $\forall x$.

Implications of $P \approx_k Q$:
• $P^{-1} \approx_k Q^{-1}$
• $U^T P U \approx_k U^T Q U$ for all matrices $U$

CONVERGENCE FOR L1
Iteration steps:
$w_i' \leftarrow (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2}$
$w_i'' \leftarrow (a_i^T (A^T W'^{-1} A)^{-1} a_i)^{1/2}$

Assume: $w \approx_k w'$. Then:
• $W \approx_k W'$
• Invert: $W^{-1} \approx_k W'^{-1}$
• Composition: $A^T W^{-1} A \approx_k A^T W'^{-1} A$
• Invert: $(A^T W^{-1} A)^{-1} \approx_k (A^T W'^{-1} A)^{-1}$
• Apply to vector $a_i$: $a_i^T (A^T W^{-1} A)^{-1} a_i \approx_k a_i^T (A^T W'^{-1} A)^{-1} a_i$
• So $w_i'^2 \approx_k w_i''^2$, i.e. $w_i' \approx_{k^{1/2}} w_i''$

Fixed point iteration: $\log(k)$ halves per step!
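
As a rough illustration of the fixed-point iteration above, here is a minimal NumPy sketch for p = 1. It is not the input-sparsity-time algorithm from the talk: it inverts the Lewis quadratic form exactly with dense linear algebra instead of calling fast leverage score estimators, and it assumes A has full column rank and no all-zero rows. The function name `l1_lewis_weights`, the fixed iteration count, and the all-ones starting point are choices made for this example.

```python
import numpy as np


def l1_lewis_weights(A, num_iters=30):
    """Iterate w_i <- (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2} starting from w = 1.

    Assumes A (n-by-d) has full column rank and no all-zero rows.
    """
    n, _ = A.shape
    w = np.ones(n)
    for _ in range(num_iters):
        # Lewis quadratic form A^T W^{-1} A (a d-by-d matrix).
        M = A.T @ (A / w[:, None])
        # quad[i] = a_i^T M^{-1} a_i, computed for all rows at once.
        quad = np.einsum("ij,ij->i", A @ np.linalg.inv(M), A)
        w = np.sqrt(quad)
    return w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((200, 5))
    w = l1_lewis_weights(A)
    # At the fixed point the weights sum to d (here 5).
    print(w.sum())
```

Because log(k) halves per step, a few dozen iterations are far more than this small example needs; a practical version would stop once successive iterates agree to within a chosen factor.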
OVERALL SCHEME
We show: if we initialize with $w_i = 1$, then after 1 step we have $w_i \approx_n w_i'$.

The convergence bound gives $w^{(t)} \approx_2 w^{(t+1)}$ in $O(\log\log n)$ rounds.

$p \leftarrow 2 \log d \cdot w$ are good sampling probabilities.

Input-sparsity time: stop when $w^{(t)} \approx_{n^c} w^{(t+1)}$
• $O(\log(1/c))$ rounds suffice.
• Over-sampling by a factor of $n^c$.

Uniqueness: $w_i' \leftarrow (a_i^T (A^T W^{-1} A)^{-1} a_i)^{1/2}$ is a contraction mapping; can show the same convergence rate to the fixed point.

OPTIMIZATION FORMULATION
Lp Lewis weights: $w_i^{*2/p} = a_i^T (A^T W^{*\,1-2/p} A)^{-1} a_i$

Poly-time algorithm: solve
$\max \det(M)$ s.t. $\sum_i (a_i^T M a_i)^{p/2} \le d$, $M$ P.S.D.,
where each summand $(a_i^T M a_i)^{p/2}$ corresponds to $w_i^*$.
• Convex problem when $p > 2$
• Also leads to input-sparsity time algorithms

SUMMARY
• Goal: sample rows of matrices to preserve $\|Ax\|_p$.
• Graphs: preserving p-norm preserves all $q < p$.
• Different notion for general matrices.
• Sampling method: importance sampling.
• Leverage scores → (p-norm) Lewis weights.
• Iterative computation when $0 < p < 4$.
• Solutions to max determinant.

OUTLINE
• Row Sampling
• Lewis Weights
• Computation
• Proof of Concentration

PROOFS OF KNOWN RESULTS

| $p$ | Citation | # pages |
|---|---|---|
| $1$ | [Talagrand '90] + [Pisier '89, Ch2] | 8 + ~30 |
| $1 < p < 2$ | [Talagrand '95] + [Ledoux-Talagrand '90, Ch15.5] | 16 + 12 |
| $2 < p$ | [Bourgain-Milman-Lindenstrauss '89] | 69 |

Will show: elementary proof for $p = 1$.

Tools used:
• Gaussian processes
• K-convexity for $p = 1$
• Majorizing measure for $p > 1$

CONCENTRATION
'Nice' case:
• $A^T A = I$ (isotropic position)
• $\|a_i\|_2^2 < \varepsilon^2 / \log n$
• Sampling with $p_i = 1/2$

Can use this to show the general case.

Pick half the rows, double them.
$s_i$: copy of row $i$, equal to $0$ w.p. $1/2$ and $2$ w.p. $1/2$.

$\|Ax\|_1 - \|A'x\|_1 = \sum_i |a_i^T x| - \sum_i s_i |a_i^T x| = \sum_i (1 - s_i) |a_i^T x|$

RADEMACHER PROCESSES
$\sigma_i = 1 - s_i = \pm 1$ w.p. $1/2$ each: Rademacher random variables.

Need to bound (over choices of $\sigma$):
$\max_{x:\ \|Ax\|_1 \le 1} \sum_i \sigma_i |a_i^T x|$

Comparison theorem [Ledoux-Talagrand '89]: it suffices to bound (in expectation over $\sigma$)
$\max_{x:\ \|Ax\|_1 \le 1} \sum_i \sigma_i a_i^T x = \max_{x:\ \|Ax\|_1 \le 1} \sigma^T A x$

Proof via Hall's theorem on the hypercube.

TRANSFORMATION
$\max_{x:\ \|Ax\|_1 \le 1} \sigma^T A x$
$= \max_{x:\ \|Ax\|_1 \le 1} \sigma^T A A^T A x$ (assumption $A^T A = I$)
$\le \max_{y:\ \|y\|_1 \le 1} \sigma^T A A^T y$
$= \|\sigma^T A A^T\|_\infty$

Dual norm: $\max_{y:\ \|y\|_1 \le 1} b^T y = \|b\|_\infty$

EACH ENTRY
$(\sigma^T A A^T)_j = \sum_i \sigma_i a_i^T a_j$

Khintchine's inequality (with $\log n$ moment): w.h.p. $\sum_i \sigma_i b_i \le O((\log n\, \|b\|_2^2)^{1/2})$

$\sum_i (a_i^T a_j)^2 = \sum_i a_j^T a_i a_i^T a_j = a_j^T A^T A a_j$

INITIAL ASSUMPTIONS
• $A^T A = I$ (isotropic position)
• $\|a_i\|_2^2 < \varepsilon^2 / \log n$

$a_j^T A^T A a_j = \|a_j\|_2^2 < \varepsilon^2 / \log n$

Khintchine's inequality: w.h.p. each entry $< O((\log n\, \|b\|_2^2)^{1/2}) = O(\varepsilon)$

Unwind proof stack:
• W.h.p. $\|\sigma^T A A^T\|_\infty < \varepsilon$
• $\max_{x:\ \|Ax\|_1 \le 1} \sigma^T A x < \varepsilon$
• Passing the moment generating function through the comparison theorem gives the result

SUMMARY
• Goal: sample rows of matrices to preserve $\|Ax\|_p$.
• Graphs: preserving p-norm preserves all $q < p$.
• Different notion for general matrices.
• Sampling method: importance sampling.
• Leverage scores → (p-norm) Lewis weights.
• Iterative computation when $0 < p < 4$.
• Solutions to max determinant.
• Convergence: bound max of a vector.
• Follows from scalar Chernoff bounds.

OPEN PROBLEMS
• What are Lewis weights on graphs?
• Elementary proof for $p \ne 1$?
• $O(d)$ rows for $1 < p < 2$?
• Better algorithms for $p \ge 4$
• Fewer rows for structured matrices (e.g. graphs) when $p > 2$? Conjecture: $O(d \log^{f(p)} d)$ for graphs
• Generalize low-rank approximations

Reference: http://arxiv.org/abs/1412.0588
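
The importance-sampling step from the talk (keep row i with some probability, then rescale so that the p-th power of the norm is preserved in expectation) can be sketched as below. This is only an illustration: the function name `sample_rows`, the `oversample` parameter (standing in for the log d style factor), and clipping the probabilities at 1 are assumptions of this sketch, and the weights `w` are expected to come from a separate routine.

```python
import numpy as np


def sample_rows(A, w, oversample, p=1.0, rng=None):
    """Importance-sample rows of A for the p-norm.

    Row i is kept with probability q_i = min(1, oversample * w_i) and, if
    kept, rescaled by q_i^{-1/p}, so that E[||A'x||_p^p] = ||Ax||_p^p.
    """
    rng = np.random.default_rng() if rng is None else rng
    q = np.minimum(1.0, oversample * np.asarray(w, dtype=float))
    # Independent coin flip per row; rows with q_i = 1 are always kept.
    keep = rng.random(A.shape[0]) < q
    scale = q[keep] ** (-1.0 / p)
    return A[keep] * scale[:, None]
```

With p = 2 the same routine rescales kept rows by q_i^{-1/2}, which matches the rescaling used in L2 leverage score sampling.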