

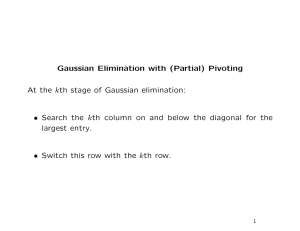

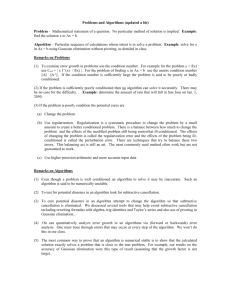

Chapter 7 Numerical Methods for the Solution of Systems of Equations

Introduction
This chapter covers techniques for solving linear and nonlinear systems of equations. Two important problems from linear algebra are:
– The linear systems problem: given $A \in \mathbb{R}^{n \times n}$ and $b \in \mathbb{R}^n$, find $x \in \mathbb{R}^n$ such that $Ax = b$.
– The nonlinear systems problem: given $F : \mathbb{R}^n \to \mathbb{R}^n$, find $x \in \mathbb{R}^n$ such that $F(x) = 0$.

7.1 Linear Algebra Review
Theorem 7.1 and Corollary 7.1
Singular vs. nonsingular matrices
Tridiagonal matrices: $a_{ij} = 0$ whenever $|i - j| > 1$.
Upper triangular: $a_{ij} = 0$ for $i > j$. Lower triangular: $a_{ij} = 0$ for $i < j$.
Symmetric matrices, positive definite matrices, ...
You should also review the concepts of linear independence/dependence, spanning, basis, vector space/subspace, dimension, and orthogonal/orthonormal vectors.

7.2 Linear Systems and Gaussian Elimination
As in Section 2.6, the linear system can be written as a single augmented matrix $[A \mid b]$.
Elementary row operations used to solve the linear system: interchanging two rows, multiplying a row by a nonzero constant, and adding a multiple of one row to another row.
Row equivalence: if one matrix can be transformed into another using only elementary row operations, the two matrices are said to be row equivalent.
Theorem 7.2
Example 7.1

Partial Pivoting
The Problem of Naive Gaussian Elimination
The weakness of naive Gaussian elimination is the potential division by a zero pivot; in floating-point arithmetic, a very small pivot causes similar trouble. For example, consider the following system and its exact solution. What happens when we solve this system using the naive algorithm and using the pivoting algorithm?
Discussion: the naive algorithm produces an incorrect solution, while the pivoting algorithm produces the correct one.

7.3 Operation Counts
You can trace Algorithms 7.1 and 7.2 to count their arithmetic operations and thus estimate the computational time.

7.4 The LU Factorization
Our goal in this section is to develop a matrix factorization that lets us avoid repeating the work of the elimination step.
Why don't we just compute $A^{-1}$ (which would also show whether A is nonsingular)?
– The answer is that doing so is not cost-effective.
– The total cost of this approach is worked out in Exercise 7.
Instead, we will show that we can factor the matrix A into the product of a lower triangular matrix and an upper triangular matrix: $A = LU$.
The LU Factorization: Example 7.2
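The naive-versus-pivoting comparison in the Partial Pivoting discussion can be reproduced with a short Python sketch. It is an illustration under stated assumptions, not a transcription of Algorithms 7.1 and 7.2: the function name `gauss_solve` is hypothetical, and the $2 \times 2$ test system with $\varepsilon = 10^{-20}$ is a hypothetical stand-in for the system used on the slides.

```python
def gauss_solve(A, b, pivot=True):
    """Solve Ax = b by Gaussian elimination followed by back substitution.

    A is a list of n rows and b a list of n numbers; both are copied, so the
    caller's data is left unchanged. With pivot=True, partial pivoting swaps
    in the row whose entry in the current column has the largest magnitude.
    """
    n = len(A)
    A = [list(row) for row in A]
    b = list(b)
    for k in range(n - 1):
        if pivot:
            # Partial pivoting: use the largest |a_ik| (i >= k) as the pivot.
            p = max(range(k, n), key=lambda i: abs(A[i][k]))
            if p != k:
                A[k], A[p] = A[p], A[k]
                b[k], b[p] = b[p], b[k]
        if A[k][k] == 0.0:
            raise ZeroDivisionError(f"zero pivot in column {k}")
        # Eliminate the entries below the pivot.
        for i in range(k + 1, n):
            m = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= m * A[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(A[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / A[i][i]
    return x


# Hypothetical small-pivot system: eps*x1 + x2 = 1, x1 + x2 = 2.
# Exact solution: x1 = 1/(1 - eps), x2 = (1 - 2*eps)/(1 - eps), both ~1.
eps = 1e-20
A = [[eps, 1.0], [1.0, 1.0]]
b = [1.0, 2.0]
print(gauss_solve(A, b, pivot=False))  # [0.0, 1.0]  (x1 is completely wrong)
print(gauss_solve(A, b, pivot=True))   # [1.0, 1.0]
```

Without pivoting, the multiplier $1/\varepsilon$ swamps the second equation, and back substitution then divides a cancelled quantity by $\varepsilon$; swapping rows first keeps every multiplier at most 1 in magnitude.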
The Computational Cost
Consider the total cost of the process above. If the factorization has already been computed, only the two triangular solution steps remain, $Ly = b$ (forward substitution) and $Ux = y$ (backward substitution); each costs $O(n^2)$ operations, compared with $O(n^3)$ for the factorization itself.
Constructing the LU factorization is surprisingly easy. It is nothing more than a very slight reorganization of the Gaussian elimination algorithm studied earlier in this chapter: the multipliers become the below-diagonal entries of the unit lower triangular factor L, and the reduced upper triangular matrix is U.
The LU Factorization: Algorithms 7.5 and 7.6
Example 7.3 (the factors L and U)

Pivoting and the LU Decomposition
Can we pivot in the LU decomposition without destroying the algorithm? Because of the triangular structure of the LU factors, we can implement pivoting almost exactly as we did before. The difference is that we must keep track of how the rows are interchanged in order to apply the forward and backward solution steps properly.
Example 7.4: we need to keep track of the row interchanges.
Discussion: how do we keep track of the row interchanges?
– By using an index array J that records the row interchanges.
For example, you can check that the final version of J in Example 7.4 is correct.

7.5 Perturbation, Conditioning, and Stability
Example 7.5

7.5.1 Vector and Matrix Norms
Examples of vector norms:
– Infinity norm: $\|x\|_\infty = \max_{1 \le i \le n} |x_i|$
– Euclidean 2-norm: $\|x\|_2 = \left( \sum_{i=1}^{n} x_i^2 \right)^{1/2}$
Matrix Norm: properties of a matrix norm
Examples of matrix norms:
– The matrix infinity norm: $\|A\|_\infty = \max_{1 \le i \le n} \sum_{j=1}^{n} |a_{ij}|$ (the largest absolute row sum)
– The matrix 2-norm: $\|A\|_2 = \sqrt{\lambda_{\max}(A^T A)}$, the square root of the largest eigenvalue of $A^T A$
Example 7.6

7.5.2 The Condition Number and Perturbations
Condition number: $\kappa(A) = \|A\| \, \|A^{-1}\|$.
Note that $\kappa(A) \ge 1$ for any induced matrix norm, since $AA^{-1} = I$ gives $1 = \|I\| = \|AA^{-1}\| \le \|A\| \, \|A^{-1}\|$.
Definition 7.3 and Theorem 7.3
Theorem 7.4
Theorems 7.5 and 7.6
Theorem 7.7
Definition 7.4
An example: Example 7.7
Theorem 7.9
Discussion: Is Gaussian elimination with partial pivoting a stable process?
– For a sufficiently accurate computer (unit roundoff u small enough) and a sufficiently small problem (n small enough), Gaussian elimination with partial pivoting produces solutions that are stable and accurate.
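A minimal sketch of LU factorization with partial pivoting, assuming the index-array approach described above: instead of carrying a permutation matrix, the array `J` records which original row ends up in each position, and the forward solution step reads the right-hand side through `J`. The helper names `lu_factor`, `lu_solve`, and `cond_inf`, the storage of L and U together in one array, and the test matrix are assumptions for illustration and need not match the conventions of Algorithms 7.5 and 7.6 or Example 7.4. The last function forms $\kappa_\infty(A) = \|A\|_\infty \|A^{-1}\|_\infty$ directly from n solves.

```python
def lu_factor(A):
    """LU factorization with partial pivoting, computed in a copy of A.

    Returns (LU, J): the strictly lower part of LU holds the multipliers of
    the unit lower triangular L, the upper part (with diagonal) holds U, and
    the index array J records row interchanges: row i of LU is row J[i] of A.
    """
    n = len(A)
    LU = [list(row) for row in A]
    J = list(range(n))
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(LU[i][k]))
        if LU[p][k] == 0.0:
            raise ValueError("matrix is singular")
        if p != k:
            # Swap whole rows (stored multipliers included) and record it in J.
            LU[k], LU[p] = LU[p], LU[k]
            J[k], J[p] = J[p], J[k]
        for i in range(k + 1, n):
            LU[i][k] /= LU[k][k]  # multiplier l_ik, stored below the diagonal
            for j in range(k + 1, n):
                LU[i][j] -= LU[i][k] * LU[k][j]
    return LU, J


def lu_solve(LU, J, b):
    """Solve Ax = b given (LU, J) from lu_factor."""
    n = len(LU)
    # Forward substitution L y = (b permuted by J); L has a unit diagonal.
    y = [0.0] * n
    for i in range(n):
        y[i] = b[J[i]] - sum(LU[i][j] * y[j] for j in range(i))
    # Backward substitution U x = y.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(LU[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (y[i] - s) / LU[i][i]
    return x


def cond_inf(A):
    """kappa(A) = ||A||_inf * ||A^{-1}||_inf, with A^{-1} built column by column."""
    n = len(A)
    LU, J = lu_factor(A)
    # cols[k] is column k of A^{-1}, obtained by solving A x = e_k.
    cols = [lu_solve(LU, J, [1.0 if i == k else 0.0 for i in range(n)])
            for k in range(n)]
    norm_A = max(sum(abs(a) for a in row) for row in A)  # largest row sum
    norm_Ainv = max(sum(abs(cols[k][i]) for k in range(n)) for i in range(n))
    return norm_A * norm_Ainv


A = [[1.0, 2.0], [3.0, 4.0]]
LU, J = lu_factor(A)
print(J)                             # [1, 0]: the two rows were interchanged
print(lu_solve(LU, J, [5.0, 11.0]))  # approximately [1.0, 2.0]
print(cond_inf(A))                   # approximately 21.0
```

Forming $A^{-1}$ column by column, as `cond_inf` does, adds another $O(n^3)$ of work on top of the factorization, which is why the condition number is normally estimated rather than computed exactly.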
7.5.3 Estimating the Condition Number
Exactly singular matrices are something of a rarity, and every singular matrix is arbitrarily close to a nonsingular one. If the solution of a linear system changes a great deal when the problem changes only very slightly, we suspect that the matrix is ill conditioned (nearly singular). The condition number is an important indicator for detecting ill-conditioned matrices. Because computing $\|A^{-1}\|$ exactly costs about as much as computing $A^{-1}$ itself, in practice the condition number is estimated rather than computed.
Estimating the Condition Number
Example 7.8

7.5.4 Iterative Refinement
Gaussian elimination can be adversely affected by rounding error, especially when the matrix is ill conditioned. The iterative refinement (iterative improvement) algorithm can be used to improve the accuracy of a computed solution $x$: compute the residual $r = b - Ax$, solve $Ae = r$ using the LU factors already available, and update $x \leftarrow x + e$, repeating as needed.
Example 7.9 (compare the results before and after refinement)

7.6 SPD Matrices and the Cholesky Decomposition
SPD matrices: symmetric, positive definite matrices.
You can prove this theorem by induction.
The Cholesky Decomposition
An SPD matrix A can be factored as $A = LL^T$, where L is lower triangular with positive diagonal entries. There are a number of different ways of actually constructing the Cholesky decomposition. All of them are equivalent, because the Cholesky factorization is unique. One common scheme uses the following formulas, for $j = 1, \dots, n$:
$l_{jj} = \left( a_{jj} - \sum_{k=1}^{j-1} l_{jk}^2 \right)^{1/2}, \qquad l_{ij} = \frac{1}{l_{jj}} \left( a_{ij} - \sum_{k=1}^{j-1} l_{ik} l_{jk} \right), \quad i = j+1, \dots, n.$
This is a very efficient algorithm, requiring roughly half the arithmetic of the LU factorization. You can read Section 9.22 to learn more about the Cholesky method.

7.7 Iterative Methods for Linear Systems: A Brief Survey
If the coefficient matrix is very large and sparse, then Gaussian elimination may not be the best way to solve the linear system. Why?
– Even when A is sparse, the individual factors L and U in $A = LU$ may be far less sparse than A (so-called fill-in).
Example 7.10

Splitting Methods (for details, see Chapter 9)
Write $A = M - N$, with M easy to solve with, and iterate $M x^{(k+1)} = N x^{(k)} + b$.
Theorem 7.13
Definition 7.6
Theorem 7.14
Conclusion

Example of Splitting Methods: Jacobi Iteration
In Jacobi iteration, M = D, the diagonal part of A.
Example 7.12

Example of Splitting Methods: Gauss-Seidel Iteration
In Gauss-Seidel iteration, M = L, the lower triangular part of A (including the diagonal).
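Jacobi and Gauss-Seidel iteration differ only in the choice of M, which in component form means whether newly computed values are used immediately. The sketch below assumes a stopping rule based on the largest change between successive iterates and a hypothetical strictly diagonally dominant $3 \times 3$ test system; the function names `jacobi` and `gauss_seidel` and the tolerances are illustrative choices, not the text's.

```python
def jacobi(A, b, x0=None, tol=1e-10, max_iter=500):
    """Jacobi iteration (M = D): each new component uses only the old iterate.
    Stops when the largest change between iterates is below tol (assumed rule)."""
    n = len(A)
    x = [0.0] * n if x0 is None else list(x0)
    for k in range(1, max_iter + 1):
        x_new = [(b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
                 for i in range(n)]
        if max(abs(u - v) for u, v in zip(x_new, x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")


def gauss_seidel(A, b, x0=None, tol=1e-10, max_iter=500):
    """Gauss-Seidel iteration (M = lower triangle of A, diagonal included):
    each new component is used as soon as it has been computed."""
    n = len(A)
    x = [0.0] * n if x0 is None else list(x0)
    for k in range(1, max_iter + 1):
        change = 0.0
        for i in range(n):
            # x[j] for j < i already holds the new values from this sweep.
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            new = (b[i] - s) / A[i][i]
            change = max(change, abs(new - x[i]))
            x[i] = new
        if change < tol:
            return x, k
    raise RuntimeError("Gauss-Seidel iteration did not converge")


# Hypothetical strictly diagonally dominant test system with solution [1, 2, 3].
A = [[4.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 4.0]]
b = [6.0, 12.0, 14.0]
x_j, k_j = jacobi(A, b)
x_gs, k_gs = gauss_seidel(A, b)
print(x_j, k_j)
print(x_gs, k_gs)
```

For this matrix both iterations converge, and Gauss-Seidel needs noticeably fewer sweeps than Jacobi because each component update uses the newest available values.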
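Example 7.13
Theorem 7.15

Example of Splitting Methods: SOR Iteration
SOR (successive over-relaxation) iteration introduces a relaxation parameter $\omega$: each Gauss-Seidel update $\tilde{x}_i$ is blended with the previous value, $x_i^{(k+1)} = (1 - \omega)\, x_i^{(k)} + \omega\, \tilde{x}_i$, and $\omega = 1$ recovers Gauss-Seidel.
Example 7.14
Theorem 7.16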
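SOR modifies the Gauss-Seidel sweep with a relaxation parameter $\omega$. A minimal sketch, assuming a hypothetical symmetric positive definite $3 \times 3$ test system and a stopping rule based on the largest change between sweeps; the name `sor` and its default tolerances are illustrative. The guard on $\omega$ reflects Kahan's result that the spectral radius of the SOR iteration matrix is at least $|\omega - 1|$.

```python
def sor(A, b, omega, x0=None, tol=1e-10, max_iter=1000):
    """Successive over-relaxation: each Gauss-Seidel value is blended with the
    old one, x_i <- (1 - omega) * x_i + omega * (Gauss-Seidel value).
    omega = 1 reproduces Gauss-Seidel exactly."""
    if not 0.0 < omega < 2.0:
        # Kahan: rho(SOR iteration matrix) >= |omega - 1|, so outside
        # 0 < omega < 2 the iteration cannot converge for every start.
        raise ValueError("omega must lie strictly between 0 and 2")
    n = len(A)
    x = [0.0] * n if x0 is None else list(x0)
    for k in range(1, max_iter + 1):
        change = 0.0
        for i in range(n):
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            gs_value = (b[i] - s) / A[i][i]  # plain Gauss-Seidel update
            new = (1.0 - omega) * x[i] + omega * gs_value
            change = max(change, abs(new - x[i]))
            x[i] = new
        if change < tol:  # assumed stopping rule
            return x, k
    raise RuntimeError("SOR iteration did not converge")


# Hypothetical SPD test system with solution [1, 2, 3].
A = [[4.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 4.0]]
b = [6.0, 12.0, 14.0]
for omega in (0.8, 1.0, 1.05, 1.5):
    x, k = sor(A, b, omega)
    print(f"omega = {omega}: {k} sweeps, x = {x}")
```

Because the test matrix is symmetric positive definite, SOR converges for every $\omega$ in $(0, 2)$; how many sweeps each $\omega$ needs, and which $\omega$ is best, depends on the matrix.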